Project Deliverable IST - 6th FP

Contract N° 507295

MUSE_DA2.2_V02.doc 1/193 PUBLIC

D A2.2 - Network architecture and functional specifications for the multi-service access and edge

François Fredricx Alcatel Research & Innovation [email protected]

Identifier Deliverable D A2.2

Class Report

Version 02

Version Date 19th January 2005

Distribution Public

Responsible Partner Alcatel

Filename WPA2_0038_V02_DA2.2.doc

DOCUMENT INFORMATION

Project ref. No. IST-6thFP-507295

Project acronym MUSE

Project full title Multi-Service Access Everywhere

Security (distribution level) Public

Contractual delivery date 31st October 2004

Actual delivery date 19th January 2005

Deliverable number D A2.2

Deliverable name Network architecture and functional specifications for the multi-service access and edge.

Type Report

Status & version V02

Number of pages 193

WP / TF contributing WPA2

WP / TF responsible François Fredricx - ALC B

Main contributors See list p.3.

Editor(s) François Fredricx (ALC) Acknowledgement to Christofer Flinta (EAB) and Rainer Stademann (SIE) as co-editors of MA2.7

EU Project Officer Pertti Jauhiainen

Keywords Architecture, Data Plane, QoS, Auto-Configuration, Multicast, Security, IP routing and forwarding, IPv6

Abstract (for dissemination) Network architecture solutions and specifications for generic mechanisms, full model of Ethernet-based network, data plane model of IP-based networks.

LIST OF CONTRIBUTORS

Note: The content of this deliverable is based on previous Milestones MA2.3, MA2.5, and MA2.7. The names of all authors of these Milestones have also been included in the following list.

ALC: François Fredricx, Jeanne de Jaeger, Ali Rezaki, Christèle Bouchat, Erwin Six, Lieve Bos, Sven Ooghe, Peter Domschitz

BT: Peter Adams, Les Humphrey, Dave Thorne

BUTE: Csaba Lukovszki

DT: Sandro Krauß, Thomas Monath

EABS: Christofer Flinta, Hans Mickelsson, Anders Eriksson, Zere Ghebretensaé, Annikki Welin

FT: Romain Vinel, Frédéric Jounay, Jean-Philippe Luc, Michel Borgne, Gilbert Le Houreou, Michel Herve

IFX: Andreas Foglar, Stefan Wavering

IME: Tim Stevens, Koert Vlaeminck

LU NL: Govinda Rajan, Miroslav Zivkovic, Philippe Hervé

PTI: Antonio Gamelas, Teresa Almeida, Vitor Pinto, Vitor Simoes Ribeiro, Francisco Fontes, Manuel Fernandes, Vitor Marques

ROB: Enrique Areizaga Sanchez

SIE: Rainer Stademann, Johannes Bergmann, Thomas Gremmer, Norbert Boll

STM: Alun Foster, Pascal Moniot

THO N: Sylvie Danton, Hervé Le Bihan

TID: Manuel Sanchez Yangüela, Gabriel Moreno, Antonio Elizondo

TNO: Jan de Nijs, Rob Kooij, Pieter Nooren, Arnoud van Neerbos

TS: Nils Bjorkman

DOCUMENT HISTORY

| Version | Date | Comments and actions | Status |
|---|---|---|---|
| V01 | Jan 17th, 2005 | DA2.2 based on MA2.7, including review comments on MA2.7, new introduction paragraph 1.1, new chapter 5 on conclusions | |
| V02 | Jan 19th, 2005 | More descriptive section 1.1. Clarifications in section 2.2.3. Final corrections | Final |

TABLE OF CONTENTS

DOCUMENT INFORMATION
DOCUMENT HISTORY
TABLE OF CONTENTS
LIST OF FIGURES AND TABLES
ABBREVIATIONS
REFERENCES

EXECUTIVE SUMMARY
  Introduction
  Generic architecture considerations
  Ethernet Network Model
  IP Network Model
  What's next?

1 INTRODUCTION
1.1 Scope of the deliverable
1.1.1 Context
1.1.2 Scope
1.1.3 Content
1.2 Positioning of DA2.2
1.3 Focus on Multi-service

2 GENERIC ASPECTS
2.1 Positioning of the Access and Aggregation network
2.1.1 Terminology and logical model
2.1.2 Connectivity models
2.1.3 Reference Control Architecture
2.2 Model of Residential Gateway
2.2.1 Definitions
2.2.2 Access Gateway – general boundary assumptions
2.2.3 The Residential Gateway Architecture Model
2.3 General Connectivity
2.3.1 Business models in an Access Network
2.3.2 Peer-to-peer traffic
2.3.3 Multicast and Multipoint Delivery
2.4 QoS Architectures
2.4.1 QoS architecture principles
2.4.2 Traffic classes in the network
2.4.3 3GPP/IMS-based architecture
2.5 AAA Architectures
2.5.1 Auto-configuration in a multi-provider environment
2.5.2 Control Plane options
2.5.3 One Step Configuration
2.5.4 IMS Model adaptation
2.5.5 Open Issues

3 ETHERNET NETWORK MODEL
3.1 Connectivity in the Ethernet Network Model
3.1.1 Overview of Ethernet network model
3.1.2 Using MPLS
3.1.3 Providing end-end connectivity
3.1.4 Summary Ethernet Network Model connectivity
3.2 AAA architectures
3.2.1 Control Plane
3.2.2 Open Issues
3.3 QoS architectures
3.3.1 Mapping of the 3GPP/IMS architecture to the Ethernet model
3.4 Security
3.4.1 Scope
3.4.2 Generalities
3.4.3 Security threats & mechanisms with IPoPPPoE traffic
3.4.4 Security threats & mechanisms with IPoE traffic
3.4.5 Overview of security mechanisms

4 IP NETWORK MODEL
4.1 Overview
4.1.1 Network Scenario
4.1.2 IP Network Model Characteristics
4.1.3 Use Cases
4.2 Use Cases For PPPoE Handling
4.2.1 PPP Use Case 1: L2 switching of PPPoE traffic
4.2.2 PPP Use Case 2: PPPoE relay of PPP traffic
4.2.3 PPP Use Case 3: LAC / PTA in the IP Forwarder
4.3 Use Cases For IPoE Handling
4.3.1 NAP provides IP transport service
4.3.2 NAP provides routed IP service for application wholesale
4.3.3 NAP provides routed IP service for IP wholesale to third-party NSPs
4.4 Use Cases For IPv6
4.4.1 Access network assumptions for the IPv6 use cases
4.4.2 IPv6 address structure
4.4.3 Allocation Efficiency Considerations
4.4.4 Static Addressing Schemes
4.4.5 Dynamic Addressing
4.4.6 Integration of dynamic and static addressing
4.4.7 Access network routing issue
4.5 Topics for further consideration

5 CONCLUSIONS
5.1 Generic mechanisms
5.2 Ethernet-based network model
5.3 IP-based network model

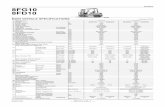

LIST OF FIGURES AND TABLES

Figure 1-1: Relation between the different milestones and deliverables for the MUSE Network architecture.

Figure 2-1: Reference Network based on the DSL-Forum reference service provider interconnection model.

Figure 2-2: In the Ethernet Transport scenario there is Ethernet connectivity between the CPN and the Access Edge Node. In an extended scenario Ethernet frames may also be tunneled further up to the NSP Edge Nodes.

Figure 2-3: In the IP Transport scenario there is Ethernet connectivity only in the First Mile link, and optionally in the aggregation part or regional part of the network.

Figure 2-4: Reference control architecture – top view

Figure 2-5: Business roles according to MUSE [1]

Figure 2-6: The MUSE business roles mapped onto the reference control architecture

Figure 2-7: Bridged RGW model

Figure 2-8: Routed RGW model without NAPT

Figure 2-9: Routed RGW with NAPT

Figure 2-10: Unified model of a hybrid Residential Gateway configuration

Figure 2-11: Full wholesaling with PPP to third-party ISPs/NSPs

Figure 2-12: Business model (b)

Figure 2-13: Business model (c)

Figure 2-14: Business model (d)

Figure 2-15: Business model (e)

Figure 2-16: Upstream tunnelling. All traffic is routed by destination address. Upstream traffic is tunnelled up to the Edge Node. Downstream traffic is not tunneled.

Figure 2-17: Service provider oriented model

Figure 2-18: Application signalling based model with policy push

Figure 2-19: Centralised resource management based on pre-provisioned QoS pipes between access and edge nodes

Figure 2-20: Centralised resource management based on the capacity of the links of the Ethernet aggregation network

Figure 2-21: Example of resource reservation using signalling

Figure 2-22: Components of QoS control in network provider’s domain

Figure 2-23: Main elements of 3GPP/IMS-based architecture for QoS and resource control

Figure 2-24: Set-up of end-to-end session in the application signalling based model

Figure 2-25: Generalization of architecture for roaming, based on 3GPP IMS roaming

Figure 2-26: Set up of QoS connection in 3GPP/IMS implementation of service provider oriented model

Figure 2-27: Mapping of IMS-based architecture to DA1.1 business roles

Figure 2-28: Relationship between service session and PPPoE session

Figure 2-29: Option #1 for DHCP and RADIUS interaction

Figure 2-30: Option #2 for DHCP and RADIUS interaction

Figure 2-31: One step configuration and AAA process, DHCP server in AN

Figure 2-32: One step configuration and AAA process, DHCP relay agent in AN

Figure 2-33: 3GPP IMS Architecture

Figure 2-34: 3GPP2 IMS Architecture

Figure 2-35: IMS architecture with ACS

Figure 2-36: IMS AAA architecture with NAP PDF and Radius

Figure 2-37: IMS AAA with NAP PDF and public Gq interface

Figure 2-38: 3GPP IMS architecture with direct link between AAA and AF

Figure 3-1: Functional basis of Ethernet network model

Figure 3-2: Intelligent bridging (residential users)

Figure 3-3: Cross-connecting (residential users)

Figure 3-4: Business users in the Ethernet Network Model

Figure 3-5: Illustration of MAC FF

Figure 3-6: MPLS for L2 VPN business services

Figure 3-7: MPLS for L3 VPN service

Figure 3-8: MPLS Encapsulation in cross-connect mode

Figure 3-9: MPLS Encapsulation in bridging mode

Figure 3-10: Possible failures in the NAP

Figure 3-11: Peer-peer in intelligent bridging mode and cross-connect mode

Figure 3-12: Multicast server connected to the aggregation network

Figure 3-13: Multicast server connected via the ASP

Figure 3-14: IGMP functionalities in the Ethernet NW model

Figure 3-15: Connectivity in the Ethernet NW model (Intelligent bridging model)

Figure 3-16: One step configuration and AAA process for a L2 network model

Figure 3-17: IMS in a cross connected Ethernet model

Figure 3-18: IMS in a bridged Ethernet model

Figure 4-1: Network Scenario for the IP Models

Figure 4-2: IP network model characteristics

Figure 4-3: L2 switching of IPoPPPoE traffic, combined with IPoE traffic

Figure 4-4: PPPoE relay

Figure 4-5: IPoPPPoE traffic handled in IP forwarder (LAC/PTA), combined with IPoE traffic

Figure 4-6: Basic scenario with NAP providing IP and PPP transport services

Figure 4-7: Data plane example for IP transport service

Figure 4-8: Session and service aware IP forwarding (network to user forwarding)

Figure 4-9: 802.x based service selection with RADIUS proxy chaining

Figure 4-10: DHCP based session binding

Figure 4-11: An ARP proxy in the IP forwarder always replies with its own MAC address

Figure 4-12: Most applicable business scenario for routed IP in the NAP network

Figure 4-13: VLAN Aggregation

Figure 4-14: IPv6 address

Figure 4-15: division of free bits

Figure 4-16: HD-ratio for an increasing number of ISPs when only static address allocation is deployed. Note that the number of ISPs does not influence the HD-ratio in case of dynamic address allocation.

Figure 4-17: ISP prefix propagation

Figure 4-18: Basic hierarchical model

Figure 4-19: Redundancy

Figure 4-20: NAP ER interconnection

Figure 4-21: NAP proprietary addressing

Figure 4-22: Hierarchical aggregation points

Figure 4-23: Address delegation entities

Figure 4-24: Address delegation policies

Figure 4-25: IPv6 prefix delegation architecture

Figure 4-26: Dynamic/static addressing integration

Table 2-1: Relevant DSL-Forum recommendations

Table 2-2: Configuration of RGW parameters by providers

Table 2-3: Considered business models

Table 2-4: Comparison of different multimedia content adaptation techniques

Table 2-5: Multicast capabilities per application

Table 2-6: Proposed traffic classes

Table 3-1: Summary of the basic use of VLANs in Ethernet network model

Table 3-2: Possible failures and required updates

Table 3-3: Summary direct peer-peer

Table 3-4: Summary peer-peer via EN

Table 3-5: IGMPv2 messages

Table 3-6: Security measures for Ethernet Network Model

Table 4-1: Binding of IP sessions to service connections in the IP forwarder

Table 4-2: Session and service aware IP forwarding (user to network forwarding)

Table 4-3: Session and service aware IP forwarding (network to user forwarding)

Table 4-4: address utilisation figures for distribution of /48 IPv6 prefixes

ABBREVIATIONS

3GPP 3rd Generation Partnership Project

AAA Authentication, Authorisation & Accounting

AAL ATM Adaptation Layer

ABR Available Bit Rate

ABT ATM Block Transfer

ACS Auto-Configuration Server

ADSL Asymmetric Digital Subscriber Line

AF Assured Forwarding

ALG Application Layer Gateway

AM Access Multiplexer

APON ATM Passive Optical Network

ARP Address Resolution Protocol

ASP Application Service Provider

ASR Aggregation/Switching/Routing

ATC ATM Transfer Capability

ATM Asynchronous Transfer Mode

BAS Broadband Access Server

BER Bit Error Rate

B-NT Broadband Network Termination

BoD Broadband on Demand

BRAS Broadband Remote Access Server

BROL Basic Recursive Operating Language

BPDU Bridge Protocol Data Units

CAC Call Admission Control

CATV Cable TV

CBR Constant Bit Rate

CDN Content Distribution Network

CHAP Challenge Handshake Authentication Protocol

CIDR Classless Inter-Domain Routing

CLEC Competitive Local Exchange Carrier

CLP Cell Loss Priority

CO Central Office

COPS Common Open Policy Service

CoS Class of Service

CPE Customer Premises Equipment

CPN Customer Premises Network

CRC Cyclic Redundancy Check

CSP Corporate Service Providers

CST Common Spanning Tree

C-VLAN Customer Virtual Local Area Network

CWDM Coarse Wavelength Division Multiplexing

DBA Dynamic Bandwidth Assignment

DF Default Forwarding

DiffServ Differentiated Services

DHCP Dynamic Host Configuration Protocol

DMT Discrete Multi-Tone modulation

DNS Domain Name System

DoS Denial of Service

DP Distribution Point

DSCP Differentiated Services Code Point

DSL Digital Subscriber Line

DSLAM DSL Access Multiplexer

DSM Dynamic Spectrum Management

EAP Extensible Authentication Protocol

EF Expedited Forwarding

EFM Ethernet in the First Mile

EPG Electronic Program Guide

EPON Ethernet Passive Optical Network

ER Edge Router

EUT End-User Terminal

EVC Ethernet Virtual Connection

FDB Forwarding Data Base

FEC Forward Error Correction

FOO Sample name for absolutely anything ("whatever"). See RFC3092

FPD Functional Processing Device

FQDN Fully Qualified Domain Name

FTTB Fibre To The Building/Business

FTTCab Fibre To The Cabinet

FTTEx Fibre To The Exchange

FTTH Fibre To The Home

FTTO Fibre To The Office

FWA Fixed Wireless Access

GARP Generic Attribute Registration Protocol

GFR Guaranteed Frame Rate

GMRP GARP Multicast Registration Protocol

GPON Gigabit-capable Passive Optical Network

HSI High Speed Internet

HSS (ETSI TISPAN) Home Subscriber Server

IAD Integrated Access Devices

IANA Internet Assigned Numbers Authority

IFG Interframe Gap

IGMP Internet Group Management Protocol

ILEC Incumbent Local Exchange Carrier

ILMI Interim Local Management Interface

IMS IP Multimedia Subsystem

IntServ Integrated Services

IP Internet Protocol

IPCP IP Control Protocol

IPDV IP packet Delay Variation

IPER IP packet Error Ratio

IPG Interpacket Gap

IPLR IP packet Loss Ratio

IPTD IP packet Transfer Delay

IPv4 Internet Protocol version 4

IPv6 Internet Protocol version 6

IDSL ISDN Digital Subscriber Line

ISP Internet Service Provider

LAC L2TP Access Concentrator

LACP Link Aggregation Control Protocol

LCP Link Control Protocol

LER Label Edge Router

LEx Local eXchange

LMI Local Management Interface

LNS L2TP Network Server

LSP Label Switched Path

LSR Label Switching Router

MAC Media Access Control

MAC DA Media Access Control Destination Address

MAC SA Media Access Control Source Address

MAC FF MAC Forced Forwarding

MBGP Multicast Border Gateway Protocol

MDF Main Distribution Frame

MEF Metro Ethernet Forum

MIDCOM MIDdlebox COMmunications

MITM Man-in-the-Middle

MLDv2 Multicast Listener Discovery version 2 protocol

MPLS Multi-protocol Label Switching

MSDP Multicast Source Discovery Protocol

MSDSL Multirate Symmetric Digital Subscriber Line

MST Multiple Spanning Tree

MSTI Multiple Spanning Tree Instance

MSTP Multiple Spanning Tree Protocol

NAI Network Access Identifier

NAP Network Access Provider

NAPT Network Address and Port Translator

NAS Network Access Server

NAT Network Address Translator

NGN Next Generation Network

NRCS Network Resource Control Servers

NSP Network Service Provider

NT Network Termination

NT2L / NT2W NT2 functional block in RGW : NT2-LAN part, NT2-WAN part

NV-RAM Non-Volatile Random Access Memory

OSGi Open Services Gateway Initiative

OAM Operations, Administration & Maintenance

OLT Optical Line Terminator

ONT Optical Network Terminator

ONU Optical Network Unit

PADI PPPoE Active Discovery Initiation

PADO PPPoE Active Discovery Offer

PAP Password Authentication Protocol

PBX Private Branch eXchange

PDH Plesiochronous Digital Hierarchy

PDU Protocol Data Unit

PDV Packet Delay Variation

PHY Physical Layer

PIM-DM Protocol Independent Multicast-Dense Mode

PIM-SM Protocol Independent Multicast-Sparse Mode

PIM-SSM Protocol Independent Multicast Source-Specific Multicast

PLOAM Physical Layer Operation, Administration and Maintenance

PON Passive Optical Network

PoP Point of Presence

PPP Point-to-Point Protocol

PPPoA PPP over ATM

PPPoE PPP over Ethernet

PPV Pay Per View

PSTN Public Switched Telephone Network

PTA PPP Termination and Aggregation

P-t-MP Point to Multi Point

P-t-P Point to Point

PVC Permanent Virtual Connection

QoS Quality of Service

RACS (ETSI TISPAN) Resource and Admission Control Subsystem

RADIUS Remote Authentication Dial In User Service

RADSL Rate-Adaptive Asymmetric Digital Subscriber Line

RE-ADSL Reach Extended Asymmetric Digital Subscriber Line

RFC Request for Comments

RGW Residential Gateway

RIR Regional Internet Registries

RNP Regional Network Provider

RP Rendezvous Point

RPF Reverse Path Forwarding

RPR Resilient packet ring

RSTP Rapid Spanning Tree Protocol

SAR Segmentation and Reassembly

SDH Synchronous Digital Hierarchy

SDSL Symmetric Digital Subscriber Line

SFM Source Filtered Multicast

SHDSL Single-pair high-speed digital subscriber line

SIP Session Initiation Protocol

SLA Service Level Agreement

SLS Service Level Specification

SNMP Simple Network Management Protocol

SP (MUSE terminology) Sub Project

SPOF Single Point of Failure

SSM Source-Specific Multicast

SSP Service Selection Portal (website)

STB Set Top Box

STP Spanning Tree Protocol

S-VLAN Service Virtual Local Area Network

T-CONT Traffic Container

TCP Transmission Control Protocol

TDM Time Division Multiplexing

TDMA Time Division Multiple Access

ToIP Telephony over IP

ToS Type of Service

TVoIP TV over IP

UBR Unspecified Bit Rate

UDP User Datagram Protocol

UNI User to Network Interface

URL Uniform Resource Locator

VBR Variable Bit Rate

VC Virtual Channel

VCC Virtual Channel Connection

VCI Virtual Channel Identifier

VDSL Very high speed Digital Subscriber Line

VID VLAN-ID

VLAN Virtual Local Area Network

VoATM Voice over ATM

VoD Video on Demand

VoDSL Voice-over Digital Subscriber Line

VoIP Voice over IP

VP Virtual Path

VPI Virtual Path Identifier

VR Virtual Router

WAN Wide Area Network

WFQ Weighted Fair Queuing

WP (MUSE terminology) Work Package

WRR Weighted Round Robin

xDSL xDSL refers to different variations of DSL, such as ADSL, HDSL, and RADSL

REFERENCES

[1] MUSE deliverable DA1.1, "Towards multi-service business models"

[2] MUSE deliverable DA1.2, "Network requirements for multi-service access"

[3] MUSE deliverable DA2.1, “From Reference Applications to Layer 2 and Layer 3 Services”

[4] MUSE milestone MA2.3, "Network Architecture: high-level description for individual architectural issues"

[5] MUSE milestone MA2.5, "Network architecture and functional specifications for the multi-service access and edge – Step 1: shortlist of options and feature groups prioritisation"

[6] MUSE milestone MA2.7, "Network architecture: detailed solution of individual architectural issues for more urgent features group"

[7] MUSE deliverable D TF1.2 – Overview of QoS principles in access networks

[8] Overall description of Public Ethernet Architecture, WPC1_1_1_2v08

[9] IETF RFC 2327: "SDP: Session Description Protocol"

[10] IETF RFC 2373, “IP Version 6 Addressing Architecture”. R. Hinden, S. Deering. July 1998.

[11] IETF RFC 2460, “Internet Protocol, Version 6 (IPv6) Specification”. S. Deering, R. Hinden. December 1998.

[12] IETF RFC 2473, “Generic Packet Tunneling in IPv6 Specification”. A. Conta, S.Deering. December 1998.

[13] IETF RFC 2516, “A Method for Transmitting PPP Over Ethernet (PPPoE)”. L. Mamakos, K. Lidl, J. Evarts, D. Carrel, D. Simone, R. Wheeler. February 1999.

[14] IETF RFC 2543: "SIP: Session Initiation Protocol"

[15] IETF RFC 2547, "BGP/MPLS VPNs", March 1999.

[16] IETF RFC 2607, “Proxy Chaining and Policy Implementation in Roaming”. B. Aboba, J. Vollbrecht. June 1999.

[17] IETF RFC 2748: "The COPS (Common Open Policy Service) Protocol"

[18] IETF RFC 2753: "A Framework for Policy-based Admission Control"

[19] IETF RFC 3021, “Using 31-Bit Prefixes on IPv4 Point-to-Point Links”. A. Retana, R. White, V. Fuller, D. McPherson. December 2000.

[20] IETF RFC 3069, “VLAN Aggregation for Efficient IP Address Allocation”. D. McPherson, B. Dykes. February 2001.

[21] IETF RFC 3084: "COPS Usage for Policy Provisioning (COPS-PR)"

[22] IETF RFC 3090, “DNS Security Extension Clarification on Zone Status”. E. Lewis. March 2001.

[23] IETF RFC 3162, “RADIUS and IPv6”. B. Aboba, G. Zorn, D. Mitton. August 2001.

[24] IETF RFC 3194, “The H-Density Ratio for Address Assignment Efficiency An Update on the H ratio”. A. Durand, C. Huitema. November 2001.

[25] IETF RFC 3580, "IEEE 802.1X Remote Authentication Dial In User Service (RADIUS) Usage Guidelines", September 2003.

[26] IETF RFC 3633, “IPv6 Prefix Options for Dynamic Host Configuration Protocol (DHCP) version 6”. O. Troan, R. Droms. December 2003.

[27] IETF RFC 3769, “Requirements for IPv6 Prefix Delegation”. S. Miyakawa, R. Droms. June 2004.

[28] DSL Forum PD-022, "Ethernet Network architecture", May 2004

[29] DSL Forum WT-101 v4, "Migration to Ethernet Based DSL Aggregation", November 2004

[30] DSL Forum TR-025, "Core Network Architecture Recommendations for Access to Legacy Data Networks over ADSL", September 1999

[31] DSL Forum TR-042, "ATM Transport over ADSL Recommendation", August 2001

[32] DSL Forum TR-058, "Multi-Service Architecture & Framework Requirements", September 2003

[33] DSL Forum TR-059, “DSL Evolution - Architecture Requirements for the Support of QoS-Enabled IP Services”, September 2003.

[34] DSL Forum TR-094, "Multi-Service Delivery Framework for Home Networks", August 2004.

[35] www.3gpp.org

[36] News release ETSI TISPAN, at http://www.etsi.org/pressroom/Previous/2004/2004_06_tispan_3gpp.htm

[37] 3GPP TS 23.228 V6.6.0 (2004-06), Technical Specification 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; IP Multimedia Subsystem (IMS); Stage 2 (Release 6)

[38] 3GPP TS 23.060 V6.5.0 (2004-06), Technical Specification 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; General Packet Radio Service (GPRS); Service description; Stage 2 (Release 6)

[39] 3GPP TS 29.207 V6.1.0 (2004-09), 3rd Generation Partnership Project; Technical Specification Group Core Network; Policy control over Go interface (Release 6)

[40] 3GPP TS 29.209 V1.0.0 (2004-5), Technical Specification 3rd Generation Partnership Project; Technical Specification Group Core Network; Policy control over Gq interface (Release 6)

[41] 3GPP TS 23.107: "Quality of Service (QoS) concept and architecture"

[42] 3GPP TS 23.002: "Network architecture"

[43] 3GPP TS 23.221: "Architecture requirements"

[44] 3GPP TS 29.208: "End-to-end Quality of Service (QoS) signalling flows"

[45] 3GPP TS 29.060: "General Packet Radio Service (GPRS); GPRS Tunnelling Protocol (GTP) across the Gn and Gp interface"

[46] 3GPP TS 22.105, "UMTS: Service and service capabilities", October 2001

[47] ITU-T G.1010 "End-user multimedia QoS categories", November 2001

[48] Monath et al., “Business Role Model for Broadband Access”, BB Europe, Brugge, December 8-10, 2004

[49] Cortese et al., "CADENUS: Creation and deployment of end-user services in premium IP networks", IEEE Comm. Mag., January 2003.

[50] J.W. Roberts, "Internet Traffic, QoS and Pricing", to appear in Proceedings of IEEE, 2004.

EXECUTIVE SUMMARY

Introduction

The access networks to the telecom world are witnessing some major evolutions, where pure hunger for bandwidth is not the only driving force. New applications and associated connectivity modes are emerging, each with particular quality of service requirements and functional mechanisms. The access architecture must be suited to support these services in an efficient and cost-effective manner. The definition of such access architecture is a central goal of WPA2 in MUSE, considering the overall mission statement "to research and develop a future low-cost, full-service access and edge network, which enables the ubiquitous delivery of broadband services to every European citizen."

A service-centric access and edge network architecture, both in terms of design and operation, is the key to achieving this goal. The expectations of the end-users, of the operators and of the service providers all determine the success of the architecture. The end-users will expect free choice of services and providers, quality of experience, and cost-effectiveness. The operators demand means for control in terms of security and service-dependent quality guarantees, appropriate accounting, flexibility in terms of service deployment and service support, and cost-effectiveness in terms of deployment and operations. Finally, the service providers will look for ways to deliver more value-generating services not just in terms of content (e.g. broadcasting TV) but also in terms of connectivity (e.g. location-based services).

MUSE addresses these network requirements while also introducing Ethernet and IPv4 / IPv6 (Internet Protocol) technologies in the access network. The trend towards packet-based connection-less technologies (Ethernet, IP) in access and aggregation is one of many evolutions. Other examples are the connectivity models (multicasting, peer-peer), the choice of auto-configuration protocol, the definition of a specific AAA architecture and QoS architecture, and the roles of and relationships between the access and service providers. Of course, real-world deployments will take a phased approach to the introduction of these evolutions, and the migration aspects starting from the current situation are covered in MA 2.6 and in SP B. The current document, however, aims at defining a network architecture that has integrated all the different evolutions (to service-awareness, to Ethernet/IP, etc.).

DA2.2 is the first deliverable on the way to this definition of the MUSE network architecture. It is a summary of the studies in WP A2 during the first year of the project, which were also documented in intermediate milestones (MA2.3, MA2.5, MA2.7).

The scope of DA2.2 has been structured along two lines, namely generic considerations and specific network models. On the one hand, generic considerations involve broad issues that are independent of the choice of the network model. The study concentrates on the fundamental features that must be supported in a multi-service, multi-provider, multi-technology environment. Such considerations include a reference interface model, the business roles (wholesale models) and their implications, the interworking with the user's residential gateway, the Quality of Service (QoS) architecture, the AAA architecture and the new types of connectivity (peer-peer and multicast). They are described in section 2 of this document. On the other hand, these considerations have been elaborated for two specific network models, namely the Ethernet-based network model (section 3) and the IP-based network model (section 4). These models were already identified at the start of the project as the two main modes of operation for the access and aggregation architectures. Put bluntly, the first is based on Layer 2 connectivity in the access and aggregation network, whereas the second is based on Layer 3 connectivity (both IPv4 and IPv6 being addressed). Both network models of course imply a managed access and edge network, Ethernet-based and IP-based respectively. Each model has its own variants and options, which were first identified, then analysed and assessed, and finally narrowed down where possible.

Generic architecture considerations

Firstly, the access and aggregation network (a.k.a. access and edge network, a.k.a. access and metro network) is positioned in terms of terminology. A basic reference architecture describes the main provider networks and their main nodes. A first sketch of the definition of interfaces at the data plane and control plane is also drawn. The descriptions refer as much as possible to existing material in the DSL-Forum, aiming at alignment. The two main connectivity models, Ethernet and IP, are also briefly introduced.

An adequate model for the Residential Gateway (RGW) is required for the end-to-end story in terms of QoS, connectivity and auto-configuration. Therefore a model for the RGW is presented, based on the on-going work in TF3. It is assumed that the RGW will be either bridged or routed, or a hybrid of both. Routed gateways in IPv4 are assumed to incorporate NAPT, whereas NAT has been ruled out for IPv6. The aim of the model is to use the functional blocks of the CPE (the set of devices present at the customer premises) for defining the interaction with the network, in particular the parameters that have to be addressable from the network side (at L1, L2, L3, L4+). Note, however, that MUSE does not define the LAN side (technologies) of the home network.

The general connectivity is of course the basis for the architecture. It's all about providing correct forwarding of packets between a server and one or multiple hosts, or between multiple hosts themselves, based on Layer 2 and/or Layer 3 address information. Before taking a technical dive, it is worthwhile to review the different possibilities of connectivity wholesaling and retailing that a Network Access Provider (NAP) can offer to its customers. This leads to several business models describing the possible roles of the providers (NAP, Network Service Provider (NSP), Internet Service Provider (ISP), and Application Service Provider (ASP)). At the same time the new roles of Packager and Connectivity Provider as defined in [1] are introduced. Four business models are retained for residential users, plus one based on Layer 2 (Ethernet) wholesaling for business users.

The more technical considerations review the stakes of peer-peer connectivity: connecting at Layer 2 versus at Layer 3, and connecting locally (as close as possible to the users) versus forcing this traffic to an edge node. The conclusion is that while business users require L2 peer-peer connectivity (e.g. for L2 VPN), there is no such requirement for residential users, who will therefore be connected at L3. Multicasting also poses specific choices and requirements as a connectivity model. A high-level review of several underlying concepts, related to the relevant multicast applications, is given in section 2, and a more detailed technical implementation (for the Ethernet network model) is given in section 3.

Another topic of research is a QoS architecture that allows the QoS guarantees prescribed by SLSs to be implemented, while also enabling flexible and scalable QoS adjustments following individual service demands (based on requests). The solution also aims for ease of management for all the players in the service chain. The general principles and basic options for such an architecture are reviewed. They are then worked out for the application signalling with policy pull method, following and extending the 3GPP's IP Multimedia Subsystem (IMS) approach. This approach is based on linking a network resource management platform (one per involved network) with the service platform (contacted by the user at session start). The network provider interprets the application signalling messages and knows the required resources for the session, which are then negotiated with the resource management platform. The resource management platform keeps a view of the available resources, receives resource requests, deduces the flow path that will be taken, checks for availability and finally grants or refuses the flow establishment by controlling the entry nodes. Resources are reserved after a request has been accepted and released after the session has finished. When multiple domains are involved, the platforms must also co-ordinate their reservations to ensure end-to-end QoS. The resource management itself can make use of pre-provisioned (and controlled) QoS pipes or alternatively request and reserve QoS by means of network signalling. The principles are illustrated by an end-to-end session set-up.
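The request, check, reserve and release cycle described above can be illustrated with a minimal sketch. It is not the MUSE or IMS design: the class name, link names and capacities are hypothetical, and the flow path is passed in by the caller rather than deduced by the platform.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceManager:
    """Toy resource management platform for a single network domain."""
    # Spare capacity per link in kbit/s (illustrative figures only).
    capacity: dict
    # Active reservations: session id -> (path, rate).
    reservations: dict = field(default_factory=dict)

    def request(self, session_id, path, rate_kbps):
        """Grant the flow only if every link on the path has spare capacity."""
        if any(self.capacity[link] < rate_kbps for link in path):
            return False  # refused: the entry node would keep the flow blocked
        for link in path:
            self.capacity[link] -= rate_kbps
        self.reservations[session_id] = (tuple(path), rate_kbps)
        return True

    def release(self, session_id):
        """Give the reserved capacity back once the session has finished."""
        path, rate_kbps = self.reservations.pop(session_id)
        for link in path:
            self.capacity[link] += rate_kbps


rm = ResourceManager({"an-agg": 10_000, "agg-en": 6_000})
first = rm.request("s1", ["an-agg", "agg-en"], 4_000)   # fits on both links
second = rm.request("s2", ["an-agg", "agg-en"], 4_000)  # agg-en is now too full
rm.release("s1")
third = rm.request("s2", ["an-agg", "agg-en"], 4_000)   # fits after the release
```

In a multi-domain set-up, one such platform per network would have to accept the request before the session is admitted.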

The QoS study also refers to DTF1.2 for a detailed explanation on traffic classes.

Authentication of the end-user can take place at multiple levels, namely by the NAP and/or the NSP, prior to access to the network being granted. After authentication, auto-configuration is the process by which the Customer Premises Equipment (CPE) autonomously obtains configuration information from the network and its service providers. End-users should not have to take part in the CPE configuration process, because residential users generally lack the technical expertise. Auto-configuration must provide all the information necessary to create layer 2 connections and layer 3 flows automatically. There are two main protocols for auto-configuration. The Point-to-Point Protocol (PPP) is currently well established but is not suited to local peer-peer connectivity, QoS or multicasting. The Dynamic Host Configuration Protocol (DHCP) is emerging for new applications and has the advantage of decoupling the data and control planes, but lacks some inherent features and established architectures of PPP.

Both authentication and auto-configuration must work in a multi-provider and multi-service environment. There must be an interaction between the different Authentication, Authorisation & Accounting (AAA) platforms and the auto-configuration servers in order to correlate the authentication records and the associated IP addresses. A suitable AAA architecture is needed to achieve this goal, aiming at feature parity of DHCP-based auto-configuration with current PPP-based auto-configuration. Useful mechanisms in the solutions include 802.1x authentication, DHCP options to be added at the CPE and DHCP relay, and a RADIUS (Remote Authentication Dial In User Service) client included in DHCP servers. A review of several tracks is given, and in particular a solution based on a single-step approach.

Finally, the link is made between AAA architecture and IMS architecture, where possible IMS model adaptations are listed.

Ethernet Network Model

The first model is based on layer 2 connectivity from the RGW (or user's terminal in case the RGW is bridged) to the Edge Node (EN). The Access Node (AN) performs connectivity, subscriber management, accounting and security features. The AN is an (enhanced) Ethernet switch. The aggregation network carries traffic between ANs and ENs and is involved in multicast replication. It is composed of plain Ethernet switches. The EN is responsible for providing connectivity to the relevant ISP/NSP/ASP and for implementing accounting and security features. The EN must ensure Ethernet connectivity, at least at the aggregation network side, and further handles the traffic at Layer 3 (except for L2 wholesale).

Connectivity throughout the access and aggregation network is based on Ethernet principles.

Depending on the use of Virtual Local Area Network tags (VLAN tags), there are two possible connectivity modes. In the first option, called "Intelligent Bridging", the connectivity in the AN is based on the MAC (Medium Access Control) addresses, as in an ordinary Ethernet switch, with additional intelligence for security, traffic management and accounting. The VLANs in the aggregation network are used to further separate the aggregated traffic from the different ANs. A typical use is to allocate one VLAN per AN-EN pair. In the second option, the connectivity at the AN is no longer based purely on MAC addresses but on VLAN-IDs, namely by associating one (or more) individual 802.1Q VLAN-IDs with every end-user (i.e. with every line aggregated in the AN). This is called "Cross-connecting", and it uses VLAN stacking in the aggregation network to overcome the scalability problem of a single 802.1Q VLAN tag. Both options have their pros and cons, and the intelligent bridging mode has been selected for residential users for its lower complexity and its compatibility with existing edge nodes. Business users are a special case, requiring another sort of cross-connecting, this time based on S-VLANs. Residential and business users can be combined in the same network (and on the same platform if required).
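The scalability limit of a single tag follows from the 12-bit VLAN-ID field of 802.1Q, of which the values 0 and 4095 are reserved. The sketch below works out the arithmetic and shows one possible mapping for stacked tags. The mapping (outer tag per AN, inner tag per line) and the function name are assumptions made for illustration, not the allocation scheme of the deliverable.

```python
VID_BITS = 12                    # width of the 802.1Q VLAN-ID field
USABLE_VIDS = 2 ** VID_BITS - 2  # VID 0 and VID 4095 are reserved


def stacked_tag(an_index, line_index):
    """Map (AN, line) to an (outer, inner) VID pair, both counted from VID 1."""
    for index in (an_index, line_index):
        if not 0 <= index < USABLE_VIDS:
            raise ValueError("index does not fit in one VLAN-ID field")
    return an_index + 1, line_index + 1


single_tag_lines = USABLE_VIDS             # one 802.1Q tag per line: 4094
stacked_lines = USABLE_VIDS * USABLE_VIDS  # two stacked tags: 16760836
```

With a single tag, the whole aggregation network could address at most 4094 lines, which is why the cross-connect mode relies on stacking.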

The data plane requirements for allowing basic connectivity are analysed in detail for both modes. Each node must contain connectivity parameters that must be set and, in several cases, also updated. The IP subnetting of the end-users in the NAP can be chosen from different schemes but will have an impact on the requirements for non-PPP based peer-peer communication. In line with the decision to switch peer-peer traffic at Layer 3, in the Ethernet network model all peer-peer traffic must be forced to the edge nodes to be switched. This can be achieved by a customer separation method based on MAC forced forwarding, or based on ARP filtering in the AN and an ARP agent in the EN. The basic use of VLANs can be extended with additional meanings, which are listed for completeness. Using VLANs for business users can lead to scalability problems, which can be alleviated by introducing MPLS in the aggregation network. Note that MPLS will be compatible with residential traffic. Multicasting is another connectivity mode that is analysed in detail. An efficient solution is presented based on IGMP as the established multicasting protocol for flow replication and multicast tree building.
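The idea behind MAC forced forwarding can be sketched as follows: the AN answers every subscriber ARP request with the MAC address of the edge node, and only lets upstream frames through that are addressed to that edge node, so that peer-peer traffic is always switched at the EN. This is a simplified illustration under stated assumptions; the frame representation, the function name and the MAC address are hypothetical, and details such as DHCP handling are left out.

```python
EN_MAC = "00:00:5e:00:53:01"  # hypothetical MAC address of the edge node


def handle_upstream(frame):
    """Decide what the AN does with a frame received from a subscriber line."""
    if frame["type"] == "arp-request":
        # Whatever IP address is asked for, answer with the EN's MAC address.
        return {"action": "reply", "mac": EN_MAC}
    if frame["dst_mac"] == EN_MAC:
        return {"action": "forward"}
    # Frames addressed directly to another subscriber never leave the AN.
    return {"action": "drop"}


arp = handle_upstream({"type": "arp-request", "target_ip": "192.0.2.77"})
to_edge = handle_upstream({"type": "ip", "dst_mac": EN_MAC})
to_peer = handle_upstream({"type": "ip", "dst_mac": "00:00:5e:00:53:99"})
```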

On top of providing basic connectivity, the network should also provide a level of security against malicious users or malfunctions. The different types of security threats are classified for convenience, and an overview has been established of specific threats together with appropriate security mechanisms, both for the IPoPPPoE traffic and for the IPoE traffic.

Finally, the basic concepts of the Ethernet model are recapitulated in a short summary.

The one-step configuration and AAA process is further elaborated for the specific case of the Ethernet network model. Some concepts of the IMS-based architecture are also expressed in terms of the Ethernet network model.

IP Network Model

The IP awareness and functions can be brought closer to the end-user by introducing aggregation nodes acting as layer 3 routers or forwarders in the aggregation network. Traffic flows can then be processed at IP level for QoS, security (there is now a clear separation at layer 2 between the aggregated users and the rest of the aggregation network) and multicast criteria. Another advantage is that peer-peer traffic can be routed at L3 at that node, which is more efficient than via the EN as in the previous model. Note that this node can be based on IPv4, on IPv6, or on a combination of both.

IPoE traffic is forwarded according to the service policies in the aggregation node, but the traffic handling can be different for IPoPPPoE. Therefore the different use cases for IPoE and IPoPPPoE traffic have been defined and analysed (for IPv4). A single AN can then freely combine an IPoE use case with an IPoPPPoE use case.

In L2 forwarding of PPP, IPoPPPoE traffic is basically forwarded at the AN at layer 2 and sent transparently across the aggregation network to the EN (BRAS (Broadband Remote Access Server)). The aggregation network must be layer 2. This traffic is not dealt with at layer 3 in the AN.

A variant to this is to apply layer 2 termination in the AN for this traffic by including a PPPoE relay functionality in the AN, forwarding based on PPPoE session IDs and using the AN as PPPoE originator (in upstream) and destination (downstream) instead of the end-user.

A different use case is to handle PPP completely in the AN, either by means of PPP termination and further routing at layer 3, or by means of L2TP tunneling to the EN (BRAS or L2TP tunneling switch). The aggregation network can be layer 2 or layer 3, or a mixture of both (e.g. Ethernet islands).

The handling of IPoE traffic can also be categorised in use cases. A lightweight approach is to perform Layer 2 termination at the AN without the need for routing protocols between AN and EN. In this case the AN acts more like an IP forwarder, linking IP sessions with IP service connections in the NAP (connectivity between AN and EN).

The AN can also be a full IP router. Care must be taken not to waste IP addresses (given the scarce IPv4 address space); therefore the AN must be able to put the aggregated users in the same subnet and allocate them the same default gateway IP address. Note that the first IP-aware node (seen from the user's side) can be located in the first aggregation node (e.g. a DSLAM (DSL Access Multiplexer) or fibre terminator), in the second one (e.g. a layer 3 hub DSLAM terminating small layer 2 remotes), or even in another one (e.g. a node in the CO aggregating multiple small-size DSLAMs).
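The address saving of a shared subnet can be made concrete with a short calculation. The sketch compares one subnet per user with a single shared subnet and a common default gateway. The figure of 250 users, the /30 per-user subnets and the documentation prefix 192.0.2.0 are illustrative assumptions, not values from the deliverable.

```python
import ipaddress


def addresses_with_per_user_subnets(users, prefixlen=30):
    """Each user gets its own subnet (a /30 consumes 4 addresses per user)."""
    return users * 2 ** (32 - prefixlen)


def shared_subnet_prefixlen(users):
    """Smallest subnet holding all users plus gateway, network and broadcast."""
    needed = users + 3
    host_bits = (needed - 1).bit_length()
    return 32 - host_bits


users = 250
shared = ipaddress.ip_network(f"192.0.2.0/{shared_subnet_prefixlen(users)}")
gateway = next(shared.hosts())   # one default gateway shared by every user
per_user_cost = addresses_with_per_user_subnets(users)  # 1000 addresses
shared_cost = shared.num_addresses                      # 256 addresses
```

Under these assumptions the shared subnet needs roughly a quarter of the addresses consumed by per-user subnets.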

In the case of application wholesale (single NSP, NAP controlling the IP address allocation in its network), the AN will not require dynamic routing exchanges with the ENs. However in the case of IP wholesale to multiple third-party NSPs, the routing requirements become more complex and must be investigated. This is for further study.

The introduction of IPv6 presents opportunities and inevitably also new technical challenges. The first and most basic aspect to be analysed for IPv6 is the addressing. It is proposed to include a NAP topology field in the IPv6 addressing structure. An allocation efficiency study quantifies the (in)efficiency of the static addressing method. Two types of static addressing schemes are presented, one based on introducing a strict NAP topological hierarchy in the prefix (from the ISP to the subscriber), another based on NAP-proprietary addressing of its different nodes. Dynamic addressing schemes are also analysed, using dynamic prefix delegation following certain policies and dynamicity. Finally, a possible method is shown to integrate the dynamic prefix delegation mechanism with static addressing schemes as defined above.
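A static scheme with a NAP topology field can be sketched as follows. The field widths (8 bits for the AN, 8 bits for the line), the documentation prefix 2001:db8::/32 and the function names are hypothetical choices for illustration. The efficiency metric shown is the HD ratio of RFC 3194 (reference [24]); that the allocation efficiency study uses exactly this metric is an assumption.

```python
import ipaddress
import math


def subscriber_prefix(isp_prefix, an_id, line_id, an_bits=8, line_bits=8):
    """Append a topology field (AN id, then line id) to the ISP prefix."""
    base = ipaddress.ip_network(isp_prefix)
    if not (0 <= an_id < 2 ** an_bits and 0 <= line_id < 2 ** line_bits):
        raise ValueError("identifier does not fit in its field")
    prefixlen = base.prefixlen + an_bits + line_bits
    topology = (an_id << line_bits) | line_id
    address = int(base.network_address) | (topology << (128 - prefixlen))
    return ipaddress.IPv6Network(f"{ipaddress.IPv6Address(address)}/{prefixlen}")


def hd_ratio(allocated, field_bits):
    """HD ratio: log(allocated objects) / log(maximum allocatable objects)."""
    return math.log(allocated) / (field_bits * math.log(2))


prefix = subscriber_prefix("2001:db8::/32", an_id=5, line_id=17)
ratio = hd_ratio(allocated=2 ** 12, field_bits=16)  # 4096 of 65536 prefixes used
```

A strictly hierarchical field wastes the prefixes of every AN that is not fully populated, which is the kind of inefficiency such a ratio is meant to quantify.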

What's next?

This deliverable reflects the status of the network architecture studies and solutions after the first year of the project. They have been disseminated to the other subprojects and task forces. The basic mechanisms and features for a multi-service, multi-provider, multi-protocol access architecture have been worked out, and the Ethernet network model is stable and well elaborated. However, some models and aspects still require further consolidation.

In the second year, attention will be paid both to the continuation of the current models, especially the IP network model (routing requirements, IPv6), the AAA architecture, the IMS architecture, the interaction with the CPE and the definition of open interfaces, and to extending the features with e.g. nomadism and service enablers.

A final overview of the MUSE architecture will be presented in DA2.4 at the end of phase I of MUSE.

1 INTRODUCTION

1.1 Scope of the deliverable

1.1.1 Context

Broadband access has become widespread over the past few years, spurred among other things by the vast deployment of DSL networks. But traditional access networks have been built to deliver primarily high-speed Internet access (HSI), and are now facing the demand (both from consumers and from providers) for support of triple play services. The new applications affect not only bandwidth: more fundamentally, they introduce new connectivity modes and service-specific expectations in terms of quality of experience, and they open new roles for the different players (providers). It is clear that a service-centric access and edge network architecture, both in terms of design and operation, is the key to supporting this in an efficient and cost-effective manner.

This document addresses the changes needed in the access and aggregation network from an architectural and functional point of view. It forms a reference within MUSE for a fully evolved access and aggregation network. Of course in real-world situations a phased approach can be taken to the introduction of these evolutions.

Considering the present situation, current broadband access networks share several key characteristics. First, they typically use ATM switching as aggregation technology. One (or multiple) ATM PVC provides Layer 2 connectivity between the subscriber modem and the Broadband Remote Access Server (BRAS). The PPP protocol is almost exclusively used (for residential users). The data format is IPoPPPoA or IPoPPPoEoA. Secondly, the subscriber and service intelligence is centralized in the network, e.g. in BRASes where PPP is terminated for subscriber management. Thirdly, the main service offered is HSI (with telephony services being out-of-band), with some limited cases of video distribution.

This results in most cases in all subscribers being connected with best effort connections to a single or one of several Internet Service Providers (ISPs) via a single edge in the aggregation network.

What would a more user-centric interpretation of broadband access look like? As it is all about user experience, the user would want to

- select a multimedia application from a triple-play service bundle with associated quality (multi-service),

- that is offered by an appropriate provider (multi-provider),

- that is ubiquitously available (reaching most users and allowing nomadism),

- with a specific subscription (accounting, new business roles for providers).

The operator is responsible for providing secure network connections between end-user terminals in the home network and edge nodes (or other end-users) in a multi-provider environment. Just keeping pace with the associated bandwidth increase will not be sufficient. From an operator's perspective, several drivers are encouraging network evolution:

- Bandwidth is becoming a commodity, and the resulting price erosion has advanced the introduction of new services in broadband access. Content delivery and value added service enablers become vital for the access provider, who is facing the largest investments in the infrastructure. As the applications commonly use IP, this involves bringing IP awareness closer to the end-users.

- Fierce competition also puts heavy pressure on prices and consequently urges operators towards a CAPEX and OPEX optimized network design. For the inevitable bandwidth upgrade in the network, Ethernet technologies are presented to offer a cost advantage over ATM technologies. Furthermore, packet-based technologies allow a more straightforward way to deliver broadcast channles in a bandwidth-efficient (and hence cost-efficient) way by using multicasting techniques.

- The multiple services have their own requirements and characteristics. Supporting multiple services by means of multiple specialized edges has several advantages:

- It is a clean solution supporting evolution from existing high speed Internet networks to the delivery of multiple services by allowing the parallel and cost-efficient introduction of new services or the capacity upgrade of existing services.

- It allows traffic separation at the edge. High availability traffic (such as PSTN replacement VoIP) does not share nodes such as a BRAS with best-effort internet traffic. By separating traffic onto different nodes engineered to different standards, risk is diversified, and the node can be built for an optimal trade-off between functionality and complexity for the set of services it carries.

- Each Edge Node can enforce the security policy which is relevant to the set of services it carries. For example, a Voice Gateway will in general filter out everything that does not look like SIP or H.323 traffic.
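As an illustration of the per-edge security policy mentioned in the last point, the following minimal sketch shows how a Voice Gateway might admit only signalling traffic. It assumes classification by transport protocol and well-known destination port only (SIP on 5060/5061, H.323 call signalling on TCP 1720). The names Packet and voice_gateway_accepts are hypothetical and are not taken from this deliverable; a deployed gateway would also have to admit the associated media streams and would typically inspect more than the port.

```python
from dataclasses import dataclass

# Well-known signalling ports. Assumption: the gateway classifies on
# protocol and destination port only, which is a simplification.
SIP_PORTS = {5060, 5061}   # SIP (UDP/TCP) and SIP over TLS
H323_PORTS = {1720}        # H.323 call signalling over TCP


@dataclass(frozen=True)
class Packet:
    """Hypothetical, minimal view of a packet arriving at the edge node."""
    protocol: str   # "udp" or "tcp"
    dst_port: int


def voice_gateway_accepts(pkt: Packet) -> bool:
    """Return True only for traffic that looks like SIP or H.323 signalling."""
    if pkt.protocol in ("udp", "tcp") and pkt.dst_port in SIP_PORTS:
        return True
    if pkt.protocol == "tcp" and pkt.dst_port in H323_PORTS:
        return True
    # Default deny: everything else is filtered out at this edge.
    return False


if __name__ == "__main__":
    for pkt in (Packet("udp", 5060), Packet("tcp", 1720), Packet("tcp", 80)):
        print(pkt, "accepted" if voice_gateway_accepts(pkt) else "dropped")
```

The default-deny structure reflects the idea that each specialized edge only needs the policy relevant to the services it carries.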

The current broadband access networks need to evolve in order to meet these expectations and allow new or additional revenues for the different players (providers). Several functional service enablers (for QoS, accounting, security, auto-configuration) must be introduced, also taking into account their integration with the deployment of packet-based technologies (Ethernet, IPv4, IPv6). This is the scope of DA2.2.

It is important to compare this declared objective with the current situation (Jan 2005) in standardisation.

- ATM technology and ATM-based networks offering best effort IP connectivity to residential users are well standardised (DSL-Forum TR-25 [30], TR-42 [31]) and widely deployed. A recent standard, DSL-F TR-59 [33], has extended the scope to multiple services via a single edge. It describes an ATM-based network architecture with the following characteristics: single-edge (1 PVC between CPE and single Edge Node (BRAS)), primary support for DiffServ QoS enabled unicast IP services, no use of L2 QoS in access and aggregation network, QoS mechanisms at BRAS in downstream (hierarchical scheduling) and upstream (packet discard), focus on PPP (2 PPP sessions, one for HSI, one for new services).

- Ethernet is a well established technology readily used in enterprise networks and Metro Ethernet networks of carriers. Ethernet as a technology is quite extensively addressed in standardisation (IEEE). Ethernet-based Metro networks are also covered (MEF, ITU-T), but Ethernet-based access and aggregation networks for residential services appeared in 2004 as a new standardisation topic. One main on-going document is DSL-F WT-101 "Migration to Ethernet-based DSL aggregation" [29]. Its current network architecture scope covers Ethernet on DSLAM uplink, L2 QoS-awareness in the access and aggregation network, multicasting, single edge with second one for video.

It appears that a complete framework does not exist yet in standardisation for an access and edge network architecture that combines all of the following:

- multiple services

- multiple edges (application gateways or IP QoS enforcement points)

- multiple data formats (IPoPPPoE and IPoE), with corresponding authentication and autoconfiguration phases for DHCP-based services

- per-session QoS-awareness, with negotiation and enforcement

- efficient multicast replication

- IP awareness closer to the user (at some aggregation point)

- Ethernet-based

- Support of both residential and business users.

1.1.2 Scope

The scope of DA2.2 can be summarized by reviewing the evolutions and their drivers:

• Service-driven evolutions

- Higher requirements on bandwidth (especially for video).

- Support of DHCP as auto-configuration protocol for some applications (VoIP, video). As DHCP has no authentication phase like PPP has, extra mechanisms must be foreseen in the AAA architecture for authentication and user record correlation.

- "Voice" (voice over IP and videotelephony over IP) and "Video" (streaming) require service-specific QoS in terms of loss, jitter, and delay. The QoS architecture must guarantee per-session QoS by negotiation and enforcement steps.

- Separation of the multiple services in the network. A cost-efficient way is to foresee (specialized) multiple edges in the aggregation network, e.g. a BRAS for HSI and an edge router for video services.

- Some applications rely on new connectivity modes for efficient data delivery (e.g. gaming via peer-to-peer, tele-teaching via multicasting). The access and aggregation network must support these connectivity modes in a secure way.

• User-driven evolutions

- Nomadism, the ability to retrieve a service environment at different places of the network. Note that this aspect has not yet been elaborated much in this deliverable.

- Combining multiple simultaneous services, each possibly from a different service provider. Increasing the "plug-and-play" feel by shifting the configuration burden from the user to the provider. This has direct impact on the control (e.g. auto-configuration) and management (e.g. installation of a firewall) of the customer premises equipment.

• Technology-driven evolutions

- The introduction of IPv6 brings new opportunities (e.g. addressing schemes, stateless auto-configuration). They must be supported by the network and coexist with the installed base of IPv4 equipment and services.

• Cost-driven evolutions

- Ethernet as low-cost technology for BW upgrades.

- Bandwidth-efficient support of multicast streams in the network.

• Business-driven evolutions

- New roles for the players (e.g. emergence of application wholesale offered by the NAP). The different business models imply differences in where PPP is terminated and how IP addresses are allocated.
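The cost argument for bandwidth-efficient multicast in the list above can be made concrete with a small calculation. The sketch below compares the load on one aggregation link when every viewer receives a separate unicast copy with the load when each watched channel is carried once and replicated downstream. The function names, the channel names and the figures (4 Mbit/s per stream, the viewer counts) are purely illustrative assumptions, not values from this deliverable.

```python
from typing import Dict


def unicast_load_mbps(viewers_per_channel: Dict[str, int], stream_mbps: float) -> float:
    """Link load when every viewer receives a separate copy of the stream."""
    return sum(viewers_per_channel.values()) * stream_mbps


def multicast_load_mbps(viewers_per_channel: Dict[str, int], stream_mbps: float) -> float:
    """Link load when each channel with at least one viewer is carried once."""
    active_channels = sum(1 for n in viewers_per_channel.values() if n > 0)
    return active_channels * stream_mbps


if __name__ == "__main__":
    # Hypothetical audience behind one aggregation link.
    viewers = {"channel-1": 120, "channel-2": 45, "channel-3": 0}
    print(unicast_load_mbps(viewers, 4.0))    # 660.0
    print(multicast_load_mbps(viewers, 4.0))  # 8.0
```

Under these assumptions the multicast load depends only on the number of distinct channels being watched, not on the number of viewers.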

1.1.3 Content

The document first describes the generic functional mechanisms in Chapter 2. It summarises the topics of the architecture that are independent of the transport layer: Reference models, General connectivity, Model of residential gateway, QoS and AAA.

These principles are then mapped on two concrete network models, which propose two different levels of IP awareness for the connectivity handling in the network.

The first is based on a layer 2 (Ethernet) connectivity between the home and the aggregation edge and is described in Chapter 3. The current situation is also based on layer 2 connectivity (via ATM) but the proposed network model has fundamental differences; it is packet-based and incorporates functional mechanisms (QoS, security, AAA, multicast and peer-peer connectivity) for meeting the expectations described above.

The second network model provides layer 3 (IP) connectivity in the aggregation network, as described in Chapter 4. Having IP awareness closer to the end-users opens possibilities for better scalability (layer 2 separation between access and aggregation), more efficient forwarding (local peer-peer), and additional IP features (QoS, security).

Finally, Chapter 5 reviews the conclusions that can be drawn.

1.2 Positioning of DA2.2

This document is the first deliverable of the architecture work within MUSE. It is the result of the first year’s efforts according to the current planning. As shown in Figure 1-1, DA2.2 is part of a larger suite of reports and deliverables that will eventually lead to the MUSE architecture deliverable DA2.4 at the end of phase 1.

The architecture work also relies on input from other work packages, especially WPA1 (business models and services) and WPA3 (techno-economics). The result also relies to a great extent on interaction with the other sub-projects and task forces.

[Figure: timeline (axis: time) of the network architecture steps 1 to 3, showing milestones MA2.3, MA2.5 and MA2.7 and deliverables DA2.1, DA2.2 and DA2.4, together with research on individual issues and inputs from WPA.1, WPA.3, the SPs and the TF.]

Figure 1-1. Relation between the different milestones and deliverables for the MUSE Network architecture.

The architectural work has progressed stepwise, with a different scope for each of the milestones MA2.3, MA2.5 and MA2.7 and for deliverable DA2.2.

Milestone MA2.3 contains a detailed set of considerations for multi-service network architectures, as well as a reference terminology. The considerations cover a wide range of topics on Data plane, QoS architecture, Auto-configuration, Multicasting, Physical Layer infrastructures, Service Enablers and Security.

Two basic network models are introduced in MA2.3:

- Ethernet-based network model, with access node (AN) and the aggregation network being L2.

- IP-based network model, with access node (AN) being IPv4 / IPv6 and aggregation network either L2 or L3.

Milestone MA2.5 focuses on the Ethernet-based network model, building on the results of MA2.3. Since MA2.3 does not offer a complete solution, and does not present complete alignment between the separate topics, MA2.5 further works out issues regarding the Ethernet-based network model, and aligns different topics. It also investigates generic issues such as the relationship with the residential gateway and business models.

Milestone MA2.7 refines the work further. Solutions for general aspects are presented. The Ethernet model and the IP model are also further developed. The scope for the IP model in MA2.7 is general connectivity, while other IP related topics are planned for milestones next year.

The DA2.2 deliverable is essentially a condensed version of MA2.7. The results from the previous milestones have been compiled and summarised into three distinct parts: General aspects, Ethernet solutions and IP solutions.

1.3 Focus on Multi-service

MUSE focuses on access to multiple services. This means that

- a user can get multiple services, each service with an associated CoS

- a customer can receive L3 services (e.g. Internet access or IP VPN service, see [14]) and L2 services (e.g. L2VPN or PWE3 type services)

- a customer can simultaneously receive multiple services over the same physical access line (service multiplexing).

- a user can be connected to one or to multiple edge nodes of the NAP, depending on the considered services

- a user can be connected to one or multiple NSPs (and hence can receive multiple IP addresses), depending on the considered services

- a user can be nomadic, connecting at different entry points to the NAP and retrieving his/her profile and connectivity.

A distinction is made between "users" and "customers". Customers buy a service from a service provider, while users use a service. In the residential case usually “user” equals “customer”. However, in the business customer case the customer is usually a company and a user is an employee of the company located e.g. at a specific location.
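The multi-service relationships listed above can be sketched as a small data model: one physical access line carries several service bindings, each with its own CoS, edge node and NSP. All class, field and node names below are hypothetical and chosen only for illustration; they are not defined in this deliverable.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class ServiceBinding:
    """One service delivered to a user (all names are illustrative)."""
    service: str     # e.g. "HSI", "VoIP", "video"
    cos: str         # class of service associated with the service
    edge_node: str   # NAP edge node that serves this service
    nsp: str         # network service provider behind the edge


@dataclass
class AccessLine:
    """A physical access line multiplexing several services."""
    line_id: str
    bindings: List[ServiceBinding] = field(default_factory=list)

    def edge_nodes(self) -> Set[str]:
        """Edge nodes the line is connected to (one or several)."""
        return {b.edge_node for b in self.bindings}

    def nsps(self) -> Set[str]:
        """NSPs reached over this line; each may allocate its own IP address."""
        return {b.nsp for b in self.bindings}


if __name__ == "__main__":
    line = AccessLine("line-001", [
        ServiceBinding("HSI", "best-effort", "bras-1", "nsp-a"),
        ServiceBinding("VoIP", "real-time", "voice-gw-1", "nsp-b"),
        ServiceBinding("video", "streaming", "video-edge-1", "nsp-b"),
    ])
    print(sorted(line.edge_nodes()))
    print(sorted(line.nsps()))
```

Keeping the edge node and the NSP as attributes of each binding, rather than of the line, mirrors the statement that connectivity depends on the considered service.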

2 GENERIC ASPECTS

2.1 Positioning of the Access and Aggregation network

2.1.1 Terminology and logical model

The following figure illustrates a high-level logical model where the key elements and logical networks have been defined with the DSL-Forum TR-058 terminology in mind. TR-058 is a good starting framework for a MUSE reference architecture.

However, some modifications are necessary in order to fully reflect the multi-service architecture of MUSE. Hence a change of the definitions might be necessary once business and service roles evolve together with the architecture.

Note that the roles of Packager, Loop Provider and Connectivity Provider are not mapped onto networks or elements in the architecture.

[Figure: reference network. Elements: End User Terminal, Residential Gateway, Remote Unit, Access Node, Access Edge Node, Service Edge Node. Networks: CPN, First Mile, Aggregation Network, Regional Network, Service Network (NSP, ISP, ASP 1). Business roles: NAP, RNP, and NSP/ISP/ASP. One span of the chain is labelled "Access Network in MUSE".]

Figure 2-1. Reference Network based on the DSL-Forum reference service provider interconnection model.

The following high-level definitions of each of the logical networks, elements and business roles are valid for the architecture.