Indexing reliability for condition survey data


Abstract

This paper discusses the application of existing methods for measuring reliability to collection condition survey data. The essential requirement of reliability is understood by conservators but has never been quantified. The relatively recent introduction of computer methods that calculate reliability is mentioned as a significant step towards introducing the practice of ensuring reliability during pilot studies. The paper discusses different methods of quantification, and their qualities, as well as the aims of assessing reliability for condition surveys. The most satisfactory methods, and choosing an appropriate level of reliability for conservation applications, are suggested. However, no single number is offered as a standard. It is recommended that more than one index should be used. The paper also describes techniques to use indices to determine causes of disagreement, so reliability can be increased.

Key words: Condition Survey, Reliability, Index, Data, Measurement

The Conservator volume 30 2007

Joel Taylor and David Watkinson

Indexing Reliability for Condition Survey Data

Introduction

Collection condition surveys involve evaluating the condition of large numbers of museum objects that can vary in a number of ways. These assessments are more complicated than they might seem, as many different factors contribute to an object’s condition. The term ‘Condition’, like ‘Health’, can be used in a variety of different ways, which vary according to context. Condition can incorporate a wide range of issues, including stability, need of treatment, utility, completeness, evidence of past damage and vulnerability. These evaluations usually result in the objects being assigned an overall score or grade on a scale of condition. However, the way in which people interpret definitions and information can vary, leading to different approaches to object assessment, even with guidance. As a result, differences of opinion can arise, and it has been demonstrated that one cannot assume condition data to be reliable (Taylor & Stevenson, 1999).

A reliability index is a method for giving an indication of the reliability of the data. It uses a formula to provide a numerical value that expresses the agreement between different assessments of the same phenomena. Measuring reliability is a process often carried out in fields that use semi-quantitative data, such as content analysis, marketing and social science surveys (Neuendorf, 2002). The techniques are used to check and improve the reliability of data on a variety of phenomena.

“A reliable procedure should yield the same results from the same set of phenomena regardless of the circumstances of application” (Krippendorff, 1980, p.129), such as the surveyors involved, the survey form, the working conditions, the sample chosen and the number of surveyors. Reliability is the extent to which independent surveyors evaluate a characteristic of an object or population, such as condition, and reach the same conclusions. It is a function of the precision of the category definitions, the ambiguity of objects being assessed, and the surveyor’s judgement. How these factors can be analysed separately is discussed later. It is important to recognise that reliability is not a measure of the validity of judgements, although its influence on this is strong, as it determines how much people agree.

Existing conservation practice does not currently include measurement of reliability, so these differences of opinion can go undetected. If significant decisions on collection preservation are undermined by the absence of reliability, the extent to which survey data can meet the needs of the conservator needs to be understood and verified. The first step in determining reliability is its expression. Methods to measure reliability, such as the indices that will be discussed here, are not well known and are difficult to carry out by hand. Relatively recent developments have allowed these tasks to be carried out by computer and equip the conservator with a way to assess data reliability. This paper aims to introduce some methods and the key issues that surround them, and to refer to computer software that can make reliability measurement suitable for the practising conservator. The opportunity to measure and improve reliability of condition data has benefits for any institution that carries out such activities. These methods can now be applied to condition surveying practice.

There is seldom perfect agreement in semi-quantitative data collection, but equipping the conservator with the tools to measure reliability means that condition surveying data can be influenced in a number of ways. Reliability indices can be used to:

• express reliability (in various ways with different indices)
• determine the effectiveness of data to aid judgements about the collection
• develop an idea of what level of reliability should be achieved
• diagnose causes of variation to assess and reduce disagreement
• monitor improvements in reliability of data
• assist in the training of conservators
• determine effectiveness of survey categories for future use
• allow more surveyors to be used with greater confidence, if reliability can be verified as high

This article summarises ways of measuring reliability and some software tools that the practising conservator can use. By examining the qualities of different indices, their application to condition surveys can be understood.

Applying reliability indices to condition surveys

The issue of reliability in condition surveying has been recognised (Keene, 1991; Newey et al., 1993; Taylor, 1996; Taylor & Stevenson, 1999), though its expression has never been addressed. Providing a level of certainty, or uncertainty, for the survey data allows a conservator to allocate an appropriate amount of significance to that information, and provides the opportunity to reduce that ambiguity. Recommendations that a condition survey should be carried out by more than one person mean that conservators have the opportunity to predict the reliability of the survey results, by comparing the decisions reached by each surveyor. Reliability indices can cope with any number of surveyors but need the same objects to be surveyed at least twice, since some comparison of assessments for the same objects is required.

Statistical methods used to determine representative samples of objects to be surveyed, and confidence levels for these samples, have been developed for use during pilot studies (Keene and Orton, 1992; Kingsley and Payton, 1994; Orton, 2000). This would be an appropriate time to assess the reliability of the data that is being collected. Since pilot studies already exist, measuring reliability could easily be integrated into the condition survey process.

Assessing reliability of condition data

Indices are central to reliability measurement. An index is a scale derived from a set of observations to allow comparison. Reliability indices are designed to express the agreement between different assessments of the same thing. They are expressed as a numerical value between 0.00 and 1.00 or between -1.00 and +1.00, depending on whether chance agreement is taken into account. There are various index methods for measuring reliability, which have different qualities. These indices, as well as chance agreement, are explained and discussed below.

It is not only how often surveyors agree that is of importance to condition surveys, but also the degree of their (dis)agreement. For example, two surveyors scoring object condition grades ‘1’ and ‘2’ respectively have a closer agreement than two people scoring ‘1’ and ‘4’. Because of this, measuring the reliability of condition scores partly depends on the metric used – the mathematical function based on distances. Three types of metric useful to condition surveys are:

• nominal, where there is no weighting or hierarchy between categories, such as ‘tick one box’ or ‘yes or no’;
• ordinal, where there is an informal hierarchy, such as a league table or a condition score;
• interval, where values are evenly distributed across a scale, such as the Celsius and Fahrenheit scales.
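The practical difference between these metrics can be sketched as distance functions. The short Python example below is only an illustration: the function names `nominal_distance` and `interval_distance` are assumptions introduced here and do not come from any software mentioned in this paper. An ordinal distance, at least in Krippendorff's formulation, depends on how often each grade is used, so it is left out of this sketch.

```python
def nominal_distance(a, b):
    """Nominal metric: two scores either match (0) or do not (1)."""
    return 0 if a == b else 1


def interval_distance(a, b):
    """Interval metric: squared difference between evenly spaced values."""
    return (a - b) ** 2


# Grades '1' and '2' compared with grades '1' and '4'
print(nominal_distance(1, 2), nominal_distance(1, 4))    # 1 1
print(interval_distance(1, 2), interval_distance(1, 4))  # 1 9
```

Under the nominal metric both pairs count as the same amount of disagreement, whereas the interval metric treats ‘1’ and ‘4’ as much further apart than ‘1’ and ‘2’.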

The kind of index used to assess reliability will have to be based on the data that condition surveys create. Condition scores are ordinal, since there is clearly an order to the possible responses that creates a hierarchy (Suenson-Taylor et al., 1999). The intervals are not necessarily even but the scores are given as a scale, which means that the data often behaves as interval data in practice. Damage categories in condition surveys, such as ‘Biological Damage’, can (theoretically) be used for any object. However, category information such as the type or cause of damage basically denotes the presence or absence of that quality, subdivided into a set of different variables: binary, nominal data. The categories are all different variables. Fortunately, the possible responses for condition grades and categories are mutually exclusive, which makes the measurement of reliability much easier. Since the category data is nominal and condition data is ordinal, different indices are appropriate for each.

In other fields that use reliability measurement, such as content analysis, data is frequently referred to as either manifest or latent, a distinction that has parallels in conservation. Examples of manifest data are counting the number of times a speech includes the word ‘rights’, or symptoms of damage on an object, such as corrosion. Examples of latent data would be counting instances of humour in a novel, or causes of damage to an object. Ensuring reliability is more challenging for latent content than manifest, since the meaning present in latent content involves more human judgement and offers more opportunity for subjective error, whereas manifest content is more clerical (Potter and Levine-Donnerstein, 1999). Keene’s (2002) definition of condition includes both manifest content (such as appearance) and latent content (such as stability). This suggests that there may be some elements of condition that are easier to reproduce than others. As a result, it is arguably even more important to test for reliability. The information being sought by condition surveys means that latent content is often more informative when its subjective judgements are carried out in context, such as within a museum store in which a collection is housed, rather than during remote analysis in an office or laboratory.

Another important quality of condition data is that there is no ‘right answer’. One cannot measure the accuracy of a judgement about condition, only its consistency with other judgements.

Condition data is rich in meaning, and this can lead to differences in opinion and ambiguity in definitions. Appropriate scores will vary from survey to survey, depending on what the surveys are intended to achieve. By carrying out a test for reliability at the pilot study stage, the quality of the data collected in the survey itself can be expressed and improved. It is important to know that the kind of metric used is appropriate for the data, and to know which indices are appropriate to use. This will be discussed in the next section.

Kinds of index

Deciding on the index or indices most suitable for assessing the reliability of condition assessments is partly based on the kind of data produced and what is wanted from the survey. It is important to remember that different indices measure different things (Krippendorff, 2005a) and the results that they provide are just one perspective on the reliability of the survey data. If one index suggests data is reliable it does not necessarily follow that another will do the same. This means that it is often advisable to use more than one index and compare outcomes to gain a clearer perspective. Commonly used indices that will be discussed here are:

• Percentage agreement
• Scott’s Pi
• Cohen’s Kappa
• Krippendorff’s Alpha
• Perreault’s Pi
• Pearson’s r
• Spearman’s Rho


Standard methods for data analysis

Many indices exist for qualitative data analysis. Popping (1988) counted thirty-nine different indices for nominal data alone but pointed out that only a few are used widely. However, “there are few standards or guidelines available concerning how to properly calculate and report on inter-coder reliability [agreement between surveyors]” (Lombard et al., 2002, authors’ brackets). These calculations differ from internal-consistency reliability applications, such as Cronbach’s alpha, which is commonly used to measure consistency. Internal consistency calculations examine inter-item correlations which measure different scales, not the same scale for different people. As a result, other kinds of index calculations are required, which are sometimes novel and innovative.

Existing significance tests such as the chi-square test, which compares observed and expected distributions in order to test a hypothesis, are inappropriate. It would be possible to construct a test comparing a particular surveyor’s results against a theoretically ‘perfect’ set of scores. However, because the test only considers the overall distribution of scores awarded, rather than individual objects, it could easily give a misleadingly high level of reliability. Furthermore, since there is no ‘right answer’ for condition data, there is no perfect set of scores against which a surveyor’s results can be compared in a chi-square test. Condition survey data may also show significant agreement and disagreement in the same survey. For example, stable but separate objects, like broken pots, can divide opinion with little middle ground, so this problem is quite real.

Chance agreement

Chance agreement is an important issue in reliability assessment, as a conservator needs to know that reliability comes from conscious, intentional agreement, rather than chance agreement. Consequently data is often measured against agreement that would come about by chance instead of total disagreement. Reliability is often measured as the difference between perfect agreement and how much agreement would come from chance. The use of two categories can be compared to flipping a coin. There is a 50% chance of a coin landing on either side, and with enough flips, two people flipping coins are likely to ‘agree’ by coincidence 50% of the time. This is chance agreement, and would be useless for the purposes of condition surveys. With four categories, the probability of agreement by chance is reduced to 25%, since the possibility of coincidental agreement is only one in four. As a result, some indices account for chance agreement, based on the number of categories used. Chance agreement is usually expressed as 0 on a scale, with 1 being total agreement and –1 being total disagreement. Any value below 0 means that disagreement is occurring more frequently than would be expected by chance. If an index does not deal with chance, it uses 0 to express complete disagreement.

The possibility of agreement by chance can sometimes be affected by simply changing the number of categories. For example, changing the number of condition scores from five grades to four grades means that the possibility of agreement by chance is increased from 20% to 25%. At face value, there appears to be more agreement, but it does not make the data any more reliable. This problem is accounted for with some indices.

Chance agreement is calculated in different ways with different indices, and has a significant effect on the calculated value. What can be attributed to chance is debatable, and indices give the impression of being either ‘conservative’ or ‘liberal’ in terms of how easy it is to reach high levels of reliability. This will be examined further, with different examples of survey data.
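The arithmetic behind the coin-flip comparison can be written out as a short Python sketch. It assumes, as the coin example does, that every category is equally likely to be chosen; the indices discussed in this paper each estimate expected agreement in their own way, so this is an illustration rather than the formula of any one index. The function names are hypothetical.

```python
def uniform_chance_agreement(n_categories):
    """Chance that two surveyors pick the same category when every
    category is equally likely (the coin-flip assumption)."""
    return 1.0 / n_categories


def chance_corrected(observed, expected):
    """Rescale observed agreement so that chance = 0 and perfect = 1."""
    return (observed - expected) / (1.0 - expected)


for k in (2, 4, 5):
    print(k, uniform_chance_agreement(k))  # 0.5, 0.25 and 0.2 respectively

# 50% observed agreement with four equally likely grades
print(round(chance_corrected(0.50, uniform_chance_agreement(4)), 2))  # 0.33
```

Observed agreement equal to the chance level is rescaled to 0, and agreement below the chance level becomes negative.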

Reliability indices

A brief review of the indices being discussed will define the field further, and indicate the qualities that these indices possess. For reference, table 1 summarises some qualities of the indices discussed.

This table is a guide to help decide which index might be most appropriate for the data collected. It records whether an index can accommodate multiple surveyors in one calculation or calculates two at a time (less of a problem with computers, as they can calculate each pair and give an average); the metric of the data that the index measures; whether chance agreement is taken into consideration (although the methods by which this is carried out differ, and will be discussed later); the kind of reliability that is measured; and the numerical range in which the reliability value will fall. All of these issues are important in considering the choice of index and the way in which the value is used.

Definitions and descriptions of each of these indices can be found in the appendix. The descriptions discuss the way the calculations are performed and some of the assumptions involved in the calculation. They will be a helpful reference when comparing the different qualities of the indices.

Choosing a reliability index

In terms of deciding upon an index, there is no ‘best index’ or index that is accepted by everyone. Percentage agreement is the most commonly used index but it is frequently criticised and is considered to be popular only because it is easy to use.

Krippendorff’s alpha is a useful overall index, since it can operate for nominal and ordinal data. Because it accommodates multiple surveyors it is useful for reporting a single reliability coefficient, although this obscures the differences between pairs of surveyors that other indices pick up. For reporting the overall reliability, Krippendorff’s alpha is the most suitable. Contrasting this with Perreault’s pi can be informative, since the assumptions are different. During a pilot study, indices that accommodate two surveyors, such as Scott’s pi or Cohen’s kappa, would also be useful for diagnostic purposes, especially if the data is nominal. Category data would benefit from these two indices. Most indices are based on paired agreement – the agreement between two surveyors. Overall values are often the average of all of the paired agreements. It is recommended that more than one index is used to assess reliability (Lombard et al., 2002), especially if percentage agreement is chosen.

Defining an acceptable level of reliabilitySo what is an acceptable level of reliability? In fact, there is no one satisfactoryindex level. Ellis (1994) suggests a rule of thumb of 0.75-0.80. Frey et al. (2000)suggest 0.7. Krippendorff (1980) suggests cautious conclusions can be drawnwith reliability between 0.67 and 0.80, and condition survey data can beconsidered reliable above 0.80. Riffe et al. (1998) suggest a minimum of 0.70, andideally 0.80 or higher. However, none of them stipulate which index is used, andthis can make a very significant difference, and can render the target almostmeaningless. A satisfactory level depends on the index used.Banerjee et al. (1999) produced a sensible criteria for Cohen’s kappa, a popular

index for nominal data; >0.75 is excellent agreement beyond chance, 0.40–0.70fair to good agreement beyond chance and <0.40 is poor agreement, andKrippendorff (2004) suggests 0.70 or more with his alpha. However, using thesame level of reliability for different indices can be very misleading. Literature

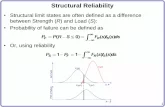

Index Multiple Metric Chance Correlation/ Rangesurveyors agreement agreement expressed

Percentage N Nominal N Agreement 0.00 – 1.00agreement

Scott’s Pi N Nominal Y Agreement -1.00 – +1.00

Cohen0appa Y Nominal Y Agreement -1.00 – +1.00

Krippendorff’s Y Nominal, Y Both -1.00 – +1.00Alpha Ordinal

Interval, Ratio

Perreault’s Pi N Nominal Y Agreement 0.00 – 1.00

Spearman’s Rho Y Ordinal N Correlation -1.00 – +1.00

Pearson’s r Y Interval N Correlation -1.00 – +1.00Table 1. Qualities of the different reliabilityindices discussed.

54 Taylor and Watkinson

The Conservator volume 30 2007

(e.g. Neuendorf, 2002; Lombard et. al., 2002) often suggests that the index scalesare comparable, because the scores are expressed in similar ways, but it is vitallyimportant to recognise that the indices measure different things. Some mayappear more conservative or liberal but they are not variations on the same scale.

Acceptable reliability for condition surveys

It is not the authors’ intention to prescribe an index level or develop a new standard, but a point at which survey data can be considered reliable enough for a condition survey should be suggested. For practical purposes, based on the recommendations above and recommendations from other fields, condition grade data should exceed 0.7 with Krippendorff’s alpha and 0.85 with Perreault’s pi for excellent agreement, and alpha should exceed 0.6 and pi exceed 0.7 for fair agreement. For binary category data, Cohen’s kappa levels should exceed 0.7 for excellent agreement, and exceed 0.5 for fair agreement.
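The suggested levels can be kept alongside pilot study results as a small lookup. The Python sketch below is a convenience illustration only: the dictionary name, the function name and the label ‘below fair’ are assumptions introduced here, and the thresholds are simply the values suggested in the paragraph above.

```python
# Suggested minimum values from the paragraph above: (excellent, fair).
SUGGESTED_LEVELS = {
    "krippendorff_alpha": (0.70, 0.60),  # condition grade data
    "perreault_pi": (0.85, 0.70),        # condition grade data
    "cohen_kappa": (0.70, 0.50),         # binary category data
}


def rate_agreement(index_name, value):
    """Return 'excellent', 'fair' or 'below fair' for a reliability value.

    The value must exceed a threshold to earn the label, so a value
    exactly on the threshold falls into the lower band.
    """
    excellent, fair = SUGGESTED_LEVELS[index_name]
    if value > excellent:
        return "excellent"
    if value > fair:
        return "fair"
    return "below fair"


print(rate_agreement("krippendorff_alpha", 0.77))  # excellent
print(rate_agreement("perreault_pi", 0.77))        # fair
print(rate_agreement("cohen_kappa", 0.33))         # below fair
```

The same numerical value earns a different label depending on the index, which is the reason a level should never be quoted without naming the index.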

Comparing index results for condition survey data

In order to demonstrate the application of reliability indices for assessing data from condition surveys, sample data sets are analysed below using standard methods.

The data are overall condition scores, an ordinal metric, so Krippendorff’s alpha is the most suitable index and will be referred to most frequently. A look at the index results for different data helps to illustrate indices in action and compare their qualities. Table 2 offers six data sets, based on two surveyors (Evan and Chan) assessing the same objects with condition grades ‘1’ to ‘4’. Only eight objects are shown in table 2, to illustrate the pattern of the data, but each calculation used 40 objects. Evan and Chan are hypothetical names used to indicate when two surveyors are assessing the same objects. The data comes from a variety of real and contrived sources.

The first four data sets have been contrived to illustrate the values that would be reached with certain kinds of data. For example, data set 3 is the correlation example discussed under Pearson’s r (see the discussion of Pearson’s r in the appendix). The calculations were made for forty ‘objects’, with the pattern of the first eight ‘objects’ repeated, except in sets 5 and 6, which are real condition data collected from actual condition surveys of the same objects. The data is mostly hypothetical in order to provide reference points about what kind of data will create high and low values for different indices. The last two data sets illustrate practical situations where reliability is harder to determine by simply looking at the data. Together, the data sets are scenarios that illustrate the differences between the indices, and the kinds of differences created by different data.

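Object number (first eight objects only):

| Data set | Surveyor | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Evan | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 |
| 1 | Chan | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 |
| 2 | Evan | 1 | 1 | 2 | 2 | 2 | 3 | 3 | 4 |
| 2 | Chan | 2 | 1 | 1 | 2 | 3 | 3 | 4 | 4 |
| 3 | Evan | 1 | 2 | 1 | 2 | 1 | 2 | 1 | 2 |
| 3 | Chan | 3 | 4 | 3 | 4 | 3 | 4 | 3 | 4 |
| 4 | Evan | 1 | 1 | 2 | 1 | 3 | 1 | 4 | 1 |
| 4 | Chan | 1 | 4 | 2 | 4 | 3 | 4 | 4 | 4 |
| 5 | Evan | 1 | 2 | 2 | 2 | 2 | 2 | 3 | 2 |
| 5 | Chan | 2 | 2 | 2 | 2 | 3 | 2 | 3 | 1 |
| 6 | Evan | 2 | 2 | 3 | 2 | 3 | 2 | 3 | 1 |
| 6 | Chan | 2 | 2 | 1 | 2 | 2 | 2 | 3 | 1 |

Table 2. Six data sets of two surveyors assessing the same objects. The data have different patterns in each set. The data represents overall condition scores from 1 to 4, where 1=good condition, 4=poor condition.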


• Set 1 is Evan changing scores by increments of four objects, and Chan changing scores constantly. There is an even distribution that spans from direct agreement to complete disagreement, similar to chance.
• Set 2 is a mixture of direct agreement and disagreement by one grade. Therefore there is only direct agreement 50% of the time, but disagreement is close.
• Set 3 shows the same ordering of objects (correlation), but always a two grade difference.
• Set 4 shows a mixture, like set 2, of direct agreement and disagreement, but disagreement is total (‘1’ and ‘4’) in this case.
• The final two sets are real data that have been included to provide context.
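The contrast in set 3 between correlation and agreement can be checked directly. The Python sketch below uses only the first eight objects of set 3 from table 2 and a plain textbook calculation of Pearson’s r; the function names are assumptions introduced for illustration and are not taken from any software discussed in this paper.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's product-moment correlation between two lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def percentage_agreement(x, y):
    """Proportion of objects given exactly the same grade."""
    return sum(a == b for a, b in zip(x, y)) / len(x)


# Data set 3, first eight objects
evan = [1, 2, 1, 2, 1, 2, 1, 2]
chan = [3, 4, 3, 4, 3, 4, 3, 4]

print(percentage_agreement(evan, chan))  # 0.0
print(round(pearson_r(evan, chan), 2))   # 1.0
```

The two surveyors never award the same grade, yet the correlation is perfect, because a correlative index only measures whether the scores rise and fall together.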

In table 3, the values of each data set have been calculated with all of the indices discussed earlier. This shows the extent to which the indices measure the same qualities. The differences vary with different patterns in data. Agreement that corresponds to chance shows similar results for all indices that account for chance within their calculation. Data set 3 never shows direct agreement but perfect correlation and shows striking differences between index values in table 4. This comparison also allows one to see that some indices tend to have consistently lower values than others, such as low values for Cohen’s kappa, and high values for Perreault’s pi. It should be remembered that only Krippendorff’s alpha and Spearman’s rho are designed for ordinal data, and that percentage agreement and Perreault’s pi operate between 0.00 and 1.00.

The differences evident in table 3 illustrate that the concept of reliability can be extremely complicated. It is essential to know the assumptions of each index to help explain counterintuitive results, and to use more than one index to help interpretation.

Because the even distribution of condition grades in data set 1 is similar to chance, it is calculated as zero by all the indices that include chance agreement. Only percentage agreement gives a value beyond 0.00 (since it does not account for chance): the expected 25% chance with four categories. The data would certainly be too unreliable for a condition survey. Data set 2, however, shows a high level of reliability for Krippendorff’s alpha, which was expected from the close scoring shown in table 2. The way the grades are distributed shows high correlation, so Spearman’s rho is also high. The values for the other indices are misleading, since the nominal metric measures direct agreement but does not take into account that on the occasions where the surveyors disagree, differences are small. This level of reliability, as illustrated with Krippendorff’s alpha, the most suitable index, would be acceptable for making judgements about collection condition.

Data sets 3 and 4, in which poor agreement was expected, showed negative values for Krippendorff’s alpha. In both cases, the level of disagreement is profound, and beyond coincidence (although possible). Data set 3 is purposefully constructed to represent perfect correlation with no direct agreement, as discussed earlier in the paper. It can be seen that Spearman’s rho gives a misleadingly high value, revealing the index to be unsuitable for evaluating reliability. All of the other indices, particularly Krippendorff’s alpha and Cohen’s kappa, provide low values for data that could not be effectively used for making decisions about a collection. Data set 4 contains some direct agreement and some profound disagreement. Krippendorff’s alpha shows that the data lacks reliability but the nominal indices Cohen’s kappa and Scott’s pi give comparatively high values, due to the direct agreement. The extent of disagreement is not accounted for, so the kappa and pi values are misleadingly high. Perreault’s pi has a range of 0.00 – 1.00, so the value provided is actually quite low. Spearman’s rho reveals the anti-correlation evident, illustrating that any reliability must be due to direct agreement – an occasion when a correlative index can be useful to a condition survey, albeit to analyse other indices and situations rather than to directly measure reliability.

| Data set | Percentage agreement | Scott’s pi | Cohen’s kappa | Krippendorff’s alpha (ordinal) | Perreault’s pi | Spearman’s rho | Pearson’s r |
|---|---|---|---|---|---|---|---|
| 1 | 0.25 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 2 | 0.50 | 0.33 | 0.33 | 0.77 | 0.58 | 0.81 | 0.81 |
| 3 | 0.00 | -0.33 | 0.00 | -0.58 | 0.00 | 1.00 | 1.00 |
| 4 | 0.50 | 0.27 | 0.39 | -0.27 | 0.58 | -0.19 | 0.05 |
| 5 | 0.60 | 0.34 | -0.03 | 0.74 | 0.86 | 0.44 | 0.46 |
| 6 | 0.80 | 0.70 | -0.11 | 0.43 | 0.68 | 0.72 | 0.75 |

Table 3. The reliability values, calculated for each index discussed, from the data in table 2.

1 PRAM has been developed by Skymeg Software and can be downloaded from their website (see suppliers). It calculates percentage agreement, Cohen’s kappa, Scott’s pi, Pearson’s r, Spearman’s rho, and other indices.

Data sets 5 and 6 come from conservators examining the same objects, so the data is closer to what one might expect during a condition survey. However, they are small examples of data and the values do not necessarily indicate expected reliability. Because of their authenticity, the patterns in the data are less pronounced than the previous illustrative examples. Data set 5 shows a high value for Krippendorff’s alpha and Perreault’s pi, which indicates reliable data that can be used to make judgements about the collection’s condition. As mentioned before, alpha and pi are the most useful indices for these data. The data in table 3 shows that direct agreement is not particularly frequent for data set 5, but the objects are graded in a similar way and differences are small. The objects considered good by the first surveyor are generally considered good by the second surveyor, so reliability is clearly well beyond chance agreement. The lack of direct agreement leads to a very low value for Cohen’s kappa and a low value for Scott’s pi. Data set 6 shows more direct agreement but table 2 shows a large difference in the surveyors’ judgements for the third object. Amongst the 40 objects examined, this recurs. The value for Krippendorff’s alpha is low as a result. The percentage agreement and Scott’s pi values are high due to the frequent direct agreement and the little importance placed on extent of disagreement by those indices. The high, again misleading, percentage agreement for data set 6 indicates that it should only be used for a cursory indication of reliability, and ideally for nominal data. Data set 6 shows potential for reliability through high direct agreement, but steps to increase reliability should be taken during the pilot study, such as discussing condition grade definitions, before the conservators begin to survey the collection. Comparing data sets 5 and 6, one can see that the various indices differ in indicating which data set is the most reliable. In this case, Krippendorff’s alpha is the most suitable. Although data set 6 shows both higher correlation in Spearman’s rho and high agreement with percentage agreement and Scott’s pi, these indices (even combined) do not effectively characterise the reliability of this ordinal data. The fewer disagreements in data set 6 show larger differences, which indicate potentially misleading data. The data in set 5, although shown to be imperfect (particularly in terms of direct agreement), has the potential to be a reliable depiction of the condition of a collection.
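The behaviour of the nominal indices and of ordinal alpha on the contrived data can be reproduced with a short Python sketch. It is a minimal illustration under stated assumptions, not the method used by any of the software packages discussed: the eight-object patterns of sets 2 and 3 from table 2 are repeated five times to give forty objects, the formulas are the standard two-surveyor forms of each index, and `ordinal_alpha` handles only two surveyors with no missing scores. All function and variable names are hypothetical.

```python
from collections import Counter


def percentage_agreement(a, b):
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohen_kappa(a, b):
    """Expected agreement taken from each surveyor's own grade distribution."""
    ca, cb = Counter(a), Counter(b)
    expected = sum(ca[g] * cb[g] for g in ca) / len(a) ** 2
    return (percentage_agreement(a, b) - expected) / (1 - expected)


def scott_pi(a, b):
    """Expected agreement taken from the pooled grade distribution."""
    pooled = Counter(a) + Counter(b)
    total = 2 * len(a)
    expected = sum((count / total) ** 2 for count in pooled.values())
    return (percentage_agreement(a, b) - expected) / (1 - expected)


def ordinal_alpha(a, b):
    """Krippendorff's alpha with an ordinal distance, two surveyors only."""
    pooled = Counter(a) + Counter(b)   # how often each grade was awarded
    grades = sorted(pooled)
    n = sum(pooled.values())           # two scores per object

    def dist_sq(c, k):
        lo, hi = min(c, k), max(c, k)
        span = sum(pooled[g] for g in grades if lo <= g <= hi)
        return (span - (pooled[lo] + pooled[hi]) / 2) ** 2

    # Each object contributes the ordered pairs (x, y) and (y, x).
    observed = sum(2 * dist_sq(x, y) for x, y in zip(a, b)) / n
    expected = sum(pooled[c] * pooled[k] * dist_sq(c, k)
                   for c in grades for k in grades) / (n * (n - 1))
    return 1 - observed / expected     # undefined if only one grade is used


evan2, chan2 = [1, 1, 2, 2, 2, 3, 3, 4] * 5, [2, 1, 1, 2, 3, 3, 4, 4] * 5
evan3, chan3 = [1, 2, 1, 2, 1, 2, 1, 2] * 5, [3, 4, 3, 4, 3, 4, 3, 4] * 5

for e, c in ((evan2, chan2), (evan3, chan3)):
    print([round(f(e, c), 2) for f in
           (percentage_agreement, scott_pi, cohen_kappa, ordinal_alpha)])
# [0.5, 0.33, 0.33, 0.77]
# [0.0, -0.33, 0.0, -0.58]
```

Under these assumptions the rounded results agree with the entries for data sets 2 and 3 in table 3. The nominal indices treat every disagreement alike, whereas the ordinal distance in alpha rewards the close scoring in set 2 and penalises the two grade gap in set 3.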

Calculating reliability with software

Without computers, calculating reliability can often be complex and time-consuming. Fortunately, all the indices mentioned can be calculated by computer. Kang et al. (1993, authors’ brackets) point out that “the calculation of intercoder [between surveyor] agreement is essentially a two-stage process. The first stage involves constructing an agreement matrix which summarises the coding [recording] of results”. An agreement matrix is simply the agreement or disagreement of results plotted in a table format, which can also be useful for visualising reliability. Spreadsheets tend not to have the matrix building facility that is often the first stage of calculating reliability. The last few years have seen several software developments for calculating reliability indices. The Program for Reliability Assessment for Multiple coders [surveyors] (PRAM) was developed to calculate percentage agreement, Scott’s pi, Cohen’s kappa, Spearman’s rho, Pearson’s r and other indices from Microsoft Excel files. While it is downloadable free of charge from their website¹, at the time of writing it is an early version of the software.

PRAM can vary the number of surveyors, categories and grades included in the assessment. As a result, different relationships can be observed closely for diagnostic purposes, which is very useful for condition surveying. For indices that will only assess two surveyors at a time, such as Cohen’s kappa and Scott’s pi, each pairing of surveyors is given a value (e.g. surveyors Evan & Chan, Evan & Jenny and Chan & Jenny). Calculating reliability for each category, or each surveyor, is therefore possible. The programme does not require a statistics software platform, and can be downloaded for immediate use.

Krippendorff’s alpha is notoriously difficult to calculate by hand, although there are instructions available². Software is being developed by Krippendorff, but is still in production. Kang et al. (1993) published a computer ‘macro’ to calculate Krippendorff’s alpha for nominal, ordinal and interval metrics, Perreault’s pi, Cohen’s kappa and other indices. The macro uses text files, which can be converted from Excel, and requires the Statistical Analysis Software (SAS®) package as a platform. The authors have a copy of this macro. The Statistical Package for the Social Sciences (SPSS®) can calculate Cohen’s kappa, and can be used as a platform for a computer ‘macro’ designed to calculate agreement indices, including Krippendorff’s alpha (Lombard et al., 2003).

² Krippendorff’s homepage has a document on calculating alpha for nominal, ordinal, interval and ratio metrics with two or multiple surveyors at http://www.asc.upenn.edu/usr/krippendorff/dogs.html

Although in early stages, such developments make reliability testing in condition survey pilot studies a real possibility for conservation practice. Calculations that are time-consuming and often complicated can be carried out in moments. Feedback can then be given on the same day and, using index values as a guide, definitions can be refined to improve the data collection process. This marks a significant step forward in collection condition survey methodology, since reliable, reproducible survey data is vital for making informed decisions on collections care.
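The two-stage process quoted from Kang et al. (1993) can be sketched in a few lines. This is an illustration under our own assumptions, not a description of how PRAM or the SAS macro work internally: the function names and the example grades are hypothetical, and percentage agreement stands in for whichever index is derived in the second stage.

```python
def agreement_matrix(grades_a, grades_b, categories):
    """Stage one: cross-tabulate the grades. Rows are surveyor A, columns surveyor B."""
    position = {category: i for i, category in enumerate(categories)}
    matrix = [[0] * len(categories) for _ in categories]
    for a, b in zip(grades_a, grades_b):
        matrix[position[a]][position[b]] += 1
    return matrix


def percentage_agreement_from_matrix(matrix):
    """Stage two: derive an index from the matrix (here, the share on the diagonal)."""
    total = sum(sum(row) for row in matrix)
    agreed = sum(matrix[i][i] for i in range(len(matrix)))
    return agreed / total


# Hypothetical grades for six objects on a four-point scale.
surveyor_a = [1, 2, 2, 3, 4, 4]
surveyor_b = [1, 2, 3, 3, 4, 3]
matrix = agreement_matrix(surveyor_a, surveyor_b, categories=[1, 2, 3, 4])
for row in matrix:
    print(row)
print(percentage_agreement_from_matrix(matrix))
```

Counts off the diagonal show where the surveyors disagreed and by how many grades, which is why the matrix is useful for visualising reliability as well as for calculating it.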

Using a reliability index to diagnose problems in a pilot study

If reliability is thought to be unsatisfactory, ways to increase it need to be developed. This can only be achieved by knowing the reason for disagreement, and indices have an important role to play in this. Isolating elements of the survey can help determine the kinds of improvements that would be most effective. Reliability levels and methods of increasing reliability are the subject of forthcoming work, but the diagnosis of causes is an important part of that process which relies on the indices used.

Since pilot studies are used to iron out ambiguities and differences of opinion, reliability indices can become an invaluable tool for identifying problems and monitoring improvement. There are several ways in which reliability is threatened: the categories, the surveyors and the objects. Each can be isolated and examined for causes of disagreement. When looking at individual categories, practitioners may find it useful to use ordinal data for the categories and use Krippendorff’s alpha during a pilot study, since this is more informative for diagnosis and corresponds more closely with condition scores. Once index values have been calculated for categories, one can determine if any categories are problematic, and how they affect overall reliability. Definitions can then be discussed and changed.

PRAM software¹ shows the reliability of each pair of surveyors on a spreadsheet page, so a value is given for all pairings. Consequently, values that lower the average can be attributed to specific pairings. Looking at each surveyor as half of a pair will reveal if one surveyor consistently has a different opinion to others or if there is general disagreement. This affords the opportunity to check if any surveyors need further guidance.

Also, certain objects will be more difficult to assess than others. It is possible to measure reliability for object groups that comprise a certain quality or material, so definitions for certain kinds of material or object can be changed or refined. Subsets of the collection can be created for any group that may increase disagreement, such as broken objects. Since it is intended that the same grade is awarded to the same object, Krippendorff’s alpha is unsuitable but Perreault’s pi has been helpful with these calculations, despite being an index for nominal data. Specific definitions to deal with these objects can then be devised.
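The pairing-by-pairing diagnosis described above can be sketched as follows. The surveyor names follow the example used earlier in the paper, but the grades are hypothetical, the function names are ours, and percentage agreement is used only to keep the sketch short; any two-surveyor index could be passed in its place.

```python
from itertools import combinations


def percentage_agreement(a, b):
    """Share of objects given exactly the same grade by both surveyors."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def pairwise_values(grades_by_surveyor, index=percentage_agreement):
    """Index value for every pairing of surveyors."""
    return {
        (first, second): index(grades_by_surveyor[first], grades_by_surveyor[second])
        for first, second in combinations(sorted(grades_by_surveyor), 2)
    }


def mean_value_per_surveyor(pairwise):
    """Average the pair values in which each surveyor takes part."""
    collected = {}
    for pair, value in pairwise.items():
        for name in pair:
            collected.setdefault(name, []).append(value)
    return {name: sum(values) / len(values) for name, values in collected.items()}


# Hypothetical pilot-study grades for six objects on a four-point scale.
pilot = {
    "Evan": [1, 2, 3, 4, 2, 3],
    "Chan": [1, 2, 3, 4, 2, 2],
    "Jenny": [2, 3, 3, 4, 3, 4],
}
pairs = pairwise_values(pilot)
means = mean_value_per_surveyor(pairs)
least_consistent = min(means, key=means.get)
print(pairs)
print(least_consistent)
```

If one surveyor’s mean is clearly lower than the rest, that surveyor may need further guidance; if all pairings are similarly low, the definitions themselves are the more likely cause.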

Conclusion

Producing data that can be trusted is a vital component of condition surveys and collections management as a whole. Reliability cannot be assumed, so creating ways to test and monitor changes is an important step in ensuring the survey data can be used with confidence. Computer programs recently developed in other fields should make this process easier and could well make the reliability index and assessment a common part of survey practice. This could be extended to other areas of conservation that require semi-quantitative assessment.

Each index has its own qualities and assumptions, so one single number or index does not define the whole situation. As a result, there is no one index that will be appropriate in every situation. This is not uncommon in reliability measurement, and it is better to know different options than to use misleading information. What reliability indices can do is equip the conservator with a comprehensive understanding of their data’s reliability, and of what aspects of it require change to make improvements. Krippendorff’s alpha is most useful for ordinal condition scores, and Cohen’s kappa for category data. Perreault’s pi provides a useful alternative perspective on chance agreement. The use of more than one index is encouraged; several can be calculated simultaneously with computer software.

Encouraging the use of reliability indices will help acknowledge the uncertainty inherent in data created by judgment, such as condition assessment. By raising the issue of reliability, conservators’ understanding of the qualities of condition data is also likely to increase.

Appendix: Descriptions of the reliability indices

Percentage agreement

This is a simple assessment of the number of agreements divided by the number of decisions. It is simple, efficient, intuitive, easy to calculate and can accommodate a number of surveyors. Its simplicity is the reason for its common usage. Its disadvantage is that binary data, such as ‘present’ and ‘not-present’, can reach an agreement of ~50% through sheer chance. Consequently, percentage agreement gives an over-inflated depiction of reliability. It is often criticised for this but it does reflect what is actually happening, in terms of agreement. The rigidity of only recording direct agreement is limiting for condition surveys, though, and only applicable to nominal data. It is not recommended and, if used at all, it should be in connection with another index (Lombard et al., 2003).
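A minimal sketch of the calculation, together with the chance level mentioned above. The chance figure assumes that each surveyor uses every category equally often and independently of the other, which is our simplifying assumption; the function names and example records are hypothetical.

```python
from itertools import product


def percentage_agreement(a, b):
    """Number of agreements divided by the number of decisions."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def chance_agreement_equal_use(categories):
    """Share of all equally likely grade pairs in which the two grades match."""
    pairs = list(product(categories, repeat=2))
    return sum(x == y for x, y in pairs) / len(pairs)


# Hypothetical binary records for five objects.
surveyor_a = ["present", "present", "not-present", "present", "not-present"]
surveyor_b = ["present", "not-present", "not-present", "present", "not-present"]

print(percentage_agreement(surveyor_a, surveyor_b))
print(chance_agreement_equal_use(["present", "not-present"]))
```

With two categories the chance level under this assumption is one half, and it falls as the number of categories rises, which is why the inflation is most marked for binary data.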

Scott’s pi

Scott (1955) developed his pi index to address the chance agreements that percentage agreement ignores. Expected agreement through chance is calculated, and the index is expressed as ‘agreement beyond chance’. It is designed for two surveyors using nominal data, taking into account the number of categories. Scott points out that assuming that each category has an equal chance of being used is spurious (Scott, 1955). Expected chance may evenly distribute amongst these categories but the population data will not necessarily be distributed this way. In condition surveys, some grades may be used more than others, depending on the condition of the collection. For example, were only two of four condition grades used throughout the survey, it would have the same effect as only using two categories, so the data would appear more reliable than they necessarily are. The method ignores differences in two surveyors’ distributions of grades, though.

The matrix of results is compared to a matrix with data that would be expected by chance from the number of categories involved. In correcting for chance, the method counts the collective distribution of grades across two surveyors’ results. If more than two surveyors are used, the overall value is the average of the values for each pair. Scott (1955) expresses his pi index as:

$$\pi = \frac{P_o - P_e}{1 - P_e}$$

π = Scott’s pi reliability
P_o = proportion agreement, observed
P_e = proportion agreement, expected by chance

(Neuendorf, 2002)
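A minimal sketch of the calculation for two surveyors, assuming complete nominal data. The expected agreement is taken from the pooled distribution of grades across both surveyors, as described above; the function name and example grades are hypothetical.

```python
from collections import Counter


def scotts_pi(grades_a, grades_b):
    """Scott's pi for two surveyors: (P_o - P_e) / (1 - P_e)."""
    n = len(grades_a)
    observed = sum(a == b for a, b in zip(grades_a, grades_b)) / n
    # Pool both surveyors' grades to estimate how often each category is used.
    pooled = Counter(grades_a) + Counter(grades_b)
    expected = sum((count / (2 * n)) ** 2 for count in pooled.values())
    return (observed - expected) / (1 - expected)


# Hypothetical grades for four objects.
surveyor_a = ["good", "good", "fair", "poor"]
surveyor_b = ["good", "fair", "fair", "poor"]
print(scotts_pi(surveyor_a, surveyor_b))
```

Here three of four grades agree (P_o = 0.75), but the pooled distribution gives P_e = 22/64, so the value beyond chance is noticeably lower than the raw agreement.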


Cohen’s kappa

Cohen’s kappa (1960) is a development from Scott’s pi that follows the same conceptual formula, but also offers standard error and confidence limits. This is a popular index that gives low estimates of agreement. There are several variations on it.

Kappa also includes the frequency of use for scores but uses a multiplicative term, rather than Scott’s additive term, which has the effect of accounting for the distribution of grades for each surveyor (Cohen, 1960). Scott’s pi and Cohen’s kappa have both been criticised for producing low levels of reliability. Their assumption that any expected agreement should be attributed to chance means that agreement is diminished if the distribution of grades is narrow, as this presents greater likelihood that answers will coincide. Perreault and Leigh (1989) point out that if two categories are joint-coded at 10% and 90% (table 4), the chance agreement is so high (82%) that 90% observed agreement would appear as only 0.44 beyond chance with Cohen’s kappa. The jointly recorded data are referred to as ‘marginals’ (the total number of times a category is used, which is often recorded in the margins of matrices). They are used to calculate chance agreement.

The conservative nature of agreement beyond chance means that smaller samples with few categories can appear less reliable than they are. This is normally not applicable to condition surveys, which often have quite complex and sometimes ambiguous categories. Like Scott’s pi, kappa is designed for two surveyors only.
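The arithmetic behind the 10%/90% example can be checked with a short sketch. It assumes both surveyors use ‘yes’ for 90% and ‘no’ for 10% of objects, and it applies the same ‘agreement beyond chance’ form as Scott’s pi with kappa’s multiplicative chance term; the function names are ours. Under those assumptions chance agreement is 0.82, so an observed agreement of 0.90 gives roughly 0.44, while an observed agreement of 0.82 would give a value of about zero.

```python
def chance_agreement(marginals_a, marginals_b):
    """Expected agreement: sum over categories of the product of the two marginals."""
    return sum(pa * pb for pa, pb in zip(marginals_a, marginals_b))


def kappa(observed, expected):
    """Agreement beyond chance: (P_o - P_e) / (1 - P_e)."""
    return (observed - expected) / (1 - expected)


marginals = [0.9, 0.1]  # share of 'yes' and 'no' recorded by each surveyor
expected = chance_agreement(marginals, marginals)

kappa_at_90 = kappa(0.90, expected)
kappa_at_82 = kappa(0.82, expected)
print(round(expected, 2), round(kappa_at_90, 2), round(kappa_at_82, 2))
```

The narrower the spread of grades, the closer the chance term comes to 1 and the harder it is for any level of observed agreement to register as reliable.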

                      Surveyor A
                      Yes     No
Surveyor B    Yes     81      9       0.9
              No      9       1       0.1
                      0.9     0.1     Marginals

Table 4 An example of marginal probabilities generated by 100 assessments, where there are only two categories (yes or no).

Krippendorff’s alpha

Alpha appears to be the gold standard of reliability measurement (Neuendorf, 2002; Lombard et al., 2002; 2003) and is one of the few indices that accommodates ordinal data, but it is very complex and tedious to calculate by hand. It is designed for multiple surveyors, so is an attractive option. It has been criticised for its complexity and is not frequently used, but it is a good choice for ordinal condition scores. Kang et al. (1993) have published a ‘macro’ programme that can be used with Statistical Analysis Software® to calculate Krippendorff’s alpha, which increases the ease with which it can be used. However, the only computer programmes for calculating alpha are informally produced at the time of writing.

Krippendorff’s alpha is unusual in that it can be calculated for nominal, ordinal, interval and ratio metrics. Alpha reliability takes chance agreement into account, as well as missing data (Krippendorff, 1980; 2004). The nominal version is very similar to Scott’s pi: the two-surveyor nominal version of Krippendorff’s alpha asymptotically converges with it (Krippendorff, 2005b). Chance agreement is accounted for with marginal data, but the calculation also includes the extent of disagreement within the hierarchy of grades. High levels of reliability can be difficult to achieve.

Like Cohen’s kappa, the way in which chance agreement is calculated for alpha can be problematic for assessing reliability, especially with small samples. If only a narrow spread of the condition grades available is used by surveyors, chance agreement is calculated to be very high. As a result, high reliability from objects representing only two condition grades would not be reported as reliable by this method. The equation varies with different metrics but the basic concept of alpha can be expressed as below.

$$\alpha = 1 - \frac{D_o}{D_e}$$

α = Krippendorff’s alpha reliability
D_o = disagreement, observed
D_e = disagreement, expected by chance

(Krippendorff, 2004)

Perreault’s pi

Perreault’s pi is a nominal index, which holds a more optimistic approach to chance agreement, stating that scores are rarely intended to be evenly distributed. As a result, Perreault’s pi values often appear higher than most of the other indices discussed (Perreault and Leigh, 1989). It is closest to percentage agreement, on which the frequency of agreement is based, moderated by a form of chance agreement which differs from Scott’s pi, Krippendorff’s alpha and Cohen’s kappa. Perreault and Leigh’s method for calculating chance assumes that the ratio of chance agreements to the total number of judgements “will be proportionate to the ratio of possible ways in which they could agree to the possible number of combinations” (1989). This method appears less theoretically robust but does avoid the problem of low reliability levels for responses with narrow spreads of grades that affect Cohen’s kappa, Scott’s pi and Krippendorff’s alpha. As a result, it has a useful practical application. Perreault’s pi assumes that surveyors operate independently of categories, definitions and instructions and assumes that all surveyors are equally reliable, so the index accounts for random but not systematic bias. These are erroneous assumptions for condition surveys, as surveyors are not equally reliable, and they do rely on definitions and follow instructions. However, the advantages and disadvantages are complementary to other techniques for calculating chance agreement, which makes pi a useful tool when considered along with other reliability test results. The index ranges from 0.00 to 1.00 and is designed for only two surveyors. The equation is given below.

$$\pi = \frac{N(1 - I_r^{2})}{k}$$

π = Perreault’s pi reliability
N = Total number of judgments
I_r^2 = Total agreements squared
k = possible ways to record judgement
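For readers who wish to compute the index, Perreault and Leigh (1989) usually state it in the form I_r = sqrt((F_o/N − 1/k) · k/(k − 1)), where F_o is the number of observed agreements, with the index set to zero when F_o/N falls below 1/k. In their model the expression N(1 − I_r²)/k corresponds to the expected number of chance agreements. The sketch below assumes that standard form; the function and parameter names are ours.

```python
from math import sqrt


def perreault_leigh_index(agreements, judgements, categories):
    """Perreault and Leigh's I_r for two surveyors (assumed standard form)."""
    share = agreements / judgements
    if share < 1 / categories:
        # Agreement at or below the level expected from the number of categories.
        return 0.0
    return sqrt((share - 1 / categories) * categories / (categories - 1))


# 82 agreements out of 100 judgements with two categories (yes or no).
print(round(perreault_leigh_index(82, 100, 2), 2))
# The same raw agreement with four condition grades.
print(round(perreault_leigh_index(82, 100, 4), 2))
```

Because the chance term depends only on the number of categories and not on how often each is used, a narrow spread of grades does not depress the value as it does for kappa, Scott’s pi and alpha.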

Spearman’s rho and Pearson’s r

Spearman’s rho and Pearson’s coefficient r are measures of correlation, rather than agreement. Correlative indices are the recommended measure for social science surveys (Litwin, 1995) but are not appropriate for condition survey data. They are for two surveyors with ordinal (Spearman), or interval or ratio data (Pearson), and express reliability from 1.00 (total correlation), through 0.00 (no correlation, equal to chance) to –1.00 (high values for one and low values for the other). Correlation coefficients are measures of linear association designed to accommodate different scales of measurement, so different variables can be compared. Since condition scores use the same scale this function is actually problematic, rather than beneficial. The different scores (x and y) are each expressed as a number of standard deviations from their mean, and the standardised scores are then compared.

A big problem in this method for condition surveys occurs because correlation does not require direct agreement, so survey data do not need to be the same to appear reliable. Therefore, systematic errors can go unrecorded. One surveyor could record 1-2-1-2-1-2, whereas another surveyor could record 3-4-3-4-3-4, and there would be perfect correlation but quite different consequences for the collection. Systematic errors are likely within condition surveys, since people have different frames of reference, which leads to reliability being over-estimated (Neuendorf, 2002). For example, this can occur when people agree on which objects are better or worse than others but disagree on the exact condition grade.

While the coefficient is clearly misleading for condition surveys, it can lead to insights if related to other index values. This can help determine if reliability is direct agreement or related to the amount of difference between scores. This provides an opportunity to determine the nature of reliability, as well as the extent, which can be useful in diagnostic examinations of unreliability. The equation for Pearson’s r is expressed below.

$$r = \frac{\sum Z_x Z_y}{N}$$

Z_x = one surveyor’s standardised scores
Z_y = the other surveyor’s standardised scores
N = number of objects

(Hinton, 1995)
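The systematic-error example given in this section (1-2-1-2-1-2 against 3-4-3-4-3-4) can be checked directly. The sketch below follows the equation above, standardising each surveyor’s scores with the population standard deviation (dividing by N); the function names are ours.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's r as the mean product of the two sets of standardised scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sd_x = sqrt(sum((value - mean_x) ** 2 for value in x) / n)
    sd_y = sqrt(sum((value - mean_y) ** 2 for value in y) / n)
    return sum(((a - mean_x) / sd_x) * ((b - mean_y) / sd_y) for a, b in zip(x, y)) / n


def percentage_agreement(a, b):
    """Share of objects given exactly the same grade by both surveyors."""
    return sum(p == q for p, q in zip(a, b)) / len(a)


surveyor_a = [1, 2, 1, 2, 1, 2]
surveyor_b = [3, 4, 3, 4, 3, 4]
print(pearson_r(surveyor_a, surveyor_b))
print(percentage_agreement(surveyor_a, surveyor_b))
```

The correlation is perfect although the two surveyors never award the same grade, which is the over-estimation of reliability the text warns about.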

Another issue that must be considered with the Spearman and Pearson coefficients is the significant impact of sample sizes. With small samples, values must be very high to be considered significant, a less important issue for other indices such as Krippendorff’s alpha (Krippendorff, pers. comm.). In the case of testing reliability of surveys, sample sizes are likely to be low.


Acknowledgements

The authors would like to thank Dr. Jim Helgeson, Gonzaga University, WA, USA for providing the SAS® macro and for discussion, Prof. Klaus Krippendorff, University of Pennsylvania, PA, USA for discussions about alpha, Prof. Jonathan Ashley-Smith for discussion about condition data, and Harry Bruhns, University College London, for assistance with SAS®.

References

Banerjee, M., Capozzoli, M., McSweeney, L. and Sinha, D. (1999) ‘Beyond kappa: A review of interrater agreement measures’, in Canadian Journal of Statistics, vol. 27, no. 1, pp. 3–23.

Cohen, J. (1960) ‘A coefficient of agreement for nominal scales’, in Educational and Psychological Measurement, vol. 20, no. 1, pp. 37–46.

Ellis, L. (1994) Research methods in the social sciences, Madison, WI: WCB Brown & Benchmark.

Frey, L. R., Botan, C. H. and Kreps, G. L. (2000) Investigating communication: An introduction to research methods, 2nd edition, Boston: Allyn and Bacon.

Hinton, P. (1995) Statistics Explained: A guide for the social science students, London: Routledge.

Kang, N., Kara, A., Laskey, H. A. and Seaton, F. B. (1993) ‘A SAS Macro for Calculating Intercoder Agreement in Content Analysis’, in Journal of Advertising, vol. 22, no. 2, pp. 17–28.

Keene, S. (1991) ‘Audits of care: a framework for collections condition surveys’, in Storage, eds M. Norman & V. Todd, UKIC, pp. 6–16.

Keene, S. (2002) Managing conservation in museums, 2nd edition, London: Butterworth-Heinemann, Chapter 9.

Keene, S. and Orton, C. (1992) ‘Measuring the condition of museum collections’, in CAA91: Computer Applications and Quantitative Methods in Archaeology, eds G.L. and J. Moffett, BAR international series, pp. 163–166.

Kingsley, H. & Payton, R. (1994) ‘Condition surveying of large, varied stored collections’, in Conservation News 54, UKIC, pp. 8–10.

Krippendorff, K. (1980) Content Analysis: An introduction to its methodology, Beverly Hills CA: Sage, Chapter 12.

Krippendorff, K. (2004) Content Analysis: An introduction to its methodology, 2nd edition, Beverly Hills CA: Sage, Chapter 11.

Krippendorff, K. (2005a) ‘Reliability in content analysis: Some common misconceptions and recommendations’, in Human Communication Research, vol. 30, no. 3, pp. 411–433.

Krippendorff, K. (2005b) Personal communication about software development for alpha and the effects of sample size via emails in May 2005.

Litwin, M. S. (1995) How to measure survey reliability and validity: The survey kit volume 7, Thousand Oaks, CA: Sage Publications.

Lombard, M., Snyder-Duch, J. and Campanella Bracken, C. (2002) ‘Content analysis in mass communication: Assessment and reporting of intercoder reliability’, in Human Communication Research, vol. 28, no. 4, pp. 587–604.

Lombard, M., Snyder-Duch, J. and Campanella Bracken, C. (2003) Practical resource for assessing and reporting intercoder reliability in content analysis research projects, www.temple.edu/mmc/reliability, accessed 09/12/2003.

Neuendorf, K. A. (2002) The content analysis guidebook, Thousand Oaks: Sage Publications, Chapter 7.

Newey, H. M., Bradley, S. M. and Leese, M. N. (1993) ‘Assessing the condition of archaeological iron: an intercomparison’, ICOM-CC 10th Triennial, Washington: ICOM, pp. 786–791.

Orton, C. (2000) Sampling in archaeology, Cambridge: Cambridge University Press.

Perreault, W. D. Jr. and Leigh, L. E. (1989) ‘Reliability of nominal data based on qualitative judgments’, in Journal of Marketing Research, vol. 26, pp. 135–148.

Popping, R. (1988) ‘Computer programs for the analysis of texts and transcripts’, in Text analysis for the social sciences: Methods for drawing statistical inferences from texts and transcripts, ed. C. Roberts, Mahwah, New Jersey: Lawrence Erlbaum, pp. 209–221.

Potter, W. J. and Levine-Donnerstein, D. (1999) ‘Rethinking validity and reliability in content analysis’, in Journal of Applied Communication, vol. 27, pp. 258–284.

Riffe, D., Lacy, S. and Fico, F. (1998) Analyzing Media Messages: Using Quantitative Content Analysis in Research, Mahwah, New Jersey, USA: Lawrence Erlbaum Associates.

Scott, W. A. (1955) ‘Reliability of content analysis: The case of nominal coding’, in Public Opinion Quarterly, vol. 19, pp. 321–325.

Suenson-Taylor, K., Sully, D. & Orton, C. (1999) ‘Data in conservation: the missing link in the process’, in Studies in Conservation, vol. 44, pp. 184–194.

Taylor, J. (1996) An investigation of subjectivity in collection condition surveys, Cardiff University: unpublished dissertation.

Taylor, J. and Stevenson, S. (1999) ‘Investigating subjectivity within collection condition surveys’, in Journal for Museums Management and Curatorship, vol. 18, pp. 18–42.

Suppliers

PRAM – Program for Reliability Assessment of Multiple coders
http://www.geocities.com/skymegsoftware/pram.html

SAS® – Statistical Analysis Software
www.sas.com

SPSS® – Statistical Package for the Social Sciences
www.spss.com


Biographies

Joel Taylor

Centre for Sustainable Heritage
Bartlett School of Graduate Studies
University College London
Gower Street
London WC1E
[email protected]

Joel Taylor is a research fellow at the University College London Centre for Sustainable Heritage. He received a BSc (hons) in Archaeological Conservation from Cardiff University in 1996, before joining National Museums and Galleries of Wales, then English Heritage. He has published various articles on the subject of collection condition surveys, and is currently reading for a PhD in this area. He has also been keynote speaker at a conference in Australia dedicated to collection surveys. Other research interests include preventive conservation strategy and risk management.

David Watkinson ACR
School of History and Archaeology
Cardiff University
PO Box 909
Cardiff
CF10 1XU
UK
Email: [email protected]

Diploma in Archaeological Conservation, Institute of Archaeology London. Research MSc Cardiff University, with a thesis on extraction of chloride from archaeological iron. Senior Lecturer and Head of Conservation at Cardiff University, which provides an undergraduate degree in conservation of objects and two Masters Degree options in conservation. His publications are mostly centred on corrosion and conservation of iron, decay of glass, practical conservation and conservation training. Professionally active in UKIC during the 1980s, when he was chair of the Archaeology Section. Served on various science and other committees in archaeology and conservation. Current research centres on corrosion of the SS Great Britain.