Data filtering with support vector machines in geometric camera calibration

B. Ergun, T. Kavzoglu, I. Colkesen and C. Sahin

Gebze Institute of Technology, Department of Geodetic and Photogrammetric Engineering, Muallimkoy Campus, 41400, Gebze-Kocaeli, Turkey

Abstract: The use of non-metric digital cameras in close-range photogrammetric applications and machine vision has become a popular research topic. As an essential component of photogrammetric evaluation, camera calibration is a crucial stage for non-metric cameras. Accurate camera calibration and orientation procedures have therefore become prerequisites for the extraction of precise and reliable 3D metric information from images. The lack of accurate inner orientation parameters can lead to unreliable results in the photogrammetric process. A camera can be well defined by its principal distance, principal point offset and lens distortion parameters. Different camera models have been formulated and used in close-range photogrammetry, but generally sensor orientation and calibration are performed with a perspective geometrical model by means of the bundle adjustment. In this study, support vector machines (SVMs) using a radial basis function kernel are employed to model the distortions measured for the Olympus aspherical zoom lens of the Olympus E10 camera system, which are later used in the geometric calibration process. The intention is to introduce an alternative approach for the on-the-job photogrammetric calibration stage. Experimental results for the DSLR camera at three focal length settings (9, 18 and 36 mm) were estimated using bundle adjustment with additional parameters, and analyses were conducted based on object point discrepancies and standard errors. Results show the robustness of the SVM approach in correcting image coordinates by modelling total distortions in an on-the-job calibration process using a limited number of images.

© 2010 Optical Society of America

OCIS codes: (150.1488) Machine vision; (120.3940) Metrology

References and links

1. J. Cardenal, E. Mata, P. Castro, J. Delgado, M. A. Hernandez, J. L. Perez, M. Ramos, and M. Torres, “Evaluation of a digital non metric camera (Canon D30) for the photogrammetric recording of historical buildings,” International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Istanbul, Turkey, 34, Part XXX (2004).

2. F. Remondino, and C. Fraser, “Digital camera calibration methods: considerations and comparisons,” Int. Arch. Photogramm. Remote Sens. XXXVI(5), 309–314 (2006).

3. C. S. Fraser, and M. R. Shortis, “Variation of distortion within the photographic field,” Photogramm. Eng. Remote Sensing 58, 851–855 (1992).

4. K. B. Atkinson, Close Range Photogrammetry and Machine Vision (Whittles Publishing, 1996).

5. A. Mathur, and G. M. Foody, “Crop classification by support vector machines with intelligently selected training data for an operational application,” Int. J. Remote Sens. 29(8), 2227–2240 (2008).

6. V. N. Vapnik, The Nature of Statistical Learning Theory (Springer-Verlag, 1995).

7. M. R. Shortis, S. Robson, and H. A. Beyer, “Principal point behaviour and calibration parameter models for Kodak DCS cameras,” Photogramm. Rec. 16(92), 165–186 (1998).

8. C. S. Fraser, “Digital camera self-calibration,” ISPRS J. Photogramm. 52(4), 149–159 (1997).

9. D. C. Brown, “Close-range camera calibration,” Photogramm. Eng. Remote Sensing 37, 855–866 (1971).

10. T. A. Clarke, and J. G. Fryer, “The development of camera calibration methods and models,” Photogramm. Rec. 16(91), 51–66 (1998).

11. A. Gruen, and H. A. Beyer, “System calibration through self-calibration,” Chapter 7 in Calibration and Orientation of Cameras in Computer Vision (Eds. A. Gruen and T.S. Huang), Springer Series in Information Sciences 34, Springer, Berlin. 235 pages: 163–194 (2001).

#120238 - $15.00 USD Received 2 Dec 2009; revised 5 Jan 2010; accepted 8 Jan 2010; published 15 Jan 2010

(C) 2010 OSA 1 February 2010 / Vol. 18, No. 3 / OPTICS EXPRESS 1927

12. C. S. Fraser, “On the use of non-metric cameras in analytical non-metric photogrammetry,” Int. Arch. Photogramm. Remote Sens. 24, 156–166 (1982).

13. C. S. Fraser, M. R. Shortis, and G. Ganci, “Multi-sensor system self-calibration,” in Proceedings of Videometrics IV Conference (SPIE, 1995) 2598, pp 2–18.

14. S. Abraham, and T. Hau, “Towards autonomous high precision calibration of digital cameras,” in Proceedings of SPIE Annual Meeting, San Diego, 82–93 (1997).

15. C. S. Fraser, and S. Al-Ajlouni, “Zoom-dependent camera calibration in digital close-range photogrammetry,” Photogramm. Eng. Remote Sensing 72, 1017–1026 (2006).

16. C. Bellman, and M. R. Shortis, “A machine learning approach to building recognition in aerial photographs,” in Proceedings ISPRS Commission III Symposium on Photogrammetric Computer Vision 2002, Graz, Austria, 9–13 September, Part A, pp 50–54 (2002).

17. C. Bellman, and M. R. Shortis, “Using support vector machines for building recognition in aerial photographs,” presented at 11th Remote Sensing and Photogrammetry Conference on Images to Information, Brisbane, Australia, 2–6 Sept. 2002.

18. R. Mohamed, A. Ahmed, A. Eid, and A. Farag, “Support vector machines for camera calibration problem,” in Proceedings of IEEE International Conference on Image Processing (ICIP'06), Atlanta, USA. pp. 1029–1032 (2006).

19. K. S. Choi, E. Y. Lam, and K. K. Y. Wong, “Automatic source camera identification using the intrinsic lens radial distortion,” Opt. Express 14(24), 11551–11565 (2006).

20. B. Möller, and S. Posch, “Identifying lens distortions in image registration by learning from examples,” in Proceedings of British Machine Vision Conference (BMVC '07), University of Warwick, Coventry, UK. pp. 152–161 (2007).

21. A. J. Smola, and B. Schölkopf, “A tutorial on support vector regression,” Stat. Comput. 14(3), 199–222 (2004).

22. J. Meng, Y. Gao, and Y. Shi, “Support vector regression model for measuring the permittivity of asphalt concrete,” IEEE Microw. Wirel. Co. 17(12), 819–821 (2007).

23. Y. Pan, J. Jiang, R. Wang, and H. Cao, “Advantages of support vector machine in QSPR studies for predicting auto-ignition temperatures of organic compounds,” Chemometr. Intell. Lab. 92(2), 169–178 (2008).

24. T. T. Zou, Y. Dou, H. Mi, J. Y. Zou, and Y. L. Ren, “Support vector regression for determination of component of compound oxytetracycline powder on near-infrared spectroscopy,” Anal. Biochem. 355(1), 1–7 (2006).

25. D. Sebald, and J. Bucklew, “Support vector machines and the multiple hypothesis test problem,” IEEE T. Signal Process. 49, 2865–2872 (2001).

26. B. E. Boser, I. M. Guyon, and V. Vapnik, “A training algorithm for optimum margin classifiers,” in Proceedings of the Fifth Annual Workshop on Computational Learning Theory 5, (ACM, 1992), pp. 144–152.

27. C. Huang, L. S. Davis, and J. R. G. Townshend, “An assessment of support vector machines for land cover classification,” Int. J. Remote Sens. 23(4), 725–749 (2002).

28. C. W. Hsu, C. C. Chang, and C. J. Lin, “A practical guide to support vector classification,” http://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf.

Introduction

The last decade has witnessed extensive use of digital non-metric SLR cameras in low-cost applications in archaeology, architecture, cultural heritage and other fields. Increasing image resolution, falling prices, better facilities for storing and transferring image files, and easy direct image acquisition (without digitizing films or paper prints) are the main reasons for the growing use of these instruments in photogrammetry. The availability of low-cost digital photogrammetric systems has also contributed to the use of these “off the shelf” cameras among photogrammetrists and non-photogrammetrists [1].

Digital cameras have been widely used for close-range photogrammetry and machine vision applications. For any photogrammetric application, the accuracy of the derived object data depends on the accuracy of the camera calibration, amongst many other factors. Camera calibration has always been an essential component of photogrammetric measurement, with self-calibration nowadays being an integral and routinely applied operation within photogrammetric triangulation, especially in high-accuracy close-range measurement. With the very rapid growth in adoption of off-the-shelf digital cameras for a host of new 3D measurement applications, however, there are many situations where the geometry of the image network will not support robust recovery of camera parameters via on-the-job calibration. For this reason, stand-alone camera calibration has again emerged as an important issue in close-range photogrammetry, and it also remains a topic of research interest in computer vision [2]. Various calibration studies using additional parameters have been reported in the literature, and the effect of changes in calibration parameters has been examined with regard to the camera and lens properties [3, 4].

Support vector machines (SVMs), based on statistical learning theory, have recently been employed in many applications in diverse fields. It is generally agreed that support vector machines produce results with higher accuracies when the available data are limited in terms of the number of control points [5]. An important characteristic of SVMs is their non-parametric nature, which assumes no a priori knowledge, particularly of the frequency distribution of the data. SVMs are based on the theory of structural risk minimization [6]. Because of their adaptability and their ability to produce highly accurate results, their use has spread in the scientific community. SVMs have been successfully employed in many fields for solving complex modeling, prediction and simulation problems that are often represented by noisy and missing data.

This study gives an overview of the current methods and of the SVM approach adopted for camera calibration in close-range photogrammetry, and discusses their operational aspects in the calibration process. The results of camera calibration using the two different algorithms are also examined and discussed. Experimental results for a DSLR camera with three different lens settings derived from the two calibration methods are presented, and the effectiveness of the techniques in terms of 3D positional corrections for the principal point is quantitatively analyzed.

Photogrammetric camera calibration

A camera is considered calibrated if the principal distance, principal point offset and lens distortion parameters are known. In many applications, especially in computer vision, only the focal length is recovered while for precise photogrammetric measurements all the calibration parameters are generally employed. Over the years, some algorithms have been proposed for camera calibration using two and three dimensional test fields within distinct studies [7]. Planar target fields tend to offer a better scope for projective compensation of IO instability; with 3D target fields the resulting degradation of the photogrammetric triangulation is more clearly pronounced [8].

Different camera models have been formulated and used in close-range photogrammetry, but generally sensor orientation and calibration is performed with a perspective geometrical model by means of the bundle adjustment [9]. A review of the methods and models is provided in [10]. The basic mathematical model is provided by the non-linear collinearity equations, usually extended by correction terms (i.e. additional parameters or APs) for interior orientation, and radial and decentering lens distortions [4, 11]. The bundle adjustment provides a simultaneous determination of all system parameters along with estimates of the precision and reliability of the extracted calibration parameters. Also, correlations between the interior (IO) and exterior orientation (EO) parameters, and the object point coordinates, along with their determinability can be quantified. A favorable network geometry is required, i.e. convergent and rotated images of a preferably 3D object should be acquired, with well distributed points throughout the image format. If the network is geometrically weak, correlations may lead to instabilities in the least-squares estimation. The use of inappropriate APs can also weaken the bundle adjustment solution, leading to over-parameterization, in particular in the case of minimally constrained adjustments [12].

The self-calibrating bundle adjustment can be performed with or without object space constraints, which are usually in the form of known control points. A minimal constraint to define the network datum is always required, though this can be through implicit means such as inner constraint, free-network adjustment, or through an explicit minimal control point configuration (arbitrary or real). Calibration using a test field is possible, though one of the merits of the self-calibrating bundle adjustment is that it does not require provision of any control point information. Recovery of calibration parameters from a single image is also possible through the collinearity model [3], though this spatial resection with APs is not widely adopted due to both the requirement for an accurate test field and the lower accuracy calibration provided.

The most common set of APs employed to compensate for systematic errors in CCD cameras is the 8-term ‘physical’ model originally formulated in [9]. This comprises the interior orientation (IO) parameters of principal distance and principal point offset (xp, yp), as well as the three coefficients of radial and two of decentering distortion. The model can be extended by two further parameters to account for affinity and shear within the image plane, but such terms are rarely if ever significant in modern digital cameras. It should be mentioned that the model was extended to eleven parameters for multi-sensor calibration in [13], while a model with eight parameters was employed for digital camera self-calibration in [4]. Numerous investigations of different sets of APs have been performed over the years (e.g. [14]), yet this model still holds up as the optimal formulation for digital camera calibration.

The three APs used to model radial distortion ∆r are generally expressed by the odd-order polynomial $\Delta r = K_1 r^3 + K_2 r^5 + K_3 r^7$, where r is the radial distance. The coefficients $K_i$ are usually highly correlated, with most of the error signal generally being represented by the cubic term $K_1 r^3$. The $K_2$ and $K_3$ terms are typically included for photogrammetric (low distortion) and wide-angle lenses, and in higher-accuracy vision metrology applications. The commonly encountered third-order barrel distortion seen in consumer-grade lenses is accounted for by $K_1$ and $K_2$ [5].
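The odd-order radial polynomial described above can be illustrated with a minimal Python sketch. The function names and the splitting of ∆r into x and y components along the radial direction are assumptions made for illustration; the sign with which the correction is applied depends on the convention of the adjustment software and is deliberately left out.

```python
import math

def radial_distortion(r, k1, k2=0.0, k3=0.0):
    """Radial distortion dr = K1*r^3 + K2*r^5 + K3*r^7 at radial distance r."""
    return k1 * r**3 + k2 * r**5 + k3 * r**7

def radial_components(x, y, xp, yp, k1, k2=0.0, k3=0.0):
    """Split dr into x and y components for image point (x, y).

    (xp, yp) is the principal point offset; the radial distance r is
    measured from the principal point (illustrative helper, not from the paper).
    """
    xb, yb = x - xp, y - yp
    r = math.hypot(xb, yb)
    if r == 0.0:
        return 0.0, 0.0  # no radial distortion at the principal point
    dr = radial_distortion(r, k1, k2, k3)
    return xb * dr / r, yb * dr / r
```

With hypothetical coefficients, `radial_distortion(2.0, 1e-3, 1e-5)` evaluates the cubic and fifth-order terms only, which shows how quickly the higher-order terms become negligible close to the principal point.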

Decentering distortion is due to a lack of centering of lens elements along the optical axis. The decentering distortion parameters P1 and P2 are invariably coupled with x0 and y0. This distortion is usually an order of magnitude or more smaller than radial distortion and it also varies with focus, but to a much lesser extent. An empirical correction model has been introduced which overcomes serious shortcomings of the traditional geometric correction approach. This model has been tested with success on a number of medium- and wide-angle, medium- and large-format lenses [15]. The projective coupling between P1 and P2 and the principal point offsets increases with increasing focal length and can be problematic for long focal length lenses. The extent of coupling can be diminished through both the use of a 3D object point array and the adoption of higher convergence angles for the images.
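The text does not write out the decentering terms. As a hedged illustration, the sketch below uses the form commonly associated with the Brown model, ∆x = P1(r² + 2x̄²) + 2P2x̄ȳ and ∆y = P2(r² + 2ȳ²) + 2P1x̄ȳ, where x̄ and ȳ are image coordinates reduced to the principal point. That this is the exact form used in the study is an assumption, and the function name is hypothetical.

```python
def decentering_distortion(x, y, xp, yp, p1, p2):
    """Decentering distortion components (dx, dy) in the common Brown form.

    Assumed formulation (not stated in the paper):
      dx = P1*(r^2 + 2*xb^2) + 2*P2*xb*yb
      dy = P2*(r^2 + 2*yb^2) + 2*P1*xb*yb
    with xb = x - xp, yb = y - yp and r^2 = xb^2 + yb^2.
    """
    xb, yb = x - xp, y - yp
    r2 = xb * xb + yb * yb
    dx = p1 * (r2 + 2.0 * xb * xb) + 2.0 * p2 * xb * yb
    dy = p2 * (r2 + 2.0 * yb * yb) + 2.0 * p1 * xb * yb
    return dx, dy
```

Because both components depend on the reduced coordinates, any shift in the principal point changes them directly, which is one way to see the coupling between P1, P2 and the principal point offsets mentioned above.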

If the lens distortion is known, the image coordinates can be adjusted before the bundle adjustment, particularly for on-the-job calibration. This process may be considered as image refining. For some distortions, however, such refining is ineffective, because the distortion of non-metric cameras changes over time. It is more effective to reduce the image defects by introducing additional parameters into the mathematical model.

Camera and image acquisition

The Olympus E10 digital SLR camera contains a 4 megapixel CCD sensor with a format size of 2240×1680 pixels and approximately 3.9 µm pixel spacing. In this study, a 3D calibration test field with 112 circular coded targets was used to perform a metric calibration of the digital camera. Using the calibration grid, images were taken from different angles for each focal distance setting. Five images at each of the 9 mm, 18 mm and 36 mm focal distances were thus used for the 3D calibration field. Acquiring more images of a scene generally yields better geometrical accuracy for the projection centres and the 3D target coordinates. However, in many close-range and architectural applications only a few images are available for stereo and convergent orientation, so a lower level of accuracy is achieved in such cases (i.e. on-the-job calibration). This study suggests the use of the support vector regression approach to increase the level of accuracy in the calibration through the block adjustment process.

The bundle adjustment with additional parameters was used to calculate the geometrical calibration parameters and distortion parameters photogrammetrically using the PICTRAN software package, as shown in Fig. 1. As a result of the bundle adjustment process, the inner orientation parameters, the projection centre coordinates for each image and the corrections of these values were calculated for the three focal length settings.

Fig. 1. Calibration using bundle adjustment with additional parameters for the 3D test field.

Support vector machines

Support vector machines (SVMs) are a group of classification and regression algorithms that have been successfully used in many applications [16–20]. Although SVMs were developed for binary classification problems, they were later generalized for multiclass classification and regression problems [8, 21]. SVR approach predicts the output based on the input by estimating the nonlinear function between a given input and its corresponding output in the training data set. Thus, generated SVR model can be applied to predict outputs for given inputs, which are not included in the training data set [22]. The aim of the support vector

regression with ε - intensive loss function is to find optimum hyper plane, from which the

distance to all the data points is minimum. Assume that a training data set containing n

number of samples is represented by { ,i i

x y } (i = 1, …, n) where i

x is the input and i

y is the

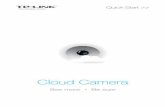

output. Optimum hyper plane works in the manner that approximates the training points well by minimizing the prediction error. As it can be seen from Fig. 2, the hyper plane is defined as

a linear function ( )f x w x b= ⋅ + , where x is a point lying on the hyper plane, parameter w

determines the orientation of the hyper plane in space, b is the bias that the distance of hyper plane from the origin. SVR seeks an optimal hyper plane that can estimate y without errors in the given ε -insensitive loss function. In other words, the distance from the optimal hyper

plane to any data point is less than ε [23]. SVR performs linear regression in the high

dimension feature space using ε -insensitive loss function and, at the same time, tries to

reduce model complexity by minimizing2

w . Thus, SVR is formulated as minimization of

the following optimization problem;

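$$\text{Minimize} \quad \frac{1}{2}\|w\|^2 + C \sum_{i=1}^{n} \left(\xi_i + \xi_i^*\right) \qquad (1)$$

$$\text{subject to} \quad \begin{cases} y_i - w \cdot x_i - b \le \varepsilon + \xi_i \\ w \cdot x_i + b - y_i \le \varepsilon + \xi_i^* \\ \xi_i,\ \xi_i^* \ge 0 \end{cases} \qquad (2)$$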
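To make the objective of Eq. (1) concrete, the following minimal sketch evaluates it for a given linear model. It relies on the standard observation that, for fixed w and b, the smallest slack values satisfying the constraints of Eq. (2) sum to max(0, |y − f(x)| − ε) for each sample. The function names and the sample values in the usage note are illustrative assumptions, not part of the study.

```python
def eps_insensitive_loss(residual, eps):
    """Loss is zero inside the eps-tube and grows linearly outside it."""
    return max(0.0, abs(residual) - eps)

def svr_primal_objective(w, b, xs, ys, C, eps):
    """Evaluate 0.5*||w||^2 + C * sum_i (xi_i + xi_i^*) for f(x) = w.x + b.

    For fixed (w, b) the optimal slack sum per sample equals the
    eps-insensitive loss of its residual y_i - f(x_i).
    """
    regulariser = 0.5 * sum(wj * wj for wj in w)
    slack = 0.0
    for x, y in zip(xs, ys):
        f = sum(wj * xj for wj, xj in zip(w, x)) + b
        slack += eps_insensitive_loss(y - f, eps)
    return regulariser + C * slack
```

For example, with w = [2.0], b = 1.0, ε = 0.1 and C = 10, the three hypothetical samples (0, 1.05), (1, 3.5) and (2, 4.0) give residuals of 0.05, 0.5 and −1.0; only the last two fall outside the tube, contributing slacks of 0.4 and 0.9.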

where $\xi_i$ and $\xi_i^*$ (i = 1, …, n) are slack variables that measure the deviation of training samples outside the ε-insensitive zone, and C is the regularization constant or penalty parameter that determines the trade-off between training error and model complexity [24]. In many regression applications the data are nonlinear. In such cases, SVR maps an input sample to a high dimensional feature space and tries to find an optimal hyper plane that minimizes the prediction error for the training data using the non-linear transformation function $K(x_i, x) = \phi(x_i) \cdot \phi(x)$, known as a kernel function [25]. A linear model is then constructed in this feature space [8, 21, 26]. Consequently, the SVR function that approximates the non-linear training data can be written as:

$$f(x) = w \cdot \phi(x) + b = \sum_{i=1}^{n} \left(\alpha_i - \alpha_i^*\right) K(x_i, x) + b \qquad (3)$$

where $\alpha_i$ and $\alpha_i^*$ are Lagrange multipliers ($0 \le \alpha_i, \alpha_i^* \le C$).
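The prediction function of Eq. (3) can be sketched as follows for a radial basis function kernel. The kernel is written here as K(x_i, x) = exp(−γ‖x_i − x‖²), which is the parameterization commonly used in SVM software and is an assumption, since the text does not give the explicit form. The support vectors and coefficients in the usage note are hypothetical values, not results of the study.

```python
import math

def rbf_kernel(xi, x, gamma):
    """RBF kernel K(xi, x) = exp(-gamma * ||xi - x||^2) (assumed form)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, x)))

def svr_predict(x, support_vectors, dual_coefs, b, gamma):
    """Evaluate f(x) = sum_i (alpha_i - alpha_i^*) K(x_i, x) + b.

    dual_coefs[i] holds the difference alpha_i - alpha_i^* of sample i;
    samples with a zero difference do not contribute and can be omitted.
    """
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, dual_coefs)) + b
```

As a usage example, `svr_predict((0.0, 0.0), [(0.0, 0.0), (1.0, 0.0)], [0.5, -0.25], 0.1, 0.5)` sums one kernel value of 1 (the query coincides with the first support vector) and one of exp(−0.5), weighted by the two coefficients, before adding the bias.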

Fig. 2. The illustration of SVM for linear regression problem.

The performance of SVR varies depending on the choice of the kernel function and its parameters. Kernel functions commonly used in SVRs can be aggregated into four groups, namely linear, polynomial, radial basis function (RBF) and sigmoid kernels. In this study, the radial basis function was preferred as the kernel function due to its effectiveness and its speed in the training phase [23, 27]. The generalization performance of SVMs relies on the proper selection of three parameters: the regularization parameter (C), the RBF kernel width (γ) and the threshold value (ε). These parameters can largely affect the accuracy produced at the end of a modeling process. As the optimum parameters vary for each data set, they must be determined before the training phase. Several methods have been suggested to determine the optimum values for the parameters. In this study, the grid search method with a cross validation approach was used to find the optimum parameter values for each focal length setting (i.e. 9, 18 and 36 mm). The main idea behind the grid search method is that different pairs of parameters are tested and the one with the highest cross validation accuracy is selected [28].
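The grid search idea can be sketched in a few lines of dependency-free Python. To keep the example self-contained, an RBF kernel ridge regressor stands in for the SVR solver; this stand-in is an assumption made purely for illustration and is not the ε-insensitive SVR used in the study. For regression, "highest cross validation accuracy" is taken here to mean the lowest cross-validated mean squared error. All function names, grid values and the synthetic data are hypothetical.

```python
import itertools
import math

def rbf(a, b, gamma):
    """RBF kernel exp(-gamma * ||a - b||^2)."""
    return math.exp(-gamma * sum((p - q) ** 2 for p, q in zip(a, b)))

def solve(A, y):
    """Solve A z = y by Gaussian elimination with partial pivoting."""
    n = len(y)
    M = [row[:] + [yi] for row, yi in zip(A, y)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z

def fit_stand_in(X, y, C, gamma):
    """Kernel ridge stand-in (NOT the paper's SVR): solve (K + I/C) a = y."""
    n = len(X)
    A = [[rbf(X[i], X[j], gamma) + (1.0 / C if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    coef = solve(A, y)
    return lambda x: sum(a * rbf(xi, x, gamma) for a, xi in zip(coef, X))

def cv_mse(X, y, C, gamma, k=5):
    """Mean squared prediction error over k interleaved folds."""
    n, sq_err = len(X), 0.0
    for fold in range(k):
        test_idx = range(fold, n, k)
        train_idx = [i for i in range(n) if i % k != fold]
        predict = fit_stand_in([X[i] for i in train_idx],
                               [y[i] for i in train_idx], C, gamma)
        sq_err += sum((predict(X[i]) - y[i]) ** 2 for i in test_idx)
    return sq_err / n

def grid_search(X, y, C_values, gamma_values, k=5):
    """Return the (C, gamma) pair with the lowest cross-validated error."""
    return min(itertools.product(C_values, gamma_values),
               key=lambda p: cv_mse(X, y, p[0], p[1], k))

# Synthetic 1D data, purely illustrative (not the paper's distortion data).
X = [(i / 10.0,) for i in range(20)]
y = [math.sin(x[0]) for x in X]
C_GRID, GAMMA_GRID = [1.0, 10.0, 100.0], [0.1, 1.0, 10.0]
best_C, best_gamma = grid_search(X, y, C_GRID, GAMMA_GRID)
```

In practice the same loop would wrap an actual SVR implementation and would also need ε to be fixed or searched; the point of the sketch is only the exhaustive evaluation of every (C, γ) pair on held-out folds.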

Results and discussions

Two calibration processes were applied for the selected lens settings with 9, 18 and 36 mm focal lengths. The calibration of the Olympus E10 digital camera was performed using the PICTRAN self-calibration module for several images of a 3D test field. The 3D coordinates of the coded targets were calculated using geodetic surveying techniques and the conventional photogrammetric approach. The coordinates estimated with conventional surveying techniques, which were produced with an accuracy of ±0.04 mm at real scale, were taken as the true coordinates. The control points were assumed to be geometrically error-free, so that the estimated errors of the adjustment do not depend on the weight matrix of the target points. Bundle adjustment with additional parameters, which is an iterative method, was applied for the three focal distances and, thus, the geometrical calibration and distortion parameters were estimated photogrammetrically. This method provides iterative corrections to the image coordinates. When the iterations are completed, the correction terms reach their minimum. The calibrated values of the inner orientation parameters and focal length are then calculated. At the end of the process, the distortions were estimated for the x and y coordinate directions from the differences between measured and adjusted values.

Total distortions estimated through the initial calibration were modeled by support vector regression (SVR) to understand the characteristics of the lens at various focal distances. The SVR process was carried out using the radial basis function kernel with the ε-insensitive loss function. Optimum parameters for the kernel were determined by the grid search method. Whilst the threshold value (ε) was kept constant at 0.001 for all cases, the regularization parameter (C) and kernel width values were set to 10 and 0.5 for the 9 mm focal length, 12 and 3.0 for the 18 mm focal length, and 30 and 4.0 for the 36 mm focal length. In all experiments 50 well-distributed control points on the 3D test field were used for training and 96 points were used for testing the performance of the modeling. The trained SVR models were thus tested on how well they had learnt the distortion surfaces in the x- and y-directions. Distortion differences were estimated at each point and then statistically analyzed (Table 1). It is clear from the table that the support vector machine model trained for the 9 mm setting produced better results than those for the 18 mm and 36 mm settings, specifically considering the standard deviations. Detailed analysis shows that the SVMs in fact create trend surfaces representing the complicated distortion surface. These surfaces are more complicated than two- or three-dimensional polynomial surfaces.

Table 1. Performance analysis of support vector machines for the targets determined on the test field with different focal length settings (image coordinate errors of targets).

| | 9 mm, x (µm) | 9 mm, y (µm) | 18 mm, x (µm) | 18 mm, y (µm) | 36 mm, x (µm) | 36 mm, y (µm) |
|---|---|---|---|---|---|---|
| Minimum | −2.1 | −5.7 | −2.3 | −5.1 | −3.6 | −6.6 |
| Maximum | 3.1 | 6.2 | 3.6 | 9.6 | 4.4 | 8.4 |
| Standard Dev. | 0.9 | 1.9 | 1.0 | 2.1 | 1.3 | 2.9 |

Trained SVR models can be considered as distortion surfaces for the lenses. Thus, the distortion value for any required point on the grid can be easily estimated. Correction terms estimated from the trained models are added to image coordinates. Instead of using a simple polynomial function, which has been a traditional way for representing distortions, a more complex representation of the distortion for any camera can be achieved with the SVR.
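The correction step described above can be sketched as follows. Two trained models are assumed, one for the x-direction and one for the y-direction, each taking an image coordinate pair and returning a correction term in the same units as the coordinates; the function name and the callable interface are assumptions made for illustration.

```python
def apply_corrections(points, model_dx, model_dy):
    """Add model-predicted correction terms to measured image coordinates.

    points   : list of (x, y) image coordinates
    model_dx : callable (x, y) -> correction in x (hypothetical trained model)
    model_dy : callable (x, y) -> correction in y (hypothetical trained model)
    """
    return [(x + model_dx(x, y), y + model_dy(x, y)) for x, y in points]
```

Any regressor exposing a prediction function for a single point could be passed in, so the same helper works whether the distortion surface is represented by SVR or by a conventional polynomial.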

After obtaining the adjusted image coordinates, the calibration process was repeated in order to test the effectiveness of SVR modeling. It should be pointed out that the standard deviations of the coordinates (X, Y and Z directions) were not considered in any of the bundle adjustment processes, in order to concentrate on the effect of the SVR-based distortion corrections. In other words, the geodetic coordinates were regarded as true coordinates, ignoring the geodetic errors in order to assign equal weights to each coordinate. As a result of the adjustment process, the 3D coordinates of image projection points, inner orientation parameters, distortion values and their root mean square errors were calculated. The results of this process are summarized in Tables 2, 3 and 4 for the 9, 18 and 36 mm focal lengths, respectively. Projection centre coordinates are provided for the five images taken at each focal distance. The effectiveness of the SVR method in distortion modeling can be seen from these tables in that the root mean square errors (RMSE) of the projection centre coordinates were drastically reduced from the centimeter level to several millimeters. It should be noted that performance analyses were reported for projection centres since a limited number of images was considered for the on-the-job calibration process. Maximum RMSE values reached 74 mm without SVR and 4.7 mm with SVR for the 9 mm focal length, 54 mm without SVR and 3.2 mm with SVR for the 18 mm focal length, and 97 mm without SVR and 7 mm with SVR for the 36 mm focal length. In addition, an improvement was achieved in the estimation of the inner orientation parameters. It was also found that the longer the focal length, the smaller the improvement in the RMSE of all parameters. This finding is particularly important for the calibration of images acquired at short focal lengths (e.g. fisheye focal lengths and close-range applications). Another important result was that the RMSE values of the Z-coordinates decreased with the addition of the SVM-derived corrections. With the improvement in the Z-coordinates, the reliability of the bundle-adjusted network was thus increased.

Table 2. RMSE values of projection centers estimated through bundle block adjustment with and without SVR processes and inner orientation parameters for 9mm focal length.

| Projection Centre | X, without SVR (mm) | Y, without SVR (mm) | Z, without SVR (mm) | X, with SVR (mm) | Y, with SVR (mm) | Z, with SVR (mm) |
|---|---|---|---|---|---|---|
| 1 | 71.9 | 73.5 | 61.1 | 3.2 | 3.3 | 4.7 |
| 2 | 64.6 | 65.1 | 63.1 | 2.1 | 2.9 | 4.0 |
| 3 | 57.0 | 58.6 | 47.4 | 2.6 | 2.4 | 3.6 |
| 4 | 64.7 | 65.4 | 47.4 | 3.8 | 3.9 | 4.0 |
| 5 | 55.8 | 55.6 | 57.5 | 1.8 | 2.7 | 3.1 |

| Inner Orientation Parameters | x0 (µm) | y0 (µm) | c (µm) |
|---|---|---|---|
| RMSE without SVR | 1.2 | 1.2 | 3.7 |
| RMSE with SVR | 1.0 | 1.0 | 2.8 |

Focal length: 9.11822mm, x0: −0.00276mm, y0: 0.00442mm

Table 3. RMSE values of projection centers estimated through bundle block adjustment with and without SVR processes and inner orientation parameters for 18mm focal length.

| Projection Centre | X, without SVR (mm) | Y, without SVR (mm) | Z, without SVR (mm) | X, with SVR (mm) | Y, with SVR (mm) | Z, with SVR (mm) |
|---|---|---|---|---|---|---|
| 1 | 25.1 | 18.7 | 32.1 | 3.2 | 2.4 | 2.7 |
| 2 | 30.4 | 17.8 | 36.8 | 2.8 | 2.0 | 2.4 |
| 3 | 16.9 | 27.8 | 30.1 | 3.1 | 2.5 | 2.6 |
| 4 | 30.2 | 42.1 | 53.6 | 2.3 | 1.5 | 2.3 |
| 5 | 15.5 | 13.3 | 17.8 | 2.8 | 1.9 | 2.8 |

| Inner Orientation Parameters | x0 (µm) | y0 (µm) | c (µm) |
|---|---|---|---|
| RMSE without SVR | 0.9 | 0.9 | 3.5 |
| RMSE with SVR | 0.7 | 0.7 | 3.1 |

Focal length: 17.67949mm, x0: −0.05926mm, y0: 0.08680mm

Table 4. RMSE values of projection centers estimated through bundle block adjustment with and without SVR processes and inner orientation parameters for 36mm focal length.

| Projection Centre | X, without SVR (mm) | Y, without SVR (mm) | Z, without SVR (mm) | X, with SVR (mm) | Y, with SVR (mm) | Z, with SVR (mm) |
|---|---|---|---|---|---|---|
| 1 | 33.9 | 45.0 | 95.5 | 2.3 | 3.4 | 5.1 |
| 2 | 20.0 | 21.8 | 97.2 | 1.4 | 2.2 | 5.3 |
| 3 | 7.2 | 11.4 | 96.0 | 3.2 | 4.4 | 5.5 |
| 4 | 6.4 | 11.1 | 94.9 | 6.4 | 1.1 | 4.9 |
| 5 | 16.9 | 20.0 | 95.5 | 1.6 | 0.0 | 2.7 |

| Inner Orientation Parameters | x0 (µm) | y0 (µm) | c (µm) |
|---|---|---|---|
| RMSE without SVR | 0.6 | 0.6 | 2.0 |
| RMSE with SVR | 0.5 | 0.5 | 1.8 |

Focal length: 35.93247mm, x0: −0.03774mm, y0: 0.01018mm
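The maximum RMSE values quoted in the text can be re-derived from the projection centre values in Tables 2 to 4. The short sketch below does this arithmetic; the variable names are illustrative and the numbers are copied from the tables as printed.

```python
# Projection centre RMSE values (mm) from Tables 2-4: (X, Y, Z) per image.
rmse_without = {
    9:  [(71.9, 73.5, 61.1), (64.6, 65.1, 63.1), (57.0, 58.6, 47.4),
         (64.7, 65.4, 47.4), (55.8, 55.6, 57.5)],
    18: [(25.1, 18.7, 32.1), (30.4, 17.8, 36.8), (16.9, 27.8, 30.1),
         (30.2, 42.1, 53.6), (15.5, 13.3, 17.8)],
    36: [(33.9, 45.0, 95.5), (20.0, 21.8, 97.2), (7.2, 11.4, 96.0),
         (6.4, 11.1, 94.9), (16.9, 20.0, 95.5)],
}
rmse_with = {
    9:  [(3.2, 3.3, 4.7), (2.1, 2.9, 4.0), (2.6, 2.4, 3.6),
         (3.8, 3.9, 4.0), (1.8, 2.7, 3.1)],
    18: [(3.2, 2.4, 2.7), (2.8, 2.0, 2.4), (3.1, 2.5, 2.6),
         (2.3, 1.5, 2.3), (2.8, 1.9, 2.8)],
    36: [(2.3, 3.4, 5.1), (1.4, 2.2, 5.3), (3.2, 4.4, 5.5),
         (6.4, 1.1, 4.9), (1.6, 0.0, 2.7)],
}

def max_rmse(table):
    """Largest RMSE over all images and all three coordinate directions."""
    return max(value for row in table for value in row)

# Maximum RMSE per focal length (mm): (without SVR, with SVR).
maxima = {f: (max_rmse(rmse_without[f]), max_rmse(rmse_with[f]))
          for f in (9, 18, 36)}
```

The resulting maxima are 73.5 and 4.7 mm for 9 mm, 53.6 and 3.2 mm for 18 mm, and 97.2 and 6.4 mm for 36 mm, which agree with the rounded figures in the text except that the tabulated 36 mm value with SVR is 6.4 mm rather than 7 mm.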

For a detailed analysis of the results, comparisons were made through the object point discrepancies and standard errors produced for the 50 selected object points that were also used in the SVR training stage. Whilst the analyses for the bundle block adjustment without the SVR process are given in Table 5, those for the adjustment with the SVR process are shown in Table 6. It is clear from the tables that lower coordinate discrepancies were estimated for the adjustments conducted using the SVR approach. It can be seen that the accuracy in height is improved more than the planimetric accuracy in all cases. The use of SVR had a considerable impact on the standard errors, by about 1.5 mm. Compared to the X coordinate discrepancies, greater improvements were achieved for the Y and Z coordinates. The results clearly indicate the value of the suggested SVR operation in modeling total distortions in an on-the-job calibration process.

Table 5. Summary of adjustment results for the 9mm, 18mm and 36mm lenses before SVM application. Coordinate discrepancies are shown as minimum and maximum values.

| Focal length | Number of images | ∆x min (mm) | ∆x max (mm) | ∆y min (mm) | ∆y max (mm) | ∆z min (mm) | ∆z max (mm) | σ∆x (mm) | σ∆y (mm) | σ∆z (mm) |
|---|---|---|---|---|---|---|---|---|---|---|
| 9mm | 5 | 0.1325 | 6.7105 | 0.0907 | 9.4160 | 0.0228 | 8.0913 | 2.67 | 3.48 | 3.14 |
| 18mm | 5 | 0.0567 | 6.7469 | 0.0200 | 9.4641 | 0.0276 | 9.2876 | 2.70 | 3.61 | 3.45 |
| 36mm | 5 | 0.1222 | 8.2345 | 0.1977 | 7.2872 | 0.0048 | 6.7121 | 3.12 | 2.88 | 2.71 |

Table 6. Summary of adjustment results for the 9mm, 18mm and 36mm lenses after SVM application. Coordinate discrepancies are shown as minimum and maximum values.

| Focal length | Number of images | ∆x min (mm) | ∆x max (mm) | ∆y min (mm) | ∆y max (mm) | ∆z min (mm) | ∆z max (mm) | σ∆x (mm) | σ∆y (mm) | σ∆z (mm) |
|---|---|---|---|---|---|---|---|---|---|---|
| 9mm | 5 | 0.0058 | 5.1470 | 0.1056 | 6.3611 | 0.0331 | 4.6685 | 2.26 | 2.65 | 1.94 |
| 18mm | 5 | 0.0153 | 4.5930 | 0.0187 | 6.3751 | 0.1599 | 5.4692 | 2.07 | 2.42 | 2.34 |
| 36mm | 5 | 0.0404 | 6.6883 | 0.0200 | 6.1938 | 0.0296 | 4.1331 | 2.89 | 2.33 | 2.13 |
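The change in object point standard errors between Tables 5 and 6 can be tabulated with a few lines of arithmetic. The sketch below uses the standard error values as printed in the two tables; the variable and function names are illustrative.

```python
# Object point standard errors (mm) as (sigma_dx, sigma_dy, sigma_dz).
before_svr = {9: (2.67, 3.48, 3.14), 18: (2.70, 3.61, 3.45), 36: (3.12, 2.88, 2.71)}
after_svr = {9: (2.26, 2.65, 1.94), 18: (2.07, 2.42, 2.34), 36: (2.89, 2.33, 2.13)}

def reductions(before, after):
    """Per-axis reduction in standard error (mm), rounded to two decimals."""
    return {f: tuple(round(b - a, 2) for b, a in zip(before[f], after[f]))
            for f in before}

# Reduction per focal length and axis (mm).
std_error_reduction = reductions(before_svr, after_svr)
```

For the tabulated values the reductions are (0.41, 0.83, 1.20) mm at 9 mm, (0.63, 1.19, 1.11) mm at 18 mm and (0.23, 0.55, 0.58) mm at 36 mm, so the y and z components improve more than the x component at every focal length.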

Conclusion

With recent developments and achievements in digital photography and technology, digital cameras have become popular and are used for a variety of purposes. Calibration of these cameras has become an important issue and a research topic in the scientific community. Camera calibration is regarded as an essential step in photogrammetric 3D object restitution. Calibration parameters including focal length, principal point location and lens distortion are usually determined by a self-calibrating approach. In the calibration of non-metric cameras, accurate determination of the optical distortions due to the lens (i.e. radial and tangential lens distortions) is of vital importance.

Low order polynomials have conventionally been used to model the distortions, particularly radial lens distortion. The use of polynomials can give inferior results for some particular cases and camera types [4]. In this study, support vector machines, specifically with an ε-insensitive loss function and a radial basis function kernel, are suggested to model the distortions of an Olympus E10 digital SLR camera. The distortions estimated through bundle adjustment with additional parameters were used as inputs to the SVMs. After training the SVMs with some intelligent approaches, the model performance was tested both graphically and statistically. The results show that distortions can be modeled with a standard deviation of less than 3 microns. Several important conclusions can be drawn from the outputs of the study. Firstly, the errors of the control point coordinates estimated through SVMs are more homogeneously distributed. Secondly, the positive effect of the training sample selection process in terms of sample distribution can be seen from the results produced for the 3D calibration test field. Thirdly, the suggested SVR model can be particularly valuable for photogrammetric and machine vision studies utilizing short focal distances and only a few images in on-the-job calibration. Finally, the results produced in this study confirm the value of support vector machines in this particular modeling problem (i.e. distortion modeling for a non-metric digital camera).
