ADAPTIVE LEARNING vs. EQUILIBRIUM REFINEMENTS IN AN ENTRY LIMIT PRICING GAME*


The Economic Journal, (May), –. © Royal Economic Society. Published by Blackwell Publishers, Cowley Road, Oxford OX JF, UK and Main Street, Malden, MA, USA.


David J. Cooper, Susan Garvin and John H. Kagel

Signalling models are studied using experiments and adaptive learning models in an entry limit pricing game. Even though high cost monopolists never play dominated strategies, the easier it is for other players to recognise that these strategies are dominated, the more likely play is to converge to the undominated separating equilibrium and the more rapidly limit pricing develops. This is inconsistent with the equilibrium refinements literature (including Cho–Kreps’ intuitive criterion) and pure (Bayesian) adaptive learning models. An augmented adaptive learning model in which some players recognise the existence of dominated strategies and their consequences predicts these outcomes.

In signalling games there is typically a multiplicity of sequential equilibria. This has motivated the search for equilibrium refinements to narrow down the set of ‘plausible’ equilibria (for example, Grossman and Perry; Banks and Sobel; Cho and Kreps; Mailath et al.). These equilibrium refinements all involve important elements of forward induction: following a deviation from equilibrium, players are assumed to follow a chain of logic about why the deviation might have occurred. Given this logic, constraints are put on admissible out-of-equilibrium beliefs. Constraining beliefs in turn reduces the set of potential equilibria.

Experiments have raised serious doubts about the validity of equilibrium refinements. Papers by Brandts and Holt and by Partow and Schotter indicate that the success of Cho–Kreps’ intuitive criterion rests on ‘inconsequential’ changes in payoffs rather than the underlying structure of the game. Cooper et al. (a), while not providing any direct contradiction of the intuitive criterion, show that equilibrium refinements fail to characterise both the history of play and whether or not a pure strategy pooling or separating equilibrium will emerge when both exist.¹

In this paper, we not only show clear violations of the intuitive criteria, but also develop an augmented adaptive learning model which predicts when the intuitive criteria will be violated, as well as when they will be satisfied. In this model, players are treated as being boundedly rational, learning to play the game through a trial and error process. Behaviour is history dependent rather than forward looking as in the refinements approach. The novel feature of our

* This research has been supported by a grant from the National Science Foundation. Earlier versions of this paper were presented at the Experimental Game Theory Conference at SUNY Stony Brook, the ESA meetings in Tucson and in seminars at a number of universities. We have benefited from comments and discussions resulting from these presentations. Alexis Miller provided valuable research assistance. Thanks to Charles Plott for providing us with speedy access to the data from Miller and Plott. The usual caveat applies.

¹ Also see the discussion of Miller and Plott below, which provides quite possibly the earliest experimental evidence of equilibrium problems.


learning model is that we introduce limited deductive reasoning on the part of some players.

This limited deductive reasoning is necessary to characterise the data. The refinements literature provides a clue as to why this is necessary. The weakest possible refinement used in signalling games is a single round of elimination of dominated strategies (Milgrom and Roberts; Cho and Kreps). No equilibrium which fails to survive this criterion will survive any of the stronger refinements such as the intuitive criterion or divinity. For many simple signalling games (two types, single crossing property), a single round of elimination of dominated strategies is sufficient to yield the Riley outcome (the efficient pure strategy separating equilibrium) as the unique outcome. Implicit in the successful application of elimination of dominated strategies is a strong common knowledge assumption: not only is it necessary that ‘low quality’ signallers not use their dominated strategies, it is also necessary that receivers anticipate that dominated strategies will never be used. Without this anticipation, a weak form of forward induction, the refinement will not be satisfied.

Analogous logic can be applied in a learning model. In any learning model which employs maximisation, players never choose to play dominated strategies for themselves (except as a result of ‘errors’ or ‘experimentation’). However, it need not follow that players anticipate that others will not use dominated strategies. This is consistent either with a failure to recognise the dominated strategies (bounded rationality) or a belief that others will fail to recognise the dominated strategies (strategic uncertainty). Thus, some players might attach positive prior probability to ‘low quality’ types playing their dominated strategies rather than the zero prior probability predicted by elimination of dominated strategies. The existence of such ‘irrational’ players affects early play of the game, distorting belief formation by making the Riley outcome look less safe for ‘high quality’ types. The net result is that learning may converge to an equilibrium other than the Riley outcome.

To incorporate this effect into our model, we augment a standard fictitious play (or Bayesian) learning model by giving some proportion (π) of players the ability to anticipate that no player will use a dominated strategy. With π = 0 (no players anticipate that the dominated strategies will be unused), our model converges very slowly to equilibrium and does not converge to the Riley equilibrium. As π increases the speed of convergence also increases and a greater proportion of simulations converge to the Riley outcome.

To test these predictions of the model, we introduce experimental treatments that make it easier or harder for other players to recognise low quality types’ dominated strategies. The results of the experiment are consistent with the predictions of the augmented learning model: in treatments where it is difficult to recognise the dominated strategies, play converges more slowly and tends towards either a mixed strategy equilibrium or an inefficient separating equilibrium. (Both these equilibria are eliminated via a single round deletion of dominated strategies.) In treatments where it is trivial to recognise the dominated strategies, play converges quickly to the Riley equilibrium.


In summary, our work makes several contributions to the literature on signalling. First, our data provide clear violations of the weakest equilibrium refinement, single round elimination of dominated strategies. These results reinforce the conclusions of earlier experimental papers, making it very difficult to accept the refinements literature as a good model of players’ behaviour. Second, we provide an alternative model which is capable of characterising the experimental results, in particular allowing us to explain why the intuitive criterion fails in some cases but not in others. The model explains the sensitivity of experimental outcomes to what, from a standard game theoretic approach, appear to be relatively minor variations in players’ payoffs. Our results suggest that at least some subjects, while not the ultra-rational beings of the refinements literature, are capable of basic strategic reasoning. Further research is needed to determine which factors influence the learning process and the depth of reasoning employed by subjects.

There has been some perception that experiments of the sort presented here are ‘anti-theory’. Nothing could be further from the truth. We believe that experiments and theory must proceed together if either is to avoid becoming completely ad hoc. In Cooper et al. (a) we abandoned the equilibrium refinements approach and developed our augmented adaptive learning model because the existing theory was unable to explain the experimental data. In our current work the model is used to design experiments suggesting what sort of treatments might lead to interesting results. We in no way claim that we have developed the canonical theory of how people play games. However, developing a theory rooted in the data is indispensable as a way of organising the data, anticipating interesting new effects, and (most importantly) generalising our results beyond a single game played under a very specific set of conditions.

Game theory is at its foundation an hypothesis about how people behave. In particular, it posits that individuals will attempt to anticipate the behaviour of others and respond accordingly. This is the soul of game theory, and the experiments indicate that it is alive and well. What may not be so healthy are the legion of assumptions which have been added to the theory in order to get tractable results. In the rush to get theories which give sensible outcomes and are easily employed by theorists, the reality of how people actually behave may have been left behind. We do not suggest that game theory be abandoned, but rather that, as a descriptive model, it needs to incorporate more fully how people actually behave.

The plan of the paper is as follows: Section I characterises our basic experimental design: the entry limit pricing game and the contrasting predictions for experiment based on equilibrium refinements, pure adaptive learning, and the augmented adaptive learning model first developed in Cooper et al. (a). The procedures underlying the first experiment are described in Section II and the results reported in Section III. Section IV describes the procedural changes underlying experiment , the contrasting model predictions, and the results of the experiment. A brief concluding Section summarises our main results and their broader implications, relates our


results to other experiments reported in the literature, and indicates the relationship between our adaptive learning model and others proposed in the literature.

.

The payoffs and signals employed are based on Milgrom and Roberts’ model of entry limit pricing. They model a two-period game with a homogeneous good and a linear market demand curve. There are two players, a monopolist (M) and a (potential) entrant (E). M is either a high cost (MH) or a low cost (ML) type. M observes her type before the game begins. E does not know M’s type, but the probability of each type is common knowledge (% in all our experimental sessions).

In the first period M chooses a production level which is observed by E. E then decides whether or not to enter in the second period. If E enters, the two firms compete as Cournot duopolists. If E stays out, M is an uncontested monopolist in the second period. M is always better off with no entry. If E knew M’s type, it would be profitable to enter against MH, but not against ML. Since E does not know M’s type, there is an incentive for MHs to imitate MLs, and for MLs to attempt to distinguish themselves from MHs. Both types of signalling involve producing above full information levels, hence pricing below full information levels (limit pricing).

Our experiment focuses on the equilibrium predictions of the underlying signalling game. To do this we collapse the game into a standard signalling game by imposing the Cournot outcome in the second stage if entry occurs, and the profit-maximising monopoly outcome in the second stage if no entry occurs. We also make the set of first-period actions discrete according to the set {1, 2, …, 7}, corresponding to different ‘production’ levels. The E player’s action set subject to M’s choices is {IN, OUT}.²

I.A. Nash Equilibria and Equilibrium Refinements

Table a, b show the basic payoffs employed in our experiment. (Numbers in bold are used in the current experiments, numbers in italics are from treatments used in Cooper et al. (a).) E’s payoffs (Table b) reflect the second period returns for staying out and receiving their reservation value or entering and playing a Cournot duopoly game with M. E’s payoffs are designed not to permit the existence of any pure strategy pooling equilibria: given the prior probability of an MH (%) versus the prior probability of an ML (%), the expected value of IN is greater than OUT ( vs. ).

Thus, the only pure strategy sequential equilibria for the limit pricing game are separating equilibria in which M’s choices reveal their type, and Es play IN (OUT) in response to MH’s (ML’s) choice.³ Two pure strategy separating equilibria exist: (i) the Riley outcome in which MH chooses and ML

² A more detailed examination of the Milgrom–Roberts model and the way it was mapped into our payoff tables is provided in Garvin.

³ Unless otherwise noted, all the equilibria we describe are sequential (Kreps and Wilson).


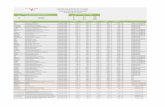

Table a

(Monopolist) A Player’s Payoffs as a Function of B Player’s Choice

[Columns: Your choice; A (High cost): X (IN), Y (OUT); A (Low cost): X (IN), Y (OUT).]

Terms in () not included in experiment. Payoffs are in ‘francs’, which were converted to dollars. Bold faced payoffs used in present experiment. Italicised payoffs used in Cooper et al. (a).

Table b

(Entrant) B’s Payoffs

[Columns: Your action choice, X (IN) or Y (OUT); Your payoff by A Player’s type: A (High cost), A (Low cost).]

chooses and (ii) an inefficient equilibrium in which MH chooses and ML chooses . With MLs choosing or , it does not pay for MHs to imitate them since payoffs at dominate or independent of E’s choice at this output level. Out-of-equilibrium beliefs (OEB) which support these equilibria are that any deviation from ML’s equilibrium choice involves an MH with sufficiently high probability (% }) to induce entry. Given this, MLs are better off using the equilibrium strategy than choosing a lower output level.

There are many partial-pooling (mixed-strategy) equilibria for this game, but we deal with one that has drawing power in both the experiments and the simulations: in this equilibrium MLs always choose and MHs mix between and , choosing with probability ±. Es always choose IN on , but play a mixed strategy in response to , playing IN with probability ±. MHs are indifferent between and and Es are indifferent between IN and OUT on . The OEB that support this equilibrium are that any deviation from must represent an MH type with sufficiently high probability to induce entry.
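The two indifference conditions behind this partial pooling equilibrium can be written out as a short sketch. The notation is introduced here for illustration only and is an assumption, not the paper's: $p$ is the prior probability of an MH, $a$ is the output level at which MHs are always met with IN, $b$ is the pooled output level, $q$ is the probability with which an MH chooses $b$, $e$ is the probability that an E plays IN on $b$, $u_H$ and $u_E$ are payoffs taken from the payoff tables, and $R$ is the entrant's reservation value from OUT.

```latex
% Posterior probability that output b came from an M_H
% (M_L always chooses b, M_H chooses b with probability q):
\mu(b) = \frac{p\,q}{p\,q + (1 - p)}

% E is indifferent between IN and OUT after observing b:
\mu(b)\,u_E(\mathrm{IN}\mid M_H) + \bigl(1 - \mu(b)\bigr)\,u_E(\mathrm{IN}\mid M_L) = R

% M_H is indifferent between a (always met with IN) and b:
u_H(a,\mathrm{IN}) = e\,u_H(b,\mathrm{IN}) + (1 - e)\,u_H(b,\mathrm{OUT})
```

Under these assumptions the first two lines determine the mixing probability $q$ of the MHs, and the third determines the entry probability $e$ on the pooled output level.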


The multiplicity of equilibria in the entry-limit pricing game is a common feature of signalling games. This occurs because sequential equilibrium puts (virtually) no restrictions on the OEB, leading to equilibria which are supported by ‘implausible’ beliefs. The equilibrium refinements literature has attempted to narrow down the set of equilibria by providing criteria for eliminating ‘implausible’ beliefs. This is typically done by forward induction; the receiver is hypothesised to follow some chain of reasoning about what type of sender could (or could not) have made a deviation, thus restricting OEB.

One of the weakest equilibrium refinements in terms of the demands it makes on players’ cognitive capacities is elimination of dominated strategies. We follow the lead of Milgrom and Roberts in applying a single round of deletion of strictly dominated strategies and looking at the sequential equilibria of the reduced game.⁴ Restricting the equilibrium set in this way eliminates the inefficient equilibrium with MLs choosing , since it depends on Es believing that MHs will play , a strictly dominated strategy. It also rules out the beliefs underlying the partial pooling equilibrium: eliminating the OEB that MHs will play strictly dominated strategies and , MLs obtain a higher payoff at () where there is no entry compared to an expected return of ± from choosing with its entry rate of ±. The equilibria which survive a single elimination of dominated strategies are a superset of those which survive the intuitive criterion (Cho and Kreps) which in turn are a superset of those which survive divinity (Banks and Sobel). Thus, both of these refinements yield the Riley outcome as the unique equilibrium. Perfect sequential equilibrium (Grossman and Perry) and undefeated equilibrium (Mailath et al.) select the Riley outcome as well.

I.B. Equilibria and Adaptive Learning

The adaptive learning model described here was first used to organise behaviour in a related signalling game experiment (Cooper et al. a). In what follows we briefly characterise the adaptive learning model and its predictions regarding changes in π, the proportion of Es who anticipate that MHs will not use their dominated strategies.

In our adaptive learning model, players learn to play the game through a process of trial and error loosely based on fictitious play. As such it falls under the rubric of Bayesian learning. Players act as if they are playing against a stable distribution of strategies which they learn about over time. At any given time, a best response is played versus their beliefs about the distribution of strategies.⁵

⁴ A single round of eliminating dominated strategies only requires mutual knowledge of rationality rather than common knowledge, to use the terminology of Aumann and Brandenburger. In this sense the refinement is cognitively less demanding than either iterated elimination of dominated strategies or the intuitive criterion.

⁵ It has been argued that fictitious play and similar models should really be known as misspecified Bayesian learning, since players fail to account for the changing distribution of their opponents’ strategies. For recent papers exploring adaptive learning in a more general setting, see among others Milgrom and Roberts and Fudenberg and Kreps.


In our model, Ms start out with prior beliefs regarding the probability of Es choosing IN or OUT in response to each of the output levels. Es start with prior beliefs about the probability of a particular output level being chosen by an MH versus an ML. The distributions of initial beliefs for Ms and Es are fitted from actual first period data. After initial beliefs are generated Ms and Es then play (typically) sixty rounds of the entry limit pricing game. In each round, Ms and Es are randomly matched. Ms’ choices and Es’ responses maximise their payoffs, conditional on beliefs. Following each play of the game, beliefs are updated based on each player’s own outcome and the outcomes from all matchings in the previous period. The updating rule is a weighted average of each player’s initial beliefs (which vary across players) and historical experience, where all prior experience receives equal weight. (See the Appendix for a detailed description of the adaptive learning model.)
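The updating rule described above can be illustrated with a minimal Python sketch. It is not the specification from the paper's Appendix: the class `EntryBelief`, the pseudo-observation weight `prior_weight`, and the function `best_response` are hypothetical names introduced here, and the rule shown is simply one way to form a weighted average of a prior and equally weighted past observations.

```python
from dataclasses import dataclass


@dataclass
class EntryBelief:
    """An M's belief that E plays IN at one output level (illustrative only)."""
    prior: float         # initial belief, e.g. fitted from first-period data
    prior_weight: float  # hypothetical weight on the prior, in pseudo-observations (> 0)
    n_obs: int = 0       # number of observed plays at this output level
    n_in: int = 0        # number of those plays that were met with IN

    def update(self, entered: bool) -> None:
        """Record one observed outcome; every observation gets equal weight."""
        self.n_obs += 1
        self.n_in += int(entered)

    @property
    def p_in(self) -> float:
        """Weighted average of the prior and the observed entry frequency."""
        total = self.prior_weight + self.n_obs
        return (self.prior_weight * self.prior + self.n_in) / total


def best_response(beliefs, payoff_in, payoff_out):
    """Return the output level with the highest expected payoff given beliefs."""
    def expected(level):
        p = beliefs[level].p_in
        return p * payoff_in[level] + (1 - p) * payoff_out[level]
    return max(beliefs, key=expected)
```

For example, a belief with `prior=0.5` and `prior_weight=2.0` that then observes two entries moves to `(2.0 * 0.5 + 2) / (2.0 + 2) = 0.75`. The payoff dictionaries passed to `best_response` would come from the payoff tables; any numbers used to try the sketch are placeholders.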

Players in the adaptive learning model are rational in a limited sense. They maximise payoffs subject to their beliefs and update their beliefs in accordance with observed outcomes. Unlike the refinements literature, players are not assumed either to ‘know’ their opponent’s strategy or to reason carefully through the motives behind their opponent’s strategy. Thus, the most demanding assumptions of the refinements literature are absent.

We do not develop the general theoretical properties of the simulation model in this paper. However, a few remarks are in order. Adaptive learning models of this sort need not converge to a steady state. However, in simulations for our game, play has always converged to a Nash equilibrium. For any two-person game in which the model does converge, there must exist some Nash equilibrium which induces the observed steady-state strategies. This need not be true for stronger refinements, such as the intuitive criterion or subgame perfection. However, any steady state of the adaptive learning model for the entry limit pricing game must be consistent with a sequential equilibrium.⁶

As noted in the introduction, simulations of the adaptive learning model are sensitive to whether or not Es anticipate that MHs will not use their dominated strategies. To capture this strategic anticipation, the pure adaptive learning model is augmented by assuming that some proportion π of the Es recognise that and are dominated for MHs and that these strategies will never be chosen. These Es place zero weight on an MH choosing or and thus never enter following these choices.
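A minimal Python sketch of this augmentation is given below, under stated assumptions. The function names, the payoff tuple, and the set of dominated output levels are hypothetical placeholders, not values from the paper. The only feature carried over from the text is that a proportion π of Es put zero weight on an MH having chosen a dominated output level, while the remaining Es use whatever belief they have learned.

```python
import random


def enters(p_high, pay_in_high, pay_in_low, pay_out):
    """Best response: enter if the expected payoff of IN exceeds that of OUT."""
    return p_high * pay_in_high + (1 - p_high) * pay_in_low > pay_out


def draw_entrants(n, pi, rng):
    """Return n flags; each E recognises the dominated strategies with probability pi."""
    return [rng.random() < pi for _ in range(n)]


def entry_decision(sophisticated, level, learned_p_high, dominated, payoffs):
    """Entry choice of one E after observing output `level`.

    payoffs is a tuple (pay_in_high, pay_in_low, pay_out) of placeholder values.
    """
    if sophisticated and level in dominated:
        p_high = 0.0  # zero weight on an MH having chosen a dominated level
    else:
        p_high = learned_p_high[level]  # belief learned from past play
    return enters(p_high, *payoffs)
```

Because entry against an ML is unprofitable, an E with `p_high = 0.0` stays out, which is how the sketch reproduces the statement that these Es never enter following the dominated choices. Setting `pi` to 0 or 1 in `draw_entrants` gives the two polar cases discussed in the text.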

Holding π fixed, simulations of the augmented adaptive learning model yield a consistent pattern of play. As demonstrated in Cooper et al. (a), this pattern of play replicates the major features of limit pricing game data. Fig. provides two representative runs of the simulation model with π = ±. In the top panel play converges to the efficient separating equilibrium, while in the bottom panel play converges to the partial pooling equilibrium. In both cases

⁶ Existence of a Nash equilibrium which induces steady-state strategies follows from the fact that steady-state strategies must be a self-confirming equilibrium (Fudenberg and Levine). For the limit pricing game, there exist beliefs which can justify any strategy off the equilibrium path for Es. It follows that any steady state must be sequential.

[Figure: histograms of monopolists’ choices (horizontal axis: Monopolists’ choice, 1 to 7; vertical axis: %) for Rounds 1–3, Rounds 7–9 and Rounds 58–60. Panel 1: undominated separating (Riley) equilibrium. Panel 2: partial pooling equilibrium.]

Fig. . Representative simulation results of Ms’ play: in panel 1 play converges to the undominated (Riley) separating equilibrium. In panel 2 play converges to the partial pooling equilibrium with MLs playing .

play starts at the myopic maxima, with MLs mostly choosing and MHs mostly choosing . Play typically passes through a ‘temporary pooling’ outcome, with both M types choosing most often, before MLs cleanly separate. With Ms starting at their respective myopic maxima, there is initially limited entry on . Only when MHs begin to imitate MLs by playing will the entry rate on rise sufficiently to induce limit pricing by MLs. After MLs begin to limit price, MHs slowly return to their equilibrium output level of .

Changes in π, the proportion of Es who anticipate that MHs will not play their dominated strategies, have two broad effects on the simulations. First, the equilibrium outcomes are changed. If π = , % of the simulations converge to the partial pooling equilibrium while the remaining simulations converge to the Riley equilibrium. In contrast, with π = , % of the simulations converge to the Riley equilibrium while only % converge to the partial pooling equilibrium. Intermediate cases fall somewhere in between these two extremes: for example, if π = ±, % of the simulations converge to the Riley equilibrium and % to the partial pooling equilibrium.

The effect of π on equilibrium outcomes is driven by early differences in play. When π = , there is no difference in the way Es treat or initially. In the early stages of play, only MLs are observed playing or , with chosen more often than , due to its more favourable payoff. Given its greater initial frequency of play, Es learn that represents MLs faster than they learn that does. The net result is that Es play OUT on earlier than on , reinforcing MLs’ natural bias towards . As a result, in many simulations ceases to be played before Es learn that only MLs choose it. However, when π = , the difference


in entry rates on versus is large enough to overcome the higher payoff MLs get for choosing .⁷

Increasing π also increases the speed with which limit pricing (play of , , or by MLs) emerges in the simulations. This is shown in Fig. . Increasing π

[Figure: line plot. Horizontal axis: Rounds, in blocks of three from 1–3 to 28–30. Vertical axis: % of MLs’ limit pricing, 0 to 100.]

Fig. . Simulation model predictions for the frequency of limit pricing under different assumptions about the percentage of Es who recognise that and are dominated for MHs and that these strategies will never be chosen.

increases the speed with which separating emerges by decreasing initial entry rates on . The lower the entry rate on , the lower the entry rate on needed to induce limit pricing. Hence, the more rapidly limit pricing emerges.
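The threshold logic of this paragraph can be made explicit with a short derivation. The notation is an assumption introduced for illustration: $x_m$ is the ML's myopic maximum, $x_\ell$ is a higher limit pricing output level, $e_m$ and $e_\ell$ are the entry rates an ML expects at those levels, and $u_L$ is the ML's payoff. The derivation uses only the fact, stated earlier, that M is always better off with no entry.

```latex
% Expected payoff to an M_L from limit pricing at x_l:
U_\ell(e_\ell) = u_L(x_\ell,\mathrm{OUT}) - e_\ell\,\bigl[u_L(x_\ell,\mathrm{OUT}) - u_L(x_\ell,\mathrm{IN})\bigr]

% Limit pricing pays when it beats the expected payoff at the myopic maximum x_m:
U_\ell(e_\ell) > u_L(x_m,\mathrm{OUT}) - e_m\,\bigl[u_L(x_m,\mathrm{OUT}) - u_L(x_m,\mathrm{IN})\bigr]

% Solving for the entry rate at x_m needed to induce limit pricing:
e_m > \frac{u_L(x_m,\mathrm{OUT}) - U_\ell(e_\ell)}{u_L(x_m,\mathrm{OUT}) - u_L(x_m,\mathrm{IN})}
```

Since $U_\ell(e_\ell)$ rises as $e_\ell$ falls, a lower entry rate at the limit pricing level lowers the right-hand side, so a smaller entry rate at the myopic maximum is enough to make limit pricing worthwhile.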

The simulations actually converge on a dynamic version of the partial pooling equilibrium. MLs always choose . MHs cycle between choosing and choosing . These cycles are driven by the entry rate on . When all MHs play , the entry rate on falls, inducing play of by MHs. The entry on then rises in response, causing MHs to revert to . The cumulative (historical) distribution of MHs’ and Es’ choices converges to the predicted mixed strategy frequencies.

Convergence is quite slow, taking approximately plays of the game in our simulations. This is due to the lengthy time needed for MHs to begin playing rather than or ; there being virtually no choice of by MHs in the first plays of the game.

For sensitivity analysis, we ran simulations which did not use fitted initial beliefs, simulations in which the weights on past experience rose exponentially over time, simulations in which players made errors in maximisation, simulations in which the number of players was changed to match the number in the experiments, and simulations in which players alternated between roles (as in the experiments). None of these alternatives, either alone or in combination with each other, affected the primary qualitative features of the simulations.

⁷ Variations are driven by (random) early differences in play between simulations in MLs’ play of and and Es’ frequency of IN on and .


.

Neutral terms were used throughout, with Ms called A players (A and A types) and Es called B players. Ms first chose a ‘number’ between and which was sent (by computer) to the E they were paired with. Es responded with X (IN) or Y (OUT).

Before each play of the game the computer randomly determined M’s type.⁸ Following each play of the game subjects learned the outcome of their own choice and the type of M player with whom they were paired. In addition, the lower left hand portion of each subject’s screen displayed the results of all pairings (Ms’ choices, Es’ responses, and Ms’ types). Subject identification numbers were always suppressed.

Each experimental session employed between and subjects. Roles were switched after every plays of the game, with M (E) players becoming E (M) players. Within each plays of the game each M player was paired with a different E player. With one exception (see below) experimental sessions had plays of the game with the number of plays announced in advance.

Subjects were recruited through announcements in undergraduate classes and posters placed throughout the University of Pittsburgh and Carnegie Mellon University. The posters resulted in recruiting a broad cross-section of undergraduate and graduate students from both campuses. Each experimental treatment employed two inexperienced subject sessions. Experienced subjects were recruited back for sessions with exactly the same parameter values, with this fact publicly announced at the start of the session. Earnings averaged $. for inexperienced subject sessions which lasted a little under hours.

Recall that our simulation model makes sharply contrasting predictions when all Es and no Es recognise the existence of MHs’ dominated strategies. Earlier experiments (Cooper et al. a), using the italicised payoffs on Table a, demonstrated that even with fairly large negative payoffs, initial entry rates on and are significantly greater than zero. This was true even though MHs never used these strategies; players who themselves never played the dominated strategies still failed to anticipate that others would not use them. To accentuate this effect, the payoffs employed in Table a (in boldface type) for MHs’ choice of and were deliberately set to positive values only slightly below the payoffs at . Not only does this modification make it relatively difficult for subjects to recognise the dominated strategies, it also makes it less likely that subjects will anticipate that others will recognise these dominated strategies. We refer to this new payoff table as the 0% anticipation treatment. Although this change does not ensure π = 0, we are confident that it induces a marked decrease in π.

In games designed to induce π = 1, MHs were publicly prohibited from choosing or .⁹ This prohibition was not a binding constraint on MHs’ play,

⁸ A block random design was used so that with an even number of Ms half were MLs and half were MHs in each play of the game, with individual player types determined randomly. With an uneven number of Ms the computer randomly determined the type of the odd numbered M in each play of the game.

⁹ The payoffs of MHs for choosing or were replaced with the words ‘No Choice’ and the following phrase was added to the instructions: ‘In other words, both A players can choose any number between and , but only A players can choose numbers or .’


since even in the 0% anticipation treatment MHs never chose or . Rather, the prohibition served to provide public notice that a choice of or could not come from an MH type. This treatment is referred to as the 100% anticipation treatment.

.

Fig. reports results for games with inexperienced subjects for the 0% and 100% anticipation treatments. Results are reported for all plays of the game so that subjects have played the role of both M and E six times each. We refer to each set of plays of the game as an experimental cycle. The histograms show Ms’ choices, with entry rates shown just below each output level. Data for the two inexperienced subject sessions under each treatment are pooled. Outcomes across replications were quite similar.¹⁰

The data support the predictions of the augmented adaptive learning model. First, in each cycle of play limit pricing was significantly more frequent in the % anticipation treatment than in the % anticipation treatment, with the difference in frequencies rising over time: the difference between the two treatments in frequency of limit pricing (choice of – by MLs) is % in cycle , % in cycle , and % in cycle . Second, the limit pricing output levels differed substantially between the two treatments with attracting the most limit pricing in the % anticipation treatment (% averaged over all cycles) and attracting the lion’s share in the % anticipation treatment (%). Replicating the results of Cooper et al. (a) the dynamic pattern of play is consistent with the model: in both cases play starts off with Ms generally choosing their respective myopic maxima and much of the early action involved efforts by MHs to mimic MLs’ initial choice of .

Fig. reports data for experienced subjects from the two treatments. In both cases play starts out close to where it left off for inexperienced subjects. Contrasting patterns of play between the treatments regarding the speed with which limit pricing develops and the output level used in limit pricing continue to appear in the data. In the % anticipation treatment, entry on compared to was high enough in both cycles and that limit pricing at clearly paid. Further, MLs made few choices of or and these choices were met with the same or higher entry rates than choice of . As such clearly provided the higher payoff. In the % anticipation treatment there were some choices of by both M types in cycle , but close to perfect separating in cycles and .11 Es’ choices clearly supported the Riley equilibrium, making play of optimal for MLs in all cycles.12

10 Data for each experimental session are reported separately in our working paper, Cooper et al. (b).

11 We did not invite back subject from the earlier sessions: in response to a post session questionnaire this subject indicated that a choice of or very likely represented an MH type.

12 Z statistics comparing the frequency of limit pricing and the frequency was chosen as the limit output level by experimental cycle were statistically significant between treatments at the % level or better in all cycles.
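The text does not say which Z statistic was computed. As a minimal sketch, assuming a pooled two-proportion z test and purely hypothetical counts (none of the numbers below come from the experiments), a between-treatment comparison of limit pricing frequencies within one cycle could be set up like this:

```python
import math


def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z statistic (an assumed test, not confirmed by the source)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pool the two samples to estimate the common proportion under the null.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    return (p_a - p_b) / std_err


# Hypothetical counts: limit pricing choices out of all ML choices in one cycle.
z = two_proportion_z(successes_a=30, n_a=50, successes_b=15, n_b=50)
```

A positive value indicates that the first treatment has the higher frequency; swapping the two samples only flips the sign.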


Fig. . Inexperienced subject play under the % and % anticipation treatments. Each cycle consists of consecutive plays of the game. Percentage of ML and MH choices taken separately sum to ± within each cycle. Entry rates conditional on Ms’ choices are shown below these choices. +, MH; 8, ML. [Histogram panels: 0% Anticipation treatment and 100% Anticipation treatment; vertical axis: % (0 to 100); horizontal axis: Ms’ choice, with Entry (%) beneath, for Cycle 1, Cycle 2 and Cycle 3.]

Fig. . Experienced subject play under the % and % anticipation treatments in experiment . +, MH; 8, ML. [Histogram panels: 0% Anticipation treatment and 100% Anticipation treatment; vertical axis: % (0 to 100); horizontal axis: Ms’ choice, with Entry (%) beneath, for Cycle 1, Cycle 2 and Cycle 3.]

III.A. Discussion of Results from Experiment

Experiment highlights the role of Es’ ability to anticipate MHs’ elimination of dominated strategies in determining equilibrium play. There is nothing in the standard game theoretic approach which explains the differences between the % and % recognition treatments. In terms of single round elimination of dominated strategies, the only important feature of either the % or % anticipation treatments is that MHs’ strategies and are dominated. Yet, the data clearly indicate that differences in payoffs for MHs’ dominated strategies do influence play, suggesting that cognitive and perceptual factors that standard economic theory ignores play an important role in determining whether or not Es anticipate that dominated strategies will not be used. This, in turn, has a critical effect on the speed with which limit pricing emerges and the equilibrium to which play converges.

The ‘cognitive’ hypothesis is not the only possible reason that differences might emerge between the treatments. One alternative hypothesis is that the ‘no choice’ option in the % recognition treatment eliminates the possibility of MH ‘trembles’, and that this change in error rates, not some cognitive effect, produces the differences between treatments. However, no such change in error rates exists; even in the % anticipation treatment, MHs never play their dominated strategies. Although Es may anticipate a change in the error rate, this would be consistent with a ‘cognitive’ hypothesis. Another alternative hypothesis is that the ‘no choice’ option makes play of a focal point; while this argument cannot be rejected, it only serves to reinforce our point that play is driven by cognitive elements beyond standard game theory.

The driving force behind the differential predictions of our augmented adaptive learning model for the % and % anticipation treatments is the differing ability of Es to anticipate MHs’ elimination of dominated strategies. What limited data we have for choices of and in the % anticipation treatment supports this assumption of our simulation model: for inexperienced subjects the entry rate on and was % in the % anticipation treatment versus % in the % anticipation treatment; for experienced subjects the entry rates were % in the % recognition treatment versus % in the % anticipation treatment.13 Further, in Cooper et al. (a) we report a treatment using the italicised payoffs on Table for choice of or by an MH. This treatment falls somewhere between the % and % anticipation treatments; choice of or is possible for an MH, but yields a negative payoff. Consistent with our augmented adaptive learning model, the entry rate for experienced players on and was %, between the percentages for the % and % anticipation treatments. Limit pricing emerged more rapidly than in the % anticipation treatment but not as quickly as in the % anticipation treatment.14

13 Whether the entry on and in the % recognition treatment results from ‘trembles’, experimentation, or rivalry cannot be determined. The key point is that these factors should be constant across treatments so that differential entry rates between treatments should reflect differences in the ability to recognise dominated strategies.

14 In this treatment MHs essentially never chose and as well ( out of MH choices). Explicit comparisons between all three treatments are provided in our working paper (Cooper et al. b).

The augmented adaptive learning model does not posit that any players will actually use the dominated strategies, only that some Es will fail to anticipate that other players will not use these strategies. The data are consistent with the model. Even Es who entered on and in the % anticipation treatment never selected these strategies themselves as MHs. Whether this resulted from the fact that (most) subjects (i) never explicitly recognised that and were dominated strategies in their role as MHs, but acted ‘as if’ they did due to the low payoffs from these strategies, or (ii) truly recognised that and were dominated strategies for MHs, but did not believe other players would do so, remains an open question at this point.

One serious discrepancy exists between the augmented adaptive learning model’s predictions and the experimental data. In the simulations, the proportion of limit pricing is identical in early play for π = and π = . The rates at which and are used for limit pricing are also identical initially. Differences between ML strategies in π = and π = simulations do not appear until Ms have an opportunity to learn about Es’ differing entry rates on and . In contrast, the experimental data show more rapid limit pricing in the % anticipation treatment than in the % anticipation treatment, with more frequent use of (see Table ). While the sample size is too small for these differences to be statistically significant, they are still disturbing. It is possible that random variability is the cause of these differences; individual effects can cause wide variance between experiments which are identical ex ante. However, a more plausible explanation is that we have incorrectly estimated initial beliefs.

Table . Low Cost Monopolists’ Frequency of Choosing , , or in Early Plays of the Game (raw data). Columns: plays of game; % recognition; % recognition. Rows: simulation results*; experimental results. * simulation runs in each case.

In particular, we have ignored any potential impact of the different treatments on Ms’ initial beliefs. In the simulations we endowed some proportion π of Es with the ability to anticipate that MHs would never use the dominated strategies and . We hypothesised that the differing treatments induce differing levels of π, and thus differing initial entry rates on and . In the same spirit, it seems likely that our treatments would also affect Ms’ initial beliefs. Just as some Es anticipate that the dominated strategies will not be used, some proportion π₂ of Ms should anticipate that at least some Es will never enter on and due to their anticipation that only MLs will use these strategies. Just as π is hypothesised to be greater in the % anticipation treatment, π₂ should also be larger. Not only is it easier for Ms to recognise the dominated strategies, but Ms should also anticipate that a greater proportion of Es will recognise the dominated strategies and thus never enter on and . Given this, at least some Ms should initially place less weight on entry following and in the % anticipation treatment than in the % anticipation treatment. For early rounds this would sharply increase the expected return to these strategies, increasing both the overall probability of limit pricing by MLs and the likelihood that MLs would choose over . The data in the % recognition treatment suggest just such expanded deductive reasoning as was favoured over in the first several plays of the game.15 Experiment was designed to test for expanded deductive reasoning of this sort, as well as to provide a more definitive violation of single round elimination of dominated strategies.

.

IV.A. Hypotheses and Procedures

In experiment payoffs and procedures were exactly the same as in experiment with the exception of Ms’ payoffs for choices and (see Table ). In both treatments MHs have the same positive payoffs for choosing as in the % anticipation treatment, but are publicly prohibited from choosing (as in the % anticipation treatment). In treatment MLs’ payoffs remain unchanged from experiment . In treatment MLs’ payoffs for were increased to make a more attractive alternative. These changes in payoffs do not affect equilibrium outcomes in the refinements approach; even the weakest refinement, single round elimination of dominated strategies, picks the Riley outcome in both treatments.

Table . Ms’ Payoffs for Choice of and in Experiment *. Columns, for each of A (High cost) and A (Low cost): your choice; X (IN); Y (OUT). Rows: No choice; Treatment. * Payoffs for choices – the same as Table a.

Simulations were run under three different specifications regarding the extent to which Es and MLs recognised MHs’ dominated strategies: (S) All Es anticipate that , as a dominated strategy, is never played by MHs. As such, no Es enter on (π = for ). Es continue to enter on , failing to anticipate that MHs will never use this strategy either (π = for ). Ms’ initial beliefs are unaffected (π₂ = ). This is a direct extension of experiment simulations to experiment . (S) As in , π = for , and π = for . Some MLs (%) recognise that is dominated and anticipate that this will induce substantially lower entry on . We model this in an extreme manner, with these MLs putting zero weight on entry following (π₂ = ± on ). (S) All Es anticipate that only MLs use , and all Ms anticipate that Es will never enter on . This differs from (S) only in setting π₂ = on . The results of these simulations are summarised in Table .

15 Other forms of expanded (but still limited) deductive reasoning could be playing a role in addition to iterated elimination of dominated strategies as there is some evidence that initial entry rates were lower following strategies – than following strategies –.

Table . Augmented Adaptive Learning Model: contrasting predictions in experiment as the proportion of MLs who recognise that is dominated varies*. Columns, for each of π₂ = , π₂ = ± and π₂ = : partial pooling equilibrium (%); dominated separating equilibrium (%). Rows: by treatment.

* π₂ = proportion of MLs putting zero weight on entry following . π = for ; π = for where π = proportion of Es who recognise MHs’ dominated strategies and that these strategies will never be chosen.

† There are some plays of in early rounds, but choice of dies out over time.

Fig. . Inexperienced subject play under treatments and in experiment . +, MH; 8, ML. [Histogram panels: Treatment 1 and Treatment 2; vertical axis: % (0 to 100); horizontal axis: Ms’ choice, with Entry (%) beneath, for Cycle 1, Cycle 2 and Cycle 3.]


Under treatment , play in S simulations typically converges to the partial pooling equilibrium as in the % anticipation treatment. More striking is the fact that without any Ms anticipating no entry on , we never observe a single play of in the simulations.16 In contrast, if the limited deductive reasoning accorded Es extends to Ms, as in the S and S simulations, play of emerges in a significant percentage of the simulations. Thus, in the simulations employing treatment payoffs, (a) any significant play of is indicative of the ability of MLs to recognise that is dominated for MHs, and to understand the implications of this fact for Es’ behaviour, and (b) the partial pooling equilibrium still has considerable drawing power.

It is difficult to get MLs to play in treatment because the payoffs at are too low relative to .17 In contrast, with the higher payoffs for in treatment , even the S simulations converge to the dominated separating equilibrium % of the time. Further, a dramatic impact is created by allowing even a small percentage of MLs to anticipate a lack of entry on ; % of the S simulations converge to the dominated separating equilibrium.

IV.B. Results for Experiment

Fig. shows inexperienced subject play under treatment (top panel) and (bottom panel). Under treatment play does not look very different from the % anticipation treatment with the exception of the relatively high frequency with which MLs chose in cycles and . In contrast, treatment does not look much different from the % anticipation treatment except that play of has been displaced by . Note, in both treatments play of is very limited, never accounting for more than or ML choices in any given cycle.

Fig. shows experienced subject play under the two treatments.18 In treatment (top panel) MLs had clearly begun to distinguish themselves by cycle with choices of and accounting almost evenly for MLs’ limit pricing (± and ±% of total choices were at and , respectively). Entry rates in cycle supported limit pricing at both and , but not at (there was % entry on ). Given the even split of choices between and , we allowed play to continue for one more cycle to see if it would converge to one of these two choices. Limit pricing continued to account for ±% of ML choices, but now slightly favoured over , with choice of completely eliminated.

Under treatment (bottom panel) play started where it left off in cycle of inexperienced subject play. Although some MLs limit priced at in cycles and , the relatively high entry rates on versus made it far more profitable to choose throughout. By cycle , % of MLs’ choices were at and % of MHs’ choices were at , with essentially % entry on all choices other than ; play had essentially converged to the dominated separating equilibrium.

The results of experiment are remarkably consistent with the augmented adaptive learning model in which we assume that at least some MLs are able to recognise obvious dominated strategies as easily as Es do and anticipate that these dominated strategies will yield a lower entry rate. Under treatment , without this expanded deductive reasoning the simulations yield essentially no choice of . However, with expanded deductive reasoning, in individual simulations is chosen relatively often for many rounds, even if the simulation eventually settles down to the partial pooling equilibrium. This is consistent with the pattern of play observed in the data. Further, the data from treatment suggest that far less than % of MLs anticipate that there will be little or no entry on , since the simulations imply that if this were the case MLs would begin to play much more rapidly than was observed. It is impossible to determine whether MLs are slow to play because they fail to recognise it as a dominated strategy or because they anticipate some Es will not anticipate the elimination of by MHs, and thus enter.

16 Sensitivity analysis with a variety of random trembles indicates that these results are robust.

17 Under treatment , play of must be % more likely than before it is profitable for MLs to prefer . In treatment , this threshold has been reduced to %.

18 For treatment we did not invite back one subject from the earlier sessions since in the post session questionnaire this subject noted that a choice of very likely represented an MH type.

Fig. . Experienced subject play under treatments and in experiment . +, MH; 8, ML. [Histogram panels: Treatment 1 and Treatment 2; vertical axis: % (0 to 100); horizontal axis: Ms’ choice, with Entry (%) beneath, for Cycle 1 to Cycle 4 in one panel and Cycle 1 to Cycle 3 in the other.]

The failure of the Riley separating equilibrium as an attractor in experiment is far more dramatic than in the % recognition treatment of experiment . In treatment a majority (±%) of experienced ML choices were at and in the last cycle of play, with no choices of . In treatment , % of experienced ML choices were at in cycle , and the Riley outcome, , had essentially no drawing power for either experienced or inexperienced players.

.

Equilibrium refinement models, pure (Bayesian) adaptive learning models, and an augmented adaptive learning model that endows agents with limited deductive reasoning make contrasting predictions in Milgrom and Roberts’ () entry limit pricing game. The augmented adaptive learning model best organises the experimental data as it reliably predicts which equilibrium play converges to and the speed with which limit pricing develops. Clear violations of one of the simplest equilibrium refinements, single round elimination of dominated strategies, are reported.

One potential objection to the failure of single round elimination of dominated strategies is that the resulting inefficiencies are minimal; in the % anticipation treatment of experiment and in both treatments of experiment , MLs’ choices of and are adjacent to the undominated separating output level, . If the role of economic theory is viewed as providing qualitatively correct predictions, rather than precise point predictions, it is tempting to dismiss the inefficiencies reported as slight and of little importance. Fortunately, we need not conduct additional experiments to show that this line of defence does not save the refinements approach.

Miller and Plott ( ; MP) ran a continuous double auction experiment in which each ‘seller’ was endowed with units of two basic quality types – supers and regulars. The marginal cost of adding quality (the signalling instrument) to supers was substantially cheaper than for regulars. Quality could be added in integer values between and , with quality levels of for supers in the Riley separating equilibrium versus or for regulars.19 In sessions with low signalling costs for supers, average quality of supers was ± vs. ± for regulars (averaged over the last auction periods in each session). That is, on average ± units of excess quality were added to supers, over % more quality than at the efficient separating equilibrium. This excess quality reduced producer profit by ± cents per unit, ±% of total profits at the competitive equilibrium. Since MP was published before the explosion in the equilibrium refinements literature, they do not discuss their results in terms of this literature. Our analysis is, we believe, the first to point out the striking violations of single round elimination of dominated strategies inherent in these results.20

MP largely eliminated the excess quality production by adding an additional quality signal which, from a game theoretic point of view, is completely redundant. Midway through the set of experiments they began to circle super contracts with red chalk and regular contracts with green chalk. In sessions with this additional quality signal, the average quality of supers was reduced to ± units, resulting in excess production costs of ± cents for each unit sold.21

19 The use of a continuous double auction, in conjunction with limited information about costs and valuations, makes for a substantially more complicated information structure than in our design. Nevertheless, buyers had sufficient information to determine that the maximum profitable quality enhancement for regulars was . This calculation was far from trivial, but a far simpler calculation put the maximum at .

20 In the MP sessions with high signalling costs for supers, play converged to a pooling equilibrium (in the absence of the additional coloured chalk signal discussed below). Single round elimination of dominated strategies, in conjunction with the intuitive criterion, eliminates pooling equilibria for games of this sort (Milgrom and Roberts, ; Cho and Kreps, ).

21 In the one session where the coloured chalk was introduced mid-way through the session, there was no reduction in the quality enhancements for supers. This suggests that once the OEB supporting a dominated separating equilibrium are established, they will be difficult to eliminate. In computing average quality enhancements with the redundant signal we have eliminated this session.


Both our results and MP’s results indicate that cognitive elements, which are not captured in most economic models, can affect equilibrium outcomes.22 Standard game theory has no room for such cognitive features of games, restricting its attention solely to payoffs. In signalling games it is generally assumed that players receiving messages are able to detect accurately the signal inherent in the message. However, as shown, failure to decode these messages accurately can have radical effects on the equilibrium outcome achieved and the speed with which equilibrium is achieved.

Two alternative explanations are available for these ‘cognitive effects’. One is that subjects are boundedly rational. Unlike game theorists, most people are not trained to search for and identify dominated strategies. Without obvious clues, subjects may plausibly fail to recognise the existence of dominated strategies, even though they themselves do not choose these strategies. An alternative explanation is that players face strategic uncertainty. Even though subjects may recognise that certain strategies are dominated, they might still anticipate that others will fail to recognise the dominated strategies and play them. Rather than making it easier to recognise the dominated strategies, obvious clues simply allay subjects’ fears that others will miss the dominated strategies. Either explanation fits the observed data, and we have no way with the present data to distinguish whether one or the other (or maybe both) is correct.

Either explanation is ‘bad news’ for game theory; to the extent that game theory and the refinements literature ignore these behavioural elements, they will make incorrect predictions. There is, however, ‘good news’ for game theory as well. It seems clear that for many subjects once the dominated strategies are made obvious enough they are able to draw the logical implications of their existence. On a broad level, this suggests that the strategic foresight which lies at the heart of game theory has some empirical validity. Additionally, game theory is not devoid of tools for dealing with the presence of bounded rationality and strategic uncertainty. We speculate that approaches which are better suited to capturing these issues, such as ε-equilibrium, quantal response equilibrium (McKelvey and Palfrey, ), and risk dominance (Harsanyi and Selten, ), may do a better job of characterising long-run outcomes than more standard approaches.

We do not suggest that we have developed the canonical learning model for signalling games. Our approach strongly limits players’ reasoning abilities; we do not consider the possibility that they account for the maximising behaviour of others or anticipate this dynamic, an element of play that is likely to be at work to some extent. On the other hand, some might argue that we do not limit players’ rationality sufficiently since we allow them to maximise and, some of them at least, to recognise the existence of dominated strategies when these strategies are relatively transparent. Adaptive learning models with even fewer cognitive demands on players include evolutionary models (for example, Crawford () and Friedman ()) and reinforcement-based models (Roth and Erev, ). However, extensions of the Roth and Erev model to our design indicate that it is unable to predict the differences in equilibrium outcomes accurately between our % and % anticipation treatments (Cooper and Feltovich, ). The one evolutionary/learning model explicitly developed for signalling games of this sort, Nöldeke and Samuelson (), predicts that play will converge to the Riley separating equilibrium under all treatments in both experiments (treating whatever experimentation subjects do as mutation within their model).23 The data are clearly at odds with this prediction. Finally, our adaptive learning model, and closely related models, do a good job of organising play from other signalling games as well (see Cooper et al. a; Brandts and Holt, ).

22 Schotter et al. () report similar results in two-person, two-move games which they label as ‘presentation effects’ (see Schotter et al., experiment ).

Our experimental results have implications beyond the laboratory. Equilibrium selection via adaptive learning implies that there exist cases where the equilibrium established under a given market structure will be history dependent. For example, Cooper et al. (a) show that in an entry limit pricing game where pure strategy pooling equilibria exist, the emergence of a pooling equilibrium as opposed to a separating equilibrium depends critically on past history of play (see Berkowitz and Cooper, , for another example, this in the context of transitional economies). Further, the cognitive effects identified here suggest that there may be quite a bit of room for redundant signals to help clarify messages in signalling games, a notion that is routinely dismissed in most economic models (see Bagwell and Ramey, , for example).

23 Nöldeke and Samuelson essentially assume that there will be a steady stream of ‘mutant’ MLs entering the population who play the Riley outcome. However, the nearly complete failure of inexperienced MLs to play in experiment suggests that new MLs would rarely choose as well.

University of Pittsburgh

Date of receipt of final typescript: July

References

Aumann, R. and Brandenburger, A. (). ‘Epistemic conditions for Nash equilibrium.’ Econometrica, vol. , pp. –.

Bagwell, Kyle and Ramey, G. (). ‘Advertising and limit pricing.’ RAND Journal of Economics, vol. , (Spring), –.

Banks, Jeffrey S. and Sobel, Joel (). ‘Equilibrium selection in signalling games.’ Econometrica, vol. , pp. –.

Berkowitz, D. M. and Cooper, D. J. (). ‘Equilibrium selection in a differentiated duopoly.’ Mimeo, University of Pittsburgh.

Brandts, J. and Holt, C. A. (). ‘An experimental test of equilibrium dominance in signaling games.’ American Economic Review, vol. , pp. –.

Brandts, J. and Holt, C. A. (). ‘Adjustment patterns and equilibrium selection in experimental signalling games.’ International Journal of Game Theory, vol. , pp. –.

Brandts, Jordi and Holt, C. A. (). ‘Naive Bayesian learning and adjustment to equilibrium in signalling games.’ Mimeo.

Cho, I. and Kreps, D. (). ‘Signaling games and stable equilibria.’ Quarterly Journal of Economics, vol. , pp. –.

Cooper, David J. and Feltovich, Nikolas (). ‘Reinforcement based learning vs. Bayesian learning: a comparison.’ Mimeo, University of Pittsburgh.

Cooper, David J., Garvin, Susan and Kagel, John H. (a). ‘Signalling and adaptive learning in an entry limit pricing game.’ Mimeo, University of Pittsburgh.

Cooper, David J., Garvin, Susan and Kagel, John H. (b). ‘Adaptive learning vs. equilibrium refinements in an entry limit pricing game.’ Mimeo, University of Pittsburgh.

Crawford, Vincent P. (). ‘An evolutionary interpretation of Van Huyck, Battalio, and Beil’s experimental results on coordination.’ Games and Economic Behavior, vol. , pp. –.

Friedman, Daniel (). ‘Evolutionary games in economics.’ Econometrica, vol. , pp. –.

Fudenberg, Drew and Kreps, David (). ‘Learning mixed equilibria.’ Games and Economic Behavior, vol. , pp. –.

Fudenberg, Drew and Kreps, David (). ‘Learning in extensive form games. I. Self-confirming equilibria.’ Games and Economic Behavior, vol. , pp. –.

Fudenberg, Drew and Levine, David K. (). ‘Self-confirming equilibria.’ Econometrica, vol. , pp. –.

Garvin, S. (). ‘Experiments in entry deterrence.’ PhD Dissertation, University of Pittsburgh.

Grossman, Sanford and Perry, Motty (). ‘Sequential bargaining under asymmetric information.’ Journal of Economic Theory, vol. , pp. –.

Harsanyi, J. and Selten, R. (). A General Theory of Equilibrium Selection in Games. Cambridge, MA: MIT Press.

Kreps, David W. and Wilson, Robert (). ‘Sequential equilibria.’ Econometrica, vol. , pp. –.

Mailath, George, Okuno-Fujiwara, Masahiro and Postlewaite, Andrew (). ‘Belief based refinements in signalling games.’ Journal of Economic Theory, vol. , pp. –.

McKelvey, R. and Palfrey, T. (). ‘Quantal response equilibria for normal form games.’ Games and Economic Behavior, vol. , pp. –.

Milgrom, Paul and Roberts, John (). ‘Limit pricing and entry under incomplete information: an equilibrium analysis.’ Econometrica, vol. , pp. –.

Milgrom, Paul and Roberts, John (). ‘Price and advertising signals of product quality.’ Journal of Political Economy, vol. , pp. –.

Milgrom, Paul and Roberts, John (). ‘Adaptive and sophisticated learning in normal form games.’ Games and Economic Behavior, vol. , pp. –.

Miller, Ross M. and Plott, Charles R. (). ‘Product quality signalling in experimental markets.’ Econometrica, vol. , pp. –.

Nöldeke, G. and Samuelson, L. (). ‘Learning to signal in markets.’ SSRI paper no. , Madison, WC.

Partow, Z. and Schotter, A. (). ‘Does game theory predict well for the wrong reasons: an experimental investigation.’ RR no. -, C.V. Starr Center for Applied Economics, New York University.

Riley, John (). ‘Informational equilibrium.’ Econometrica, vol. , pp. –.

Roth, Alvin E. and Erev, Ido (). ‘Learning in extensive-form games: experimental data and simple dynamic models in the intermediate term.’ Games and Economic Behavior, vol. , pp. –.

Schotter, A., Weigelt, K. and Wilson, C. (). ‘A laboratory investigation of multi-person rationality and presentation effects.’ Games and Economic Behavior, vol. , pp. –.

Appendix

Detailed Specification of the Adaptive Learning Model

There are Ms and Es in the population. In each round Ms have a – chance of being an MH or ML. Each M calculates the expected payoff from each of the seven strategies conditional on his type, and chooses the strategy which maximises expected payoff. Es are randomly matched with Ms. Each E observes the choice of the M he is paired with, calculates his expected payoff from IN or OUT, and takes the action which maximises his expected payoff. Each simulation is run for a minimum of rounds.
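The two decision rules in a round can be sketched as follows. This is only an illustration under stated assumptions: the function names, the three-choice payoff lists and all numbers are hypothetical (the game itself has seven choices and the payoff tables are not reproduced here), E's payoff from OUT is assumed not to depend on M's type, and ties are broken in favour of the lowest-numbered choice and of OUT.

```python
def monopolist_choice(payoff_if_in, payoff_if_out, prob_in):
    """Index of the choice with the highest expected payoff for one M.

    All three lists are indexed by choice; ties go to the lowest index
    (an assumed tie-breaking rule).
    """
    expected = [
        p * pay_in + (1.0 - p) * pay_out
        for pay_in, pay_out, p in zip(payoff_if_in, payoff_if_out, prob_in)
    ]
    return max(range(len(expected)), key=lambda j: expected[j])


def entrant_action(prob_high, in_vs_high, in_vs_low, out_payoff):
    """E enters only if IN has the strictly higher expected payoff."""
    expected_in = prob_high * in_vs_high + (1.0 - prob_high) * in_vs_low
    return "IN" if expected_in > out_payoff else "OUT"


# Hypothetical payoffs and beliefs, for illustration only.
choice = monopolist_choice([10, 20, 30], [50, 60, 40], [0.9, 0.5, 0.1])
action = entrant_action(prob_high=0.8, in_vs_high=100, in_vs_low=-50, out_payoff=30)
```

In this sketch an M who expects almost certain entry on a choice evaluates it at close to its IN payoff, so lowering the expected entry rate on a choice is what can make that choice attractive.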

Each M has an initial set of expectations about Es’ play conditional on their choice. The strength of each M’s initial beliefs, $W^M_i()$, is represented by an integer randomly drawn from a uniform distribution on the integers [ , ] (a different integer for each i). The larger the integer $W^M_i()$, the stronger i’s initial beliefs are and the smaller the response to observed outcomes (i.e. the slower the rate of learning). Each M’s initial beliefs about the probability of IN conditional on choice j, $p^M_{ij}()$, are constructed as follows: for each choice j, random numbers are drawn from a uniform distribution on the real numbers $[ , W^M_i()]$. The average of these numbers, $\omega^M_{ij1}()$, is the initial weight i places on IN following choice j; with the weight i puts on E playing OUT being $\omega^M_{ij2}() = W^M_i() - \omega^M_{ij1}()$. These weights are used to calculate the probability of IN given i’s choice of j in period , $p^M_{ij}() = \omega^M_{ij1}() / [\omega^M_{ij1}() + \omega^M_{ij2}()]$; with $1 - p^M_{ij}()$ being i’s expectation of OUT for choice j. The number of random draws used to calculate $\omega^M_{ij1}()$ is based on maximum likelihood estimates using first-period data from inexperienced subjects. The more random draws used, the closer Ms’ first-period choices come to the myopic maximum.
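The construction of an M's initial beliefs can be sketched as follows. This is only an illustration under stated assumptions: the integer range for the belief strength, the number of draws and the lower bound of 0 on the real interval are hypothetical parameters, since their values are not given in the text above, and the function name is invented here.

```python
import random


def initial_m_beliefs(n_choices, w_min, w_max, n_draws, rng):
    """Draw one M's initial belief strength and weights on IN / OUT for each choice.

    w_min, w_max and n_draws are hypothetical parameters; w_min must be at least 1
    so that the weights on IN and OUT sum to a positive number.
    """
    strength = rng.randint(w_min, w_max)          # W_i^M, one integer per M
    weight_in, weight_out, p_in = [], [], []
    for _ in range(n_choices):
        # Assumption: the draws are uniform on [0, W_i^M].
        draws = [rng.uniform(0.0, strength) for _ in range(n_draws)]
        w_in = sum(draws) / n_draws               # average draw = weight on IN
        w_out = strength - w_in                   # remaining weight goes to OUT
        weight_in.append(w_in)
        weight_out.append(w_out)
        p_in.append(w_in / (w_in + w_out))        # believed probability of IN
    return strength, weight_in, weight_out, p_in


rng = random.Random(0)                            # fixed seed for reproducibility
strength, weight_in, weight_out, p_in = initial_m_beliefs(7, 1, 10, 5, rng)
```

Averaging more draws pulls each weight on IN towards half of the belief strength, so initial beliefs become more alike across choices and across Ms. That is one way to read the remark that more draws bring first-period choices closer to the myopic maximum.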

Each E has an initial set of expectations about M’s type conditional on M’s choice. These expectations are constructed in the same way as M’s: first, for each E an integer is randomly selected from a uniform distribution on the integers [ , ]. This variable, $W^E_i()$, determines the strength of i’s initial beliefs. The distribution of initial weights i places on an $M_H$ conditional on choice j, $\omega^E_{ij1}()$, and the corresponding weight attached to an $M_L$, $W^E_i() - \omega^E_{ij1}()$, is determined from the distribution of initial period choices for inexperienced Es. These are characterised using a beta distribution. In the simulations with pure adaptive learning, each $\omega^E_{ij1}()$ is determined by a random draw from the estimated beta distribution multiplied by $W^E_i()$. These weights are then used to calculate the probability each E places on the $M_H$ following choice j, in period , $p^E_{ij}() = \omega^E_{ij1}() / [\omega^E_{ij1}() + \omega^E_{ij2}()]$; with $1 - p^E_{ij}()$ being i’s expectation of an $M_L$ following choice j.$^{24}$ For those Es who recognise that and are dominated strategies, $p^E_{ij}() =$ for $i =$ and .

Following each round of play, Ms’ expectations are updated by observing the strategy used and the response for all pairings and adding one to the appropriate weights $\omega^M_{ij1}()$ or $\omega^M_{ij2}()$ for each choice observed. Probabilities are updated accordingly, and expected values are calculated for the next round of play. Es’ expectations are updated in a similar manner.
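The updating step for Ms described above amounts to counting observed responses on top of the initial weights. A minimal sketch follows, assuming observations are stored as (choice index, response) pairs; the function name and the data layout are hypothetical.

```python
def update_m_beliefs(weight_in, weight_out, observations):
    """Add one to the IN or OUT weight of each observed choice, then recompute P(IN | j).

    observations: iterable of (choice_index, response) pairs from all pairings in a
    round, with response equal to "IN" or "OUT" (a hypothetical data layout).
    The weight lists are modified in place.
    """
    for choice, response in observations:
        if response == "IN":
            weight_in[choice] += 1.0
        else:
            weight_out[choice] += 1.0
    return [w_in / (w_in + w_out) for w_in, w_out in zip(weight_in, weight_out)]


# Hypothetical example: two choices, three observed pairings in one round.
weight_in = [2.0, 1.0]
weight_out = [2.0, 3.0]
p_in = update_m_beliefs(weight_in, weight_out, [(0, "IN"), (0, "IN"), (1, "OUT")])
```

The sketch also shows why stronger initial beliefs slow learning. Starting from a belief of 0.5, a single observed IN moves the probability to 2/3 when the initial weights sum to 2, but only to 6/11 when they sum to 10.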

Maximum likelihood estimates of initial period beliefs are explained in detail in Cooper et al. (a).
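The construction of Es' initial beliefs can be sketched in the same spirit. The beta parameters below are placeholders rather than the estimated values, the integer range for the belief strength is hypothetical, and setting the probability of a high-cost M to zero after a choice recognised as dominated is an assumption about how the augmented model treats those choices.

```python
import random


def initial_e_beliefs(beta_params, w_min, w_max, rng, dominated=()):
    """Draw one E's initial belief strength and P(high-cost M | choice j).

    beta_params: one (alpha, beta) pair per choice (placeholder values, not the
    estimates from the paper). dominated: indices of choices this E recognises as
    dominated; the belief after them is set to 0 (an assumption).
    """
    strength = rng.randint(w_min, w_max)               # W_i^E, one integer per E
    p_high = []
    for j, (alpha, beta) in enumerate(beta_params):
        w_high = rng.betavariate(alpha, beta) * strength   # weight on a high-cost M
        w_low = strength - w_high                          # weight on a low-cost M
        p = w_high / (w_high + w_low)
        p_high.append(0.0 if j in dominated else p)
    return strength, p_high


rng = random.Random(1)                                 # fixed seed for reproducibility
strength, p_high = initial_e_beliefs([(2.0, 3.0)] * 7, 1, 10, rng, dominated=(5, 6))
```

Passing an empty `dominated` tuple corresponds to the pure adaptive learning case, in which every belief comes from the beta draw.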

$^{24}$ Using experimenter-induced prior probabilities of the different M types provides a very poor fit to first-period data.
