Towards automated classification of intensive care nursing narratives


International Journal of Medical Informatics 76S (2007) S362–S368

journal homepage: www.intl.elsevierhealth.com/journals/ijmi

Towards automated classification of intensive care nursing narratives

Marketta Hiissa a,b,∗, Tapio Pahikkala a,c, Hanna Suominen a,c, Tuija Lehtikunnas d,e, Barbro Back a,b, Helena Karsten a,c, Sanna Salantera d, Tapio Salakoski a,c

a Turku Centre for Computer Science, Joukahaisenkatu 3-5 B, 20520 Turku, Finland

b Abo Akademi University, Department of Information Technologies, Turku, Finland

c University of Turku, Department of Information Technology, Turku, Finland

d University of Turku, Department of Nursing Science, Turku, Finland

e Turku University Hospital, Turku, Finland

Article info

Article history:

Received 29 December 2006

Received in revised form 20 March 2007

Accepted 28 March 2007

Keywords:

Computerized patient records

Intensive care

Natural language processing

Nursing

Nursing records

Abstract

Background: Nursing narratives are an important part of patient documentation, but the possibilities to utilize them in the direct care process are limited due to the lack of proper tools. One solution to facilitate the utilization of narrative data could be to classify them according to their content.

Objectives: Our objective is to address two issues related to designing an automated classifier: domain experts’ agreement on the content of classes Breathing, Blood Circulation and Pain, as well as the ability of a machine-learning-based classifier to learn the classification patterns of the nurses.

Methods: The data we used were a set of Finnish intensive care nursing narratives, and we used the regularized least-squares (RLS) algorithm for the automatic classification. The agreement of the nurses was assessed by using Cohen’s κ, and the performance of the algorithm was measured using area under ROC curve (AUC).

Results: On average, the values of κ were around 0.8. The agreement was highest in the class Blood Circulation, and lowest in the class Breathing. The RLS algorithm was able to learn the classification patterns of the three nurses on an acceptable level; the values of AUC were generally around 0.85.

Conclusions: Our results indicate that the free text in nursing documentation can be automatically classified and this can offer a way to develop electronic patient records.

© 2007 Elsevier Ireland Ltd. All rights reserved.

∗ Corresponding author. Tel.: +358 2 215 4078; fax: +358 2 241 0154. E-mail address: [email protected] (M. Hiissa).

1386-5056/$ – see front matter © 2007 Elsevier Ireland Ltd. All rights reserved. doi:10.1016/j.ijmedinf.2007.03.003

1. Introduction

During the past years, health-care providers have been changing paper-based patient records to electronic ones. This has, on the one hand, made more data available on each patient, but on the other hand, also offered new possibilities to utilize the gathered data. However, the effects of this switch have not only been positive. It has been found that electronic charting may not provide nurses with more time for tasks unrelated to manipulating data [1,2] and that electronic systems support nurses in gathering information, but not in the active utilization of it [3].

In Finland, most of the intensive care nursing documentation, i.e. documentation about the state of the patient, goals for care, nursing interventions and evaluation of delivered care, is recorded as free text notes, i.e. narratives, and there is no common practise about how the narratives are written. In some units, paragraph names such as breathing, hemodynamics, elimination and consciousness are used to guide the documentation, but in general, each nurse uses her/his own style. Due to this, incoherence between one document and another is almost unavoidable, which in turn makes it more difficult for example to find relevant information from the records, and thus complicates the utilization of gathered narratives. The active utilization of narrative data is problematic in particular when the patient stays in the ward for several days, and the amount of documentation is large.

Narratives, in addition to serving nurses’ information needs about the state of the patient and care given, also serve as basis for, e.g. writing the discharge report when the patient is discharged from the Intensive Care Unit (ICU) to another ward, and when defining the caring needs and nursing intensity of the patient. The classification used in Finland to define the patient’s caring needs is the Oulu Patient Classification, OPC [4,5] that makes a distinction between six different areas of intensive care nursing. Nurses give scores to each patient in compliance with the categories based on the severity and the degree of difficulty of the problems the patient is suffering from. Based on the given scores, patients are divided into five nursing intensity categories. If it would be possible to systematically classify the written narratives so that they correspond to the six areas used in the OPC, both the defining of the nursing intensity and the writing of the discharge report would become easier, and it would also be easier to search for some specific information needed.

Our approach to facilitate the utilization of narratives is to develop automatic tools that classify texts, and thus make it easier to utilize the information the narratives include. In the medical domain, classification has recently been applied, e.g. to classifying texts such as chief complaint notes, diagnostic statements, and injury narratives into different kinds of syndromic, illness and cause-of-injury categories [6–9]. However, studies applying natural language processing to clinical contents are still relatively rare [10], and there is little research on the automated processing of nursing narratives [11].

In this paper, we use a machine-learning approach, i.e. an algorithm that learns the classification patterns directly from pre-classified data, to classify Finnish intensive care nursing narratives. We address two issues related to designing a classifier: domain experts’ agreement on the content of the classes into which the data are to be classified, and the ability of the classification algorithm to perform on an acceptable level. The classes used in this study are Breathing, Blood Circulation and Pain, and the machine-learning algorithm is the Regularized Least-Squares Algorithm.

2. Material and methods

2.1. Material

The data we used were a set of Finnish intensive care nursing narratives. The documents were gathered in the spring of 2001 from 16 intensive care units, two or three documents per unit. In total, we had 43 copies of patient records including nursing notes written down during one day and night. Nurses wrote the notes during the care process; in intensive care, the documentation always takes place at the patient’s bedside. The language of the notes was Finnish.

The research permission for the study was applied and achieved according to the Finnish laws and ethical guidelines for research. We applied for: (a) a permission from each participating hospital authority that has the right to acknowledge the permission, and (b) a statement about the ethics of the study from an ethical committee for health research. The gathered documents included only nursing notes written down during one day and night, and the documents were anonymized, i.e. no patient identification information was given to the researchers. Within our research group, only one nurse handled the original documents, respecting the professional secrecy. The other researchers had access to a file in which the narrative statements from the individual documents were aggregated, but the recognition of individual patients was impossible. Thus, the anonymity of the patients was preserved during the whole research process.

In the gathered data, the style of the documentation varied from one nurse to another: some had written short sentences such as “Hemodynamics ok. Very tired.”,1 whereas others had written long sentences in which different matters were separated with commas, e.g. “Patient has extra systoles, EKG control tomorrow morning, became bluish when turning him around and the oxygen saturation decreased, with extra oxygenation the situation improved, we made his sedation deeper.” In order to standardise the style of the documentation, we divided the long sentences into smaller pieces consisting of one matter or thought. This was done manually by one of the authors with nursing experience, and resulted in 1363 pieces, with the average length of 3.7 words.

1 The citations were translated from the notes written in Finnish. During the study, the original notes in Finnish were used.

2.2. Methods

In this section, we firstly discuss the classes into which the data was classified, and then we describe how the nurses’ agreement on the content of the classes was measured. We also introduce the Regularized Least-Squares Algorithm, and describe how the performance of the algorithm was measured.

2.2.1. Classes

We classified the data according to classes Breathing, Blood Circulation and Pain, which represent a subset of the content of intensive care nursing. The OPC classification used to assess patients’ caring needs and nursing intensity, divides the intensive care nursing into six areas. These areas are planning and co-ordination of care; breathing, circulation and symptoms of diseases; nutrition and medication; personal hygiene and excretion; activity, movement, sleep and rest; as well as teaching, guidance in care, follow-up and emotional support. The three chosen issues, Breathing, Blood Circulation and Pain, are all assessed within the Breathing, Circulation and Symptoms of Diseases category [4].

Although assessed within the same category in the OPC, the chosen classes represent different aspects of nursing work. Breathing and blood circulation are vital functions, the maintenance and monitoring of which is the first priority of intensive care nursing, and there are different kinds of devices to assist in the monitoring of them [12]. Pain, on the other hand, has to be monitored mainly on the basis of different kinds of behavioural and physiological indicators, which is due to patients being incapable of communicating because of their critical illnesses [13]. We wanted to investigate if the different nature of the documentation of pain had any effect on the performance of the automated classifier.

2.2.2. Nurses’ agreement on the content of the classes

In order to assess domain experts’ agreement on the content of the three classes, we asked three nurses to manually classify the data, independently of each other. The agreement was measured in order to get an insight on how similar opinions nurses have on what kind of statements should be included in the chosen classes. The nurses were advised to label each text piece they considered to contain useful information given the specific class. All the nurses were specialists in nursing documentation; two had a long clinical experience in ICUs (N1 and N2), and one was a nursing scientist having a doctoral degree in nursing science and a position as an assistant professor in a University Department of Nursing Science (N3). The classification process was done as three separate classification tasks, i.e. each of the classes was considered separately of the others.

We measured the agreement on the content of the classes by using Cohen’s κ [14]. Cohen’s κ is a chance-corrected measure of agreement, which considers the classifiers equally competent to make judgments, places no restriction on the distribution of judgments over classes for each classifier, and takes into account that a certain amount of agreement is to be expected by chance. It is an appropriate measure especially in situations where there are no criteria for a correct classification, which is the case in our study.

The formulas for κ and its standard error are the following [14]. If P(A) denotes the proportion of times the judges agree, and P(E) the proportion of times they would be expected to agree by chance, then

$$\kappa = \frac{P(A) - P(E)}{1 - P(E)}. \tag{1}$$

The standard error for κ is

$$\sigma_\kappa = \sqrt{\frac{P(A)\,(1 - P(A))}{N\,(1 - P(E))^2}}, \tag{2}$$

where P(A) and P(E) are as before, and N is the total number of classified objects. This was used when computing the 95% confidence intervals for κ.
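To make the agreement measure concrete, the following is a minimal Python sketch of κ for two judges, together with a standard error of the form sqrt(P(A)(1 − P(A)) / (N(1 − P(E))²)) as given by Cohen [14] and a normal-approximation 95% confidence interval. The function name `cohen_kappa`, the 1.96 multiplier and the toy labels are illustrative assumptions; the analyses in the study itself were performed with SPSS.

```python
import math


def cohen_kappa(labels_a, labels_b):
    """Return (kappa, standard error, 95% CI) for two judges' label lists."""
    n = len(labels_a)
    if n == 0 or n != len(labels_b):
        raise ValueError("label lists must be non-empty and of equal length")
    # P(A): observed proportion of objects on which the judges agree.
    p_a = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # P(E): agreement expected by chance, from each judge's own label distribution.
    categories = set(labels_a) | set(labels_b)
    p_e = sum((labels_a.count(c) / n) * (labels_b.count(c) / n) for c in categories)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (p_a - p_e) / (1 - p_e)
    # Large-sample standard error and a 95% interval (assumption: z = 1.96).
    se = math.sqrt(p_a * (1 - p_a) / (n * (1 - p_e) ** 2))
    return kappa, se, (kappa - 1.96 * se, kappa + 1.96 * se)


# Hypothetical labels for ten text pieces (1 = belongs to the class).
nurse_1 = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
nurse_2 = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]
kappa, se, ci = cohen_kappa(nurse_1, nurse_2)
```

In this toy case the judges agree on 8 of 10 pieces (P(A) = 0.8) and P(E) = 0.58, so κ = 0.22 / 0.42, roughly 0.52.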

2.2.3. Regularized Least-Squares Algorithm for classification

Automatic classification was performed using Regularized Least-Squares Algorithm (RLS) (see, e.g. [15,16]). The algorithm is also known as Least-Squares Support Vector Machine [17]. The implementation of the algorithm we used was the one presented in Ref. [18], and it is briefly described in the following.

Let $\{(x_1, y_1), \ldots, (x_m, y_m)\}$ be the set of $m$ training examples, where $X$ is a set of bag-of-words vectors, $x_i \in X$ denotes the training data examples, and $y_i \in \mathbb{R}$ are their class labels set by a human expert. We consider the RLS algorithm as a special case of the following regularization problem known as Tikhonov regularization (for a more comprehensive introduction, we refer to Refs. [15,16]):

$$\min_f \sum_{i=1}^{m} l(f(x_i), y_i) + \lambda \|f\|_k^2, \tag{3}$$

where $l$ is the loss function used by the learning machine, $f: X \to \mathbb{R}$ the function that maps the inputs $x \in X$ to the outputs $y \in \mathbb{R}$, $\lambda \in \mathbb{R}^+$ the regularization parameter and $\|\cdot\|_k$ is the norm in a reproducing kernel Hilbert space defined by a positive definite kernel function $k$. The second term in Eq. (3) is called a regularizer. The loss function used with RLS is the least-squares loss defined as

$$l(f(x_i), y_i) = (f(x_i) - y_i)^2. \tag{4}$$

By the Representer Theorem [19], the minimizer of Eq. (3) with the least-squares loss function has the following form:

$$f(x) = \sum_{i=1}^{m} \alpha_i k(x, x_i), \tag{5}$$

where $\alpha_i \in \mathbb{R}$ and $k$ is the kernel function associated with the reproducing kernel Hilbert space mentioned above. As the kernel function, we use the cosine of the word feature vectors, that is,

$$k(x, x_i) = \frac{\langle x, x_i \rangle}{\sqrt{\langle x, x \rangle \langle x_i, x_i \rangle}}. \tag{6}$$
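As an illustration of Eqs. (3)–(6), the sketch below trains an RLS classifier with the cosine kernel in plain Python. It relies on the standard closed-form result that, for the least-squares loss, the coefficients of Eq. (5) solve the linear system (K + λI)α = y, where K is the kernel matrix of the training examples. This is not the implementation of Ref. [18]; the function names, the value of λ, the ±1 label coding and the English toy tokens are all assumptions made for the example.

```python
import math


def cosine_kernel(u, v):
    """Eq. (6): cosine of two bag-of-words vectors stored as {word: count}."""
    dot = sum(count * v.get(word, 0) for word, count in u.items())
    norm = math.sqrt(sum(c * c for c in u.values()) * sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def train_rls(examples, labels, lam=1.0):
    """Return alpha solving (K + lam * I) alpha = y for the cosine kernel."""
    size = len(examples)
    k = [[cosine_kernel(examples[i], examples[j]) + (lam if i == j else 0.0)
          for j in range(size)] for i in range(size)]
    return solve_linear_system(k, labels)


def predict(x, examples, alpha):
    """Eq. (5): f(x) = sum_i alpha_i * k(x, x_i)."""
    return sum(a * cosine_kernel(x, xi) for a, xi in zip(alpha, examples))


# Hypothetical toy data: +1 = Breathing, -1 = not Breathing.
train = [{"oxygen": 1, "saturation": 1}, {"respirator": 1, "oxygen": 1},
         {"blood": 1, "pressure": 1}, {"heart": 1, "rate": 1}]
labels = [1.0, 1.0, -1.0, -1.0]
alpha = train_rls(train, labels, lam=1.0)
score = predict({"oxygen": 1, "saturation": 1, "low": 1}, train, alpha)
```

The real-valued output `score` can be thresholded for a hard decision, or used directly to rank text pieces, which is what an AUC-based evaluation requires.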

2.2.4. Evaluation of the performance of the classification algorithm

The performance of the RLS algorithm was measured by comparing its output to the data classified manually by the nurses. The data were divided into a training set and a test set so that 708 out of the total of 1363 text pieces belonged in the training set, and the remaining 655 pieces in the test set. The division was done so that text pieces from one document belonged only in one of the two sets.

Nine automated classifiers were trained by using the training data labelled by the three nurses, i.e. one classifier for each nurse–class pair. Because of the perceived differences in the nurses’ manual classifications, we did not combine the nurses’ responses into one single data set, but considered them separately. This enabled us to study if the performance of the algorithm was affected by the different opinions of the nurses, i.e. if some manual classifications were harder for the computer to learn than the others were.
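The division into training and test sets described above keeps all text pieces of one document in the same set. A minimal sketch of such a document-wise split is given below; the random assignment of documents, the function name `split_by_document` and the `(document_id, text)` representation are assumptions, since the exact allocation procedure is not specified here.

```python
import random


def split_by_document(pieces, train_fraction=0.5, seed=0):
    """Split (document_id, text) pairs so that no document spans both sets."""
    doc_ids = sorted({doc_id for doc_id, _ in pieces})
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(doc_ids)
    n_train_docs = round(len(doc_ids) * train_fraction)
    train_docs = set(doc_ids[:n_train_docs])
    train = [p for p in pieces if p[0] in train_docs]
    test = [p for p in pieces if p[0] not in train_docs]
    return train, test


# Hypothetical text pieces from four documents.
pieces = [("d1", "hemodynamics ok"), ("d1", "very tired"), ("d2", "extra systoles"),
          ("d3", "oxygen saturation decreased"), ("d3", "sedation deeper"),
          ("d4", "pain medication given")]
train, test = split_by_document(pieces)
```

Because whole documents are assigned, the resulting set sizes in text pieces only approximate `train_fraction`, which is consistent with a split such as 708 versus 655 pieces.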

The performance of the algorithm was tested in two ways.Firstly, we tested how well the algorithm was able to captureclassification patterns of one nurse by comparing the out-put of the algorithm with the test data manually classified

i n t e r n a t i o n a l j o u r n a l o f m e d i c a l i n f o r m a t i c s 7 6 S ( 2 0 0 7 ) S362–S368 S365

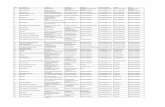

Table 1 – The values of Cohen’s � and the respective 95% confidence intervals (CI) for the agreement between the threenurses

Breathing Blood Circulation Pain

� 95% CI � 95% CI � 95% CI

N1–N2 0.73 (0.68–0.78) 0.89 (0.85–0.92) 0.88 (0.82–0.94)N1–N3 0.67 (0.62–0.72) 0.81 (0.77–0.86) 0.79 (0.73–0.86)

bSedte

r(aniftS

3

3

Ti1cdo

p

N2–N3 0.85 (0.82–0.89) 0.87

y the same nurse who also had classified the training data.econdly, we tested how well the algorithm was able to gen-ralize by comparing the output of the algorithm with the testata classified by other nurses than the one, with whose datahe algorithm was trained. This resulted in six comparisons inach of the three classes.

The performance of the algorithm was measured withespect to the test data by using the area under ROC curveAUC) [20], which corresponds to the probability that given

randomly chosen positive example and a randomly chosenegative example, the classifier will correctly determine which

s which. Data were pre-processed with the Snowball stemmeror Finnish [21] in order to reduce different inflection forms ofhe words, and all the statistical analyses were performed withPSS 11.0 for Windows.

. Results

.1. Nurses’ agreement on the content of the classes

he amount of data the nurses included in the classes Breath-ng, Blood Circulation and Pain was, respectively, around 20,5 and 6% of the 1363 text pieces. This reflects the extensiveontent of the narrative documentation, and illustrates the

ifficulty of finding relevant information from large amountsf text.The comparisons between the nurses showed that the textieces describing blood circulation were selected quite simi-

Table 2 – The values of AUC and the respective 95% confidence

Breathing Bloo

AUC 95% CI AUC

N1 0.86 (0.82–0.90) 0.89N2 0.88 (0.85–0.91) 0.93N3 0.87 (0.84–0.91) 0.91

Table 3 – The values of AUC for testing the automatic classifiers

Breathing Bl

N1 N2 N3 N1

Machine N1 0.86 0.74 0.72 0.89Machine N2 0.83 0.88 0.86 0.88Machine N3 0.84 0.88 0.87 0.89

(0.83–0.90) 0.76 (0.69–0.83)

larly (� > 0.80 in each comparison), whereas there were moredifferences in selecting text pieces related to Pain or Breathing(Table 1). The range of the values of � was the largest, from 0.67to 0.85, in the class Breathing. In addition, given the classesPain and Blood Circulation, the two clinical nurses N1 and N2

were the most unanimous in the classification, whereas in theclass Breathing, the clinical nurse N2 and the nursing scienceresearcher N3 were the most unanimous.

3.2. Learning ability of the RLS algorithm with respectto the data classified by the nurses

The learning ability of the RLS algorithm was tested in twoways. Firstly, the algorithm was tested against the test dataclassified by the same nurse whose data was used when train-ing the algorithm. The results showed that the algorithm wasable to learn the classification patterns from the training set(Table 2). The values of AUC were in general around 0.85. Thehighest values, from 0.89 to 0.93, were achieved for the clas-sification of blood circulation statements, whereas the lowestvalues, from 0.71 to 0.81 were measured for the class Pain.Except the class Pain, the performance of the algorithm wason a similar level in the classes independently of the nursewhose classification was used to train the algorithm, also inthe class Breathing, in which the disagreement between the

nurses was the highest. In the class Pain, the performance ofthe algorithm trained with the data classified by the nurse N2was better than that of those trained with the data classifiedby the other two nurses.

intervals (CI) for the automatic classifiers

d Circulation Pain

95% CI AUC 95% CI

(0.84–0.93) 0.71 (0.61–0.80)(0.90–0.97) 0.81 (0.73–0.89)(0.86–0.95) 0.71 (0.61–0.80)

against manual classifications of all the nurses

ood Circulation Pain

N2 N3 N1 N2 N3

0.93 0.91 0.71 0.81 0.720.93 0.91 0.71 0.81 0.710.93 0.91 0.67 0.77 0.71

a l i n

S366 i n t e r n a t i o n a l j o u r n a l o f m e d i cSecondly, we tested the classifiers against the test dataclassified by other nurses than the one whose data was usedwhen training the algorithm. The values of AUC were cal-culated for six comparisons in each of the three classes(Table 3).

The results showed that the differences in the AUCs werevery small, especially in the class Blood Circulation. Thismeans that given a manually established reference classifi-cation, the two RLS classifiers trained with other data thanthat of the given nurse performed as well as the classifiertrained by using the classification of the given nurse. In theclass Pain, the values of AUC decreased a little, except whentesting against data classified by nurse N3; in this case allthe classifiers performed equally well. In the class Breath-ing, there were larger differences in the values of AUC, andthe classifiers were not able to generalize as well as in theother two classes. We also calculated the average decrease inthe values of AUC compared with the situation in which boththe training and the testing data were classified by the samenurse. The average decrease in the values was 0.06 in the classBreathing, 0.01 in the class Pain, and 0.00 in the class BloodCirculation.
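The average decreases reported above can be re-derived from the Table 3 values. The sketch below assumes one plausible reading of the calculation: for each of the six cross-nurse cells, the AUC is subtracted from the AUC of the classifier trained and tested on the same test nurse's labels, and the six differences are averaged. The names `TABLE_3` and `average_decrease` are introduced only for this example.

```python
# AUC values from Table 3. Rows: nurse whose labels trained the classifier
# (N1, N2, N3). Columns: nurse whose labels were used for testing (N1, N2, N3).
TABLE_3 = {
    "Breathing": [[0.86, 0.74, 0.72], [0.83, 0.88, 0.86], [0.84, 0.88, 0.87]],
    "Blood Circulation": [[0.89, 0.93, 0.91], [0.88, 0.93, 0.91], [0.89, 0.93, 0.91]],
    "Pain": [[0.71, 0.81, 0.72], [0.71, 0.81, 0.71], [0.67, 0.77, 0.71]],
}


def average_decrease(auc):
    """Mean of (same-nurse AUC - cross-nurse AUC) over the cross-nurse cells."""
    n = len(auc)
    diffs = [auc[test][test] - auc[train][test]
             for train in range(n) for test in range(n) if train != test]
    return sum(diffs) / len(diffs)


for class_name, auc in TABLE_3.items():
    print(class_name, round(average_decrease(auc), 2))
# Breathing 0.06, Blood Circulation 0.0, Pain 0.01
```

Under this reading the unrounded averages are about 0.058, 0.002 and 0.012, which round to the reported 0.06, 0.00 and 0.01.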

4. Discussion

We have assessed the agreement of three nurses on the content of the classes Breathing, Blood Circulation and Pain by using Cohen’s κ, and the ability of the RLS machine-learning algorithm to learn the classification patterns of the nurses by using AUC as the performance measure. On average, the values of κ were around 0.8. According to the obtained AUC values, the RLS algorithm performed on an acceptable level; the values were generally around 0.85, and the decreases in these were rather small when using test data classified by another nurse than the one who classified the training data.
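The probabilistic reading of AUC, i.e. the chance that a randomly chosen positive example is scored above a randomly chosen negative one, can be computed directly by counting correctly ordered pairs. The following is a minimal sketch; the function name `auc`, the convention that ties count as one half, and the toy scores are assumptions for illustration.

```python
def auc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y != 1]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Hypothetical classifier outputs for four text pieces (1 = in the class).
print(auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))  # 0.75
```

Here three of the four positive–negative pairs are ordered correctly. The pairwise count is quadratic in the number of examples, which is unproblematic for a test set of a few hundred text pieces.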

We measured rather high values of Cohen’s κ in the class Blood Circulation, whereas the values were somewhat lower in the other two classes. Some suggestive limits about how to interpret the observed values have been discussed within both computational linguistics [22] and medical informatics [23], concluding that the interpretation of the values depends heavily on the task, categories and the purpose of the measurement, and thus no universal guidelines can be given. In addition, the skewed distributions of categories may affect κ [24]. In our case, all the values of κ were above 0.67, and the majority of them were above 0.8, which indicates quite good agreement, but because of the differences in the values in the classes Breathing and Pain in particular, we had a closer look at the disagreement cases.

We analyzed the disagreement cases, and found out that the effects of the subjective considerations on important information were the most visible in the classes Breathing and Pain. For example, given the class Breathing, nurses disagreed on whether or not the statements related to the mucus in patient’s lungs should be included in the class, and given the class Pain, the disagreements were mainly due to sentences such as “Reacts to interventions by moving extremities and furrowing eyebrows.” or “Reacts against when turning him over.”, which did not include any direct mention of pain. However, the disagreement cases appeared to be not only due to the subjective considerations and interpretations, but also to matters such as the different handling of non-standard abbreviations. For example, one of the nurses decided not to accept any text pieces containing non-standard and ambiguous abbreviations to belong in any class, whereas the others classified these pieces based on the meaning they thought the abbreviations could have in the context they appeared.

The different opinions of the experts have to be somehow combined when establishing training material or a reference standard for the application. If the experts work independently, their responses can be combined by, e.g. taking a majority vote, an average, a median, or other functions that are suitable for the application [25]. An alternative is to let the experts come to a consensus, and as a formal approach to this, Hripcsak and Wilcox [25] refer to the Delphi method and its modifications. In the Delphi method, experts’ individual responses are collated and sent back to each expert for review, experts may then change their own responses based on others’ opinions, and the process is repeated until a convergence criterion is met [26]. Our aim being to classify the narratives such that the nurses’ information needs in their everyday work are supported, combining experts’ opinions using a method such as the Delphi method could be motivated. Allowing the nurses to modify their responses based on others’ opinions might improve the responses, and thus the process could end up with a more appropriate classification than, e.g. combining the independent responses by taking the majority vote. However, the disagreement cases can also be utilized in establishing training material. For example, the indirect pain statements described earlier could be considered, e.g. as weaker pain statements than those on which all the nurses agreed, and this information could be used to give different weights to different kinds of statements with respect to the given class. This approach has been applied in order to receive a ranking order for the statements that were included in the given class [27].
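Two of the combination strategies mentioned above, the majority vote and agreement-based weighting, can be sketched in a few lines of Python. The function names and the choice of the weight as the fraction of experts who selected a text piece are assumptions for illustration; they are not the procedure of Ref. [27].

```python
from collections import Counter


def majority_vote(votes):
    """Return the label chosen by most experts for one text piece."""
    return Counter(votes).most_common(1)[0][0]


def agreement_weight(votes, positive=1):
    """Fraction of experts who assigned the text piece to the class."""
    return sum(v == positive for v in votes) / len(votes)


# Hypothetical Pain labels from three nurses for three text pieces.
pieces = {
    "pain medication given": [1, 1, 1],               # direct statement
    "reacts against when turning him over": [1, 0, 1],  # indirect statement
    "hemodynamics ok": [0, 0, 0],
}
reference = {text: majority_vote(v) for text, v in pieces.items()}
weights = {text: agreement_weight(v) for text, v in pieces.items()}
```

With three experts and two labels a majority always exists; for an even number of experts a tie-breaking rule would have to be added. The weights (here 1.0, about 0.67 and 0.0) could serve as graded training targets, so that indirect statements count as weaker members of the class.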

According to the obtained values of AUC, the learning ability of the RLS algorithm was on a rather high level. The results for the classes Blood Circulation and Breathing were good, and the performance in the class Pain was somewhat lower mainly due to the small amount of available data and the different nature of the documentation. The sentences in the classes Blood Circulation and Breathing contained many keywords, such as heart rate, blood pressure and hemodynamics in the class Blood Circulation, and oxygen saturation, respirator and intubation in the class Breathing. In contrast to these, the class Pain included non-direct statements, in which no keywords were present. Compared to the other nurses, the nurse N2 included fewer non-direct statements in the class Pain, which explains the better performance of the automated classifier in that case. In the other two classes, the performance of the algorithm was on a similar level independently of the nurse whose manual classification was used in training, i.e. none of the classification patterns of the nurses was harder for the machine to learn than the others were.

The results also showed that in the class Blood Circulation, there were no decreases in the values of AUC when using the test data classified by another nurse than the one with whose classification the algorithm was trained. The decreases in the values of AUC in the classes Breathing and Pain reflect the nurses’ agreement on the content of these classes; in the class Blood Circulation, the values of κ were higher than in the classes Breathing and Pain. However, we can still conclude that based on these results, the algorithm was able to generalize substantially well.

Summary points

Knowledge before this study:

• Compared to paper based patient records, electronic patient records make more data available on each patient and offer new possibilities to utilize the gathered data.

• Nursing narratives are an important part of patient documentation, but the possibilities to utilize them in the direct care process are limited due to the lack of proper tools.

• Classification of patient record narratives according to their content has been successfully applied in the medical domain. However, more research about the automated processing of nursing narratives is needed.

This study has added to the body of knowledge that:

• Nurses seem to have somewhat different opinions on how to divide information in free text notes into different classes. We used classes Breathing, Blood Circulation and Pain, and the agreement was highest in the class Blood Circulation, and lowest in the class Breathing. The disagreements should be taken into account when designing an automated classifier.

• The Regularized Least-Squares Algorithm was able to learn classification patterns from the data classified by the nurses, and its performance on unseen material was on an acceptable level.

• The free text in nursing documentation can be automatically classified and this can offer a way to develop electronic patient records.

Although our results showed that the RLS algorithm performed on an acceptable level, improvements could be gained, e.g. by using more training data, and by increasing the pre-processing of the data. In the current study, we used a stemmer to reduce different inflection forms of the words, but because Finnish is a highly inflectional language, techniques that find the real base form instead of just stemming could make the data less sparse and increase the performance of the algorithm. Another topic of further research is the pre-processing of the long sentences, which here was done manually by one researcher, and is thus a possible source of bias. Further research is also needed to assess the performance of the algorithm on other classes than the three used in this study.

In the forthcoming studies, we will test the performance using cross-validation, and thus the possible dependencies in the data set need to be taken into account. Because of the dependencies, the data set might be clustered, meaning that data points forming a cluster are more similar to each other than to other data points. With biomedical texts, it has been shown that if the data set is clustered, the traditional leave-one-out cross-validation may overestimate the performance of the automatic application [18]. In our case, it is possible that the text pieces extracted from one patient’s record are more similar to each other than to the text pieces extracted from other patients’ documents. In the future, we will explore this, and intend to use the leave-cluster-out cross-validation proposed in Ref. [18].
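The idea behind leave-cluster-out cross-validation can be sketched as follows: instead of holding out one text piece at a time, all pieces from one cluster are held out together, so that no fold is tested on pieces closely related to its training data. The sketch assumes that a cluster corresponds to one patient record; it only generates the folds and is not the fast algorithm of Ref. [18]. The function name `leave_cluster_out_folds` is hypothetical.

```python
def leave_cluster_out_folds(cluster_ids):
    """Yield (train_indices, test_indices), holding out one cluster per fold."""
    for held_out in sorted(set(cluster_ids)):
        test = [i for i, c in enumerate(cluster_ids) if c == held_out]
        train = [i for i, c in enumerate(cluster_ids) if c != held_out]
        yield train, test


# Hypothetical cluster labels: the patient record each text piece came from.
record_of_piece = ["rec_a", "rec_a", "rec_b", "rec_c", "rec_c"]
for train_idx, test_idx in leave_cluster_out_folds(record_of_piece):
    print(train_idx, test_idx)
# [2, 3, 4] [0, 1]
# [0, 1, 3, 4] [2]
# [0, 1, 2] [3, 4]
```

Each fold would train a classifier on `train_idx` and score the pieces in `test_idx`; pooling the held-out scores over all folds then allows a single AUC to be computed.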

5. Conclusions

Our results show that the nurses seem to have somewhat different opinions on how to divide information in free text notes into different classes. We used classes Breathing, Blood Circulation and Pain, and the agreement between the nurses was highest in the class Blood Circulation, and lowest in the class Breathing. There are several possible ways to handle the disagreement cases when designing an automated classifier. The RLS algorithm was able to learn classification patterns from the data classified by the nurses, and its performance on unseen material was on an acceptable level. Based on our results, we conclude that the free text in nursing documentation can be automatically classified, and consider this as a possible way to develop electronic patient records.

Acknowledgments

We gratefully acknowledge the financial support of Tekes (grant number 40435/05) and the Academy of Finland (grant number 104639). We wish to thank Helja Lundgren-Laine and Filip Ginter for their contributions.

e f e r e n c e s

[1] G.L. Pierpont, D. Thilgen, Effect of computerized charting onnursing activity in intensive care, Crit. Care Med. 23 (6)(1995) 1067–1073.

[2] K. Smith, V. Smith, M. Krugman, K. Oman, Evaluating theimpact of computerized clinical documentation, Comput.Inform. Nurs. 23 (3) (2005) 132–138.

[3] R. Snyder-Halpern, S. Corcoran-Perry, S. Narayan, Developingclinical practice environments supporting the knowledgework of nurses, Comput. Nurs. 19 (1) (2001) 17–23, quiz 24–6.

[4] T. Kaustinen, Hoitoisuusluokituksen kehittaminen jaarviointi, Licentiate Thesis, University of Oulu, Departmentof Nursing Science, Oulu, 1995 (in Finnish).

[5] L. Fagerstrom, A.K. Rainio, A. Rauhala, K. Nojonen,Validation of a new method for patient classification, theOulu Patient Classification, J. Adv. Nurs. 31 (2) (2000) 481–490.

[6] W.W. Chapman, J.N. Dowling, M.M. Wagner, Fever detectionfrom free-text clinical records for biosurveillance, J. Biomed.Inform. 37 (2) (2004) 120–127.

[7] S.V. Pakhomov, J.D. Buntrock, C.G. Chute, Using compound codes for automatic classification of clinical diagnoses, Medinfo 11 (Pt 1) (2004) 411–415.

[8] H.M. Wellman, M.R. Lehto, G.S. Sorock, G.S. Smith, Computerized coding of injury narrative data from the National Health Interview Survey, Accid. Anal. Prev. 36 (2) (2004) 165–171.

[9] W.W. Chapman, L.M. Christensen, M.M. Wagner, P.J. Haug, O. Ivanov, J.N. Dowling, R.T. Olszewski, Classifying free-text triage chief complaints into syndromic categories with natural language processing, Artif. Intell. Med. 33 (1) (2005) 31–40.

[10] N. Collier, A. Nazarenko, R. Baud, P. Ruch, Recent advances in natural language processing for biomedical applications, Int. J. Med. Inform. 75 (6) (2006) 413–417.

[11] S. Bakken, S. Hyun, C. Friedman, S.B. Johnson, ISO reference terminology models for nursing: applicability for natural language processing of nursing narratives, Int. J. Med. Inform. 74 (7–8) (2005) 615–622.

[12] N.S. Ward, J.E. Snyder, S. Ross, D. Haze, M.M. Levy, Comparison of a commercially available clinical information system with other methods of measuring critical care outcomes data, J. Crit. Care 19 (1) (2004) 10–15.

[13] J.F. Payen, O. Bru, J.L. Bosson, A. Lagrasta, E. Novel, I. Deschaux, P. Lavagne, C. Jacquot, Assessing pain in critically ill sedated patients by using a behavioral pain scale, Crit. Care Med. 29 (12) (2001) 2258–2263.

[14] J. Cohen, A coefficient of agreement for nominal scales, Educ. Psychol. Meas. 20 (1) (1960) 37–46.

[15] T. Poggio, S. Smale, The mathematics of learning: dealing with data, Am. Math. Soc. Notice 50 (2003) 537–544.

[16] R.M. Rifkin, Everything old is new again: a fresh look at historical approaches in machine learning, Ph.D. Thesis, Massachusetts Institute of Technology, 2002.

[17] J.A.K. Suykens, T. Van Gestel, J. De Brabanter, B. De Moor, J. Vandewalle, Least Squares Support Vector Machines, World Scientific Publishing, Singapore, 2002.

[18] T. Pahikkala, J. Boberg, T. Salakoski, Fast n-fold cross-validation for regularized least-squares, in: T. Honkela, T. Raiko, J. Kortela, H. Valpola (Eds.), Proceedings of the Ninth Scandinavian Conference on Artificial Intelligence (SCAI 2006), Otamedia Oy, Espoo, Finland, 2006, pp. 83–90.

[19] B. Scholkopf, R. Herbrich, A.J. Smola, A generalized Representer Theorem, in: COLT ’01/EuroCOLT ’01: Proceedings of the 14th Annual Conference on Computational Learning Theory and 5th European Conference on Computational Learning Theory, Springer-Verlag, London, UK, 2001, pp. 416–426.

[20] J.A. Hanley, B.J. McNeil, The meaning and use of the area under a receiver operating characteristic (ROC) curve, Radiology 143 (1) (1982) 29–36.

[21] The Snowball Stemming Algorithm, available at: http://snowball.tartarus.org/algorithms/finnish/stemmer.html (accessed 01/10, 2006).

[22] J. Carletta, Assessing agreement on classification tasks: the kappa statistic, Comput. Linguistics 22 (2) (1996) 249–254.

[23] G. Hripcsak, G.J. Kuperman, C. Friedman, D.F. Heitjan, A reliability study for evaluating information extraction from radiology reports, J. Am. Med. Inform. Assoc. 6 (2) (1999) 143–150.

[24] B. Di Eugenio, M. Glass, The kappa statistics: a second look, Comput. Linguistics 30 (1) (2004) 95–101.

[25] G. Hripcsak, A. Wilcox, Reference standards, judges, and comparison subjects: roles for experts in evaluating system performance, J. Am. Med. Inform. Assoc. 9 (1) (2002) 1–15.

[26] C.P. Friedman, J.C. Wyatt, Evaluation Methods in Medical Informatics, Springer-Verlag, New York, USA, 1997.

[27] H. Suominen, T. Pahikkala, M. Hiissa, T. Lehtikunnas, B. Back, H. Karsten, S. Salantera, T. Salakoski, Relevance ranking of intensive care nursing narratives, in: B. Gabrys, R.J. Howlett, L.C. Jain (Eds.), Lecture Notes in Computer Science, LNAI 4251. Knowledge-Based Intelligent Information and Engineering Systems, 10th International Conference, Springer-Verlag, Heidelberg, Germany, 2006, pp. 720–727.