Thermodynamic efficiency of information and heat flow


arXiv:0907.3320v1 [cond-mat.stat-mech] 19 Jul 2009

Thermodynamic efficiency of information and heat flow

Armen E. Allahverdyan (1), Dominik Janzing (2), Guenter Mahler (3)

(1) Yerevan Physics Institute, Alikhanian Brothers Street 2, Yerevan 375036, Armenia
(2) MPI for Biological Cybernetics, Spemannstrasse 38, 72076 Tuebingen, Germany
(3) Institute of Theoretical Physics I, University of Stuttgart, Pfaffenwaldring 57, 70550 Stuttgart, Germany

A basic task of information processing is information transfer (flow). Here we study a pair of Brownian particles, each coupled to a thermal bath, at temperatures $T_1$ and $T_2$, respectively. The information flow in such a system is defined via the time-shifted mutual information. The information flow nullifies at equilibrium, and its efficiency is defined as the ratio of the flow to the total entropy production in the system. For a stationary state the information flows from higher to lower temperatures, and its efficiency is bounded from above by $\frac{\max[T_1,T_2]}{|T_1-T_2|}$. This upper bound is imposed by the second law and it quantifies the thermodynamic cost for information flow in the present class of systems. It can be reached in the adiabatic situation, where the particles have widely different characteristic times. The efficiency of heat flow, defined as the heat flow over the total amount of dissipated heat, is limited from above by the same factor. There is a complementarity between heat flow and information flow: the setup which is most efficient for the former is the least efficient for the latter and vice versa. The above bound for the efficiency can be [transiently] overcome in certain non-stationary situations, but the efficiency is still limited from above. We study yet another measure of information processing [transfer entropy] proposed in the literature. Though this measure does not require any thermodynamic cost, the information flow and transfer entropy are shown to be intimately related for stationary states.

PACS numbers: 89.70.Cf, 02.50.-r, 05.10.Gg

I. INTRODUCTION

Relations between statistical thermodynamics and information science have long been recognized; they have been a source of mutual fertilization, but occasionally also of confusion. Pertinent examples are the nature of the maximum entropy principle [1] and the analysis of the Maxwell demon concept [2]. Then there is the huge field of information processing devices. Their continuing miniaturization [3] is already approaching the nanometer scale, which makes it obligatory to study the information carriers as physical entities subject to the laws of statistical physics. Note that information processing constitutes a certain class of tasks (functionality) to be implemented on a physical system. Basic tasks of this kind are, inter alia, information erasure and information transfer.

The thermodynamic cost of information erasure has received much attention [4, 5, 6, 7, 8, 9, 10, 11]; see [7] for a review. Information erasure is governed by the Landauer principle [4, 5, 6, 7], which, together with its limitations [8, 9, 10, 11], has been investigated from different perspectives.

The task of information transfer is not uniquely defined, but has to be specified before one can start any detailed physical investigation (see below). Its thermodynamic cost in the presence of a thermal bath has been studied in [12, 13, 14, 15, 16, 17, 18, 19]. There is an essential difference between the problem of thermodynamic costs during information erasure and that during information transfer. In the former case the information carrier has to be an open, or even dissipative, system, while in the latter case the external bath frequently plays the role of a hindrance [16, 17, 19]. For a finite, conservative system the problem of thermodynamic costs is not well-defined, since for such systems the proper measures of irreversibility and dissipation are lacking; see, however, [24] in this context. Thus, the thermodynamic cost for information transfer is to be studied in specific macroscopic settings.

The current status of the problem is controversial: in the literature there are statements claiming both the existence [17, 18, 19] and the absence [12, 13, 14, 15, 16] of inevitable thermodynamic costs during information transfer. The arguments against fundamental bounds on thermodynamic costs in information transfer [12, 13, 14, 15, 16] rely in essence on the known statistical-physics fact that the entropy generated during a process can be nullified by nullifying the rate of this process [20]. However, this and related arguments leave unanswered the question of whether there are thermodynamic costs in the more realistic case of finite-rate information transfer. In practice, information transfer should normally proceed at a finite rate.

The presently known arguments in favour of the existence of a thermodynamic cost during information transfer are either heuristic [17, 18], or concentrate on those aspects of computation and communication which require some energy for carrying out the task, but where this energy is not necessarily dissipated; see [19, 21, 22, 23] (footnote 1). The present work aims at clarifying how processes of information and heat flow relate to dissipative characteristics such as entropy production or heat dissipation. In particular, we clarify which tasks of information processing do (not) require a thermodynamic cost.

1 The authors of [23] point out that although in principle the energy required for carrying out these tasks need not be dissipated, in practice it is normally dissipated, at least partially.

We shall work with what is supposedly the simplest set-up that allows one to study the above problem: two (classical) Brownian particles, each interacting with a thermal bath. The model combines two basic ingredients needed for studying information transfer: randomness, which is necessary for the very notion of information, and directedness, i.e., the possibility of inducing a current of physical quantities via externally imposed gradients of temperature and/or various potentials.

For the present bi-partite problem, whose dynamics is formulated as a Markov process for the random coordinates $X_1(t)$ and $X_2(t)$ of the Brownian particles, the mutual information is given by the known Shannon expression $I[X_1(t):X_2(t)]$ [25, 26, 27] (footnote 2). This is an ensemble property, which is naturally symmetric, $I[X_1(t):X_2(t)] = I[X_2(t):X_1(t)]$, and has the same status as other macroscopic observables in statistical physics, e.g., the average energy. The information flow $\imath_{2\to 1}$ is defined via the time-shifted mutual information as $\imath_{2\to 1} = \partial_\tau I[X_1(t+\tau):X_2(t)]|_{\tau\to+0}$. The full rate $\frac{d}{dt}I[X_1(t):X_2(t)]$ of mutual information is now separated into the information that has flown from the first to the second particle, $\imath_{1\to 2}$, and vice versa: $\frac{d}{dt}I[X_1(t):X_2(t)] = \imath_{1\to 2} + \imath_{2\to 1}$. We discuss this definition in section IV, explain its relation to prediction and clarify the meaning of a negative information flow. The usage of the time-shifted mutual information for quantifying the information flow was advocated in [29, 30, 31, 32].

The information flow is an asymmetric quantity, $\imath_{2\to 1} \neq \imath_{1\to 2}$, and it allows one to distinguish between the source of information and its recipient (such a distinction cannot be made via the mutual information, which is a symmetric quantity). Another important feature of information flow is that, for the considered bi-partite Markovian system, it nullifies in equilibrium: $\imath_{2\to 1} = \imath_{1\to 2} = 0$, because the information flow appears to be related to the entropy flow; see section V.

Once we have shown that the information flow is absent in equilibrium, we concentrate on the case when this flow is induced by a temperature gradient. Despite some formal similarities, a temperature gradient and a potential gradient, the two main sources of non-equilibrium, are of different physical origin and enjoy different features; see [44] for a recent discussion. Hence we expect that the specific features of the information flow will differ depending on the type of non-equilibrium situation. In this context, the temperature-gradient situation is perhaps the first one to study, since the function of information transfer comes close to the function of a thermodynamic machine. This far-reaching analogy is reflected in the features of the efficiency of information transfer, which is defined as the useful product, i.e., the information flow, divided by the total waste, as quantified by the entropy production in the overall system. In the stationary two-temperature state, where both the information flow and the entropy production are time-independent, the efficiency of information flow is limited from above by $\frac{\max[T_1,T_2]}{|T_1-T_2|}$. This expression depends only on the temperatures and does not depend on various details of the system (such as damping constants, inter-particle potentials, etc.). The existence of such an upper bound implies a definite thermodynamic cost for information flow. Interestingly, the upper bound for the efficiency can be reached in the adiabatic situation, when the source of information is much slower than its recipient. This fact to some extent resembles the reachability of the Carnot bound for the thermodynamic efficiency of heat engines and refrigerators [20]. The upper bound $\frac{\max[T_1,T_2]}{|T_1-T_2|}$ for the efficiency of information flow can be well surpassed in certain non-stationary, transient situations. Even then the efficiency of information flow is limited from above via the physical parameters of the system.

2 We stress that the employed notion of information concerns its syntactic aspects and does not concern its semantic [meaning] and pragmatic [purpose] aspects. Indeed, the problems we intend to study (physics of information carriers, thermodynamic cost of information flow, etc.) refer primarily to syntactic aspects.

We shall argue that there is a clear parallel between the definitions of information flow and heat flow. This is additionally underlined by the fact that in the stationary state both heat and information flow from higher temperatures to lower ones. Moreover, the efficiency of heat flow, defined accordingly as the ratio of the heat flow to the heat dissipation rate, appears to be limited from above by the same factor $\frac{\max[T_1,T_2]}{|T_1-T_2|}$. There is however an important complementarity here: the upper bound for the efficiency of heat flow is reached exactly for that setup which is the worst one for the efficiency of information flow; see section VIII.

The information flow $\imath_{2\to 1}$ characterizes the predictability of (the future of) 1 (the first particle) from the viewpoint of 2 (the second particle). Another aspect of information processing in stochastic systems concerns the prediction by 1 of its own future, and the help provided by 2 in accomplishing this task. This is essentially the notion of Granger causality, first proposed in econometrics for quantifying causal relations between coupled stochastic processes [33, 34], and formalized in information-theoretic terms via the concept of transfer entropy [35, 36]. It appears that this type of information processing does not require any thermodynamic cost, i.e., the transfer entropy, in contrast to the information flow, does not necessarily nullify at equilibrium. Despite this, there are interesting relations between the transfer entropy and the information flow, which are partially uncovered in section IX.

The paper is organized as follows. Section II recalls the definitions of entropy and mutual information; this reminder is continued in Appendix A. Section III defines the class of models to be studied. In section IV we discuss in detail the information-theoretic definition of information flow. This discussion is continued in section V by relating the information flow to the entropy flow. The same section recalls the concepts of entropy production and heat dissipation. The efficiency of information flow is defined and studied in section VI. Many of the obtained results will be illustrated via an exactly solvable example of coupled harmonic oscillators; see section VII. Section VIII studies the efficiency of heat flow, while in section IX we compare the concept of information flow with the notion of transfer entropy. Our results are briefly summarized in section X. Some technical questions are relegated to the Appendices.

II. BASIC CONCEPTS: ENTROPY AND MUTUAL INFORMATION

The purpose of this section is to recall the definitions of entropy and mutual information.

A. Entropy

How can one quantify the information content of a random variable $X$ with realizations $x_1, \ldots, x_n$ and probabilities $p(x_1), \ldots, p(x_n)$? This is routinely done by means of the entropy

$$S[X] = -\sum_{k=1}^{n} p(x_k) \ln p(x_k). \tag{1}$$

$S[X]$ is well known in physics. Its information-theoretic meaning, which is based on the law of large numbers, is recalled in Appendix A. One can arrive at the same form (1) by imposing certain axioms, which are intuitively expected from the notion of uncertainty or information content [25, 26, 27].
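As a small numerical illustration of (1), the sketch below evaluates the entropy in nats for a few hand-picked distributions. The function name `shannon_entropy` and the example distributions are illustrative choices, not taken from the text.

```python
import math


def shannon_entropy(probs):
    """Entropy S[X] = -sum_k p(x_k) ln p(x_k) of Eq. (1), in nats."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p = 0 are skipped, since p ln p -> 0 as p -> 0.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


uniform = shannon_entropy([0.25] * 4)   # uniform distribution over n = 4 outcomes
certain = shannon_entropy([1.0, 0.0])   # one outcome is certain
biased = shannon_entropy([0.9, 0.1])    # biased binary variable
```

The uniform case gives ln 4, the certain case gives zero, and the biased binary variable lies strictly between zero and ln 2.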

For a continuous random variable $X$ the entropy converges to

$$S[X] = -\int dx\, P(x) \ln P(x) + \text{additive constant}, \tag{2}$$

where $P(x)$ is the probability density of $X$, and where the additive constant is normally irrelevant, since it cancels out when calculating any entropy differences. The quantity $e^{-\int dx\, P(x) \ln P(x)}$ characterizes the [effective] volume of the support of $P(x)$ [25, 26, 27].

B. Mutual Information

We shall consider the task of predicting some present state $Y(t)$ of one subsystem from the present state $X(t)$ of another subsystem and vice versa. For this purpose we introduce two dependent random variables $X$ and $Y$ with realizations $x_1, \ldots, x_n$ and $y_1, \ldots, y_n$ and joint probabilities $\{p(x_k, y_l)\}_{k,l=1}^{n}$.

Assume that we learned a realization $x_l$ of $X$. This allows us to redefine the probabilities of the various realizations of $Y$: $p(y_k) \to p(y_k|x_l)$, where $p(y_k|x_l)$ is the conditional probability. Due to this redefinition the entropy of $Y$ also changes: $S[Y] \to S[Y|x_l] = -\sum_{k=1}^{n} p(y_k|x_l) \ln p(y_k|x_l)$. Averaging $S[Y|x_l]$ over $p(x_l)$ we get

$$S[Y|X] = -\sum_{k,l=1}^{n} p(y_k, x_l) \ln p(y_k|x_l).$$

This conditional entropy characterizes the average residual entropy of $Y$: $S[Y|X] \le S[Y]$ (footnote 3). If there is a bijective function $f(\cdot)$ such that $Y = f(X)$ (and $X = f^{-1}(Y)$), then $S[Y|X] = 0$. We have $S[Y|X] = S[Y]$ for independent random variables $X$ and $Y$.

The mutual information $I[Y:X]$ between $X$ and $Y$ is that part of the entropy of $Y$ which is due to the missing knowledge about $X$. To define $I[Y:X]$ we subtract the residual entropy $S[Y|X]$ from the unconditional entropy $S[Y]$:

$$I[Y:X] = S[Y] - S[Y|X] \tag{3}$$

$$= \sum_{k,l=1}^{n} p(x_k, y_l) \ln \frac{p(x_k, y_l)}{p_X(x_k)\, p_Y(y_l)}, \tag{4}$$

where $p_X$ and $p_Y$ are the marginal probabilities $p_X(x_k) = \sum_{l=1}^{n} p(x_k, y_l)$ and $p_Y(y_l) = \sum_{k=1}^{n} p(x_k, y_l)$.

The mutual information is non-negative, $I[Y:X] \ge 0$, symmetric, $I[Y:X] = I[X:Y]$, and characterizes the entropic response of one variable to fluctuations of another. For two bijectively related random variables $I[X:Y] = S[X]$, and we return to the entropy. For independent random quantities $X$ and $Y$, $I[X:Y] = 0$. Conversely, $I[X:Y] = 0$ implies that $X$ and $Y$ are independent. Thus $I[X:Y]$ is a non-linear correlation function between $X$ and $Y$.

The information-theoretic meaning of the mutual information $I[X:Y]$ is recalled in Appendix A. $I[X:Y]$ is related to the information shared via a noisy channel with input $X$ and output $Y$, or, alternatively, with input $Y$ and output $X$.
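The listed properties of the mutual information can be checked numerically on small joint probability tables. The following is a minimal sketch of (4) using NumPy; the helper names and the three example tables are assumptions made for illustration only.

```python
import numpy as np


def mutual_information(joint):
    """I[X:Y] of Eq. (4) in nats, for a table joint[k, l] = p(x_k, y_l)."""
    joint = np.asarray(joint, dtype=float)
    px = joint.sum(axis=1, keepdims=True)   # marginal p_X(x_k)
    py = joint.sum(axis=0, keepdims=True)   # marginal p_Y(y_l)
    mask = joint > 0                        # entries with p = 0 contribute nothing
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px * py)[mask])))


def entropy(p):
    """Entropy of Eq. (1) in nats."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


independent = np.outer([0.3, 0.7], [0.6, 0.4])   # p(x, y) = p_X(x) p_Y(y)
bijective = np.diag([0.2, 0.5, 0.3])             # Y = f(X) with f one-to-one
noisy = np.array([[0.4, 0.1],
                  [0.1, 0.4]])                   # correlated, but not deterministic

i_independent = mutual_information(independent)
i_bijective = mutual_information(bijective)
i_noisy = mutual_information(noisy)
```

In this sketch the independent table gives zero (up to rounding), the bijective table reproduces $S[X]$, and the noisy table gives a strictly positive value that is unchanged when the roles of $X$ and $Y$ are swapped.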

For continuous random variables $X$ and $Y$ with the joint probability density $P(x, y)$, the mutual information reads

$$I[X:Y] = \int dx\, dy\, P(x, y) \ln \frac{P(x, y)}{P_X(x)\, P_Y(y)}, \tag{5}$$

where additive constants have cancelled after taking the difference in (3).

3 Note that generally $S[Y|x_l] \le S[Y]$ does not hold, i.e., it is not true that any single observation reduces the entropy. Such a reduction occurs only on average.

III. MODEL CLASS: TWO COUPLED BROWNIAN PARTICLES

Consider two Brownian particles with coordinates $x = (x_1, x_2)$ interacting with two independent thermal baths at temperatures $T_1$ and $T_2$, respectively, and subjected to a potential [Hamiltonian] $H(x)$. The corresponding time-dependent random variables will be denoted by $(X_1(t), X_2(t))$; their realizations are $(x_1, x_2)$.

The overdamped limit of the Brownian dynamics is defined by the following two conditions [46]: i) The characteristic relaxation time of the (real) momenta $m\dot{x}_i$ is much smaller than that of the coordinates. This condition is satisfied due to strong friction and/or small mass [46]. ii) One is interested in times which are much larger than the relaxation time of the momenta, but which can be much smaller than [or comparable to] the relaxation time of the coordinates. Under these conditions the dynamics of the system is described by the Langevin equations [46]:

$$0 = -\partial_i H - \Gamma_i \dot{x}_i + \eta_i(t), \tag{6}$$

$$\langle \eta_i(t)\, \eta_j(t') \rangle = 2\, \Gamma_i T_i\, \delta_{ij}\, \delta(t - t'), \qquad i, j = 1, 2,$$

where $\Gamma_i$ are the damping constants, which characterize the coupling of the particles to the respective baths, $\delta_{ij}$ is the Kronecker symbol, and where $\partial_i \equiv \partial/\partial x_i$. It is assumed that the relaxation time toward the total equilibrium (where $T_2 = T_1$) is much larger than all considered times; thus for our purposes $T_2$ and $T_1$ are constant parameters.
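To make (6) concrete, the following sketch integrates the two Langevin equations with a simple Euler-Maruyama scheme. It assumes a quadratic Hamiltonian $H = \frac{1}{2} x^{T} K x$ with a symmetric positive matrix $K$; the function `simulate`, the matrix `K` and all numerical parameters are illustrative assumptions rather than choices made in the text.

```python
import numpy as np


def simulate(T, Gamma, K, n_particles=20000, n_steps=3000, dt=0.01, seed=0):
    """Euler-Maruyama integration of Gamma_i dx_i/dt = -d_i H + eta_i, Eq. (6),
    for the assumed quadratic Hamiltonian H = x.K.x / 2.

    Returns an array of shape (n_particles, 2) with the final positions.
    """
    rng = np.random.default_rng(seed)
    T = np.asarray(T, dtype=float)
    Gamma = np.asarray(Gamma, dtype=float)
    x = np.zeros((n_particles, 2))
    # Noise strength follows from <eta_i eta_j> = 2 Gamma_i T_i delta_ij delta(t - t').
    noise_amp = np.sqrt(2.0 * T / Gamma * dt)
    for _ in range(n_steps):
        force = -x @ K                      # -grad H for symmetric K
        x = x + force / Gamma * dt + noise_amp * rng.standard_normal(x.shape)
    return x


K = np.array([[1.0, 0.3],
              [0.3, 1.5]])                  # Hessian of the assumed harmonic H
x_eq = simulate(T=[1.0, 1.0], Gamma=[1.0, 2.0], K=K)
cov_eq = np.cov(x_eq.T)                     # sample covariance of (x1, x2)
```

For equal bath temperatures $T_1 = T_2 = T$ the sample covariance should approach $T K^{-1}$, the covariance of the Gibbs distribution, up to sampling noise and a discretization error of order `dt`.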

Eq. (6) comes from the Newton equation (mass × acceleration = conservative force + friction force + random force) upon neglecting the mass × acceleration term due to strong friction and/or small mass [46]. Among many other realizations, Eq. (6) may physically be realized via two coupled RLC circuits. Then $\Gamma_i = R_i$ corresponds to the resistance of each circuit, $x_i$ is the charge, while the noise $\eta_i$ refers to the random electromotive force. The overdamped regime would refer here to small inductance $L_i$ and/or large resistance $R_i$, while the Hamiltonian part $H(x_1, x_2)$ collects the separate effects of the capacitances $C_1$ and $C_2$, as well as the capacitance-capacitance coupling, e.g., $H = \frac{x_1^2}{2C_1} + \frac{x_2^2}{2C_2} + \kappa x_1 x_2$ in the harmonic regime. This example will be studied in section VII.

Below we use the following shorthands:

$$\partial_t \equiv \partial/\partial t, \quad \partial_i \equiv \partial/\partial x_i, \quad x = (x_1, x_2), \quad dx = dx_1 dx_2, \quad y = (y_1, y_2), \quad dy = dy_1 dy_2. \tag{7}$$

The joint probability distribution $P(x_1, x_2; t)$ satisfies the Fokker-Planck equation [46]

$$\partial_t P(x; t) + \sum_{i=1}^{2} \partial_i J_i(x; t) = 0, \tag{8}$$

$$\Gamma_i J_i(x; t) = -P(x; t)\, \partial_i H(x) - T_i\, \partial_i P(x; t), \tag{9}$$

where $J_1$, $J_2$ are the currents of probability. Eq. (8) is supplemented by the standard boundary conditions

$$P(x_1, x_2; t) \to 0 \quad \text{when } x_1 \to \pm\infty \text{ or } x_2 \to \pm\infty. \tag{10}$$

A. Chapman-Kolmogorov equation.

To put our discussion in a more general context, let us recall that the process described by (8, 9) is Markovian and satisfies the Chapman-Kolmogorov equation [46]:

$$P(x; t + \tau) = \int dy\, P(x; t + \tau | y; t)\, P(y; t), \tag{11}$$

where for $\tau \to 0$ the conditional probability density $P(x; t + \tau | y; t)$ is written as [46]

$$P(x; t + \tau | y; t) = \delta(x_1 - y_1)\, \delta(x_2 - y_2) + \tau\, \delta(x_2 - y_2)\, G_1(x_1|y_1; x_2) + \tau\, \delta(x_1 - y_1)\, G_2(x_2|y_2; x_1) + O(\tau^2), \tag{12}$$

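where we have defined

$$G_1(x_1|y_1; x_2) \equiv \frac{1}{\Gamma_1}\, \partial_1 \left[ \delta(y_1 - x_1)\, \partial_1 H(x) + T_1\, \partial_1 \delta(y_1 - x_1) \right], \tag{13}$$

$$G_2(x_2|y_2; x_1) \equiv \frac{1}{\Gamma_2}\, \partial_2 \left[ \delta(y_2 - x_2)\, \partial_2 H(x) + T_2\, \partial_2 \delta(y_2 - x_2) \right]. \tag{14}$$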

Note from (12–14) that

$$P(x_1; t + \tau | y; t)\, P(x_2; t + \tau | y; t) = P(x_1, x_2; t + \tau | y; t) + O(\tau^2), \tag{15}$$

which means that the conditional dependence of $X_1(t + \tau)$ and $X_2(t + \tau)$, given $X_1(t)$ and $X_2(t)$, vanishes to second order in $\tau$.

Eqs. (8, 9) are recovered after substituting (12–14) into (11) and noting that $P(x; t + \tau) = P(x; t) + \tau\, \partial_t P(x; t) + O(\tau^2)$:

$$\partial_t P(x; t) = \int dy_1\, G_1(x_1|y_1; x_2)\, P(y_1, x_2; t) + \int dy_2\, G_2(x_2|y_2; x_1)\, P(x_1, y_2; t). \tag{16}$$

IV. INFORMATION-THEORETICAL DEFINITION OF INFORMATION FLOW

A. Task

We consider the gain (or loss) in the ability to predict the future of subsystem 1 from the present state of subsystem 2. This task can be quantified by the information flow $\imath_{2\to 1}$, which is defined via the time-shifted mutual information

$$\imath_{2\to 1}(t) = \partial_\tau I[X_1(t + \tau) : X_2(t)]\big|_{\tau \to +0} = \lim_{\tau \to +0} \frac{1}{\tau} \left( I[X_1(t + \tau) : X_2(t)] - I[X_1(t) : X_2(t)] \right). \tag{17}$$

$\imath_{1\to 2}(t)$ is defined analogously by interchanging 1 and 2.

Recall that the mutual information $I[X_1(t + \tau) : X_2(t)]$ quantifies (non-linear) statistical dependencies between $X_1(t + \tau)$ and $X_2(t)$, i.e., it quantifies the extent to which the present (at time $t$) of $X_2$ can predict the future of $X_1$. Thus a positive $\imath_{2\to 1}(t)$ means that, from the viewpoint of $X_2$, the future of $X_1$ is more predictable than the present of $X_1$. Thus, for $\imath_{2\to 1}(t) > 0$, 2 is gaining control over 1 (or 2 sends information to 1) (footnote 4). Likewise, $\imath_{2\to 1}(t) < 0$ means that 2 is losing control over 1, or that 1 gains autonomy with respect to 2. This is the meaning of negative information flow.

Noting from (5) that

$$I[X_1(t + \tau) : X_2(t)] = \int dx_1\, dy_2\, P_2(y_2; t)\, P_{1|2}(x_1; t + \tau | y_2; t) \ln \frac{P_{1|2}(x_1; t + \tau | y_2; t)}{P_1(x_1; t + \tau)},$$

we work out $\imath_{2\to 1}(t)$ with the help of (12–14):

$$\imath_{2\to 1} = \int dy\, dx_1\, G_1(x_1|y_1; y_2)\, P(y; t) \ln \frac{P_{1|2}(x_1|y_2; t)}{P_1(x_1; t)}. \tag{18}$$

Employing now (8, 9) and the boundary conditions (10) we get from (18)

$$\imath_{2\to 1}(t) = \int dx\, \ln \left[ \frac{P_1(x_1; t)}{P(x; t)} \right] \partial_1 J_1(x; t). \tag{19}$$

Parametrizing the Hamiltonian as

$$H(x_1, x_2) = H_1(x_1) + H_2(x_2) + H_{12}(x_1, x_2), \tag{20}$$

where $H_{12}(x_1, x_2)$ is the interaction Hamiltonian, we obtain from (8, 9, 19)

$$\imath_{2\to 1}(t) = \frac{1}{\Gamma_1} \int dx\, P(x; t) \left[ \partial_1 H_{12}(x) + T_1\, \partial_1 \ln P(x; t) \right] \partial_1 \ln \frac{P_1(x_1; t)}{P(x; t)}. \tag{21}$$

The time-shifted mutual information was employed for quantifying information flow in reaction-diffusion systems [29], neuronal ensembles [30], coupled map lattices [31], and ecological dynamics [32].

B. Basic features of information flow

1. As deduced from (19), the information flow is generally not symmetric,

$$\imath_{2\to 1}(t) \neq \imath_{1\to 2}(t),$$

but the symmetrized information flow (17) is equal to the rate of mutual information:

$$\imath_{2\to 1}(t) + \imath_{1\to 2}(t) = \frac{d}{dt} I[X_1(t) : X_2(t)]. \tag{22}$$

4 For $\imath_{2\to 1}(t) > 0$, 2 is like a chief sending orders to its subordinate 1; the fact that 1 will behave according to these orders makes its future more predictable from the viewpoint of the present of 2.

While the mutual information is symmetric with respect to interchanging its arguments, $I[X_1(t) : X_2(t)] = I[X_2(t) : X_1(t)]$, and quantifies correlations between two random variables, the information flow is capable of distinguishing the source from the recipient of information: $\imath_{2\to 1}(t) > 0$ means that 2 is the source of information, and 1 is its recipient.

For $\imath_{2\to 1}(t) > 0$ and $\imath_{1\to 2}(t) > 0$ we have a feedback regime, where 1 and 2 are both sources and recipients of information. Now the interaction between 1 and 2 builds up the mutual information $I[X_1(t) : X_2(t)]$; see (22). In contrast, for $\imath_{2\to 1}(t) < 0$ and $\imath_{1\to 2}(t) < 0$ both particles are detached from each other, and the mutual information naturally decays.

For $\imath_{2\to 1}(t) > 0$ and $\imath_{1\to 2}(t) < 0$ we have a one-way flow of information: 2 is the source and 1 is the recipient; likewise, for $\imath_{2\to 1}(t) < 0$ and $\imath_{1\to 2}(t) > 0$, 1 is the source and 2 is the recipient. A particular, but important, case of this situation is when the mutual information is conserved: $\frac{d}{dt} I[X_1(t) : X_2(t)] = 0$. This is realized in a stationary case; see below. Now, due to $\imath_{2\to 1}(t) + \imath_{1\to 2}(t) = 0$, the information behaves as a conserved resource (e.g., as energy): the amount of information lost by 2 is received by 1, and vice versa. Another example of one-way flow of information is $\imath_{2\to 1}(t) > 0$ and $\imath_{1\to 2}(t) \simeq 0$.

2. The information flow $\imath_{2\to 1}$ can be represented as [see (3, 17)]

$$\imath_{2\to 1} = \frac{dS[X_1(t)]}{dt} - \partial_\tau S[X_1(t + \tau) | X_2(t)]\big|_{\tau \to 0}. \tag{23}$$

The first term on the RHS of (23) is the change of the marginal entropy of $X_1$, while the second term is the change of the conditional entropy of $X_1$ with $X_2$ being frozen to the value $X_2(t)$. In other words, $\imath_{2\to 1}(t)$ is that part of the entropy change of $X_1$ (between $t$ and $t + \tau$) which exists due to fluctuations of $X_2(t)$; see section II B. This way of looking at the information flow is close to that suggested in [40]; see also [41, 42] for related works. Appendix B studies in more detail the operational meaning of the freezing operation.

3. Eq. (21) implies that the information flow $\imath_{2\to 1}(t)$ can be divided into two components, a force-driven part $\imath^{F}_{2\to 1}(t)$ and a bath-driven (or fluctuation-driven) part $\imath^{B}_{2\to 1}(t)$:

$$\imath_{2\to 1}(t) = \imath^{F}_{2\to 1}(t) + \imath^{B}_{2\to 1}(t),$$

$$\imath^{F}_{2\to 1}(t) \equiv \frac{1}{\Gamma_1} \int dx\, P\, [\partial_1 H_{12}]\, \partial_1 \ln \frac{P_1}{P}, \tag{24}$$

$$\imath^{B}_{2\to 1}(t) = -\frac{T_1}{\Gamma_1} \int dx\, P_1\, P_{2|1}\, [\partial_1 \ln P_{2|1}]^2, \tag{25}$$

where for simplicity we omitted all integration variables. The force-driven part $\imath^{F}_{2\to 1}(t)$ nullifies together with the force $-\partial_1 H_{12}$ acting from the second particle on the first particle. The fluctuation-driven part $\imath^{B}_{2\to 1}(t)$ nullifies together with the bath temperature $T_1$ (which means that the random force acting from the first bath is zero).

Note that although $\imath^{F}_{2\to 1}(t)$ is defined via the interaction Hamiltonian $H_{12}$, it does not suffer from the known ambiguity related to the definition of $H_{12}$. That is, redefining $H_{12}(x)$ via $H_{12}(x) \to H_{12}(x) + f_1(x_1) + f_2(x_2)$, where $f_1(x_1)$ and $f_2(x_2)$ are arbitrary functions, will not alter $\imath^{F}_{2\to 1}(t)$ (and will not alter $\imath_{2\to 1}(t)$, of course). Thus, changes in the separate Hamiltonians $H_1(x_1)$ and $H_2(x_2)$ that do not alter the probabilities (e.g., sudden changes) do not influence $\imath^{F}_{2\to 1}(t)$. In contrast, sudden changes of the interaction Hamiltonian will, in general, contribute to $\imath^{F}_{2\to 1}(t)$.

The bath-driven contribution $\imath^{B}_{2\to 1}(t)$ to the information flow is negative, which means that for 2 to be a source of information, i.e., for $\imath_{2\to 1}(t) > 0$, the force-driven part $\imath^{F}_{2\to 1}(t)$ should be sufficiently positive. In short, there is no transfer of information without force.

4. It is seen from (19) [or from (21)] that if the variables $X_1$ and $X_2$ are independent, i.e., $P(x; t) = P_1(x_1; t)\, P_2(x_2; t)$, the information flow $\imath_{2\to 1}$ nullifies:

$$\imath_{2\to 1}(t) = \imath_{1\to 2}(t) = 0 \quad \text{for } P = P_1 P_2. \tag{26}$$

In fact, both $\imath^{F}_{2\to 1}(t)$ and $\imath^{B}_{2\to 1}(t)$ nullify for $P = P_1 P_2$. If the two particles were interacting at times $t < t_{\rm switch}$, but the interaction Hamiltonian $H_{12}$ [see (20)] is switched off at $t = t_{\rm switch}$, the information flow $\imath_{2\to 1} = \imath^{B}_{2\to 1}(t)$ will in general be different from zero for times $t_{\rm relax} + t_{\rm switch} > t > t_{\rm switch}$, where $t_{\rm relax}$ is the relaxation time, since at these times the common probability will still be non-factorized, $P \neq P_1 P_2$. However, since now $\imath_{2\to 1}(t) = \imath^{B}_{2\to 1}(t) < 0$, the particles can only decorrelate from each other: neither of them can be a source of information for the other.

We note that $\imath^{B}_{2\to 1}(t) = -\frac{T_1}{\Gamma_1} \int dx_1\, P_1(x_1)\, F_1(x_1)$ in (25) is proportional to the average of the Fisher information $F_1(x_1)$ [27]:

$$F_1(x_1) \equiv \int dx_2\, P_{2|1}(x_2|x_1)\, [\partial_1 \ln P_{2|1}(x_2|x_1)]^2.$$

$1/F_1(x_1)$ is the minimal variance that can be reached during any unbiased estimation of $x_1$ from observing $x_2$ [27]. In the sense of estimation theory $F_1(x_1)$ quantifies the information about $x_1$ contained in $x_2$, since $F_1(x_1)$ is larger for those distributions $P_{2|1}(x_2|x_1)$ whose support is concentrated at $x_2 \simeq x_1$ (footnote 5). We conclude that once the interaction between 1 and 2 is switched off, 1 gets detached (diffuses away) from 2 at a rate equal to the diffusion constant $\frac{T_1}{\Gamma_1}$ times the average Fisher information about $x_1$ contained in $x_2$.

5 For various applications of the Fisher information in the physics of Brownian motion see [45].
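The Fisher information $F_1(x_1)$ can be evaluated numerically for a simple conditional density. The sketch below assumes a Gaussian $P_{2|1}(x_2|x_1)$ with mean $a x_1$ and standard deviation $s$, for which $F_1 = a^2/s^2$ independently of $x_1$; the Gaussian form and the parameters `a`, `s`, `h` are hypothetical choices made only for this illustration.

```python
import numpy as np


def fisher_information(x1, a=0.8, s=0.5, h=1e-5):
    """F_1(x1) = int dx2 P(x2|x1) [d/dx1 ln P(x2|x1)]^2
    for the assumed Gaussian P(x2|x1) with mean a*x1 and standard deviation s."""
    x2 = np.linspace(a * x1 - 10 * s, a * x1 + 10 * s, 20001)
    dx2 = x2[1] - x2[0]

    def log_p(x1_value):
        return -0.5 * ((x2 - a * x1_value) / s) ** 2 - 0.5 * np.log(2 * np.pi * s**2)

    # Central finite difference for the derivative with respect to x1.
    score = (log_p(x1 + h) - log_p(x1 - h)) / (2 * h)
    # Riemann sum over x2 on a grid covering ten standard deviations on each side.
    return float(np.sum(np.exp(log_p(x1)) * score**2) * dx2)


f_broad = fisher_information(0.3, s=0.5)     # wider conditional density
f_narrow = fisher_information(0.3, s=0.25)   # more concentrated conditional density
```

A more concentrated conditional density gives a larger Fisher information, in line with the statement above that $F_1(x_1)$ measures how much $x_2$ tells about $x_1$.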

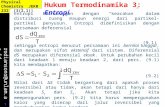

FIG. 1: Time-discretized version of the joint dynamical evolution.

5. To visualize the information flow, we time-discretize the two-particle system and consider the two time series $X_1(t)$, $X_2(t)$ with $t = 1, 2, \ldots$. We model the system dynamics by a first-order Markov process, this being the discrete analogue of a first-order differential equation. It is described by the graphical model depicted in Fig. 1.

The graph is indeed meant in the sense of a causal structure [28], where an arrow indicates direct causal influence. In particular, the arrows in Fig. 1 mean that $X_1(t + 1)$ and $X_2(t + 1)$ become independent after conditioning on $(X_1(t), X_2(t))$; see (15) in this context. Assume, for the moment, that the arrow between $X_2(t)$ and $X_1(t + 1)$ were missing, e.g., $X_2(t)$ is simply a passive observation of $X_1(t)$. Then $X_2(t)$ and $X_1(t + 1)$ are conditionally independent given $X_1(t)$. Now the data processing inequality [27] imposes negativity of the (time-discretized) information flow

$$I[X_1(t + 1) : X_2(t)] - I[X_1(t) : X_2(t)] \le 0, \tag{27}$$

because $X_1(t + 1)$ is obtained from $X_1(t)$ by a stochastic map (which can never increase the information about $X_2(t)$ without having access to this quantity). Expression (27) can, however, be strictly below zero if the bath dissipates some of the information. If (27) is positive there must be an arrow from $X_2(t)$ to $X_1(t + 1)$, i.e., there is information flowing from $X_2$ to $X_1$. However, the presence of such an arrow does not guarantee positivity of (27), because the amount of information dissipated by the bath can exceed the one provided by the arrow. We can nevertheless interpret $I[X_1(t + 1) : X_2(t)] - I[X_1(t) : X_2(t)]$ as the information flow in the sense of a net effect.
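The data processing inequality (27) can be illustrated with two binary variables. The following sketch assumes a hypothetical joint table for $(X_1(t), X_2(t))$ and a stochastic map that updates $X_1$ without looking at $X_2(t)$, which corresponds to the missing arrow from $X_2(t)$ to $X_1(t + 1)$; all numbers are illustrative.

```python
import numpy as np


def mutual_information(joint):
    """Mutual information in nats for a joint probability table."""
    joint = np.asarray(joint, dtype=float)
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    mask = joint > 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px * py)[mask])))


# joint_now[a, b] = p(X1(t) = a, X2(t) = b): the two binary variables are correlated.
joint_now = np.array([[0.40, 0.10],
                      [0.15, 0.35]])

# step[a_next, a] = p(X1(t+1) = a_next | X1(t) = a); it does not depend on X2(t).
step = np.array([[0.8, 0.3],
                 [0.2, 0.7]])

# p(X1(t+1) = a_next, X2(t) = b) = sum_a step[a_next, a] * joint_now[a, b]
joint_shifted = step @ joint_now

# Left-hand side of (27): time-discretized information flow from 2 to 1.
flow = mutual_information(joint_shifted) - mutual_information(joint_now)
```

Here `flow` is negative: without the arrow, the noisy update of $X_1$ can only erase information about $X_2(t)$.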

V. THERMODYNAMIC ASPECTS OF INFORMATION FLOW

A. Information flow nullifies in equilibrium

So far we have discussed the formal definition of information flow $\imath_{2\to 1}$. This definition relates $\imath_{2\to 1}$ to the concept of mutual information. It is however expected that there should be a more physical way of understanding $\imath_{2\to 1}$, since the concepts of entropy and entropy flow are defined and discussed in the thermodynamics of non-equilibrium systems [48, 49, 50, 53]. We now turn to this aspect.

Recall that the Fokker-Planck equation (8, 9) describes a system interacting with two thermal baths at different temperatures $T_1$ and $T_2$. If $T_1 = T_2 = T$ then the two-particle system relaxes with time to the Gibbs distribution with the common temperature $T = 1/\beta$ [46]:

$$P_{\rm eq}(x) = \frac{1}{Z}\, e^{-\beta H(x)}, \qquad Z = \int dx\, e^{-\beta H(x)}. \tag{28}$$

An important feature of the equilibrium probability distribution (28) is that the currents of probability (9) do not depend on time and explicitly nullify in that state:

$$J_1(x) = J_2(x) = 0. \tag{29}$$

This detailed balance feature, which is both necessary and sufficient for equilibrium, indicates that in the equilibrium state there is no transfer of any physical quantity, e.g., there is no transfer of energy (heat).

Eq. (19) implies that the same holds for $\imath_{2\to 1}$: in the equilibrium state there is no information flow,

$$\imath_{2\to 1} = \imath_{1\to 2} = 0.$$

In other words, the transfer of information should always be connected with a certain non-equilibrium situation. One may distinguish several types of such situations:

i) Non-stationary (transient) states, where the joint distribution $P(x_1, x_2; t)$ is time-dependent.

ii) Stationary but non-equilibrium states, realized for $T_1 \neq T_2$. Now the probability currents $J_1$ and $J_2$ are not zero (only $\partial_1 J_1 + \partial_2 J_2 = 0$ holds), and neither are the information transfer rates $\imath_{2\to 1}$ and $\imath_{1\to 2}$. However, since the state is stationary, their sum nullifies due to (22):

$$\imath_{2\to 1} + \imath_{1\to 2} = 0. \tag{30}$$

This indicates a one-way flow of information. Below we clarify how this flow relates to the temperature difference.

iii) Non-equilibrium states can be maintained externally by time-varying conservative forces, or, alternatively, by time-independent non-conservative forces accompanied by cyclic boundary conditions. Here we do not consider this type of non-equilibrium.
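Case ii) can be made explicit for a harmonic Hamiltonian, where the stationary state is Gaussian. The sketch below assumes $H = \frac{1}{2} x^{T} K x$, so that the mean obeys $\dot{x} = A x$ with $A = -\Gamma^{-1} K$ and the stationary covariance $\Sigma$ solves $A\Sigma + \Sigma A^{T} + 2D = 0$ with $D = {\rm diag}(T_i/\Gamma_i)$. Using the Gaussian formula $I = -\frac{1}{2} \ln(1 - \rho^2)$ in the definition (17) gives $\imath_{2\to 1} = \Sigma_{12} (A\Sigma)_{12} / \det\Sigma$ and $\imath_{1\to 2} = \Sigma_{12} (A\Sigma)_{21} / \det\Sigma$. These closed forms are worked out here for illustration and are not quoted from the text; the matrix `K` and the parameter values are assumptions.

```python
import numpy as np


def stationary_covariance(K, Gamma, T):
    """Solve A S + S A^T + 2 D = 0 for the stationary covariance S."""
    Gamma = np.asarray(Gamma, dtype=float)
    T = np.asarray(T, dtype=float)
    A = -K / Gamma[:, None]                  # drift matrix, A = -Gamma^{-1} K
    D = np.diag(T / Gamma)                   # diffusion matrix
    eye = np.eye(2)
    # Row-major vec form: (A kron I + I kron A) vec(S) = -vec(2 D)
    lhs = np.kron(A, eye) + np.kron(eye, A)
    S = np.linalg.solve(lhs, -2.0 * D.reshape(-1)).reshape(2, 2)
    return A, S


def information_flows(K, Gamma, T):
    """Stationary Gaussian information flows (i_21, i_12) from definition (17)."""
    A, S = stationary_covariance(K, Gamma, T)
    AS = A @ S
    det = np.linalg.det(S)
    return S[0, 1] * AS[0, 1] / det, S[0, 1] * AS[1, 0] / det


K = np.array([[1.0, 0.3],
              [0.3, 1.5]])                   # Hessian of the assumed harmonic H
i21_eq, i12_eq = information_flows(K, Gamma=[1.0, 2.0], T=[2.0, 2.0])
i21_hot2, i12_hot2 = information_flows(K, Gamma=[1.0, 2.0], T=[1.0, 3.0])
```

With equal temperatures both flows vanish, as stated for equilibrium. With $T_2 > T_1$ the flow $\imath_{2\to 1}$ is positive and $\imath_{1\to 2} = -\imath_{2\to 1}$, which is the one-way flow (30) directed from the hotter to the colder particle.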

B. Entropy production and heat dissipation.

A non-equilibrium state shows a tendency towards equilibrium, which is expressed, via the second law, by the entropy production of the overall system (in our case the Brownian particles plus their thermal baths). Since for the model (6, 8, 9) the baths are in equilibrium and the cause of non-equilibrium is related to the Brownian particles, the overall entropy production can be expressed via the variables pertaining to the Brownian particles [48, 49, 50]. Recall (16) and define for $i = 1, 2$

$$\frac{d_i Q}{dt} = -\int dx\, H(x)\, \partial_i J_i(x; t), \tag{31}$$

$$\frac{d_i S}{dt} = \int dx\, [\ln P(x; t)]\, \partial_i J_i(x; t), \tag{32}$$

and note that these quantities satisfy

$$\ell_i = \frac{d_i S}{dt} - \beta_i \frac{d_i Q}{dt} \tag{33}$$

$$= \beta_i \Gamma_i \int dx\, \frac{J_i^2(x; t)}{P(x; t)} \ge 0, \tag{34}$$

where $\beta_i = 1/T_i$, and where $\ell_1 = \ell_2 = 0$ holds in equilibrium only, where $J_1 = J_2 = 0$; see (29).

Our discussion in section III A implies that d_iQ/dt is the change of energy of the two-particle system due to the dynamics of x_i. Since in the overdamped regime this dynamics is driven by the bath at temperature T_i, we see that d_iQ/dt is the heat received by the two-particle system from the bath at temperature T_i [50, 53]. Thus the energy (heat) received by the bath is −d_iQ/dt. Likewise, d_iS/dt is the change of entropy of the two-particle system due to the dynamics of x_i. Then (34) is the local version of the Clausius inequality [50, 53], which implies that ℓ_i is the [local] entropy produced per unit time due to the interaction with the bath at temperature T_i, while

$$ \ell = \ell_1 + \ell_2 = \frac{dS}{dt} - \beta_1 \frac{d_1 Q}{dt} - \beta_2 \frac{d_2 Q}{dt} \tag{35} $$

is the total entropy produced per unit of time in the overall system: two equilibrium baths plus two Brownian particles [46, 48, 50, 53].

Noting our discussion in Appendix C one can see that ℓ_i/(β_iΓ_i) is equal to the space-average ∫dx P(x; t) v_i²(x; t), where v_i(x; t) is the coarse-grained velocity; see (C4, C4) [footnote 6]. Similarly, integrating (31) by parts one notes that −d_iQ/dt amounts to the space-averaged work done by the potential force: −d_iQ/dt = ∫dx P(x; t) v_i(x; t) ∂_iH(x) [footnote 7].

Note that in general ℓ_i (and thus ℓ) are non-zero for the non-equilibrium stationary state realized for T1 ≠ T2, since then J_i(x) ≠ 0. This is consistent with the interpretation of these states as “metastable” non-equilibrium states, where some heat flows between the two baths, but the temperatures T_i do not change in time due to the macroscopic size of the baths (these temperatures would change for very large times, which are, however, beyond the time-scales considered here).

Likewise, T_iℓ_i is the heat dissipated per unit time due to the interaction with the bath at temperature T_i, while T1ℓ1 + T2ℓ2 is the total dissipated energy (heat) per unit time [53].

C. Equivalence between flow of mutual information and flow of entropy

The picture that emerges from the above consideration is as follows. The entropy is produced with the rate ℓ1 somewhere at the interface between the first Brownian particle and its bath. Eq. (34) expresses the fact that d1S/dt is the rate by which a part of the produced entropy flows into the two-particle system. The rest of the produced entropy goes to the bath with the rate −β1 d1Q/dt. This is consistent with the conservation of energy and the fact that the bath itself is in equilibrium; then −d1Q/dt is the rate by which heat goes to the bath, and after dividing by T1 (since the bath is in equilibrium) this becomes the rate with which the bath receives entropy.

The corresponding argumentation can be repeated for the second particle and its thermal bath.

The thermodynamic definition of entropy flow eventually reads

$$ \imath_{2\to 1} = \frac{dS_1}{dt} - \frac{d_1 S}{dt}. \tag{36} $$

Once d1S/dt is the entropy entering into the two-particle system via the first particle, then subtracting d1S/dt from the entropy rate dS1/dt of the first particle itself, we get the entropy flow from the second particle to the first one.

It should now be clear that this definition of entropy flow is just equivalent to the definition of information flow (17). Eq. (36) can also be written as

$$ \imath_{2\to 1} = \frac{d_1 S_1}{dt} + \frac{d_1 S_2}{dt} - \frac{d_1 S}{dt} = \frac{d_1 S_1}{dt} - \frac{d_1 S}{dt}, \tag{37} $$

making clear again that ı2→1 is the change of mutual information due to dynamics of the first particle.

Footnote 6: Note from (34) that for T1 = T2, where the non-stationarity is the sole cause of non-equilibrium, the heat dissipation, or the total entropy production ℓ times T1 = T2 = T, is equal to the negative rate of free energy [46]: −Tℓ = dF/dt ≡ (d/dt) ∫dx [H(x) + T ln P(x; t)] P(x; t). Note that the free energy here is defined with respect to the bath temperature T and that P(x; t) is an arbitrary non-equilibrium distribution that relaxes to the equilibrium: Peq(x) ∝ exp[−βH(x)]. The difference F − Feq between the non-equilibrium and equilibrium free energy is the maximal work that can be extracted from the non-equilibrium system in contact with the thermal bath [20].

Footnote 7: A similar argument for the physical meaning of d_iQ/dt can be given via stochastic energetics [56].

VI. EFFICIENCY OF INFORMATION FLOW

Once it is realized that non-zero information transfer is possible only out of equilibrium, the existence of such a transfer is related to entropy production. This is an entropic cost of the information transfer. One can define a dimensionless ratio:

$$ \eta_{2\to 1} = \frac{\imath_{2\to 1}}{\ell}, \tag{38} $$

which is the desired output (= information transfer) over the irreversibility cost (= total entropy production). This quantity characterizes the efficiency of information transfer. A large η is desirable, since it gives a larger information transfer rate at a lesser cost.

Note that η2→1 is more similar to the coefficient of performance of thermal refrigerators (which is also defined as the useful output, the heat extracted from a colder body, over the cost, the work) than to the efficiency of heat engines. The latter is defined as the useful output over the resource entered into the engine. We shall still call η2→1 efficiency, but this distinction is to be kept in mind.

Below in several different situations we shall establish upper bounds on η. These determine the irreversibility (entropy) cost of information transfer. The usage of the efficiency for informational processes was advocated in [51].

A. Stationary case

In the stationary two-temperature scenario the joint probability P(x1, x2) and the probability currents J1(x1, x2) and J2(x1, x2) do not depend on time. Thus many observables (e.g., the average energy of the two Brownian particles, their entropy, entropies of separate particles) do not depend on time either.

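Employing (35), ı2→1 = −d1S/dt = d2S/dt and (33) we get

$$ \ell = (\beta_2 - \beta_1)\frac{d_1 Q}{dt}, \qquad T_2\, \imath_{2\to 1} + \frac{\ell}{\beta_2 - \beta_1} = T_2 \ell_2 \ge 0, $$

$$ \imath_{2\to 1} = \frac{T_1\, \ell}{T_2 - T_1} + \ell_2. \tag{39} $$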

After interchanging the indices 1 and 2 we get the analogous equation for ı1→2. Recalling that (30) holds in the stationary state, we get

$$ \imath_{2\to 1} = \frac{T_2\, \ell}{T_2 - T_1} - \ell_1. \tag{40} $$

First of all, (39) implies that ı2→1 ≥ 0 for T2 ≥ T1, which means that in the stationary two-temperature scenario the information (together with heat) flows from higher to lower temperature.
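The intermediate steps behind (39) and (40) can be spelled out as follows. This is a sketch that uses only (33), (35) and stationarity; the statement that the average energy is constant, so that the two heat rates cancel, is the one step added here.

```latex
\begin{align}
&\text{stationarity:}\quad \frac{dS}{dt} = 0, \qquad
  \frac{d_1 Q}{dt} + \frac{d_2 Q}{dt} = 0
  \;\;\Longrightarrow\;\;
  \ell = -\beta_1 \frac{d_1 Q}{dt} - \beta_2 \frac{d_2 Q}{dt}
       = (\beta_2 - \beta_1)\frac{d_1 Q}{dt}, \\
&\ell_2 = \frac{d_2 S}{dt} - \beta_2 \frac{d_2 Q}{dt}
        = \imath_{2\to 1} + \beta_2 \frac{d_1 Q}{dt}
        = \imath_{2\to 1} + \frac{\beta_2\, \ell}{\beta_2 - \beta_1}, \\
&\frac{\beta_2}{\beta_2 - \beta_1} = \frac{T_1}{T_1 - T_2}
  \;\;\Longrightarrow\;\;
  \imath_{2\to 1} = \frac{T_1\, \ell}{T_2 - T_1} + \ell_2
                  = \frac{T_2\, \ell}{T_2 - T_1} - \ell_1 ,
\end{align}
```

where the last equality follows from ℓ2 = ℓ − ℓ1.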

For the efficiency (38) we get from (39, 40):

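$$ \eta_{2\to 1} = \frac{T_1}{T_2 - T_1} + \frac{\ell_2}{\ell_1 + \ell_2} \tag{41} $$

$$ \phantom{\eta_{2\to 1}} = \frac{T_2}{T_2 - T_1} - \frac{\ell_1}{\ell_1 + \ell_2}. \tag{42} $$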

This then implies [since ℓ1 ≥ 0, ℓ2 ≥ 0]

$$ \frac{T_1}{T_2 - T_1} \le \eta_{2\to 1} \le \frac{T_2}{T_2 - T_1}. \tag{43} $$

For T2 > T1, η2→1 is positive and is bounded from above by T2/(T2 − T1), which means that in the stationary state the ratio of the information transfer (rate) over the entropy production (rate) is bounded.
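As a small numerical illustration of (41)–(43), the following sketch evaluates the efficiency from given partial entropy productions and compares it with the two bounds. The function names and the sample numbers are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
def efficiency_bounds(T1, T2):
    """Lower and upper bounds of Eq. (43) for eta_{2->1}."""
    if T1 == T2:
        raise ValueError("the bounds diverge for T1 == T2")
    return T1 / (T2 - T1), T2 / (T2 - T1)


def stationary_efficiency(l1, l2, T1, T2):
    """Efficiency eta_{2->1} from Eq. (41), given partial entropy productions l1, l2 >= 0."""
    if l1 < 0 or l2 < 0 or l1 + l2 == 0:
        raise ValueError("need l1, l2 >= 0 with l1 + l2 > 0")
    if T1 == T2:
        raise ValueError("Eq. (41) is singular for T1 == T2")
    return T1 / (T2 - T1) + l2 / (l1 + l2)


# Illustrative values only (assumed, not from the paper).
T1, T2 = 1.0, 2.0
l1, l2 = 0.3, 0.7
eta = stationary_efficiency(l1, l2, T1, T2)
lower, upper = efficiency_bounds(T1, T2)
print(lower, eta, upper)
```

Setting l1 = 0 reproduces the upper bound, which is the situation discussed for the adiabatic limit.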

It is important to note from (43) that for T2 approaching T1 from above, T2 → T1, the efficiency of information transfer tends to plus infinity, since in this reversible limit the entropy production, which should be always positive, naturally scales as (T1 − T2)², while the information flow scales as T2 − T1. Thus a very slow flow of information can be accompanied by very little entropy production such that the efficiency becomes very large. A similar argument led some authors to conclude that there is no fundamental cost for the information transfer at all [12, 13, 16]. Our analysis makes clear that this interpretation would be misleading. It is more appropriate to say that in the reversible limit the thermodynamic cost, while becoming less restrictive [since the efficiency can be very large], is certainly still there, because the difference T2 − T1 is, after all, always finite; otherwise the very information flow would vanish.

It remains to stress that the relations (39–43) are general and do not depend on the details of the considered Brownian system. They will hold for any bi-partite Markovian system which satisfies the master equation (16) and the local formulations of the second law (33, 34).

B. Reachability of the upper efficiency bound

In the remaining part of this section we show that the upper bound (43) for the efficiency of information flow is reached in a certain class of Brownian systems satisfying time-scale separation.

1. The stationary probability in the adiabatic case.

For T1 = T2, the stationary probability distribution P(x) of the two-particle system (8, 9) is Gibbsian: P(x) ∝ e^{−β1H(x)}. For non-equal temperatures T1 ≠ T2 a general expression for this stationary probability can be derived in the adiabatic situation, where x2 changes in time much slower than x1 [52, 53, 54, 55]. This is ensured by

ε ≡ Γ1/Γ2 ≪ 1. (44)

Below we present a heuristic derivation of the stationary distribution P(x) in the order ε⁰ [52, 53, 54, 55]; a more systematic presentation, as well as higher-order corrections in ε, are discussed in [53].

On the time scales relevant for x1, the variable x2 is fixed, and the conditional probability P1|2(x1|x2) is Gibbsian [β1 = 1/T1]:

$$ P_{1|2}(x_1|x_2) = \frac{e^{-\beta_1 H(x)}}{Z(x_2)}, \qquad Z(x_2) = \int dx_1\, e^{-\beta_1 H(x)}. \tag{45} $$

The stationary probability P2(x2) for the slow variable is found by noting that on the times relevant for x2, x1 is already in the conditional steady state. Thus the force ∂2H(x1, x2) acting on x2 can be averaged over P1|2(x1|x2):

$$ \int dx_1\, \partial_2 H(x)\, P_{1|2}(x_1|x_2) = \partial_2 F(x_2), $$

where F(x2) = −T1 ln Z(x2) is the conditional free energy. Once the averaged dynamics of x2 is governed by the effective potential energy F(x2), the stationary P2(x2) is Gibbsian at the inverse temperature β2 = 1/T2:

$$ P_2(x_2) = \frac{e^{-\beta_2 F(x_2)}}{\mathcal{Z}}, \qquad \mathcal{Z} = \int dx_2\, e^{-\beta_2 F(x_2)}. $$

Thus the joint stationary probability is

$$ P(x) = P_2(x_2)\, P_{1|2}(x_1|x_2) = \frac{1}{\mathcal{Z}}\, e^{(\beta_1 - \beta_2) F(x_2) - \beta_1 H(x)}. \tag{46} $$
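As a worked example of this construction, consider the quadratic Hamiltonian H(x) = a1x1²/2 + a2x2²/2 + bx1x2 that is introduced later in (55). The Gaussian integral over the fast variable can be done in closed form; the sketch below assumes a1 > 0 and a1a2 > b², as required by (56).

```latex
\begin{align}
Z(x_2) &= \int dx_1\, e^{-\beta_1 H(x)}
        = \sqrt{\frac{2\pi T_1}{a_1}}\;
          \exp\!\Bigl[-\frac{\beta_1}{2}\Bigl(a_2 - \frac{b^2}{a_1}\Bigr) x_2^2\Bigr], \\
F(x_2) &= -T_1 \ln Z(x_2)
        = \frac{1}{2}\Bigl(a_2 - \frac{b^2}{a_1}\Bigr) x_2^2 + \text{const}, \\
P_2(x_2) &\propto \exp\!\Bigl[-\frac{\beta_2\,(a_1 a_2 - b^2)}{2 a_1}\, x_2^2\Bigr],
\qquad \langle x_2^2\rangle = \frac{a_1 T_2}{a_1 a_2 - b^2}.
\end{align}
```

The slow variable thus fluctuates at its own temperature T2 in a potential renormalized by the fast one. The same variance follows from the exact stationary result (62) in the limit γ2/γ1 = Γ1/Γ2 → 0.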

For further calculations we shall need the stationary current J2(x), which reads from (9, 20, 46)

$$ J_2(x) = \frac{T_1 - T_2}{T_1 \Gamma_2}\, P(x)\, \phi_{1\to 2}(x), \tag{47} $$

$$ \phi_{1\to 2}(x) \equiv -\partial_2 H_{12}(x) + \int dy\, P_{1|2}(y|x_2)\, \partial_2 H_{12}(y, x_2), \tag{48} $$

where φ1→2(x) is the force acting on 2 from 1 minus its average over the fast x1. Both J1(x) and J2(x) are of the same order O(ε/Γ1) = O(1/Γ2).

Let us describe how to obtain J1(x) (we shall need below at least an order of magnitude estimate of J1). Upon substituting (46) into (9) we find that the current J1(x) vanishes. This means that J1(x) has to be searched for to first order in ε = Γ1/Γ2. Assuming for the corrected probability P̃(x) = P(x)[1 − εA(x)] + O(ε²) we get

$$ J_1(x) = \varepsilon\, \frac{T_1}{\Gamma_1}\, P(x)\, \partial_1 A(x), \tag{49} $$

where A(x) does not depend on ε and is to be found from the stationarity equation ∂1J1 + ∂2J2 = 0. Concrete expressions for A(x) are presented in [53].

2. Entropy production and heat dissipation in the adiabatic stationary case.

Using (34) together with (47) and (49) we get for the partial entropy productions:

$$ \ell_1 = \varepsilon\, \frac{T_1}{\Gamma_2} \int dx\, [\partial_1 A(x)]^2\, P(x), \tag{50} $$

$$ \ell_2 = \frac{(T_1 - T_2)^2}{T_2 T_1^2 \Gamma_2} \int dx\, \phi_{1\to 2}^2(x)\, P(x). \tag{51} $$

Note that ℓ1 contains an additional small factor ε as compared to ℓ2. This is natural, since the fast system x1 is in a local thermal equilibrium. Thus in the considered order ε⁰ the overall entropy production ℓ is dominated by the entropy production of the slow sub-system:

ℓ1 = 0, ℓ = ℓ2. (52)

Thus the heat dissipation is simply T2ℓ = T2ℓ2. The physical meaning of ℓ1 = 0 is that the fast system does not produce entropy [and does not dissipate heat], since on its fast characteristic times it sees the slow variable as a frozen [not fluctuating] field. Thus the fast system remains in local equilibrium.

3. Efficiency of information transfer in the adiabatic stationary case.

Employing (52) we can immediately see from (42) that the efficiency in the considered order of ε reads:

$$ \eta_{2\to 1} = \frac{T_2}{T_2 - T_1}. \tag{53} $$

Thus, the efficiency reaches its maximal value when the hotter system is the slower one.

Recall that in the adiabatic limit the information flow is of order O(1/Γ2), i.e., it is small on the characteristic time scale of the fast particle, but sizable on the characteristic time of the slow particle. In more detail, using (46, 47) we get for the information flow ı2→1 from the slow to the fast particle:

$$ \imath_{2\to 1} = \frac{T_2 - T_1}{T_1^2 \Gamma_2} \int dx\, \phi_{1\to 2}^2(x)\, P(x). \tag{54} $$

The reachability of the upper bound for the efficiency η2→1 appears to resemble the reachability of the optimal Carnot efficiency for heat engines. However, for heat engines the Carnot efficiency is normally reached for processes that are much slower than any internal characteristic time of the engine working medium. In contrast, the information flow in (53) is sizable on the time-scale Γ2 (which is one of the internal time-scales).

VII. EXACTLY SOLVABLE MODEL: TWO COUPLED HARMONIC OSCILLATORS

We exemplify the obtained results by the exactly solvable model of two coupled harmonic oscillators. We shall also employ this model to check whether the upper bound (43) may hold in the non-stationary situation.

The Hamiltonian [or potential energy] is given as

$$ H(x) = \frac{a_1}{2} x_1^2 + \frac{a_2}{2} x_2^2 + b\, x_1 x_2, \tag{55} $$

where the constants a1, a2 and b have to satisfy

$$ a_1 > 0, \qquad a_2 > 0, \qquad a_1 a_2 > b^2, \tag{56} $$

for the Hamiltonian to be positive definite.

Let us assume that the probability of the two-particle system is Gaussian [this holds for the stationary probability as seen below]

$$ -\ln P(x) = \frac{A_1}{2} x_1^2 + \frac{A_2}{2} x_2^2 + B\, x_1 x_2 + \text{constant}. \tag{57} $$

Here we did not specify the irrelevant normalization constant, and the constants A1, A2 and B read

$$ \begin{pmatrix} A_1 & B \\ B & A_2 \end{pmatrix}^{-1} = \begin{pmatrix} \langle x_1^2\rangle & \langle x_1 x_2\rangle \\ \langle x_1 x_2\rangle & \langle x_2^2\rangle \end{pmatrix} \equiv \begin{pmatrix} \sigma_{11} & \sigma_{12} \\ \sigma_{12} & \sigma_{22} \end{pmatrix}, \tag{58} $$

where ⟨. . .⟩ is the average over the probability distribution (57). All the involved parameters (A1, A2 and B) can be in general time-dependent. Note that in (57) we assumed ⟨x1⟩ = ⟨x2⟩ = 0.

For the Hamiltonian (55) and the probability (57) the information transfer reads from (21):

$$ \imath_{2\to 1} = \frac{\sigma_{12}\,(T_1 B - b)}{\Gamma_1 \sigma_{11}}. \tag{59} $$

Likewise, we get for the local entropy production (34):

$$ \ell_1 = \frac{T_1}{\Gamma_1}\Bigl[\, \sigma_{11}(\beta_1 a_1 - A_1)^2 + \sigma_{22}(\beta_1 b - B)^2 + 2\sigma_{12}(\beta_1 a_1 - A_1)(\beta_1 b - B) \,\Bigr], \tag{60} $$

where the information transfer ı1→2 and the entropy production ℓ2 are obtained from (59) and (60), respectively, by interchanging the indices 1 and 2.

A. Stationary case.

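Provided that the stability conditions (56) hold, any initial probability of the two-particle system relaxes to the time-independent Gaussian stationary probability (57) [46]. The averages ⟨x1²⟩, ⟨x1x2⟩ and ⟨x2²⟩ are deduced from the stationarity equation ∂1J1(x) + ∂2J2(x) = 0:

$$ \langle x_1^2\rangle = \frac{a_2 T_1 (a_1\gamma_1 + a_2\gamma_2) + b^2 \gamma_1 (T_2 - T_1)}{(a_1 a_2 - b^2)(a_1\gamma_1 + a_2\gamma_2)}, \tag{61} $$

$$ \langle x_2^2\rangle = \frac{a_1 T_2 (a_1\gamma_1 + a_2\gamma_2) + b^2 \gamma_2 (T_1 - T_2)}{(a_1 a_2 - b^2)(a_1\gamma_1 + a_2\gamma_2)}, \tag{62} $$

$$ \langle x_1 x_2\rangle = -\frac{b\,(a_2\gamma_2 T_1 + a_1\gamma_1 T_2)}{(a_1 a_2 - b^2)(a_1\gamma_1 + a_2\gamma_2)}, \tag{63} $$

$$ \langle x_1^2\rangle\langle x_2^2\rangle - \langle x_1 x_2\rangle^2 = \frac{b^2 \gamma_1\gamma_2 (T_1 - T_2)^2 + (a_1\gamma_1 + a_2\gamma_2)^2\, T_1 T_2}{(a_1 a_2 - b^2)(a_1\gamma_1 + a_2\gamma_2)^2}, \tag{64} $$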

where

γ1 ≡ 1/Γ1, γ2 ≡ 1/Γ2,

and where the conditions ⟨x1⟩ = ⟨x2⟩ = 0 hold automatically. Let us introduce the following dimensionless parameters:

$$ \varphi = \frac{\gamma_1 a_1}{\gamma_2 a_2} = \frac{\Gamma_2 a_1}{\Gamma_1 a_2}, \qquad \xi = \frac{T_1}{T_2}, \qquad \kappa = \frac{b^2}{a_1 a_2}, \tag{65} $$

where ϕ is the ratio of time-scales, while κ characterizes the interaction strength; note that 0 < κ < 1 due to (56).
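The closed forms (61)–(64) can be cross-checked numerically. The sketch below assumes that the model (6, 8), which is defined outside this section, is the overdamped Langevin dynamics Γ_i dx_i/dt = −∂_iH + noise with noise strength 2Γ_iT_i; under that assumption the stationary covariance σ solves the Lyapunov equation Mσ + σMᵀ = D with M_ij = γ_i ∂_i∂_jH and D = diag(2γ1T1, 2γ2T2). The function names and parameter values are hypothetical.

```python
import numpy as np


def covariance_closed_form(a1, a2, b, g1, g2, T1, T2):
    """Stationary second moments from Eqs. (61)-(63); g1, g2 denote gamma_1, gamma_2."""
    s = a1 * g1 + a2 * g2
    d = (a1 * a2 - b**2) * s
    s11 = (a2 * T1 * s + b**2 * g1 * (T2 - T1)) / d
    s22 = (a1 * T2 * s + b**2 * g2 * (T1 - T2)) / d
    s12 = -b * (a2 * g2 * T1 + a1 * g1 * T2) / d
    return np.array([[s11, s12], [s12, s22]])


def covariance_lyapunov(a1, a2, b, g1, g2, T1, T2):
    """Solve M s + s M^T = D for the 2x2 covariance s (assumed Langevin model)."""
    M = np.array([[g1 * a1, g1 * b], [g2 * b, g2 * a2]])
    D = np.diag([2.0 * g1 * T1, 2.0 * g2 * T2])
    eye = np.eye(2)
    # Row-major vectorisation: vec(M s) = (M kron I) vec(s), vec(s M^T) = (I kron M) vec(s)
    L = np.kron(M, eye) + np.kron(eye, M)
    return np.linalg.solve(L, D.reshape(-1)).reshape(2, 2)


# Illustrative parameters (assumed); they satisfy the stability conditions (56).
params = dict(a1=2.0, a2=1.5, b=0.7, g1=0.5, g2=2.0, T1=1.0, T2=3.0)
sigma_formula = covariance_closed_form(**params)
sigma_numeric = covariance_lyapunov(**params)
print(np.max(np.abs(sigma_formula - sigma_numeric)))
```

The determinant of the resulting matrix can be compared with (64) in the same way.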

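Using (61–65) together with (59) and (60), we get for the information flow, the total entropy production, and the efficiency

$$ \imath_{2\to 1} = \frac{a_2}{\Gamma_2}\, \frac{\kappa\varphi\,(1 - \xi)(\xi + \varphi)}{\kappa\varphi(1 - \xi)^2 + \xi(1 + \varphi)^2}, \tag{66} $$

$$ \ell = \frac{a_2}{\Gamma_2}\, \frac{(1 - \xi)^2}{\xi}\, \frac{\kappa\varphi}{1 + \varphi}, \tag{67} $$

$$ \eta_{2\to 1} = \frac{\xi}{1 - \xi}\, \frac{(\xi + \varphi)(1 + \varphi)}{\kappa\varphi(1 - \xi)^2 + \xi(1 + \varphi)^2}. \tag{68} $$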

Let us for simplicity assume that ξ < 1, i.e., T2 > T1. Now ı2→1 > 0. It is seen that both ı2→1 and ℓ are monotonically increasing functions of ϕ. Thus both the information transfer and the overall entropy production are maximal when the system attached to the hotter bath at the temperature T2 is slower [the same holds for the overall heat dissipation]. The efficiency η2→1 = ı2→1/ℓ also reaches its maximal value (53) in the same limit ϕ → ∞; see (68).

The behaviour with respect to the dimensionless coupling κ is different. Now ı2→1 and ℓ are again monotonically increasing functions of κ. They are both maximal for κ → 1, which (as seen from (61, 62, 63)) means at the instability threshold, where the fluctuations are very large. However, the efficiency η2→1 is a monotonically decreasing function of κ. It is maximal (as a function of κ) in the almost uncoupled limit κ → 0. Thus, as far as the behaviour with respect to the coupling constant is concerned, there is a complementarity between maximizing the information flow and maximizing its efficiency.
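The dimensionless expressions (66)–(68) can be compared with a direct evaluation of (59) and (60) on the stationary covariance (61)–(63). The following sketch does this for one parameter set and also probes the limit ϕ → ∞; all function names and numbers are hypothetical choices for illustration.

```python
def direct_flow_and_production(a1, a2, b, G1, G2, T1, T2):
    """Evaluate Eqs. (59), (60) on the stationary covariance (61)-(63)."""
    g1, g2 = 1.0 / G1, 1.0 / G2
    s = a1 * g1 + a2 * g2
    d = (a1 * a2 - b**2) * s
    s11 = (a2 * T1 * s + b**2 * g1 * (T2 - T1)) / d
    s22 = (a1 * T2 * s + b**2 * g2 * (T1 - T2)) / d
    s12 = -b * (a2 * g2 * T1 + a1 * g1 * T2) / d
    det = s11 * s22 - s12**2
    A1, A2, B = s22 / det, s11 / det, -s12 / det          # inverse covariance, Eq. (58)
    flow = s12 * (T1 * B - b) / (G1 * s11)                # Eq. (59)
    u1, v1 = a1 / T1 - A1, b / T1 - B
    u2, v2 = a2 / T2 - A2, b / T2 - B
    l1 = T1 / G1 * (s11 * u1**2 + s22 * v1**2 + 2 * s12 * u1 * v1)   # Eq. (60)
    l2 = T2 / G2 * (s22 * u2**2 + s11 * v2**2 + 2 * s12 * u2 * v2)   # Eq. (60), 1 <-> 2
    return flow, l1 + l2


def dimensionless_formulas(a1, a2, b, G1, G2, T1, T2):
    """Evaluate Eqs. (66)-(68) using the parameters of Eq. (65)."""
    phi = G2 * a1 / (G1 * a2)
    xi = T1 / T2
    kappa = b**2 / (a1 * a2)
    denom = kappa * phi * (1 - xi) ** 2 + xi * (1 + phi) ** 2
    flow = a2 / G2 * kappa * phi * (1 - xi) * (xi + phi) / denom
    production = a2 / G2 * (1 - xi) ** 2 / xi * kappa * phi / (1 + phi)
    eta = xi / (1 - xi) * (xi + phi) * (1 + phi) / denom
    return flow, production, eta


# Illustrative parameters (assumed): a1, a2, b, Gamma1, Gamma2, T1, T2
args = (2.0, 1.0, 1.0, 1.0, 2.0, 1.0, 2.0)
flow_direct, prod_direct = direct_flow_and_production(*args)
flow_66, prod_67, eta_68 = dimensionless_formulas(*args)
print(flow_direct, flow_66, prod_direct, prod_67, eta_68)

# Slow hot particle (Gamma2 >> Gamma1): eta approaches T2 / (T2 - T1), cf. Eq. (53)
eta_slow_hot = dimensionless_formulas(2.0, 1.0, 1.0, 1.0, 1e6, 1.0, 2.0)[2]
print(eta_slow_hot)
```

Scanning b at fixed a1 and a2 shows the opposite trends of the flow and of its efficiency with respect to κ described above.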

B. Non-stationary situation.

Once the upper bound (43) on the efficiency of information transfer is established in the stationary situation, we ask whether it might survive also in the non-stationary case. Below we reconsider the coupled harmonic oscillators and show that, although the upper limit (43) can be exceeded by some non-stationary states, the efficiencies η2→1 and η1→2 are still limited from above.

We assume that a non-equilibrium probability of the two harmonic oscillators is Gaussian, as given by (57, 58). The information flow and the entropy production are given by (59) and (60), respectively. Any Gaussian probability can play the role of some non-stationary state, which is chosen, e.g., as an initial condition.

To search for an upper bound of the efficiency in the non-stationary situation, we assume a Gaussian probability (57) and maximize the efficiency (38) over the Hamiltonian, i.e., over the parameters a1, a2 and b in (55). The stability conditions (56), which are necessary for the existence of the stationary state, are not necessary for studying non-stationary situations (imagine a non-stationary down-hill moving oscillator). Thus conditions (56) will not be imposed during the maximization over a1, a2 and b.

Note that ı2→1 in (59) does not depend on a1 and a2. Since we are interested in maximizing the efficiency (38), the first step in this maximization is to minimize the local entropy production ℓ1 [given by (60)] over a1. This produces

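$$ a_1 = \frac{T_1 - b\,\sigma_{12}}{\sigma_{11}}, \qquad \ell_1 = \frac{T_1}{\Gamma_1}\, \frac{(\beta_1 b - B)^2}{A_2}. \tag{69} $$

The second entropy production ℓ2 is analogously minimized over a2. The resulting expression for the efficiency reads:

$$ \eta_{2\to 1} = \frac{\sigma_{12}}{\Gamma_1 \sigma_{11}}\; \frac{T_1 B - b}{\dfrac{\beta_1}{\Gamma_1}\dfrac{(T_1 B - b)^2}{A_2} + \dfrac{\beta_2}{\Gamma_2}\dfrac{(T_2 B - b)^2}{A_1}}. \tag{70} $$

Next, we maximize this expression over b. Let us for simplicity assume σ12 > 0, since the conclusions do not change for σ12 < 0. The maximal value of η2→1 reads

$$ \eta_{2\to 1} = \frac{T_1}{2\,|T_2 - T_1|}\, \bigl[\, \mathrm{sign}(T_2 - T_1) + \sqrt{1 + \chi} \,\bigr], \tag{71} $$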

where

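$$ \chi \equiv \frac{T_2 \Gamma_2 A_1}{T_1 \Gamma_1 A_2} = \frac{T_2 \Gamma_2 \sigma_{22}}{T_1 \Gamma_1 \sigma_{11}}, \tag{72} $$

and where the RHS of (71) is reached for

$$ b = -\frac{\sigma_{12}}{\sigma_{11}\sigma_{22} - \sigma_{12}^2} \left( T_1 + \frac{|T_2 - T_1|}{\sqrt{1 + \chi}} \right). \tag{73} $$

The information flow ı2→1 at the optimal value of b reads

$$ \imath_{2\to 1} = \frac{\sigma_{12}^2}{\sigma_{11}}\, \frac{|T_1 - T_2|}{\sqrt{1 + \chi}}. \tag{74} $$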

Note that the maximal value of η2→1 does not require a strong inter-particle coupling. This coupling, as quantified by (73), can be small due to σ12 → 0.
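The maximization over b can be checked by brute force. The sketch below evaluates the partially optimized efficiency (70) on a fine grid of b values and compares the largest value and its location with the closed forms (71) and (73). The covariance entries, damping constants, temperatures and the grid range are assumed illustrative values, with σ12 > 0 as in the text.

```python
import numpy as np


def efficiency_70(b, s11, s22, s12, G1, G2, T1, T2):
    """Partially optimized efficiency eta_{2->1} of Eq. (70) as a function of b."""
    det = s11 * s22 - s12**2
    A1, A2, B = s22 / det, s11 / det, -s12 / det          # Eq. (58)
    numerator = s12 / (G1 * s11) * (T1 * B - b)
    denominator = (T1 * B - b) ** 2 / (T1 * G1 * A2) + (T2 * B - b) ** 2 / (T2 * G2 * A1)
    return numerator / denominator


def chi_72(s11, s22, G1, G2, T1, T2):
    """Parameter chi of Eq. (72)."""
    return T2 * G2 * s22 / (T1 * G1 * s11)


def efficiency_71(s11, s22, G1, G2, T1, T2):
    """Maximal efficiency of Eq. (71)."""
    chi = chi_72(s11, s22, G1, G2, T1, T2)
    return T1 / (2 * abs(T2 - T1)) * (np.sign(T2 - T1) + np.sqrt(1 + chi))


def optimal_b_73(s11, s22, s12, G1, G2, T1, T2):
    """Optimal coupling of Eq. (73)."""
    chi = chi_72(s11, s22, G1, G2, T1, T2)
    return -s12 / (s11 * s22 - s12**2) * (T1 + abs(T2 - T1) / np.sqrt(1 + chi))


# Illustrative Gaussian state and bath parameters (assumed values)
s11, s22, s12 = 1.0, 1.5, 0.3
G1, G2, T1, T2 = 1.0, 1.0, 1.0, 2.0

b_grid = np.linspace(-5.0, 5.0, 200001)
eta_grid = efficiency_70(b_grid, s11, s22, s12, G1, G2, T1, T2)
k = int(np.argmax(eta_grid))
eta_max_grid, b_max_grid = float(eta_grid[k]), float(b_grid[k])
eta_max_formula = float(efficiency_71(s11, s22, G1, G2, T1, T2))
b_max_formula = float(optimal_b_73(s11, s22, s12, G1, G2, T1, T2))
print(eta_max_grid, eta_max_formula, b_max_grid, b_max_formula)
```

For these numbers χ = 3, so the maximum exceeds neither of the quantities discussed in the text by construction; increasing σ22/σ11 or Γ2/Γ1 raises χ and with it the attainable efficiency.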

Now we assume that the temperatures T1 and T2 are fixed. It is seen from (71) that taking χ sufficiently large (either due to a large Γ2/Γ1, which means that the second particle is slow, or due to a large σ22/σ11, which means that its dispersion is larger) we can achieve efficiencies as large as desired. In all these cases, for a sufficiently large χ, η2→1 scales as √χ. Thus one can overcome the stationary bound (43) via some special non-stationary states and the corresponding Hamiltonians. We, however, see from (74) that increasing the efficiency due to χ → ∞ leads to decreasing the information flow. This is the same trend as in the stationary case; see (43).

The large values of η2→1 do not imply anything special for the efficiency η1→2 of the inverse information transfer. Indeed, the partially optimized η1→2 reads analogously to (70)

$$ \eta_{1\to 2} = \frac{\sigma_{12}}{\Gamma_2 \sigma_{22}}\; \frac{T_2 B - b}{\dfrac{\beta_1}{\Gamma_1}\dfrac{(T_1 B - b)^2}{A_2} + \dfrac{\beta_2}{\Gamma_2}\dfrac{(T_2 B - b)^2}{A_1}}. \tag{75} $$

At the values (69, 73), where η2→1 is extremal, η1→2 assumes a simple form

$$ \eta_{1\to 2} = \frac{T_2}{2\,(T_1 - T_2)}, $$

i.e., η1→2 depends only on the temperatures and can have either sign, depending on the sign of T1 − T2. Note that η1→2 follows the same logic as in the stationary state: it is positive for T1 > T2.

VIII. COMPLEMENTARITY BETWEEN HEAT- AND INFORMATION-FLOW

Contrary to the notion of information flow, the heat flow from one Brownian particle to another calls for an additional discussion. The main reason for this is that the Brownian particles could, in general, be non-weakly coupled to each other, and then the local energy of a single particle is not well defined; for various opinions on this point see [59, 60]. In contrast, the entropy of a single particle is always well-defined; recall in this context feature 3 in section IV B. Nevertheless, the notion of a separate energy can be applied once there are physical reasons for selecting a particular form of the interaction Hamiltonian H12(x1, x2) in (20), and the average value of H12(x1, x2) is conserved in time, at least approximately. (A particular case of this is when H12(x1, x2) is small.) Now H1(x1) can be defined as the local energy of the first particle, while the average energy change is

$$ \frac{d}{dt}\left[ \int dx_1\, P_1(x_1)\, H_1(x_1) \right] \equiv \frac{dE_1}{dt}. $$

The energy flow e2→1 from the second particle to the first one is defined in full analogy with (37)

$$ e_{2\to 1} = \frac{dE_1}{dt} - \frac{d_1 Q}{dt}, \tag{76} $$

where d1Q/dt is defined in (31). In words (76) means: the energy change dE1/dt of the first particle is equal to the energy e2→1 received from the second particle plus the energy d1Q/dt put into the system via coupling of the first particle to its thermal bath.

Working out (76) we obtain

$$ e_{2\to 1} = -\int dx\, J_1(x;t)\, \partial_1 H_{12}(x). \tag{77} $$

The interpretation of (77) is straightforward via concepts introduced in Appendix C, where we argue that v1(x; t) = J1(x; t)/P(x; t) can be regarded as a coarse-grained velocity of the particle 1. Then (77) becomes the average work done on the particle 1 by the force ∂1H12(x) generated by the particle 2.

The symmetrized heat flow is equal to minus the rate of change of the interaction energy

$$ e_{2\to 1} + e_{1\to 2} = -\frac{d}{dt} \int dx\, H_{12}(x)\, P(x;t). \tag{78} $$

Thus there is a mismatch between the heat flowing from 1 to 2 and the energy flowing from 2 to 1. This mismatch is driven by the change of interaction energy, and it is small provided that the average interaction energy is [approximately] conserved in time.
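The steps leading from (76) to (77) and (78) can be written out as follows. The sketch assumes the decomposition H = H1(x1) + H2(x2) + H12(x1, x2) of (20), the continuity form ∂_tP + ∂1J1 + ∂2J2 = 0 of the master equation (16), and vanishing boundary terms; these are taken from the surrounding text as understood here, not quoted from it.

```latex
\begin{align}
\frac{dE_1}{dt} &= \int dx_1\, H_1(x_1)\, \partial_t P_1(x_1;t)
                 = -\int dx\, H_1(x_1)\, \partial_1 J_1(x;t), \\
\frac{d_1 Q}{dt} &= -\int dx\, \bigl[ H_1(x_1) + H_2(x_2) + H_{12}(x) \bigr]\, \partial_1 J_1(x;t)
                  = -\int dx\, \bigl[ H_1(x_1) + H_{12}(x) \bigr]\, \partial_1 J_1(x;t), \\
e_{2\to 1} &= \frac{dE_1}{dt} - \frac{d_1 Q}{dt}
            = \int dx\, H_{12}(x)\, \partial_1 J_1(x;t)
            = -\int dx\, J_1(x;t)\, \partial_1 H_{12}(x), \\
e_{2\to 1} + e_{1\to 2} &= \int dx\, H_{12}(x)\, \bigl[ \partial_1 J_1 + \partial_2 J_2 \bigr]
            = -\frac{d}{dt} \int dx\, H_{12}(x)\, P(x;t).
\end{align}
```

In the first line the ∂2J2 term drops out after integration over x2, and in the second line the H2 term drops out after integration over x1.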

It is important to stress that the ambiguity in the definition of heat flow, which was related to the choice of the local energy E1, is absent in the stationary state, since the average interaction energy is conserved by construction. Now the choice of the single-particle energy is irrelevant, since any such definition will lead to a time-independent quantity, which would then disappear from (76), dE1/dt = 0, and from the RHS of (78).

As is clear from its physical meaning, the efficiency ζ2→1 of heat flow is to be defined as the ratio of the heat flow over the total heat dissipation

$$ \zeta_{2\to 1} = \frac{e_{2\to 1}}{T_1 \ell_1 + T_2 \ell_2}. \tag{79} $$

Let us work out ζ2→1 for the two-temperature stationary case. Analogously to (41, 42) we obtain

$$ \zeta_{2\to 1} = \frac{T_1}{T_2 - T_1} + \frac{T_1 \ell_1}{T_1 \ell_1 + T_2 \ell_2} \tag{80} $$

$$ \phantom{\zeta_{2\to 1}} = \frac{T_2}{T_2 - T_1} - \frac{T_2 \ell_2}{T_1 \ell_1 + T_2 \ell_2}. \tag{81} $$

Eq. (80) shows that, as expected, the heat flows from higher to lower temperatures. In addition, (80, 81) imply the same bounds as in (43):

$$ \frac{T_1}{T_2 - T_1} \le \zeta_{2\to 1} \le \frac{T_2}{T_2 - T_1}. \tag{82} $$

Moreover, we note that the stationary efficiencies of information flow and heat flow are related as

$$ \zeta_{2\to 1}\, \eta_{2\to 1} = \frac{T_1 T_2}{(T_2 - T_1)^2}. \tag{83} $$

Note that the efficiencies ζ2→1 and η2→1 in (83) depend in general on the inter-particle and intra-particle potentials, temperatures, damping constants, etc. However, their product in the stationary state is universal, i.e., it depends only on the temperatures.
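The universality of the product (83) can be seen in two lines. The sketch uses only the stationary relations (39), (76) with dE1/dt = 0, and ℓ = (β2 − β1) d1Q/dt; the common-denominator form of η2→1 is the only rewriting introduced here.

```latex
\begin{align}
e_{2\to 1} &= -\frac{d_1 Q}{dt} = \frac{\ell}{\beta_1 - \beta_2}
            = \frac{T_1 T_2\, \ell}{T_2 - T_1}
  \;\;\Longrightarrow\;\;
  \zeta_{2\to 1} = \frac{T_1 T_2\, (\ell_1 + \ell_2)}{(T_2 - T_1)\,(T_1 \ell_1 + T_2 \ell_2)}, \\
\eta_{2\to 1} &= \frac{T_1}{T_2 - T_1} + \frac{\ell_2}{\ell_1 + \ell_2}
             = \frac{T_1 \ell_1 + T_2 \ell_2}{(T_2 - T_1)\,(\ell_1 + \ell_2)}
  \;\;\Longrightarrow\;\;
  \zeta_{2\to 1}\, \eta_{2\to 1} = \frac{T_1 T_2}{(T_2 - T_1)^2}.
\end{align}
```

Splitting T1T2(ℓ1 + ℓ2) = T1(T1ℓ1 + T2ℓ2) + T1ℓ1(T2 − T1) in the expression for ζ2→1 reproduces (80).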

Eq. (83) implies the following complementarity: for fixed temperatures the set-up most efficient for the information transfer is the least efficient for the heat transfer and vice versa. Recalling our discussion in section VI B we see that the upper bound T2/(T2 − T1) for the efficiency ζ2→1 is achieved for the adiabatic stationary state, where (in contrast to the information transfer) the hotter system is faster, i.e., ℓ2 → 0, but ℓ1 is finite.

Note from (47, 48) the following relation between the heat flow (77) and the information flow (54):

$$ T_1\, \imath_{2\to 1} = e_{2\to 1}, $$

which is valid in the adiabatic stationary state. Recall that T1 here is the temperature of the bath interacting with the fast particle.

Let us illustrate the obtained results with the exactly solvable situation of two coupled harmonic oscillators; see section VII. Recalling (55–58) and (77) we obtain

$$ e_{2\to 1} = \frac{a_2 T_2}{\Gamma_2}\, \kappa\varphi\, \frac{1 - \xi}{1 + \varphi}, \tag{84} $$

where we employed the dimensionless variables (65). Likewise, we get for the heat dissipation and the efficiency of the heat transfer [see (66–68) for similar formulas]

$$ T_1 \ell_1 + T_2 \ell_2 = \frac{a_2 T_2}{\Gamma_2}\, \frac{\kappa\varphi\,(1 - \xi)^2 (\xi + \varphi)}{\xi(1 + \varphi)^2 + \kappa\varphi(1 - \xi)^2}, \tag{85} $$

$$ \zeta_{2\to 1} = \frac{\xi(1 + \varphi)^2 + \kappa\varphi(1 - \xi)^2}{(1 + \varphi)(\xi + \varphi)(1 - \xi)}. \tag{86} $$

It is seen that the efficiency ζ2→1 is maximal at the instability threshold κ → 1, in contrast to the efficiency η2→1 of information transfer, which is maximal at the weakest interaction; see section VII. As we already discussed above, as a function of the time-scale ratio ϕ, ζ2→1 is maximal for ϕ → 0, again in contrast to the behaviour of η2→1.

IX. TRANSFER ENTROPY

A. Definition

We consider the task of predicting the future of X1 from its own past (with or without the help of the present X2). Quantifying this task leads to a concept which has been termed directed transinformation [35] or transfer entropy [36]. In the following we will use the latter name, as this seems to be more broadly accepted. Closely related ideas were expressed in [37]. The notion of transfer entropy recently became popular among researchers working in various inter-disciplinary fields; see [38] for a short review.

Our discussion of transfer entropy has two purposes. First, the information flow and transfer entropy are two different notions, and their specific differences should be clearly understood, so as to avoid any confusion [39]. Second, in one particular, but important case, we found interesting relations between these two notions.

To introduce the idea of transfer entropy let us for the moment assume that the random quantities X1(t) and X2(t) (whose realizations are respectively the coordinates x1 and x2 of the Brownian particles) assume discrete values and change at discrete instances of time: t, t + τ, t + 2τ, . . .. Recalling our discussion in section II, we see that the conditional entropy S[X1(t + τ)|X1(t)] is the residual uncertainty of X1(t + τ) once X1(t) is known. Likewise, S[X1(t + τ)|X1(t), X2(t)] characterizes the uncertainty of X1(t + τ) given both X1(t) and X2(t). The difference m2→1 is the transfer entropy:

$$ m_{2\to 1} \equiv \frac{1}{\tau}\Bigl( S[X_1(t+\tau)|X_1(t)] - S[X_1(t+\tau)|X_1(t), X_2(t)] \Bigr) $$

$$ \phantom{m_{2\to 1}} \equiv \frac{1}{\tau}\Bigl( I[X_1(t+\tau) : X_1(t), X_2(t)] - I[X_1(t+\tau) : X_1(t)] \Bigr) $$

$$ \phantom{m_{2\to 1}} = \frac{1}{\tau} \sum_{x_1, y_1, y_2} p(y_1, y_2; t)\, p(x_1; t+\tau | y_1, y_2; t)\, \ln \frac{p(x_1; t+\tau | y_1, y_2; t)}{p_1(x_1; t+\tau | y_1; t)}. \tag{87} $$

m2→1 measures the difference between predicting the future of X1 from the present of both X1 and X2 (quantified by I[X1(t + τ) : X1(t), X2(t)]) and predicting the future of X1 from its own present only (quantified by I[X1(t + τ) : X1(t)]). Note that m2→1 is always non-negative, since additional conditioning cannot increase the entropy. m2→1 is also equal to the mutual information I[X1(t + τ) : X2(t)|X1(t)] shared between the present state of X2 and the future state of X1 conditioned upon the present state of X1.

To explain the transfer entropy versus information flow, we consider again the discretized version in Fig. 1 (where for simplicity we take τ = 1). By standard properties of mutual information [27], we have

I[X1(t + 1) : X2(t)] ≤ I[X1(t + 1), X1(t) : X2(t)] (88)

= I[X1(t) : X2(t)] + I[X1(t + 1) : X2(t)|X1(t)] , (89)

where the inequality in (88) is related to the strong sub-additivity feature, and where the equality in (89) is the chain rule for the mutual information [27]. Hence,

ı2→1 = I[X1(t + 1) : X2(t)] − I[X1(t) : X2(t)]

≤ I[X1(t + 1) : X2(t)|X1(t)] = m 2→1 .

The LHS (left hand side) is the discrete version of information flow, the RHS the transfer entropy. If the arrow from X2(t) to X1(t + 1) was absent, the modified graph would impose [28] that X1(t + 1) and X2(t) are conditionally independent, given X1(t), i.e.,

m 2→1 = I[X1(t + 1) : X2(t)|X1(t)] = 0 .

This shows that the transfer entropy vanishes in this case, as it should, because there is no arrow transmitting information. Thus, m2→1 characterizes the strength of that arrow.
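The inequality between the discrete information flow and the transfer entropy, and the vanishing of the latter when the arrow is absent, can be illustrated on a small discrete example. The sketch below takes a joint distribution p(y1, y2) at time t and a transition kernel p(x1 | y1, y2) for X1(t + 1), both generated at random; the state-space sizes, the random seed and all function names are assumptions made for illustration.

```python
import numpy as np


def mutual_information(pxy):
    """I[X:Y] in nats for a joint probability table pxy[x, y]."""
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    mask = pxy > 0
    return float(np.sum(pxy[mask] * np.log(pxy[mask] / (px @ py)[mask])))


def flow_and_transfer(p, kernel):
    """Discrete information flow and transfer entropy for tau = 1.

    p[y1, y2]          : joint probability of X1(t), X2(t)
    kernel[x1, y1, y2] : probability of X1(t+1) = x1 given X1(t) = y1, X2(t) = y2
    """
    q = kernel * p[None, :, :]                       # joint of X1(t+1), X1(t), X2(t)
    i_next = mutual_information(q.sum(axis=1))       # I[X1(t+1) : X2(t)]
    i_now = mutual_information(p)                    # I[X1(t) : X2(t)]
    m = 0.0                                          # I[X1(t+1) : X2(t) | X1(t)]
    for y1 in range(p.shape[0]):
        w = q[:, y1, :].sum()                        # probability of X1(t) = y1
        if w > 0:
            m += w * mutual_information(q[:, y1, :] / w)
    return i_next - i_now, m


rng = np.random.default_rng(0)
p = rng.random((3, 4))
p /= p.sum()

kernel = rng.random((3, 3, 4))
kernel /= kernel.sum(axis=0, keepdims=True)          # normalise over the new state x1
flow, transfer = flow_and_transfer(p, kernel)

# Remove the arrow X2(t) -> X1(t+1): the kernel no longer depends on y2
k = rng.random((3, 3, 1))
k /= k.sum(axis=0, keepdims=True)
kernel_no_arrow = np.repeat(k, 4, axis=2)
flow_no_arrow, transfer_no_arrow = flow_and_transfer(p, kernel_no_arrow)
print(flow, transfer, flow_no_arrow, transfer_no_arrow)
```

Without the arrow the flow is non-positive: the correlations between X1 and X2 can then only be lost, which matches the bound by the (vanishing) transfer entropy.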

For continuous time [but still discrete variables X1 and X2] we take τ → 0 in (87) producing

$$ m_{2\to 1} = \sum_{y_2,\, x_1 \neq y_1} p(y_1, y_2; t)\, g_1(x_1|y_1; y_2)\, \ln \frac{g_1(x_1|y_1; y_2)}{\sum_{z_2} g_1(x_1|y_1; z_2)\, P_{2|1}(z_2|y_1; t)}, \tag{90} $$

where g1(x1|y1; y2) is defined analogously to G1 in (12), but with discrete random variables.

Eq. (90) cannot be translated to the continuous variable situation simply by interchanging the probabilities p with the probability densities P, since attempting such a translation leads to singularities. The proper extension of (87) to continuous variables and continuous time reads

$$ m_{2\to 1} = \lim_{\tau\to 0} \frac{1}{\tau} \int dy\, P(y;t) \int P[dx_{1\,t}^{\;t+\tau}|y;t]\, \ln \frac{P[dx_{1\,t}^{\;t+\tau}|y;t]}{P[dx_{1\,t}^{\;t+\tau}|y_1;t]}, \tag{91} $$

where P[dx1_t^{t+τ}|y; t] (P[dx1_t^{t+τ}|y1; t]) is the measure of all paths x1(t) starting from y = (y1, y2) (from y1) at time t and ending somewhere at time t + τ, i.e., it is not the final point of the path that is fixed, but rather the initial and final times [footnote 8]. This extension naturally follows general ideas of information theory in continuous spaces [26]. For our situation the measures P[dx1_t^{t+τ}|y; t] and P[dx1_t^{t+τ}|y1; t] refer to the stochastic process described by (6, 8); see [61] for an introduction to such measures.

Footnote 8: Integrating P[dx1_t^{t+τ}|y; t] over all paths starting from y at time t and ending at x1 at time t + τ we get the conditional probability density P(x1, t + τ|y, t) [61].

Eq. (91) is worked out in Appendix D producing

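$$ m_{2\to 1} = \frac{1}{2 T_1 \Gamma_1} \int dx\, P(x;t)\, \phi_{2\to 1}^2(x;t), \tag{92} $$

$$ \phi_{2\to 1} \equiv \partial_1 H_{12}(x) - \int dy_2\, \partial_1 H_{12}(x_1, y_2)\, P_{2|1}(y_2|x_1;t), $$

where φ2→1 is the force acting from 2 to 1 minus its conditional average; compare with (48).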
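For the harmonic model of section VII, Eq. (92) can be evaluated in closed form. The sketch below assumes that the interaction part of (55) is H12 = b x1x2 and that P is the zero-mean Gaussian (57, 58), so that the conditional mean of x2 given x1 is (σ12/σ11) x1 and the conditional variance is σ22 − σ12²/σ11 = 1/A2.

```latex
\begin{align}
\phi_{2\to 1}(x) &= b\, \bigl( x_2 - \langle x_2 | x_1 \rangle \bigr)
                  = b\, \Bigl( x_2 - \frac{\sigma_{12}}{\sigma_{11}}\, x_1 \Bigr), \\
m_{2\to 1} &= \frac{b^2}{2 T_1 \Gamma_1} \Bigl( \sigma_{22} - \frac{\sigma_{12}^2}{\sigma_{11}} \Bigr)
            = \frac{b^2}{2 T_1 \Gamma_1 A_2}.
\end{align}
```

At equilibrium, T1 = T2 = T and A2 = a2/T, this gives m2→1 = b²/(2Γ1a2), which is non-zero for any b ≠ 0 even though the information flow vanishes there.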

B. Information flow versus entropy transfer: General differences.

Let us compare features of the entropy transfer m2→1 to those of information flow ı2→1. We recall that the difference between the information flow ı2→1 and the transfer entropy m2→1 stems from the fact that m2→1 refers to the prediction of the future of X1 from its own past (with or without the help of the present of X2), while ı2→1 refers to the prediction gain (or loss) of the future of X1 from the present of X2. Thus for m2→1 the active agent is 1 predicting its own future, while for ı2→1 the active agent is 2 predicting the future of 1.

i) Both m2→1 and ı2→1 are invariant with respect to redefining the interaction Hamiltonian; see (25) and compare with the information flow ı2→1.

ii) In contrast to the information flow ı2→1, the entropy transfer m2→1 is always non-negative.

iii) In contrast to ı2→1, m2→1 does not vanish for factorized probabilities P(x) = P1(x1)P2(x2), provided that there is a non-trivial interaction H12. This is because m2→1 is defined with respect to the transition probabilities; see (87).

iv) m2→1 vanishes whenever there is no force acting from one particle to another. Recall that the force-driven part ıF2→1 of the information flow also vanishes together with the force, albeit ıF2→1 vanishes also for factorized probabilities; see (25).

v) In contrast to ı2→1, m2→1 does not vanish at equilibrium. Thus, 2 can help 1 in predicting its future at absolutely no thermodynamic cost. However, as we have shown, there is a definite thermodynamic cost for 2 wanting to predict the future of 1 better than it predicts the present of 1.

vi) m2→1 is not a flow, since it does not add up to the time-derivative of any global quantity. However, obviously m2→1 does refer to some type of information processing. In fact, m2→1 underlies the notion of Granger-causality, which was first proposed in the context of econometrics [33, 34] (see [43] for a review): the ratio m2→1/m1→2 quantifies the strength of causal influences from 2 to 1 relative to those from 1 to 2 [footnote 9]. The notion of Granger-causality is useful, as witnessed by its successful empirical applications [33, 34, 38, 39, 43]. For m2→1/m1→2 ≫ 1 we shall say that 2 is Granger-driving 1.

C. Information flow versus transfer entropy in the adiabatic stationary limit

Given the differences between the information flow and the entropy transfer it is curious to note that in the adiabatic stationary situation (see section VI B 1) there exists a direct relation between them. We recall that the adiabatic situation is special, since the efficiency of information flow reaches its maximal value there. Recall that this situation is defined (besides the long-time limit) by condition (44), which means that 2 is slow, while 1 is fast; at equilibrium, when T1 = T2, this slow versus fast separation becomes irrelevant. Recalling also the definition (25) of the force-driven part ıF2→1 of the information flow, we see that

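$$\frac{m_{2\to 1}}{m_{1\to 2}} = O\!\left(\frac{\Gamma_2}{\Gamma_1}\right) \gg 1, \qquad \frac{\imath^F_{2\to 1}}{\imath^F_{1\to 2}} = O\!\left(\frac{\Gamma_2}{\Gamma_1}\right) \gg 1. \tag{93}$$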

The first relation in (93) indicates that the slow system is Granger-driving the fast one, while the second relation implies that the same qualitative conclusion is obtained from looking at $\imath^F_{2\to 1}$. This point is strengthened by noting from (46, 92) that in the adiabatic, stationary, two-temperature situation we have

$$m_{2\to 1} = \frac{1}{2}\,\imath^F_{2\to 1}. \tag{94}$$

A less straightforward relation holds for the action of the fast system on the slow one:

$$m_{1\to 2} = \frac{T_1}{T_2}\,\frac{1}{2}\,\imath^F_{1\to 2}. \tag{95}$$

Recall that for the considered adiabatic stationary state, it is the action of the fast on the slow that determines the magnitude of the information flow $\imath_{1\to 2}$ (the sign of $\imath_{1\to 2}$ is fixed by the temperature difference):

$$\imath_{1\to 2} = -\imath_{2\to 1} = \frac{T_1 - T_2}{T_1}\,\imath^F_{1\to 2}. \tag{96}$$

It is seen that provided $T_2 > T_1$ (i.e., the slow system is attached to the hotter bath, a situation realized for the optimal information transfer) the Granger-driving qualitatively coincides with the causality intuition implied by the sign of information flow: both $\imath_{2\to 1} > 0$ and $m_{2\to 1}/m_{1\to 2} \gg 1$ hold, which means that 2 predicts better the future of 1 ($\imath_{2\to 1} > 0$) and that 2 is more relevant for helping 1 to predict its own future ($m_{2\to 1}/m_{1\to 2} \gg 1$).

9 The use of the ratio $m_{2\to 1}/m_{1\to 2}$ is obligatory, since $m_{2\to 1}$ does not characterize the absolute strength of the influence of 2 on 1. Note, e.g., that $m_{2\to 1}$ nullifies not only for independent processes $X_1(t)$ and $X_2(t)$, but also for identical (strongly coupled) processes $X_1(t) = X_2(t)$; see (87). This point is made in [39].

It is tempting to suggest that only when the causality intuition deduced from $\imath_{2\to 1}$ agrees with that deduced from $m_{2\to 1}$ are we closer to gaining a real understanding of causality (still without doing actual interventions). Interestingly, the present slow-fast two-temperature adiabatic system was considered recently from the viewpoint of other non-interventional causality detection methods, reaching a similar conclusion: unambiguous causality can be detected in this system if the slow variable is attached to the hot thermal bath [62].

For the harmonic-oscillator example treated in section VII we obtain

$$m_{2\to 1} = \frac{b^2}{2A_2T_1\Gamma_1}, \qquad \imath^F_{2\to 1} = \frac{bB}{A_2\Gamma_1}. \tag{97}$$

Employing formulas (57, 58) and (61–64) for the stationary state we note the following relation

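$$\frac{\imath^F_{2\to 1}}{\imath^F_{1\to 2}} = \frac{T_1}{T_2}\,\frac{m_{2\to 1}}{m_{1\to 2}} \tag{98}$$

$$= \frac{1}{\xi}\,\frac{\kappa(\xi - 1) + \varphi + 1}{\kappa\left(\frac{1}{\xi} - 1\right) + \frac{1}{\varphi} + 1}, \tag{99}$$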

where the dimensionless parameters $\kappa$, $\xi$ and $\varphi$ are defined in (65). In the adiabatic situation (98) is naturally consistent with (95, 96), but for the considered harmonic oscillators it is valid more generally, i.e., for an arbitrary stationary state. Eq. (99) explicitly demonstrates the conflict in Granger-driving between making the oscillator 2 slow (i.e., $\varphi \to \infty$) and making it cold (i.e., $\xi \to \infty$): for $\varphi \to \infty$, $m_{2\to 1}/m_{1\to 2}$ tends to infinity, while for $\xi \to \infty$, $m_{2\to 1}/m_{1\to 2}$ tends to zero.
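The two limits quoted for Eq. (99) can be checked numerically. The following is a minimal sketch, not part of the original derivation: it evaluates the right-hand side of (99) and then uses (98) to obtain the transfer-entropy ratio. It assumes that $\xi = T_1/T_2$, which is consistent with the statement that $\xi \to \infty$ makes oscillator 2 cold, although definition (65) is not reproduced in this section. The function names and the value $\kappa = 0.5$ are illustrative choices only.

```python
def force_flow_ratio(kappa: float, xi: float, phi: float) -> float:
    """Right-hand side of Eq. (99): ratio of the force-driven flows."""
    numerator = kappa * (xi - 1.0) + phi + 1.0
    denominator = kappa * (1.0 / xi - 1.0) + 1.0 / phi + 1.0
    return numerator / (xi * denominator)


def transfer_entropy_ratio(kappa: float, xi: float, phi: float) -> float:
    """m_{2->1} / m_{1->2} obtained from Eq. (98).

    ASSUMPTION (not taken from this section): xi = T1 / T2,
    so the factor T2 / T1 in Eq. (98) equals 1 / xi.
    """
    return force_flow_ratio(kappa, xi, phi) / xi


if __name__ == "__main__":
    kappa = 0.5  # illustrative coupling parameter, not a value from the paper
    for phi in (1.0, 1e2, 1e4):
        # Slow oscillator 2: the ratio grows with phi.
        print("phi =", phi, "ratio =", transfer_entropy_ratio(kappa, 1.0, phi))
    for xi in (1.0, 1e2, 1e4):
        # Cold oscillator 2: the ratio decays with xi.
        print("xi =", xi, "ratio =", transfer_entropy_ratio(kappa, xi, 1.0))
```

At the symmetric point $\xi = \varphi = 1$ the expression (99) equals one for any $\kappa$, which gives a quick sanity check of the reconstruction.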

Note finally that

$$\frac{\imath^F_{2\to 1}}{m_{2\to 1}} = \frac{\xi(1 + \varphi)(\xi + \varphi)}{\kappa\varphi + \xi(1 + \varphi)^2},$$

which means that apart from the adiabatic limit ($\varphi \to 0$ or $\varphi \to \infty$) there is no straightforward relation between $\imath^F_{2\to 1}$ and $m_{2\to 1}$.

X. SUMMARY

We have investigated the task of information transfer implemented on a special bi-partite physical system (a pair of Brownian particles, each coupled to a bath). Our main conclusions are as follows:

0. The information flow $\imath_{2\to 1}$ from one Brownian particle to another is defined via the time-shifted mutual information. For the considered class of systems this definition coincides with the entropy flow, as defined in statistical thermodynamics.


1. The information flow $\imath_{2\to 1}$ is a sum of two terms: $\imath_{2\to 1} = \imath^F_{2\to 1} + \imath^B_{2\to 1}$, where the bath-driven contribution $\imath^B_{2\to 1} \le 0$ is minus the Fisher information, and where the force-driven contribution $\imath^F_{2\to 1}$ has to be positive and large enough for particle 2 to be an information source for particle 1.

2. No information flow from one particle to another is possible in equilibrium. This fact is recognized in the literature [17], though by itself it does not yet point to a definite thermodynamic cost for information transfer.

3. For a stationary non-equilibrium state created by a finite difference between two temperatures $T_1 < T_2$, the ratio of the information flow to the total entropy production (i.e., the efficiency of information flow) is limited from above by $\frac{T_2}{T_2 - T_1}$. This bound for the efficiency defines the minimal thermodynamic cost of information flow for the studied setup. Note that it is not the total amount of transferred information, but rather its rate, that is limited. Thus the thermodynamic cost also accounts for the time during which the information is transferred.

4. The upper bound $\frac{T_2}{T_2 - T_1}$ is reachable in the adiabatic limit, where the sub-systems have widely different characteristic times. The information flow is then small on the time-scale of the fast motion, but sizable on the time-scale of the slow motion.

5. The information transfer between two sub-systems (Brownian particles) naturally nullifies if these systems are not interacting and were not interacting in the past. It is thus relevant to study how the efficiency and information flow depend on the inter-particle coupling strength. As functions of the inter-particle coupling strength, the efficiency and information flow demonstrate the following complementarity. The information flow is maximized at the instability threshold of the system (which is reached at the strongest coupling compatible with stability). On the contrary, the efficiency is maximized for the weakest coupling.

6. There are special two-temperature, but non-stationary scenarios, where the efficiency of information flow is much larger than $\frac{T_2}{T_2 - T_1}$, but it is still limited by the basic parameters of the system (the ratio of the time-scales and the ratio of temperatures).

7. Analogous considerations can be applied to the energy (heat) flow from one sub-system to another. The efficiency of the heat flow, which is defined as the heat flow over the total amount of the heat dissipated in the overall system, is limited from above by the same factor $\frac{T_2}{T_2 - T_1}$ (assuming that $T_1 < T_2$). However, in the stationary state there is a complementarity between heat flow and information flow: the setup which is most efficient for the information transfer is the least efficient for the heat transfer and vice versa.

8. There are definite relations between the information flow and the transfer entropy introduced in [35, 36]. The transfer entropy is not a flow of information, though it quantifies some type of information processing in the system, a processing that occurs without any thermodynamic cost.
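The efficiency bound of items 3, 4 and 7 can be made concrete with a short numerical sketch. The helper names below are hypothetical and are not taken from the paper. The first function evaluates the bound in the symmetric form $\max[T_1, T_2]/|T_1 - T_2|$ quoted in the abstract. The second one inverts the definition of efficiency (flow over total entropy production) to give the smallest entropy production rate compatible with a given stationary information flow.

```python
import math


def efficiency_bound(t1: float, t2: float) -> float:
    """Upper bound max(T1, T2) / |T1 - T2| on the stationary efficiency."""
    if t1 <= 0.0 or t2 <= 0.0:
        raise ValueError("temperatures must be positive")
    if t1 == t2:
        # The bound diverges formally; at equilibrium the flow itself vanishes.
        return math.inf
    return max(t1, t2) / abs(t1 - t2)


def min_entropy_production(flow: float, t1: float, t2: float) -> float:
    """Smallest entropy production rate allowed for a given flow.

    Follows from efficiency = flow / production <= efficiency_bound(t1, t2).
    """
    return abs(flow) / efficiency_bound(t1, t2)


if __name__ == "__main__":
    # Illustrative temperatures in arbitrary units, not values from the paper.
    print(efficiency_bound(1.0, 2.0))             # bound for T2 = 2 T1
    print(min_entropy_production(1.0, 1.0, 2.0))  # cost of a unit flow
```

The sketch only restates the stationary bound; the transient, non-stationary scenarios of item 6 are outside its scope.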

Acknowledgements

The Volkswagenstiftung is acknowledged for financial support (grant "Quantum Thermodynamics: Energy and Information flow at nano-scale"). A. E. A. was supported by ANSEF and SCS of Armenia (grant 08-0166).

APPENDIX A: INFORMATION-THEORETIC MEANING OF ENTROPY AND MUTUAL INFORMATION

1. Entropy

Let us recall the information-theoretic meaning of entropy (1), i.e., in which specific operational sense $S[X]$ quantifies the amount of information contained in the random variable $X$. To this end imagine that $X$ is a composition of $N$ random variables $X(1), \ldots, X(N)$, i.e., $X$ is a random process. We assume that this process is ergodic [25]. The simplest example of such a process is the case when $X(1), \ldots, X(N)$ are all independent and identically distributed,

$$p(x(1), \ldots, x(N)) = \prod_{k=1}^{N} p(x(k)), \tag{A1}$$

where $x(k) = 1, \ldots, n$ parametrize realizations of $X(k)$. Note that for an ergodic process the entropy in the limit $N \gg 1$ scales as $\propto N$, e.g., $S[X] = -N\sum_{k=1}^{n} p(k) \ln p(k)$ for the above example (A1).

For $N \gg 1$, the set of $n^N$ realizations of the ergodic process $X$ can be divided into two subsets [25, 26, 27]. The first subset $\Omega(X)$ is called typical, since this is the minimal subset with the probability converging to 1 for $N \gg 1$ [25, 26, 27]; the convergence is normally exponential in $N$. The number of elements in $\Omega(X)$ grows asymptotically as $e^{S[X]}$ for $N \gg 1$. These elements have (nearly) equal probabilities $e^{-S[X]}$. Thus the number of elements in $\Omega(X)$ is generally much smaller than the overall number of realizations $e^{N \ln n}$.

The overall probability of those realizations which do not fall into $\Omega(X)$ scales as $e^{-{\rm const}\, N}$, and is negligible in the thermodynamic limit $N \gg 1$. All these features are direct consequences of the law of large numbers, which holds for ergodic processes at least in its weak form [25, 26, 27]. Since in the limit $N \gg 1$ the realizations of the original random variable $X$ can be in a sense substituted by the typical set $\Omega(X)$, the number of elements in $\Omega(X)$ characterizes the information content of $X$ [25, 26, 27].
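The typical-set picture can be illustrated numerically for the independent, identically distributed example (A1). The sketch below is an illustration under stated assumptions rather than part of the original argument: the three-letter distribution, the sample length and the function names are arbitrary choices. It checks that $-\ln p(x(1), \ldots, x(N))/N$ for a sampled realization is close to the entropy per symbol, so that a typical realization has probability close to $e^{-S[X]}$, and it evaluates the logarithm of the ratio $e^{S[X]}/n^N$ between the size of the typical set and the overall number of realizations.

```python
import math
import random


def entropy_per_symbol(p):
    """Entropy -sum_k p(k) ln p(k) of a single symbol, in nats."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def empirical_rate(p, n_symbols, rng):
    """-ln p(x(1), ..., x(N)) / N for one sampled i.i.d. realization."""
    symbols = rng.choices(range(len(p)), weights=p, k=n_symbols)
    return -sum(math.log(p[s]) for s in symbols) / n_symbols


def log_typical_fraction(p, n_symbols):
    """ln of e^{S[X]} / n^N, with S[X] = N * entropy_per_symbol(p)."""
    return n_symbols * (entropy_per_symbol(p) - math.log(len(p)))


if __name__ == "__main__":
    p = [0.7, 0.2, 0.1]  # illustrative distribution, not from the paper
    n_symbols = 20000
    rng = random.Random(0)  # fixed seed for reproducibility
    print("entropy per symbol:", entropy_per_symbol(p))
    print("empirical rate    :", empirical_rate(p, n_symbols, rng))
    print("ln(typical fraction):", log_typical_fraction(p, n_symbols))
```

The last quantity is negative and proportional to $N$ whenever the distribution is not uniform, which is the statement that the typical set is exponentially smaller than the full set of realizations.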

2. Mutual information

While the entropy $S[X]$ reflects the information content of the (noiseless) probabilistic information source, the mutual information $I[Y:X]$ characterizes the maximal information which can be shared through a noisy channel, where $X$ and $Y$ correspond to the input and output of the channel [25, 26, 27], respectively. To understand the qualitative content of this relation consider an ergodic process $XY = X(1)Y(1), \ldots, X(N)Y(N)$, where $x(l) = 1, \ldots, n$ and $y(l) = 1, \ldots, n$ are the realizations of $X(l)$ and $Y(l)$, respectively.

One now looks at $X$ ($Y$) as the input (output) of a noisy channel [25, 26, 27]. In the limit $N \to \infty$ we can study the typical sets $\Omega(\cdot)$ instead of the full set of realizations for the random variables. It appears for $N \to \infty$ that the typical sets $\Omega(X)$ and $\Omega(Y)$ can be represented as unions of $M \equiv e^{I[X:Y]}$ non-overlapping subsets [26]:

$$\Omega(X) = \bigcup_{\alpha=1}^{M} \omega_\alpha(X), \qquad \Omega(Y) = \bigcup_{\alpha=1}^{M} \omega_\alpha(Y), \tag{A2}$$

such that for N ≫ 1

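$$p[y \in \omega_\alpha(Y) \,|\, x \in \omega_\beta(X)] = \delta_{\alpha\beta}\, e^{-S(Y|X)}, \tag{A3}$$

$$p[x \in \omega_\alpha(X) \,|\, y \in \omega_\beta(Y)] = \delta_{\alpha\beta}\, e^{-S(X|Y)}. \tag{A4}$$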

Note that the number of elements in $\omega_\alpha(X)$ ($\omega_\alpha(Y)$) is asymptotically $e^{S[X|Y]}$ ($e^{S[Y|X]}$). Eqs. (A3, A4) mean that the realizations from $\omega_\alpha(Y)$ correlate only with those from $\omega_\alpha(X)$, and that all realizations within $\omega_\alpha(X)$ and within $\omega_\alpha(Y)$ are equivalent in the sense of (A3, A4). It should be clear that once the elements of $\omega_\alpha(X)$ are completely mixed during the mapping to $\omega_\alpha(Y)$, the only reliable way of sending information through this noisy channel is to relate the reliably shared words to the sets $\omega_\alpha$ [26]. Since there are $e^{I[X:Y]}$ such sets, the number of reliably shared words is limited by $e^{I[X:Y]}$. Note that the number of elements in the typical set $\Omega_N(X)$ is equal to $e^{S(X)}$, which in general is much larger than $e^{I[X:Y]}$.
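The counting behind (A2) can be checked on a small example: the typical set $\Omega(X)$ with $e^{S[X]}$ elements splits into $e^{I[X:Y]}$ subsets of $e^{S[X|Y]}$ elements each, which requires $S[X] = I[X:Y] + S[X|Y]$. The sketch below verifies this identity per symbol for a binary symmetric channel with a uniform input. The channel, its flip probability and the function names are illustrative assumptions and do not come from the paper.

```python
import math


def entropy(probs):
    """Entropy -sum p ln p in nats; zero-probability entries are skipped."""
    return -sum(q * math.log(q) for q in probs if q > 0.0)


def mutual_information(joint):
    """I[X:Y] = S[X] + S[Y] - S[XY] for a joint table joint[x][y]."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    s_xy = entropy([q for row in joint for q in row])
    return entropy(p_x) + entropy(p_y) - s_xy


def conditional_entropy_x_given_y(joint):
    """S[X|Y] = S[XY] - S[Y] for a joint table joint[x][y]."""
    p_y = [sum(col) for col in zip(*joint)]
    return entropy([q for row in joint for q in row]) - entropy(p_y)


def symmetric_channel_joint(flip_prob):
    """Joint distribution of a uniform bit X and its noisy copy Y."""
    keep = 0.5 * (1.0 - flip_prob)
    flip = 0.5 * flip_prob
    return [[keep, flip], [flip, keep]]


if __name__ == "__main__":
    joint = symmetric_channel_joint(0.1)  # illustrative flip probability
    info = mutual_information(joint)
    cond = conditional_entropy_x_given_y(joint)
    print("I[X:Y]          :", info)
    print("S[X|Y]          :", cond)
    print("I[X:Y] + S[X|Y] :", info + cond, "vs S[X] =", math.log(2))
```

For a noiseless channel the mutual information equals $\ln 2$ per symbol and every subset $\omega_\alpha$ contains a single element, while for a flip probability of one half it vanishes and no words can be shared reliably.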

APPENDIX B: OPERATIONAL DEFINITION OF INFORMATION FLOW

Here we demonstrate that the definition (17) of the information flow is recovered from an operational approach proposed in [40]; see [41, 42] for related works.

Let us imagine that at some time $t$ we suddenly increase the damping constant $\Gamma_2$ to some very large value. As seen from (6, 8, 9), this will freeze the dynamics of the second particle, so that for $\tau > t$ the joint probability distribution $\tilde{P}(x; \tau)$ satisfies the following modified Fokker-Planck equation

$$\partial_\tau \tilde{P}(x; \tau) + \partial_1 \tilde{J}_1(x; \tau) = 0, \tag{B1}$$

$$-\Gamma_1 \tilde{J}_1(x; \tau) = \tilde{P}(x; \tau)\,\partial_1 H(x) + T_1 \partial_1 \tilde{P}(x; \tau), \tag{B2}$$

together with the boundary condition

$$\tilde{P}(x; t) = P(x; t), \tag{B3}$$

where $P(x; t)$ satisfies the Fokker-Planck equation (8, 9). Other methods of freezing the dynamics of $X_2(t)$ (e.g.,