Supporting Multiple View Maintenance Policies


Latha S. Colby*, Red Brick
Akira Kawaguchi†, Columbia
Daniel F. Lieuwen, Bell Labs, Lucent
Inderpal Singh Mumick, AT&T
Kenneth A. Ross†, Columbia

*The work of L. Colby was performed while at Bell Labs.
†The work of A. Kawaguchi and K. Ross was performed while visiting AT&T Laboratories and Bell Laboratories, and was partially supported by a grant from the AT&T Foundation, by a David and Lucile Packard Foundation Fellowship in Science and Engineering, by a Sloan Foundation Fellowship, and by an NSF Young Investigator award.

Abstract

Materialized views and view maintenance are becoming increasingly important in practice. In order to satisfy different data currency and performance requirements, a number of view maintenance policies have been proposed. Immediate maintenance involves a potential refresh of the view after every update to the deriving tables. When staleness of views can be tolerated, a view may be refreshed periodically or (on demand) when it is queried. The maintenance policies that are chosen for views have implications for the validity of the results of queries and affect the performance of queries and updates. In this paper, we investigate a number of issues related to supporting multiple views with different maintenance policies.

We develop formal notions of consistency for views with different maintenance policies. We then introduce a model based on view groupings for view maintenance policy assignment, and provide algorithms, based on the viewgroup model, that allow consistency of views to be guaranteed. Next, we conduct a detailed study of the performance aspects of view maintenance policies based on an actual implementation of our model. The performance study investigates the trade-offs between different maintenance policy assignments. Our analysis of both the consistency and performance aspects of various view maintenance policies is important in making correct maintenance policy assignments.

1 Introduction

Materialized views are becoming important for providing viable solutions to problems in applications related to billing, retailing, decision support, data warehousing and data integration [GM95, Mum95, ZGHW95, HZ96]. A materialized view is like a data cache; the main reason for defining and storing a materialized view is to increase query performance. However, view maintenance imposes a penalty on update transactions. In order to minimize this penalty, different policies for view maintenance have been proposed, depending on the read/update transaction mix and on the need for queries to see current data. Three common policies are:

(1) Immediate Views: The view is maintained immediately upon an update to a base table, as a part of the transaction that updates the base table. Immediate maintenance allows fast querying, at the expense of slowing down update transactions.

(2) Deferred Views [RK86, AGK95, AKG96, CGL+96]: The view is maintained by a transaction that is separate from the update transactions and is typically invoked when the view is queried. Deferred maintenance thus leads to comparatively slower querying than immediate maintenance, but allows faster updates.

(3) Snapshot Views [AL80, LHM+86]: The view is maintained periodically, say once a day or once a week, by an asynchronous process. Snapshot maintenance allows fast querying and updates, but queries can read data that is not up-to-date with base tables.
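To make the trade-off concrete, here is a minimal Python sketch of where each policy pays its maintenance cost. It is a hypothetical toy, not the paper's implementation: `ToyView`, `update` and `large` are illustrative names, each view reads a single base table, and a refresh simply recomputes the view instead of maintaining it incrementally.

```python
from typing import Callable, List

Table = List[int]


class ToyView:
    """Hypothetical toy view: a query over one base table plus a maintenance policy."""

    def __init__(self, base: Table, query: Callable[[Table], Table], policy: str) -> None:
        assert policy in ("immediate", "deferred", "snapshot")
        self.base = base
        self.query = query
        self.policy = policy
        self.materialized = query(base)  # a view is materialized when it is defined
        self.stale = False

    def refresh(self) -> None:
        # Full recomputation stands in for incremental maintenance in this sketch.
        self.materialized = self.query(self.base)
        self.stale = False

    def on_base_update(self) -> None:
        if self.policy == "immediate":
            self.refresh()  # cost is paid inside the update transaction
        else:
            self.stale = True  # deferred/snapshot: only remember that work is pending

    def read(self) -> Table:
        if self.policy == "deferred" and self.stale:
            self.refresh()  # cost is paid by the query
        return list(self.materialized)  # a snapshot view may return stale rows


def update(base: Table, views: List[ToyView], row: int) -> None:
    base.append(row)
    for v in views:
        v.on_base_update()


def large(rows: Table) -> Table:
    return [x for x in rows if x > 1000]


sales: Table = [500, 1500]
imm, dfr, snp = (ToyView(sales, large, p) for p in ("immediate", "deferred", "snapshot"))
update(sales, [imm, dfr, snp], 2000)
print(imm.materialized, dfr.materialized, snp.read())  # [1500, 2000] [1500] [1500]
print(dfr.read())  # [1500, 2000]: refreshed on demand
snp.refresh()      # e.g. triggered by the periodic refresh cycle
print(snp.read())  # [1500, 2000]
```

The immediate view absorbs the cost in `update`, the deferred view in its next `read`, and the snapshot view only when its refresh cycle fires, returning stale rows in between.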

ACM SIGMOD Conference, May 1997, pp. 405–416

Motivation: When trying to implement materialized views, one has to decide what maintenance policy to adopt. A system may require all three policies for different views. An application has to make a view immediate if it expects a very high query rate and/or has real-time response requirements. For example, in a cellular billing application, the balance due is a view on cellular call data, and can be used to block future calls. Clearly, this view must be immediately maintained. However, immediate maintenance is not scalable with respect to the number of views, so a system cannot define many immediate views. Deferred and snapshot maintenance are scalable with respect to the number of views, so it is often desirable to define most views as deferred or snapshot. If one can tolerate stale data, or needs a stable data set, then snapshot views are probably the best choice. If it is important that a view be up-to-date with base tables, but the query response time is not critical, then a deferred view is appropriate. The following example motivates the need for different view maintenance policies:

EXAMPLE 1.1 Consider a membership-based retailing company (such as Price Club, Sam's Club, or BJ's) with a database of sales and return transactions from several stores. Tables sales, customer, supplier, and supplies are maintained in the database. The sales table contains the detailed transaction data. The customer and supplier tables contain information about customers and suppliers, respectively. The supplies table contains information about items supplied to a store by each supplier. The following materialized views are built.

• CustReturns: Defined as the join between customer and sales transactions that are marked as returns. This table may be queried by stores when processing a return and needs to be current.
• TotalItemReturns: Total amount and number of returns for each item (aggregate over CustReturns). This view is used for decision support.
• LargeSales: Customers who have made single purchases of more than $1,000 (join between customer and sales). This view is used for decision support, marketing, and promotions.
• ItemStoreStock: For each item and store, the total number of items in stock in the store (join over aggregates over sales and supplies). This is used to trigger re-stocking decisions and is queried frequently.
• ItemSuppSales: Total sales for each (item, supplier) pair (aggregate over join of sales, supplies and supplier). The view is used for decision support.
• ItemProfits: Contains the total profits for each item category (aggregate over join of ItemSuppSales and supplies). This view is also used for decision support.

The views can be defined in SQL, but the definitions are not important for our purpose. Let us consider the desired maintenance policies for each view. CustReturns should provide up-to-date results since it is used for making return decisions. However, queries to this view are likely to be relatively infrequent, and the view could be maintained only when it is queried (deferred maintenance). TotalItemReturns and LargeSales are used for decision support and marketing, need a stable version of data, and can be maintained periodically (snapshot maintenance). ItemStoreStock is monitored frequently and is used to trigger re-stocking decisions. It thus needs to be maintained using an immediate maintenance policy.
ItemSuppSales and ItemProfits are used for decision support, and can be maintained periodically, say once a day.

The choice of a policy for each view is not as straightforward as the above example may indicate. If the total system throughput is of concern, converting an immediate view into a deferred view can sometimes help. For example, it may turn out that the immediate maintenance of ItemStoreStock is too expensive, and throughput can be made acceptable by doing deferred maintenance instead. Also, the maintenance policy of one view cannot be chosen independently of the policies of related views. For example, if two views have snapshot maintenance policies but with different refresh cycles and these views are used to derive a third view, this third view can reflect an inconsistent state of the world.

Most of the research on materialized views has focused on high-level incremental algorithms for updating materialized views efficiently when the base tables are updated [BC79, SI84, BLT86, QW91, CW91, GMS93, GL95, LMSS95]. Efficient data structures for supporting incremental view maintenance in the presence of multiple views were described in [KR87, SP89, Rou91]. However, global issues in supporting many views with different policies have not been explored in detail.

Summary of Contributions: This paper investigates a number of issues related to supporting multiple views with different maintenance policies. The maintenance policies that are chosen for views have implications for the validity of the results of queries and affect the performance of queries and updates. In designing an application based on views with different maintenance schemes, it is important that the designer be aware of the consequences of the maintenance policy selections on the correctness and performance of the application. To this end, we study both consistency and performance issues related to supporting many views with different maintenance policies.

We develop formal notions of consistency for views with immediate, deferred, and snapshot maintenance policies. In order to provide consistent information in a system allowing multiple views with different maintenance policies, we develop a model based on the notion of viewgroups. A viewgroup is a collection of views that are required to be mutually consistent. We show that certain combinations of the different maintenance policies and viewgroups cannot guarantee consistency or are otherwise not meaningful. We identify the legal combinations, and give an algorithm to maintain the views in a manner that guarantees consistency. The viewgroup model is also important in bounding the amount of computation resulting from any given transaction by confining the effects of the transaction to a portion of the database.

In order to understand the impact of different maintenance policies on the performance of queries and updates, we conduct a detailed performance analysis based on an actual implementation. The trade-offs between different maintenance policy assignments are studied by measuring refresh times, update times, query response times, total elapsed times and number of refreshes.
The experimental studygives a very good understanding of the costs of each mainte-nance policy, and shows that an application can gain perfor-mance advantages by using multiple maintenance policies.Paper Outline: We develop our materialized view modelfor supporting multiple views with di�erent maintenancepolicies in Section 2. The overall architecture and algo-rithms for supporting view maintenance in such a model isdescribed in Section 3. An overview of our implementationgoals and methodology is given in Section 4. Section 5 givesresults from performance experiments. Sections 6 and 7 de-scribe related work and conclusions, respectively.2 A Model for Supporting Materialized ViewsIn this section, we investigate the impact on the validity ofquery results of allowing views with di�erent maintenancepolicies. An important issue when de�ning multiple mainte-nance policies is consistency between di�erent materializedviews. We will de�ne consistency formally in Section 2.1.For now, let us informally review the consistency expecta-tions for each maintenance policy.Immediate views have to be consistent with the tablesthey are de�ned over, as they exist in the current state.Deferred views need not be consistent, but queries over de-ferred views have to be answered as if views are consistent,and consistency is typically achieved by making the deferred

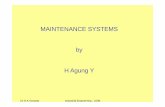

view consistent at query time. The process of re-establishingconsistency is called refresh. Snapshot views are required tobe consistent with the state of the deriving tables that ex-isted at the time of the last refresh. When we use views tode�ne other views, and have di�erent consistency require-ments for these, it is easy to get confused about what stateeach materialized view is meant to represent. Note that theconsistency discussion here is on the desired relationship be-tween states of materialized views and base tables in a cen-tral system with one user. A related problem of concurrencycontrol, to ensure that consistency is achieved in the pres-ence of concurrent transactions, is discussed in [KLM+97].Notions of consistency for views in distributed environmentshave been presented in [ZGHW95] and [HZ96].To identify related views that should be consistent witheach other, and to localize the maintenance activity withina small set of views, we place base and view tables into view-groups. A viewgroup consists of a set of tables, along withlogs holding the changes to the tables. A viewgroup shouldhave the property that there is some past (or current) statesj of base tables such that all views in the viewgroup are con-sistent with the state sj of the base tables. In other words,the result of evaluating a query over any materialized viewin a viewgroup must be the same as evaluating the equiv-alent query over base tables in state sj . Since this state iscommon to all views in the viewgroup, a query that involvesmultiple views in the viewgroup will return a consistent an-swer. 
In addition, viewgroups should be isolated in the sense that (1) maintenance of a view in a viewgroup should not trigger maintenance or updates in another viewgroup, and (2) it should be possible to answer a query without looking outside the queried viewgroup.

In the rest of this section, we formally define consistency requirements, specify the properties that we want views and viewgroups to satisfy, and develop a scheme for assigning maintenance policies to views, and for assigning views to viewgroups.

2.1 Notation and Consistency

A table that is not defined as a view is called a base table. A materialized view $V$ is a table that is defined through a query $Q$ over some set of tables $\{R_1, \ldots, R_k\}$, denoted as

$$V \stackrel{\text{def}}{=} Q(R_1, \ldots, R_k).$$

$R_1, \ldots, R_k$ are called the referenced (or parent) tables of $V$, and may be base tables or materialized view tables. A materialized view is labeled as immediate, deferred, or snapshot to indicate the maintenance policy. A virtual view is a view that is not materialized. For simplicity of presentation, we do not discuss virtual views in the rest of this paper.

Definition 2.1 (View-Dependency Graph): The dependency graph $G$ of a view $V$ is a graph with a node for each table referenced in the view definition, a node labeled $V$, and a directed edge from the node for each referenced table to the node for $V$. The dependency graph shows how the view is derived from base tables and/or other views. The view-dependency graph $G$ of a database schema is the union of the dependency graphs for all the views in the schema. The view-dependency graph shows how all the views in the schema are derived from each other and from base tables.

EXAMPLE 2.1 View-Dependency Graph: Figure 1 illustrates a view-dependency graph. The $B_i$ are base tables, and the $V_j$ are views. Ignore the dashed lines and their labels for now. $V_8$ is a view that may be defined with an SQL statement such as "CREATE VIEW V8 AS (SELECT * FROM B3, V7 WHERE Predicate)". $B_3$ and $V_7$ are the referenced tables. The edge from $V_7$ to $V_8$ means that $V_7$ is referenced in the definition of view $V_8$. We say that $V_7$ is a parent of $V_8$, and that $V_8$ is a child of $V_7$. In this formulation, ancestors of a view $V$ represent tables used to derive view $V$. Descendants of $V$ represent views derived from $V$.

States, Values, and Materializations: Informally, the value of a table $R$ is the bag of tuples that must be returned for the query "SELECT * FROM R". In other words, the value of a table is the observed result of querying the table. The materialization of a table $R$ is the bag of tuples that are actually stored in the database for the table.

A database state $s$ is a mapping from each (base and view) table $R$ in the database schema to the value of $R$, denoted $R(s)$, and the materialization of $R$, denoted $R^M(s)$:

$$s : R \mapsto (R(s), R^M(s)).$$

The current database state is denoted by $s_{curr}$. We assume that states are temporally ordered.

For base tables, the value $R(s)$ is equal to the bag of tuples stored for relation $R$ (i.e., the materialization $R^M(s)$). For immediate and snapshot views, the value $R(s)$ is required to be equal to the materialization $R^M(s)$. (For snapshot views, both $R(s)$ and $R^M(s)$ remain unchanged as $s$ varies, until $s$ reaches a state in which a new snapshot is taken.) For deferred views, the materialization and value can be different. For a deferred view $V \stackrel{\text{def}}{=} Q(R_1, \ldots, R_k)$, the value $V(s)$ is the collection of tuples that we want to be returned by the query "SELECT * FROM V". Since a deferred view is expected to be maintained at the time of querying, the value $V(s)$ of a deferred view can be formally defined as $V(s) = Q(R_1(s), \ldots, R_k(s))$, where $R_i(s)$ is the value of the referenced table $R_i$ in state $s$.
The materialization $V^M(s)$ can differ from the value $V(s)$ in most states, but a refresh is required to make the two equal at the time of querying.

We now define consistency between a view table, its parents, and the base tables.

Definition 2.2 (Reference and Base Consistency): Let $V \stackrel{\text{def}}{=} Q(R_1, \ldots, R_k)$ be a materialized view, with $R_1, \ldots, R_k$ as the referenced tables. Let $\{B_1, \ldots, B_m\}$ be the base tables from which $V$ is ultimately derived. In each current state $s_{curr}$, the view $V$ is said to be reference consistent with state $s_j$ if $V(s_{curr}) = Q(R_1(s_j), \ldots, R_k(s_j))$. Similarly, the materialization of view $V$ is said to be reference consistent with state $s_j$ ($s_j \le s_{curr}$) if $V^M(s_{curr}) = Q(R_1(s_j), \ldots, R_k(s_j))$.

Let $Q'$ be the query obtained from query $Q$ by substituting each referenced view by its defining query until all the referenced tables are base tables. In state $s_{curr}$, the view table $V$ is said to be base consistent with state $s_j$ if $V(s_{curr}) = Q'(B_1(s_j), \ldots, B_m(s_j))$. Similarly, the materialization of view $V$ is said to be base consistent with state $s_j$ ($s_j \le s_{curr}$) if $V^M(s_{curr}) = Q'(B_1(s_j), \ldots, B_m(s_j))$.

By definition, base tables are base and reference consistent with the current state.

From Definition 2.2 and the definition of value $V(s)$ for deferred views, it follows that a deferred view is always reference consistent with the current state. However, whether or not $V$ is base consistent with any state at all depends on whether or not its parents are base consistent.

[Figure 1: An example of a view-dependency graph. Base tables $B_1, \ldots, B_5$ and views $V_1, \ldots, V_{18}$, each view labeled I (immediate), D (deferred), or S (snapshot); dashed lines group the nodes into viewgroups $VG_{base}$ and $VG_1, \ldots, VG_5$.]

In any given state $s_{curr}$, there can be several database states with which a view is base consistent. For example, suppose that a view is defined as a (duplicate-preserving) projection over a base table. Now suppose that the view is base consistent with the current state and that an update is made to a tuple of the base table on a column that is not included in the view definition. The view will be base consistent with both the old and new states. However, there is a unique "intended" state, $s$, with which a view is base consistent. We will call this the effective state of the view (in state $s_{curr}$), denoted $\text{effective}(V, s_{curr})$. This is, essentially, the state of the base tables that was propagated into the view by the last refresh operation. In Section 3 we describe how this state is determined.

2.2 Views and Viewgroups

We now state the consistency requirements for views and characterize the different maintenance policies:

Properties of Materialized Views: Let $V \stackrel{\text{def}}{=} Q(R_1, \ldots, R_k)$ be a materialized view. Then, in any state $s_{curr}$, the view $V$ must satisfy the following properties:

P1 If $V$ is an immediate view, then $V$ is reference consistent with $s_{curr}$. Further, the materialization of $V$ is equal to the value of $V$ (i.e., $V^M(s_{curr}) = V(s_{curr})$).

P2 If $V$ is a deferred view, then $V$ is reference consistent with $s_{curr}$, and there exists a past state $s_j$ ($s_j \le s_{curr}$) such that the materialization of $V$ is reference consistent with state $s_j$. Further, immediately after any query, the materialization of $V$ must be equal to the value of $V$.

P3 If $V$ is a snapshot view, then there exists a past state $s_j$ ($s_j \le s_{curr}$) such that $V$ is reference consistent with state $s_j$. Further, the materialization of $V$ is equal to the value of $V$.

P4 There exist states $s_i$ and $s_j$ such that $s_i \le s_j \le s_{curr}$, $s_i$ is the effective state of $V$ (i.e., $s_i = \text{effective}(V, s_{curr})$), $V$ is base consistent with state $s_i$, and $V$ is reference consistent with state $s_j$.

P5 (a) There exist states $s_j, s_{j_1}, \ldots, s_{j_k}$ such that $s_j \le s_{j_1} \le s_{curr}, \ldots, s_j \le s_{j_k} \le s_{curr}$, the materialization of $V$ is reference consistent with state $s_j$, and the materializations of $R_1, \ldots, R_k$ are reference consistent with states $s_{j_1}, \ldots, s_{j_k}$, respectively. (b) For any two states $s_i < s_k$, $\text{effective}(V, s_i) \le \text{effective}(V, s_k)$.

The first three properties characterize the different view maintenance policies in terms of reference consistency. The fourth property says that the view must also be consistent with some previous state of the base tables (the effective state) from which the view is ultimately derived. The fifth property requires that the materialization of a view follow those of its referenced tables and that effective states can only progress forward. In other words, we cannot skip refreshing one view before refreshing its descendants, and a refresh cannot make a view more stale in a new state.

Viewgroup: A viewgroup $VG$ is a subset of nodes in the view-dependency graph that represents closely related views satisfying the following properties:

Properties of Viewgroups:

P6 The set of all viewgroups forms a partition over the set of nodes in the view-dependency graph. Recall that a partition over a set $S$ is a set of subsets $\{S_1, \ldots, S_p\}$ of $S$ such that (i) for each pair of sets $S_i$ and $S_j$, $S_i \cap S_j = \emptyset$ if $i \neq j$, (ii) each $S_i \neq \emptyset$, and (iii) $\bigcup_{i=1}^{p} S_i = S$.

P7 A query on a view in a viewgroup $VG$ can be answered without querying any other viewgroup.

P8 Maintenance of a view in a viewgroup $VG$ cannot be triggered by any operation in another viewgroup.

P9 (Viewgroup Consistency): In any state $s_{curr}$, for each viewgroup $VG$, there exist past database states $s_i$ and $s_j$ such that $s_i \le s_j \le s_{curr}$, all the views $V \in VG$ are reference consistent with state $s_j$ and are base consistent with state $s_i$, and $s_i = \text{effective}(V, s_{curr})$ for all $V \in VG$. The state $s_i$ is also called the effective state of $VG$ and is denoted as $\text{effective}(VG, s_{curr})$.

Properties P7 and P8 ensure that viewgroups identify modules within which we can do maintenance and querying without affecting other modules. The modularization is important for a system to support a large number of views. Property P9 is important to make sure that queries can access multiple tables within a viewgroup and expect to see data consistent with some state of the system.

2.3 Constraints on Maintenance Policies and Viewgroups

Satisfying properties P1-P9 for a schema means that we cannot arbitrarily choose maintenance policies and viewgroups for each view that we define. We list the design rules that constrain the assignment of maintenance policies and viewgroups. See [CKL+96] for a detailed explanation of the reasons for these design rules. The maintenance policy can be represented by a label (I for immediate, D for deferred, and S for snapshot) on each node of the view-dependency graph, and a viewgroup can be represented by a set of nodes from the view-dependency graph. The design rules can thus be described using the view-dependency graph.

Rule 1 Each view can be assigned to exactly one viewgroup.
Rule 2 Immediate and deferred views must belong to the same viewgroup as their parents.
Rule 3 All snapshot views in a viewgroup must have the same refresh cycle.
Rule 4 Snapshot views cannot occur in the same viewgroup as base tables.
Rule 5 A viewgroup can be derived from at most one other viewgroup.
Rule 6 Deferred views cannot have children in other viewgroups.

In addition to the above design rules required to satisfy properties P1-P9, certain assignments of maintenance policies are semantically equivalent to more easily understood assignments.
We choose to disallow such assignments.

Rule 7 An immediate view cannot have a deferred view or a snapshot view as a parent.
Rule 8 A snapshot view cannot have a deferred view as a parent.
Rule 9 All base tables must be placed in a single viewgroup. (We will call this viewgroup $VG_{base}$.)

From Rules 1-9 we can further infer that (1) all immediate views must be placed in the base viewgroup $VG_{base}$, and (2) the viewgroup graph is a tree rooted at $VG_{base}$. The viewgroup graph has a node for each viewgroup, and an edge from viewgroup $VG_1$ to $VG_2$ if there is an edge in the view-dependency graph from a view in viewgroup $VG_1$ to a view in viewgroup $VG_2$.

Figure 1 shows an example of a legal assignment of views to viewgroups and maintenance policies. The dashed lines represent viewgroups. The labels I, D and S denote immediate, deferred and snapshot views, respectively.
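Because every rule above is stated on the labeled view-dependency graph, most of them can be checked mechanically, as the system does when a view is declared (Section 2.4). The Python sketch below is a hypothetical checker, not the paper's code. It covers Rules 2, 4, 5, 6, 7, 8 and 9. Rule 1 holds by construction because each node records exactly one viewgroup. Rule 3 is omitted because checking it would require refresh-cycle data. The names `Node`, `check_rules`, and the viewgroup labels are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class Node:
    kind: str   # "B" for a base table, or the view label "I", "D" or "S"
    group: str  # the single viewgroup this node belongs to (Rule 1)


def check_rules(nodes: Dict[str, Node], edges: List[Tuple[str, str]]) -> List[str]:
    """Return a message for every violation of Rules 2 and 4-9 (Rule 3 is not checked)."""
    errors: List[str] = []
    for name, n in nodes.items():
        if n.kind == "B" and n.group != "VG_base":
            errors.append(f"Rule 9: base table {name} is not in VG_base")
        if n.kind == "S" and n.group == "VG_base":
            errors.append(f"Rule 4: snapshot view {name} shares VG_base with base tables")

    source_groups: Dict[str, Set[str]] = {}
    for parent, child in edges:  # each edge runs from a referenced table to a view
        p, c = nodes[parent], nodes[child]
        if c.kind in ("I", "D") and c.group != p.group:
            errors.append(f"Rule 2: {child} is not in the viewgroup of its parent {parent}")
        if p.kind == "D" and c.group != p.group:
            errors.append(f"Rule 6: deferred view {parent} has child {child} in another viewgroup")
        if c.kind == "I" and p.kind in ("D", "S"):
            errors.append(f"Rule 7: immediate view {child} has parent {parent} labeled {p.kind}")
        if c.kind == "S" and p.kind == "D":
            errors.append(f"Rule 8: snapshot view {child} has deferred parent {parent}")
        if c.group != p.group:
            source_groups.setdefault(c.group, set()).add(p.group)

    for group, sources in sorted(source_groups.items()):
        if len(sources) > 1:
            errors.append(f"Rule 5: {group} is derived from {sorted(sources)}")
    return errors


# A slice of Example 1.1, with CustReturns declared deferred.
nodes = {
    "customer": Node("B", "VG_base"),
    "sales": Node("B", "VG_base"),
    "CustReturns": Node("D", "VG_base"),
    "TotalItemReturns": Node("S", "VG_decision"),
}
edges = [("customer", "CustReturns"), ("sales", "CustReturns"),
         ("CustReturns", "TotalItemReturns")]
for message in check_rules(nodes, edges):
    print(message)
```

On this slice the checker reports a Rule 8 violation for the CustReturns to TotalItemReturns edge. It also reports a Rule 6 violation, because Rule 2 places CustReturns in VG_base while Rule 4 keeps the snapshot view TotalItemReturns out of it. Declaring CustReturns immediate in VG_base, or making it a snapshot view in VG_decision, yields an empty list.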

EXAMPLE 2.2 Consider the views of Example 1.1. The maintenance policy assignments of all views except one satisfy Rules 1-9. However, having CustReturns as a deferred view violates Rule 8, since a snapshot view TotalItemReturns is defined over CustReturns. We have three options for getting a legal assignment: (1) make the view CustReturns an immediate view (if we want it to always contain current data), or (2) make CustReturns a snapshot view (if we can tolerate old data), or (3) redefine TotalItemReturns as an aggregate over the join of customer and sales.

2.4 Declaring Views and Viewgroups

A user creates a viewgroup with a Data Definition Language (DDL) statement indicating the name of the viewgroup and its refresh frequency. The base viewgroup is built in, and all base tables are automatically placed in the base viewgroup. Each materialized view is declared with its maintenance policy. Each snapshot view must be assigned to a viewgroup by the user; all views in a viewgroup are refreshed together. An immediate or deferred view need not be explicitly assigned to a viewgroup: it is assigned to the viewgroup of its parent tables automatically by the system (Rule 2). The system checks the view definition, maintenance policy assignment, and the viewgroup assignment to ensure that Rules 1-9 are satisfied. If not, the view definition is rejected. Finally, each view is materialized upon creation.

3 Maintaining Views and Viewgroups

So far, we have introduced the concept of a viewgroup, and described a model for ensuring the consistency of multiple materialized views. In this section, we describe in more detail how view maintenance takes place in our implementation, and how the "effective state" of a viewgroup is chosen.

3.1 View Maintenance Architecture

View maintenance requires additional work to be done in response to updates.
When a table has an immediate view defined on it, the extra work that has to be done in response to an update on the table is refreshing the immediate view. If a table has a deferred or a snapshot view, the extra work is recording the updates to the table in logs.

We maintain a special log per table for the explicit purpose of view maintenance. The log data structures and the algorithms used to interface with the logs are realized in the Ode database system. Section 4 describes our storage model and implementation.

The function that takes an update transaction and executes the transaction as well as the required additional work is called makesafe, as in [CGL+96], and the function that changes the view is called refresh. Figure 2 illustrates our implementation architecture.

3.2 Algorithms for Maintaining Consistency

There are three types of events that can cause changes to the contents of a view: (a) updates to a base table, (b) a query involving a deferred view, and (c) explicit or periodic refresh of a viewgroup containing snapshot views. (Additions and deletions of views are not discussed here; note, however, that a view is materialized when it is defined.) We summarize the actions to be taken on each of these events.

[Figure 2 diagram: makesafe and refresh operations connecting tables, logs, and immediate, deferred, and snapshot views; the triggering events shown are an update to a base or view table, a query on a deferred view, and an explicit or periodic refresh of a snapshot view.]

Figure 2: View maintenance architecture

An update to a table will result in a call to makesafe (Algorithm 3.1) which, in addition to the table update, updates logs (if the table has any deferred or snapshot views defined on it), and refreshes any immediate views defined on the table.

Algorithm 3.1 (makesafe)
Input: Update command U.
Result: Consistent database after execution of U.
Method:
  if U is the update of base tables
    for each table T to be updated do
      update T;
      update logs if T has deferred or snapshot children;
    endfor
    B ← updated base tables;
    done ← false;
    𝒟 ← views in VG_base that are descendants of B;
    while done is false do
      D ← some immediate view in 𝒟 whose parents are not in 𝒟;
      if no such D
        done ← true;
      else refresh(D); remove D from 𝒟;
    endwhile
  else /* U is the update of a view V */
    update V;
    update logs if V has deferred or snapshot children;

Algorithm 3.2 (refresh)
Input: View V to be refreshed.
Result: V is refreshed and its logs are updated.
Method:
  compute incremental changes to V;
  generate update command U to update V;
  makesafe(U);

The refresh-group operation (Algorithm 3.3) is called on a (non-base) viewgroup in response to an explicit request by the user to refresh the viewgroup, or due to an asynchronous refresh of the viewgroup at the assigned refresh interval. The result of the operation is a refresh of all snapshot views in the viewgroup. A refresh-group operation proceeds in a breadth-first manner where a view is refreshed only after all of its parent views (that need to be refreshed) are refreshed.

Algorithm 3.3 (refresh-group)
Input: Viewgroup VG to be refreshed.
Result: All snapshot views in the group are refreshed.
Method:
  B ← views in parent viewgroup from which views in VG are derived;
  done ← false;
  𝒟 ← views in VG that are descendants of B;
  while done is false do
    D ← some snapshot view in 𝒟 whose parents are not in 𝒟;
    if no such D
      done ← true;
    else refresh(D); remove D from 𝒟;
  endwhile

A query on a deferred view will result in a call to the procedure refresh-deferred-view (Algorithm 3.4). The algorithm first checks if the view needs to be refreshed, to prevent multiple deferred views with a common deferred parent from causing multiple refreshes of the parent, as well as to avoid refreshing a view for which the underlying data has not changed. The test to determine if a view needs to be refreshed is described in Section 4. If the view needs to be refreshed, the procedure refresh-deferred-view recursively calls itself on each deferred parent view, and then refreshes the view.

Algorithm 3.4 (refresh-deferred-view)
Input: Deferred view V.
Result: Recursive refresh of the subtree of deferred views from which V is derived.
Method:
  if V needs to be refreshed
    for each parent A of V do
      if view-type of A is deferred
        refresh-deferred-view(A);
    endfor
    refresh(V);

3.3 Effective State and Correctness

In Section 2.2, we presented various consistency requirements for views and viewgroups. There can be several database states with which a view is base consistent. However, there must exist some unique "intended" state with which a view or viewgroup is base consistent. This state is called the effective state, and it must move forward in time.
The effective state is determined as follows.

1. The effective state of $VG_{base}$ is $s_{curr}$.
2. The effective state of any other viewgroup $VG$ is the effective state of its parent viewgroup $VG'$ in state $s'$, i.e., $\text{effective}(VG, s_{curr}) = \text{effective}(VG', s')$, where $s'$ is the state in which the last refresh-group of $VG$ was performed.
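The refresh procedures of Section 3.2 and the two effective-state rules above can be exercised together in a small in-memory model. The Python sketch below is hypothetical, and its simplifications are assumptions. `ViewSystem` and its methods are illustrative renderings of makesafe, refresh-group and refresh-deferred-view. A refresh recomputes a view from its parents' materializations rather than applying logged changes. States are integer counters advanced by each base update. The "needs refresh" test is an assumed version-counter check standing in for the test described in Section 4. The sketch also assumes that all views of a snapshot viewgroup are defined before any base update occurs.

```python
from typing import Callable, Dict, List, Optional

Rows = List[int]


class ViewSystem:
    """Hypothetical in-memory model of Algorithms 3.1-3.4 and the effective-state rules."""

    def __init__(self) -> None:
        self.now = 0                               # current state s_curr (counts base updates)
        self.data: Dict[str, Rows] = {}            # materializations
        self.version: Dict[str, int] = {}          # bumped whenever a materialization changes
        self.parents: Dict[str, List[str]] = {}
        self.query: Dict[str, Callable[..., Rows]] = {}
        self.policy: Dict[str, str] = {}           # "B", "I", "D" or "S"
        self.group: Dict[str, str] = {}
        self.seen: Dict[str, Dict[str, int]] = {}  # parent versions used by the last refresh
        self.group_parent: Dict[str, Optional[str]] = {"VG_base": None}
        self.group_effective: Dict[str, int] = {}
        self.order: List[str] = []                 # definition order: parents before children

    def add_base(self, name: str, rows: Rows) -> None:
        self.data[name], self.version[name] = list(rows), 0
        self.parents[name], self.policy[name], self.group[name] = [], "B", "VG_base"
        self.order.append(name)

    def add_view(self, name: str, parents: List[str], query: Callable[..., Rows],
                 policy: str, group: str = "VG_base") -> None:
        self.parents[name], self.query[name] = parents, query
        self.policy[name], self.group[name], self.version[name] = policy, group, 0
        if group not in self.group_parent:         # first view of a new snapshot viewgroup
            parent_group = self.group[parents[0]]
            self.group_parent[group] = parent_group
            self.group_effective[group] = self.effective(parent_group)
        self.order.append(name)
        self.refresh(name)                         # views are materialized when defined

    def effective(self, group: str) -> int:
        if group == "VG_base":
            return self.now                        # rule 1: effective(VG_base) = s_curr
        return self.group_effective[group]         # rule 2: fixed by the last refresh-group

    def refresh(self, v: str) -> None:
        self.data[v] = self.query[v](*(self.data[p] for p in self.parents[v]))
        self.version[v] += 1
        self.seen[v] = {p: self.version[p] for p in self.parents[v]}

    def stale(self, v: str) -> bool:
        return any(self.version[p] != self.seen[v][p] for p in self.parents[v])

    def makesafe(self, table: str, new_rows: Rows) -> None:  # Algorithm 3.1, base update
        self.data[table] = self.data[table] + new_rows
        self.version[table] += 1
        self.now += 1
        for v in self.order:                       # refresh immediate descendants in order
            if self.policy[v] == "I" and self.stale(v):
                self.refresh(v)

    def refresh_group(self, group: str) -> None:               # Algorithm 3.3
        for v in self.order:
            if self.group[v] == group and self.policy[v] == "S" and self.stale(v):
                self.refresh(v)
        parent_group = self.group_parent[group]
        assert parent_group is not None            # VG_base itself is never refresh-grouped
        self.group_effective[group] = self.effective(parent_group)

    def needs_refresh(self, v: str) -> bool:       # assumed test (see the lead-in)
        return self.stale(v) or any(
            self.policy[p] == "D" and self.needs_refresh(p) for p in self.parents[v])

    def refresh_deferred_view(self, v: str) -> None:          # Algorithm 3.4
        if self.needs_refresh(v):
            for p in self.parents[v]:
                if self.policy[p] == "D":
                    self.refresh_deferred_view(p)
            self.refresh(v)

    def read(self, v: str) -> Rows:
        if self.policy[v] == "D":
            self.refresh_deferred_view(v)          # a query on a deferred view triggers refresh
        return list(self.data[v])


vs = ViewSystem()
vs.add_base("sales", [1500])
vs.add_view("Big", ["sales"], lambda s: [x for x in s if x > 1000], "I")
vs.add_view("Returns", ["sales"], lambda s: [x for x in s if x < 0], "D")
vs.add_view("Total", ["Big"], lambda b: [sum(b)], "S", group="VG1")
vs.makesafe("sales", [2000, -300])                          # moves to state 1
print(vs.read("Big"), vs.read("Returns"), vs.read("Total"))  # [1500, 2000] [-300] [1500]
print(vs.effective("VG1"), vs.effective("VG_base"))          # 0 1
vs.refresh_group("VG1")
print(vs.read("Total"), vs.effective("VG1"))                 # [3500] 1
```

Iterating over `order` (definition order) plays the role of the "parents not in 𝒟" condition in Algorithms 3.1 and 3.3, since a view can only be defined after the tables it references. The snapshot view keeps returning the state-0 total until refresh-group propagates the base viewgroup's effective state into VG1.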

In other words, the effective state of a viewgroup is the current database state if the viewgroup is the base viewgroup. Otherwise, it is the effective state of the parent group that was "propagated" into the viewgroup by the most recent refresh-group operation.

We are now ready to state the following (a formal proof will be given in the full paper):

Consider a system permitting materialized views and viewgroups to be defined according to the design rules of Section 2.3 and using the maintenance functions described in Section 3.2. Let the effective state be determined as above. Then,
• A non-base viewgroup is reference consistent with the state in which it was last refreshed.
• Properties P1-P9 are satisfied.

4 Implementation Goals and Overview

The focus of the previous two sections was on the modeling aspects of view maintenance. The viewgroup model is important not only for defining and achieving consistency of queries but also for containing the amount of computation that is done by any given transaction. The focus of the next section is on the performance aspects of view maintenance. In this section, we give an overview of the implementation on which the experimental study was based.

When implementing our view maintenance and viewgroup algorithms, we had a number of goals in mind. We wanted to be able to answer the following questions:

Feasibility: Is it feasible to support multiple maintenance policies in a single system?
Scalability: Can multiple views be materialized and maintained in a scalable manner?
Performance: Can an application gain a performance advantage by using multiple maintenance policies?
Trade-offs: What are the performance trade-offs between different maintenance policies, and between incremental maintenance and recomputation itself?
Cost: How expensive is each maintenance policy for multiple views?

In this section, we highlight some of the important aspects of the implementation. We chose the Ode database system [AG89] as the implementation vehicle for materialized views.
This choice was made since we have expertise with, and access to the source code for, Ode, allowing us to experiment with special data structures for storing logs. Ode is an object-oriented database; its data manipulation language is O++, a variant of C++ with persistence and transactions. It also offers relational features through the SWORD interface [MRS93]. The ideas used in the implementation are equally applicable to commercial relational systems (e.g., Oracle, Sybase).

Tables: A table (base or materialized view) is realized by a collection class in Ode. A collection instantiation returns a descriptor (or handle) that is used to reference a materialization of tuples. A tuple is an Ode object. The materialization has a cluster of active tuples, representing the current state of the table, and a cluster of inactive tuples that can be used to compute the pre-update state of the table. The insert() function creates a new tuple with the given values and inserts it into the active cluster. The remove() function removes a tuple from the active cluster and places it in the inactive cluster. The inactive tuple must stay in the inactive cluster until the effect of its removal has been propagated to all views defined on the table, after which it can be garbage collected. The replace() function updates an existing tuple and stores the pre-update values in a newly created inactive tuple. Index structures can be built on the tuples in the active cluster.

Log Data Structure: A log is maintained for each table that has a deferred or snapshot view defined over it. The log is physically independent of the table materialization, and there is only one log for each table regardless of the number of views derived from it. The problem of maintaining a single log for a table that has multiple views (with different refresh times) defined on it was also considered in [SP89], [KR87], and [CG96]. Our log structures are based on the general principles presented in those papers.

We create one log entry per update operation. Each log entry has an operation flag and the oid¹ of the tuple in the materialization to which the operation was applied. For replace(), the log entry contains a second oid pointing to the pre-update value of the replaced tuple in the inactive cluster. We also have a separate table containing a collection of tuples, each of which represents an edge of the view dependency graph. These edge tuples are added when a new (materialized) view is defined in the system, and are modified every time a view is maintained. An edge tuple for a pair (B, V) contains a pointer to the last log entry for B that was used to maintain V (if V is a deferred or snapshot view).

Computing Incremental Changes: The implemented incremental maintenance algorithm is based on the counting algorithm of [BLT86, GMS93]. The algorithm is slightly enhanced to incorporate replace operations.
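The counting approach can be illustrated with a minimal sketch for a single two-table join view. It is not the Ode implementation: tables are modeled as Python Counters that map tuples to signed counts (negative counts in a delta stand for deletions), the view is the projection of R ⋈ S onto (a, b), and the helper names join_project, apply_delta, and view_delta are hypothetical. A replace is treated here in the simplest way, as a deletion of the old value plus an insertion of the new one. The delta rule reads the post-update state of R and the pre-update state of S, which illustrates why access to both states is useful.

```python
from collections import Counter

# Tables are signed multisets: tuple -> count (negative counts encode deletions in a delta).
# R has tuples (a, k), S has tuples (k, b); the view V holds (a, b) for R join S on k.


def join_project(r: Counter, s: Counter) -> Counter:
    """Join r(a, k) with s(k, b) on k and project to (a, b), multiplying counts."""
    out: Counter = Counter()
    for (a, k1), cr in r.items():
        for (k2, b), cs in s.items():
            if k1 == k2:
                out[(a, b)] += cr * cs
    return out


def apply_delta(table: Counter, delta: Counter) -> Counter:
    """Add a signed delta to a table, dropping tuples whose count reaches zero."""
    result = Counter(table)
    for t, c in delta.items():
        result[t] += c
    return Counter({t: c for t, c in result.items() if c != 0})


def view_delta(r_old: Counter, s_old: Counter, d_r: Counter, d_s: Counter) -> Counter:
    """Counting rule for V = R join S: dV = dR join S_old + R_new join dS."""
    r_new = apply_delta(r_old, d_r)  # post-update state of R is needed here
    d_v = join_project(d_r, s_old)   # pre-update state of S is needed here
    for t, c in join_project(r_new, d_s).items():
        d_v[t] += c
    return Counter({t: c for t, c in d_v.items() if c != 0})


r_old = Counter({(1, "x"): 1, (2, "y"): 1})
s_old = Counter({("x", 10): 1, ("y", 20): 1})
v_old = join_project(r_old, s_old)
d_r = Counter({(3, "x"): 1, (2, "y"): -1})  # insert (3, x), delete (2, y)
d_s = Counter({("x", 11): 1})               # insert (x, 11)
v_new = apply_delta(v_old, view_delta(r_old, s_old, d_r, d_s))
print(sorted(v_new.items()))  # [((1, 10), 1), ((1, 11), 1), ((3, 10), 1), ((3, 11), 1)]
```

The nested-loop join keeps the sketch short; the experiments below rely on B+tree indices on the join attributes instead.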
To use the counting algorithm efficiently, we found it important to be able to access both the pre- and post-update states of the tables [CM96, HZ96], and to avoid redundant computations caused by deletions when maintaining a join of two or more deriving tables. The net base table changes are determined by iterating through the portion of the log containing the relevant changes and collapsing and/or eliminating redundant changes (such as pairs of inserts and deletes).

The makesafe operation (Algorithm 3.1) traverses a view dependency graph to refresh all immediate views in a topological order. Since the cost of computing the topological order is non-negligible (about one second per refresh operation in the preliminary experiments), we pre-compute the topological order whenever an immediate view is added to (deleted from) the system, and store it in the descriptor of the base table. This way, makesafe refreshes immediate views by simply following the pre-computed order.

Quick Maintenance Check: The deferred view maintenance policy requires checking whether a view needs to be refreshed before each query on the view. However, the cost of testing whether a view needs to be refreshed is non-negligible. To minimize this cost, we designed a quick maintenance check for deferred views with a simple time-stamp technique. In each viewgroup, each deferred view is the root of a subtree that contains only other deferred views as internal nodes and snapshot views, immediate views, or base tables as leaves (with the edges in the reverse of their direction in the view dependency graph). For each deferred view, we store pointers to the leaves of its deferred view subtree. The makesafe algorithm is extended to place a time-stamp on the table being updated. In refresh-deferred-view (Algorithm 3.4), the deferred view's time-stamp and the time-stamps of the leaf tables (described above) are compared to detect whether any of the leaves has a more recent time-stamp than the view.

¹ A ROWID would be used instead of an oid in an implementation on a relational system.

5 Performance Study

This section describes an experimental performance study on top of the disk-based Ode<EOS> database system. The experiments compare the performance of the different maintenance policies in the presence of multiple materialized views. We first investigate the performance of the incremental refresh algorithm against full recomputation. We then compare the behavior of the different maintenance policies in response to different transaction mixes. Lastly, we verify that the implementation supports multiple views with different maintenance policies in a scalable manner.

All experiments were run in single-user mode on a Sun UltraSparc machine with 64 MB of RAM running Solaris 2.5. The database was kept on a locally attached 4.2 GB SPARC storage UniPack disk drive to eliminate NFS delays.

5.1 Experimental Setup

We build two databases containing base tables and materialized views, run 1,000 transactions against each database, and gather various statistics (e.g., total elapsed time, average query response time, average update time) for the set of 1,000 transactions.

Databases: Figure 3 illustrates the base tables and views in one of the two experimental databases. The schema has four base tables and seven views. Tuples in the base tables are 300 bytes long; view tuples are 50 bytes long.
Two of the materialized views (P-view1 and P-view2) are Projection views, three (SPJ-view1, SPJ-view2, and SPJ-view3) are Select-Project-Join views over the base tables, and the last two (SPJ-view12 and SPJ-view23) are SPJ views over the view tables, as illustrated in the figure.
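Because makesafe follows a topological order that is pre-computed and stored with each base table (Section 4), that order can be sketched for a schema shaped like Figure 3. This is an illustrative sketch, not the Ode code: the dependency edges below are an assumption inferred from the view names (the figure's edges are not reproduced in this text), P-view1 and P-view2 are omitted, and makesafe_order is a hypothetical helper built on Python's standard graphlib.TopologicalSorter (Python 3.9 or later).

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set

# Hypothetical dependency graph: view -> tables/views it is defined over.
# Edges are guessed from the view names in Figure 3; P-view1 and P-view2 are omitted.
PARENTS: Dict[str, Set[str]] = {
    "SPJ-view1": {"base1", "base2"},
    "SPJ-view2": {"base2", "base3"},
    "SPJ-view3": {"base3", "base4"},
    "SPJ-view12": {"SPJ-view1", "SPJ-view2"},
    "SPJ-view23": {"SPJ-view2", "SPJ-view3"},
}
BASES = ("base1", "base2", "base3", "base4")


def makesafe_order(parents: Dict[str, Set[str]], immediate: Set[str], base: str) -> List[str]:
    """Immediate views affected by an update to `base`, parents before children."""
    children: Dict[str, Set[str]] = {}
    for view, deps in parents.items():
        for dep in deps:
            children.setdefault(dep, set()).add(view)
    affected: Set[str] = set()
    stack = [base]
    while stack:  # collect every view reachable from `base`
        node = stack.pop()
        for child in children.get(node, set()):
            if child not in affected:
                affected.add(child)
                stack.append(child)
    # static_order() emits each node only after all of its predecessors (parents).
    return [v for v in TopologicalSorter(parents).static_order()
            if v in affected and v in immediate]


# Computed once per base table whenever an immediate view is added or dropped;
# makesafe then just walks the stored list for the updated table.
ALL_IMMEDIATE = set(PARENTS)
COMBINED = {"SPJ-view1", "SPJ-view2", "SPJ-view3"}  # second-layer views deferred
ORDER = {b: makesafe_order(PARENTS, ALL_IMMEDIATE, b) for b in BASES}
COMBINED_ORDER = {b: makesafe_order(PARENTS, COMBINED, b) for b in BASES}
print(ORDER["base2"], COMBINED_ORDER["base2"])
```

Under these assumed edges, with every view immediate, an update to base2 must refresh SPJ-view1 and SPJ-view2 before SPJ-view12, and SPJ-view2 before SPJ-view23; when the second-layer views are deferred (the Combined assignment of Section 5.3), only SPJ-view1 and SPJ-view2 remain in the list.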

Figure 3: Experimental database schema

Before each experiment, each base table is initialized with 100,000 tuples of uniformly distributed, randomly generated data, and each view is materialized. (Experiments with 1,000,000 tuples per base table produced similar results.) For each base table, join column values are randomly chosen from the domain (0, 100,000). Thus, each SPJ view is roughly the size of a base table (i.e., about 100,000 tuples). B+tree indices are built on the join attributes of all base tables and views. These indices improve both query and (incremental) view maintenance performance.²

Transactions: A program produces a stream of transactions, each of which either queries or updates the database. A query transaction contains only display operations on a randomly chosen view, while an update transaction contains insert, remove, or replace operations on a randomly chosen base table. The replace operation updates non-indexed fields of the chosen base table. The update ratio of the transaction stream is the number of update transactions divided by the total number of transactions in the stream. For instance, if the stream contains 750 updates and 250 queries, then the update ratio is 0.75.

Each transaction contains between one and eight operations over the same table. Thus, a query transaction reads between one and eight tuples matching randomly chosen values from a single view table. We ran experiments with nine different update ratios (0, 0.05, 0.1, 0.25, 0.5, 0.75, 0.9, 0.95, 1), and measured the total response time, the average query/update response time, and other statistics.

5.2 Performance of Incremental Maintenance

The first set of experiments investigates the basic performance aspects of the incremental maintenance algorithm.

Purpose of experiment: It is well known that incremental refresh outperforms full refresh if the size of the incremental change set (delta) is relatively small; however, what counts as "small" depends on the implementation logic of refresh, the nature of the updates, and the complexity of the query definitions.
Here, we wish to investigate the performance of incremental refresh versus full recomputation for an SPJ view under different types of updates (insertions, deletions, or replacements).

Method of experiment: We use only a subset of the schema of Figure 3 (base1, base2, and SPJ-view1) for this experiment, and execute only update transactions (i.e., update ratio = 1.0). Both base tables are updated uniformly, though we choose several different types of update transaction sets, such as insert only, remove only, replace only, and a mixture of these operations. We also execute a transaction set with skewed access that localizes 80% of the updates to 20% of the base tuples.

We define the delta ratio as the fraction of base tuples that are updated (the number of updated tuples divided by the total number of tuples in the base1 and base2 tables). We measure the time taken to maintain the view at different values of the delta ratio using (1) incremental maintenance (labeled Inc in Figure 4), and (2) full recomputation (labeled Full in Figure 4). A full recomputation re-materializes the view by discarding the old contents, recomputing the view tuples, and rebuilding indices on them.

² An index on a view degrades the performance of full recomputation, since the old index contents are thrown away and a new index must be built.
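As a worked instance of the delta-ratio definition, the crossover points reported for Figure 4(a) correspond to the following ratios, taking 100,000 tuples in each of base1 and base2 (the initial table size given in Section 5.1):

```latex
\[
\text{delta ratio} = \frac{\text{number of updated tuples}}{|\mathit{base1}| + |\mathit{base2}|}
\]
\[
\frac{46{,}000}{200{,}000} = 0.23 \ (\text{inserts}), \qquad
\frac{30{,}000}{200{,}000} = 0.15 \ (\text{removes}), \qquad
\frac{14{,}000}{200{,}000} = 0.07 \ (\text{replaces}).
\]
```

Because both base tables are updated uniformly, each ratio also equals the per-table fraction quoted in the analysis (for example, 23,000 insertions into each 100,000-tuple table).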

[Figure 4 plots, both panels: Maintenance Time (sec.), 0 to 2000, against Delta Ratio, 0.05 to 0.3. Panel (a) series: Inc1 and Full1 (100% insert), Inc2 and Full2 (100% remove), Inc3 and Full3 (100% replace). Panel (b) series: Inc (25% ins, 25% rem, 50% rep), SkInc (80-20 skewed access of Inc), and Full (25% ins, 25% rem, 50% rep).]

(a) Incremental maintenance vs. full recomputation; (b) Effect of skew on incremental maintenance
Figure 4: Incremental and full refresh response time at various delta rates

Analysis: Graph (a) in Figure 4 shows the performance of incremental maintenance for join views:
1. When only inserts into the base tables occur (Inc1 and Full1 in the graph), incremental refresh is superior to full refresh until each base table has become about 23% larger (i.e., after 46,000 insertions total).
2. When only removes from the base tables occur (Inc2 and Full2 in the graph), incremental refresh remains better until about 15% of the tuples are removed from each base table (i.e., 30,000 deletions total).
3. When only replaces to the base tables occur (Inc3 and Full3 in the graph), incremental refresh performs better only up to around 7% replacement in each base table (i.e., 14,000 tuple replacements total).

Graph (b) in Figure 4 illustrates the benefit of skewed updates on the performance of incremental maintenance. The line labeled Inc gives the incremental maintenance time for a transaction stream with 25% inserts, 25% removes, and 50% replaces uniformly distributed across the data set. The line labeled SkInc gives the incremental maintenance time for a transaction stream with a similar mix of operations, but with a skew based on the 80-20 rule: 80% of the transactions update 20% of the data. The third line, labeled Full, gives the full recomputation time, which is the same for both uniform and skewed updates. We see that incremental maintenance performs much better when the updates are skewed, outperforming full recomputation until 24% of the data has been updated. When updates are skewed, the same base tuples may be updated several times, and log trimming collapses these several updates into a single net update.
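The log trimming mentioned above can be sketched as one pass over a log segment that keeps, for each tuple, its value before the segment and its value after it. The entry format and the name trim_log are hypothetical and differ from Ode's log layout (which records an operation flag and oids rather than values); the sketch only shows how repeated updates to a hot tuple reduce to one net insert, remove, or replace, and how an insert followed by a remove disappears entirely.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical log entry: (op, oid, old_value, new_value), op in {"insert", "remove", "replace"}.
Entry = Tuple[str, int, Optional[tuple], Optional[tuple]]
NetChange = Tuple[str, Optional[tuple], Optional[tuple]]


def trim_log(entries: List[Entry]) -> Dict[int, NetChange]:
    """Collapse a log segment into at most one net change per tuple (oid)."""
    before: Dict[int, Optional[tuple]] = {}  # value before the segment (None = absent)
    after: Dict[int, Optional[tuple]] = {}   # value after the segment (None = absent)
    for op, oid, old, new in entries:
        before.setdefault(oid, None if op == "insert" else old)  # keep the first pre-image
        after[oid] = None if op == "remove" else new              # keep the last post-image
    net: Dict[int, NetChange] = {}
    for oid, old in before.items():
        new = after[oid]
        if old == new:
            continue  # e.g. insert then remove: no net effect on any view
        if old is None:
            net[oid] = ("insert", None, new)
        elif new is None:
            net[oid] = ("remove", old, None)
        else:
            net[oid] = ("replace", old, new)
    return net


log = [
    ("replace", 7, ("a", 1), ("a", 2)),  # a skewed workload touches tuple 7 three times
    ("replace", 7, ("a", 2), ("a", 3)),
    ("replace", 7, ("a", 3), ("a", 4)),
    ("insert", 8, None, ("b", 1)),
    ("remove", 8, ("b", 1), None),
    ("remove", 9, ("c", 5), None),
]
print(trim_log(log))  # six entries collapse to two net changes (oids 7 and 9)
```

The more often a skewed workload revisits the same tuples between refreshes, the larger the fraction of log entries that this kind of collapsing removes.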
As a result, incremental maintenance works with a smaller number of net changes and is more efficient than when updates are uniformly distributed.

5.3 Comparison of Maintenance Policies

In this subsection, we compare the performance of different maintenance policies in the presence of multiple views.

Purpose of experiment: We wish to see the performance characteristics of each view refresh policy under a variety of update and query ratios. We expect to demonstrate that deferred maintenance leads to faster update times and slower query times than immediate maintenance. We would also like to determine which policy leads to a better overall elapsed time.

Method of experiment: We experiment with the following assignments of maintenance policies: (1) Immediate; (2) Deferred; (3) Snapshot(no refresh), meaning that all views are snapshots but are not refreshed during the experiment; (4) Snapshot(75 Tran), Snapshot(150 Tran), and Snapshot(300 Tran), meaning that all views are snapshots and are refreshed after every 75th, 150th, and 300th transaction, respectively;³ and (5) Combined, meaning that the views SPJ-view12 and SPJ-view23 use the deferred maintenance policy, while all other (first-layer) views use the immediate maintenance policy. For each maintenance policy, we vary the update ratio of the transaction stream and measure the elapsed time, the number of refresh operations, the update response time, and the query response time.

Analysis: Graph (a) in Figure 5 shows the elapsed times to complete 1,000 transactions under different maintenance policies. The line for Snapshot(no refresh) gives the time required to execute the transactions without any maintenance overhead; its elapsed time increases with the update ratio simply because updates are more expensive than queries. A major observation is that the cost of the immediate maintenance policy increases linearly as the update ratio increases, while the deferred and combined maintenance policies show smaller elapsed times at higher update ratios. Note that the sudden drop in the total elapsed time at update ratio 1.0 for the deferred policy is due to the absence of queries (so that maintenance never occurs). For snapshot policies, the larger the refresh interval, the faster the snapshot maintenance policy runs, since (1) some of the overheads are constant, and (2) log trimming is more effective with a larger number of log records.

³ We chose 75, 150, and 300 because they do not evenly divide 1,000. Otherwise, these policies would have to do maintenance after the 1,000th transaction. This would penalize snapshot relative to deferred, since snapshot would maintain all views, while deferred would be able to leave some views unmaintained.

[Figure 5, four panels plotted against Update Ratio (0 to 1). (a) Total elapsed times: Total Elapsed Time (sec.) for Immediate, Deferred, Combined, Snapshot(no refresh), and Snapshot(every 75/150/300 tran). (b) Total number of refresh operations: Total Number of Maintenance Operations for Immediate, Deferred, Combined, and Snapshot(every 75/150/300 tran). (c) Update response times: Update Response with Maintenance Overhead (sec.). (d) Query response times: Query Response with Maintenance Overhead (sec.). Panels (c) and (d) show Immediate, Deferred, Combined, and Snapshot(no refresh).]
Figure 5: Comparison of different maintenance policies

Graph (b) in Figure 5 shows that the number of maintenance operations goes up linearly with the number of updates under immediate maintenance. The number of maintenance operations is roughly semi-elliptic for the deferred policy: past a certain point, as the number of read transactions goes down, so does the number of refreshes (reaching zero when there are no read operations, since then the view is never maintained). As expected, the combined behavior is between that of immediate and deferred.

Graphs (c) and (d) show the update and query response times, respectively, including the time to maintain the views when required. Graph (c) clearly shows that using the immediate policy significantly increases the cost of update transactions. On the other hand, Graph (d) shows that the average query response time for the snapshot and immediate policies (neither of which requires queries to do maintenance work) is significantly less than that for the deferred policy (which must do maintenance work as part of the query).
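The refresh counts in Graph (b) can be illustrated with a deliberately simplified model. This is a sketch under stated assumptions, not the workload or code used in the experiments: one view over one base table, a transaction stream written as a string of 'u' (update) and 'q' (query) characters, and a hypothetical function count_refreshes. Under immediate maintenance every update triggers a refresh; under deferred maintenance a query triggers a refresh only if an update has arrived since the last refresh; and, as an assumption, a snapshot view is refreshed after every k-th transaction only when changes are pending.

```python
import random

POLICIES = ("immediate", "deferred", "snapshot")


def count_refreshes(stream: str, policy: str, k: int = 75) -> int:
    """Count refreshes of a single view under one maintenance policy.

    stream: 'u' = update of the base table, 'q' = query on the view.
    k: refresh interval (in transactions) for the snapshot policy.
    """
    if policy not in POLICIES:
        raise ValueError(f"unknown policy: {policy}")
    refreshes = 0
    stale = False  # True while logged base changes have not reached the view
    for i, txn in enumerate(stream, start=1):
        if txn == "u":
            if policy == "immediate":
                refreshes += 1  # the update itself maintains the view
            else:
                stale = True  # deferred/snapshot: the change is only logged
        elif txn == "q" and policy == "deferred" and stale:
            refreshes += 1  # the query pays for maintenance before reading
            stale = False
        if policy == "snapshot" and stale and i % k == 0:
            refreshes += 1  # periodic refresh after every k-th transaction
            stale = False
    return refreshes


# Sweep a few update ratios over 1,000 random transactions (seeded for repeatability).
rng = random.Random(7)
for p in (0.1, 0.5, 0.9, 1.0):
    stream = "".join("u" if rng.random() < p else "q" for _ in range(1000))
    print(p, {pol: count_refreshes(stream, pol) for pol in POLICIES})
```

In this model a deferred refresh happens exactly when a query directly follows an update, so for a random stream of N transactions with update ratio p the expected deferred count is about N·p·(1 - p): it rises, then falls back to zero at p = 1, while the immediate count grows linearly with the number of updates. The model reproduces only these qualitative shapes; it ignores multiple views, viewgroups, and refresh costs.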

The combined strategy reduces the query response time by shifting the maintenance work of the first-level views to the update transactions: the average query response time goes down by about half.

5.4 Testing Scalability of Log Operations

We wished to verify that the logging overhead, which must be paid by update transactions, is independent of the number of deferred/snapshot views, so that the system can scale with increasing numbers of deferred/snapshot views. An experiment that varied the number of views from 0 to 7 and measured the update response time for different maintenance policies confirmed that the update response time was insensitive to the number of deferred and snapshot views. However, the update response time was found to increase linearly with the number of immediate views.

5.5 A Second Database Schema

The experiments above were repeated with a linear-tree-shaped database schema (Figure 6). The linear tree schema is somewhat simpler, with the same base tables as in the earlier schema, but with the views defined in a linear tree form. The results were found to be similar to those reported above for the schema of Figure 3.
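The scalability result of Section 5.4 is what the log design of Section 4 would lead one to expect: an update appends a single entry to its table's one log regardless of how many deferred or snapshot views read it, and each view keeps only a pointer (its edge tuple for the pair (B, V)) to the log entries it has already consumed. The sketch below illustrates that arrangement under assumptions of its own: the class TableLog and its methods are hypothetical, a newly registered view starts at the current end of the log, and entries are discarded once every registered view has consumed them (an assumed rule, analogous to the one stated for inactive tuples).

```python
from typing import Any, Dict, List


class TableLog:
    """A single change log for one base table, shared by all deferred/snapshot views on it."""

    def __init__(self) -> None:
        self.entries: List[Any] = []
        self.start = 0  # absolute log position of entries[0]
        self.cursor: Dict[str, int] = {}  # view -> next unread position (its edge pointer)

    def register_view(self, view: str) -> None:
        # A newly materialized view needs no earlier changes, so it starts at the tail.
        self.cursor[view] = self.start + len(self.entries)

    def append(self, entry: Any) -> None:
        # Constant work per update, however many views read this log.
        self.entries.append(entry)

    def pending(self, view: str) -> List[Any]:
        return self.entries[self.cursor[view] - self.start:]

    def consume(self, view: str) -> List[Any]:
        """Return the changes `view` has not seen yet and advance its pointer."""
        changes = self.pending(view)
        self.cursor[view] = self.start + len(self.entries)
        self._truncate()
        return changes

    def _truncate(self) -> None:
        # Drop the prefix that every registered view has already consumed.
        oldest = min(self.cursor.values(), default=self.start)
        if oldest > self.start:
            del self.entries[: oldest - self.start]
            self.start = oldest


log = TableLog()
log.register_view("deferred_view")
log.register_view("snapshot_view")
for i in range(3):
    log.append(("insert", i))
print(log.consume("deferred_view"))  # all three inserts; nothing can be dropped yet
log.append(("remove", 1))
print(len(log.consume("snapshot_view")), log.entries)
```

Adding views here grows only the cursor dictionary, not the per-update work, whereas each additional immediate view adds refresh work to every update on its base tables.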

Figure 6: Linear tree schema

6 Related Work

ADMS [RK86, Rou91] was the first system to realize the importance of supporting multiple maintenance policies. However, ADMS did not propose any model of consistency in the presence of multiple policies. Its designers concentrated on the advantages of materializing a view using view-caches, which are join indices, rather than the view tuples themselves. An analytical and experimental study comparing the benefits of deferred incremental maintenance over recomputation using different join methods was presented. However, they did not compare the performance of the different maintenance policies. Consistency of views in distributed warehousing environments was studied in [ZGHW95] and [HZ96]. Our focus, on the other hand, is on the consistency aspects of views with different policies in the same system.

Another analytical performance study [BM90] compared the use of join indices, fully materialized views, and recomputation for querying join views, and found that each of these methods can perform best, depending upon the update rate and join selectivity.

[Han87] presents an analytical performance comparison of answering queries over a single view by (1) recomputation, (2) an immediately maintained materialized view, and (3) a deferred materialized view. The study is done for a single SP view (recomputation is the best), a single SPJ (select-project-join) view (immediate maintenance is the best), and a single aggregate view (deferred maintenance is the best). In contrast, our performance study uses an actual implementation and compares the maintenance policies for sets of materialized views. Also, deferred view maintenance is treated somewhat differently in [Han87], where it is assumed that the changes to the base tables are also deferred until the view is queried.
An analytical study of optimal refresh policies, based on queuing models and parameterization of response time and cost constraints, is described in [SR88].

As one can see, many issues in the performance analysis of materialized views have yet to be explored. There have been isolated attempts to study certain narrow aspects, and there is a need for a comprehensive performance study using an actual implementation. Our study is a start in this direction, and is the first one to compare the impact of different maintenance policies for different views.

Snapshots were first proposed in [AL80]. Implementation techniques for snapshot views are described in [LHM+86, KR87, SP89]. These papers consider only SP (select-project) views. [LHM+86] focuses on detecting relevant changes to a snapshot based on update tags on base tables. [KR87] and [SP89] present techniques for maintaining logs and computing the net update to a view when multiple views share common parents. The log structures in our implementation are based on the ideas in [SP89]. Replication servers from Oracle and IBM essentially implement snapshot materialized views, where the snapshot view is materialized in a remote system.

Our implementation uses the counting incremental maintenance algorithm of [GMS93] for immediate, deferred, and snapshot maintenance. Several other incremental algorithms have been proposed [BLT86, CW91, QW91, GL95, CGL+96]. In [CGL+96], equations that compute incremental changes to a view using only the post-update state of tables are derived. The paper also proposes a high-level scheme for minimizing the time taken to refresh a view.

Strategies for updating a view based on different priorities for transactions that apply computed updates to a view and transactions that read a view are presented in [AGK95]. Their experiments focus on the portion of the maintenance procedure that applies previously computed changes to a view.

Concurrency control problems, and a model that guarantees serializability in the presence of deferred views, are discussed in [KLM+97]. The focus of that paper is on concurrency control when multiple transactions that read and update tables execute concurrently in the system.

7 Conclusions

In this paper we investigated issues related to supporting multiple materialized views with different maintenance policies. We considered the problem of supporting immediate, deferred, and snapshot materialized views in the same system.
This problem is important because the different maintenance policies have different performance characteristics and consistency guarantees, and an application may need a mix of these characteristics for different materialized views. While researchers have looked at supporting each of these policies in isolation, simultaneous support for them has not been investigated in detail. A complete solution to the problem of supporting many views with different maintenance policies must address both correctness and performance.

We first studied the impact on the validity of query results in a system supporting different policies. We developed formal notions of consistency for views with different policies and proposed a model based on viewgroups to collect together related, mutually consistent views. The viewgroup concept is important for understanding consistency in the presence of multiple views and for supporting consistent querying over materialized views. Viewgroups also help place limits on the potential effects of a read or update transaction by isolating the resulting maintenance activities to a single viewgroup. We presented rules for assigning maintenance policies, and an algorithm to maintain views subject to the constraints.

Next, we studied the performance aspects of a system supporting different maintenance policies based on an actual implementation. The implementation used efficient data structures and algorithms to provide a viable and scalable system for supporting multiple views and policies. We conducted a detailed performance study to compare the trade-offs between the different policies. We used two different databases for the experiments, and obtained similar results on both, giving us confidence in the soundness of our conclusions. In addition to demonstrating the feasibility and scalability of a system based on our model, we learned the following:
(1) Immediate maintenance penalizes update transactions, while deferred maintenance penalizes read transactions. Combining immediate and deferred policies, wherein some views have immediate maintenance and others have deferred maintenance, has a balancing effect.
(2) Doing deferred maintenance for two levels (a deferred view defined using another deferred view) degrades performance much more dramatically than deferring maintenance for one level.
(3) The number of levels of immediate and deferred views has more impact on performance than the breadth of the schema.
(4) Immediate and deferred maintenance have similar throughput at low update ratios (that is, few updates and many queries).
(5) Snapshot maintenance performs better than immediate or deferred maintenance (not surprisingly).
(6) Rematerialization is a viable alternative to incremental maintenance once 10-20% of base tuples are changed. The cut-over point occurs sooner when base tuples are updated than when tuples are inserted into or deleted from the base tables. This observation shows that there is scope for a better maintenance algorithm for updates than the currently published algorithms, which treat updates as deletions followed by insertions.

The experiments clearly illustrate that the different maintenance policies impact the system differently, and that one may need to choose different policies for different views depending upon the data currency requirements and the performance implications. The results of our investigations on both the consistency and performance aspects of various view maintenance policies are important in choosing the appropriate maintenance policies.

Acknowledgments

We thank Robert Arlein for technical assistance in the development of the prototype system.
We also thank Damianos Chatziantoniou, Shu-Wie Chen, Matt Greenwood, Pankaj Kulkarni, and Jun Rao of Columbia University's database research group for valuable comments on an earlier version of this paper. Finally, we are grateful to the referees for their remarks.

References

[AG89] R. Agrawal and N. Gehani. Ode (object database and environment): the language and the data model. In SIGMOD 1989.
[AGK95] B. Adelberg, H. Garcia-Molina, and B. Kao. Applying update streams in a soft real-time database system. In SIGMOD 1995.
[AKG96] B. Adelberg, B. Kao, and H. Garcia-Molina. Database support for efficiently maintaining derived data. In EDBT 1996.
[AL80] M. Adiba and B. Lindsay. Database snapshots. In VLDB 1980.
[BC79] P. Buneman and E. Clemons. Efficiently monitoring relational databases. ACM TODS, 4(3):368-382, September 1979.
[BLT86] J. Blakeley, P. Larson, and F. Tompa. Efficiently Updating Materialized Views. In SIGMOD 1986.
[BM90] J. Blakeley and N. Martin. Join index, materialized view, and hybrid hash join: A performance analysis. In Proc. Data Engineering, 1990.
[CG96] L. Colby and T. Griffin. An algebraic approach to supporting multiple deferred views. In Proc. Int'l Workshop on Materialized Views: Techniques and Applications, Montreal, Canada, June 7 1996.

[CGL+96] L. Colby, T. Griffin, L. Libkin, I. Mumick, and H. Trickey. Algorithms for deferred view maintenance. In SIGMOD 1996.
[CKL+96] L. Colby, A. Kawaguchi, D. Lieuwen, I. Mumick, and K. Ross. Supporting Multiple View Maintenance Policies: Concepts, Algorithms, and Performance Analysis. AT&T Technical Memo.
[CM96] L. Colby and I. Mumick. Staggered maintenance of multiple views. In Proc. Int'l Workshop on Materialized Views: Techniques and Applications, Montreal, Canada, June 7 1996.
[CW91] S. Ceri and J. Widom. Deriving production rules for incremental view maintenance. In VLDB 1991.
[GL95] T. Griffin and L. Libkin. Incremental maintenance of views with duplicates. In SIGMOD 1995.
[GM95] A. Gupta and I. Mumick. Maintenance of Materialized Views: Problems, Techniques, and Applications. IEEE Data Engineering Bulletin, Special Issue on Materialized Views and Data Warehousing, 18(2):3-19, June 1995.
[GMS93] A. Gupta, I. Mumick, and V. Subrahmanian. Maintaining views incrementally. In SIGMOD 1993.
[Han87] E. Hanson. A performance analysis of view materialization strategies. In SIGMOD 1987.
[HZ96] R. Hull and G. Zhou. A framework for supporting data integration using the materialized and virtual approaches. In SIGMOD 1996.
[KLM+96] A. Kawaguchi, D. Lieuwen, I. Mumick, and K. Ross. View maintenance in nested data models. In Proc. Int'l Workshop on Materialized Views: Techniques and Applications, Montreal, Canada, June 7 1996.
[KLM+97] A. Kawaguchi, D. Lieuwen, I. Mumick, D. Quass, and K. Ross. Concurrency control theory for deferred materialized views. In ICDT 1997.
[KR87] B. Kähler and O. Risnes. Extended logging for database snapshots. In VLDB 1987.
[LHM+86] B. Lindsay, L. Haas, C. Mohan, H. Pirahesh, and P. Wilms. A snapshot differential refresh algorithm. In SIGMOD 1986.
[LMSS95] J. Lu, G. Moerkotte, J. Schu, and V. Subrahmanian. Efficient maintenance of materialized mediated views. In SIGMOD 1995.
[MRS93] I. Mumick, K. Ross, and S. Sudarshan. Design and implementation of the SWORD declarative object-oriented database system, 1993. Unpublished Manuscript.
[Mum95] I. Mumick. The Rejuvenation of Materialized Views. In Proc. Int'l Conf. on Information Systems and Management of Data (CISMOD), Bombay, India, November 15-17 1995.
[QW91] X. Qian and G. Wiederhold. Incremental recomputation of active relational expressions. IEEE TKDE, pages 337-341, 1991.
[RK86] N. Roussopoulos and H. Kang. Principles and techniques in the design of ADMS+. IEEE Computer, pages 19-25, December 1986.
[Rou91] N. Roussopoulos. The incremental access method of view cache: Concept, algorithms, and cost analysis. ACM TODS, 16(3):535-563, September 1991.
[SI84] O. Shmueli and A. Itai. Maintenance of Views. In SIGMOD 1984.
[SP89] A. Segev and J. Park. Updating distributed materialized views. IEEE TKDE, 1(2):173-184, June 1989.
[SR88] J. Srivastava and D. Rotem. Analytical modeling of materialized view maintenance. In PODS 1988.
[ZGHW95] Y. Zhuge, H. Garcia-Molina, J. Hammer, and J. Widom. View maintenance in a warehousing environment. In SIGMOD 1995.