NCP Computing Infrastructure & T2-PK-NCP Site Update

Saqib Haleem

National Centre for Physics (NCP), Pakistan

Outline

• NCP Overview

• Computing Infrastructure at NCP

• WLCG T2 Site status

• Network status and Issues

• Conclusion and Future work

About NCP

• The National Centre for Physics (NCP), Pakistan, was established to promote research in physics and applied disciplines in the country.

• Collaborates with many international organizations, including CERN, SESAME, ICTP, TWAS…

• Major research programs:

• Experimental High Energy Physics, Theoretical and Plasma Physics, Nano Sciences and Catalysis, Laser Physics, Vacuum Science and Technology, Earthquake Studies.

• Organizes and hosts international and national workshops and conferences every year.

• International Scientific School (ISS), NCP School on LHC Physics…

Computing Infrastructure Overview

Installed Resources in Data Centre

| Resource | Quantity |
|---|---|
| No. of Physical Servers | 89 |
| Processor Sockets | 140 |
| CPU Cores | 876 |
| Memory Capacity | 1.7 TB |
| No. of Disks | ~600 |
| Storage Capacity | ~500 TB |
| Network Equipment (Switches, Routers, Firewalls) | ~50 |

• The computing infrastructure of NCP is mainly used for two projects:

• The major portion of compute and storage resources is used by the WLCG T2 site project and a local batch system serving the experimental high energy physics community.

• The rest is allocated to the High Performance Computing (HPC) cluster.

WLCG Site at NCP status

• The NCP-LCG2 site is hosted at the National Centre for Physics (NCP) for the WLCG CMS experiment.

• The site was established in 2005 with very limited resources, which were upgraded in 2009.

• It is the only CMS Tier-2 site in Pakistan (T2_PK_NCP).

WLCG Resource Summary

| Resource | Value |
|---|---|
| Physical Hosts | 63 |
| CPU Sockets | 126 |
| No. of CPU Cores | 524 |
| HEPSPEC06 | 6365 |
| Disk Storage Capacity | 330 TB |
| Network Connectivity | 1 Gbps (shared) |
| Compute Elements (CE) | 03: 02 (CREAM-CE), 01 (HTCondor-CE) |
| DPM Storage Disk Nodes | 13 |

WLCG resource Pledges

| NCP-LCG2 | Installed 2018 | Pledged 2018 | Pledged 2019 |
|---|---|---|---|
| CPU (HEP-SPEC06) | 6365 | 6365 | 6365 |
| Disk (TB) | 330 | 330 | 500* |

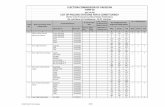

WLCG Hardware Status

Computing Servers

| Hardware | Sockets/Cores | Qty. | Total Cores |
|---|---|---|---|
| Sun Fire X4150 (Intel(R) Xeon(R) CPU X5460 @ 3.16GHz) | 02/04 | 28 | 224 |
| Dell PowerEdge R610 (Intel(R) Xeon(R) CPU X5670 @ 2.93GHz) | 02/06 | 25 | 300 |
| Total | | | 524 |

Storage Servers

| Storage Server | Quantity |
|---|---|
| Transtec Lynx 4300 (23TB x1TB) | 15 |
| Dell PowerEdge T620 (24 x 2TB) | 2 |

Usable capacity (all storage servers): 330 TB

• Existing hardware is very old, and its replacement is in progress.

Recent purchases:

• Compute servers (116 CPU cores in total):

• 3 x Dell PowerEdge R740 (2 x Intel® Xeon® Silver 4116 Processor)

• 1 x Lenovo System x3650 (2 x Intel® Xeon® E5-2699 v4 Processor)

• Storage (150 TB raw in total):

• Dell PowerEdge R740xd, CPU 2 x Intel® Xeon® Silver 4116 Processor = 16 cores (50 TB)

• Dell PowerEdge R430, CPU 2 x 2609v4 = 16 cores (100 TB, PV-MD1200)

• More procurement is in process…

NCP-LCG2 Site Availability & Reliability

http://argo.egi.eu/lavoisier/site_ar?site=NCP-LCG2,%20&cr=1&start_date=2018-01-01&end_date=2018-10-31&granularity=MONTHLY&report=Critical

HPC Cluster

• The HPC cluster is based on the Rocks Linux distribution and is available 24x7.

• 100+ CPU cores are available.

Research Areas

• Material Science, Condensed Matter Physics

• Climate Modeling, Earthquake Studies

• Plasma Physics…

Applications Installed

• WIEN2k, Suspect, GEANT2, R/Rmpi

• Quantum Espresso, Scotch, MCR…

Figure: Cluster usage trend, last 3 years

Figure: Cluster usage, last 2 years

Computing Model

• NCP compute server hardware has been partially moved to an OpenStack-based private cloud for efficient utilization of idle resources.

• A Ceph-based block storage model has also been adopted for flexibility.

• Two computing projects are currently running successfully on the cloud:

• WLCG T2 site (compute nodes)

• Local HPC cluster (compute nodes)

Network Status

• Currently there is a 1 Gbps R&E link between the Pakistan Education and Research Network (PERN) and TEIN.

• Pakistan connects to TEIN via the Singapore (SG) PoP.

• The R&E link is shared among universities and projects in Pakistan (i.e. it is not dedicated to LHC traffic).

T2_PK_NCP Network Status

• NCP has 1 Gbps of network connectivity with PERN, with the following traffic policies:

• Commercial/commodity Internet: 50 Mbps

• Bandwidth for international R&E destinations is unrestricted.

• Fully operational on IPv4 and IPv6.

• IPv6-enabled NCP services: Email, DNS, WWW, FTP, WLCG Compute Elements (CEs), Storage Element (SE) and pool nodes, perfSONAR node.

Figure: NCP network connectivity diagram. Elements shown: NCP WLCG site (2400:FC00:8540::/44, 111.68.99.128/27, 111.68.96.160/27); Huawei NE40 router; PERN (AS45773); 1 Gbps links; commercial Internet at 50 Mbps; R&E link without restriction to R&E networks (TEIN, GEANT, Internet2).

Network Traffic Statistics ( 1 Year)

• ~50% of NCP traffic is on IPv6 (mostly WLCG traffic).

• The IPv6 traffic trend is increasing.

T2_PK_NCP Network Status

• Low network throughput from T1 sites has been observed for the last year, which resulted in the decommissioning of T2_PK_NCP links.

• To become a commissioned site for production data transfers, a site must pass a commissioning test. [1]

• The average T1 to T2 data transfer rate must be > 20 MB/s.

• The average T2 to T1 data transfer rate must be > 5 MB/s.

• However, NCP is getting an average transfer rate of only ~5 MB/s to 10 MB/s from almost all T1 sites in multiple tests.
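The gap between the commissioning thresholds and the observed rates can be checked with a few lines of Python. This is a minimal sketch: the function name and the direction labels are made up for illustration, and only the 20 MB/s and 5 MB/s thresholds and the 5 to 10 MB/s observed range come from the slides.

```python
# Commissioning thresholds quoted on the slide, in MB/s.
# A link passes only if its average rate is strictly greater.
T1_TO_T2_MIN_MB_S = 20.0
T2_TO_T1_MIN_MB_S = 5.0


def passes_commissioning(rate_mb_s: float, direction: str) -> bool:
    """Return True if an average transfer rate clears the quoted threshold.

    direction is "T1->T2" or "T2->T1" (labels chosen for this sketch only).
    """
    thresholds = {"T1->T2": T1_TO_T2_MIN_MB_S, "T2->T1": T2_TO_T1_MIN_MB_S}
    if direction not in thresholds:
        raise ValueError(f"unknown direction: {direction!r}")
    return rate_mb_s > thresholds[direction]


# Both ends of the 5-10 MB/s range reported for T1 -> NCP transfers.
for observed in (5.0, 10.0):
    print(observed, "MB/s passes T1->T2:", passes_commissioning(observed, "T1->T2"))
```

Even the upper end of the observed range is only half of the T1 to T2 requirement, which is consistent with the links being decommissioned.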

Figure: Accumulated transfer rate of all T1 sites

Figure: Accumulated transfer rate of T1_IT_CNAF site

[1] https://twiki.cern.ch/twiki/bin/view/CMSPublic/CompOpsTransferTeamLinkCommissioning

T2_PK_NCP Network Status

Only the T1_UK_RAL -> NCP link was commissioned, in Nov 2017; it was decommissioned in April 2018 due to low data rate.

T2_PK_NCP : Site Readiness Report

Ref: https://cms-site-readiness.web.cern.ch/cms-site-readiness/SiteReadiness/HTML/SiteReadinessReport.html

The site status recently changed from Waiting Room (WR) to Morgue due to network issues.

Network issues

• Multiple simultaneous network-related issues were identified, which increased the complexity of the problem:

• T1_US_FNAL and T1_RU_JINR were not initially following the R&E (TEIN) path. (Resolved: April 2018)

• Low-buffer-related errors were observed on the NCP gateway device, which was replaced with new hardware. (Resolved: March 2018)

• PERN also identified an issue in their uplink route. (Resolved: March 2018)

PERN Network Complexity

Figure: PERN network map (labels: NCP, TEIN Link)

• NCP is located in the northern region, while the TEIN link terminates in the southern region at the HEC (KHI) PoP.

• Multiple physical routes and devices exist on the path from NCP to TEIN.

• Initially the PERN network suffered from frequent congestion due to fiber ring outages. (The situation is now stable, owing to bifurcation of fiber routes.)

Ref: http://pern.edu.pk/wp-content/uploads/2018/10/rsz_pern_v2_updated.jpg

Network issues

• Steps taken so far to identify the cause of low throughput from WLCG sites:

• Involved the WLCG Network Throughput Working Group and the Global NOC (IU).

• Tuned system parameters on the storage servers (/etc/sysctl.conf).

• Placed a storage node at the NCP edge network.

• Deployed perfSONAR nodes at NCP and in the PERN network.

• Installed GridFTP clients at the PERN edge to isolate the issue.
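The sysctl tuning step for the storage servers is usually about sizing TCP buffers to the bandwidth-delay product (BDP) of the path. The slides do not say which parameters were changed; on Linux the usual candidates are `net.core.rmem_max`, `net.core.wmem_max`, `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem`. The sketch below only computes the BDP. The 1 Gbps figure is from the slides, while the round-trip times are hypothetical values chosen for illustration.

```python
def bdp_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> int:
    """Bandwidth-delay product: bytes that must be in flight to fill the path."""
    # bits/s * ms / 1000 gives bits in flight; divide by 8 for bytes.
    return bandwidth_bits_per_s * rtt_ms // 8000


LINK_BITS_PER_S = 1_000_000_000  # 1 Gbps R&E link (from the slides)

# Hypothetical round-trip times, not measured values.
for rtt_ms in (100, 200, 300):
    size = bdp_bytes(LINK_BITS_PER_S, rtt_ms)
    print(f"RTT {rtt_ms} ms: about {size / 1e6:.1f} MB of TCP buffer per flow")
```

A socket buffer limit well below the BDP caps a single flow at a fraction of the link rate, however clean the path is.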

Network Map for Troubleshooting

Figure: perfSONAR nodes and latency between paths

• The network team from the Global NOC and ESnet prepared a map based on the perfSONAR nodes in the path.

• The initial investigation covers the low-throughput issue from T1_IT_CNAF (Italy) and T1_UK_RAL to NCP.

Case: https://docs.google.com/document/d/1HHhK9t4PpYPzZOfJUAhupRAodhPT6HNIZi6J8ljpBtw/edit#

Findings & conclusion

• The R&E network path has higher latency than the commercial network path, by 100+ ms.

• The commercial route gives a better WLCG transfer rate, but has some issues:

• Bandwidth cannot be guaranteed at all times, either by PERN or by the far-end T1 site.

• NCP is currently the major user of the 1 Gbps R&E link in Pakistan, but other organizations account for ~10-30% of its utilization, which reduces the bandwidth available for LHC traffic.

• Minor packet loss on a high-latency route can severely impact throughput.

• Coordination among the intermediate network operators needs to increase in order to find the source of the packet loss, possibly a low-buffered intermediate device or a source of intermittent congestion.
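The finding that minor packet loss hurts badly on a high-latency route can be illustrated with the well-known Mathis et al. approximation for a single loss-limited TCP stream: rate ≤ (MSS / RTT) × (C / √p), with C ≈ √(3/2). The sketch below is a rough model, not a measurement of the NCP path. The MSS, RTT and loss values are hypothetical, and real transfers (for example GridFTP with parallel streams, or newer congestion control algorithms) will behave differently.

```python
import math


def mathis_throughput_mb_s(mss_bytes: float, rtt_s: float, loss_rate: float) -> float:
    """Upper bound on single-stream TCP throughput in MB/s (Mathis approximation)."""
    if rtt_s <= 0 or loss_rate <= 0:
        raise ValueError("rtt_s and loss_rate must be positive")
    c = math.sqrt(3 / 2)  # constant for the basic model without delayed ACKs
    bytes_per_s = (mss_bytes / rtt_s) * c / math.sqrt(loss_rate)
    return bytes_per_s / 1e6


MSS = 1460  # bytes, typical for a 1500-byte MTU (assumption)

# Hypothetical RTTs and loss rates, chosen only to show the trend.
for rtt_s in (0.1, 0.2, 0.3):
    for loss in (1e-5, 1e-4):
        rate = mathis_throughput_mb_s(MSS, rtt_s, loss)
        print(f"RTT {rtt_s * 1000:.0f} ms, loss {loss:.0e}: <= {rate:.2f} MB/s per stream")
```

Because the bound scales with 1/RTT and 1/√p, an extra 100+ ms of latency combined with even a small loss rate pushes per-stream throughput well below what the link capacity alone would suggest.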

Upcoming Work

• Planning to pledge at least three times more compute and storage to meet the needs of the WLCG experiment during HL-LHC.

• Compute pledges from 6K (HEPSPEC) -> 18K (HEPSPEC)

• Storage pledges from 500 TB -> 2 PB.

• International R&E bandwidth increase from 1 Gbps to 2.5 Gbps.

• Upgrade of storage and ToR switches, 1 Gbps -> 10 Gbps

• Upgrade of PERN2 -> PERN3

• Edge network upgrade to 10 Gbps

• Core network upgrade to support 40 Gbps

• NCP site connectivity with LHCONE.

Figure: HL-LHC expected storage requirement

Figure: HL-LHC expected computing requirement