
Computer Methods and Programs in Biomedicine 82 (2006) 106–113

journal homepage: www.intl.elsevierhealth.com/journals/cmpb

Gaussian estimation and joint modeling of dispersions and correlations in longitudinal data

Mohammad Al-Rawwash a,∗, Mohsen Pourahmadi b

a Department of Statistics, Yarmouk University, Irbid, Jordan
b Division of Statistics, Northern Illinois University, DeKalb, IL 60115, USA

Article info

Article history:
Received 7 April 2005
Received in revised form 23 February 2006
Accepted 28 February 2006

Keywords:
Extended generalized estimating equations
Link functions
Model formulation
Quasi-least squares
Quasilikelihood functions
Unconstrained parameterization
Working correlation matrix

Abstract

Analysis of longitudinal, spatial and epidemiological data often requires modelling dispersions and dependence among the measurements. Moreover, data involving counts or proportions usually exhibit greater variation than would be predicted by the Poisson and binomial models. We propose a strategy for the joint modelling of the mean, dispersion and correlation matrix of nonnormal multivariate correlated data. The parameter estimation for dispersions and correlations is based on Whittle's [P. Whittle, Gaussian estimation in stationary time series, Bull. Int. Statist. Inst. 39 (1962) 105–129] Gaussian likelihood of the partially standardized data, which eliminates the mean parameters. The model formulation for the dispersions and correlations relies on a recent unconstrained parameterization of covariance matrices and a graphical method [M. Pourahmadi, Joint mean–covariance models with applications to longitudinal data: unconstrained parameterization, Biometrika 86 (1999) 677–690] similar to the correlogram in time series analysis. We show that the estimating equations for the regression and dependence parameters derived from a modified Gaussian likelihood (involving two distinct covariance matrices) are broad enough to include generalized estimating equations and their many recent extensions and improvements. The results are illustrated using two datasets.

© 2006 Elsevier Ireland Ltd. All rights reserved.

1. Introduction

Analysis of longitudinal, spatial and epidemiological data is complicated partly by the correlation among the measurements [1]. Moreover, data involving counts and proportions usually exhibit greater variation than would be predicted by the Poisson and binomial models. As an example of count data, we consider a clinical trial of 59 epileptics [2] in which, for each patient, the number of epileptic seizures was recorded during a baseline period of 8 weeks. Patients were then randomized to treatment with the anti-epileptic drug progabide ($m_1 = 31$) or to placebo ($m_2 = 28$), in addition to the standard chemotherapy. The number of seizures was then recorded in four consecutive 2-week intervals. The goal was to decide whether progabide reduces the rate of epileptic seizures. Diggle et al. [1] provide an analysis of this dataset and note that the Poisson assumption, in particular that the mean and variance are equal, does not hold. In fact, they show that the ratio of sample variances to the means of the counts for treatment-by-visit combinations ranges from 7 to 39, displaying a high degree of extra-Poisson variation. Of course, proper accounting and modelling of such time-varying overdispersion are crucial for making valid inferences from the data. Our first goal is to present a data-based methodology for joint modelling of dispersions and correlations.

∗ Corresponding author. Tel.: +962 227 11111. E-mail addresses: [email protected] (M. Al-Rawwash), [email protected] (M. Pourahmadi).

0169-2607/$ – see front matter © 2006 Elsevier Ireland Ltd. All rights reserved. doi:10.1016/j.cmpb.2006.02.010


2. Background

In the literature on longitudinal data there are two broad approaches to estimating correlation and dispersion parameters: generalized estimating equations (GEE) and mixed models. The technique of generalized estimating equations [3,4] is widely used in the health, environmental and social sciences. Its motivation and theoretical underpinnings lie within the theory of estimating functions and maximum quasilikelihood (QL) estimation [5–8]. In recent years, however, some pitfalls of GEE have been pointed out, and it has been suggested that the parameters be estimated by optimizing an objective function [7,9,10]. In stark contrast to generalized estimating equations, longitudinal data analysis based on mixed models [11] usually uses the Gaussian likelihood function as its objective function, together with an often tentative normality assumption, to estimate the parameters. Our second goal is to show that these two powerful and seemingly distinct approaches can be unified by using the Gaussian likelihood as the objective function for estimating parameters of nonnormal distributions. To this end, following [12], by Gaussian estimates (GE) we mean the parameter values maximizing the Gaussian likelihood, that is, the parameters are estimated as if the observations were normally distributed.
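A minimal sketch of what "estimating as if the observations were normal" means in practice is given below. It is not the authors' code. It assumes counts with known means $\mu_j$ and variances $\phi\mu_j$, i.e. a Poisson-type variance with one overdispersion parameter $\phi$. Minimizing the Gaussian criterion $\sum_j \{\log(\phi\mu_j) + (y_j-\mu_j)^2/(\phi\mu_j)\}$ over $\phi$ then gives the closed form $\hat\phi = n^{-1}\sum_j (y_j-\mu_j)^2/\mu_j$. The function names and the simulated negative binomial data are hypothetical choices for the illustration.

```python
import numpy as np

def gaussian_objective(phi, y, mu):
    """-2 x Gaussian log-likelihood (constants dropped) when y_j has mean mu_j and variance phi * mu_j."""
    var = phi * mu
    return np.sum(np.log(var) + (y - mu) ** 2 / var)

def gaussian_estimate_phi(y, mu):
    """Closed-form minimizer of gaussian_objective over phi, with mu treated as known."""
    return np.mean((y - mu) ** 2 / mu)

# Hypothetical overdispersed counts: negative binomial with mean mu and
# variance mu + mu**2 / r, so the true variance-to-mean ratio is 1 + mu / r = 6.
rng = np.random.default_rng(0)
mu = np.full(5000, 10.0)
r = 2.0
y = rng.negative_binomial(r, r / (r + mu)).astype(float)

phi_hat = gaussian_estimate_phi(y, mu)
print(phi_hat)  # expected to be close to 6

# The closed form is a minimum: moving phi away from phi_hat increases the objective.
for delta in (-0.5, 0.5):
    assert gaussian_objective(phi_hat + delta, y, mu) > gaussian_objective(phi_hat, y, mu)
```

The counts are clearly not normal. The Gaussian likelihood serves only as an estimating criterion that depends on the first two moments.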

In recent years, the idea of GE has been gaining momentum. For binary data, Crowder [13] and Paul and Islam [14] have compared the performance of GE with nine moment-type methods. These include the extended beta-binomial likelihood, a combination of the QL equation for the mean with the moment estimating equation for the intraclass correlation, extended QL, and quadratic estimating equations. They conclude that the QL procedure performs best for estimating the mean (regression) parameters, but that the GE procedure is better for estimating the regression and correlation parameters simultaneously. One expects similar or better performance of GE for count (Poisson) and continuous nonnormal data. The idea of GE for (co)variance parameters also appears to have been developed independently by Davidian and Carroll [15], where it is known as pseudolikelihood (PL) estimation. Davidian and Giltinan [16] show that the estimating equations obtained by differentiating the PL criterion subsume the GEE for the mean and covariance parameters suggested by Prentice and Zhao [17]. Later, other authors arrived at the same conclusion using slightly different objective functions [10,18,19]. Lipsitz et al. [20] also use GE in conjunction with the GEE to estimate correlation parameters of incomplete data. For a given parametric covariance structure, the algorithms for finding GE and for maximizing the normal likelihood of mixed models are essentially the same. A key advantage of our unification is therefore that computing GE requires no new software, and one can rely on standard software packages.

Both GEE and mixed model approaches allow users to specify a form for the covariance matrix of the within-subject measurements. To facilitate this, the GENMOD and MIXED procedures [21], for example, include about twenty options for the covariance matrix. Among them are compound symmetry (CS), first-order autoregressive (AR(1)) and a few other stationary and heterogeneous models. Some families of covariance models accounting for overdispersion of counts and multinomial responses have also been proposed [2,22]. However, for a given dataset it is not clear, even to an experienced user, how to specify the within-subject covariance structure. At present the methodology for selecting suitable covariance structures is mostly ad hoc and far from data-driven [1]. We develop a data-based methodology for modelling the dispersions and correlation matrix of the partially standardized data, analogous to generalized linear models (GLM). To this end, we decompose a covariance matrix into three components capturing its "variance", "overdispersion" and "dependence". Then, using analogues of regression graphics and diagnostics, we specify parsimonious models for the first two components. For the last component, we model the dependence using the regressogram [23], built from the coefficients obtained when each measurement is regressed on its predecessors. For estimation, we rely on the idea of Gaussian estimation discussed earlier. The key advantages of our approach are:

(1) reliance on a criterion for estimation of parameters;
(2) reliance on the data to formulate models for the underlying covariance matrix;
(3) guaranteed positive-definiteness of the estimated covariance matrix;
(4) no need for third- and fourth-moment assumptions about the responses;
(5) for independent data, reduction to the more familiar strategy for the joint modelling of mean and dispersions [6,24].

The outline of the paper is as follows. Section 3 reviews and unifies some variants of the GEE using the framework of Gaussian estimation. Section 4 provides details of a data-based model formulation using an unconstrained parameterization of covariances. The methodology is illustrated in Section 5 using continuous and count datasets and compared with the GEE for some covariance structures formulated using the data. Finally, Section 6 discusses the need for, and merits of, data-based modelling of covariance matrices in longitudinal data analysis.

3. Gaussian and quasilikelihood functions

We review some variants of the GEE and quasilikelihood (QL) functions and show how they can be unified using the Gaussian likelihood as a universal objective function. The GEE, QL and hence GE are useful in situations where there is insufficient information to construct a full likelihood function for the data, but there might be just enough to specify models for the first two moments. In the sequel, QL is used generically to stand for any objective function, including the full likelihood function. Some examples of QL and their connection with the GEE are discussed in [16]. Here, we discuss some new cases; see [10,18,19,25].

To set the notation, consider a random vector $y = (y_1, y_2, \dots, y_n)'$ comprising the measurements taken on a generic subject at times $t_1 < t_2 < \cdots < t_n$ and the associated covariates $x_j = (x_{j1}, \dots, x_{jp})'$, $1 \le j \le n$. In general, no distributional assumption is made about $y$ other than those about its first two moments:

(i) The means and variances of the entries of the response are related to the $p$-vector of covariates $x_j$ via $g(\mu_j) = x_j'\beta$ and $\operatorname{var}(y_j) = \phi_j v(\mu_j)$, $j = 1, 2, \dots, n$. Here $\beta = (\beta_1, \dots, \beta_p)'$ is the vector of regression parameters of primary interest, $g$ is an invertible known link function, $v(\cdot)$ is a known variance function, and $\phi_1, \dots, \phi_n$ are dispersion parameters not depending on $\beta$. Note that the variance of $y_j$ is the product of two components: $v(\mu_j)$ expresses the part of the variance that depends functionally on the mean $\mu_j$, while $\phi_j$ expresses the variability beyond the mean.

(ii) The correlations of the measurements on the same subject are modelled with a correlation matrix $R = R(\alpha)$ containing a few parameters, not depending on $\beta$. The matrix $R$ and its parameters $\alpha$ are usually viewed as nuisance, though in some situations they are of primary interest.

Let us define the diagonal matrices $\Phi = \operatorname{diag}(\phi_1, \dots, \phi_n)$ and $V = \operatorname{diag}(v(\mu_1), \dots, v(\mu_n))$, where $\mu = \mu(\beta) = (\mu_1, \dots, \mu_n)'$. Then, using (i) and (ii) above, the covariance matrix of $y$ is decomposed into three components:

$$\operatorname{cov}(y) = (\Phi V)^{1/2} R (\Phi V)^{1/2}. \tag{1}$$

Since our interest lies in modelling $R$ and $\Phi$, we eliminate $V$ (or the mean parameters $\beta$) from (1) by working with the partially "standardized" response $\tilde{y} = \tilde{y}(\beta) = V^{-1/2}(y - \mu)$, whose covariance is

$$\Sigma = \operatorname{cov}(\tilde{y}) = \Phi^{1/2} R \Phi^{1/2}. \tag{2}$$
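A small numerical check of (1) and (2) is sketched below under assumed inputs. These are a Poisson-type variance function $v(\mu) = \mu$, an AR(1) correlation matrix, and arbitrary illustrative values of $\mu_j$ and $\phi_j$, none taken from the paper. The check confirms that standardizing by $V^{-1/2}$ leaves a covariance whose diagonal is exactly the vector of dispersions $\phi_j$.

```python
import numpy as np

def ar1_corr(n, alpha):
    """AR(1) correlation matrix R(alpha) with entries alpha**|s - t| (an assumed choice of R)."""
    idx = np.arange(n)
    return alpha ** np.abs(idx[:, None] - idx[None, :])

def cov_y(mu, phi, R, v=lambda m: m):
    """Eq. (1): (Phi V)^{1/2} R (Phi V)^{1/2}; v defaults to the Poisson-type v(mu) = mu."""
    s = np.sqrt(phi * v(mu))     # Phi and V are diagonal, so (Phi V)^{1/2} acts elementwise
    return s[:, None] * R * s[None, :]

def cov_standardized(phi, R):
    """Eq. (2): Sigma = Phi^{1/2} R Phi^{1/2}, the covariance of V^{-1/2}(y - mu)."""
    s = np.sqrt(phi)
    return s[:, None] * R * s[None, :]

# Hypothetical values for one subject with n = 4 visits
mu = np.array([8.0, 6.0, 5.0, 7.0])
phi = np.array([2.0, 3.0, 3.5, 2.5])
R = ar1_corr(4, 0.6)

C = cov_y(mu, phi, R)
V_inv_half = np.diag(1.0 / np.sqrt(mu))
Sigma = V_inv_half @ C @ V_inv_half      # covariance of the partially standardized response

assert np.allclose(Sigma, cov_standardized(phi, R))
assert np.allclose(np.diag(Sigma), phi)  # the dispersions sit on the diagonal of Sigma
```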

This allows us to view the dispersions $\phi_j$ as the bona fide diagonal elements of the new covariance matrix $\Sigma$, a fact used effectively in Section 4. In a longitudinal study, let $y_i$ and $X_i$, $i = 1, \dots, m$, be the observed vector of $n_i$ repeated measurements and the $n_i \times p$ matrix of covariates on the $i$th subject. Let $Y = (y_1', \dots, y_m')'$ stand for the vector of $N = \sum_{i=1}^{m} n_i$ measurements from all the subjects in the study, and let $\Omega$ be a block-diagonal covariance matrix with non-zero blocks $\operatorname{cov}(y_i)$. For the estimation of parameters, the objective function we use is the generalized Gaussian likelihood function

$$GL(\beta, \Omega^*, \Omega; Y) = \log|\Omega^*| + \tilde{Y}(\beta)'\,\Omega^{-1}\,\tilde{Y}(\beta), \tag{3}$$

where $\tilde{Y}$ is the "standardized" counterpart of $Y$ and $\Omega^*$ is an $N \times N$ covariance matrix (possibly depending on $\Omega$ and the $X_i$'s) such that $GL(\cdot) \ge 0$. We note that the objective function (3) subsumes most of the QL-based methodologies in the literature. For example, it reduces to Whittle's Gaussian likelihood and to Davidian and Carroll's [15] PL when $v(\mu_j) \equiv 1$ and $\Omega^* = \Omega$. Moreover, for normal $Y$, $GL(\beta, \Omega, \Omega; Y)$ is the familiar normal likelihood function: it equals $-2$ times the normal log-likelihood up to an additive constant. When $\Omega^*$ and $\Omega$ are distinct, (3) also gives rise to some interesting classical and new cases:

- the restricted maximum likelihood (REML) estimates of the parameters of $\Omega$ are obtained from (3) when $\Omega^*$ is a specific function of $\Omega$ and the design matrices $X_i$ [1];
- $GL(\beta, I, \Omega; Y)$ is precisely the quasi-least squares (QLS) criterion [18] (see (4) below);
- $GL(\beta, \Omega^*, \Omega; Y)$ with $\Omega$ a certain line integral of $\Omega^*$ is equivalent to the extended QL function [19] given in (7).

As noted above, the QLS procedure is related to $GL(\beta, I, \Omega; Y)$. Assuming $\phi_j \equiv \phi$ in (1), QLS amounts to minimizing

$$Q(\beta, \alpha, \phi) = \sum_{i=1}^{m} (y_i - \mu_i)' \operatorname{cov}(y_i)^{-1} (y_i - \mu_i) = \phi^{-1} \sum_{i=1}^{m} (y_i - \mu_i)' V_i^{-1/2} R_i^{-1}(\alpha) V_i^{-1/2} (y_i - \mu_i) \tag{4}$$

with respect to $\beta$ and $\alpha$. Estimating equations for $\beta$ and $\alpha$ are obtained by differentiating $Q(\cdot, \cdot, \phi)$. Since $\beta$ appears both in $\mu_i(\beta)$ and in $v(\mu_i)$, one would like to avoid the complications of differentiating the latter with respect to $\beta$. For this, one may treat $\beta$ in the variance function as known, or as distinct from any parameter in the mean function [18,25,26]. With this in mind, setting $\partial Q/\partial\beta = 0$ gives estimating equations for $\beta$ that are precisely the Liang–Zeger GEE:

$$\sum_{i=1}^{m} D_i' \operatorname{cov}(y_i)^{-1} (y_i - \mu_i) = 0, \tag{5}$$

where $D_i = \partial\mu_i/\partial\beta$. However, solving (5) requires knowing or estimating $\alpha$. Setting $\partial Q/\partial\alpha$ to zero gives $q$ estimating equations for $\alpha$:

$$\sum_{i=1}^{m} (y_i - \mu_i)' V_i^{-1/2} \frac{\partial R_i^{-1}(\alpha)}{\partial\alpha_j} V_i^{-1/2} (y_i - \mu_i) = 0, \quad j = 1, 2, \dots, q. \tag{6}$$

These estimating equations for $\alpha$ are quite different from those obtained in the literature [4,27]. In fact, Prentice [27] initially suggested an ad hoc system of equations just like (5). It replaces $y_i$, $\mu_i$, $\operatorname{cov}(y_i)$ and $D_i$, respectively, by $w_i = (y_{i1}^2, \dots, y_{in_i}^2, y_{i1}y_{i2}, \dots, y_{i,n_i-1}y_{in_i})'$, $E(w_i)$, $\operatorname{cov}(w_i)$ and $\partial E(w_i)/\partial\alpha$. Later, Zhao and Prentice [28] showed that these are the pseudolikelihood equations for estimating the mean, variance and correlation parameters when the responses are assumed to follow a quadratic exponential model [29]. Since $\operatorname{cov}(w_i)$ depends on the third- and fourth-order cumulants of $y_i$, the GEE for the association parameter $\alpha$ are usually harder to apply and may demand assumptions that are hard to verify using the data at hand [19]. It is important to point out that Eq. (5) is an unbiased estimating equation for $\beta$, while the correlation parameter estimate induced by Eq. (6) is biased. However, the unbiasedness of the parameter estimate of $\alpha$ is guaranteed in Eq. (2), and another bias correction methodology has been introduced by Chaganty and Shults [30].

The extended GEE [19] are obtained from the idea of the extended QL function [6], that is, by maximizing the objective function

$$Q^+(\beta, \alpha, \phi) = -\sum_{i=1}^{m} (y_i - \mu_i)' \left( \int_0^1 s\, [\Sigma_i\{t(s), \alpha, \phi\}]^{-1}\, ds \right) (y_i - \mu_i) + \sum_{i=1}^{m} \log \left|\Sigma_i^{-1}(\mu_i, \alpha, \phi)\right|, \tag{7}$$

where $\Sigma_i(\mu_i, \alpha, \phi) = \operatorname{cov}(y_i)$ is given in (1) and $t$ is the straight-line path $t(s) = y + (\mu - y)s$, $0 \le s \le 1$ [6]. The estimating equations, a Fisher scoring algorithm for solving them, and the consistency and asymptotic normality of the solution are discussed in [19] under some regularity conditions. The authors of [19] also used a simulation study to compare the relative efficiencies of the estimators obtained from (7), from the GEE and from Zhao and Prentice's [28] alternative.
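To make (4) concrete, the sketch below evaluates the QLS criterion for a single subject in both of its forms and checks that they agree. The first form is the quadratic form in $\operatorname{cov}(y_i)^{-1}$. The second is the factored form $\phi^{-1}(y_i-\mu_i)'V_i^{-1/2}R_i^{-1}(\alpha)V_i^{-1/2}(y_i-\mu_i)$. The Poisson-type variance function, the AR(1) choice of $R_i(\alpha)$, the helper names and the numbers are all illustrative assumptions.

```python
import numpy as np

def ar1_corr(n, alpha):
    """AR(1) working correlation R(alpha) with entries alpha**|s - t| (assumed structure)."""
    idx = np.arange(n)
    return alpha ** np.abs(idx[:, None] - idx[None, :])

def qls_direct(y, mu, phi, alpha):
    """(y - mu)' cov(y)^{-1} (y - mu), with cov(y) = phi V^{1/2} R V^{1/2} and v(mu) = mu."""
    s = np.sqrt(phi * mu)
    cov = s[:, None] * ar1_corr(len(y), alpha) * s[None, :]
    r = y - mu
    return r @ np.linalg.solve(cov, r)

def qls_factored(y, mu, phi, alpha):
    """phi^{-1} (y - mu)' V^{-1/2} R^{-1}(alpha) V^{-1/2} (y - mu), the factored form in (4)."""
    z = (y - mu) / np.sqrt(mu)            # V^{-1/2}(y - mu)
    return z @ np.linalg.solve(ar1_corr(len(y), alpha), z) / phi

# One hypothetical subject with four visits
y = np.array([11.0, 4.0, 9.0, 6.0])
mu = np.array([8.0, 6.0, 5.0, 7.0])
q_direct = qls_direct(y, mu, phi=2.5, alpha=0.5)
q_factored = qls_factored(y, mu, phi=2.5, alpha=0.5)
assert np.isclose(q_direct, q_factored)

# Q(beta, alpha, phi) sums such terms over subjects (a second hypothetical subject here).
y2 = np.array([3.0, 5.0, 2.0, 4.0])
mu2 = np.array([4.0, 4.0, 3.0, 3.0])
Q = q_factored + qls_factored(y2, mu2, phi=2.5, alpha=0.5)
```

With $\Omega^* = I$ the term $\log|\Omega^*|$ vanishes. This is why the text identifies $GL(\beta, I, \Omega; Y)$ with the QLS criterion.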

4. Model formulation and estimation

In this section we formulate models for the covariance matrix $\Sigma$ in (2) of the partially "standardized" data. The goal is to provide a viable data-based alternative to the traditional practice of selecting a covariance matrix from a menu of prespecified covariance matrices [21]. Consequently, given any GEE, one can estimate $\beta$ using a variant in which $\operatorname{cov}(y_i)$ is replaced by a parametric model formulated from the data. That is, instead of prespecified correlation structures such as AR(1) and compound symmetry, we rely on flexible, data-driven methods to estimate the covariance matrix. Such estimated covariance matrices are parsimonious and guaranteed to be positive definite. Moreover, they are plausibly closer to the true covariance structure of the data, so the regression parameter estimates could be more efficient [1,10,25].

4.1. Modelling dispersions and correlations

Following the strategy for joint modelling of mean and dispersions [6], an unconstrained parameterization of a covariance matrix holds the key to a data-based procedure for formulating models for the dispersions and correlations. Let $\tilde{y} = (\tilde{y}_1, \dots, \tilde{y}_n)'$ be the random vector of partially "standardized" responses with the positive-definite covariance matrix $\Sigma$. For simplicity of notation, we drop the tilde (∼) from here on and view $y$ as a mean-zero random vector with covariance matrix $\Sigma$ as in (2). The diagonal entries of $\Sigma$ are the dispersions $\phi_j$ introduced in Section 3.

For a given positive-definite matrix $\Sigma$, it is known that there are a unique unit lower triangular matrix $T$ and a unique diagonal matrix $D$ with positive diagonal entries [23] such that

$$T \Sigma T' = D. \tag{8}$$

The $(t, j)$th below-diagonal entry of $T$ is the negative of $\phi_{tj}$. Here $\phi_{tj}$ is the coefficient of $y_j$ in $\hat{y}_t = \sum_{j=1}^{t-1} \phi_{tj} y_j$, the linear least-squares predictor of $y_t$ based on its predecessors $y_{t-1}, \dots, y_1$. The diagonal entries of $D$ are the variances of the prediction errors $\varepsilon_t = y_t - \hat{y}_t$, i.e. $\sigma_t^2 = \operatorname{var}(\varepsilon_t)$, $1 \le t \le n$. We note that the $\phi_j$'s and the $\phi_{tj}$'s are different quantities and unrelated to each other. The factorization (8) is equivalent to the following dynamic representation of the entries of $y$:

$$y_t = \sum_{j=1}^{t-1} \phi_{tj} y_j + \varepsilon_t, \quad t = 1, \dots, n, \tag{9}$$

which is reminiscent of autoregressive models or, more generally, of antedependence models [31,32]. In the sequel, we refer to the $\phi_{tj}$'s as the generalized autoregressive parameters (GARP) and to the $\sigma_t^2$'s as the innovation variances (IV) of $y$. The plot of $\phi_{tj}$ versus $j$ and that of $\log \sigma_t^2$ versus $t$ are together called the regressogram (Pourahmadi [23]). They are useful in suggesting parsimonious models and in identifying potential covariates relevant to the covariance matrix.

When $\Sigma$ is unstructured, the $\phi_{tj}$'s and $\log \sigma_t^2$'s are unconstrained and can be modelled in terms of covariates. To this end, for $t = 1, \dots, n$ and $j = 1, \dots, t-1$, we may consider the models

$$\log \sigma_t^2 = \nu(z_t'\lambda), \qquad \phi_{tj} = d(z_{tj}'\gamma), \tag{10}$$

where $\nu(\cdot)$ and $d(\cdot)$ are some known functions and $z_t$ and $z_{tj}$ are $q \times 1$ and $d \times 1$ vectors of covariates. The new parameters $\lambda = (\lambda_1, \dots, \lambda_q)'$ and $\gamma = (\gamma_1, \dots, \gamma_d)'$ relate to the dispersions and correlations of the random vector $y$. We note that, with $\Sigma = (\sigma_{st})$, $\sigma_{tt}$ and $\sigma_t^2$ represent different quantities. The former is simply $\operatorname{var}(y_t)$, while the latter is the prediction error variance of $y_t$ based on its predecessors. However, the two coincide when $y_1, \dots, y_n$ are independent.

For given covariance parameters $\lambda$ and $\gamma$ and the relevant covariates $z_t$ and $z_{tj}$, one can compute the $\phi_{tj}$'s and $\sigma_t^2$'s using (10). Furthermore, since (9) is similar to an autoregressive model, the entries of $\Sigma$ can be computed recursively using an analogue of the Yule–Walker equations. For example, multiplying both sides of (9) by $y_{t-k}$ and taking expectations as in AR models [33], we obtain

$$\sigma_{t,t-k} = \sum_{j=1}^{t-1} \phi_{tj} \sigma_{j,t-k} + \sigma_t^2 I_{\{k=0\}}, \quad k = 0, \dots, t-1,\ t = 1, \dots, n, \tag{11}$$

where $I_A$ is the indicator function of a set $A$. These recursive formulas express the entries of $\Sigma$ in terms of those of $T$, $D$ and the upper-left corner of $\Sigma$ itself. From (2) we have $\sigma_{tk} = \sqrt{\phi_t \phi_k}\,\rho_{tk}$, where $\rho_{tk}$ is the $(t, k)$th entry of $R$. Hence the dispersion $\phi_k = \sigma_{kk}$ is the $k$th diagonal entry of $\Sigma$, and the correlation $\rho_{tk}$, $t \ne k$, follows from the standard formula $\rho_{tk} = \sigma_{tk}/\sqrt{\phi_t \phi_k}$. The computation is done recursively using (11) in the order $\sigma_{11};\ \sigma_{21}, \sigma_{22};\ \sigma_{32}, \sigma_{31}, \sigma_{33};\ \dots$ and so on.

It is instructive to compare our approach to modelling dispersions and correlations with the approach of Zhao and Prentice [4], where the (constrained) diagonal and off-diagonal entries of $\operatorname{cov}(y)$ are modelled directly in terms of covariates. Consequently, their estimated covariance matrix is not guaranteed to be positive definite. In contrast, we formulate and estimate models for the unconstrained parameters $\log \sigma_t^2$ and $\phi_{tj}$, which amounts to recursive modelling of the diagonal and off-diagonal entries of $\operatorname{cov}(y)$. Moreover, (8) guarantees that the estimated covariance matrix of $y$, given by $\hat{\Sigma} = \hat{T}^{-1} \hat{D} \hat{T}'^{-1}$, is always positive definite.
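The factorization (8) and the recursion (11) are easy to check numerically. The sketch below assumes only NumPy and uses hypothetical helper names. It obtains the GARP $\phi_{tj}$ and IV $\sigma_t^2$ of a covariance matrix by regressing each $y_t$ on its predecessors, solving the normal equations with the covariance itself. It then rebuilds $\Sigma$ entry by entry from (11) in the order $\sigma_{11};\ \sigma_{21}, \sigma_{22};\ \dots$. A final check confirms that arbitrary unconstrained $\phi_{tj}$ together with positive $\sigma_t^2$ always produce a positive-definite matrix.

```python
import numpy as np

def garp_iv(Sigma):
    """Return (phi, iv): phi[t, j] (j < t) are the GARP and iv[t] the innovation variances.

    Row t of phi holds the least-squares coefficients of y_t on y_0, ..., y_{t-1}
    (0-based), so T = I - phi is the unit lower triangular factor in (8).
    """
    n = Sigma.shape[0]
    phi, iv = np.zeros((n, n)), np.zeros(n)
    iv[0] = Sigma[0, 0]
    for t in range(1, n):
        coef = np.linalg.solve(Sigma[:t, :t], Sigma[:t, t])   # normal equations
        phi[t, :t] = coef
        iv[t] = Sigma[t, t] - Sigma[:t, t] @ coef              # prediction error variance
    return phi, iv

def sigma_from_recursion(phi, iv):
    """Rebuild Sigma from GARP and IV with the Yule-Walker-type recursion (11)."""
    n = len(iv)
    S = np.zeros((n, n))
    for t in range(n):
        # off-diagonal entries sigma_{t,s} for s = t-1, ..., 0, then the diagonal sigma_{t,t}
        for s in list(range(t - 1, -1, -1)) + [t]:
            val = sum(phi[t, j] * S[j, s] for j in range(t))
            if s == t:
                val += iv[t]
            S[t, s] = S[s, t] = val
    return S

# Hypothetical positive-definite covariance (symmetric, strictly diagonally dominant)
A = np.array([[4.0, 1.0, 0.5, 0.2],
              [1.0, 5.0, 1.5, 0.5],
              [0.5, 1.5, 6.0, 2.0],
              [0.2, 0.5, 2.0, 7.0]])
phi, iv = garp_iv(A)
T, D = np.eye(4) - phi, np.diag(iv)
assert np.allclose(T @ A @ T.T, D)                      # factorization (8)
assert np.allclose(sigma_from_recursion(phi, iv), A)    # recursion (11) recovers Sigma

# Any real GARP and positive IV give a positive-definite Sigma
rng = np.random.default_rng(1)
phi_any = np.tril(rng.normal(size=(4, 4)), k=-1)
iv_any = np.exp(rng.normal(size=4))
S_any = sigma_from_recursion(phi_any, iv_any)
assert np.all(np.linalg.eigvalsh(S_any) > 0)
```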

4.2. The Gaussian estimation

If the formulated model for the covariance matrix has a familiar parametric structure found in the extensive menu of options in SAS [21], then one could use the GENMOD and/or MIXED procedures to estimate its parameters. Otherwise, using the reparameterization (10), one may compute the Gaussian estimates of the parameters $\alpha$ and $\beta$ by minimizing $GL(\beta, \Sigma(\alpha), \Sigma(\alpha); Y)$, even though the data are not normal [26].

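As noted in Section 3, the estimating equations for $\beta$ and $\alpha$ are essentially the same as those in (5) and (6). The difference is that the inverse of $\operatorname{cov}(y_i)$ and $\partial R_i^{-1}(\alpha)/\partial\alpha$ are now simpler to compute, since by (8) the inverse is available in closed form as $\Sigma^{-1} = T' D^{-1} T$.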

When the functions $\nu(\cdot)$ and $d(\cdot)$ in (10) are identity functions, the GL is quadratic in the $\gamma$ parameters and exponential in the $\lambda$ parameters. In this case, Pourahmadi [34] gives details of computing the score function, the Hessian matrix and the Fisher information, and of the Newton–Raphson algorithm.
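The sketch below illustrates Gaussian estimation for one group of mean-zero (already standardized) responses. It assumes identity functions in (10), with covariates $z_t = (1, 1/t^2)'$ and $z_{tj} = 1/(t-j)$, a form similar to the one fitted to the seizure data in Section 5. The true parameter values are illustrative choices, and SciPy's Nelder–Mead optimizer is used instead of the Newton–Raphson scheme of [34]. With $\varepsilon_i = T y_i$, the Gaussian objective $\sum_i \{\log|\Sigma| + y_i'\Sigma^{-1}y_i\}$ reduces to $\sum_i \sum_t \{\log\sigma_t^2 + \varepsilon_{it}^2/\sigma_t^2\}$, so no matrix inversion is needed.

```python
import numpy as np
from scipy.optimize import minimize

def factors(params, n):
    """T and innovation variances under the assumed model:
    log sigma_t^2 = lam1 + lam2 / t^2 and phi_tj = gam1 / (t - j)."""
    lam1, lam2, gam1 = params
    t = np.arange(1, n + 1)
    iv = np.exp(lam1 + lam2 / t**2)
    T = np.eye(n)
    for a in range(n):
        for b in range(a):
            T[a, b] = -gam1 / (a - b)   # below-diagonal entries of T are -phi_tj
    return T, iv

def gaussian_objective(params, Y):
    """sum_i [log|Sigma| + y_i' Sigma^{-1} y_i], computed as sum_i sum_t [log iv_t + eps_it^2 / iv_t]."""
    T, iv = factors(params, Y.shape[1])
    eps = Y @ T.T                       # row i is (T y_i)', the innovations of subject i
    return Y.shape[0] * np.sum(np.log(iv)) + np.sum(eps**2 / iv)

# Hypothetical mean-zero responses simulated from known parameters: y_i = T^{-1} eps_i
rng = np.random.default_rng(0)
true = np.array([1.0, 2.0, 0.4])
n, m = 5, 4000
T0, iv0 = factors(true, n)
innov = rng.normal(size=(m, n)) * np.sqrt(iv0)
Y = np.linalg.solve(T0, innov.T).T

fit = minimize(gaussian_objective, x0=np.array([0.5, 0.5, 0.1]), args=(Y,),
               method="Nelder-Mead",
               options={"xatol": 1e-6, "fatol": 1e-6, "maxiter": 10000, "maxfev": 20000})
print(fit.x)  # should lie close to (1.0, 2.0, 0.4)

# Cross-check the T, D shortcut against the direct determinant-and-inverse form
T, iv = factors(fit.x, n)
Tinv = np.linalg.inv(T)
Sigma = Tinv @ np.diag(iv) @ Tinv.T
direct = m * np.log(np.linalg.det(Sigma)) + np.sum(Y * np.linalg.solve(Sigma, Y.T).T)
assert np.isclose(direct, gaussian_objective(fit.x, Y))
```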

5. Data analysis

In this section we use a simulation study and two datasets to illustrate aspects of our methodology for modelling dispersions and correlations of discrete and continuous data. For the two datasets, we fix the mean–variance pair $(\mu_j, v(\mu_j))$ at $(\log \mu_j = x_j'\beta,\ v(\mu_j) = \mu_j)$ and at $(\mu_j \text{ arbitrary},\ v(\mu_j) \equiv 1)$, corresponding to the Poisson and normal distributions, respectively. In both cases we start with the saturated model for the mean response and partially "standardize" the response, if necessary.

5.1. Simulation study

In the following example, we study the efficiency of the re-gression parameter estimates by comparing the mean squareserror for the GEE and QLS with the GE for the AR(1) correla-tion matrix. We perform a simulation study considering fora two-treatment cross-over design mentioned in Diggle et al.[1] where they showed a severe loss of efficiency may occur inestimation of ˇ if the correlation was not accounted properly.We consider the following model:

yi = Xiˇ + i

where

X′i =

(1 1 1

xi1 xi2 xi3

)

Table 1 – Efficiency of GE compared with GEE and QLS forthe AR(1) correlation structure

˛ GEE QLS

ˇ0 ˇ1 ˇ0 ˇ1

0.1 1.1272 0.9733 1.0064 0.90970.3 1.0723 1.0058 1.0021 0.97650.5 0.9412 1.0471 0.9605 1.05760.7 0.7577 1.1481 0.9020 1.19590.9 0.5739 1.1094 0.9109 1.1556

5.2. Epileptic seizures data

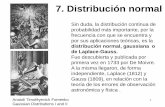

Modelling the mean of the epileptic seizures data [2] for pre-specified covariance matrices has been studied extensively inthe literature. Fig. 1 show the response of the first 20 patientsto the treatment plan. Consequently, we focus on modellingthe dispersion and the correlation matrix of this data and usethe following log-linear model to estimate the overall treat-ment effect:

log

(�ij

tij

)= ˇ0 + ˇ1xi1 + ˇ2xi2 + ˇ3xi1xi2 (12)

for j = 0, 1, 2, 3, 4, i = 1, 2, . . . , 59, where tij = 8 if j = 0 and tij = 2if j = 1, 2, 3, 4. The division by tij is needed to account for thedifferences in observation periods. The covariates are definedas

xi1 ={

1 if visit 1, 2, 3, 4,

0 if baseline,xi2 =

{1 if progabide,

0 if placebo.

Patient number 207 (observation 49) seems to be very un-usual with extremely high seizure count (151 in 8 weeks) atbaseline and his count doubled after treatment to 302 seizuresin 8 weeks. Diggle et al. [1] provide estimates of the log-linearregression coefficients (12) obtained using a GEE with a sin-gle dispersion parameter and exchangeable (compound sym-metry) correlation, with and without patient 207. In the latter

and ˇ′ = (ˇ0, ˇ1); i = 1, 2, . . . , 8. The treatment sequence(xi1, xi2, xi3) consists of all distinct permutation of zeros andones for i = 1, 2, . . . , 8. The correlation structure R(˛) will beconsidered as AR(1) and we assume a constant variance �. Thecorrelation among the simulated data is induced by i whichis assumed to be multivariate normal with mean 0 and co-variance matrix �R. Setting � = 4 and (ˇ0, ˇ1) = (120, −12.88),we simulate from the above model 1000 times to compute therelative efficiencies such as

e(ˇ) = MSE( ˆ GEE)

MSE( ˆ GE),

to compare GE to the GEE and QLS estimates of (ˇ0, ˇ1) andfind their efficiency. Shults and Chaganty [35] compared bothGEE and QLS for the AR(1) correlation structure and showedthat for ˛ < 0.5, the QLS estimate of ˇ is more efficient thanGEE while for ˛ > 0.5, GEE is better. This finding is justifiedbecause of using the method of moments in estimating thecorrelation parameter in the GEE case.

Table 1 shows that for ˇ1, the GE is more efficient thanGEE for all ˛ > 0.1 while the GE is more efficient than QLSfor ˛ > 0.3 which means that for the highly correlated re-peated measurements, the GE gives more efficient estimatesfor the slope ˇ1. On the other hand, for ˇ0, we see that theGE is more efficient than both QLS and GEE when ˛ < 0.5while it is not for ˛ > 0.5. In this case and ,in particular, forthe highly correlated repeated measurements, the GE andthe QLS show a poor performance versus the GEE, while forthe uncorrelated and weakly correlated data it seems thatthe GE is a good estimate of ˇ0. The simulation study givesthe insight that the GE is comparable to the GEE and theQLS.

case, they show there is modest evidence in favor of progabidesince the estimate of ˇ2 is negative. In the sequel, we excludepatient #207 from our analysis. Table 2 shows the parame-ter estimates using GEE, QLS and GE. It is clear that the esti-mates and their standard errors are in a reasonable agreement,

Fig. 1 – The epileptic seizures count data of the first 20patients.

c o m p u t e r m e t h o d s a n d p r o g r a m s i n b i o m e d i c i n e 82 (2006) 106–113 111

Table 2 – Parameter estimates (standard errors) of theepileptic data using GEE, QLS and GE

Parameter GEE QLS GE/AR(1) GE/CS

ˇ0 1.35(0.16) 1.31(0.16) 1.29(0.17) 1.35(0.16)ˇ1 0.11(0.12) 0.14(0.11) 0.17(0.12) 0.11(0.12)ˇ2 −0.11(0.19) −0.07(0.20) −0.05(0.20) −0.11(0.19)ˇ3 −0.30(0.17) −0.36(0.17) −0.40(0.18) −0.30(0.17)˛ 0.60 0.58 0.58 0.59� 10.40 15.29 15.30 15.29

moreover, the GE and GEE regression parameter estimates areidentical when the working correlation structure used is thecompound symmetry. All methods give negative parameterestimates for ˇ2 and ˇ3 which is a good indication that thetreatment has an effect on reducing the number of seizures.We note that the over-dispersion parameter estimate usingthe GEE was computed using the four consecutive visits [1],however, in the QLS and GE we have used the baseline obser-vation and the four consecutive visits.
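A short worked example shows how the coefficients of (12) translate into seizure rates. It uses the GEE column of Table 2 purely for illustration. Because $\log(\mu_{ij}/t_{ij})$ is linear in the covariates, the weekly rate changes from baseline to the treatment period by a factor of $\exp(\beta_1)$ in the placebo arm and $\exp(\beta_1 + \beta_3)$ in the progabide arm. Their ratio is therefore $\exp(\beta_3)$.

```python
import math

# GEE estimates from Table 2 (patient #207 excluded), used here only for illustration
beta = {"b0": 1.35, "b1": 0.11, "b2": -0.11, "b3": -0.30}

def expected_count(x1, x2, t, b=beta):
    """mu_ij = t_ij * exp(b0 + b1*x1 + b2*x2 + b3*x1*x2), i.e. model (12) solved for mu_ij."""
    return t * math.exp(b["b0"] + b["b1"] * x1 + b["b2"] * x2 + b["b3"] * x1 * x2)

# Weekly rates: the baseline covers 8 weeks, each post-randomization visit covers 2 weeks
placebo_change = (expected_count(1, 0, t=2) / 2) / (expected_count(0, 0, t=8) / 8)    # exp(b1)
progabide_change = (expected_count(1, 1, t=2) / 2) / (expected_count(0, 1, t=8) / 8)  # exp(b1 + b3)
relative_change = progabide_change / placebo_change
print(round(relative_change, 3))  # exp(-0.30) = 0.741 to three decimals
```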

Next, we estimate the regression parameters of the model (12) after a more detailed study of the covariance structure of the data. When formulating a model for the covariance structure of a dataset, it is prudent to start with a saturated model for the mean response profile [1]. Thus, we begin with the sample covariance matrix; Table 3 gives the sample correlations, variances, GARP and innovation variances.

A simple scatter plot reveals the a decreasing trend in boththe GARP and the innovation variances, so that the follow-ing models for �tj and �2

t , t = 1, . . . , 5; j = 1, . . . , t − 1 may seemplausible:

�tj = �1

(t − j)k+ εt,j, log �2

t = �1 + �2

t2+ εt, (13)

where we choose k = 1 or 2, but �1, �1, and �2 are the unknownparameters. Since (13) is linear in these parameters, ordinaryleast squares is probably the simplest noniterative method forestimating the parameters of the tentatively identified modelsfa

cc�

uewm

Table 4 – The BIC, loglikelihood values and parameterestimates for three different models for the covariancematrix of the seizure data

MODEL BIC log L q �1 �2 �1

M1 53.33 −1540.41 3 2.909 3.246 0.406M2 51.57 −1483.38 6 – – 0.442M3 49.47 −1404.14 15 – – –

Table 5 – Epileptic seizure data: fitted variances (maindiagonal), correlations (above the diagonal), GARP (belowthe diagonal) and IV (last row) for model M1

470.89 0.81 0.81 0.81 0.82−0.41 118.80 0.81 0.80 0.80−0.20 −0.41 96.69 0.83 0.82−0.14 −0.20 −0.41 95.67 0.85−0.10 −0.14 −0.20 −0.41 101.54

470.89 41.28 26.30 22.46 20.88

Table 6 – Regression estimates (standard errors) of theepileptic data using GE for the three models

Model M1 M2 M3

ˇ0 1.35(0.16) 1.34(0.20) 1.41(0.20)ˇ1 0.11(0.11) 0.15(0.11) 0.07(0.07)ˇ2 −0.11(0.19) −0.07(0.24) −0.13(0.24)ˇ3 −0.31(0.17) −0.34(0.16) −0.29(0.13)

or the components of $\Sigma$. These estimates could also be used as the initial values for the iterative GE, QLS and GEE methods.

Table 4 provides the ordinary least squares estimates, the corresponding values of the loglikelihood function and the BIC criterion for the following three distinct models: M1, $k = 1$ and $\sigma_t^2$ as in (13); M2, $k = 1$ and the $\sigma_t^2$'s unstructured (UN); and M3, $\Sigma$ unstructured. The BIC, usually used to compare the fit of several competing models, is defined by

$$\mathrm{BIC} = -\frac{2}{m}\,L + q\,\frac{\log m}{m},$$

where $m$ is the number of subjects in the study, $L$ is the maximized loglikelihood for a covariance model and $q$ is the number of covariance parameters. In this setup, a smaller value of BIC is associated with a better fitting model. Table 5 provides the fitted variances, correlations, GARP and IV corresponding to the model M1. The GE of the regression parameters and their standard errors corresponding to the $M_i$'s, $i = 1, 2, 3$, are given in Table 6, and they seem comparable to those in Table 2.

Table 3 – Epileptic seizure data: sample variances (main diagonal), correlations (above diagonal), the GARP (below diagonal) and IV (last row)

        479.01     0.68     0.73     0.50     0.75
        −0.26     69.42     0.69     0.54     0.72
        −0.15     −0.30    48.58     0.67     0.76
         0.03     −0.24    −0.97   131.60     0.71
        −0.10     −0.15    −0.14    −0.18    39.39
IV      479.01    37.49    19.33    70.61     9.79

Table 7 gives the ratio of the sample variances to the means of the response for each treatment-by-visit combination in two different cases: (1) the data set is considered as one group and (2) the data set is divided into progabide and placebo groups. The sample variance-to-mean ratio varies between 3.8 and 24.4, whereas a ratio of one corresponds to the Poisson model for the responses. The variation in the progabide group is clearly smaller than in the placebo group. Moreover, Table 7 gives the sample variance-to-mean ratio of the seizure data as well as the fitted variance-to-mean ratio based on M1 and (13). To account for the heterogeneity or over-dispersion not explained by the Poisson distribution, it is prudent to resume the analysis by taking the over-dispersion parameters in (1) to be distinct. For illustration, we have used the model M1 and the GE of the mean and covariance parameters to compute the ratios of fitted variances and means reported in the last row of Table 7. In view of (1), these ratios give estimates of the over-dispersion parameters; they are computed according to the procedure described following Eq. (11).

Table 7 – Sample variance-to-mean ratio for the epileptic seizure data

Treatment    Visit 1   Visit 2   Visit 3   Visit 4
Progabide      6.1       4.8       9.1       3.8
Placebo       10.8       7.5      24.4       7.3
Sample         9.5       6.6      17.9       6.2
Fitted        16.4      12.7      20.2      13.8
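The BIC comparison and the variance-to-mean ratios above involve only simple arithmetic. The Python sketch below assumes the BIC definition stated in the text, BIC = −(2/m)L + q log(m)/m, and uses the n − 1 divisor for the sample variance (the text does not say which divisor Table 7 uses). The function names and all numbers in it are hypothetical, not taken from the seizure data.

```python
import math
import statistics


def bic(loglik: float, q: int, m: int) -> float:
    """BIC = -(2/m) * L + q * log(m) / m, as defined in the text.

    loglik: maximized loglikelihood L of a covariance model
    q:      number of covariance parameters
    m:      number of subjects; a smaller BIC indicates a better fit
    """
    return -2.0 * loglik / m + q * math.log(m) / m


def variance_to_mean_ratio(counts) -> float:
    """Sample variance (divisor n - 1) over sample mean for one cell.

    A Poisson model implies a ratio near 1; larger values suggest
    over-dispersion relative to the Poisson.
    """
    mean = statistics.mean(counts)
    if mean == 0:
        raise ValueError("mean is zero; the ratio is undefined")
    return statistics.variance(counts) / mean


if __name__ == "__main__":
    # Hypothetical counts for one treatment-by-visit cell (not the seizure data).
    print(variance_to_mean_ratio([1, 2, 3, 4, 10]))  # 12.5 / 4 = 3.125

    # Two hypothetical covariance models for m = 50 subjects: the second has a
    # higher loglikelihood but many more parameters, and ends up with the larger BIC.
    print(bic(-950.0, q=4, m=50) < bic(-945.0, q=15, m=50))  # True
```

The penalty q log(m)/m is what lets a heavier covariance model lose to a simpler one even when its loglikelihood is higher.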

5.3. The cattle data

Kenward [31] provides the data and describes an experiment involving two treatments, A and B, to control intestinal parasites in cattle. Of 60 cattle, m = 30 received treatment A and the other thirty received treatment B. They were weighed n = 11 times: the first 10 measurements on each animal were made at 2-week intervals and the final measurement was made 1 week later. Measurement times were common across animals and are rescaled to t = 1, 2, ..., 10, 10.5; no observations were missing. Using the classical likelihood ratio test, the equality of the two within-treatment-group covariance matrices is rejected [32]. We report only the results for treatment A. A simple profile plot of the data indicates, among other things, that the measurement variances increase over time and that equidistant measurements are not equicorrelated. Consequently, no variant of a stationary covariance such as compound symmetry, AR(1) or one-dependence is appropriate for these data.
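The argument against stationary covariances rests on two checks that can be read off a sample covariance matrix: whether the variances are roughly constant over time, and whether correlations between equidistant measurements (same lag) are roughly equal. A minimal Python sketch of both checks follows. It assumes lags are counted in measurement index, so the cattle data's shorter final interval (t = 10 to 10.5) would need separate treatment; the function name is hypothetical.

```python
import numpy as np


def stationarity_summary(S):
    """Return (variances, lag_corrs) for a sample covariance matrix S.

    variances: main diagonal of S, in time order.
    lag_corrs: dict mapping lag k >= 1 to the correlations
               corr(y_t, y_{t-k}) along the k-th subdiagonal.
    Stationary structures (compound symmetry, AR(1), one-dependence)
    imply constant variances and a constant correlation within each lag.
    """
    S = np.asarray(S, dtype=float)
    variances = np.diag(S).copy()
    sd = np.sqrt(variances)
    R = S / np.outer(sd, sd)  # sample correlation matrix
    n = S.shape[0]
    # offset=-k picks the k-th diagonal below the main one: R[k, 0], R[k+1, 1], ...
    lag_corrs = {k: np.diagonal(R, offset=-k).copy() for k in range(1, n)}
    return variances, lag_corrs


if __name__ == "__main__":
    # A stationary AR(1) covariance: variance 2, correlation 0.6 ** lag.
    n, rho = 5, 0.6
    idx = np.arange(n)
    S_ar1 = 2.0 * rho ** np.abs(idx[:, None] - idx[None, :])
    v, lc = stationarity_summary(S_ar1)
    print(v)             # constant variances
    print(lc[1], lc[2])  # constant within each lag
```

For a matrix like Table 3, the same summary makes the non-constant diagonal and the unequal correlations within a lag visible at a glance.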

Let $S$ be the sample covariance matrix for treatment A, and let $T$ and $D$ be its factors as in (8). Plots of $\phi_{tj}$ versus $j$ and of $\log \sigma_t^2$ versus $t$ (Pourahmadi [23]) suggest the following cubic polynomials for the entries of $T$ and $D$, for $t = 1, 2, \ldots, 11$ and $j = 1, \ldots, t-1$:

$$
\begin{aligned}
\log \sigma_t^2 &= \lambda_1 + \lambda_2 t + \lambda_3 t^2 + \lambda_4 t^3 + \varepsilon_{tv},\\
\phi_{tj} &= \gamma_1 + \gamma_2 (t-j) + \gamma_3 (t-j)^2 + \gamma_4 (t-j)^3 + \varepsilon_{tjc}.
\end{aligned}
\tag{14}
$$
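The entries of $T$ and $D$ modelled in (14) can be obtained from $S$ by a modified Cholesky decomposition. The Python sketch below assumes that (8), which is not reproduced in this section, is the decomposition $T S T' = D$ of Pourahmadi [23], with $T$ unit lower triangular holding $-\phi_{tj}$ below its diagonal and $D = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_n^2)$. The function name and the test matrices are hypothetical.

```python
import numpy as np


def modified_cholesky(S):
    """Modified Cholesky factors of a symmetric positive definite matrix S.

    Returns (T, D, phi, iv) such that T @ S @ T.T equals D, where
      T:   unit lower triangular, with -phi[t, j] below the diagonal;
      D:   diagonal matrix of innovation variances (IV) sigma_t^2;
      phi: generalized autoregressive parameters (GARP), i.e. the
           coefficients in y_t = sum_{j<t} phi[t, j] y_j + e_t.
    Indices are 0-based here, whereas the text uses t = 1, ..., n.
    """
    S = np.asarray(S, dtype=float)
    L = np.linalg.cholesky(S)   # S = L @ L.T with L lower triangular
    d = np.diag(L)              # positive diagonal of L
    L_unit = L / d              # scale column j by 1 / L[j, j] -> unit diagonal
    T = np.linalg.inv(L_unit)   # inverse of a unit lower triangular matrix
    iv = d ** 2                 # innovation variances sigma_t^2
    D = np.diag(iv)
    phi = np.tril(-T, k=-1)     # GARP: strictly lower part of -T
    return T, D, phi, iv


if __name__ == "__main__":
    # Build S from known (hypothetical) factors, then recover them.
    T0 = np.array([[1.0, 0.0, 0.0],
                   [-0.5, 1.0, 0.0],
                   [0.2, -0.3, 1.0]])
    T0_inv = np.linalg.inv(T0)
    S = T0_inv @ np.diag([4.0, 2.0, 1.0]) @ T0_inv.T
    T, D, phi, iv = modified_cholesky(S)
    print(np.round(iv, 6))   # innovation variances 4, 2, 1
    print(np.round(phi, 6))  # phi[1, 0] = 0.5, phi[2, :2] = [-0.2, 0.3]
```

Each row of `phi` also equals the coefficient vector from regressing $y_t$ on its predecessors, which is the GARP interpretation. Because $T$ stores $-\phi_{tj}$, a table that lists the below-diagonal entries of $T$ rather than the $\phi_{tj}$ shows the opposite signs.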

This underscores the role of a data-based procedure in modelling a 66-parameter covariance matrix parsimoniously using only 8 unconstrained parameters: an 11 × 11 covariance matrix has 11 · 12/2 = 66 distinct entries, whereas (14) uses four $\lambda$'s and four $\gamma$'s. The LSE, QLS and MLE of the parameters of the cubic models for $\log \sigma_t^2$ and $\phi_{tj}$ in (14) are given in Table 8 [34]. We note that while the estimates of $\gamma$ are the same up to rounding, the QLS estimate of $\lambda_1$, the intercept for $\log \sigma_t^2$, is quite different from those of the other two methods. This discrepancy is possibly related to the bias of QLS in estimating the correlation parameters [18]. For a more thorough analysis of the cattle data, see Pan and Mackenzie [36].

Table 8 – Estimates of parameters of model (14) using LSE, QLS and MLE

Method   Parameter     1       2       3       4
LSE      λ           3.37   −1.47    0.24   −0.93
         γ           0.18   −1.7     1.64   −1.11
QLS      λ           7.08   −1.13    0.30   −0.85
         γ           0.18   −1.71    1.64   −1.11
MLE      λ           3.52   −1.14    0.30   −0.85
         γ           0.18   −1.71    1.64   −1.11
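The parsimony of (14) can be made concrete in code. The Python sketch below assumes that (8) is the modified Cholesky decomposition $T \Sigma T' = D$ with $-\phi_{tj}$ below the diagonal of $T$. It maps any real $(\lambda, \gamma)$ to a positive definite $\Sigma$, and computes least squares estimates of $(\lambda, \gamma)$ from a covariance matrix by regressing $\log \sigma_t^2$ and $\phi_{tj}$ on the cubic terms in (14). It illustrates the LSE idea only: it is not the authors' code, it does not implement the QLS or MLE columns of Table 8, and it uses the index $t = 1, \ldots, 11$ without any rescaling, so plugging in the Table 8 estimates is not expected to reproduce the paper's fit. All function names and parameter values are hypothetical.

```python
import numpy as np


def cubic_designs(n: int):
    """Design matrices for the cubic models in (14), using t = 1..n.

    Zv has rows (1, t, t^2, t^3) for log sigma_t^2; Zc has rows
    (1, l, l^2, l^3) with lag l = t - j, one row per pair j < t,
    in the order of `pairs` (0-based (t, j) indices).
    """
    t = np.arange(1, n + 1, dtype=float)
    Zv = np.vander(t, 4, increasing=True)
    pairs = [(a, b) for a in range(n) for b in range(a)]
    lags = np.array([a - b for a, b in pairs], dtype=float)
    Zc = np.vander(lags, 4, increasing=True)
    return Zv, Zc, pairs


def sigma_from_params(lam, gam, n: int):
    """Covariance implied by (14) without error terms: T^{-1} D T^{-T}.

    Any real lam and gam (length 4 each) give a positive definite matrix,
    which is why the parameterization is called unconstrained.
    """
    Zv, Zc, pairs = cubic_designs(n)
    D = np.diag(np.exp(Zv @ np.asarray(lam, dtype=float)))
    T = np.eye(n)
    for (a, b), value in zip(pairs, Zc @ np.asarray(gam, dtype=float)):
        T[a, b] = -value  # T holds -phi_tj below the diagonal
    T_inv = np.linalg.inv(T)
    return T_inv @ D @ T_inv.T


def garp_iv(S):
    """GARP phi[t, j] and IV sigma_t^2 via regressions of y_t on y_1..y_{t-1}."""
    n = S.shape[0]
    phi, iv = np.zeros((n, n)), np.empty(n)
    iv[0] = S[0, 0]
    for a in range(1, n):
        coef = np.linalg.solve(S[:a, :a], S[:a, a])
        phi[a, :a] = coef
        iv[a] = S[a, a] - S[:a, a] @ coef
    return phi, iv


def lse_fit(S):
    """Least squares estimates of lambda and gamma in (14) from a covariance S."""
    S = np.asarray(S, dtype=float)
    phi, iv = garp_iv(S)
    Zv, Zc, pairs = cubic_designs(S.shape[0])
    lam, *_ = np.linalg.lstsq(Zv, np.log(iv), rcond=None)
    y = np.array([phi[a, b] for a, b in pairs])
    gam, *_ = np.linalg.lstsq(Zc, y, rcond=None)
    return lam, gam


if __name__ == "__main__":
    lam = np.array([1.0, 0.2, -0.02, 0.001])    # hypothetical, not Table 8
    gam = np.array([0.3, -0.1, 0.01, -0.0005])  # hypothetical, not Table 8
    Sigma = sigma_from_params(lam, gam, 11)
    print(np.linalg.eigvalsh(Sigma).min() > 0)  # True: positive definite
    lam_hat, gam_hat = lse_fit(Sigma)
    print(np.allclose(lam_hat, lam), np.allclose(gam_hat, gam))  # True True
```

With n = 11 there are 55 GARP and 11 IV entries (66 in total), all driven here by 8 numbers, and no constraint on those 8 numbers is needed to keep $\Sigma$ positive definite.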

6. Discussion

We have indicated that Whittle's [12] modified Gaussian likelihood subsumes the recent improvements of GEE designed to estimate the mean and covariance parameters efficiently. A data-based method for formulating models for dispersions and correlations is presented and illustrated using two real datasets. This approach is promising and is likely to lead to specifications of covariance matrices that are closer to the unknown covariance matrix of the data. For further discussion of the effect of covariance misspecification on the efficiency of the regression parameter estimates, see [25,37]. Nevertheless, it seems prudent to model the covariance structure carefully and simultaneously with the mean, which could lead to improved model formulation and parameter estimation for both the mean and the covariance. Important aspects of this strategy for normal data are covered in Pourahmadi [23,34].

Acknowledgement

The second author was supported in part by the NSF grant DMS-0307055.

References

[1] P.J. Diggle, K.Y. Liang, S.L. Zeger, Analysis of Longitudinal Data, Oxford University Press, Oxford, 1994.

[2] P.F. Thall, S.C. Vail, Some covariance models for longitudinal count data with overdispersion, Biometrics 46 (1990) 657–671.

[3] K.Y. Liang, S.L. Zeger, Longitudinal data analysis using generalized linear models, Biometrika 73 (1986) 13–22.

[4] L.P. Zhao, R.L. Prentice, Correlated binary regression using a quadratic exponential model, Biometrika 77 (1990) 642–648.

[5] R.W.M. Wedderburn, Quasi-likelihood functions, generalized linear models and the Gauss–Newton method, Biometrika 61 (1974) 439–447.

[6] P. McCullagh, J.A. Nelder, Generalized Linear Models, 2nd ed., Chapman & Hall, London, 1989.

[7] A.F. Desmond, Optimal estimating functions, quasi-likelihood and statistical modeling, J. Stat. Plann. Infer. 60 (1997) 77–121.

[8] C.C. Heyde, Quasilikelihood and Its Applications: A General Approach to Optimal Parameter Estimation, Springer, New York, 1997.

[9] M. Crowder, On the use of working correlation matrix in using generalized linear models for repeated measures, Biometrika 82 (1995) 407–410.

[10] A. Qu, B.G. Lindsay, B. Li, Improving generalized estimating equations using quadratic inference functions, Biometrika 87 (2000) 823–836.

[11] N.M. Laird, J.H. Ware, Random-effects models for longitudinal data, Biometrics 38 (1982) 963–974.

[12] P. Whittle, Gaussian estimation in stationary time series, Bull. Int. Stat. Inst. 39 (1962) 105–129.

[13] M. Crowder, Gaussian estimation for correlated binomial data, J. R. Stat. Soc., Ser. B 47 (1985) 229–237.

[14] S.R. Paul, A.S. Islam, Joint estimation of the mean and dispersion parameters in the analysis of proportions: a comparison of efficiency and bias, Can. J. Stat. 26 (1998) 83–94.

[15] M. Davidian, R.J. Carroll, Variance function estimation, J. Am. Stat. Assoc. 82 (1987) 1079–1091.


[16] M. Davidian, D.M. Giltinan, Nonlinear Models for Repeated Measurement Data, Chapman & Hall, London, 1995.

[17] R.L. Prentice, L. Zhao, Estimating equations for parameters in means and covariances of multivariate discrete and continuous response, Biometrics 47 (1991) 825–839.

[18] N.R. Chaganty, An alternative approach to the analysis of longitudinal data via generalized estimating equations, J. Stat. Plann. Infer. 63 (1997) 39–54.

[19] D.B. Hall, T. Severini, Extended generalized estimating equations for clustered data, J. Am. Stat. Assoc. 93 (1998) 1365–1375.

[20] S.R. Lipsitz, G. Molenberghs, G.M. Fitzmaurice, J. Ibrahim, GEE with Gaussian estimation of the correlations when data are incomplete, Biometrics 56 (2000) 528–536.

[21] SAS Institute, SAS/STAT Software: Changes and Enhancements Through Release 6.12, SAS Institute, Cary, North Carolina, 1997.

[22] J. Morel, A covariance matrix that accounts for different degrees of extraneous variation in multinomial responses, Commun. Stat. Simul. Comput. 28 (1999) 403–413.

[23] M. Pourahmadi, Joint mean–covariance models with applications to longitudinal data: Unconstrained parameterization, Biometrika 86 (1999) 677–690.

[24] A.P. Verbyla, Modeling variance heterogeneity: Residual maximum likelihood and diagnostics, J. R. Stat. Soc., Ser. B 55 (1993) 493–508.

[25] Y. Wang, V. Carey, Working correlation structure misspecification, estimation and covariate design: implications for generalised estimating equations performance, Biometrika 90 (2003) 29–41.

[26] M. Crowder, On repeated measure analysis with misspecified covariance structure, J. R. Stat. Soc., Ser. B 63 (2001) 55–62.

[27] R.L. Prentice, Correlated binary regression with covariates specific to each binary observation, Biometrics 44 (1988) 1033–1048.

[28] L.P. Zhao, R.L. Prentice, Use of quadratic exponential model to generate estimating equations for means, variances and covariances, in: V.P. Godambe (Ed.), Estimating Functions, Clarendon Press, Oxford, 1991, pp. 103–117.

[29] C. Gourieroux, A. Monfort, A. Trognon, Pseudo maximum likelihood methods: theory, Econometrica 52 (1984) 681–700.

[30] N.R. Chaganty, J. Shults, On eliminating the asymptotic bias in the quasi-least squares estimate of the correlation parameter, J. Stat. Plann. Infer. 76 (1999) 145–161.

[31] M.G. Kenward, A method for comparing profiles of repeated measurements, Appl. Stat. 36 (1987) 296–308.

[32] D.L. Zimmerman, V. Nunez-Anton, Structured antedependence models for longitudinal data, in: T.G. Gregoire et al. (Eds.), Modeling Longitudinal and Spatially Correlated Data: Methods, Applications, and Future Directions, Springer Lecture Notes in Statistics, No. 122, Springer-Verlag, New York, 1997, pp. 63–76.

[33] M. Pourahmadi, Foundations of Time Series Analysis and Prediction Theory, Wiley, New York, 2001.

[34] M. Pourahmadi, Maximum likelihood estimation of generalised linear models for multivariate normal covariance matrix, Biometrika 87 (2000) 425–435.

[35] J. Shults, N.R. Chaganty, Analysis of serially correlated data using quasi-least squares, Biometrics 54 (1998) 1622–1630.

[36] J. Pan, G. Mackenzie, On modeling mean–covariance structures in longitudinal studies, Biometrika 90 (2003) 239–244.

[37] G.M. Fitzmaurice, A caveat concerning independence estimating equations with multivariate binary data, Biometrics 51 (1995) 309–317.