e-Infrastructures for e-Science: A Global View


J Grid Computing, DOI 10.1007/s10723-011-9187-y


Giuseppe Andronico · Valeria Ardizzone · Roberto Barbera · Bruce Becker · Riccardo Bruno · Antonio Calanducci · Diego Carvalho · Leandro Ciuffo · Marco Fargetta · Emidio Giorgio · Giuseppe La Rocca · Alberto Masoni · Marco Paganoni · Federico Ruggieri · Diego Scardaci

Received: 14 July 2010 / Accepted: 2 March 2011 / © Springer Science+Business Media B.V. 2011

G. Andronico · V. Ardizzone · R. Bruno · A. Calanducci · M. Fargetta · E. Giorgio · G. La Rocca · D. Scardaci (corresponding author): Division of Catania, Italian National Institute of Nuclear Physics, Via S. Sofia, 64, 95123 Catania, Italy; e-mail: [email protected]

G. Andronico, e-mail: [email protected]

V. Ardizzone, e-mail: [email protected]

R. Bruno, e-mail: [email protected]

A. Calanducci, e-mail: [email protected]

M. Fargetta, e-mail: [email protected]

E. Giorgio, e-mail: [email protected]

G. La Rocca, e-mail: [email protected]

R. Barbera: Department of Physics and Astronomy of the University of Catania, Viale A. Doria, 6, 95125 Catania, Italy; e-mail: [email protected]

R. Barbera: Italian National Institute of Nuclear Physics - Division of Catania, Viale A. Doria, 6, 95125 Catania, Italy

B. Becker: Meraka Institute, Building 43, CSIR, Meiring Naude Road, PO Box 395 Pretoria 0001, Pretoria, South Africa; e-mail: [email protected]

D. Carvalho: Centro Federal de Educação Tecnológica Celso Suckow da Fonseca, Av. Maracanã, 229, 20271-110 Rio de Janeiro, Brazil; e-mail: [email protected]

L. Ciuffo: Rede Nacional de Ensino e Pesquisa, R. Lauro Müller 116/1103, 22290-906 Rio de Janeiro, Brazil; e-mail: [email protected]

Abstract In the last 10 years, a new way of doing science has been spreading across the world thanks to the development of virtual research communities across many geographic and administrative boundaries. A virtual research community is a widely dispersed group of researchers and associated scientific instruments working together in a common virtual environment. This new kind of scientific environment, usually referred to as a “collaboratory”, is based on the availability of high-speed networks and broadband access, advanced virtual tools and Grid-middleware technologies which, altogether, are the elements of the e-Infrastructures. The European Commission has invested heavily in promoting this new way of collaboration among scientists, funding several international projects with the aim of creating e-Infrastructures to enable the European Research Area and connect European researchers with their colleagues based in Africa, Asia and Latin America. In this paper we describe the current status of these e-Infrastructures and present a complete picture of the virtual research communities currently using them. Information on the scientific domains and on the applications supported is provided together with their geographic distribution.

Keywords e-Infrastructure · e-Science · Virtual research communities · Virtual organization · Gridification

1 Introduction on the Concept of e-Infrastructure

Between the end of the Second Millennium and the beginning of the Third, the way scientific research is carried out in many parts of the world has been rapidly evolving towards what is nowadays referred to as e-Science, i.e. a “scientific method” which foresees the adoption of cutting-edge digital platforms known as e-Infrastructures [1] throughout the process from the idea to the production of the scientific result. Conceptually, e-Infrastructures can be represented by three layers: (1) the bottom layer is made up of the scientific instruments and experiments providing huge amounts of data; (2) on top of it is the Grid layer, comprising networked data processing centres and the middleware software that acts as the “glue” of resources; (3) the third and highest layer includes researchers who perform their activities regardless of geographical location, interact with colleagues, and share and access data.

A. Masoni: Italian National Institute of Nuclear Physics, Division of Cagliari, Complesso Universitario di Monserrato, S.P. per Sestu km. 0.700, 09042 Monserrato (CA), Italy; e-mail: [email protected]

M. Paganoni: Department of Physics of the University of Milano Bicocca, Piazza della Scienza, 3, 20126 Milan, Italy; e-mail: [email protected]

F. Ruggieri: Division of Roma Tre, Italian National Institute of Nuclear Physics, Via della Vasca Navale, 84, 00146 Rome, Italy; e-mail: [email protected]

Scientific instruments are becoming increasingly complex and produce huge amounts of data, on the order of a large fraction of the whole quantity of “information” produced by all human beings by all means. These data often relate to inter/multi-disciplinary analyses and have to be analyzed by ever-growing communities of scientists and researchers, called Virtual Organisations (VOs) [2, 3], whose members are distributed all over the world and belong to different geographical, administrative, scientific, and cultural domains. The emerging computing model, which has been under development for a decade or so, is what is called the “Grid” [4], i.e. a large number of computing and storage devices, linked together by high-bandwidth networks, on which special software called middleware (intermediate between the hardware and the operating system and the codes of the applications) [5] is installed, allowing the resources to behave as a single huge “distributed” computer which “dissolves” in the fabric of the Internet and can be accessed ubiquitously through virtual services and high-level user interfaces.

The Grid and the underlying network constitute what is commonly called an e-Infrastructure. On top of the e-Infrastructure we have the e-Science Collaborations, virtual research communities composed of widely dispersed groups of researchers working together in a common virtual environment.

The European Commission (EC) is investing heavily in e-Infrastructures through its Framework Programmes, and these platforms are by now considered key enablers of the European Research Area (ERA). In fact, at the top of the three-layer model of an e-Infrastructure there is the most important “network”: the human collaboration among scientific communities of researchers who work together on unprecedentedly complex multi-disciplinary problems whose solutions are highly beneficial for society and progress at large.

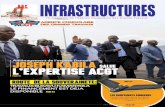

EC investments in e-Infrastructures have gone well beyond Europe's own borders, and many projects have been funded to create e-Infrastructures in, or extend them to, other regions of the world and make them connected and interoperable [6] with the European one. All together, these projects/initiatives have contributed to creating a “global” network and a “global” Grid as shown in Fig. 1.

All these projects share the same work plan, whose “virtuous cycle” can be summarized as follows:

• Set up and manage a pilot infrastructure;
• Seek, identify and support e-Science communities in the region and deploy their applications;
• Train users and site administrators to use and operate an e-Infrastructure based on the European middleware (e.g. gLite [7, 8]);
• Disseminate, both bottom-up and top-down, the e-Infrastructure paradigm for long-term sustainability of e-Science.

In this paper we not only provide a global view of the e-Infrastructures, based on the European middleware gLite, currently operational in the regions of the world, but we also show, for the first time, a global picture of how the research communities take advantage of this new scientific environment. After a description of the e-Infrastructures deployed in recent years around the world, we present a picture of the virtual research communities that exploit them worldwide, with their scientific applications classified by scientific domain and geographic location. In this way, the impact of the e-Infrastructure model on scientific research can be evaluated as a function of the scientific domain and the geographical distribution, depicting how this new way of doing science is spreading over the world.

The paper is organized as follows. Section 1 introduces the concept of e-Infrastructure. Section 2 describes the e-Infrastructure status in all the regions involved. Section 3 explains the Gridification Process, addressing the common problems encountered in deploying e-Science applications on e-Infrastructures and describing the procedure adopted. Section 4 gives a worldwide overview of the virtual research communities exploiting the existing e-Infrastructures with their scientific applications. Applications have been classified according to their scientific domains and their geographical origin, and a short description of some relevant pilot applications is provided for each of the scientific domains considered. Finally, in Section 5, conclusions are drawn.

Fig. 1 The “global” Grid


2 Regional e-Infrastructures

This section describes the status of the e-Infrastructures currently operational in the regions of the world. The picture given in this section takes into account only the e-Infrastructures based on the European middleware, gLite (with the sole exception of the Indian area). Several other important e-Infrastructures, based on different middleware, have been deployed and are currently running in the world, such as the Open Science Grid [9], DEISA [10], TeraGrid [11], NAREGI [12], the China National Grid [13], etc. They are outside the scope of this paper.

2.1 Europe

The Grid infrastructure for e-Science in Europe is based on the GÉANT network [14] and has been established through a series of projects that started in early 2001 with the European DataGrid (EDG) project [15], followed by the three phases of the Enabling Grids for E-SciencE (EGEE) project [16]. The EGEE project, which was based on national and thematic Grid efforts, as well as the pan-European network provided by GÉANT and the National Research and Education Networks (NRENs), brought together experts from all over Europe and the rest of the world (more than 50 countries) with the common aim of building on recent advances in Grid technology and developing a service Grid infrastructure which is available to scientists around the clock. Since the beginning of the second phase of the project, several scientists, grouped into Virtual Organisations (VOs), have accessed and used the virtual services exposed by the Grid framework to carry out collaborative research and gain access to shared computing and data resources. The up-to-date list of all registered VOs is available from the CIC portal (http://cic.gridops.org). EGEE provided a variety of services to scientists, ranging from training and user support to the software infrastructure necessary to access the resources. Thanks to the reliable services developed by the project, several scientific communities, such as Life Sciences and High Energy Physics (HEP), now depend on the EGEE Grid infrastructure, which is an essential part of their large-scale data processing. Other disciplines, notably astrophysics, Earth sciences, computational chemistry, and fusion, are increasingly using it for production processing. In 6 years, the EGEE project has also established several relationships with other Grid-related projects, such as the EUMEDGRID [17], EUChinaGRID [18], and EUAsiaGrid [19] projects, to name just a few, in order to extend the infrastructure and its paradigm, to foster the induction of new scientific communities, to establish a seamless computing infrastructure around the globe and to reduce the digital divide. Currently, 10 disciplines and 36 scientific areas are supported. Among them:

• Astronomy and Astrophysics;
• Computational Chemistry;
• Earth Sciences;
• Fusion;
• High-Energy Physics;
• Life Sciences.

2.1.1 The European Grid Infrastructure

The European Grid Infrastructure (EGI) is a federation of Resource Infrastructures (typically, but not only, National Grid Initiatives) with a central coordinating body (EGI.eu, incorporated in Amsterdam). EGI is based on the gLite middleware services deployed on a worldwide collection of computational and storage resources, plus the services and support structures put in place to operate them. As of today, the European Grid Infrastructure, shown in Fig. 2, consists of more than 330 Resource Centres for a total of about 330,000 CPU cores and 80,000 TB of storage.

2.2 Latin America

The Latin American Grid Infrastructure (LGI), based on the RedCLARA backbone [20], has been developed thanks to several projects co-funded by the European Commission since 2006 (EELA, EELA-2 and GISELA).

The RedCLARA backbone, implemented and managed by CLARA (Cooperación Latino Americana de Redes Avanzadas), is composed of 9 nodes and interconnects 12 Latin American NRENs: RNP (Brazil), InnovaRed (Argentina), REUNA (Chile), RENATA (Colombia), CEDIA (Ecuador), RAICES (El Salvador), RAGIE (Guatemala), CUDI (Mexico), RedCyT (Panama), RAAP (Perú), RAU2 (Uruguay) and REACCIUN (Venezuela). It is also connected to the pan-European network GÉANT, as well as to other international research networks.

Fig. 2 EGI infrastructure. GStat Geo View (GStat is a web application whose aim is to display information about Grid services, the Grid information system itself and related metrics (http://gstat.egi.eu/gstat/geo/openlayers). Sites under maintenance may not appear in the figure.)

LGI deployment started in 2006 thanks to the EELA first-phase project [21], which provided its users with a stable and well-supported Grid and proved, in the two-year lifetime of the project, that the deployment of a European–Latin American e-Infrastructure was not only viable but also responded to the real needs of a significant part of the scientific community. Afterwards, the EELA-2 project [22] (E-science Grid facility for Europe and Latin America) started and carried on the work performed during EELA with the aim of building a high-capacity, production-quality, scalable Grid to answer the needs of a wide spectrum of applications from European–Latin American scientific collaborations.

The focus of EELA-2 was on:

• Offering a complete set of versatile services fulfilling application requirements;
• Ensuring the long-term sustainability of the e-Infrastructure beyond the term of the project.

EELA-2 can be considered the first and most inclusive initiative that has been carried out in Latin America in the area of distributed computing infrastructures.

One of the main outcomes of the EELA-2 project was the definition of the LGI sustainability model, rooted in National Grid Initiatives (NGI) or Equivalent Domestic Grid Structures (EDGS), that will be implemented, in collaboration with CLARA, during the GISELA project, the third phase of the LGI deployment process. The ambitious target of GISELA is to make LGI self-sustainable by the end of the project. At the same time, GISELA works to provide Virtual Research Communities (VRCs) with the e-Infrastructure and Application-related Services required to improve the effectiveness of their research and to create a collaboration channel between LGI and the European Grid Initiative (EGI).

2.2.1 The Latin American Grid Infrastructure (LGI)

The Latin American Grid Infrastructure, shown in Fig. 3, consists, as of today, of 28 Resource Centres for a total of more than 6,000 CPU cores and about 500 TB of disk storage.

2.3 Mediterranean and Middle-East

Co-funded by the European Commission within the Sixth and Seventh Framework Programmes, the EUMEDGRID projects (EUMEDGRID and EUMEDGRID-Support [23]) have supported the development of a Grid infrastructure in the Mediterranean area. They have run in parallel but in conjunction with the EUMEDCONNECT projects [24, 25], which have played a pioneering role in the promotion of communication networks as fundamental components of e-Infrastructures in the Mediterranean. EUMEDGRID promoted the porting of new applications to the Grid platform, thus allowing Mediterranean scientists to collaborate more closely with their European colleagues. EUMEDGRID has disseminated Grid awareness and competences across the Mediterranean and, in parallel, identified new research groups to be involved in the project, helping them to exploit the enormous potential of Grids to improve their own research activities.

The implementation and coordination of a Grid infrastructure at a national (or wider) level can be regarded as an opportunity to optimize the usage of existing, limited storage and computing resources and to enhance their accessibility by all research groups. This is particularly relevant for the non-EU countries involved in the project.

Many research fields indeed have very demanding needs in terms of computing power and storage capacity, which are normally provided by large computing systems or supercomputing centres. Furthermore, sophisticated instruments may be needed to perform specific studies. Such resources pose several challenges to developing economies: they are expensive, they need to be geographically located in a specific place and they cannot attract a critical mass of users because they are usually very specific and are relevant only for small communities of researchers scattered across the country/region. This is the case even in some strategic domains such as water management, climate change, biodiversity and biomedical activities on neglected or emerging diseases. Thus, a significant number of researchers are forced to emigrate to more developed countries to be able to continue their scientific careers. However, thanks to the creation of global virtual research communities and distributed e-Infrastructure environments, all these drawbacks can be overcome: through an appropriate access policy, different user groups can use resources wherever they are located, according to their availability. Furthermore, geographically distributed communities working on the same problem can collaborate in real time, thus optimizing not only hardware and software resources but also human effort and “brainware”.

Fig. 3 LGI infrastructure. GStat Geo View

The EUMEDGRID projects have been conceived from this perspective and have set up a pilot Grid infrastructure for research in the Mediterranean region which is interoperable and compatible with that of the EGI initiative. The EUMEDGRID vision focused on improving both the technological level and the know-how of networking and computing professionals across the Mediterranean, thus fostering the introduction of an effective Mediterranean Grid infrastructure for the benefit of e-Science.

The EUMEDGRID achievements can be categorised into two main areas:

• The creation of a human network in e-Science across the Mediterranean;
• The implementation of a pilot Grid infrastructure, with gridified applications, in the area.

Cooperation among all the participants has been demonstrated by the enthusiastic participation in common workshops and meetings organized during the lifetime of EUMEDGRID and by the success obtained in fostering the creation of National Grid Initiatives and national Certification Authorities (CAs) officially recognized by IGTF. Impressive results were also obtained in the knowledge dissemination events on Grid technology and services.

The promotion of National Grid Initiatives carried out in all non-EGI partner countries registered a good level of success, with programmes already operational in Algeria, Egypt, Morocco and Tunisia and well-advanced plans in Jordan and Syria. The national impact and policy-level awareness in some of these countries have led to initial financial support for the initiatives.

Besides its scientific mission, EUMEDGRID also had a significant socio-economic impact in the beneficiary countries. Fostering Grid awareness and the growth of new competences in the scientific communities of the EU's neighbours is a concrete initiative towards bridging the digital divide and developing a peaceful and effective collaboration among all partners.

e-Infrastructures also help to mitigate the so-called “brain drain”, allowing brilliant minds in the area to stay in their regions and contribute significantly to cutting-edge scientific activities, concretely enlarging the European Research Area (ERA). Research and Education Networks and Grids are fundamental infrastructures that will allow non-EU researchers to carry out high-quality work in their home laboratories without the need to migrate to more advanced countries.

An extended Mediterranean Research Area could thus be seen as a first step towards the realisation of more politically ambitious plans of open market, open transportation infrastructures, free circulation of citizens, etc.

At present, a big effort is being devoted to consolidating the existing EUMEDGRID infrastructure and to developing sustainable e-Infrastructures in the Mediterranean region in a broad, general sense.

2.3.1 The Mediterranean Grid Infrastructure

The Mediterranean Grid Infrastructure, shown in Fig. 4, consists of 30 Resource Centres for a total of about 1,600 CPU cores and about 600 TB of storage.


Fig. 4 EUMEDGRID infrastructure. GStat Geo View

2.4 Far East Asia

The development of Grid infrastructures in the Asia-Pacific region has been driven by the participation of local scientists in the CERN Large Hadron Collider experiments as well as by a number of applications of specific interest such as biomedical research, engineering applications and disaster mitigation. Subsequently, the EUAsiaGrid project [19], co-funded by the European Commission in the context of its Seventh Framework Program, starting from the Grid sites already available in the region, managed to build a production Grid infrastructure, increasing the capacity available in a number of countries such as Malaysia, Indonesia, the Philippines, Thailand and Vietnam. Nowadays, this infrastructure ensures that local support and key services such as user interface nodes, compute and storage elements can be taken for granted by researchers and that the core of a sustainable e-Infrastructure in the region is in place. The Grid infrastructure is built on top of the third generation of the Trans-Eurasia Information Network (TEIN3), managed by the Asia-Pacific Advanced Network [26] organisation and the TEIN3 project [27], which today provides a dedicated high-capacity Internet network for research and education communities across Asia-Pacific. It currently connects 11 countries in the region (Australia, China, Indonesia, Japan, Korea, Laos, Malaysia, Philippines, Singapore, Thailand and Vietnam) and has direct connectivity to the GÉANT network in Europe.

While a number of countries in the Asia-Pacific region have made significant investments in e-Infrastructures for research, the level of funding is still very heterogeneous and only a few countries have National Grid Initiatives (NGIs) that provide the necessary coordination at the national level to leverage the capability of Grids to provide persistent and sustainable e-Infrastructures that can be taken for granted by researchers and that enable them to focus on their substantive research. Until recently, most Grid-related initiatives were based at individual institutions that sought to build up capacity to support specific research projects and application areas. As a consequence, many resource providers ended up trying to support installations with different middleware stacks, stretching their resources. Clearly, a coordinated approach to the development of a persistent and sustainable e-Infrastructure could not only maximise the return on investment by enabling a wider range of researchers to benefit from the resources but also help resource providers cope with the heterogeneity and continuous evolution of Grid technologies.

Through the coordination and support provided by EUAsiaGrid, much-needed local capacity has been developed, both in terms of resources available as part of the worldwide EGI infrastructure and in terms of the supporting human infrastructure that is needed for their ongoing operation and effective exploitation by researchers. To minimize barriers to accessing the Grid infrastructure, the EUAsiaGrid project also created and maintained a catch-all, application-neutral Virtual Organisation called EUAsia. Furthermore, several Certification Authorities (CAs) approved by the International Grid Trust Federation already operate in the region, with the Academia Sinica Grid Computing CA serving as a catch-all CA and taking care of users from those countries that do not yet have their own national CA. Any researcher from the region interested in trying the Grid for his/her research can get a certificate through a nearby Registration Authority and immediately subscribe to the EUAsia VO. Although the VO is application-neutral, the nodes serving it have many application packages installed and readily available. Also, each partner has set up a user interface to provide local access to the Grid.

2.4.1 The EUAsia-Grid Infrastructure

The EUAsia-Grid Infrastructure, shown in Fig. 5, consists, as of today, of 16 Resource Centres for a total of 880 CPU cores and more than 60 TB of storage.

The EUAsiaGrid project came to an end in June 2010 but the EUAsiaGrid Consortium has agreed to keep the existing infrastructure open on a best-effort basis.
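The capacity figures quoted in Sections 2.1.1, 2.2.1, 2.3.1 and 2.4.1 can be placed side by side with a few lines of code. The following is only a minimal sketch in Python: the names `REGIONS`, `cores_per_centre` and `share_of_total` are illustrative and do not belong to any Grid tool, and the qualifiers used in the text ("more than", "about") are dropped, so every derived ratio is merely indicative.

```python
# Capacity figures as quoted in the text. Qualifiers such as "more than"
# and "about" are dropped, so all derived values are approximate.
REGIONS = {
    "EGI (Europe)": {"centres": 330, "cores": 330_000, "storage_tb": 80_000},
    "LGI (Latin America)": {"centres": 28, "cores": 6_000, "storage_tb": 500},
    "EUMEDGRID (Mediterranean)": {"centres": 30, "cores": 1_600, "storage_tb": 600},
    "EUAsiaGrid (Far East Asia)": {"centres": 16, "cores": 880, "storage_tb": 60},
}


def cores_per_centre(region):
    """Average number of CPU cores per Resource Centre in a region."""
    entry = REGIONS[region]
    return entry["cores"] / entry["centres"]


def share_of_total(field):
    """Fraction of the summed value of `field` contributed by each region."""
    total = sum(entry[field] for entry in REGIONS.values())
    return {name: entry[field] / total for name, entry in REGIONS.items()}


if __name__ == "__main__":
    for name in REGIONS:
        print(f"{name}: {cores_per_centre(name):.0f} cores per Resource Centre")
    for name, share in share_of_total("cores").items():
        print(f"{name}: {share:.1%} of the quoted CPU cores")
```

Under these assumptions the sketch mainly illustrates the difference in scale between the European infrastructure and the regional pilot infrastructures; it says nothing about utilisation or about sites that belong to more than one infrastructure.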

2.5 India

In India, two main Grid initiatives have been taken at governmental level: the Regional WLCG [28], set up by the Department of Atomic Energy (DAE) in coordination with the Department of Science & Technology (DST), and the GARUDA National Grid Initiative [29]. GARUDA benefits from the creation of the National Knowledge Network (NKN), a new backbone created by the National Knowledge Commission (NKC) with the aim of bringing together all the stakeholders in Science, Technology, Higher Education, Research & Development, and Governance with speeds on the order of tens of Gb/s coupled with extremely low latencies. The regional WLCG in India is going to be migrated to NKN, which will provide transport to ERNET (the Indian National Research and Education Network), replacing its existing backbone.

Fig. 5 EUAsiaGrid Grid infrastructure. GStat Geo View

The Indian Department of Atomic Energy (DAE) is actively participating in the scientific program of the LHC Computing Grid project, taking an active part in the CMS and ALICE experiments, which are devoted to finding answers to the most fundamental questions about the constituents of matter. The data from the LHC experiments will be distributed around the globe according to a four-tiered model. Within this model, to support researchers with the required infrastructure, India has also set up regional Tier-2 centres connected to CERN. In India there are two Tier-2 centres: one for CMS at TIFR in Mumbai and one for ALICE at Saha-VECC in Kolkata. These centres provide access to CMS and ALICE users working from Tier-3 centres at universities and national labs, as well as LCG Data Grid services for analysis.

Specific activities are also ongoing in the area of Grid middleware software development, devoted to ensuring Grid enabling of IT systems. These activities cover Grid fabric management, Grid data management, data security, Grid workload scheduling and monitoring services, fault-tolerant systems, etc. DAE developed a number of Grid-based tools in the area of fabric management, the AFS file system, Grid View and data management, which have been deployed by CERN in its LHC Grid operations since September 2002. So far, a number of software tools and packages, such as a correlation engine, Grid operations monitoring, a problem-tracking system, the Pool Database Backend Prototype, Scientific Library Evaluation and Development of Routines, the AliEn Storage System and the Andrew File System, have been developed by DAE team members under a Computing Software agreement. Currently, BARC engineers are working on the enhancement of the Grid View software tool.

GARUDA is a collaboration of scientific and technological researchers on a nationwide Grid comprising computational nodes, mass storage systems and scientific instruments. It aims to provide the technological advances required to enable data- and compute-intensive science for the 21st century.

GARUDA transitioned from the Proof of Concept phase to the Foundation Phase in April 2008 and is currently in its third phase: Grid Technology Services for Operational GARUDA. This phase has been approved and funded for 3 years until July 2012.

The GARUDA project coordinator, CDAC, established in November 2008 an IGTF-recognized Certification Authority which allows Indian researchers to access worldwide Grids.

GARUDA aims at strengthening and advancing scientific and technological excellence in the area of Grid and Peer-to-Peer technologies. It will also create the foundation for next-generation Grids by addressing long-term research issues in the strategic areas of knowledge and data management, programming models, architectures, Grid management and monitoring, problem-solving environments, tools and Grid services.

The EU-IndiaGrid project [30], operating within the Sixth Framework Program of the European Commission, has played a bridging role between European and Indian Grid infrastructures, and its successor, EU-IndiaGrid2 [30], aims at increasing the cooperation between European and Indian e-Infrastructures, capitalizing on the EU-IndiaGrid achievements.

EU-IndiaGrid and EU-IndiaGrid2 are part of a group of projects, funded within the Sixth and Seventh Framework Programs for Research and Scientific Development of the European Commission, which aim at integrating the European Grid infrastructure with other regions in order to create one broad resource for scientists working on existing or future collaborations.

The EU-IndiaGrid project ran from 2006 to 2009. The leading responsibilities of the EU-IndiaGrid Indian partners and the project's bridging role between European and Indian e-Infrastructures gave the EU-IndiaGrid project the opportunity to be at the core of the impressive developments in India in the e-Infrastructures domain and to contribute effectively to improving cooperation between Europe and India in this area. In all these activities, the role and the contribution of EU-IndiaGrid partners, as well as the bridging role of the EU-IndiaGrid project, were particularly relevant and obtained full recognition at the highest level from representatives of the Indian Government and of the European Commission, thus helping to support the improvement of e-Infrastructure capabilities. Its successor, EU-IndiaGrid2, will run from January 2010 to December 2011 and capitalizes on the EU-IndiaGrid achievements by acting as a bridge across European and Indian e-Infrastructures to ensure sustainable scientific, educational and technological collaboration. The EU-IndiaGrid contribution to Euro-India e-Infrastructure cooperation and the perspectives for EU-IndiaGrid2 are well summarised in the words of Dr. Chidambaram, Principal Scientific Advisor to the Govt. of India, who gave the opening speech at the EU-IndiaGrid2 project launch: “I am happy to learn about the second phase EU-IndiaGrid2 project–Sustainable e-Infrastructures across Europe and India. The first phase has benefited immensely a variety of scientific disciplines including biology, earth science and the Indian collaboration for the Large Hadron Collider (LHC). The successful working of the initial phase of multi-gigabit National Knowledge Network, Indian Certification Authority, and participation in Trans-Eurasia Information Network (TEIN3) phase 3 are some of the important building blocks for supporting virtual research communities in India and their collaboration work with other countries.”

Fig. 6 Map of the GARUDA infrastructure

2.5.1 The GARUDA Grid Infrastructure

GARUDA, the National Grid Initiative of India, is a collaboration of scientific and technological researchers on a nationwide Grid comprising computational nodes, storage devices and scientific instruments. GARUDA today connects 45 institutions across 17 cities in its Proof of Concept (PoC) phase, with the aim of bringing “Grid” networked computing to research labs and industry. Figure 6 shows the current status of the e-Infrastructure.

2.6 Sub-Saharan Africa

With few exceptions, African universities and research centres lack access to dedicated global research and education resources because they are not connected to the global infrastructure consisting of dedicated high-capacity regional networks. The consequence is that research and higher education requiring such access cannot currently be conducted in Africa, and the continent is not well represented in the global research community. This is illustrated by the world map of the scientific divide (see Fig. 7), where territory size shows the

proportion of all scientific papers (published in 2001) written by authors living there.

An important bottleneck is the lack of direct peering with other research and higher education networks. This bottleneck can be removed only by creating dedicated National Research and Education Networks (NRENs) connecting research and tertiary education institutions in each African country to a Regional Research and Education Network (RREN) interconnected with the peer infrastructures on other continents. In this context, a pioneering and very important role has been played by the Ubuntunet Alliance [31]. Incorporated in 2006, Ubuntunet gathers the following 12 NRENs in Eastern and Southern Africa: Eb@le (Democratic Republic of Congo), EthERNet (Ethiopia), KENET (Kenya), MAREN (Malawi), MoRENet (Mozambique), RwEdNet (Rwanda), SomaliREN (Somalia), SUIN (Sudan), TENET (South Africa), TERNET (Tanzania), RENU (Uganda), and ZAMREN (Zambia), and it is fostering the creation of new ones in Botswana, Burundi, Lesotho, Namibia, Mauritius, Swaziland, and Zimbabwe.

The mission of the Alliance is to secure affordable high-speed international connectivity and efficient ICT access and usage for African

Fig. 7 World map of the scientific divide

NRENs. In this respect, Ubuntunet has been one of the stakeholders of the FEAST project [32] (Feasibility Study for African–European Research and Education Network Interconnection) which, between December 2008 and December 2009, studied the feasibility of connecting African NRENs to the GÉANT network and documented the issues in the region that inhibit these enabling technologies.

FEAST has also paved the way for the creation of the AfricaConnect consortium which, under the coordination of DANTE, should take care of creating, in the next 3–4 years, an RREN in Sub-Saharan Africa at a total cost of 15 M€, 80% funded by the European Commission and the rest co-funded by the beneficiary countries.

Notwithstanding the extensive dissemination activities of strategic projects such as IST-Africa [33], EuroAfrica-ICT [34], and eI-Africa [35], co-funded by the European Commission in the context of its Sixth and Seventh Framework Programs, the Sub-Saharan region of Africa has seen the least activity in distributed computing initiatives. However, the recent advent of affordable international bandwidth, the reform of national telecoms policies and the subsequent construction of high-bandwidth national research networks in the early part of the first decade of the century have had a catalytic effect on interest in deploying e-Infrastructures in the region. These naturally have a scope well beyond that of Grid computing projects for scientific research, but have been identified by researchers, higher-learning institutions, and governments in the region as enablers of collaboration and tools to reduce the effect of the digital divide discussed above.

As in other cases discussed in this chapter, scientific projects requiring significant infrastructure, in particular the Southern African Large Telescope (SALT, 2010) and the Karoo Array Telescope, were great stimuli for interest in deploying networks and Grids in the region. The remote location of the scientific equipment and the wide geographic separation of the members of the collaborations using it were prime motivators, for example, for the development of the South African NREN. Data sharing considerations were long a concern, too, for the South

African participation in two experiments at the Large Hadron Collider. Two groups of research centres participate in the ALICE and ATLAS experiments, respectively, and the hub of medical and fundamental nuclear physics research undertaken at the iThemba Laboratories was one of the original drivers for experimenting with a national data and compute Grid.

South Africa is the only country in the Sub-Saharan region with a dedicated activity to coordinate distributed computing, which started with two projects centrally funded by the Department of Science and Technology. These were the SANReN national research and education network and the Centre for High-Performance Computing, which was inaugurated in 2006. The plan for a high-speed network connecting the country’s universities and national laboratories generated interest in the creation of a federated distributed computing infrastructure based on the Grid paradigm. The creation of a Joint Research Unit in mid-2008 was the start of this project, which aimed to integrate existing computing clusters and storage distributed in the institutes into a national Grid computing platform.

The South African National Grid (SAGrid) [36] consisted, by the start of 2010, of a federation of seven institutes taking part in Grid operations and belonging to the SAGrid Joint Research Unit, with activities under way for the further inclusion of other universities in the country. SA-Grid relies on the high-bandwidth SANReN, and its development was based in many ways on the experience acquired in Europe, starting with the EGI model and regional activities. The EGI middleware (gLite) and the EGI operational tools were adopted as standards at all sites, ensuring that the infrastructure would be easily used by Virtual Organisations operating on the EGI resources.

A major obstacle in the Sub-Saharan region was the lack of a CA accredited by IGTF. Since no region of the IGTF is responsible for Sub-Saharan Africa, the nearest Policy Management Authority (PMA) is the one responsible for Europe and the Near East: EUGridPMA. A proposal to accredit a new CA for South Africa, the SAGrid CA, was accepted by EUGridPMA in 2009 and full accreditation is expected by mid-2011. To avoid delays, the INFN CA has assigned

Fig. 8 SA-Grid Grid infrastructure. Gstat Geo View

Registration Authorities in several South African institutes which are able to issue digital certificates for individuals and services locally.

Coordinated training and development events both in South Africa and the broader region, undertaken in collaboration with the GILDA t-Infrastructure [37], have expanded the base of competent site administrators and users, in concert with similar activities undertaken by the EUMEDGRID projects (see above). This foundation work is essential in developing the base of applications, technical experts and eventually (and most importantly) users in the region.

2.6.1 The SA-Grid Infrastructure

The SA-Grid Infrastructure, shown in Fig. 8, consists of 7 Resource Centres for a total of about 1,300 CPU cores and about 12 TB of storage.

3 Deploy e-Science Applications on e-Infrastructures: The Gridification Process

Application porting on e-Infrastructures is a highly important support service for the successful and efficient penetration of Grid technologies into the various research disciplines. Most of the major Grid projects recognized this and set up some form

of porting support for their user communities. Porting an application to an e-Infrastructure is a rather technology-oriented step that can be carried out only after the beneficiaries of an application have recognized that Grids could improve the application in some way. Furthermore, the beneficiaries must also identify the Grid type to which their application is suited. Reaching this level of understanding is difficult and requires analysing the problem from several perspectives. The porting process itself can then be carried out in a rather mechanical way, which can be described with the following workflow:

• Carrying out a technical analysis of the current form of the application: how it is used on a single computer/supercomputer/cluster/Grid, what its structure is, what the interfaces are between components, between the application and its environment, and between the application and its users, and how the application is currently embedded into the daily routine work of users.

• Understanding the “Grid vision” of the community: what the beneficiaries expect from the Grid-enabled version of the application and what their motivations and priorities are for porting this application to the Grid, e.g. better performance, scalability, accessibility, fault tolerance, quality of service, etc. What changes

they can tolerate in their routine work to get a Grid-enabled version of the application.

• Analysing the application structure from the Grid point of view: identifying the compute/data intensive parts, analysing the dataflow, collecting or measuring required hardware capacities, understanding licensing schemes, understanding compilation methods, programming interfaces, etc.

• Defining one (or more) porting scenarios by which the application could be ported to the Grid in order to meet the users’ demands. Discussing the scenarios with the application porting team and with the end users. Usually several iterations are required to select the final scenarios that represent the best solution for every involved party. The scenario makes recommendations on Grid services that are relevant to the application, and identifies infrastructural and software components that current Grids do not provide but that are required for successful adoption and so need to be developed. The foreseen steps of porting and development are associated with estimated human resource requirements. The advantages and disadvantages of the scenario over other solutions should be provided too, and this can later serve as a reference for the future extension of the already Grid-enabled (gridified) application.

• Completing the practical development steps of the porting. This may include the extension of the original code with new APIs, wrapping the code with scripts, adapting the code to the Grid with porting tools, developing new infrastructure services and new interfaces, and integrating various components. Component and application level testing and debugging, performance analysis and documentation close the core porting cycle.

This application porting methodology has been applied in the e-Infrastructures covered in this paper, with some slight differences according to their different contexts. In the next sub-sections we describe how the “gridification” process has been implemented in Europe, where Grid knowledge can be considered high, and in the other regions of the world. It is important to

note that the best way to promote the Grid model outside Europe is the identification and gridification of scientific applications that could greatly benefit from the e-Infrastructure. For this reason, a big effort has been devoted to looking for scientific applications able to satisfy this requirement and to selecting, in a proper way, which applications to support.

3.1 The Gridification Process in Europe

At the beginning of the EGEE project, two pilot applications were identified to test the infrastructure and its services. These applications came from the High Energy Physics (HEP) [38] and the Life Sciences domains. Now, many scientific applications exploit the distributed computing and storage resources of the EGEE infrastructure to effectively run data challenges and share knowledge with other partners regardless of their geographical location.

In its third phase, the EGEE project flagged application porting as a critical service for the successful adoption of Grid solutions by new users and new communities. One of the activities of the EGEE-III project (User Community Support and Expansion) includes “Application Porting Support” among its generic user support activities (besides Virtual Organization Support and Direct User Support). The goal of the EGEE Application Porting Support group was to aid developers in effectively porting Virtual Organizations’ (VO) applications to the Grid.

The EGEE Grid Application Support Centre (GASuC) [39] was implemented as a distributed network of centres coordinated by the Hungarian partner MTA SZTAKI and involved researchers with long-term experience in application porting from the following institutes:

• Universidad Complutense de Madrid (UCM), Madrid, Spain;

• Istituto Nazionale di Fisica Nucleare (INFN), Catania, Italy;

• Commissariat à l’Énergie Atomique (CEA), Paris, France;

• Consejo Superior de Investigaciones Científicas (CSIC), Madrid, Spain;

• Academia Sinica Grid Computing Centre (ASGC), Taipei, Taiwan;

• University of Melbourne, Melbourne, Australia.

The Grid Application Support Centre (GASuC) provided assistance to Grid users and application developers during the application gridification process. GASuC helped to identify suitable approaches and tools for the porting process and to apply best patterns and practices in order to get codes running on production Grid infrastructures. In order to speed up the porting of scientific applications to the production-quality infrastructures, intensive workshops and personalized training events were organized by the team to ensure that application owners became expert in the porting tools and could perform the porting process quickly and efficiently with the help of the porting team. After the complexity of the porting problem was understood, a porting expert was assigned to the client. The expert’s responsibility was to define the roadmap for the porting, to allocate resources, to coordinate the porting process and to ensure that the final application would meet the targeted requirements. For further information about the support cycle implemented by GASuC, as “seen” by the owner of an application, please refer to the “Gridification process” section (http://www.lpds.sztaki.hu/gasuc/?m=1).

3.2 The Gridification Process in Other Regions of the World

A slightly different procedure has been adopted by other projects, such as EELA-2, EUAsiaGrid and EUMEDGRID, to identify and support new applications on their Grid infrastructures. An application questionnaire has been created to gather information from the various potential partners about their applications. Applications are then selected taking into consideration several criteria, such as the number of involved institutions from Europe and from the region of interest for the project (Latin America, Mediterranean, etc.), suitability for Grid deployment, ease of gridification, Grid added value, resource (CPU, storage) commitments of the institutions involved, usage of the infrastructure (number of jobs and frequency of runs) and potential outreach/impact (in the scientific community, in industry, socially in the country, and towards policy/decision makers). These criteria, mapped onto quantitative ranges and properly weighted, allowed each application received through the questionnaire to be evaluated and ranked. Selected applications are then supported for deployment in the Grid infrastructure, and it is assumed that a selected application may go through the following stages:

1. Ungridified / ready for standalone use: The application has been developed and runs on a local cluster. The application cannot run unmodified or without adaptations in a Grid for multiple reasons: it needs recompilation against the specific OS or libraries available in Grid environments, its data need to be prepared for parallel processing, the algorithms need to be adapted to combine results obtained in a parallel manner, etc.;

2. Gridified / ready for gLite: The application has been adapted so that it can be deployed in a gLite-based Grid environment. This usually consists of (a) defining how data is cut up to be processed in a parallel manner and (b) creating scripts and adapter programs to coordinate launching jobs and assembling the resulting data. In more complicated cases, this might require actual modification of the applications, using Grid APIs, etc.;

3. Deployed / ready for validation: The application has been deployed in the development Grid environment (GILDA) and is ready to accept job submissions and start validation. At this stage applications must conform to specific Grid deployment procedures and standards. For instance, sites usually have deployment procedures for applications run in test mode that differ from those for applications run in production mode. Executable and data files must be placed in locations accessible to jobs whenever they are submitted to the queuing systems, etc.;

4. Ready for deployment: The application has been deployed and validated on the development infrastructure (GILDA) and is ready to be installed into the production infrastructure;

5. Production: The application is deployed andvalidated and is ready to start scientific

Fig. 9 Application life-cycle towards the production stage

production. Jobs are being launched in a regular manner, data is being produced and systemic non-functional qualities must be monitored (performance, accessibility, security, etc.).
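Stage 2 above describes cutting the input data into pieces, launching one job per piece and assembling the partial results. The following is a minimal local sketch of that split/run/merge pattern, not gLite code: a thread pool stands in for Grid job submission, and `split_into_chunks`, `run_job` and `run_split_merge` are hypothetical names introduced only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def split_into_chunks(items: Sequence[int], n_chunks: int) -> List[List[int]]:
    """Cut the input into at most n_chunks contiguous, near-equal pieces."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    size, extra = divmod(len(items), n_chunks)
    chunks: List[List[int]] = []
    start = 0
    for i in range(n_chunks):
        # The first `extra` chunks receive one additional element.
        end = start + size + (1 if i < extra else 0)
        if end > start:  # skip empty chunks when there are few items
            chunks.append(list(items[start:end]))
        start = end
    return chunks


def run_job(chunk: List[int]) -> int:
    """Hypothetical per-chunk job; a real one would run the application."""
    return sum(x * x for x in chunk)


def run_split_merge(
    items: Sequence[int],
    n_chunks: int,
    job: Callable[[List[int]], int] = run_job,
) -> int:
    """Split the data, run one job per chunk, then merge the partial results."""
    chunks = split_into_chunks(items, n_chunks)
    # Assumption: a local thread pool stands in for Grid job submission.
    with ThreadPoolExecutor(max_workers=len(chunks) or 1) as pool:
        partial_results = list(pool.map(job, chunks))
    return sum(partial_results)  # the "assembling" step


if __name__ == "__main__":
    print(split_into_chunks(list(range(10)), 3))
    print(run_split_merge(range(10), 3))
```

On a real infrastructure the merge step usually also has to cope with failed or resubmitted jobs, which this sketch leaves out.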

Different applications may enter this life cycle at different stages, depending on their degree of maturity (see Fig. 9).

Application deployment was supported by a group of highly skilled people, the “gridification team”, in charge of helping the application developers to interface their codes with the Grid middleware in the e-Infrastructure. This “helpdesk” approach was, and still is, carried out through both virtual meetings and specific on-demand retreats of one or two weeks. During such retreats, called Grid Schools, application experts work in close collaboration with tutors to interface their applications with the Grid middleware. These events proved to be very effective and decisively helped to speed up the porting of several scientific applications to the projects’ e-Infrastructures.
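The selection step described at the start of this subsection, in which criteria are mapped onto quantitative ranges, weighted and used to rank applications, can be sketched as a weighted average. The criterion names follow the text, but the 0 to 5 scale, the weights and the sample applications are assumptions made only for illustration; the projects' actual values are not given here.

```python
from typing import Dict, List, Tuple

# Hypothetical weights: the real weights used by the projects are not stated.
WEIGHTS: Dict[str, float] = {
    "institutions": 0.15,
    "grid_suitability": 0.20,
    "ease_of_gridification": 0.15,
    "grid_added_value": 0.20,
    "resource_commitment": 0.10,
    "expected_usage": 0.10,
    "outreach": 0.10,
}


def weighted_score(scores: Dict[str, int]) -> float:
    """Weighted average of per-criterion scores (assumed scale: 0 to 5)."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    total_weight = sum(WEIGHTS.values())
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / total_weight


def rank_applications(apps: Dict[str, Dict[str, int]]) -> List[Tuple[str, float]]:
    """Return (application, score) pairs, best score first."""
    scored = [(name, weighted_score(scores)) for name, scores in apps.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Illustrative questionnaire answers, not real project data.
    questionnaire = {
        "app_a": {name: 4 for name in WEIGHTS},
        "app_b": {name: 2 for name in WEIGHTS},
    }
    for name, score in rank_applications(questionnaire):
        print(f"{name}: {score:.2f}")
```

Dividing by the total weight keeps the result on the same scale as the individual scores even if the weights do not sum to one.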

4 Scientific Domains and Applications Supported: A Worldwide Overview

The evolution of the e-Infrastructure model around the world has reached a high level of maturity and, nowadays, there are several virtual research communities, spread across all the continents, profiting from this new kind of scientific environment. This section shows, per scientific domain

and per geographic area, the distribution of the applications deployed on the e-Infrastructures described in Section 1, providing, for the first time, a worldwide overview of the virtual research communities exploiting the existing e-Infrastructures with their scientific applications. Applications have been classified according to their scientific domains and their geographical origin (continent and country of the scientists developing/using them).

In the following sub-sections, a short description of some relevant pilot applications for each of the scientific domains considered is provided. These applications benefit from the e-Infrastructure in several ways: from the large amounts of computation time and storage available, and from the human collaboration created among scientific communities of researchers who work together, which allows brilliant scientists to stay in their regions while contributing significantly to cutting-edge scientific activities and reducing the digital divide. The number of applications profiting from the e-Infrastructures and their spread across several scientific domains can be considered two important clues suggesting that scientific research will be mainly based on e-Infrastructures in the near future.

4.1 Virtual Research Communities and Scientific Domains Involved

The applications using the e-Infrastructures described in Section 1 have been classified

Fig. 10 Applications distributed per Scientific Domain

according to the following taxonomy: Astronomy & Astrophysics, Bioinformatics, Chemistry, Civil Protection, Computer Science and Mathematics, Cultural Heritage, Earth Sciences, Engineering, Fusion, High-Energy Physics, Life Sciences and Physics.

Figure 10 shows the distribution of applications per scientific domain. The distribution per geographic origin is shown in Fig. 11 (a worldwide view) and in Figs. 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22 and 23 (per geographic region).

4.2 Astronomy and Astrophysics

4.2.1 CORSIKA (Europe, Germany–Switzerland–Italy)

CORSIKA (COsmic Ray SImulations for KAscade) [40] is a program for detailed simulation of extensive air showers initiated by high-energy cosmic ray particles. Protons, light nuclei up to iron, photons, and many other particles may be treated as primaries. The particles are tracked through the atmosphere until they undergo reactions with the air nuclei or, in the case of unstable secondaries, decay. The hadronic interactions at high energies may be described by several alternative reaction models: the VENUS, QGSJET, and DPMJET models are based on the Gribov–Regge theory, while SIBYLL is a minijet model. The neXus model extends far beyond a simple combination of QGSJET and VENUS routines. The most recent EPOS model is based on the neXus framework but with important improvements concerning hard interactions and nuclear and high-density effects. HDPM is inspired by findings of the Dual Parton Model and tries to reproduce relevant kinematical distributions measured at colliders. Hadronic interactions at lower energies are described either by the GHEISHA interaction routines, by a link to FLUKA, or by the microscopic UrQMD model. In particle decays all decay branches down to the 1% level are taken

Fig. 11 Applications distributed per continents

Fig. 12 Astronomy and astrophysics

into account. For electromagnetic interactions a tailor-made version of the shower program EGS4 or the analytical NKG formulas may be used. Options for the generation of Cherenkov radiation and neutrinos exist. CORSIKA may be used up to and beyond the highest energies of 100 EeV.

4.2.2 LOFAR (Europe, Netherlands)

LOFAR (Low Frequency Array) [41] is a unique instrument developed from the ambition of Dutch astronomers to observe the earliest stages of our universe. For this purpose, a radio telescope is needed that is a hundred times more sensitive than existing telescopes. The LOFAR radio telescope consists of thousands of sensors in the form of simple antennas. These antennas are distributed over Europe and connected to a supercomputer via an extensive glass fiber network. It was soon realised that LOFAR could be turned into a more generic Wide Area Sensor Network, and sensors for geophysical research and studies in precision agriculture have now been incorporated in LOFAR as well.

Fig. 13 Bioinformatics

Fig. 14 Chemistry

4.3 Bioinformatics

4.3.1 GAP Virtual Screening Service (Asia, Taiwan)

Dengue fever is an epidemic disease in more than 100 countries. The World Health Organization confirmed that around 2.5 billion people, about 40% of the world’s population, are now at risk. Fifty million cases of Dengue infection are estimated to occur annually worldwide, and around 95% of those involve children less than 15 years old in South-Eastern Asian countries. EUAsiaGrid has successfully deployed the Virtual Screening Service (VSS) based on the Grid Application Platform (GAP) [42] for the Avian Flu DC2 Refine disease. In March 2009 alone, a total of 1,111 CPU-days were consumed on the EUAsiaGrid infrastructure and more than 160,000 output files with a data volume of 12.8 GB were created and stored in a relational database. The scientific collaboration composed of EUAsiaGrid partners is now seeking potential drugs to cure Dengue Fever using the GVSS (GAP Virtual Screening Service; Fig. 24).
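To give a feel for the March 2009 figures quoted above, the short calculation below derives a few rough averages from them. It assumes decimal units (1 GB = 10^6 KB), treats "more than 160,000" as exactly 160,000 (so the per-file values are upper bounds) and spreads the CPU time evenly over the 31 days of March; none of these derived numbers appear in the original text.

```python
# Figures quoted in the text for March 2009.
cpu_days = 1_111
output_files = 160_000      # "more than 160,000", so per-file values are upper bounds
data_volume_gb = 12.8

# Assumption: decimal units, 1 GB = 1,000,000 KB.
avg_file_kb = data_volume_gb * 1_000_000 / output_files

# Average CPU time behind each output file, in minutes.
cpu_minutes_per_file = cpu_days * 24 * 60 / output_files

# Number of cores that would have to be busy for all 31 days of March.
equivalent_cores = cpu_days / 31

print(f"average output file size: about {avg_file_kb:.0f} KB")
print(f"CPU time per output file: about {cpu_minutes_per_file:.1f} minutes")
print(f"equivalent cores busy all month: about {equivalent_cores:.0f}")
```

Under these assumptions the output files are small and the workload is dominated by CPU time rather than storage.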

The target on which the Dengue Fever activity in EUAsiaGrid has focused is the NS3

Fig. 15 Civil protection

Fig. 16 Computer science and mathematics

protease (Nonstructural protein 3), an enzyme known to be critical for virus replication, since its amino acid sequence and atomic structure are very similar among the different diseases caused by flaviviruses. Grid-enabled high-throughput screening with structure-based computational methods has been used to identify small-molecule protease inhibitors. ASGC has developed the GVSS application package, integrated with the gLite-based services DIANE2 and AMGA, and uses Autodock as the simulation docking engine. Users can submit Grid jobs as well as select compounds and targets in the application. GVSS also enables users to upload their own compounds and targets to run the same docking process, compare the significant components, and verify them through in-vitro experiments.

4.3.2 The Rosetta “ab initio” Software to Predict the 3D-structure of Never Born Proteins (NBP; Europe, Italy)

Rosetta is an “ab-initio” protein structure prediction software [43] which is based on the assumption that in a polypeptide chain local interactions

Fig. 17 Cultural heritage

Fig. 18 Earth sciences

bias the conformation of sequence fragments, while global interactions determine the three-dimensional structure with the minimal energy compatible with the local biases. To derive the local sequence–structure relationships for a given amino acid sequence (the query sequence), Rosetta ab-initio uses the Protein Data Bank to extract the distribution of configurations adopted by short segments in known structures. The latter is taken as an approximation of the distribution adopted by the query sequence segments during the folding process.

The protein structures predicted using Rosetta ab-initio during some data challenges have been compared with the structures predicted for the same proteins using the Early/Late stage software developed by Roterman et al. at the Medical College of the Jagiellonian University (Krakow, Poland). One of these proteins, protein number 4091, was selected to be produced and characterized experimentally. The protein was expressed in the bacterium Escherichia coli in a laboratory of the Peking University in China and its structure is in the process of being determined experimentally by nuclear magnetic resonance methods.

Fig. 19 Engineering

Fig. 20 Fusion

4.4 Chemistry

4.4.1 Gromacs (Latin America, Brazil)

GROMACS [44] is a versatile package to perform molecular dynamics, i.e. to simulate the Newtonian equations of motion for systems with hundreds to millions of particles. It is primarily designed for biochemical molecules like proteins and lipids that have many complicated bonded interactions, but since GROMACS is extremely fast at calculating the non-bonded interactions (which usually dominate simulations), many groups are also using it for research on non-biological systems, e.g. polymers. GROMACS supports all the usual algorithms that researchers would expect from a modern molecular dynamics implementation. The input/output data depend on the complexity of the molecule being studied and on the number of steps configured for the experiment. For an average molecule, the input size is around 100 MB and the output data is around 10 GB.
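To illustrate what "simulating the Newtonian equations of motion" means in practice, the sketch below integrates a single particle in one dimension with the velocity Verlet scheme, a standard molecular dynamics integrator. It is not GROMACS code and does not use the GROMACS API; the harmonic force, the function names and the parameter values are assumptions chosen to keep the example small.

```python
from typing import Callable, List, Tuple


def velocity_verlet(
    x: float,
    v: float,
    force: Callable[[float], float],
    mass: float,
    dt: float,
    n_steps: int,
) -> List[Tuple[float, float]]:
    """Integrate m * d2x/dt2 = F(x) for one particle in one dimension."""
    trajectory = [(x, v)]
    a = force(x) / mass
    for _ in range(n_steps):
        # Position update uses the current velocity and acceleration.
        x = x + v * dt + 0.5 * a * dt * dt
        # Velocity update averages the old and new accelerations.
        a_new = force(x) / mass
        v = v + 0.5 * (a + a_new) * dt
        a = a_new
        trajectory.append((x, v))
    return trajectory


def harmonic_force(x: float, k: float = 1.0) -> float:
    """Toy force field (a spring); real force fields are far more complex."""
    return -k * x


if __name__ == "__main__":
    steps = velocity_verlet(x=1.0, v=0.0, force=harmonic_force,
                            mass=1.0, dt=0.01, n_steps=1000)
    x_end, v_end = steps[-1]
    # Total energy should stay close to its initial value of 0.5.
    print(0.5 * v_end ** 2 + 0.5 * x_end ** 2)
```

A production package applies the same kind of update to every particle at every step, which is why the number of particles and the number of steps drive the input and output sizes mentioned above.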

4.4.2 Haddock (Africa, South Africa)

HADDOCK (High Ambiguity Driven protein–protein DOCKing) [45] is an information-driven flexible docking approach for the modeling of biomolecular complexes. HADDOCK differs from ab-initio docking methods because it encodes information from identified or predicted protein interfaces in ambiguous interaction restraints (AIRs) to drive the docking process. HADDOCK can deal with a large class of modeling problems. It was first developed for protein–protein docking but has since been applied to a variety of protein–ligand, protein–peptide, and protein–DNA/RNA complexes.

Fig. 21 High-energy physics

Fig. 22 Life sciences

4.5 Computer Science and Mathematics

4.5.1 MeshStudio (Europe, Italy)

3D object analysis is of particular interest in many research fields, and the popularity of 3D computer models has grown during the last decades due to the advance of 3D scanning technology. MeshStudio [46] allows interaction with 3D objects, represented by triangular meshes, by means of a GUI (Graphical User Interface). The main features of the interface are: (1) control of the orientation of the triangle facets of the mesh; (2) control of the polygon rendering; (3) control of

Fig. 23 Physics

Fig. 24 GAP virtual screening service deployed in the Grid infrastructure

the lighting; (4) control of rotation, translation and scaling.

MeshStudio implements a new automatic algorithm for 3D edge detection on large objects. The detection step is performed by evaluating the curvature of the surface considering those elements falling within a neighborhood, defined by a set of radius values. The algorithm does not require any input parameter and returns a set of element patches that represent the salient features on the model surface for the best radius value. The computational complexity of the algorithm required a parallel implementation in order to process high-resolution meshes in an acceptable time and, for this reason, the application has been deployed in a Grid infrastructure.

4.6 Cultural Heritage

4.6.1 Archaeogrid (Europe, Italy)

The approach of modern archaeology to the studyof the evolution of ancient human societies is

based on the acquisition and analysis of many types of data. It is well known that archaeology makes large use of digital technologies and computer applications for data acquisition, storage, analysis and visualization. The information coming from remote sensing, from the acquisition of 3D images of artifacts by laser scanners, from precise GPS referencing of geographical points, and from other sciences is greatly increasing the amount of data that need to be stored and made available for analysis. Such data must, however, be analyzed if they are to become valuable information and knowledge. The data analysis uses advanced methods developed in mathematics, informatics, and physics and in other natural and human sciences. Moreover, the use of Virtual Archaeology as a new approach to narration and visualization in archaeology is expanding rapidly, not only in the museum and archaeology professions, but also in the broadcast media, tourism and heritage industries. The inevitable result of this is an exponential increase in the amount and complexity of information that

must be acquired, transferred, stored, processed and analyzed.

ArchaeoGRID [47] is a very powerful application for archaeological research, helping to manage this information using the power of Grid infrastructures and implementing the most common data analysis methods.

4.6.2 Astra (Europe, Italy–Spain)

The ASTRA (Ancient instruments Sound/Timbre Reconstruction Application) project [48] has been running since 2006 as a collaboration among the Conservatory of Music of Parma, the Conservatory of Music of Salerno, the Division of Catania of the Italian National Institute of Nuclear Physics and other distinguished international partners. Since 2010 it has been hosted at the University of Malaga. The project’s aim is to bring back to life the sound/timbre of ancient musical instruments. By applying physical modelling synthesis, a complex digital audio rendering technique which allows modelling the time-domain physics of an instrument, the experts who are carrying out the project can recreate models of some musical instruments that were lost for ages (for hundreds and hundreds of years) and reproduce their sounds by simulating their behaviour as mechanical systems. So far, the ASTRA effort has focused on the reconstruction of the sound of ancient plucked string instruments. The history of plucked string instruments dates back 6 millennia to Mesopotamia, where the instruments were built with the limited technical skills, craftsmanship, and material knowledge of ancient times. In the course of history, plucked string instruments were first constructed by joining natural environmental objects such as wooden sticks, gourds, and turtle shells, and later, gradually, by manufacturing selected, processed and specifically designed parts. The idea and the mathematical concepts behind remodelling early instruments have been around since the 1970s, but the amount of information that needed to be processed to put these theories into practice was too great for earlier computers. Nowadays, researchers can access the distributed computing power of the Grid, built on research and academic networks, and speed up the sound reconstructions. The starting point is represented

by all archaeological findings about the instru-ment such as fragments from excavations, writtendescriptions, pictures on ancient urns, etc. ThePhM Synthesis uses a set of mathematical equa-tions and algorithms that describe the physicalmaterials used in the ancient instruments to gen-erate the physical sources of sound. For instance,to model the sound of a drum, a formula for howstriking the drumhead injects energy into a twodimensional membrane would be created. Then,the properties of the membrane (mass density,stiffness, etc.), its coupling with the resonance ofthe cylindrical body of the drum, and the condi-tions at its boundaries (e.g., a rigid terminationto the drum’s body) would describe its movementover time and how it would sound. Similar mod-elling can be carried out with instruments suchas violins, though replicating the slip–stick behav-iour of the bow against the string, the width ofthe bow, the resonance and damping behaviourof the strings, the transfer of string vibrationsthrough the bridge, and, finally, the resonance ofthe soundboard in response to those vibrations.
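As a rough illustration of what simulating a plucked string in the time domain can look like, the sketch below uses the Karplus-Strong algorithm, a well-known and very simple plucked-string model. It is not the ASTRA code and is far cruder than the physical models described above; the function name, sample rate, and damping value are illustrative assumptions.

```python
import random


def karplus_strong(frequency_hz, duration_s, sample_rate=44100, damping=0.996, seed=0):
    """Return a list of samples for a plucked string (Karplus-Strong sketch)."""
    rng = random.Random(seed)
    # The delay-line length fixes the pitch of the simulated string.
    period = int(sample_rate / frequency_hz)
    # The "pluck": the string starts from a burst of random displacement.
    delay_line = [rng.uniform(-1.0, 1.0) for _ in range(period)]

    samples = []
    for i in range(int(duration_s * sample_rate)):
        idx = i % period
        nxt = (idx + 1) % period
        samples.append(delay_line[idx])
        # Averaging two neighbouring samples acts as a low-pass filter;
        # the damping factor (an assumed value) models energy loss.
        delay_line[idx] = damping * 0.5 * (delay_line[idx] + delay_line[nxt])
    return samples


tone = karplus_strong(440.0, 0.5)
print(len(tone), "samples generated")
```

Each note is independent of the others, so a reconstruction that needs many notes or many parameter variations can be split into separate jobs.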

4.7 Earth Science

4.7.1 WRF4G (Asia/Europe, Thailand–Spain)

WRF (Weather Research and Forecasting) for GRID (WRF4G) [49] is a GRID-enabled framework for the WRF Modeling System. The Weather Research and Forecasting Model [50] is a next-generation mesoscale numerical weather prediction system designed to serve both operational forecasting and atmospheric research needs. It features multiple dynamical cores, a 3-dimensional variational (3DVAR) data assimilation system, and a software architecture allowing for computational parallelism and system extensibility. WRF is suitable for a broad spectrum of applications across scales ranging from meters to thousands of kilometers.

WRF allows researchers to conduct simulations reflecting either real data or idealized configurations. WRF provides operational forecasting with a model that is flexible and computationally efficient, while offering the advances in physics, numerics, and data assimilation contributed by the research community. WRF has a rapidly growing community of users.

The deployment of WRF on the GRID allows the WRF user community to exploit the computational and storage resources provided by the GRID to carry out more ambitious experiments. Typical experiments, such as multi-physics runs, parametric sweeps, and initial-condition perturbations, are well suited to GRID computing.
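Parametric sweeps and perturbation experiments suit Grid computing because they break down into many independent runs. The sketch below shows one way such a sweep could be expanded into job descriptions. It does not reflect the actual WRF4G configuration format: `build_sweep` and all option names and values are hypothetical.

```python
from itertools import product


def build_sweep(parameters):
    """Expand {name: [values]} into one independent job description per combination."""
    names = sorted(parameters)
    value_lists = [parameters[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Hypothetical option names and values, for illustration only.
sweep = build_sweep({
    "microphysics_scheme": [1, 2, 3],
    "boundary_layer_scheme": [1, 2],
    "initial_perturbation_seed": [0, 1, 2, 3],
})
print(len(sweep))  # 3 * 2 * 4 = 24 independent jobs
```

Since no job depends on the output of another, each dictionary could be submitted as a separate Grid job.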

4.7.2 Seismic Travel Time Tomography (Asia, India)

The Earth Sciences application “Seismic Travel Time Tomography”, known as SeisTom [51], has been developed by the Seismic Data Processing Team, Scientific Engineering & Computing Group, at the Centre for Development of Advanced Computing in Pune (India). It reconstructs an Earth velocity model from travel times. The reconstructed model depends heavily on the correctness of the travel times picked for the reconstruction.

Seismic imaging, popularly known as tomography (a term borrowed from the medical sciences), produces a raster image of the internal structure by combining information from a set of projections obtained at different viewing angles. To engineer high-resolution and accurate solutions to mining problems, seismic travel time tomography can yield a high-resolution image of the subsurface that provides information about lithologic characterization, fracture, void or gallery detection, fluid monitoring, etc. The measured characteristics of the received wave energy include amplitude and travel time.

A number of seismic tomographic studies have explained how seismic velocities can be estimated along raypaths between boreholes and demonstrated their results with real field examples. Imaging techniques used in these applications include back projection, back propagation and ray tracing.

The resolution of the estimated images in seismic tomography depends on the inversion schemes used, the completeness of the data sets and the initially assigned values of the medium parameters in the iterative reconstruction techniques. Since the aim is always an optimal solution, even starting from poor initial models, a global optimization and search technique, the real-coded genetic algorithm (RCGA), is applied to solve the present imaging problem.

Using seismic travel time tomography with a parallel real-coded genetic algorithm, the subsurface can be imaged successfully. Complex synthetic examples demonstrated the validity of this transmission seismic imaging technique. The tomography algorithm using RCGA is highly compute-intensive, because travel times must be computed for thousands of models. The advantage of the GA-based tomography algorithm is that it can be easily distributed on HPC and Grid platforms.
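To make the idea concrete, here is a minimal sketch of a real-coded genetic algorithm applied to a toy travel-time problem. It is not the SeisTom implementation. It assumes straight rays through a 2 × 2 grid of cells, so that each travel time is a sum of path length times slowness, and the operators used (tournament selection, arithmetic crossover, Gaussian mutation, elitism) and every parameter value are illustrative choices.

```python
import random


def travel_times(path_lengths, slowness):
    """Straight-ray forward model: t_i = sum_j L_ij * s_j."""
    return [sum(l * s for l, s in zip(row, slowness)) for row in path_lengths]


def misfit(path_lengths, observed, slowness):
    """Sum of squared differences between predicted and observed travel times."""
    predicted = travel_times(path_lengths, slowness)
    return sum((p - o) ** 2 for p, o in zip(predicted, observed))


def rcga(path_lengths, observed, bounds, pop_size=40, generations=200, seed=1):
    """Real-coded GA: each individual is a vector of real-valued cell slownesses."""
    rng = random.Random(seed)
    lo, hi = bounds
    n_cells = len(path_lengths[0])

    def cost(model):
        return misfit(path_lengths, observed, model)

    def clip(gene):
        return min(hi, max(lo, gene))

    population = [[rng.uniform(lo, hi) for _ in range(n_cells)] for _ in range(pop_size)]
    history = []  # best misfit at the start of each generation
    for _ in range(generations):
        population.sort(key=cost)
        history.append(cost(population[0]))
        children = [population[0][:]]  # elitism: keep the best model unchanged
        while len(children) < pop_size:
            # Tournament selection of two parents.
            a = min(rng.sample(population, 3), key=cost)
            b = min(rng.sample(population, 3), key=cost)
            # Arithmetic crossover on the real-valued genes.
            w = rng.random()
            child = [w * x + (1.0 - w) * y for x, y in zip(a, b)]
            # Gaussian mutation of some genes, then clip to the allowed range.
            child = [g + rng.gauss(0.0, 0.02 * (hi - lo)) if rng.random() < 0.2 else g
                     for g in child]
            children.append([clip(g) for g in child])
        population = children
    best = min(population, key=cost)
    history.append(cost(best))
    return best, history


# Synthetic example: cells are numbered 0 (top-left), 1 (top-right),
# 2 (bottom-left), 3 (bottom-right); each row holds one ray's path lengths.
SQRT2 = 2 ** 0.5
PATHS = [
    [1, 1, 0, 0], [0, 0, 1, 1],                  # horizontal rays
    [1, 0, 1, 0], [0, 1, 0, 1],                  # vertical rays
    [SQRT2, 0, 0, SQRT2], [0, SQRT2, SQRT2, 0],  # diagonal rays
]
TRUE_SLOWNESS = [0.5, 0.4, 0.25, 0.2]  # hypothetical values
OBSERVED = travel_times(PATHS, TRUE_SLOWNESS)
best, history = rcga(PATHS, OBSERVED, bounds=(0.1, 1.0))
print("initial misfit:", history[0], "final misfit:", history[-1])
```

Evaluating `cost` for each candidate model is independent of all the others, which is the property that lets a GA of this kind be distributed across HPC or Grid nodes.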

While most of the existing tomographic imaging techniques are quite versatile, the RCGA algorithm is found to work over a large domain of problems, even with a very poor initial guess.

The application was tested on Param Padma (a 1 TFlop machine). It gave satisfactory results, but a higher-resolution reconstructed model was required for greater detail and better visualization, and the estimated time on Param Padma for such a detailed model output was quite large. It has therefore been ported to the Grid, exploiting the power of the EU-IndiaGrid infrastructure.

4.8 Engineering

4.8.1 Industry@Grid (Latin America, Brazil)

Developed by the Production Engineering Department of the Federal Center for Technological Education Celso Suckow da Fonseca of Rio de Janeiro (Brazil), Industry@Grid [52] is a Grid application composed of a set of Grid modules aimed at solving industrial operations problems. Gridified during the first year of the EELA-2 Project, the Product Mix Module of Industry@Grid is currently used by the plastic film industry in order to achieve a more profitable product mix distribution. The Product Mix Problem is to find out which products to include in the production plan and in what quantities these should be produced in order to maximise profit, market share or some other goal.

In a real case, a plastic film production line is able to produce a few hundred distinct products, varying in weight, painting and material cutting. In real life, the optimisation of the product mix leads to a problem with thousands of variables and more than a thousand constraints. This kind of problem can be solved by an ordinary personal computer in a matter of seconds. However, besides the large number of variables and constraints, each production line generates scrap during its production process through extrusion and cutting, which increases the complexity, since variability is introduced. This new problem requires a considerable amount of time and computing power to be tackled.

One way to solve this new problem is to use Monte Carlo simulation methods, in which the computer creates several instances or configurations of the scrap generated during the production process. The Industry@Grid module thus generates several instances of the new problem in order to maximise the contribution margin of each item to the overall profit, simulating the scrap distribution for every instance and solving them using the Grid. In the company environment, every product mix sample requires the execution of 50,000 instances, amounting to over 16 CPU days for a single study cycle. Thanks to the Latin American Grid infrastructure, this special case of the product mix problem can be solved in an affordable time, allowing an increase in operational margin.
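The figures quoted above imply an average of roughly 16 × 86,400 s / 50,000 ≈ 27.6 s of CPU time per instance. The sketch below illustrates the Monte Carlo structure on a deliberately tiny problem. It is not the Industry@Grid module. It assumes a single shared capacity constraint, for which a greedy ranking by margin per hour gives the optimal plan, whereas the real problem would need a full linear-programming solver. The product data, the uniform scrap model, and all names are hypothetical.

```python
import random


def solve_product_mix(products, capacity_hours):
    """Greedy optimum for a product mix with ONE shared capacity constraint."""
    plan, profit, remaining = {}, 0.0, capacity_hours
    # Rank products by margin earned per hour of line capacity.
    ranked = sorted(products, key=lambda p: p["margin"] / p["hours"], reverse=True)
    for p in ranked:
        quantity = max(0.0, min(p["max_demand"], remaining / p["hours"]))
        plan[p["name"]] = quantity
        profit += quantity * p["margin"]
        remaining -= quantity * p["hours"]
    return plan, profit


def simulate_instance(products, capacity_hours, seed):
    """One Monte Carlo instance: sample a scrap fraction per product, then solve."""
    rng = random.Random(seed)
    degraded = []
    for p in products:
        # Assumed scrap model: scrap reduces the margin of each unit produced.
        scrap = rng.uniform(*p["scrap_range"])
        degraded.append({**p, "margin": p["margin"] * (1.0 - scrap)})
    return solve_product_mix(degraded, capacity_hours)


# Hypothetical data, for illustration only.
PRODUCTS = [
    {"name": "A", "margin": 10.0, "hours": 2.0, "max_demand": 30.0, "scrap_range": (0.02, 0.10)},
    {"name": "B", "margin": 6.0, "hours": 1.0, "max_demand": 40.0, "scrap_range": (0.02, 0.10)},
    {"name": "C", "margin": 3.0, "hours": 1.0, "max_demand": 100.0, "scrap_range": (0.02, 0.10)},
]
CAPACITY_HOURS = 100.0

# Instances are independent, so each seed could be run as a separate Grid job.
profits = [simulate_instance(PRODUCTS, CAPACITY_HOURS, seed)[1] for seed in range(1000)]
mean_profit = sum(profits) / len(profits)
print("mean profit over", len(profits), "instances:", round(mean_profit, 2))
```

The spread of `profits` across instances is what a study of this kind would examine. Only the seed changes between runs, which makes the workload easy to split.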

4.8.2 MAVs-Study (Latin America, Argentina)

MAVs-Study (Biologically Inspired, Super Maneuverable, Flapping Wing Micro-Air-Vehicles) [53] is an application jointly developed by the Argentinian Universities of Río Cuarto and Córdoba to study the flight of insects and small birds in order to design, in the future, micro air vehicles that can be used in different contexts such as atmospheric studies, fire detection, inspections of collapsed buildings, hazardous spill monitoring, inspections in places too dangerous for humans, and planetary exploration, among others. Flying robots with maneuvering capabilities similar to those of insects, the most agile flying creatures on Earth, have ceased to be a subject of science fiction and are becoming real. However, there are still major engineering problems to be solved, such as miniature energy sources, ultra-light materials, and the complete understanding of unsteady aerodynamics at low Reynolds numbers.

Unlike conventional vehicles such as airplanes, dirigible airships, and helicopters, which are studied with steady and linear aerodynamic theories, insects use unsteady and highly non-linear unconventional aerodynamic mechanisms, which are effective at low Reynolds numbers. These include, among others, delayed stall, the rotational lift generated by the rotation of the wings, and wake capture.

Due to the unsteady, non-linear, and three-dimensional nature of the flow generated by the flapping of the wings, the aerodynamic model used in MAVs-Study is a modified version of the “unsteady vortex lattice method” (UVLM), a generalization of the “vortex lattice method”. MAVs-Study makes it possible to study the unsteady aerodynamics associated with the flow generated by a fly (Drosophila melanogaster) in “hover”. The combination of the kinematics model with the aerodynamics model, together with a pre-processor able to generate the geometry of the insect wings, makes it possible to predict the flow field around the flapping wings, estimate the space-time distribution of vorticity on the bound vortex sheet of the wings, estimate the distribution of vorticity and the shape of the wake emitted from the sharp edges of the wings, predict the aerodynamic loads on the wings, and take into account all possible aerodynamic interferences.
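Vortex lattice methods in general are built from straight vortex segments, and the velocity each segment induces at a point follows from the Biot-Savart law. The sketch below shows that basic building block only. It is not taken from MAVs-Study; the function names and the small cutoff used to avoid the singularity on the segment axis are assumptions.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def segment_induced_velocity(p, a, b, gamma, cutoff=1e-10):
    """Velocity induced at point p by a straight vortex segment from a to b.

    gamma is the circulation of the segment (Biot-Savart law).
    """
    r1, r2, r0 = sub(p, a), sub(p, b), sub(b, a)
    c = cross(r1, r2)
    c2 = dot(c, c)
    n1, n2 = math.sqrt(dot(r1, r1)), math.sqrt(dot(r2, r2))
    # Assumed regularisation: return zero for points on the segment axis.
    if c2 < cutoff or n1 < cutoff or n2 < cutoff:
        return (0.0, 0.0, 0.0)
    k = gamma / (4.0 * math.pi * c2) * (dot(r0, r1) / n1 - dot(r0, r2) / n2)
    return (k * c[0], k * c[1], k * c[2])


# Check against the textbook limit: a very long segment behaves like an
# infinite line vortex, whose induced speed at distance h is gamma / (2*pi*h).
v = segment_induced_velocity((0.0, 1.0, 0.0), (-1e4, 0.0, 0.0), (1e4, 0.0, 0.0),
                             gamma=2.0 * math.pi)
print(v)
```

In a lattice method, the contributions of all bound and wake segments are summed at each control point. The number of segment-point pairs grows quickly as the wake develops, which is why such simulations are computationally demanding.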

MAVs-Study was developed on the Windows operating system and could be deployed on a Grid infrastructure thanks to the successful porting of the gLite middleware to the Microsoft Windows platform, carried out by the Italian National Institute of Nuclear Physics (INFN) in the context of the EELA-2 project. Thanks to this work, a completely new set of e-Science applications, until now excluded from the Linux-based Grid world, can today benefit from powerful Grid infrastructures.

4.8.3 SACATRIGA (Africa, Morocco)

The Sub-channel Analysis Code for Application to TRIGA research reactors (SACATRIGA) [54] is a preliminary code devoted to studying the thermal-hydraulic behavior of the Moroccan TRIGA MARK II research reactor. A sub-channel approach is used to model the geometry of the reactor core. In each sub-channel, temperature, pressure and flow rate are predicted by solving the Navier–Stokes equations coupled to the energy conservation equation. The application requires an MPI compiler for Fortran 90 and has been deployed on the EUMEDGRID infrastructure. A graphic interface, which makes use of the GENIUS portal, is also available on the infrastructure.

4.9 High-Energy Physics

4.9.1 LHC Experiments (International)

All the experiments at the CERN Large Hadron Collider are run by international collaborations, bringing together scientists from institutes all over the world. Each experiment is distinct, characterized by its unique particle detector.

The two large experiments, ATLAS [55] and CMS, are based on general-purpose detectors to analyze the myriad of particles produced by the collisions in the accelerator. They are designed to investigate the largest range of physics possible. Having two independently designed detectors is vital for cross-confirmation of any new discoveries made.

Two medium-size experiments, ALICE and LHCb [56], have specialized detectors for analyzing the LHC collisions in relation to specific phenomena.

The last two experiments, TOTEM [57] and LHCf, are much smaller in size. They are designed to focus on ‘forward particles’ (protons or heavy ions). These are particles that just brush past each other as the beams collide, rather than meeting head-on.

The ATLAS, CMS, ALICE and LHCb detectors are installed in four huge underground caverns located around the ring of the LHC. The detectors used by the TOTEM experiment are positioned near the CMS detector, whereas those used by LHCf are near the ATLAS detector. All the LHC experiments make heavy use of the Grid infrastructures available in the various regions of the world and represent their most important customers.

4.9.2 The Pierre Auger Observatory (Europe/Latin America, Argentina–Italy–Mexico)

The Pierre Auger Experiment [58] is designed to study cosmic-ray radiation in the ultra-high-energy regime (10^18–10^20 eV) and is currently taking data as the deployment goes on. An intense simulation activity is planned in order to analyze the recorded data, which show a large variability in the primary energy (over 2 orders of magnitude), in zenith angles, and presumably also in the primary mass. In addition, showers with the same primary energy, zenith angle and mass have different longitudinal and lateral profiles, because of the intrinsically stochastic development of the cascade in the atmosphere.

The southern Auger Observatory, located near the small town of Malargüe (in the Argentinian province of Mendoza), is nearly completed and is taking data regularly.