Computer Organisation and Assembly Language - PTU (Punjab ...

-

Upload

khangminh22 -

Category

Documents

-

view

0 -

download

0

Transcript of Computer Organisation and Assembly Language - PTU (Punjab ...

Self Learning Material

Computer Organisation andAssembly Language

(MCA-103)

Course: Master in Computer Applications

Semester-I

Distance Education Programme

I.K. Gujral Punjab Technical University

Jalandhar

SyllabusI.K. Gujral Punjab Technical University

MCA-103 Computer Organization and Assembly Language

Objectives: The objective of the course is to provide students with a solid foundation in

computer design. Examine the operation of the major building blocks of a computer system.

To introduce students to the design and organization of modern digital computers & basic

assembly language.

Section-A

Computer Organization: Basic Computer Organization, Bus & Memory Transfer, Stored

Program Organization, Computer Registers, Computer Instructions, Timing and Control,

Hardwired based design of Control Unit, Instruction Cycle, Formats of Various types of

Instructions- Memory Reference Instructions, Register Reference Instructions & I/O

Instructions , General Register Organization-Control word, Design of Adder & Logic Unit,

Stack Organization-Register Stack, Memory Stack, Reverse Polish Notation, Addressing

Modes, RISC vs CISC Architectures, Interrupts & types.

Section-B

Pipeline & Vector Processing: Parallel Processing, Pipelining-Arithmetic & Instruction

Pipeline, Vector Processing-Vector operations, Memory Interleaving, Array Processors.

Input – Output Organization: Input-Output Interface- I/O vs Memory Bus, Isolated vs

Memory mapped I/O, Synchronous Data Transfer , Asynchronous Data Transfer-Strobe Control,

Handshaking, Asynchronous Communication Interface, Modes of Transfer-Programmed I/O,

Interrupt Initiated I/O, Interrupt Cycle, Priority Interrupt Controller, DMA Controller & DMA

Transfer.

Section-C

Memory Organization: Main Memory-Memory Address Map, Memory connection to

CPU, Associative Memory-Hardware organization, Match Logic, Cache Memory-Levels of

Cache, Associative Mapping, Direct Mapping, Set-Associative Mapping, writing into Cache,

Cache coherence, Virtual Memory-Address space & Memory space, Address mapping using

pages, Associative memory page table, Page replacement . Memory Management Hardware –

Segmented page mapping, Multiport memory, Memory protection.

Section-D

Multiprocessors: Characteristics of Multiprocessors, Interconnection structures-Time

Shared Common Bus, Crossbar switch, Multistage Switching Network, Hypercube

interconnection, Interprocessor communication & synchronization.

Assembly Language Programming: Example of a typical 8 bit processor (8085

microprocessor)—Registers, Addressing modes, Instruction Set-Data transfer

Instructions, Arithmetic Instructions, Logical Instructions, Program Control Instructions,

Machine Control Instructions, Use of an Assembly Language for specific programmes

: Simple numeric manipulations, Sorting of a list and use of I/O instructions.

Table of Contents

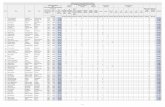

Chapter No. Title Written By Page No.

1 Basics of Computer Organization Er. Sukhpreet Kaur (SUSCET,Tangori, Mohali)

1

2 Control Unit Design Techniques andInstruction format

Er. Ranbir Singh Batth(SUSCET, Tangori, Mohali)

17

3 Computer Registers and StackOrganization

Er. Sushil Kamboj (SUSCET,Tangori, Mohali)

33

4 Addressing Modes, Instruction sets andInterrupts

Er. Sushil kamboj (SUSCET,Tangori, Mohali)

47

5 Pipeline and Vector Processing Dr. Satish Kumar (PanjabUniv. SSGRC, Hoshiarpur)

59

6 Input/Output (I/O) Interface Er.Gurpreet Singh (PanjabUniv. SSGRC, Hoshiarpur)

77

7 Data Transfer Dr. Satish Kumar (PanjabUniv. SSGRC, Hoshiarpur)

88

8 Modes of Data Transfer Dr. Satish Kumar (PanjabUniv. SSGRC, Hoshiarpur)

101

9 Memory Organization Mr. Rajinder Singh (PanjabUniv. SSGRC, Hoshiarpur)

120

10 Cache Memory and Mapping Methods Mr. Rajinder Singh (PanjabUniv. SSGRC, Hoshiarpur)

132

11 Virtual Memory Mrs. Neeru Mago (PanjabUniversity Swami SarvanandGiri Regional Centre,Hoshiarpur )

142

12 Memory Management Hardware Mrs. Neeru Mago (PanjabUniversity Swami SarvanandGiri Regional Centre,Hoshiarpur )

151

13 MULTIPROCESSORS Mr. Rahul Jassal (PanjabUniv. SSGRC, Hoshiarpur)

160

14 MULTIPROCESSORS Mr. Rahul Jassal (PanjabUniv. SSGRC, Hoshiarpur)

171

15 Assembly Language Programming Er. Balwant Raj (Panjab Univ.SSGRC, Hoshiarpur)

181

16 Use of an Assembly Language forSpecific Programmes

Er. Balwant Raj (Panjab Univ.SSGRC, Hoshiarpur)

209

Reviewed by:Dinesh Gupta

DAVIET, Jalandhar

© IK Gujral Punjab Technical University JalandharAll rights reserved with IK Gujral Punjab Technical University Jalandhar

1 | P a g e

Lesson 1. Basics of Computer Organization.

1.0 Introduction1.1 Objective1.2 Basic computer organization1.3 Bus and memory transfer1.4 Stored Program organization1.5 Computer Registers1.6 Computer Instructions1.7 Timing and control1.8 Check your1.9 Summary

1.0 INTRODUCTION

In this chapter we are concerned with basic architecture and the different operations

related to explain the proper functioning of the computer. The computer includes CPU

(which includes Arithmetic Logic Unit (ALU), Control Unit (CU), and Registers), memory

devices, input devices and output devices. Registers are special – purpose, high-speed temporary

memory units. In this chapter, we will study the different types of Registers used in Computer

Organization also how we can specify the operations with the help of different

instructions. There are various instructions with the help of which we can transfer the data

from one place to another and manipulate the data as per our requirement. we will also study

about the organization of the Computer. We have included all the instructions, how they are

being identified by the computer, what are their formats and many more details regarding the

instructions.

2 | P a g e

1.1 Objective

. Main objective of this lesson is to get familiar with following:

Basic operations performed by all types of computer systems. Basic organization of a computer system Input/output and storage units and their functions Bus and memory transfer Storage program organization Various computer registers and their functions Various computer instructions Timing and control

1.2 Basic computer organization

INPUT UNIT

An input unit performs the following functions:

1. It accepts (or reads) instructions and data from outside world

2. It converts these instructions and data in computer acceptable form

3. It passes the converted instructions and data to the computer system for further processing.

OUTPUT UNIT

An output unit of a computer system performs the following functions:

1. It accepts the result produced by the computer, which are in coded form and hence, cannot

be easily understood by us (human).

2. It converts these coded results to human acceptable (Readable) form.

3. It passes the converted results to outside world.

3 | P a g e

Basic computer organization

STORAGE UNIT

1. Data and instructions required for processing (received from input devices)

2. Intermediate results of processing.

3. Final results of processing ,before they are released to an output device

Two Types of Storage

Primary Storage

Used to hold running programs instructions

Used to hold data, intermediate results, and result of ongoing processing of Jobs

Fast in operation

Expensive

Volatile (looses data on power dissipation)

Example of primary storage is RAM (Random Access memory)

Secondary Storage

4 | P a g e

Used to hold stored program instructions

Used to hold data and information of stored jobs

Slower than primary storage

Large capacity

Lot cheaper than primary storage

Retain data even without power

Example of Secondary Storage is HARD DISK, MAGNETIC TAPES, and FLASH

DRIVES

ARITHMETIC LOGIC UNIT (ALU)

Arithmetic Logic Unit of a computer system is the place where the actual executions of

instructions take place during processing operation. It is the part of a computer processor (CPU)

that carries out arithmetic and logic operations on the operands in computer instruction words.

CONTROL UNIT

Control unit of a computer system manages and coordinates the operations of all other

components of computer system.

Central Processing Unit (CPU)

It is the brain of computer System .It is responsible for controlling the operation of all other units

of a computer system.

1.3 BUS AND MEMORY TRANSFERS

5 | P a g e

A typical digital computer has many registers, and paths must be provided to

transfer in formation form one register to another. The number of wires will be excessive if

separate lines are used between each register and all other registers in the system.

A more efficient scheme for transferring information between registers in a

multiple-register configuration is a common bus system. A bus structure consists of a

set of common lines, one for each bit of a register, through which binary

information is transferred one at a time. Control signals determine which register

is selected by the onus during each particular register transfer

One way of constructing a common bus system is with multiplexers. The multiplexers select the

source register whose binary information is then placed on the bus. The construction of a bus

system for four registers is shown in figure below. Each register has four bits, numbered 0

through 3. The bus consists of four 4 × 1 multiplexers each having four data inputs, 0 through 3,

and two selection inputs, S1 and S0. In order not to complicate the diagram with 16 lines

crossing each other, we use labels to show the connections from the outputs of the registers to the

inputs of the multiplexers. For example, output 1 of register A is connected to input 0 of MUX 1

because this input is labeled A1. The diagram shows that the bits in the same significant position

in each register are connected to the data inputs of one multiplexer to form one line of the bus.

Thus MUX 0 multiplexes the four 0 bits of the registers, MUX 1 multiplexes the four 1 bits of

the registers, and similarly for the other two bits.

The two selection lines S1 and S0 are connected to the selection inputs of all four

Multiplexers. The selection lines choose the four bits of one register and transfer them into the

four-line common bus. When S1 S0 = 00, the 0 data inputs of all four multiplexers are selected

and applied to the outputs that form the bus. This causes the bus lines to receive the content of

register A since the outputs of this register are connected to the 0data inputs of the multiplexers.

Similarly, register B is selected if S1 S0 = 01, and so on. the register that is selected by the bus

for each of the four possible binary values of the selection lines.

In general, a bus system will multiples k registers of n bits each to produce an n-line common

bus. The number of multiplexers needed to construct the bus is equal to n, the number of bits in

each register. The size of each multiplexer must be k ×1 since it multiplexes k data lines. For

example, a common bus for eight registers of 16 bits each requires 16 multiplexers, one for each

6 | P a g e

line in the bus. Each multiplexer must have eight data input lines and three selection lines to

multiplex one significant bit in the eight registers.

1.4 Stored Program organizationThe simplest way to organize a computer is to have one processor register and instruction

Code format with two parts. The first part specifies the operation to be performed and the second

specifies an address. The memory address tells the control where to find an operand in memory.

This operand is read from memory and used as the data to be operated on together with the data

stored in the processor register.

Figure 1.1 depicts this type of organization. Instructions are stored in one section of

memory and data in another. For a memory unit with 4096 words we need 12 bits to specify an

address since 212 =4096. If we store each instruction code in one16-bit memory word, we have

available four bits for the operation code (abbreviated op code) to specify one out of 16 possible

operations, and 12 bits to specify the address of an operand. The control reads a 16-bit

instruction from the program portion of memory. It uses the 12-bit address part of the instruction

to read a 16-bit operand from the data portion of memory. It then executes the operation

specified by the operation code.

7 | P a g e

Figure 1.1

Computers that have a single- processor register usually assign to it the name accumulator and

label it AC. The operation is performed with the memory operand and the content of AC.

If an operation in an instruction code does not need an operand from memory, the rest of the bits

in the instruction can be used for other purposes. For example, operations such as clear AC

complement AC, and increment AC operate on data stored in the AC register. They do not need

an operand from memory. For these types of operations, the second part of the instruction code

(bits 0 through 11) is not needed for specifying a memory address and can be used to specify

other operations for the computer.

8 | P a g e

COMPUTER REGISTERS

1.5 Computer Registers

Register is a very small amount of very fast memory that is built into the CPU (central

processing unit) in order to speed up its operations by providing quick access to commonly used

values. Registers refers to semiconductor devices whose contents can be accessed (i.e., read and

written to) at extremely high speeds but which are held there only temporarily (i.e., while in use

or only as long as the power supply remains on).

Registers are the top of the memory hierarchy and are the fastest way for the system to

manipulate data. Registers are normally measured by the number of bits they can hold, for

example, an 8-bit register means it can store 8 bits of data or a 32-bit register means it can store

32 bit of data.

Registers are used to store data temporarily during the execution of a program. Some of the

registers are accessible to the user through instructions. Data and instructions must be put into

the system. So we need registers for this.

The basic computer registers with their names, size and functions are listed below:

Various Computer Registers

9 | P a g e

Data Registers are used for temporary scratch storage of data, as well as for data Manipulations

(arithmetic, logic, etc.). In some processors, all data registers act in the same Manner, while in

other processors different operations are performed are specific registers.

Address Registers (AR) store the addresses of specific memory locations. Often many integer

and logic operations can be performed on address registers directly (to allow for computation of

addresses).Sometimes the contents of address register(s) are combined with other special purpose

registers to compute the actual physical address. This allows for the hardware implementation of

dynamic memory pages, virtual memory, and protected memory.

Accumulator Register (AC):- This Register is used for storing the results those are produced by

the system. When the CPU will generate some results after the processing then all the results will

be stored into the AC Register. This is a 16-bit general purpose register and it can be referred to

in the instructions. For example, Load AC, 20 is instructed to load the accumulator with the

contents of memory address 20.

Instruction Register (IR) is the part of a CPU's control unit that stores the instruction currently

being executed or decoded.

Program Counter (PC): The program counter (PC), commonly called the instruction pointer

(IP) in Intel x86 microprocessors, and sometimes called the instruction address register, or just

part of the instruction sequencer in some computers, is a processor register. It is a 12 bit special

function register in the 8085 microprocessor. It keeps track of the next memory address of the

instruction that is to be executed once the execution of the current instruction is completed. In

other words, it holds the address of the memory location of the next instruction when the current

instruction is executed by the microprocessor.

Temporary Register (TR) : The CPU uses this 16 – bit register to store intermediate results of

operations. It is not accessible by the external programs. It is loaded, incremented and cleared

like the other registers

Input Register (INPR):- It receives an 8 – bit character from an input device.

Output Register (OUTR):- It receives an 8-bit character from an output device.

11 | P a g e

1.6 Computer Instructions

All Basic Computer instruction codes are 16 bits wide. There are 3 instruction code formats:

Memory-reference instructions take a single memory address as an operand, and have theformat:

15 14 12 11 0+-------------------+| I | OP | Address |+-------------------+

If I = 0, the instruction uses direct addressing. If I = 1, addressing in indirect.

Register-reference instructions operate solely on the AC register, and have the followingformat:

15 14 12 11 0+------------------+| 0 | 111 | OP |+------------------+

Input/output instructions have the following format:

15 14 12 11 0+------------------+| 1 | 111 | OP |+------------------+

A computer should have a set of instructions so that the user can construct machine language

programs to evaluate any function that is known to be computable.

Instruction Types

Functional Instructions

Arithmetic, logic, and shift instructions

- ADD, CMA, INC, CIR, CIL, AND, CLA

Transfer Instructions

Data transfers between the main memory and the processor registers

- LDA, STA

Control Instructions

Program sequencing and control - BUN, BSA, ISZ

Various computer instructions

13 | P a g e

All sequential circuits in the Basic Computer CPU are driven by a master clock, with the

exception of the INPR register. At each clock pulse, the control unit sends control signals to

control inputs of the bus, the registers, and the ALU.

Control unit design and implementation can be done by two general methods:

A hardwired control unit is designed from scratch using traditional digital logic design

techniques to produce a minimal, optimized circuit. In other words, the control unit is like

an ASIC (application-specific integrated circuit).

A microprogrammed control unit is built from some sort of ROM. The desired control

signals are simply stored in the ROM, and retrieved in sequence to drive the

microoperations needed by a particular instruction

Timing and signal

15 | P a g e

Question 1: Explain various basic units of computer system.

Question 2: What is the difference between primary storage and secondarystorage?

Question 3: Explain the various computer registers.

Question 4: What are various types of computer instructions?

Question 5: Explain Timing and control signal.

1.9 SUMMARY

16 | P a g e

The computer includes CPU (which includes Arithmetic Logic Unit (ALU), Control Unit (CU),

and Registers), memory devices, input devices and output devices. Arithmetic Logic Unit of a

computer system is the place where the actual executions of instructions take place during

processing operation. Control unit of a computer system manages and coordinates the

operations of all other components of computer system. Input Unit gets input from outside world

and send to processor for processing. Computers employ many different types of memories

(semi-conductor, magnetic disks and tapes, DVDs etc.) to hold data and programs. Each type has

its own characteristics and uses.

Registers are special purpose, high-speed temporary memory units. A register is used to store

the data or information. It is made up of flip – flops and each flip-flop can store one bit of

information. They are used for temporary storage areas for holding various types of information

such as data, instructions, addresses and the intermediate results of calculations. Different types

of registers are required in CPU for the efficient processing. The different registers are Memory

Address Register, Memory Buffer Register, Program Counter, Instruction Register, Data

Register, Accumulator, Input Register, Output Register etc. All the different registers can

communicate with each other and also with the processor using the bus system.

GLOSSARY1) ALU : Arithmetic logic unit2) CU : Control unit3)CPU : Central processing unit4)RAM : Random Access memory5)REGISTER: A register is a group of flip-flops with each flip-flop capable of

storing one bit of information.

6) MAR: Memory Address Register7) PC: Program Counter8) AC: Accumulator Register9) MDR: Memory Data Register

10) IR : Index Register11) MBR: Memory Buffer Register12) DR : Data Register13) IR : Instruction Register14) TR : Temporary Register15) SP: Stack Pointer

17 | P a g e

Lesson 2. Control Unit Design Techniques and Instruction formatStructure

2.0 Introduction2.1 Objectives2.2 What is a Control Unit?2.3 Control Unit Design Techniques

2.3.1 Hardwired Control Unit2.3.2 Microprogrammed Control Unit2.3.3 Hardwired vs Microprogrammed Computers

2.4 Instruction Cycle2.5 What is an Instruction Format? Why is it needed?2.6 Types of Instruction Format

2.6.1 Register Reference Instruction Format2.6.2 Memory Reference Instruction Format2.6.3 Input-Output Instruction Format

2.7 Summary2.8 Model Answers

2.0 INTRODUCTION

In the previous chapter, we have studied about the organization of the Computer. The computer includesCPU ( which includes Arithmetic Logic Unit (ALU), Control Unit (CU), Registers), memory devices, inputdevices and output devices. The control unit helps the Central Processing Unit that is CPU to performcertain control steps for every instruction. For every control step, some microoperations are executed thatare issued by the control unit in the form of a set of signals. This all working of control unit is directed byprocessor block. The set of signals issued at some control step depends on:-

- the control step to be executed- the value of flags of the processor for e.g. condition and status flags,- the instruction to be executed actually,- various signals received on the system bus (e.g. interrupt signals).

In this block, we will study about the Control Unit and its different design techniques. Also in this chapterwe will study the Instruction Cycle and the different formats of instructions.

2.1 OBJECTIVES

This block being the part of first unit will introduce the basics of Computer Organization. At the end of thisblock you will be able to:-

explain what is Control Unit. identify the different techniques of designing Control Unit. explain the Instruction Cycle identify the different forms of instructions.

2.2 WHAT IS CONTROL UNIT?

The unit which checks the exactness of the sequence of the operations is known as the Control Unit.

The various operations executed by the control unit are:-- the instructions are fetched from the memory as in Fetch phase of instruction cycle- then the instructions are decoded by the control unit as in Decode phase of Instruction cycle,

18 | P a g e

- after that the execution of the instructions of the program is performed as in Execute phase ofInstruction cycle. It also controls the input/output devices and directs the overall functioning of the otherunits of the computer.The control unit consists of different circuits that help in executing stored program instructions. Thesecircuits consist of electrical signals that give the drive the computer system. The control unit only guidesthe other parts of the computer system to execute instructions by communicating with CPU and memory.That is why this unit is named as Control unit as it guides or controls the input/output devices and transferof data to and from the primary storage. An analogy can be considered between the CU and the trafficpolice. The CU decides which action will occur just as the traffic police takes decisions on which lanestraffic will move to stop. It also regulates various decisions about the data which is required, the positionof storage for data and the results of the operation. It also helps in the execution of the instruction bysending the control signals to the devices involved. It administers the movement of large amount ofinstructions and data used by the computer. In order to maintain the proper sequence of events requiredfor any processing task, the CU uses clock inputs.2.3CONTROL UNIT DESIGN TECHNIQUES

The different sequence of microoperations are originated by the control unit in any digital computer. Thecontrol unit helps the Central Processing Unit that is CPU to perform certain control steps for everyinstruction. . For every control step, some microoperations are executed that are issued by the controlunit in the form of a set of signals. This all working of control unit is directed by processor block. The setof signals issued at some control step depends on:-

- the control step to be executed- the value of flags of the processor for e.g. condition and status flags,- the instruction to be executed actually,- various signals received on the system bus (e.g. interrupt signals).

There are finite numbers of different types of microoperations available for a given system. The differentnumber of microoperations available for any digital system gives the complexity of that particular system.

Control unit design and implementation can be done by two general methods:

A hardwired control unit is designed from scratch using traditional digital logic designtechniques to produce a minimal, optimized circuit. In other words, any control unit is known ashardwired , when the hardware having different circuits, logic design is used in the generation ofthe control signals.

A microprogrammed control unit is built from some sort of ROM. The desired control signalsare simply stored in the ROM, and retrieved in sequence to drive the microoperations needed bya particular instruction. The systematic method for controlling the microoperation sequences in adigital computer is the fundamental of microprogramming.

2.3.1 HARDWIRED CONTROL UNIT

In hardwired organization, different hardware is used for the implementation. The hardware includesgates, decoders, logic designs with gates etc and other circuits. As it is implemented with the help ofwires and hardware, so if there is a requirement of change in design, then the wiring among the differentcomponents will be changed.

For any simple computer, the organization of gates, decoders etc in hardwired control unit are shown by ablock diagram given in Figure 2.1. The input to the controller consists of the 4-bit opcode of the instructioncurrently contained in the instruction, register and the negative flag from the accumulator. The controller'soutput is a set of 16 control signals that go out to the various registers and to the memory of the

19 | P a g e

computer, in addition to a HLT signal that is activated whenever the leading bit of the op-code is one. Thecontroller is composed of the following functional units: A ring counter, an instruction decoder, and acontrol matrix.

The ring counter provides a sequence of six consecutive active signals that cycle continuously.Synchronized by the system clock, the ring counter first activates its T0 line, then its T1 line, and so forth.After T5 is active, the sequence begins again with T0. Figure 2.2 shows how the ring counter might beorganized internally.

Figure 2.1: A block diagram of the computer’s Hardwired Control Unit

Figure 2.2: The Internal Organization of the Ring Counter

The 4-bit input from the opcode is given to the instruction decoder in the field of the instruction registerand the control unit activates one and only one of its 8 output lines. Each line corresponds to one of theinstructions in the computer's instruction set. Figure 2.3 shows the internal organization of this decoder.

20 | P a g e

The control matrix being the very important part of the hard-wired control unit receives input from the ringcounter and the instruction decoder and provides the proper sequence of control signals. Table 2.1 showswhich control signals must be active at each ring counter pulse for each of the instructions in thecomputer's instruction set (and for the instruction fetch operation). The table was prepared by simplywriting down the instructions in the left-hand column. (In the circuit these will be the output lines from thedecoder). The various control signals are placed horizontally along the top of the table. Entries into thetable consist of the moments (ring counter pulses T0, T1, T2, T3, T4, or T5) at which each control signalmust be active in order to have the instruction executed.

Figure 2.3: The Internal Organization of the Hardwired Instruction Decoder

Table 2.1: A Matrix of times at which each Control Signal must be active in order to execute thehardwired basic Computer’s Instructions

ControlSignalInstruction IP LP EP LM R W LD ED LI EI LA EA A S EU LB

“Fetch” T2 T0 T0 T1 T2 T2

LDA T3 T4 T5 T3 T5

STA T3 T5 T4 T3 T4

MBA T3 T3

ADD T4 T3 T4

SUB T4 T3 T4

JMP T3 T3

JN T3 * NF T3 *

21 | P a g e

NF

Once Table 2.1 has been prepared, the logic required for each control signal is easily obtained. For eachan AND operation is performed between any active ring counter (Ti) signals that were entered into thesignal's column and the corresponding instruction contained in the far left-hand column. If a column hasmore than one entry, the output of the ANDs are ORed together to produce the final control signal. Forexample, the LM column has the following entries: T0 (Fetch), T3 associated with the LDA instruction,and T3 associated with the STA instruction. Therefore, the logic for this signal is:

LM = T0 + T3*LDA + T3*STA

This means that control signal LM will be activated whenever any of the following conditions is satisfied:(1) ring pulse T0 (first step of an instruction fetch) is active, or (2) an LDA instruction is in the IR and thering counter is issuing pulse 3, or (3) and STA instruction is in the IR and the ring counter is issuing pulse3.

The entries in the JN (Jump Negative) row of this table require some further explanation. The LP and EIsignals are active during T3 for this instruction if and only if the accumulator's negative flag has been set.Therefore the entries that appear above these signals for the JN instruction are T3*NF, meaning that thestate of the negative flag must be ANDed in for the LP and EI control signals.

It should be noticed that the HLT line from the instruction decoder does not enter the control matrix,Instead this signal goes directly to circuitry (not shown) that will stop the clock and thus terminateexecution.

2.3.2 MICROPROGRAMMED CONTROL UNIT

In the previous control unit design technique, the organization includes various flip-flops, gates, and otherdifferent hardware and the control matrix is responsible for sending out the required sequence of signals.The hardware controller helps in the execution of the instruction by issuing a set of control signals at eachand every change of the timing signal in the system clock. Every control signal that is issued is associatedwith any one basic microoperation.

One more technique for the generation of the control signals is known as micro-programmed control. Inthis design technique the sets of control signals cause specific micro-operations to occur as being"microinstructions" that could be stored in a memory. Each bit of a microinstruction might correspond toone control signal. If the bit is set it means that the control signal will be active; if cleared the signal will beinactive. Sequences of microinstructions could be stored in an internal "control" memory. Execution of amachine language instruction could then be caused by fetching the proper sequence of microinstructionsfrom the control memory and sending them out to the data path section of the computer.

A sequence of microinstructions that implements an instruction on the external computer is known as amicro-routine. The instruction set of the computer is thus determined by the set of micro-routines, the"microprogram," stored in the controller's memory. The control unit of a microprogram-controlledcomputer is essentially a computer within a computer.

The micro-programmed control unit that can be used for the implementation of the program instruction setof any computer is shown as a block diagram in Figure 2.4. The most important part of the controller isthe control 32 X 24 ROM memory in which upto 32 24-bit long microinstructions can be stored. Each is

22 | P a g e

composed of two main fields: a 16-bit wide control signal field and an 8-bit wide next-address field. Eachbit in the control signal field corresponds to one of the control signals. The next-address field contains bitsthat determine the address of the next microinstruction to be fetched from the control ROM. Wordsselected from the control ROM feed the microinstruction register. This 24-bit wide register is analogous tothe outer machine's instruction register. Specifically, the leading 16 bits (the control-signal field) of themicroinstruction register are connected to the control-signal lines that go to the various components of theexternal machine's data path section.

The control ROM is given by the addressed from a register named as micro-counter. The micro-counter,in turn, receives its input from a multiplexer which selects from : (1) the output of an address ROM, (2) acurrent-address incrementer, or (3) the address stored in the next-address field of the currentmicroinstruction.

The 16 x 5 address ROM is inputted by the instruction register. The instruction register has an op-codefield that is mapped to the starting address of the corresponding microroutine in the control ROM.

The 5-bit CRJA (Control ROM Jump Address) sub-field that holds a microinstruction address. Thus, theaddress of the next microinstruction may be obtained from the current microinstruction. This permitsbranching to other sections within the microprogram.

The combination of the MAP bit, the CD (condition) bit, and the negative flag from the accumulator of theexternal machine provide input to the logic that feeds the select lines of the multiplexer and therebydetermine how the address of the next microinstruction will be obtained

23 | P a g e

Figure 2.4: Microprogrammed Control Unit

The value of the MAP bit helps in the selection of the address ROM and the multiplexer logic by theproduction of the value of CD (unconditional branch value). If the value of MAP is 1, the logic attached tothe multiplexer's select lines produces a 01 which selects the address ROM. If the MAP bit is zero and theCD bit is zero, (unconditional branch), the multiplexer logic produces a 10, which selects the CRJA field ofthe current instruction. Therefore, the next instruction will come from the address contained in the currentinstruction's next-address field.

The HLT bit is used to terminate execution. If it is set, the clock that synchronizes activities within theentire machine is stopped.

2.3.3 HARDWIRED vs. MICRO-PROGRAMMED COMPUTERS

24 | P a g e

From the two different control unit design techniques – that is Hardwired and Microprogrammed Designtechnique, that most computers today are using micro-programming. The reason is basically one offlexibility. Once the control unit of a hard-wired computer is designed and built, it is virtually impossible toalter its architecture and instruction set. In the case of a micro-programmed computer, however, we canchange the computer's instruction set simply by altering the microprogram stored in its control memory. Infact, taking our basic computer as an example, we notice that its four-bit op-code permits up to 16instructions. Therefore, we could add seven more instructions to the instruction set by simply expandingits microprogram. To do this with the hard-wired version of our computer would require a completeredesign of the controller circuit hardware.

The simplified design of the microprogrammed computers is also one of the advantages of worldwide useof microprogramming in the computers today. The process of specifying the architecture and instructionset is now one of software (micro-programming) as opposed to hardware design. Nevertheless, for certainapplications hard-wired computers are still used. If speed is a consideration, hard-wiring may be requiredsince it is faster to have the hardware issue the required control signals than to have a "program" do it.

CHECK YOUR PROGRESS 1Question 1: What is a Contol Unit?____________________________________________________________________________________

____________________________________________________________________________________

________________________________________________________________________________

Question 2: What are two different Control Unit Design Techniques?____________________________________________________________________________________

____________________________________________________________________________________

Question 3: Differentiate between Hardwired and Microprogrammed Control Unit.

____________________________________________________________________________________

____________________________________________________________________________________

2.4INSTRUCTION CYCLE

For any program that resides in the memory unit of the computer, there is a requirement of its execution.For its execution, it need to be decoded and acted upon in turn until the program is completed. This isachieved by the use of what is termed the 'instruction execution cycle', which is the cycle by which eachinstruction in turn is processed. However, to ensure that the execution proceeds smoothly, it is alsonecessary to synchronize the activites of the processor.

The clock of the CPU is used to synchronize the different events of the Instruction-Execution cycle. Theclock produces regular pulses on the system bus at a specific frequency, so that each pulse is an equaltime following the last. This clock pulse frequency is linked to the clock speed of the processor - thehigher the clock speed, the shorter the time between pulses. Actions only occur when a pulse is detected,so that commands can be kept in time with each other across the whole computer unit.

Every program consists of some set of instructions and in turn for program execution, each and everyinstruction needs to be executed or in other words, every instruction goes through the phases of

25 | P a g e

Instruction cycle. The CPU has the address of the program that needs execution and also the address ofthe first instruction of that program. Each instruction cycle in turn is subdivided into a sequence ofsubcycles or phases. In the basic computer each instruction cycle consists of the following phases:-

1. Fetch an instruction from memory.2. Decode the instruction.3. Read the effective address from memory if the instruction has an indirect address.4. Execute the instruction.

These four steps are performed in the cycle as shown in Figure 2.5 until either all the instructions areexecuted and the END, STOP or HALT instruction incurs or there is some error.

Figure 2.5 The Basic Instruction Cycle

The generic instruction cycle for an unspecified CPU consists of the following stages:

1. Fetch instruction: Read instruction code from address in PC (Program Counter) and place in IR(Instruction Register). (IR ← Memory[PC])

2. Decode instruction: Hardware determines what the opcode/function is, and determines whichregisters or memory addresses contain the operands.

3. Fetch operands from memory if necessary: If any operands are memory addresses, initiatememory read cycles to read them into CPU registers. If an operand is in memory, not a register,then the memory address of the operand is known as the effective address, or EA for short. Thefetching of an operand can therefore be denoted as Register ← Memory[EA]. On today'scomputers, CPUs are much faster than memory, so operand fetching usually takes multiple CPUclock cycles to complete.

4. Execute: Perform the function of the instruction. If arithmetic or logic instruction, utilize the ALUcircuits to carry out the operation on data in registers. This is the only stage of the instructioncycle that is useful from the perspective of the end user. Everything else is overhead required tomake the execute stage happen. One of the major goals of CPU design is to eliminate overhead,and spend a higher percentage of the time in the execute stage.

5. Store result in memory if necessary: If destination is a memory address, initiate a memorywrite cycle to transfer the result from the CPU to memory. Depending on the situation, the CPUmay or may not have to wait until this operation completes. If the next instruction does not needto access the memory chip where the result is stored, it can proceed with the next instructionwhile the memory unit is carrying out the write operation.

An example of a full instruction cycle is provided by the following instruction, which uses memoryaddresses for all three operands.

add s, k, addition

i. Fetch the instruction code from Memory[PC].

26 | P a g e

ii. Decode the instruction. This reveals that it's a addition instruction, and that the operands arememory locations s, k, and addition.

iii. Fetch s and k from memory.iv. Multiply s and k, storing the result in a CPU register.v. Save the result from the CPU to memory location addition.

The specific cycle for a load instruction is:

1. Fetch instruction from memory to IR2. Decode3. Fetch operand from memory to a register

The specific cycle for a store instruction is:

1. Fetch instruction from memory to IR2. Decode3. Store operand from register to memory

CHECK YOUR PROGRESS 21. Which cycle refers to the time period during which one instruction is fetched and executed by the CPU:

a. Fetch cycle b. Instruction cyclec. Decode cycle d. Execute cycle

2. Which amongst the following are the stages of the Instruction cycle:a. Fetch b. Decodec. Execute d. Storee. All of these

3 The count of the stages in the Instruction-Execution cycle is:a. 5 b. 6c. 4 d. 7

27 | P a g e

2.5WHAT IS AN INSTRUCTION FORMAT? WHY IT IS NEEDED?

Instructions are stored in the memory unit of the computer. For their execution, the address is given to theCPU. But for the interpretation of the instructions by the CPU, there is a requirement of the standardformat of the instructions and that format is known as an instruction format or instruction code. It is agroup of bits that is depicts about the data and the particular operation to be performed on the data storedon the computer. Processor fetches an instruction from memory and decodes the bits to execute theinstruction. Different computers may have their own instruction set.

An instruction consists of an operation code given by opcode and the addresses of one or moreoperands. The operands may be addressed implicitly or explicitly. To define the layout of the bitsallocated to these elements of instructions, an instruction format is used. It also indicates the addressingmode used for each operand in that instruction.

Figure 2.6: Instruction FormatIn an instruction format, first 12 bits gives the address of the operands, the next 3 bits give the operationcode which is helpful in defining the operation to be performed and the left most bit specifies theaddressing mode that is I = 0 for direct addresses and I = 1 for indirect addresses.The issues in designing an instruction format are as follows:-

Instruction Length: The core designing issue involved in the instruction format is the designingof instruction length. The instruction length determines the flexibility of the machine. The decisionon the length of the instruction depends on the memory size, memory organization, and memorytransfer length. .

Allocation of bits: The allocation of bits for the different parts of the instruction depends on thedifferent computers. If number of bits given to each field depends of the number of opcodes andthe power of the addressing capability. More opcodes means more bits in the opcode field, alsofor an instruction format of a given length, which reduces the number of bits available foraddressing.

2.6TYPES OF INSTRUCTION FORMATS

In the Instruction-Execution cycle, during the first step the instruction is fetched and after that it isdecoded. In the third step, there is a requirement of the determination of the format of the instructions.The three possible instruction types available in the basic computer are:-

1. Register Reference Instruction Format2. Memory Reference Instruction Format3. Input-Output Instruction Format

2.6.1 REGISTER REFERENCE INSTRUCTION FORMAT

If the address of the operands that are given in the Instruction format are the addresses of the registersthen the type of instructions is known as register- reference instructions. These instructions access data

28 | P a g e

and manipulate the contents of registers. They do not access memory. These instructions are executed inone clock cycle.

A processor has many registers to hold instructions, addresses, data, etc.The processor has a register, the Program Counter(PC) that holds the memory address of the nextinstruction. Since the memory in the basic computer only has 4096 locations, the PC only needs 12 bits.The Address Register(AR) is a 12-bit register that is used when there is a need to check the memorylocations that are addressed by the processor. It is used in a direct or indirect addressing.

The Data Register(DR) is also a 12-bit register that is used for the storage of operand when an operandis found, using either direct or indirect addressing. The processor then uses this value as data for itsoperation. The Basic Computer has a single general purpose register–the Accumulator(AC).Often a processor will need a scratch register to store intermediate results or other temporary data; in theBasic Computer this is the Temporary Register(TR).The Basic Computer uses a very simple model of input/output (I/O) operations. Input devices areConsidered to send 8 bits of character data to the processor. The processor can send 8 bits of characterdata to output devices. The Input Register(INPR) holds an 8 bit character gotten from an input device.The Output Register(OUTR) holds an 8 bit character to be send to an output device

An operand from the memory is not needed therefore the other 12 bits are used to specify the operationto be executed. These instructions use bits 0 through 11 of the instruction code to specify one of 12instructions. These bits are available in IR(0-11). The format of Register – reference instruction is givenby Figure 2.7.

Figure 2.7: Instruction Format of Register – Reference InstructionsThe different control functions that can be performed by the register –reference instructions are listed inTable 2.2.

Table 2.2: Execution of Register – Reference InstructionsSymbol Description

CLA Accumulator (AC) is clearedCLE Clear ECMA Accumulator (AC) is complementedCME Complement ECIR Circulate right AC and ECIL Circulate left AC and EINC Increment ACSPA Skip next instruction if AC is positiveSNA Skip next instruction if AC is negativeSZA Skip next instruction if AC is zeroSZE Skip next instruction if E is zeroHLT Halt computer

2.6.2 MEMORY – REFERENCE INSTRUCTION FORMAT

In complement to the Register-reference instructions, the memory reference instructions are those thatreference memory. Some read data from memory, others write data to memory, and one instruction doesboth. For these instructions, the most significant bit is the indirect bit. If this bit is 1, the instruction must

29 | P a g e

use indirect memory addressing. That is, the address given is not the address of the operand. It is theaddress of a memory location which contains the address of the operand.

The bits from 12 to 14 specified the OPCODE field, or operation field. These three bits may take on anyvalue except for 111; that is reserved for other instructions. The OPCODE field tells the CPU whichinstruction is to be performed. Finally, the 12 low order bits from 0-11 contain the memory address to beused by this instruction (or in the case of indirection the address of a memory location which contains theaddress to be used by this instruction). The format of the memory – reference is shown in Figure 2.8

Figure 2.8: Memory – Reference Instruction FormatSome of the Memory – Reference Instructions are shown in Table 2.3:-

Table 2.3: Memory – Reference InstructionsInstruction Description

AND AND memory word to AC (Performsbitwise Logical AND)

ADD Accumulator is added by memory wordLDA Accumulator is loaded by the memory

wordSTA the content of accumulator is stored in

memoryBUN Branch unconditionallyBSA Branch and Save Return AddressISZ Increment and Skip if zero

2.6.3 INPUT-OUTPUT INSTRUCTION FORMAT

Another category of instructions formats are known as Input and Output instructions. These instructionsare needed for transferring information to and from the accumulator( AC) register, for checking the flagbits, and for controlling the interrupt facility. An input – output instruction does not need a reference tomemory and is recognized by operational code 111 with a 1 in the left most bit of the instruction and theremaining 12 bits are used to specify the type of input output operation or test performed. The Figure 2.9shows the format of Input- Output Instruction:

Figure 2.9: Input-Output Instruction FormatThe different Input-Output Instructions are given below:-

Table 2.4: Input - Output InstructionsInstruction Description

INP Any character is inputtedOUT Any character is outputtedSKI Skip on input flagSKO Skip on output flagION Interrupt enable onIOF Interrupt enable off

30 | P a g e

The INP instruction transfers the input information from the input register into the accumulator into itseight low-order bits and also clears the input flag to 0. The OUT instruction do the vice versa case andtransfers eight low-order bits of accumulator into the output register OUTR and clears the output flag to 0.The next two instructions in the table check the status of the flags and cause a skip of the next instructionif the flag is 1. The instruction that is skipped will normally be a branch instruction to return and check theflag again. The branch instruction is not skipped if the flag is 0. If the flag is 1, the branch instruction isskipped and an input or output instruction is executed. ION and IOF are used to set and clear respectivelythe interrupt enable flip-flop IEN.CHECK YOUR PROGRESS 3

1) Micro-programmed control unit is ________________ than hardwired but __________________?

a. cheaper, more error proneb. faster, more error pronec. less error prone, slowerd. faster, harder to change

2) Hardwired control unit is ________________ than micro-programmed but __________________?

a. cheaper, more error proneb. faster, more error pronec. less error prone, slowerd. faster, harder to change

3) The goals of both hardwired control and microprogrammed control units is to?

a. access memoryb. generate control signals

c. access the ALUd. cost a lot of money

4) Any instruction format containsa. Opcodeb. Operandc. Only ad. Both a & b

5) Each operation has its _____ opcode:a. Uniqueb. Twoc. Threed. Four

31 | P a g e

6) _______specify where to get the source and destination operands for the operation specified by the_______:

a. Operand fields and opcodeb. Opcode and operandc. Source and destinationd. Cpu and memory

2.7SUMMARY

The Control Unit (CU) being the part of the CPU is used to depict the exactness of the sequence ofoperations. The Control Unit fetches the program instructions from the memory unit, and ensures correctexecution of the program. It also controls the input/output devices and directs the overall functioning ofthe other units of the computer. The Control Unit can be designed by two methods: a) Hardwired DesignTechnique b) Microprogrammed Design Technique. The hardwired design technique consists of digitallogic that is circuits, gates for designing and the microprogrammed design technique is built from ROM.Nowadays, microprogrammed based design technique is used in computers.

Instruction cycle is the process of executing any instruction in the CPU. Every instruction goes throughthis cycle which consists of Fetch, Decode, Execute and Store.

We have different types of Instruction formats according to the location of the operands mentioned in theinstructions. And these instruction format categories include : a) Register Reference Instructions b)Memory Reference Instructions c) Input Output Instructions. Register Reference Instruction format is usedif the operands are in register. Memory Reference Instructions are used if the operands are in thememory. And Input Output instructions are used to transfer the information to and from Accumulator (AC)register.

2. 8 MODEL ANSWERSYour Progress 1

1) The Control Unit (CU) checks the correctness of the sequence of operations. It fetches theprogram instructions from the memory unit, interprets them, and ensures correct execution of theprogram. It also controls the input/output devices and directs the overall functioning of the otherunits of the computer.

2) a) Hardwired Control Unit Design Technique

b) Microprogrammed Control Unit Technique

3) A hardwired control unit is designed from scratch using traditional digital logic designtechniques to produce a minimal, optimized circuit. In other words, when the control signals aregenerated by hardware using conventional logic design techniques, the control unit is said to behardwired.

A microprogrammed control unit is built from some sort of ROM. The desired control signalsare simply stored in the ROM, and retrieved in sequence to drive the microoperations needed bya particular instruction. The principle of microprogramming is an elegant and systematic methodfor controlling the microoperation sequences in a digital computer.

32 | P a g e

Your Progress 21. B 2. E 3. C

Your Progress 31. C 2. D 3. B 4. D 5. A 6. A

Glossary1) Control Unit (CU): The Control Unit (CU) checks the correctness of the sequence of operations. Itfetches the program instructions from the memory unit, interprets them, and ensures correct execution ofthe program.2) Hardwired Control Unit Design Technique: When the control signals are generated by hardwareusing conventional logic design techniques, the control unit is said to be hardwired design technique.3) Instruction Cycle: A cycle that every instruction has to undergo while it is being processed.4) Instruction Format: An instruction format or instruction code is a group of bits used to perform aparticular operation on the data stored on the computer.5) OPCODE: Operation Code, gives the operation that need to be performed on the instruction. It givesthe code assigned to particular operation. It is given in the instruction format.6) PC : Program Counter7) AR: Address Register8) DR: Data Register9) AC: Accumulator10) TR: Temporary Register11) INPR: Input Register12) OUTR: Output Register

33 | P a g e

Lesson 3. Computer Registers and Stack OrganizationStructure

3.0 Introduction3.1 Objectives3.2 What is a Register?3.3 Different Types of Registers3.4 Communication between the Registers3.5 General Register Organization3.6 What is a Control Word?3.7 Design of Adder and Logic Unit3.8 Stack Organization

3.8.1 Register Stack3.8.2 Memory Stack3.8.3 Reverse Polish Notation

3.9 Summary3.10 Model Answers

3.0 INTRODUCTION

In the previous chapter, we have studied about the organization of the Computer. The computer includesCPU (which includes Arithmetic Logic Unit (ALU), Control Unit (CU), and Registers), memory devices,input devices and output devices. Registers are high-speed, temporary storage areas for storing differentdata, instructions, addresses etc. In this chapter, we will study the different types of Registers used inComputer Organization and their organization. We will also study the design of Adder and Logic Unit. TheStack Organization is also discussed in this chapter in which a brief introduction of Register Stack,Memory Stack and Reverse Polish Notation is given.

3.1 OBJECTIVES

This block being the part of first unit will introduce the basics of Computer Organization. At the end of thisblock you will be able to:-

explain what is a Register? identify the different types of Registers explain what is a Control Word? trace the design of adder and logic unit define what is stack organization. Identify the different types of stack organizations

3.2 WHAT IS A REGISTER?

Any register is a discrete holding device consists of a group of flip-flops with each flip-flop capable ofstoring one bit of information. If any register is of n-bit then it will consists of group of n flip-flops and alsothe register can store n bits of information. These are special – purpose, high-speed temporary memoryunits.

The register can hold various types of information such as data, instructions, addresses, and theintermediate results of calculations. In other words, the registers are used to hold all the data upon whichthe CPU is working at that time. That is why, registers are also known as CPU’s working memory. Also,the registers have an additional advantage of speed. The registers perform under the supervision of thecontrol unit to accept, hold, and transfer instructions or data and perform arithmetic or logical

34 | P a g e

comparisons at high speed. The control unit uses a data storage register in the similar way a store owneruses a cash register as a temporary, convenient place to store the transactions. As soon as a particularinstruction or piece of data is processed, the next instruction immediately replaces it, and the informationthat results from the processing is returned to the main memory.

3.3DIFFERENT TYPES OF REGISTERS

There are different types of Registers:-1) MAR stand for Memory Address Register : MAR is a 12-bit register that carries the memory

addresses of data and instructions. This register is used when there is a need to access data andinstructions from memory during the execution phase of an instruction. It is used in both thecases that are either the user wants to read data from the memory or the user wants to write datainto the memory from the CPU.

2) Program Counter (PC):- PC is also a 12-bit register which always contains the address of thenext instruction that is to be executed by the CPU. This register is also known as the instructionpointer (IP) and sometimes called the instruction address register, or just part of the instructionsequencer in some computers, is a processor register. Once the program containing number ofinstructions started with the execution, then the starting address is given to the CPU. After that,the next address of the instruction is stored in the Program Counter, so that execution of theprogram can be done smoothly.

3) Accumulator Register (AC):- Accumulator is a 16-bit register and it can be referred to in theinstructions. Generally, it is used to store the intermediate results and also the final results thatare produced by the CPU. For example, Load AC, 20 is instructed to load the accumulator withthe contents of memory address 20.

4) Memory Data Register (MDR):- MDR is the 16-bit register of a computer's control unit thatcontains the data to be stored in the computer storage (e.g. RAM), or the data after a fetch fromthe computer storage. It acts like a buffer and holds anything that is copied from the memoryready for the processor to use it. MDR hold the information before it goes to the decoder, whichcontains the data to be written into or readout of the addressed location. For example, to retrievethe contents of cell 123, we would load the value 123 (in binary, of course) into the MAR andperform a fetch operation. When the operation is done, a copy of the contents of cell 123 wouldbe in the MDR. The MDR can also work in both ways that is data can be fetched from memoryand written into MDR and also data can be placed into MDR from any other register and then canbe put into memory.

5) Index Register (IR):- Index Register (IR) carries the value of some number that is used toevaluate the address portion of any instruction of the program. It is used to calculate the effectiveaddress. Sometimes it is also known as base register. When any program is given for executionto the CPU, then it is checked for the value of base address stored in the base register. If there isany value in the base register then that value is added to the address given to the CPU to find theeffective address.

6) Memory Buffer Register(MBR):- MBR stand for Memory Buffer Register. This register holds thecontents of data or instruction read from, or written in memory. It means that this register is usedto store data/instruction coming from the memory or going to the memory.

35 | P a g e

7) Data Register (DR):- A register used in microcomputers to temporarily store data beingtransmitted to or from a peripheral device.

8) Instruction Register (IR):- IR is a 16-bit register that is used to generate timing signals and othercontrol signals. This register can only be loaded with the instruction but we cannot perform anyoperation to it.

9) Temporary Register (TR):- This 16-bit register is used to store the temporary results that comeswhen the CPU is executing some instructions.

10) Input Register (INPR):- INPR, the input register is used to receive an 8 – bit character from aninput device.

11) Output Register (OUTR):- OUTR, the output register receives an 8-bit character from an outputdevice.

CHECK YOUR PROGRESS 1

Question 1: What is a Register?____________________________________________________________________________________

____________________________________________________________________________________

________________________________________________________________________________

Question 2: Differentiate between INPR and OUTR.____________________________________________________________________________________

____________________________________________________________________________________

________________________________________________________________________________

Question 3: Define the work of MBR and MAR Registers____________________________________________________________________________________

____________________________________________________________________________________

________________________________________________________________________________

3.4COMMUNICATION BETWEEN THE REGISTERS

36 | P a g e

As discussed in previous section, every computer have eight registers that are given by AC, IR, MDR,MAR, PC, TR, INPR, OUTR), a memory unit, and a control unit. So, there is a need of communicationbetween them. So, it is necessary to provide the paths to transfer information from one register to anotherand between memory and registers. The number of wires will be excessive if connections are madebetween the outputs of each register and the inputs of the other registers. A more efficient scheme fortransferring information in a system with many registers is to use a common bus. A bus system isestablished which is common between the basic computer registers and other units which is shown inFigure 3.1 and this system is commonly known as Common Bus System.

The outputs of seven registers and memory are connected to the common bus. The specific output that isselected for the bus lines at any given time is determined from the binary value of the selection variablesS2, S1 and S0. The number along each output shows the decimal equivalent of the required binaryselection. For example, the number along the output of DR is 3. The 16-bit outputs of DR are placed onthe bus lines when S2S1S0 = 011 since this is the binary value of decimal 3. The lines from the commonbus are connected to the inputs of each register and the data inputs of the memory. The particularregister whose LD(Load) input is enabled receives the data from the bus during the next clock pulsetransition. The memory receives the contents of the bus when its write input is activated. The memoryplaces its 16-bit output onto the bus when the read input is activated and S2S1S0 = 111.

Four registers DR, AC, IR, and TR, have 16 bits each. Two registers, AR and PC, have 12 bits each sincethey hold a memory address. When the contents of AR or PC are applied to the 16-bit common bus, thefour most significant bits are set to 0’s, When AR or PC receive information from the bus, only the 12 leastsignificant bits are transferred into the register.

The input register INPR and the output register OUTR have 8 bits each and communicate with the eightleast significant bits in the bus. INPR is connected to provide information to the bus but OUTR can onlyreceive information from the bus. INPR is used when the system has to receive a character from an inputdevice and OUTR is used when any character is to be outputted to any output device.

The 16 lines of the common bus receive information from six registers and the memory unit. The bus linesare connected to the inputs of six registers and the memory. There are three control inputs: LD (Load),INR (increment), and CLR (Clear) that are connected to different five registers.

The input data and output data of the memory are connected to the common bus, but the memoryaddress is connected to AR. Therefore, AR must always be used to specify a memory address. By usinga single register for the address, we eliminate the need for an address bus that would have been neededotherwise. The content of any register can be specified for the memory data input during a writeoperation. Similarly, any register can receive the data from memory after a read operation except AC.

37 | P a g e

Figure 3.1: Basic common registers connected to a common busThe 16 inputs of AC come from an adder and logic unit (ALU) circuit. This circuit has three set of inputs.One set of 16-bit inputs come from the outputs of AC. They are used to implement registermicrooperations such as complement AC and shift AC. Another set of 16-bit inputs come from the dataregister DR. The inputs from DR and AC are used for arithmetic and logic microoperations, such as addDR to AC or AND DR to AC. The result of an addition is transferred to AC and the end carry-out of theaddition is transferred to flip-flop E (extended AC bit). A third set of 8 –bit inputs come from the inputregister INPR.The clock transition at the end of the cycle transfers the content of the bus into the designated destinationregister and the output of the adder and logic circuit into AC. For example, the two microoperations

DR AC and AC DRcan be executed at the same time. This can be done by placing the content of AC on the bus, enablingthe LD (load) input of DR, transferring the content of DR through the adder and logic circuit into AC, andenabling the LD (load) input of AC, all during the same clock cycle. The two transfers occur upon thearrival of the clock pulse transition at the end of the clock cycle.

CHECK YOUR PROGRESS 21) The register that keeps track of the instructions in the program stored in memory is_______

A) Control register B) Program counterC) Status register D) Direct register

38 | P a g e

2) Data transfer between the main memory and the CPU register takes placethrough two registers namely.......

A) general purpose register and MDR B) accumulator and programcounterC) MAR and MDR D) MAR and Accumulator

3) In every transfer, selection of register by bus is decided by:

A) Control signal B) No signalC) All signal D) All of above

4) The index register in a digital computer is used for:-

A) Pointing to the stack addressB) Indirect addressingC) Keeping track of number of times a loop is executedD) Address Modification

3.5GENERAL REGISTER ORGANIZATION

Memory locations are needed for storing pointers, counters, return addresses, temporary results, andpartial products during multiplication. Having to refer to memory locations for such applications is timeconsuming because memory access is the most time – consuming operation in a computer. It is moreconvenient and more efficient to store these intermediate values in processor registers. When a largenumber of registers are included in CPU, it is most efficient to connect them through a common bussystem. The registers communicate with each other not only for direct data transfers, but also whileperforming various microoperations. Hence it is necessary to provide a common unit that can perform allthe arithmetic, logic, and shift microoperations in the processor.The seven CPU registers can be organized using the bus as shown in Figure 3.2. Two multiplexers(MUX) are used that are connected to the output of each register and they form two buses given by A andB.

39 | P a g e

Figure 3.2 : Block Diagram of General Register OrganizationThe input to the ALU is from the buses A and B. When any operation is to be preformed then that isselected by the arithmetic or logic microoperation. The result of the microoperation is available for outputdata and also goes into the inputs of all the registers. The register that receives the information from theoutput bus is selected by a decoder. The decoder activates one of the register load inputs, thus providinga transfer path between the data in the output bus and the inputs of the selected destination register.The control unit that operates the CPU bus system directs the information flow through the registers andALU by selecting the various components in the system. For example, to perform the operation

R1 R2 + R3

(1) MUX A selection (SEL A): to place the content of R2 into bus A

(2) MUX B selection (SEL B): to place the content of R3 into bus B

(3) ALU operation selection (OPR): to provide the arithmetic addition (A + B)

(4) Decoder destination selection (SEL D): to transfer the content of the output bus into R1

The four control selection variables are generated in the control unit and must be available at thebeginning of a clock cycle. The data from the two source registers propagate through the gates in themultiplexers and the ALU, to the output bus, and into the inputs of the destination register, all during theclock cycle interval. Then, when the next clock transition occurs, the binary information from the outputbus is transferred into R1. To achieve a fast response time, the ALU is constructed with high-speedcircuits.

3.6WHAT IS A CONTROL WORD?

40 | P a g e

There are 14 binary selection inputs in the unit, and their combined value specifies a control word. The14-bit control word is defined in Figure 3.2(b). It consists of four fields. Three fields contain three bitseach, and one field has five bits. The three bits of SELA select a source register for the A input of theALU. The three bits of SELB select a register for the B input of the ALU. The three bits of SELD select adestination register using the decoder and its seven load outputs. The five bits of OPR select one of theoperations in the ALU. The 14-bit control word when applied to the selection inputs specify a particularmicrooperation.

The encoding of the register selections is specified in Table 3.1.

Table 3.1 Binary codes for Register Selection Fields

BinaryCode

SELA SELB SELD

000 Input Input Input001 R1 R1 R1010 R2 R2 R2011 R3 R3 R3100 R4 R4 R4101 R5 R5 R5110 R6 R6 R6111 R7 R7 R7

The 3-bit binary code listed in the first column of the table specifies the binary code for each of the threefields. The register selected by fields SELA, SELB, and SELD is the one whose decimal number isequivalent to the binary number in the code.

3.7DESIGN OF ADDER AND LOGIC UNIT

The ALU that is Arithmetic and Logic Unit of CPU can perform arithmetic and logic operations.

ALU performs two types of operations:-

i) Fixed Point Operationsii) Floating Point Operations

Some of the arithmetic operations are:-

i) Addition, Subtractionii) Addition-with-carryiii) Subtraction with borrow from a previous operationiv) Divisionv) Increment and Decrementvi) Logical shift left and logical shift rightvii) Arithmetic shift left and logical shift right

Some of the logic operations are:-i) NOTii) AND, OR and XORiii) Compare

The general design of adder and logic unit is shown in Figure 3.3

41 | P a g e

Figure 3.3 : Design of Adder and Logic Unit

3.8 STACK ORGANIZATION

A useful feature that is included in the CPU of most computers is a stack or last-in, first –out (LIFO) list. Astack is a storage device that stores information in such a manner that the item stored last is the first itemretrieved. The operation of a stack can be compared to a stack of trays. The last tray placed on top of thestack is the first to be taken off.

The stack in digital computers is essentially a memory unit with an address register that can count only(after an initial value is loaded into it). The register that holds the address for the stack is called a stackpointer (SP) because its value always points at the top item in the stack. Contrary to a stack of trayswhere the tray itself may be taken out or inserted, the physical registers of a stack are always availablefor reading or writing. It is the content of the word that is inserted or deleted.

Two operations are defined for stack:-

i) Push :- The operation of insertion is called push because it can be thought of as the result ofpushing a new item on top.

ii) Pop:- The operation of deletion is called pop because it can be thought of as the result ofremoving one item so that the stack pops up.

3.8.1 REGISTER STACK

When the stack is implemented using a finite number of memory words or registers then this technique isknown as Register Stack. The register stack can be implemented using 32-word ,64-bit or more than thatregister stack. In Figure 3.4 64-word register stack is shown. It uses a stack pointer named as SP thatcontains the address of the word that is currently on top of the stack.

42 | P a g e

Figure 3.4: Block diagram of 64-word Register StackThree items are placed in the stack: A, B, and C, in that order. Item C is on top of the stack so that thecontent of SP is now 3. To remove the top item, the stack is popped by reading the memory word ataddress 3 and decrementing the content of SP. Item B is now on top of the stack since SP holds address2. To insert a new item, the stack is pushed by incrementing SP and writing a word in the next – higherlocation in the stack. Note that item C has been read out but not physically removed. This does not matterbecause when the stack is pushed, a new item is written in its place.In a 64-word stack, the stack pointer contains 6 bits because 26 = 64. Since SP has only 6 bits, it cannotexceed a number greater than 63 (111111 in binary). The one-bit register FULL is set to 1 when the stackis full, and the one-bit register EMPTY is set to 1 when the stack is empty of items. DR is the data registerthat holds the binary data to be written into or read out of the stack.Initially, SP is cleared to 0, EMPTY is set to 1, and FULL is cleared to 0, so that SP points to the word ataddress 0 and the stack is marked empty and not full. If the stack is not full ( if FULL = 0), a new item isinserted with a push operation. The push operation is implemented with the following sequence ofmicrooperations:-PUSH SP SP + 1 increment stack pointer

M [SP] DR unit item on top of the Stack

It (SP = 0) then (FULL 1) check it stack is full

EMPTY 0 mask the stack not empty.

The stack pointer is incremented so that it points to the address of the next-higher word. A memory writeoperation inserts the word from DR into the top of the stack. M[SP] denotes the memory word specified bythe address presently available in SP. The first item is stored in the stack at address 1. The last item isstored at 0. If SP reaches 0, the stack is full of items, so FULL is set to 1.

A new item is deleted from the stack if the stack is not empty (if EMPTY = 0). The pop operation consistsof the following sequence of microoperations:-

POP DR M[SP] read item trans the top of stack

SP SP –1 decrement SP

43 | P a g e

If (SP = 0) then (EMTY 1) check it stack is empty

FULL 0 mark the stack not full.

The top item is read from the stack into DR. The stack pointer is then decremented. If its value reacheszero, the stack is empty, so EMPTY is set to 1. This condition is reached if the item read was in location1. Once this item is read out, SP is decremented and reaches the value 0, which is the initial value of SP.

3.8.2 MEMORY STACK