
ARTICLE IN PRESS

0167-9260/$ - see front matter © 2007 Elsevier B.V. All rights reserved.

doi:10.1016/j.vlsi.2007.09.001

*Corresponding author. E-mail address: [email protected] (D. Sylvester).

INTEGRATION, the VLSI journal 41 (2008) 319–339

www.elsevier.com/locate/vlsi

Invited paper

Variability in nanometer CMOS: Impact, analysis, and minimization

Dennis Sylvester (a,*), Kanak Agarwal (b), Saumil Shah (a)

(a) University of Michigan, Ann Arbor, MI, USA
(b) IBM Austin Research Laboratories, Austin, TX, USA

Received 6 August 2007; received in revised form 7 September 2007; accepted 10 September 2007

Abstract

Variation is a significant concern in nanometer-scale CMOS because manufacturing equipment is being pushed to fundamental limits, particularly in lithography. In this paper, we review recent work on coping with variation through both improved analysis and optimization. We describe techniques based on integrated circuit manufacturing, circuit design strategies, and mathematics and statistics. We then discuss trends in this area and give a future technology outlook, with an eye toward circuit and CAD solutions to growing levels of variation in the underlying device technologies.

© 2007 Elsevier B.V. All rights reserved.

1. Introduction

Technology scaling has led to a significant increase in process variability due to random dopant effects, imperfections in the lithographic patterning of small devices, and related effects [1]. These variations cause significant unpredictability in the power and performance characteristics of integrated circuits (ICs). In particular, the variations have a large effect on leakage power, which now contributes a significant proportion of total power [2]. Leakage power is highly susceptible to process variations because of its exponential dependence on threshold voltage. In [3], it is shown that for a typical process, the delay variation between fast and slow dies is around 30%, whereas the leakage variation can be as high as 20×. Because power and delay are negatively correlated, fast chips often have unacceptably high power consumption, while low-power chips are too slow. This two-sided constraint significantly reduces parametric yield in process technologies at 90 nm and beyond. This yield loss will worsen in future technologies due to increasing process variations and the continued significance of leakage power.

In recent years, there has been a wealth of research aimed at analyzing circuit variability and reducing its effects on parametric yield. In this paper, we review a range of such techniques that not only predict the effects of process variation but also allow designers to use that information to guide circuit optimization. This information lets designers move away from conventional methods that optimize for power or timing alone, and instead target improving both concurrently.

In Section 2, we explore some of the causes of process variation and their contribution to parametric yield reduction. We also analyze the impact of variability on the yield of SRAM arrays. Section 3 reviews methods for the modeling and analysis of variation. These include a method to compute the joint probability distribution function (jpdf) of power and timing, and thus the parametric yield under simultaneous power and timing constraints; a method for modeling interconnect variation; and a technique to model the effect of imperfect lithographic patterning on circuit timing and leakage. In Section 4, we discuss design techniques for variability reduction, the first of which is an adaptive body biasing (ABB) scheme. We also review variation-tolerant microarchitecture techniques, a gate-length biasing mechanism, and a self-compensating layout scheme. Section 5 presents one perspective on the future of design and CAD with respect to variability, raising several research directions that merit increased attention.

2. Impact of process variation

Process variation can have a significant impact on the power dissipation, performance, and functionality of a circuit. In this section, we discuss the impact of manufacturing variations on the parametric and functional yield of a design.

2.1. Impact on parametric yield

Parametric yield is defined as the percentage of dies that satisfy the specified frequency and power constraints. It differs from manufacturing yield in that it does not include yield loss due to manufacturing defects. With fluctuations in process parameters, a large set of dies may not meet the power or performance budget of the design. This causes significant yield loss, because such chips cannot be shipped despite being functionally correct. In this section, we discuss these yield-related issues and demonstrate that the increasing dominance of process variation has made parametric yield loss a serious concern in current technologies.

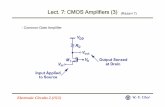

A large set of process parameters can affect the power and performance of a design. Among these, the most significant are channel length and threshold voltage. Fig. 1 shows the frequency scatter due to threshold voltage and channel length variation obtained from Monte Carlo (MC) SPICE simulations of a 15-stage ring oscillator in a 90 nm process. The variability in channel length and threshold voltage is taken from estimates for this industrial process. As expected, frequency is higher at smaller channel lengths and degrades considerably at larger channel lengths. This agrees with traditional practice, in which process spread is defined as the spread in channel length, and the negative and positive sigma corners are referred to as the fast and slow process corners, respectively. As the figure shows, process variation can cause large variation in frequency. The slow chips that do not meet the timing constraint must be discarded, resulting in parametric yield loss.

Fig. 1. Scatter plot of normalized frequency plotted against sigma-variation in channel length.

Besides timing yield, process variation can also cause yield loss through its impact on power. In previous technologies, when leakage (static) power was an insignificant component of total power, the impact of process variation on parametric yield was limited to timing yield, because dynamic power depends only weakly on process variation. Dynamic power is determined primarily by the switching capacitance, which depends linearly on device channel length. The switching capacitance is fairly independent of small variations in threshold voltage, and the effect of threshold voltage variation on short-circuit power can be neglected. Hence, dynamic power can be presumed to be sensitive only to channel length variation. More importantly, this dependence makes power consumption at slow process corners (longer channels) higher than at fast corners. This trend is shown in Fig. 2, which plots normalized power and frequency against sigma-variation in channel length, superimposed on the Gaussian density function of channel length variation [4]. If power and frequency constraints are imposed on such a spread, the slower dies are also the ones that violate the power constraint. The constraint on parametric yield is therefore one-sided: we can determine the maximum sigma-value of channel length that meets both the power and frequency constraints and reject all dies lying beyond this channel length bound.

Fig. 2. Yield window for a frequency constraint of $f_{\min} = 0.9 f_{\mathrm{nom}}$ and a power constraint of $P_{\max} = 1.05 P_{\mathrm{nom}}$. The figure shows that for negligible static (leakage) power, the yield window is subject to only a one-sided constraint.

With the growing contribution of leakage to total power, the impact of variation on chip power dissipation has become significant. This is because process variation strongly affects leakage current, owing to the exponential dependence of subthreshold leakage on channel length and threshold voltage variation, and of gate leakage on oxide thickness variation. Fig. 3 shows the variation in normalized leakage power (subthreshold + gate) plotted against sigma-variation in channel length, obtained from MC SPICE simulations. The figure shows that process variation can cause nearly an order of magnitude of variation in leakage power. The key point is that leakage is higher for smaller channel lengths, due to threshold voltage roll-off in short-channel devices. This inverse correlation is also illustrated in Fig. 4, which shows the distribution of chip performance and leakage based on silicon measurements over a large number of samples of a high-end processor design [3]. Both the mean and the variance of the leakage distribution increase significantly for chips with higher frequencies. As a result, total power is higher at fast corners than at slow corners, as shown in Fig. 5 [4]. The figure also shows that this trend in power results in a two-sided constraint on the yield window: fast dies fail to meet the power budget because of high leakage, while low-leakage chips are too slow to meet performance specifications. This is why process variation causes significant yield loss as leakage power grows in significance. This shrinking of the yield window is what makes process variation a significant issue for ASIC or custom high-performance, power-conscious designs.

Fig. 3. Scatter plot of normalized leakage power plotted against sigma-variation in channel length.

[Fig. 4 plot: normalized frequency (y-axis, about 0.9 to 1.4) versus normalized leakage (Isb, x-axis, 0 to 20) for a 0.18 micron design, ~1000 samples; annotations mark a 30% spread in frequency and a 20X spread in leakage.]

Fig. 4. Distribution of chip performance and leakage based on silicon measurements over a large number of samples of a high-end processor design [3].

Fig. 5. Yield window for a frequency constraint of $f_{\min} = 0.9 f_{\mathrm{nom}}$ and a power constraint of $P_{\max} = 1.05 P_{\mathrm{nom}}$. The figure shows that leakage power results in a two-sided constraint on the yield window.
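The difference between a one-sided and a two-sided yield window can be reproduced with a deliberately crude toy model. The sketch below is not the SPICE-based model behind Figs. 2 and 5; it assumes (our assumptions, all hypothetical) that normalized channel length L is Gaussian with mean 1, that frequency scales as 1/L, that dynamic power scales linearly with L, and that leakage scales as exp(-(L - 1)/λ). The function name `window_yield` and every parameter value are illustrative; only the constraints $f_{\min} = 0.9 f_{\mathrm{nom}}$ and $P_{\max} = 1.05 P_{\mathrm{nom}}$ are taken from the figures.

```python
import math

def window_yield(leak_frac, lam=0.05, sigma_l=0.04, f_min=0.9, p_max=1.05,
                 l_lo=0.7, l_hi=1.3, steps=6000):
    """Toy parametric yield over normalized channel length L ~ N(1, sigma_l).

    Hypothetical model (not from the paper), normalized so f = P = 1 at L = 1:
      frequency f(L) = 1 / L
      power     P(L) = (1 - leak_frac) * L + leak_frac * exp(-(L - 1) / lam)
    Returns (yield, smallest accepted L, largest accepted L).
    """
    dl = (l_hi - l_lo) / steps
    total, accepted = 0.0, []
    for k in range(steps):
        l = l_lo + (k + 0.5) * dl                      # midpoint of the k-th slice
        freq = 1.0 / l
        power = (1.0 - leak_frac) * l + leak_frac * math.exp(-(l - 1.0) / lam)
        if freq >= f_min and power <= p_max:
            z = (l - 1.0) / sigma_l
            pdf = math.exp(-0.5 * z * z) / (sigma_l * math.sqrt(2.0 * math.pi))
            total += pdf * dl                          # accumulate accepted probability
            accepted.append(l)
    return total, min(accepted), max(accepted)

# Negligible leakage: only long-channel (slow) dies are rejected, a one-sided window.
print(window_yield(0.0))
# 30% leakage at nominal: short-channel (fast) dies now fail the power cap as well.
print(window_yield(0.3))
```

With `leak_frac = 0` the smallest accepted L is simply the edge of the scanned range, whereas with leakage a lower bound on L appears just below nominal, which is the two-sided window of Fig. 5 in miniature.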

2.2. Impact on functional yield

Besides degrading parametric yield, process variation can also cause nominally functional circuits to fail at skewed process corners. Various analog and digital circuits that rely on transistor matching can easily fail in the presence of device mismatch induced by process variation. The most significant impact of process variation on functional yield is the poor yield of static random access memory (SRAM) arrays in scaled technologies [5,6].

SRAM yield is very important from an economic viewpoint because memory is both critical and ubiquitous in modern processors and SoCs. Density is a very important metric for memories, so SRAM cells use the smallest manufacturable device sizes in a given technology. However, the variance of the threshold voltage due to random dopant fluctuation is inversely proportional to gate area (equivalently, its standard deviation scales as the inverse square root of gate area) [7–9]. Because of this dependence, the small transistors in a memory cell see a highly pronounced random dopant effect. Moreover, the yield requirements for SRAM designs are more stringent than those for other logic blocks. Ref. [10] illustrates this problem with the example of a 4 MB cache. The authors show that a typical 4 MB cache with error correcting code (ECC) cells contains approximately 38 million cells. To ensure that there is at most one failure in this cache, the circuit must operate correctly up to 5.44 sigmas. This sort of fault coverage is extremely hard to achieve, because SRAM cells are traditionally designed so that the cell contents are not altered during a read access, while the cell must still be able to change state quickly during a write operation. These conflicting read and write requirements are satisfied by balancing the relative strengths of the devices in the cell. Such careful design provides stable read and write operation, but it also makes the cell vulnerable to failures caused by random variations in device strengths.
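The 5.44-sigma figure can be sanity-checked with a short calculation. The sketch assumes (our assumption, not a statement from [10]) that each of the roughly 38 million cells fails independently when its governing parameter drifts more than k standard deviations in one direction, and that "at most one failure" means the expected number of failing cells is at most one. The required k then solves N·Q(k) = 1, where Q is the one-sided Gaussian tail; the helper names are hypothetical.

```python
import math

def upper_tail(k):
    """One-sided Gaussian tail probability Q(k) = P(Z > k)."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))

def required_sigma(n_cells, max_failures=1.0, lo=0.0, hi=10.0):
    """Smallest k with n_cells * Q(k) <= max_failures, by bisection (Q is decreasing)."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if n_cells * upper_tail(mid) > max_failures:
            lo = mid          # still too many expected failures: need more margin
        else:
            hi = mid
    return hi

print(round(required_sigma(38e6), 2))  # → 5.44
```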

For the 6-T SRAM cell shown in Fig. 6, a read operation typically involves precharging the bitlines and then reading the cell contents through the access transistors. When the access transistors are turned on, one of the precharged bitlines discharges through the access device and the inverter pull-down transistor. For example, in Fig. 6, during a read access, bitline BL discharges through access device AL and pull-down device NL to read a zero at node L. This method of reading the cell exposes the internal storage node L to the disturbance caused by the resistive voltage division between the access and pull-down devices. Typically, this disturbance is minimized by making the pull-down device much stronger than the access transistor. However, random variations in threshold voltage can change the relative strengths of the devices in an SRAM cell, causing the read operation to flip the cell contents. Similarly, the write capability of a cell depends on the relative strengths of the pull-up device and the access transistor. A write is performed by setting the bitlines to the desired values and enabling the access transistors to drive the internal nodes of the cell. For example, in Fig. 6, a zero is written at node R by driving bitline BR low and setting the wordline high. The resistive voltage division between pull-up device PR and access transistor AR pulls internal node R toward zero, causing the cell to change state. In the presence of significant offsets in device strengths due to process variation, an SRAM cell may fail to write the desired state during a write operation. Such failures are classified as functional failures because the circuit fails to function as expected.

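[Fig. 6 schematic: inverters PL/NL (node L, V_L = 0) and PR/NR (node R, V_R = 1) between VDD and GND; access transistors AL and AR, gated by wordline WL, connect nodes L and R to bitlines BL and BR.]

Fig. 6. Schematic of a conventional 6-T SRAM cell.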

3. Modeling and analysis of variation

In the previous section, we showed that process variation can have a significant impact on the parametric yield of a design. This yield loss will worsen in future technologies due to increasing process variations and the continued significance of leakage power. Hence, there is a critical need for accurate yield estimation approaches that are computationally efficient enough to enable yield-driven optimization tools. In this section, we discuss various approaches to modeling the impact of process variation on the power and performance variability of a design.

3.1. Parametric yield modeling

Ref. [11] presents an efficient gate-level approach to accurately estimate the parametric yield defined by leakage power and delay constraints. Note that the technique described below shares certain drawbacks with all so-called ‘traditional’ statistical CAD approaches; this is discussed further in Section 5.1, where a possible alternative path is described.

The yield in [11] is estimated by finding the jpdf of delay and leakage power. Each process parameter is expressed as the sum of a correlated and a random component, and the sum of the variances of these two components gives the overall variation in the parameter. To handle the correlated components of variation (inter-die and correlated intra-die), the chip area is divided into a grid, as shown in Fig. 7. Each square in the grid corresponds to a random variable (RV) of the process parameter, which is correlated with all the RVs corresponding to the other squares in the grid [12]. The value at the bottom right corner of each square gives its correlation coefficient with the top-left square. In this model, squares that are farther apart have lower correlation than adjacent squares.

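[Fig. 7 values, correlation of each square of a 3 × 3 grid with the top-left square: row 1: 1.00, 0.50, 0.33; row 2: 0.50, 0.41, 0.31; row 3: 0.33, 0.31, 0.26.]

Fig. 7. Example partitions of a circuit using a grid to model the correlated component of variations (correlation coefficients referenced to the top-left element).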
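The text does not state the distance function behind Fig. 7, but the printed coefficients happen to be reproduced, to two decimals, by ρ(d) = 1/(1 + d), where d is the Euclidean distance between square centers in units of the grid pitch. The sketch below uses that function purely as an illustrative assumption; we do not know that it is the model used in [11,12], and `grid_correlation` is a hypothetical helper.

```python
import math

def grid_correlation(rows, cols):
    """Correlation matrix for a rows x cols grid of squares in row-major order.

    Assumed distance model (hypothetical): rho(d) = 1 / (1 + d), with d the
    Euclidean distance between square centers in grid-pitch units.
    """
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    return [[1.0 / (1.0 + math.hypot(r1 - r2, c1 - c2)) for (r2, c2) in cells]
            for (r1, c1) in cells]

corr = grid_correlation(3, 3)
# Correlation of every square with the top-left square, laid out like Fig. 7.
for r in range(3):
    print(" ".join(f"{corr[0][3 * r + c]:.2f}" for c in range(3)))
# 1.00 0.50 0.33
# 0.50 0.41 0.31
# 0.33 0.31 0.26
```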


To simplify the problem, this set of correlated RVs is replaced by another set of mutually independent RVs with zero mean and unit variance, using the principal components of the set of correlated RVs [12]. A vector of RVs $\mathbf{X}$ with correlation matrix $C$ can be expressed as a linear combination of its principal components $\mathbf{Y}$ as

$$\mathbf{X} = \boldsymbol{\mu}_X + \Omega\, V^{-1} D^{1/2}\, \mathbf{Y}. \tag{1}$$

Here $\boldsymbol{\mu}_X$ is the vector of mean values of $\mathbf{X}$, $\Omega$ is a diagonal matrix whose diagonal elements are the standard deviations of $\mathbf{X}$, $V$ is the matrix of eigenvectors of $C$, and $D$ is a diagonal matrix of the eigenvalues of $C$. Since the correlation matrix of a multivariate (non-degenerate) Gaussian RV is positive definite, all elements of $D$ are positive and the square root in (1) can be evaluated. The delay and leakage power of an individual gate can be expressed as follows:

$$\text{Delay} = d_{\mathrm{nom}} + \sum_{p} a_p\,\Delta P_p, \qquad \text{Leakage} = \exp\!\Big(V_{\mathrm{nom}} + \sum_{p} b_p\,\Delta P_p\Big). \tag{2}$$

Here $d_{\mathrm{nom}}$ and $\exp(V_{\mathrm{nom}})$ are the nominal values of delay and leakage power, respectively, and the $a_p$'s and $b_p$'s represent the sensitivities of delay and of the log of leakage to the process parameters under consideration. The variable $\Delta P_p$ represents the change in process parameter $p$ from its nominal value.
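The transformation in (1) can be sketched with the Python standard library alone. The sketch below diagonalizes a small correlation matrix with the cyclic Jacobi eigenvalue method (our choice of algorithm; [12] does not prescribe one) and stores the eigenvectors as the columns of `vecs`. That matrix plays the role of $V^{-1}$ in (1) when $V$ holds the eigenvectors as rows, since $V^{-1} = V^{T}$ for orthonormal eigenvectors. The 4 × 4 matrix describes a 2 × 2 grid using the Fig. 7 coefficients 0.50 (adjacent squares) and 0.41 (diagonal squares); the means, standard deviations, and helper names are hypothetical.

```python
import math

def jacobi_eigen(a, tol=1e-24, max_sweeps=100):
    """Eigen-decomposition of a small symmetric matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, vecs) with the eigenvectors stored as the columns of vecs.
    """
    n = len(a)
    a = [row[:] for row in a]                              # work on a copy
    vecs = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-300:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):                         # A <- A J (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):                         # A <- J^T A (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):                         # accumulate eigenvectors
                    vkp, vkq = vecs[k][p], vecs[k][q]
                    vecs[k][p], vecs[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], vecs

def pca_mixing_matrix(sigma, corr):
    """M = diag(sigma) * vecs * diag(sqrt(eigenvalues)), so that X = mu + M Y."""
    lam, vecs = jacobi_eigen(corr)
    n = len(sigma)
    return [[sigma[i] * vecs[i][j] * math.sqrt(max(lam[j], 0.0)) for j in range(n)]
            for i in range(n)]

def sample_x(mu, mix, y):
    """Map independent N(0,1) principal components y to correlated parameters X."""
    return [mu[i] + sum(mix[i][j] * y[j] for j in range(len(y))) for i in range(len(mu))]

# 2 x 2 grid in row-major order: adjacent squares 0.50, diagonal squares 0.41 (Fig. 7).
corr = [[1.00, 0.50, 0.50, 0.41],
        [0.50, 1.00, 0.41, 0.50],
        [0.50, 0.41, 1.00, 0.50],
        [0.41, 0.50, 0.50, 1.00]]
mu = [45.0] * 4       # hypothetical mean channel length per square (nm)
sigma = [2.0] * 4     # hypothetical standard deviation per square (nm)
mix = pca_mixing_matrix(sigma, corr)
print(sample_x(mu, mix, [0.0, 1.0, 0.0, -1.0]))
```

A useful check on any such decomposition is that `mix` times its transpose reproduces the covariance diag(σ)·C·diag(σ); the test for this sketch verifies exactly that.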

In a statistical scenario, the process parameters are modeled as RVs. If the circuit is partitioned using a grid as shown in Fig. 7, the delay of each gate can be expressed as a function of these RVs. Using the principal component approach, the delay in (2) can then be expressed as

$$\text{Delay} = d_{\mathrm{nom}} + \sum_{p} a_p \Big(\sum_{j=1}^{n} \gamma_{jp}\, z_j\Big) + \eta_d R, \tag{3a}$$

where the $z_j$'s are the principal components of the correlated RVs $\Delta P_p$ in (2), and the $\gamma$'s can be obtained from (1). $R \sim N(0,1)$ in the above equation represents the random component of variation of all the process parameters, lumped into a single term that contributes a total variance of $\eta_d^2$ to the overall variance of delay. Similarly, the leakage power of an individual gate can be expressed as

$$\text{Leakage} = \exp\!\Big(V_{\mathrm{nom}} + \sum_{p} b_p \Big(\sum_{j=1}^{n} \gamma_{jp}\, z_j\Big) + \eta_l R\Big). \tag{3b}$$

These representations of delay and power significantly simplify joint timing and power analysis, which would otherwise be computationally inefficient if spatial correlation were maintained without such a unified principal component approach. The delay of each gate (say $a$) can now be expressed as follows:

$$D_a = a_0 + \sum_{i=1}^{n} a_i z_i + a_{n+1} R. \tag{4}$$

This serves as the canonical expression for delay. The mean delay is simply the nominal delay ($a_0$). Since the principal components ($z_i$'s) are uncorrelated $N(0,1)$ RVs, the variance of the delay can be expressed as

$$\operatorname{Var}(D_a) = \sum_{i=1}^{n} a_i^2 + a_{n+1}^2, \tag{5}$$

and the covariance of the delay with one of the principal components can be obtained as

$$\operatorname{Cov}(D_a, z_i) = E(D_a z_i) - E(D_a)E(z_i) = a_i \quad \forall i \in \{1, 2, \ldots, n\}. \tag{6}$$

Since the random components are uncorrelated and do not contribute to the covariance of the delays at the two nodes at the inputs of a gate (e.g., ‘a’ and ‘b’), the covariance can be obtained as

$$\operatorname{Cov}(D_a, D_b) = \sum_{i=1}^{n} a_i b_i. \tag{7}$$

In deterministic timing analysis, the delay of the circuit is found by applying two functions to the delays of individual gates: sum and max. Similar functions for the canonical delay expressions (4) are defined as

$$\operatorname{Sum}(D_a, D_b) = (a_0 + b_0) + \sum_{i=1}^{n} (a_i + b_i)\, z_i + \sqrt{a_{n+1}^2 + b_{n+1}^2}\; R. \tag{8}$$
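The canonical-form bookkeeping of (4) through (8) is small enough to sketch directly. The `Canonical` class below is a hypothetical container, not code from [11]; it stores $a_0$, the principal-component sensitivities $a_1, \ldots, a_n$, and the independent-term coefficient $a_{n+1}$, and implements the variance (5), the covariance (7), and the sum (8). The gate delays in the usage lines are invented values.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Canonical:
    """D = mean + sum_i coeffs[i] * z_i + rand * R, with z_i and R independent N(0,1)."""
    mean: float
    coeffs: List[float]   # a_1 .. a_n: sensitivities to the shared principal components
    rand: float           # a_{n+1}: coefficient of this quantity's own independent term R

    def variance(self) -> float:
        # Eq. (5): components are independent with unit variance.
        return sum(a * a for a in self.coeffs) + self.rand ** 2

def covariance(x: Canonical, y: Canonical) -> float:
    # Eq. (7): only the shared principal components contribute.
    return sum(a * b for a, b in zip(x.coeffs, y.coeffs))

def canonical_sum(x: Canonical, y: Canonical) -> Canonical:
    # Eq. (8): means and shared sensitivities add; independent terms add in quadrature.
    return Canonical(x.mean + y.mean,
                     [a + b for a, b in zip(x.coeffs, y.coeffs)],
                     math.hypot(x.rand, y.rand))

# Two hypothetical gate delays (ps) sharing two principal components.
g1 = Canonical(20.0, [1.5, 0.5], 0.8)
g2 = Canonical(30.0, [2.0, -0.4], 1.1)
path = canonical_sum(g1, g2)
print(path.mean, path.variance(), covariance(g1, g2))
```

Because the sum stays in canonical form, the variance of a path equals the sum of the gate variances plus twice their covariance, which is what keeps correlation information alive through block-based propagation.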

The max of normally distributed RVs ($c = \max(a, b)$) is not strictly Gaussian, but it can be closely approximated by another Gaussian [12–14]. Ref. [15] provides equations to calculate the mean and variance of $c$ in terms of the means and variances of $a$ and $b$ and their correlation coefficient. Ref. [15] also develops expressions to evaluate the correlation of $c$ with any other RV in terms of the correlations of that RV with $a$ and $b$. The max $c$ can be expressed in the same canonical form as $a$ and $b$ [12,13]. To find the coefficients of $c$ in canonical form, the mean, the variance, and the correlations of $c$ with the principal components are matched, giving

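$$\begin{aligned} c_0 &= E\big(\max(a, b)\big),\\ c_i &= \operatorname{cov}(c, z_i) = \operatorname{cov}\big(\max(a, b), z_i\big) \quad \forall i \in \{1, \ldots, n\},\\ c_{n+1} &= \Big(\operatorname{Var}\big(\max(a, b)\big) - \sum_{i=1}^{n} c_i^2\Big)^{1/2}. \end{aligned} \tag{9}$$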

Using the sum and max operations outlined above, one can develop an expression for the delay of a circuit in terms of the RVs associated with process parameter variations. The correlation between delay and power can be preserved by using a similar principal component-based approach with the same underlying RVs.
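The max operation in (9) needs the mean and variance of max(a, b) and its covariance with each $z_i$. The sketch below uses Clark's classical moment formulas for the maximum of two jointly Gaussian variables; we assume these are the equations of Ref. [15] but have not confirmed it. Canonical forms are passed as plain `(mean, coeffs, rand)` tuples, a hypothetical layout chosen for brevity, and the arrival times in the usage line are invented.

```python
import math

def _pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def _cdf(x):
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def canonical_max(a, b):
    """Approximate max(a, b) in canonical form by Clark's moment matching.

    a, b: (mean, [sensitivities to z_1..z_n], independent-term coefficient).
    Returns the same tuple layout for c = max(a, b), following Eq. (9).
    """
    a0, ac, ar = a
    b0, bc, br = b
    var_a = sum(x * x for x in ac) + ar * ar
    var_b = sum(x * x for x in bc) + br * br
    cov_ab = sum(x * y for x, y in zip(ac, bc))         # independent terms do not correlate
    theta = math.sqrt(max(var_a + var_b - 2.0 * cov_ab, 0.0))
    if theta < 1e-12:
        # a - b is (numerically) constant, so max(a, b) is simply the larger-mean input.
        return a if a0 >= b0 else b
    alpha = (a0 - b0) / theta
    pa, pb, dens = _cdf(alpha), _cdf(-alpha), _pdf(alpha)
    mean = a0 * pa + b0 * pb + theta * dens                         # E[max(a, b)]
    second = (a0 * a0 + var_a) * pa + (b0 * b0 + var_b) * pb + (a0 + b0) * theta * dens
    var = max(second - mean * mean, 0.0)
    coeffs = [pa * x + pb * y for x, y in zip(ac, bc)]              # cov(max, z_i)
    rand = math.sqrt(max(var - sum(x * x for x in coeffs), 0.0))    # c_{n+1} in Eq. (9)
    return mean, coeffs, rand

# Two hypothetical arrival times (ps) sharing one principal component.
print(canonical_max((100.0, [3.0], 2.0), (98.0, [2.0], 4.0)))
```

The clamp at zero in the last step guards against the moment-matched variance falling below the part already explained by the principal components, which can happen because the true max is not Gaussian.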


For power analysis, the leakage power of the total circuit can be expressed as a sum of correlated lognormal variables. This sum can be accurately approximated as another lognormal RV [16]. The leakage power of an individual gate $a$ is expressed as

$$P^{a}_{\mathrm{leak}} = \exp\!\Big(a_0 + \sum_{i=1}^{n} a_i z_i + a_{n+1} R\Big), \tag{10}$$

where the $z$'s are the principal components of the RVs and the $a$'s are the coefficients obtained using (1) and (2). The mean and variance of the RV in (10) can then be computed as

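$$E\big(P^{a}_{\mathrm{leak}}\big) = \exp\!\Big(a_0 + \frac{1}{2}\sum_{i=1}^{n+1} a_i^2\Big), \tag{11}$$

$$\operatorname{Var}\big(P^{a}_{\mathrm{leak}}\big) = \exp\!\Big(2a_0 + 2\sum_{i=1}^{n+1} a_i^2\Big) - \exp\!\Big(2a_0 + \sum_{i=1}^{n+1} a_i^2\Big). \tag{12}$$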

The correlation of the leakage of gate $a$ with the lognormal RV $e^{z_j}$ associated with $z_j$ is found by evaluating

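$$E\big(P^{a}_{\mathrm{leak}}\, e^{z_j}\big) = \exp\!\Bigg(a_0 + \frac{1}{2}\Bigg(\sum_{\substack{i=1\\ i\neq j}}^{n+1} a_i^2 + (a_j + 1)^2\Bigg)\Bigg) \quad \forall j \in \{1, 2, \ldots, n\}. \tag{13}$$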

Similarly, the covariance of the leakage of two gates ($a$ and $b$) can be obtained by using

$$E\big(P^{a}_{\mathrm{leak}} P^{b}_{\mathrm{leak}}\big) = \exp\!\Bigg((a_0 + b_0) + \frac{1}{2}\Bigg(\sum_{i=1}^{n} (a_i + b_i)^2 + a_{n+1}^2 + b_{n+1}^2\Bigg)\Bigg). \tag{14}$$

The sum of leakage powers can be expressed in the same canonical form as (10). If the random variables associated with all the gates in the circuit are summed in a single step, the overall complexity of the approach is $O(n^2)$ due to the size of the correlation matrix. Since the sum of two lognormal RVs is assumed to be a lognormal variable of the same form, one can use a recursive technique to estimate the sum of more than two lognormal RVs. In each recursive step, we sum two RVs of the form in (10) to obtain another RV in the same canonical form. The coefficients of the sum are obtained by matching the first two moments (as in Wilkinson's method [17]) and the correlations with the lognormal RVs associated with each of the Gaussian principal components. We outline one recursive step, in which $P^{b}_{\mathrm{leak}}$ and $P^{c}_{\mathrm{leak}}$ are summed to obtain $P^{a}_{\mathrm{leak}}$:

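$$P^{a}_{\mathrm{leak}} = \exp\!\Big(a_0 + \sum_{i=1}^{n} a_i z_i + a_{n+1} R\Big) = \exp\!\Big(b_0 + \sum_{i=1}^{n} b_i z_i + b_{n+1} R\Big) + \exp\!\Big(c_0 + \sum_{i=1}^{n} c_i z_i + c_{n+1} R\Big) = P^{b}_{\mathrm{leak}} + P^{c}_{\mathrm{leak}}. \tag{15}$$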

The coefficients associated with the principal components can be found using (11)–(14), expressing them as

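$$a_i = \log\!\left(\frac{E\big(P^{a}_{\mathrm{leak}}\, e^{z_i}\big)}{E\big(P^{a}_{\mathrm{leak}}\big)\, E\big(e^{z_i}\big)}\right) = \log\!\left(\frac{E\big(P^{b}_{\mathrm{leak}}\, e^{z_i}\big) + E\big(P^{c}_{\mathrm{leak}}\, e^{z_i}\big)}{\Big(E\big(P^{b}_{\mathrm{leak}}\big) + E\big(P^{c}_{\mathrm{leak}}\big)\Big)\, E\big(e^{z_i}\big)}\right). \tag{16}$$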

Using the expressions developed in [18], the remaining two coefficients in the expression for $P^{a}_{\mathrm{leak}}$ can be expressed as

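$$\begin{aligned} a_0 &= \frac{1}{2}\log\!\left(\frac{\Big(E\big(P^{b}_{\mathrm{leak}}\big) + E\big(P^{c}_{\mathrm{leak}}\big)\Big)^4}{\Big(E\big(P^{b}_{\mathrm{leak}}\big) + E\big(P^{c}_{\mathrm{leak}}\big)\Big)^2 + \operatorname{Var}(P_b) + \operatorname{Var}(P_c) + 2\operatorname{Cov}(P_b, P_c)}\right),\\ a_{n+1} &= \left[\log\!\left(1 + \frac{\operatorname{Var}(P_b) + \operatorname{Var}(P_c) + 2\operatorname{Cov}(P_b, P_c)}{\Big(E\big(P^{b}_{\mathrm{leak}}\big) + E\big(P^{c}_{\mathrm{leak}}\big)\Big)^2}\right) - \sum_{i=1}^{n} a_i^2\right]^{0.5}. \end{aligned} \tag{17}$$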
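One recursive step of (11) through (17) can be sketched as follows. Each leakage term is a hypothetical `(a0, coeffs, rand)` tuple standing for $\exp(a_0 + \sum_i a_i z_i + a_{n+1}R)$, and the random terms of different gates are assumed independent, as in (14); the helper names and the gate values are ours. By construction the result reproduces the exact mean and variance of the sum whenever the bracket in (17) is non-negative; clamping it at zero otherwise is our choice, not something the text specifies.

```python
import math

def ln_mean(p):
    """Eq. (11): E[exp(a0 + sum a_i z_i + a_{n+1} R)]."""
    a0, ac, ar = p
    return math.exp(a0 + 0.5 * (sum(x * x for x in ac) + ar * ar))

def ln_var(p):
    """Eq. (12): variance of one lognormal term."""
    a0, ac, ar = p
    s = sum(x * x for x in ac) + ar * ar
    return math.exp(2.0 * a0 + 2.0 * s) - math.exp(2.0 * a0 + s)

def ln_mean_times_ez(p, j):
    """Eq. (13): E[P * exp(z_j)]."""
    a0, ac, ar = p
    s = sum(x * x for i, x in enumerate(ac) if i != j) + ar * ar + (ac[j] + 1.0) ** 2
    return math.exp(a0 + 0.5 * s)

def ln_cov(p, q):
    """Eq. (14) minus the product of means: covariance of two gates' leakage."""
    a0, ac, ar = p
    b0, bc, br = q
    s = sum((x + y) ** 2 for x, y in zip(ac, bc)) + ar * ar + br * br
    return math.exp(a0 + b0 + 0.5 * s) - ln_mean(p) * ln_mean(q)

def ln_sum(p, q):
    """One recursive step: approximate P_b + P_c by a single canonical lognormal."""
    mean = ln_mean(p) + ln_mean(q)
    var = ln_var(p) + ln_var(q) + 2.0 * ln_cov(p, q)
    e_ez = math.exp(0.5)                                        # E[exp(z_i)], z_i ~ N(0,1)
    coeffs = [math.log((ln_mean_times_ez(p, i) + ln_mean_times_ez(q, i)) / (mean * e_ez))
              for i in range(len(p[1]))]                        # Eq. (16)
    a0 = 0.5 * math.log(mean ** 4 / (mean ** 2 + var))          # Eq. (17), first line
    rest = math.log(1.0 + var / mean ** 2) - sum(x * x for x in coeffs)
    rand = math.sqrt(max(rest, 0.0))                            # Eq. (17), second line
    return a0, coeffs, rand

# Two hypothetical gates (log-leakage in arbitrary units) sharing two principal components.
pb = (-2.0, [0.30, 0.10], 0.20)
pc = (-1.5, [0.25, -0.05], 0.15)
pa = ln_sum(pb, pc)
print(pa)
```

Applying `ln_sum` repeatedly, folding one gate at a time into a running total, gives the recursive accumulation that the text describes.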

Having obtained the sum of two lognormals in the original canonical form, the process can be repeated recursively to compute the expression for the total leakage power of the circuit.

Once we have algorithms for statistical timing and power analysis, the parametric yield of a circuit under delay and power constraints can be expressed as

$$Y = P\big(D \le D_0,\ P \le P_0\big), \tag{18}$$

which is the probability that the circuit delay and power are less than $D_0$ and $P_0$, respectively. Since delay and power are correlated, the yield cannot be computed simply by multiplying the separate probabilities. To express the yield as the probability of a bivariate Gaussian RV, we take the logarithm of the leakage power constraint. The correlation coefficient of the two Gaussian RVs in the yield equation can then be obtained using (7). The yield in terms of two $N(0,1)$ RVs $N_0$ and $N_1$ can be expressed as

$$Y = P\left(N_0 \le \frac{D_0 - \mu_D}{\sigma_D},\ N_1 \le \frac{\log(P_0) - \mu_{\log(P)}}{\sigma_{\log(P)}}\right). \tag{19}$$

Since correlation does not change under a linear transformation with positive coefficients, the correlation between $N_0$ and $N_1$ remains the same.

Table 1 compares the analytical yield estimates with those obtained from Monte Carlo simulations for the ISCAS benchmark circuits [19]. The table also shows the significance of correlation in parametric yield estimation.


Table 1

Yield estimates for different frequency bins using the proposed approach and MC-based simulations

| Benchmark circuit | Monte Carlo: D < Dm | Monte Carlo: Dm < D < 1.1 Dm | Analytical yield: D < Dm | Analytical yield: Dm < D < 1.1 Dm | Yield neglecting correlation: D < Dm | Yield neglecting correlation: Dm < D < 1.1 Dm |
|---|---|---|---|---|---|---|
| c432 | 0.17 | 0.43 | 0.14 | 0.46 | 0.31 | 0.30 |
| c499 | 0.17 | 0.46 | 0.15 | 0.49 | 0.32 | 0.32 |
| c880 | 0.20 | 0.43 | 0.16 | 0.46 | 0.32 | 0.31 |
| c1908 | 0.18 | 0.48 | 0.14 | 0.49 | 0.32 | 0.32 |
| c2670 | 0.16 | 0.44 | 0.14 | 0.47 | 0.31 | 0.30 |
| c3540 | 0.22 | 0.43 | 0.20 | 0.44 | 0.33 | 0.32 |
| c5315 | 0.19 | 0.48 | 0.19 | 0.48 | 0.33 | 0.33 |
| c6288 | 0.22 | 0.47 | 0.21 | 0.46 | 0.34 | 0.34 |
| c7552 | 0.20 | 0.47 | 0.19 | 0.47 | 0.33 | 0.33 |

[Fig. 8 plot: jpdf surface over delay (ns, about 1.3 to 1.6) and log(leakage) (about −10.4 to −9.2); vertical axis ticks 0 to 6.]

Fig. 8. Joint probability distribution function for the bivariate Gaussian distribution for c3540.


The analytical approach provides good yield estimates, with an average misprediction in yield of 2%. Fig. 8 shows a representative joint probability density function (jpdf) of the log of leakage and delay, which is a bivariate Gaussian jpdf.

As discussed in the previous section, leakage power results in a two-sided constraint on the yield window. The yield methodology discussed in this section enables efficient computation of parametric yield considering correlated variations in power and performance. The approach can form the basis for power- and performance-constrained parametric-yield-driven optimization techniques, as opposed to purely timing-yield-driven optimizations, which dramatically penalize power yield.
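Evaluating (19) requires the bivariate standard normal CDF, which the Python standard library does not provide. The sketch below computes it by one-dimensional Simpson integration of $\phi(t)\,\Phi\big((y - \rho t)/\sqrt{1 - \rho^2}\big)$ (our choice of numerical method, not necessarily the one used in [11]) and contrasts the result with the product of marginals obtained when correlation is neglected. The delay and log-leakage statistics in the usage lines are hypothetical; the negative correlation reflects the fact that fast dies leak more.

```python
import math

def std_cdf(x):
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def bivariate_cdf(x, y, rho, lower=-10.0, n=2000):
    """P(N0 <= x, N1 <= y) for standard normals with correlation rho, |rho| < 1.

    Integrates pdf(t) * P(N1 <= y | N0 = t) over t in [lower, x] with Simpson's rule.
    """
    if x <= lower:
        return 0.0
    scale = math.sqrt(1.0 - rho * rho)
    h = (x - lower) / n                                  # n must be even for Simpson
    total = 0.0
    for k in range(n + 1):
        t = lower + k * h
        f = math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi) * std_cdf((y - rho * t) / scale)
        total += f * (1 if k in (0, n) else 4 if k % 2 else 2)
    return total * h / 3.0

def parametric_yield(d0, p0, mu_d, sd_d, mu_logp, sd_logp, rho):
    """Eq. (19): (joint yield, yield if correlation were neglected)."""
    x = (d0 - mu_d) / sd_d
    y = (math.log(p0) - mu_logp) / sd_logp
    return bivariate_cdf(x, y, rho), std_cdf(x) * std_cdf(y)

# Hypothetical statistics: delay in ns, natural log of leakage, negative correlation.
joint, independent = parametric_yield(d0=1.45, p0=math.exp(-9.7), mu_d=1.45, sd_d=0.05,
                                      mu_logp=-9.8, sd_logp=0.2, rho=-0.8)
print(joint, independent)
```

With a negative delay-leakage correlation the joint yield falls below the product of the marginals, which matches the direction of the gap between the "Analytical yield" and "Yield neglecting correlation" columns for the D < Dm bin in Table 1.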

3.2. Interconnect variation modeling

Process variation can have a significant impact on the performance of both devices (front-end of the line) and interconnect (back-end of the line). In this section, we focus on modeling interconnect delay while considering variability in the physical dimensions. The impact of process variation on interconnect performance is context dependent [20]: for two different interconnect structures, increasing the metal thickness may increase the delay of one structure while reducing that of the other [20]. This makes it very difficult to capture the impact of variability on interconnect delay using a traditional corner-based method.

To first order, the delay through an interconnect can be expressed as the RC product of its resistance and capacitance. Any change in the physical dimensions of the wire changes its resistance and capacitance, causing interconnect delay to fluctuate. To model the impact of variability on wire delay, we need to capture the effect of geometric variations on the electrical parameters. The change in electrical parameters due to variations in geometric dimensions can be captured by the following simple linear approximation:

$$R = R_{\mathrm{nom}} + a_1\,\Delta W + a_2\,\Delta T, \qquad C = C_{\mathrm{nom}} + b_1\,\Delta W + b_2\,\Delta T + b_3\,\Delta H. \tag{20}$$

Here, $R_{\mathrm{nom}}$ and $C_{\mathrm{nom}}$ represent the nominal resistance and capacitance values, computed with the wire dimensions at their nominal (typical) values. $\Delta W$, $\Delta T$, and $\Delta H$ represent the changes in metal width, metal thickness, and ILD thickness, respectively. The coefficients $a_i$ and $b_i$ are the modeling coefficients of the linearized model. This linear approximation is highly accurate for our purpose while remaining very simple to use [21]. For simplicity, Eq. (20) considers variations only in metal width (W), metal thickness (T), and interlayer dielectric thickness (H), but other variation sources can be included in a similar manner.

Ref. [22] presents a technique for mapping geometric variations in interconnect dimensions to the corresponding RC delay variation. Once the interconnect dimensions are mapped to circuit parameters (Eq. (20)), the next step is the computation of circuit moments. For deterministic resistance and capacitance values in an RC tree, the circuit moments can be computed easily by path tracing [23]. With interconnect variability, however, the resistances and capacitances become random variables. If the changes in physical dimensions ($\Delta W$, $\Delta T$, etc.) are considered independent normal random variables, then the resistances and capacitances calculated using Eq. (20) are correlated normal random variables. We illustrate moment computation with variability for the simple RC tree shown in Fig. 9.

[Fig. 9 schematic: R1 drives node (1), which carries C1; R2 connects node (1) to node (2), which carries C2; R3 connects node (1) to node (3), which carries C3.]

Fig. 9. A simple RC tree.

The first moment at any node can be expressed as a function of the resistances $R_i$ and capacitances $C_i$. For example, the first moment at node 3 (without considering variability) in the above circuit is given by

$$m_1^{3} = m_1(R_i, C_i) = -R_1(C_1 + C_2 + C_3) - R_3 C_3. \tag{21}$$

With variations in physical dimensions, the resistances $R_i$ and capacitances $C_i$ are linear functions of the random variables ($\Delta W$, $\Delta T$, etc.):

$$R_i = R_{i(\mathrm{nom})} + R_{i(W)}\,\Delta W + R_{i(T)}\,\Delta T, \qquad C_i = C_{i(\mathrm{nom})} + C_{i(W)}\,\Delta W + C_{i(T)}\,\Delta T + C_{i(H)}\,\Delta H. \tag{22}$$

Here, $R_{i(\mathrm{nom})}$ and $C_{i(\mathrm{nom})}$ are the nominal resistance and capacitance, respectively. The coefficients $R_{i(W)}$ and $C_{i(W)}$ model the change in resistance and capacitance with a change in width. Similarly, the other coefficients capture the change in resistance and capacitance with respect to each physical dimension. Substituting $R_i$ and $C_i$ from Eq. (22) into Eq. (21) gives

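$$\begin{aligned} m_1 = m_{1(\mathrm{nom})} &+ k_W\,\Delta W + k_T\,\Delta T + k_H\,\Delta H + k_{W^2}(\Delta W)^2\\ &+ k_{T^2}(\Delta T)^2 + k_{WT}(\Delta W\,\Delta T) + k_{WH}(\Delta W\,\Delta H)\\ &+ k_{TH}(\Delta T\,\Delta H). \end{aligned} \tag{23}$$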

Here, $m_{1(\mathrm{nom})}$ is the first moment evaluated at the nominal resistances $R_{i(\mathrm{nom})}$ and nominal capacitances $C_{i(\mathrm{nom})}$. We express this with the following notation:

$$m_{1(\mathrm{nom})} = m_1\big(R_{i(\mathrm{nom})}, C_{i(\mathrm{nom})}\big). \tag{24}$$

The coefficients in Eq. (23) can be calculated by evaluating the first moment at different values of the R's and C's. For example, the coefficient $k_{W^2}$ can be computed by calculating the first moment with all resistances and capacitances replaced by the corresponding $R_{i(W)}$ and $C_{i(W)}$. This works because $m_1$ is bilinear in the resistances and capacitances, as Eq. (21) shows. The expressions for each coefficient in terms of first-moment computations are given by

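$$\begin{aligned} k_W &= m_1\big(R_{i(W)}, C_{i(\mathrm{nom})}\big) + m_1\big(R_{i(\mathrm{nom})}, C_{i(W)}\big),\\ k_{W^2} &= m_1\big(R_{i(W)}, C_{i(W)}\big),\\ k_T &= m_1\big(R_{i(T)}, C_{i(\mathrm{nom})}\big) + m_1\big(R_{i(\mathrm{nom})}, C_{i(T)}\big),\\ k_{T^2} &= m_1\big(R_{i(T)}, C_{i(T)}\big),\\ k_{WT} &= m_1\big(R_{i(W)}, C_{i(T)}\big) + m_1\big(R_{i(T)}, C_{i(W)}\big),\\ k_{WH} &= m_1\big(R_{i(W)}, C_{i(H)}\big),\\ k_{TH} &= m_1\big(R_{i(T)}, C_{i(H)}\big),\\ k_H &= m_1\big(R_{i(\mathrm{nom})}, C_{i(H)}\big). \end{aligned} \tag{25}$$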

Eq. (23) expresses the first moment as a function of normal random variables representing variations in back-end physical dimensions. Because Eq. (23) contains higher order and cross-product terms, the distribution of the first moment is not exactly Gaussian. However, if we neglect the higher order terms, the first-moment expression in Eq. (23) reduces to

$$m_1 = m_{1(\mathrm{nom})} + k_W\,\Delta W + k_T\,\Delta T + k_H\,\Delta H. \tag{26}$$
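Because $m_1$ in (21) is bilinear in the resistances and capacitances, the coefficients of (25) can be obtained by re-running the path-tracing formula on the sensitivity vectors. The sketch below does this for the Fig. 9 tree and checks that the full quadratic expansion (23) reproduces a direct evaluation at a perturbed point, while the linear form (26) stays close to it. All element values, sensitivities, and helper names are hypothetical, and the tree topology (R1 feeding node 1, with R2 and R3 branching to nodes 2 and 3) is read from (21).

```python
def m1_node3(r, c):
    """Eq. (21): first moment at node 3 of the Fig. 9 tree (lists indexed 0..2 for 1..3)."""
    return -r[0] * (c[0] + c[1] + c[2]) - r[2] * c[2]

# Hypothetical nominal values and per-um sensitivities in the form of Eq. (22).
R_NOM, R_W, R_T = [100.0, 80.0, 120.0], [-40.0, -30.0, -50.0], [-45.0, -35.0, -55.0]
C_NOM = [20e-15, 15e-15, 25e-15]
C_W, C_T, C_H = [4e-15, 3e-15, 5e-15], [3e-15, 2e-15, 4e-15], [-6e-15, -5e-15, -7e-15]

def first_moment_coefficients():
    """Eq. (25): each coefficient is m1 evaluated on a pair of (sensitivity) vectors."""
    m = m1_node3
    return {
        "nom": m(R_NOM, C_NOM),
        "W": m(R_W, C_NOM) + m(R_NOM, C_W),
        "T": m(R_T, C_NOM) + m(R_NOM, C_T),
        "H": m(R_NOM, C_H),
        "W2": m(R_W, C_W),
        "T2": m(R_T, C_T),
        "WT": m(R_W, C_T) + m(R_T, C_W),
        "WH": m(R_W, C_H),
        "TH": m(R_T, C_H),
    }

def m1_direct(dw, dt, dh):
    """Substitute the perturbed elements of Eq. (22) directly into Eq. (21)."""
    r = [R_NOM[i] + R_W[i] * dw + R_T[i] * dt for i in range(3)]
    c = [C_NOM[i] + C_W[i] * dw + C_T[i] * dt + C_H[i] * dh for i in range(3)]
    return m1_node3(r, c)

k = first_moment_coefficients()
dw, dt, dh = 0.03, -0.02, 0.01                      # hypothetical deviations (um)
full = (k["nom"] + k["W"] * dw + k["T"] * dt + k["H"] * dh      # Eq. (23)
        + k["W2"] * dw ** 2 + k["T2"] * dt ** 2
        + k["WT"] * dw * dt + k["WH"] * dw * dh + k["TH"] * dt * dh)
linear = k["nom"] + k["W"] * dw + k["T"] * dt + k["H"] * dh     # Eq. (26)
print(m1_direct(dw, dt, dh), full, linear)
```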

We can perform a similar analysis for the second moment, which can be expressed as a function of resistances, capacitances, and first moments. For example, the second moment at node 3 (without considering variability) for the circuit in Fig. 9 is given by

$$m_2^{3} = m_2\big(R_i, C_i, m_1^{i}\big) = -R_1\big(m_1^{1} C_1 + m_1^{2} C_2 + m_1^{3} C_3\big) - R_3\big(m_1^{3} C_3\big). \tag{27}$$

With variations in physical dimensions, the $R_i$'s and $C_i$'s can be replaced by their corresponding expressions from Eq. (22), and similarly the $m_1$'s can be replaced by the expression given in Eq. (26). If we again keep only the linear terms, $m_2$ can be expressed as

$$
m_2 = m_{2(\text{nom})} + A_W \Delta W + A_T \Delta T + A_H \Delta H. \tag{28}
$$

Here m2(nom) is the second moment evaluated at nominal resistance Ri(nom), nominal capacitance Ci(nom), and nominal first moment m1(nom). We express this by the following notation:

$$
m_{2(\text{nom})} = m_2\big(R_{i(\text{nom})}, C_{i(\text{nom})}, m_{1(\text{nom})}\big). \tag{29}
$$

The coefficients in Eq. (28) can be calculated by evaluating the second moment at different values of R's, C's, and m1's:

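$$
\begin{aligned}
A_W &= m_2\big(R_{i(\text{nom})}, C_{i(\text{nom})}, k_W\big) + m_2\big(R_{i(\text{nom})}, C_{i(W)}, m_{1(\text{nom})}\big) + m_2\big(R_{i(W)}, C_{i(\text{nom})}, m_{1(\text{nom})}\big),\\
A_T &= m_2\big(R_{i(\text{nom})}, C_{i(\text{nom})}, k_T\big) + m_2\big(R_{i(\text{nom})}, C_{i(T)}, m_{1(\text{nom})}\big) + m_2\big(R_{i(T)}, C_{i(\text{nom})}, m_{1(\text{nom})}\big),\\
A_H &= m_2\big(R_{i(\text{nom})}, C_{i(\text{nom})}, k_H\big) + m_2\big(R_{i(\text{nom})}, C_{i(H)}, m_{1(\text{nom})}\big).
\end{aligned}
\tag{30}
$$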

Here kW, kT, and kH are computed as shown in Eq. (25). The linearity assumption was validated experimentally in [22] for the simple configuration of Fig. 9.
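Because m2 in Eq. (27) is linear in the R's, in the C's and in the m1's separately, its first-order change is the sum of three evaluations, each with one argument replaced by its sensitivity; this is what Eq. (30) states. The sketch below checks that reading numerically against a central finite difference on a hypothetical three-node tree (same assumed topology as Eq. (27); element values are made up). Resistance is assumed independent of H, so the third term vanishes for A_H, matching the two-term A_H in Eq. (30). Note that the k's passed as the third argument are per-node first-moment sensitivities.

```python
# Sketch of Eq. (30) on a hypothetical 3-node RC tree (all values made up).
# Assumed topology: driver -R1- n1, n1 -R2- n2, n1 -R3- n3 (consistent with Eq. (27)).

def shared_R(R):
    """Shared-path resistance matrix R_ik for the assumed tree (linear in R)."""
    R1, R2, R3 = R
    return [[R1, R1, R1],
            [R1, R1 + R2, R1],
            [R1, R1, R1 + R3]]

def m1_all(R, C):
    """First moments at all nodes, m1^i = -sum_k R_ik C_k (bilinear in R, C)."""
    Rs = shared_R(R)
    return [-sum(Rs[i][j] * C[j] for j in range(3)) for i in range(3)]

def m2_node3(R, C, m1):
    """Second moment at node 3, Eq. (27): -sum_k R_3k C_k m1^k (linear in each argument)."""
    Rs = shared_R(R)
    return -sum(Rs[2][j] * C[j] * m1[j] for j in range(3))

R_nom, C_nom = (10.0, 20.0, 30.0), (1.0, 2.0, 3.0)
# Per-unit sensitivities (hypothetical); resistance is assumed independent of H.
R_sens = {"W": (-2.0, -3.0, -4.0), "T": (-1.5, -2.5, -3.5), "H": (0.0, 0.0, 0.0)}
C_sens = {"W": (0.2, 0.3, 0.4), "T": (0.05, 0.08, 0.1), "H": (-0.1, -0.15, -0.2)}

def A_coeff(dim):
    """Eq. (30): first-order coefficient of m2 at node 3 for dimension dim."""
    m1_nom = m1_all(R_nom, C_nom)
    # Per-node first-moment sensitivities (the k's of Eq. (25), evaluated at every node).
    k_vec = [a + b for a, b in zip(m1_all(R_sens[dim], C_nom), m1_all(R_nom, C_sens[dim]))]
    return (m2_node3(R_nom, C_nom, k_vec)
            + m2_node3(R_nom, C_sens[dim], m1_nom)
            + m2_node3(R_sens[dim], C_nom, m1_nom))   # this term is 0 for H here

def m2_exact(d):
    """Reference m2 at node 3 for deviations d = {"W": ..., "T": ..., "H": ...}."""
    R = [R_nom[i] + sum(R_sens[x][i] * d[x] for x in d) for i in range(3)]
    C = [C_nom[i] + sum(C_sens[x][i] * d[x] for x in d) for i in range(3)]
    return m2_node3(R, C, m1_all(R, C))

def finite_diff(dim, h=1e-5):
    """Central-difference derivative of the exact m2 with respect to one dimension."""
    plus = {"W": 0.0, "T": 0.0, "H": 0.0}
    minus = dict(plus)
    plus[dim], minus[dim] = h, -h
    return (m2_exact(plus) - m2_exact(minus)) / (2 * h)

if __name__ == "__main__":
    for dim in ("W", "T", "H"):
        print(dim, A_coeff(dim), finite_diff(dim))
```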


[Fig. 10 plots: probability vs. m1 (scaled by 1e12, left) and vs. m2 (scaled by 1e21, right); each panel compares the distribution with all terms against the distribution with only linear terms.]

Fig. 10. First (left plot) and second (right plot) moment distributions with and without considering non-linear terms.

[Fig. 11 plot: probability vs. delay (ps), 60 to 70 ps. Nominal: W = T = 0.8 μm, ILD = 0.55 μm, L = 5 mm, delay = 64.9 ps. SPICE Monte Carlo: mean = 64.7 ps, stdev = 1.7 ps. D2M: mean = 64.9 ps, stdev = 1.7 ps.]

Fig. 11. Analytical delay distribution obtained using statistical D2M metric compared to Monte Carlo simulations.


Nominal values for width and metal thickness were chosen to be 0.8 μm, while nominal ILD height was taken to be 0.55 μm. The 3-sigma tolerances were ±0.1 μm for width and thickness and ±0.05 μm for ILD thickness. Spacing was assumed to be inversely correlated with width. Given these distributions of ΔW, ΔT, and ΔH, Fig. 10 plots the distributions of the first and second moments at node 3 with and without the non-linear terms. In each plot the two curves are almost identical, and the moment distributions are close to Gaussian; hence the linear approximations of Eqs. (26) and (28) are highly accurate for these tolerances.

Once moments are expressed as functions of changes in physical dimensions, the next step is to map the PDF of these moments to an interconnect delay PDF. Among various delay metrics, D2M [24] is a good candidate for this exercise due to its closed-form nature. However, the approach is independent of the metric and can be applied to any other closed-form delay metric as well.

Using D2M, the delay can be expressed in terms of the moments as

$$
D2M = \frac{\ln 2\,\big(m_{1(\text{nom})} + k_W \Delta W + k_T \Delta T + k_H \Delta H\big)^2}{\sqrt{m_{2(\text{nom})} + A_W \Delta W + A_T \Delta T + A_H \Delta H}}. \tag{31}
$$

Analyzing the D2M expression in Eq. (31) directly is difficult; hence, we write a series expansion of the D2M expression and keep only the linear terms, as discussed earlier. The D2M expression can then be rewritten as

$$
D2M = \frac{\ln 2\,\big(m_{1(\text{nom})}\big)^2}{\sqrt{m_{2(\text{nom})}}}\big(1 + S_W \Delta W + S_T \Delta T + S_H \Delta H\big). \tag{32}
$$

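Here SW, ST, and SH are relative delay sensitivities. Since ln D2M = ln(ln 2) + 2 ln|m1| − ½ ln m2, each one equals twice the relative change in m1 minus half the relative change in m2 per unit deviation: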

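$$
\begin{aligned}
S_W &= \frac{2k_W}{m_{1(\text{nom})}} - \frac{A_W}{2m_{2(\text{nom})}},\\
S_T &= \frac{2k_T}{m_{1(\text{nom})}} - \frac{A_T}{2m_{2(\text{nom})}},\\
S_H &= \frac{2k_H}{m_{1(\text{nom})}} - \frac{A_H}{2m_{2(\text{nom})}}.
\end{aligned}
\tag{33}
$$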
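A quick numerical check of Eq. (33): the sketch compares the closed-form sensitivity with a central-difference derivative of ln D2M taken from the unlinearized Eq. (31), and compares Eq. (32) with Eq. (31) for a small deviation. The nominal moments are hypothetical values of the same order of magnitude as Fig. 10 (giving a D2M of about 65 ps), and the per-μm coefficients k_W and A_W are assumptions, not values from the paper.

```python
import math

LN2 = math.log(2.0)

def d2m(m1, m2):
    """D2M delay metric, ln(2) * m1**2 / sqrt(m2), as used in Eq. (31)."""
    return LN2 * m1 * m1 / math.sqrt(m2)

def rel_sensitivity(k, A, m1_nom, m2_nom):
    """Eq. (33): relative D2M change per unit deviation, 2k/m1 - A/(2 m2)."""
    return 2.0 * k / m1_nom - A / (2.0 * m2_nom)

def d2m_linear(d, k, A, m1_nom, m2_nom):
    """Eq. (32) restricted to one dimension: nominal D2M times (1 + S*d)."""
    return d2m(m1_nom, m2_nom) * (1.0 + rel_sensitivity(k, A, m1_nom, m2_nom) * d)

# Hypothetical values: moments of the same order as Fig. 10, sensitivities per um assumed.
m1_nom, m2_nom = -85e-12, 5.9e-21   # s, s^2
k_W, A_W = 4.0e-11, -5.6e-21        # dm1/dW (s/um), dm2/dW (s^2/um)

def finite_diff_sensitivity(h=1e-6):
    """Central-difference estimate of d(ln D2M)/dW from the unlinearized Eq. (31)."""
    up = d2m(m1_nom + k_W * h, m2_nom + A_W * h)
    down = d2m(m1_nom - k_W * h, m2_nom - A_W * h)
    return (up - down) / (2.0 * h) / d2m(m1_nom, m2_nom)

if __name__ == "__main__":
    print("D2M (ps):", d2m(m1_nom, m2_nom) * 1e12)
    print("S_W closed form:", rel_sensitivity(k_W, A_W, m1_nom, m2_nom))
    print("S_W finite diff:", finite_diff_sensitivity())
```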

If ΔW, ΔT, and ΔH are independent random variables with zero mean and standard deviations σW, σT, and σH, respectively, then the mean and standard deviation of the delay in terms of the standard deviations in physical dimensions can be written as

$$
\begin{aligned}
E(D2M) &= \frac{\ln 2\,\big(m_{1(\text{nom})}\big)^2}{\sqrt{m_{2(\text{nom})}}},\\
\operatorname{Stdev}(D2M) &= \frac{\ln 2\,\big(m_{1(\text{nom})}\big)^2}{\sqrt{m_{2(\text{nom})}}}\sqrt{S_W^2\sigma_W^2 + S_T^2\sigma_T^2 + S_H^2\sigma_H^2}.
\end{aligned}
\tag{34}
$$

[Fig. 12 plot: Jon (A/cm²) and Joff (A/cm²) vs. width location (nm) along the z-axis, 0 to 400 nm.]

Fig. 12. Drive and leakage current density variation along channel width.

Fig. 11 shows the application of Eq. (32) to estimate the delay spread of a 5 mm long global interconnect wire. Nominal values (3-sigma tolerances) for metal width, thickness and ILD height were 0.8 μm (±0.1 μm), 0.8 μm (±0.1 μm) and 0.55 μm (±0.05 μm), respectively. Fig. 11 shows the model results together with those from SPICE Monte Carlo (MC) simulations. The model estimates the delay spread well: the D2M mean and standard deviation (64.9 ps, 1.7 ps) are close to the MC values (64.7 ps, 1.7 ps).
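The Fig. 11 comparison can be mimicked in a few lines: compute the mean and standard deviation from Eq. (34), then sample ΔW, ΔT and ΔH as independent zero-mean Gaussians and evaluate Eq. (31) directly. The standard deviations below come from the 3-sigma tolerances quoted in the text (σ = tolerance/3); the nominal moments and the k and A coefficients are hypothetical, so the resulting spread is only illustrative, and a plain Python Monte Carlo on Eq. (31) stands in for the SPICE Monte Carlo of the paper.

```python
import math
import random
import statistics

LN2 = math.log(2.0)

# Hypothetical nominal moments and first-order coefficients (k of Eq. (26), A of Eq. (28)).
m1_nom, m2_nom = -85e-12, 5.9e-21                      # s, s^2
k = {"W": 4.0e-11, "T": 3.0e-11, "H": 2.0e-11}         # s per um (assumed)
A = {"W": -5.6e-21, "T": -4.2e-21, "H": -2.8e-21}      # s^2 per um (assumed)
# Standard deviations from the 3-sigma tolerances quoted in the text (um).
sigma = {"W": 0.1 / 3, "T": 0.1 / 3, "H": 0.05 / 3}

def d2m_full(d):
    """Eq. (31): D2M with linear moment models, without further linearization."""
    m1 = m1_nom + sum(k[x] * d[x] for x in d)
    m2 = m2_nom + sum(A[x] * d[x] for x in d)
    return LN2 * m1 * m1 / math.sqrt(m2)

def eq34():
    """Mean and standard deviation of D2M from Eqs. (33) and (34)."""
    nominal = LN2 * m1_nom ** 2 / math.sqrt(m2_nom)
    S = {x: 2 * k[x] / m1_nom - A[x] / (2 * m2_nom) for x in k}
    rel = math.sqrt(sum((S[x] * sigma[x]) ** 2 for x in k))
    return nominal, nominal * rel

def monte_carlo(n=20000, seed=1):
    """Sample independent zero-mean Gaussian dW, dT, dH and evaluate Eq. (31)."""
    rng = random.Random(seed)
    samples = [d2m_full({x: rng.gauss(0.0, sigma[x]) for x in sigma}) for _ in range(n)]
    return statistics.mean(samples), statistics.stdev(samples)

if __name__ == "__main__":
    mean_a, std_a = eq34()
    mean_mc, std_mc = monte_carlo()
    print(f"Eq. (34): mean={mean_a * 1e12:.2f} ps, std={std_a * 1e12:.2f} ps")
    print(f"MC      : mean={mean_mc * 1e12:.2f} ps, std={std_mc * 1e12:.2f} ps")
```

With deviations this small relative to the nominal moments, the analytical and sampled statistics should agree to within sampling noise, which is the behavior Fig. 11 reports for the real wire.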

In the next section, we discuss one of the common sources of leakage variability, irregularities in lithographic patterning, and describe a technique proposed to quantify the leakage variation due to this source of variability.

3.3. Analysis of leakage variation due to non-uniform device geometries

In this section, we discuss leakage variation due to imperfect lithographic patterning. Printed images on silicon are not restricted to simple rectilinear geometries: the silicon image of a transistor may not be the perfect rectangle assumed by current circuit analysis tools. The irregularity in shape can have a strong effect on leakage current, yet existing tools and device models cannot handle complicated non-rectilinear geometries.

In this section, we discuss a new technique to model non-uniform, non-rectangular gates. The authors in [25] propose a method to convert non-rectangular gates into equivalent rectangular gates so that they can be analyzed by SPICE-like circuit analysis tools, enabling closest-to-silicon timing and power analysis. Several approaches to handling non-rectangular gates have been proposed previously [26–29]. However, these approaches neglect an important effect: the change in threshold voltage along the width of the channel. This so-called “edge effect” means that an irregularity in shape near the edge of the gate affects the current differently than a similar irregularity near the gate center. The proposed method models this effect to find the current density at every point along the device. The non-rectangular gate is sliced into several rectangular regions, and the current through each slice is calculated from its length, width and position. The resulting total current is used to obtain the effective length of an equivalent rectangular device. The authors show that this method is much more accurate than previous approaches that neglect the location dependence of the threshold voltage.
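The slicing step can be sketched as follows for leakage. This is not the model of [25]: the threshold-voltage profile (lower near the STI edges, with short-channel roll-off) and every parameter value are assumptions chosen only to show the mechanics. Each slice contributes a current that depends on its length and its position along the width, and bisection then finds the uniform length L_eff whose rectangular device, with the same position-dependent Vth, leaks the same total current. Because edge slices carry more current per unit width in this model, the same 20 nm-wide narrowing shifts L_eff more at the edge than at the center, qualitatively like the location dependence in Table 2.

```python
import math

# Hypothetical leakage-density model (not the model of [25]): J(L, y) = f(y) * g(L).
N_VT = 0.035   # n * kT/q in volts (assumed)
W = 200.0      # device width in nm (assumed)

def vth(L_nm, y_nm):
    """Assumed Vth (V) of a thin slice with gate length L at width position y."""
    edge_dist = min(y_nm, W - y_nm)
    edge_drop = 0.04 * math.exp(-edge_dist / 20.0)     # lower Vth near the STI edges
    rolloff = 0.10 * math.exp(-(L_nm - 60.0) / 15.0)   # short-channel Vth roll-off
    return 0.30 - edge_drop - rolloff

def leak_density(L_nm, y_nm):
    """Subthreshold leakage per unit width, up to a constant factor."""
    return math.exp(-vth(L_nm, y_nm) / N_VT)

def total_leakage(lengths):
    """Sum of slice currents; slice j has width W/n and is centered at (j + 0.5) W/n."""
    n = len(lengths)
    dy = W / n
    return sum(leak_density(L, (j + 0.5) * dy) * dy for j, L in enumerate(lengths))

def equivalent_length(lengths, lo=40.0, hi=200.0, tol=1e-9):
    """Bisection for the uniform gate length that leaks as much as the sliced gate."""
    target = total_leakage(lengths)
    n = len(lengths)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        # Leakage falls as L grows: too much leakage means L_eff must be longer.
        if total_leakage([mid] * n) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    nominal = [90.0] * 10                  # ten 20 nm slices, 90 nm everywhere
    at_edge = [82.0] + [90.0] * 9          # 20 nm-wide narrowing at the STI edge
    at_center = [90.0] * 5 + [82.0] + [90.0] * 4
    for name, g in (("nominal", nominal), ("edge", at_edge), ("center", at_center)):
        print(name, round(equivalent_length(g), 2))
```

A drive-current equivalent length would follow the same pattern with an on-current density model in place of `leak_density`; in general the two effective lengths need not coincide.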

MOS devices with shallow trench isolation (STI) suffer from the reverse narrow-width effect [30]. Due to fringing capacitances from line-end extension and well proximity effects [29], the threshold voltage is much lower near the STI edges than near the center of the device. Fig. 12 shows the drive and leakage current densities as a function of position along the channel width. The data are generated from a TCAD setup closely matching an industrial 90 nm SPICE model. The threshold voltage is analytically modeled as a function of distance from the edge, using knowledge of device behavior and data from SPICE simulations.

This method is found to be considerably more accurate than a “flat” model, which does not consider threshold voltage variation along the width. The accuracy is verified using a test structure in which a small irregularity is introduced into the rectangular gate. The size and location of the protrusion are varied, and the current overhead relative to the nominal rectangular gate is measured. The resulting surfaces are plotted in Fig. 13. As shown, the flat model (a) cannot capture the location dependence, whereas the proposed model (b) is very close to the TCAD simulation result (c). Table 2 shows the improvement in accuracy of the proposed method over the flat method.

The results show that, using this method, non-rectangular gates can be accurately replaced by equivalent rectangular gates, allowing accurate post-lithography timing and leakage analysis. This modeling technique can be incorporated into a full-chip post-silicon analysis flow, as proposed in [31].

Fig. 13. Current overhead for a small protrusion on a device: (a) flat model, (b) proposed model and (c) TCAD simulation.

4. Design techniques for variation minimization

In this section, we discuss several design techniques for reducing the impact of process variation on the parametric and functional yield of a design. In general, the techniques can be classified into the following three categories:

• post-silicon variation compensation techniques
• variation-tolerant circuits and microarchitecture
• variability-aware design

4.1. Post-silicon variation compensation

Post-silicon compensation techniques are applied after fabrication and can significantly improve the parametric yield of a design. One such technique is substrate or body biasing [32–34]. This technique, commonly known as adaptive body biasing (ABB), allows post-silicon tuning of the threshold voltage (VT) by controlling the substrate potential. It exploits the fact that the VT of a device can be controlled by changing its body-to-source bias voltage. The power-performance spread of a design can therefore be tightened by applying a forward body bias (FBB) to slow, less leaky dies and a reverse body bias (RBB) to fast, leaky dies. The circuit implementation of ABB requires a body-bias generator and a control circuit for optimal body-bias selection.
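The VT dependence that ABB exploits is commonly described by the textbook long-channel body-effect relation, ΔVT = γ(√(2φF − VBS) − √(2φF)) for an NMOS device. The sketch below uses that relation with assumed values of γ and 2φF, and pairs it with a hypothetical controller that picks, from a discrete set of bias voltages, the one that best cancels a die's measured VT offset. It ignores the short-channel, DIBL and junction-leakage effects discussed next, which are exactly what limit ABB in scaled technologies.

```python
import math

# Textbook long-channel body-effect model; GAMMA and PHI2 are assumed values.
GAMMA = 0.2   # body-effect coefficient, V**0.5
PHI2 = 0.8    # 2 * phi_F, V

def delta_vth(v_bs):
    """NMOS threshold shift (V) for body-to-source bias v_bs (V).

    v_bs < 0 is reverse body bias (Vth rises); v_bs > 0 is forward body bias
    (Vth falls). The model requires PHI2 - v_bs > 0.
    """
    return GAMMA * (math.sqrt(PHI2 - v_bs) - math.sqrt(PHI2))

def pick_body_bias(die_vth_offset, options):
    """Hypothetical ABB controller: choose the bias that best cancels a die's Vth offset.

    A fast, leaky die (negative offset) ends up with RBB; a slow die with FBB.
    """
    return min(options, key=lambda v: abs(die_vth_offset + delta_vth(v)))

if __name__ == "__main__":
    options = [x / 10 for x in range(-10, 5)]   # -1.0 V ... +0.4 V in 0.1 V steps
    for offset in (-0.03, 0.0, 0.03):
        v = pick_body_bias(offset, options)
        print(f"offset {offset:+.2f} V -> body bias {v:+.1f} V")
```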

The control circuit typically contains a critical path replica whose frequency and leakage determine the optimal NMOS and PMOS body-bias voltages applied to the die. Ref. [35] demonstrates the advantage of ABB in compressing leakage and frequency distributions on a 150 nm CMOS test chip; the measured results from [35] are shown in Fig. 14.

The application of ABB to intra-die variation requires biasing different regions of the die with different body voltages, which limits the granularity with which ABB can compensate intra-die variation. Moreover, RBB and FBB have several other issues that limit their effectiveness in sub-65 nm technologies. RBB increases depletion region width and hence worsens short-channel effects [36,37]; it also increases drain–body junction leakage [38,39]. FBB, on the other hand, results in higher junction capacitance and high forward source–body junction current [40]. Fig. 15 shows the threshold voltage change as a function of body bias for an NFET simulated in a 65 nm technology, along with the impact of body bias on the DIBL coefficient, measured here as the sensitivity of threshold voltage with respect to channel length variation. The plot shows that RBB significantly degrades the DIBL coefficient, limiting its effectiveness in process variation compensation.

Fig. 14. Leakage vs. frequency distribution for no body-bias (NBB) and adaptive body-bias (ABB) [35].

[Fig. 15 plot: ΔVth (mV) and normalized DIBL coefficient vs. body bias (V), from −1 V to 0.5 V.]

Fig. 15. Impact of body-bias on threshold voltage and DIBL.

[Fig. 16 schematic: 8-T SRAM cell; labels include PL/NL, PR/NR, AL/AR, WWL, WBL, RWL, RBL, VDD and GND.]

Fig. 16. 8-T SRAM cell with stable read operation [43].

Table 2
Results for non-rectilinear gates with protrusion or depression of width 20 nm

Loc. (nm) | Model | Length of protrusion/depression (nm): 82 | 86 | 90 | 94 | 98
0 | Davinci | 1.477 | 1.163 | 1.000 | 0.919 | 0.870
0 | Proposed model | 1.543 | 1.194 | 1.000 | 0.911 | 0.838
0 | Flat model | 1.133 | 1.040 | 1.000 | 0.978 | 0.967
20 | Davinci | 1.247 | 1.083 | 1.000 | 0.982 | 0.971
20 | Proposed model | 1.197 | 1.061 | 1.000 | 0.970 | 0.954
20 | Flat model | 1.133 | 1.040 | 1.000 | 0.978 | 0.967
40 | Davinci | 1.124 | 1.042 | 1.000 | 0.991 | 0.984
40 | Proposed model | 1.088 | 1.024 | 1.000 | 0.989 | 0.984
40 | Flat model | 1.133 | 1.040 | 1.000 | 0.978 | 0.967
60 | Davinci | 1.092 | 1.031 | 1.000 | 0.993 | 0.989
60 | Proposed model | 1.079 | 1.021 | 1.000 | 0.991 | 0.986
60 | Flat model | 1.133 | 1.040 | 1.000 | 0.978 | 0.967
80 | Davinci | 1.082 | 1.027 | 1.000 | 0.994 | 0.991
80 | Proposed model | 1.079 | 1.021 | 1.000 | 0.991 | 0.986
80 | Flat model | 1.133 | 1.040 | 1.000 | 0.978 | 0.967

Adaptive supply voltage (ASV) is another technique for reducing the power-performance spread due to process variation [41,42]. It shrinks distributions by lowering the supply voltage of fast, leakier dies and increasing VDD of slow, less leaky dies. The implementation of ASV requires a DC–DC converter and a control circuit for selecting the optimal supply voltage. ASV can be used in conjunction with ABB for maximum benefit, but the design complexity and overhead of using both techniques together are significant.

4.2. Variation-tolerant circuits and microarchitecture

Variation-tolerant circuit topologies and microarchitecture techniques can be very effective in reducing the impact of process variation. In this section, we discuss a few such techniques in the context of robust SRAM design. For sensitive circuits such as SRAM cells, variation-tolerant design can significantly improve the yield of a memory array. As discussed in Section 2, SRAM failures are caused by local device mismatch. Due to their random nature, such local variations cannot be controlled by the post-silicon adaptive techniques discussed in the previous section. Hence it is important to develop design solutions that make the cells less sensitive to random device variations.

Fig. 16 shows an SRAM cell topology [43] that provides stable read operation even in the presence of large random variation. The cell contains two extra transistors for single-ended read operation, as shown in the figure. These devices improve read stability by isolating the read disturbance from the storage nodes: during a read, the resistive voltage division between the read devices does not disturb the internal storage nodes. Therefore, even in the presence of large read disturbances, the cell retains its data, resulting in stable read operation. Furthermore, the cell has separate read and write ports, so its write margin can be optimized independently of the read stability constraints. The limitation of this design is the increased cell size due to the two extra devices compared to the conventional 6-T cell.

Timing failures caused by process variation can be addressed by microarchitecture techniques such as Razor, discussed in [44,45]. The general idea behind this technique is to pair a shadow latch with the standard positive-edge-triggered D flip-flop. The shadow latch samples the data at the negative edge of the clock, allowing it to latch the correct output even in the presence of timing failures. Any discrepancy between the outputs of the shadow latch and the D flip-flop indicates an error. A similar technique can be used to detect timing errors in SRAM arrays (Fig. 17) [46]. At the sense amplifier boundary on the read bit-path, two standard differential latch-type sense amplifiers are used to double-sample the bitline during a read operation. The original sense amplifier is triggered at the end of the cycle, while the second sense amplifier is triggered by a delayed enable signal, which provides extra time to develop additional bitline differential before it fires. The outputs of the two sense amplifiers can be compared to detect read performance failures.

When a speed path failure is detected at the sense amplifier, the correct value is multiplexed onto the static data bus. This result will not reach the I/O interface within the clock cycle, but the memory element at the I/O interface is capable of re-sampling the data bus and propagating the correct value via the latching mechanism proposed in [44]. If an error is detected at the latch column or at the sense amplifier block, the SRAM can return a signal similar to a cache miss in a hierarchical memory system. The corrected data is then forwarded to the system at the end of the second cycle after the request rather than in one cycle.

[Fig. 17 schematic: main and shadow sense amplifiers (Main SA, Shadow SA) on bitlines BL1/BL2 with enables EN1/EN2 and precharge clock PCLK; outputs go to the column multiplexers, and their comparison drives an ERROR signal.]

Fig. 17. Timing error detection in SRAM arrays using a shadow sense amplifier [46].

Fig. 18. Ioff/Ion plots for NMOS/PMOS devices as a function of gate-length.

Table 3
Optimization results for transistor-level and cell-level biased libraries

Circuit | Instance count | % Imp. CLB | % Imp. TLB | % Imp. all variants
C5315 | 1681 | 27.66 | 41.69 | 42.09
C6288 | 3041 | 16.99 | 26.17 | 27.25
AES | 30,991 | 22.68 | 38.05 | 38.66
ALU | 15,880 | 15.68 | 32.56 | 33.13
S9234 | 1212 | 24.38 | 31.41 | 32.46
S13207 | 3464 | 30.83 | 40.15 | 40.43
S38417 | 11,620 | 25.98 | 38.44 | 38.78
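The double-sampling idea behind Razor and the shadow sense amplifier of [46] can be captured in a small behavioral model. The timing model is an assumption made for illustration: the bitline is taken to resolve the stored bit only after `settle_time`, and a sense amplifier fired earlier is assumed to resolve the wrong value. The main amplifier fires at the end of the cycle, the shadow amplifier a little later; a mismatch flags an error and the shadow value is delivered one cycle late, as in the cache-miss-like recovery described above. The names and parameters are hypothetical, not taken from [44–46].

```python
from dataclasses import dataclass

@dataclass
class ReadResult:
    data: int
    error: bool
    cycles: int   # cycles until the requester sees valid data

def double_sampled_read(stored_bit, settle_time, period, shadow_delay):
    """Behavioral sketch of main/shadow sense-amplifier double sampling.

    Hypothetical timing model: the bitline differential is only large enough to
    resolve stored_bit after settle_time; a sense amplifier fired earlier is
    assumed to resolve the wrong value. The main SA fires at `period`, the
    shadow SA at `period + shadow_delay`.
    """
    def sense(t):
        return stored_bit if t >= settle_time else 1 - stored_bit

    main = sense(period)
    shadow = sense(period + shadow_delay)
    if main == shadow:
        # Agreement: normal one-cycle read. (If both fired too early, the error
        # goes undetected, so shadow_delay bounds the coverage.)
        return ReadResult(main, False, 1)
    # Mismatch: flag the error and deliver the shadow value one cycle late,
    # similar to a cache-miss-style replay.
    return ReadResult(shadow, True, 2)

if __name__ == "__main__":
    for settle in (0.8, 1.2, 1.5):
        print(settle, double_sampled_read(1, settle, period=1.0, shadow_delay=0.3))
```

The last case illustrates the design trade-off: a longer shadow delay covers slower reads but lengthens the recovery path.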

4.3. Gate-length biasing for leakage and variability reduction

In this section, we discuss another scheme for building circuits that have high yield in the face of leakage variability. We describe a leakage and variability reduction scheme that involves increasing the critical dimension of standard cells by very small amounts, which can be done with very little impact on standard-cell design and routing. This method was first proposed by the authors of Ref. [47]. Fig. 18 shows how the drive and leakage currents of PMOS and NMOS devices change with small changes in gate length.

The figures show that the ratio of leakage to drive current decreases with increasing gate length. In other words, small increases in length yield large reductions in leakage for a small delay overhead. The figures also show that, along with reduced leakage, the sensitivity of leakage to gate-length variation decreases with increased length. Gate-length biasing therefore reduces leakage variability as well as leakage. The authors in [48] propose a standard-cell optimization methodology in which different variants are generated for each cell to create an enhanced cell library. The paper specifically proposes a transistor-level length assignment methodology, in which gate-length biases are assigned to each transistor individually. A circuit optimizer uses this enhanced library to selectively replace cells on non-critical paths with slower, low-leakage cells in order to generate a design with reduced leakage.
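The cell-replacement step can be illustrated with a deliberately simplified greedy stand-in; it is not the optimizer of [48]. Each instance picks the lowest-leakage library variant whose extra delay fits within its own slack. The variant names, the normalized delay and leakage numbers, and the per-cell slack values are all hypothetical, and treating cells independently ignores the fact that cells on a shared path consume each other's slack, which a real optimizer must track.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Variant:
    name: str
    delay: float     # normalized cell delay (hypothetical)
    leakage: float   # normalized cell leakage (hypothetical)

def assign_variants(cells, slack):
    """Greedy choice of the lowest-leakage variant that fits each cell's slack.

    `cells` maps an instance name to its list of variants (index 0 = unbiased);
    `slack` maps the instance to its timing slack. Cells are treated
    independently, a simplification: real optimizers update slack along paths.
    """
    chosen = {}
    for inst, variants in cells.items():
        base = variants[0]
        feasible = [v for v in variants if v.delay - base.delay <= slack[inst]]
        chosen[inst] = min(feasible, key=lambda v: v.leakage)
    return chosen

if __name__ == "__main__":
    inv = [Variant("L+0", 1.00, 1.00), Variant("L+4%", 1.03, 0.78), Variant("L+8%", 1.06, 0.64)]
    picks = assign_variants({"u1": inv, "u2": inv, "u3": inv},
                            {"u1": 0.0, "u2": 0.035, "u3": 0.5})
    print({inst: v.name for inst, v in picks.items()})
```

Critical cells (zero slack) keep the unbiased variant, while cells with more slack move to longer-channel, lower-leakage variants; the 10% cap on bias discussed next would bound the variant list.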

The authors propose that the maximum length bias be kept to approximately 10% of the nominal gate length. With larger CD changes, the delay-leakage tradeoffs become increasingly less attractive, and area and capacitance penalties become significant. The proposed method strives to keep the biased standard cell layout-compatible with the nominal cell, which also limits the allowed bias because of potential design rule violations. Across technologies, 10% is found to be a reasonable value from both the process and the benefit perspective.

We now review and compare two library optimization methods: one in which every transistor in a cell has the same bias, and one in which each transistor is biased individually. We refer to these as cell-level biasing (CLB) and transistor-level biasing (TLB). TLB, although more complex, provides a much richer design space for the optimizer, leading to more thoroughly optimized designs. TLB variants are motivated by the fact that different transistors in a cell contribute differently to leakage and delay; the different delay/leakage characteristics of PMOS and NMOS devices provide additional motivation for a TLB methodology.

To generate TLB variants for standard cells, the authors propose a sensitivity-based heuristic that identifies the transistors in the cell that provide maximum leakage reduction at minimum delay overhead. Several types of variants are generated, with varying delay and leakage tradeoffs. The generation of asymmetric variants, in which the rise and fall transitions are affected differently in order to exploit the asymmetric slack characteristics of designs, is also proposed.

We also identify variants that are superior in both leakage and delay compared to unbiased cells. These variants are generated by distributing bias between high-leakage-impact transistors and high-delay-impact transistors within a standard cell.

Table 3 shows the results of the gate-length biasing scheme over a variety of benchmark circuits, including ISCAS85 [19] circuits. Cell-level biasing gives leakage reduction of up to 31%, whereas the TLB methodology achieves leakage reduction of up to 42%. The average leakage savings of CLB and TLB over unoptimized designs are 23% and 35%, respectively. The histogram for the AES circuit in Fig. 19 also shows that the standard deviation of leakage for the CLB-optimized design is 28% lower than nominal and that of the TLB-optimized design is 66% lower than nominal. This shows the clear superiority of TLB over CLB. With minimal design and optimization complexity, large savings in leakage and leakage variability are achievable.
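The summary percentages quoted above can be reproduced from Table 3 with a few lines of arithmetic; the values below are transcribed from that table. The column means come out at about 23.5% for CLB and 35.5% for TLB, consistent with the rounded averages in the text, and the column maxima give the "up to 31%" and "up to 42%" figures.

```python
# Leakage-reduction percentages transcribed from Table 3.
TABLE3 = {  # circuit: (% imp. CLB, % imp. TLB, % imp. all variants)
    "C5315": (27.66, 41.69, 42.09),
    "C6288": (16.99, 26.17, 27.25),
    "AES": (22.68, 38.05, 38.66),
    "ALU": (15.68, 32.56, 33.13),
    "S9234": (24.38, 31.41, 32.46),
    "S13207": (30.83, 40.15, 40.43),
    "S38417": (25.98, 38.44, 38.78),
}

def summarize(column):
    """Mean and maximum of one column of TABLE3 (0 = CLB, 1 = TLB, 2 = all variants)."""
    values = [row[column] for row in TABLE3.values()]
    return sum(values) / len(values), max(values)

if __name__ == "__main__":
    for name, col in (("CLB", 0), ("TLB", 1)):
        mean, peak = summarize(col)
        print(f"{name}: mean {mean:.1f}%, max {peak:.1f}%")
```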

4.4. Variability-aware design

In this section, we review methods for designing circuits that inherently compensate for sources of variation. We discuss a self-compensation scheme for CD variation caused by defocus. The Bossung plots in Fig. 20 show that dense lines “smile” through focus while isolated lines “frown”. In this work, we propose a design methodology that allows explicit compensation of focus-dependent CD variation, either within a cell (self-compensated cells) or across cells in a critical path (self-compensated design). By creating iso and dense variants of each library cell, we can achieve designs that are more robust to focus variation. Optimization with a mixture of dense and iso cell variants is possible for both area and leakage power under timing constraints (critical delay), the latter being an interesting complement to existing leakage reduction techniques such as dual-Vth. We implement both heuristic and mixed-integer linear programming (MILP) solution methods for this optimization. The two methods of self-compensation are discussed below.

4.4.1. Self-compensated cell layout

By self-compensated cell layout, we refer to a correct-by-construction methodology that relies on within-cell compensation of CD variation caused by focus variation. For example, variation can be compensated in series-connected NMOS devices if one device becomes shorter (thus faster) under defocus while the other becomes longer (thus slower). This can be achieved by making one device “iso” and the other “dense”. Another way of generating self-compensated cells is to find spacing ranges in which focus variation causes negligible linewidth variation, and to restrict every spacing between adjacent poly lines to one of these values. In this work, we generate all the self-compensated cells by requiring poly spacing to lie in the compensated spacing range (to be discussed further in the next section). We also explore single-pitched cells, in which all poly spacings are set to one highly manufacturable value to eliminate focus-dependent CD variation inside cells.

4.4.2. Self-compensated physical design

This refers to compensation across cells (e.g., along a critical path). Consider two cells G1 and G2 that lie on the critical path G1→G2. Focus variation, if not corrected by applying expensive resolution enhancement techniques (RETs), can cause variation in critical path delay and lead to potential timing failures or parametric yield loss. However, if G1 is explicitly made “iso” while G2 is made to act “dense”, focus-dependent CD variation can be compensated. Given iso and dense versions of library cells, designs that are robust to focus variation become possible.

Using these libraries and our optimization algorithms on ISCAS85 [19] benchmark circuits, we find that we can adequately compensate for focus-dependent CD variation. The results are shown in Tables 4 and 5. The normalized area overheads incurred when using each cell variant (both uniformly and with the proposed optimization approaches) are shown in Table 5; the gate distribution and runtime of the optimization options are shown on the right side of the table. Heu1 (area-driven optimization) and heu2 (leakage-driven optimization) refer to two different heuristic approaches. While self-compensated and single-pitched libraries lead to good timing behavior across focus, they also lead to relatively large area overheads of 11% and 27%, respectively. The ILP optimization provides an optimal solution and can be used to determine how well the heuristics are performing. The two sensitivity-based heuristics show 3–4% area increases while meeting timing requirements throughout the entire defocus range. Note that the trend is towards smaller area penalties in the larger benchmarks, explainable by the fact that a smaller (relative) subset of gates determines timing in these larger circuits. The first heuristic in particular achieves circuit areas very close to optimal, usually within 1%. As the runtimes show, the heuristic techniques are efficient and produce high-quality solutions relative to the ILP.

Table 4
Leakage power change for self-compensating designs and two heuristic-based optimizations at 0.4 μm defocus compared to original library at 0 μm defocus

At 0.4 μm defocus | c432 | c499 | c880 | c1355 | c1908 | c2670 | c3540 | c5315 | c6288 | c7552 | Avg.
Self-compensated (%) | 5.1 | 5.7 | 5.5 | 5.6 | 5.5 | 5.0 | 5.5 | 5.5 | 5.3 | 5.4 | 5.4
Single-pitched (%) | 6.6 | 6.9 | 7.0 | 6.7 | 6.2 | 5.9 | 6.8 | 6.2 | 6.4 | 5.9 | 6.5
heu1 (area) (%) | 31.4 | −4.8 | −36.3 | 11.3 | −6.5 | −36.5 | −34.5 | 17.4 | −10.5 | −33.6 | −10.3
heu2 (leakage) (%) | −25.7 | 0.6 | −36.9 | 14.8 | −22.2 | −37.6 | −37.2 | −36.6 | −25.9 | −39.0 | −24.6

Table 5
Normalized area and gate distributions for each library and optimization approach

Benchmark | Total gates | Area: Orig. | Dense | Iso | Self-comp. | Single-pitch | Heuristic1 (area) | Heuristic2 (leakage) | ILP | Gates dense/iso: heu1 | heu2 | ILP | Runtime (s): heu1 | ILP
c432 | 339 | 1.00 | 1.02 | 1.17 | 1.12 | 1.26 | 1.09 | 1.09 | 1.08 | 233/106 | 318/21 | 317/22 | 0.04 | 0.19
c499 | 682 | 1.00 | 1.00 | 1.17 | 1.11 | 1.27 | 1.00 | 1.02 | 1.00 | 581/101 | 569/113 | 584/98 | 0.09 | 1.70
c880 | 575 | 1.00 | 1.02 | 1.18 | 1.11 | 1.27 | 1.02 | 1.02 | 1.01 | 560/15 | 561/14 | 562/13 | 0.07 | 0.35
c1355 | 680 | 1.00 | 1.00 | 1.17 | 1.11 | 1.27 | 1.05 | 1.08 | 1.04 | 536/144 | 516/164 | 564/116 | 0.39 | 11.21
c1908 | 645 | 1.00 | 1.01 | 1.16 | 1.12 | 1.26 | 1.04 | 1.05 | 1.04 | 554/91 | 584/61 | 566/79 | 0.08 | 13.79
c2670 | 1040 | 1.00 | 1.01 | 1.15 | 1.11 | 1.25 | 1.05 | 1.05 | 1.04 | 1017/23 | 1020/20 | 1010/30 | 0.20 | 11.61
c3540 | 1313 | 1.00 | 1.01 | 1.17 | 1.10 | 1.27 | 1.01 | 1.01 | 1.01 | 1279/34 | 1287/26 | 1280/33 | 0.32 | 27.28
c5315 | 2028 | 1.00 | 1.00 | 1.16 | 1.11 | 1.27 | 1.01 | 1.01 | 1.00 | 1490/538 | 1978/50 | 1981/47 | 1.51 | 29.30
c6288 | 4102 | 1.00 | 1.00 | 1.16 | 1.11 | 1.26 | 1.06 | 1.07 | 1.05 | 3631/471 | 3820/282 | 3693/409 | 7.80 | 913.32
c7552 | 2700 | 1.00 | 1.00 | 1.15 | 1.11 | 1.25 | 1.01 | 1.01 | 1.00 | 2610/90 | 2658/42 | 2648/52 | 2.02 | 358.03
Average | | 1.00 | 1.01 | 1.16 | 1.11 | 1.27 | 1.03 | 1.04 | 1.02 | | | | |

Fig. 19. Leakage histograms for unoptimized, TLB optimized and CLB optimized AES designs.

[Fig. 20 plot: line width (nm) vs. defocus (μm), −0.4 to 0.4 μm, for line spacings from 0.18 μm to 0.48 μm.]

Fig. 20. Linewidth variation with defocus level (nominal linewidth = 130 nm).

Table 4 shows the change in leakage power at the worst defocus condition compared to the original library at perfect focus for several self-compensating design options. Both self-compensated and single-pitched designs show modest leakage increases at worst-case defocus (5.4% and 6.5% on average, never above 7%). The area-driven dense+iso optimization shows 10% less leakage than the nominal case at 0.4 μm defocus, although the results for this case vary widely. As expected, the leakage-driven optimization shows 25% less leakage than the original circuit and 15% less than heuristic 1, since leakage is directly accounted for in this formulation.

In conclusion, this paper proposes a novel design technique to compensate for lithographic focus-dependent CD variation. The idea is to judiciously instantiate isolated and dense versions of library cells in a circuit to effectively negate the impact of expected focus variations.
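The compensation principle of Sections 4.4.1 and 4.4.2 can be illustrated with an assumed quadratic Bossung model, CD(f) = CD0 + a·f², where a is positive for dense lines (“smile”) and negative for isolated lines (“frown”). The coefficients are hypothetical, and gate delay is assumed to track total gate length along a series stack or critical path. Pairing a dense and an iso device largely cancels the focus-dependent shift that two devices of the same type would accumulate.

```python
# Assumed quadratic Bossung model CD(f) = CD0 + a * f**2: a > 0 for dense lines
# ("smile"), a < 0 for isolated lines ("frown"). Coefficients are hypothetical.
CD0 = 130.0                          # nominal linewidth in nm, as in Fig. 20
A = {"dense": 60.0, "iso": -55.0}    # nm per um**2 of defocus (assumed)

def linewidth(defocus_um, style):
    """Printed linewidth (nm) of a dense or iso line at the given defocus (um)."""
    return CD0 + A[style] * defocus_um ** 2

def stack_length(defocus_um, styles):
    """Total gate length of a series stack or path; delay is assumed to track it."""
    return sum(linewidth(defocus_um, s) for s in styles)

def worst_shift(styles, f_max=0.4, steps=9):
    """Largest |change| in total length over a defocus grid in [-f_max, f_max] (um)."""
    nominal = stack_length(0.0, styles)
    grid = [-f_max + 2 * f_max * i / (steps - 1) for i in range(steps)]
    return max(abs(stack_length(f, styles) - nominal) for f in grid)

if __name__ == "__main__":
    for styles in (["dense", "dense"], ["iso", "iso"], ["dense", "iso"]):
        print(styles, round(worst_shift(styles), 2), "nm")
```

Exact cancellation would require matched curvatures; in practice the optimizer chooses the dense/iso mix per path, as in Table 5.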

5. Conclusions and future technology outlook

This paper has reviewed recent work in the analysis and mitigation of variability in modern ICs. There is a significant amount of effort in both industry and academia in this area due to its potentially showstopping nature. The key outcomes of growing process variability in nanometer-scale CMOS are: (1) a trend towards parametric yield as the governing objective function, rather than timing or power in isolation, (2) a push towards circuits and CAD techniques that focus on robustness as much as nominal performance, and (3) a need for more cooperation and common understanding across the device, circuit, CAD, and architecture levels of the integrated circuit design process.

The remainder of this section highlights future trends in design and CAD efforts to cope with variability. Particular emphasis is placed on re-examining the emerging role of statistical techniques in nanometer-scale CMOS design, and on the injection of manufacturing information into the signoff process so that designs better reflect reality.

5.1. Statistical CAD

It is well known that traditional corner-case-based design approaches lead to overly pessimistic guard-banding and are ineffective at coping with today's levels of variation. However, the emerging statistical techniques that have been proposed are almost universally based on concepts developed in statistical static timing analysis (SSTA) [12,13,49]. Conventional thinking is that SSTA will provide better timing estimation as well as form the underlying engine for optimization capabilities (e.g., gate sizing, dual-Vth assignment). However, current SSTA formulations rely on a number of significant simplifications, such as the assumption of normal delay and arrival time distributions and approximations of correlations. In addition, a number of modeling issues remain to be addressed in SSTA, including the dependence of delay on load and slope, the interaction between interconnect and gate delays under process variations, and the impact of noise on delay. Finally, sensitivity computation based on SSTA has proven to be expensive, and optimization of complex parameters such as discrete Vth assignment still poses significant difficulties. Given that commercial SSTA tools have been available for several years and there is very little (or no) experimental data pointing to their superiority, one can reasonably conclude that designer buy-in is currently lacking.

Therefore, from a practical perspective, it can be argued that existing statistical methods still require a significant amount of improvement, refinement, and innovation before they are suitable for developing mainstream products. Deterministic methods (i.e., algorithms and tools), on the other hand, are already well established and widely used in industry. To provide an alternative path toward a variation-aware design technology infrastructure, we suggest a new design optimization framework for statistical analysis and optimization that uses known deterministic optimization procedures. This approach first statistically samples the process space and then uses deterministic approaches to optimize the design at each sampled process condition. To maximize the runtime efficiency of the proposed framework, variation-space sampling concepts can be applied, and the deterministic optimization in the inner loop of the method can be tailored for speed. After this deterministic optimization has been repeated across multiple process sample points, probability density functions (PDFs) and correlations of the optimal design parameters (such as Vth or width) are computed for all gates in the circuit. These PDFs are then used to finalize the gate-level design parameters and thus guide the statistical optimization.

Fig. 21. Proposed design optimization framework considering variability.

The general philosophy behind this framework is to learn from optimization outcomes under varying process-variation scenarios and use this knowledge to fix device parameters at design time so as to maximize circuit yield. The overall framework is summarized in Fig. 21. Complex objectives, such as forming optimized clusters of gates for post-silicon tuning using ABB [35], can be accomplished effectively within this framework; such goals would be extremely difficult to achieve with traditional SSTA frameworks. In essence, this discussion points to a future built on Monte Carlo (MC)-based SSTA (and accompanying optimization) rather than on ‘traditional’ approaches that propagate timing (or power) distributions through circuits. The advantages of MC-based SSTA are numerous and significant:

• built on proven STA technology, easing integration into the flow and ensuring designer buy-in
• accurate: given a large enough sample set, it is essentially the golden model
• no simplifying assumptions are needed to propagate delay distributions
• trivially parallelizable, so runtime can be made very reasonable
• can leverage variance reduction strategies to reduce the number of MC samples required

The latter two points are important for driving statistical optimization built on such an MC-based SSTA approach. In general, the future of statistical CAD is still unclear; research into MC-based SSTA should be pursued as an alternative path to more conventional SSTA approaches that rely on propagating delay distributions.
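A minimal sketch of the sample-then-optimize framework of Fig. 21 follows. Everything concrete in it is a hypothetical assumption made for illustration: the six-gate chain, its delays and leakage costs, the variation magnitudes, and the greedy dual-Vth heuristic standing in for "a known deterministic optimizer". Latin hypercube sampling of the global (die-to-die) component is one example of the variation-space sampling mentioned above. For a binary parameter such as Vth, each gate's "PDF" reduces to the probability that the per-sample optimizer picks low Vth.

```python
import random
from statistics import NormalDist

# Hypothetical toy circuit: a chain of six gates, so the critical path is the whole chain.
HVT_DELAY = [1.0, 1.4, 0.8, 1.2, 1.0, 0.9]      # nominal high-Vth delays (arbitrary units)
LVT_LEAK_COST = [5.0, 4.0, 6.0, 9.0, 5.0, 3.0]  # assumed leakage penalty for going low-Vth
LVT_SPEEDUP = 0.75                              # assumed: a low-Vth gate is 25% faster
DELAY_TARGET = 6.0


def latin_hypercube_normal(n, rng):
    """One standard-normal draw from each of n equal-probability strata, in random order."""
    nd = NormalDist()
    probs = [min(max((i + rng.random()) / n, 1e-12), 1 - 1e-12) for i in range(n)]
    rng.shuffle(probs)
    return [nd.inv_cdf(p) for p in probs]


def optimize_at_sample(scale):
    """Deterministic inner loop: greedy dual-Vth assignment for one process sample.

    Starts with every gate at high Vth and repeatedly switches the gate with the
    best delay-saved / leakage-cost ratio until the chain meets DELAY_TARGET.
    """
    lvt = [False] * len(HVT_DELAY)

    def delay(i):
        return HVT_DELAY[i] * scale[i] * (LVT_SPEEDUP if lvt[i] else 1.0)

    while sum(delay(i) for i in range(len(lvt))) > DELAY_TARGET:
        candidates = [i for i in range(len(lvt)) if not lvt[i]]
        if not candidates:  # target unreachable at this process sample
            break
        best = max(candidates, key=lambda i: delay(i) * (1 - LVT_SPEEDUP) / LVT_LEAK_COST[i])
        lvt[best] = True
    return lvt


def sample_and_optimize(n_samples=500, sigma_global=0.08, sigma_local=0.04, seed=1):
    """Outer loop: optimize at each sampled process point and tally per-gate outcomes."""
    rng = random.Random(seed)
    n_gates = len(HVT_DELAY)
    lvt_count = [0] * n_gates
    for z_global in latin_hypercube_normal(n_samples, rng):
        scale = [max(0.1, 1 + sigma_global * z_global + sigma_local * rng.gauss(0, 1))
                 for _ in range(n_gates)]
        for i, is_lvt in enumerate(optimize_at_sample(scale)):
            lvt_count[i] += is_lvt
    # Empirical P(gate is low-Vth in the per-sample optimum).
    return [c / n_samples for c in lvt_count]


def finalize(p_lvt, threshold=0.5):
    """Fix each gate's Vth at design time from its empirical distribution."""
    return ["LVT" if p >= threshold else "HVT" for p in p_lvt]


if __name__ == "__main__":
    p = sample_and_optimize()
    print([round(x, 2) for x in p])
    print(finalize(p))
```

A production flow would swap the greedy loop for an existing sizing or Vth-assignment engine and replace the fixed 0.5 threshold with a yield-driven rule; the cross-gate correlations that the framework also computes are omitted here for brevity.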

5.2. Adaptive design

The functional correctness of designs, as well as circuit parametric yield, is endangered by uncertainty. As described above, statistical CAD tools have been proposed as a way to improve parametric yield and assist in timing verification. Much of the variability, however, is systematic rather than random and exhibits strong spatial correlations. In that case, statistical techniques may not be sufficient to maintain good yields going forward, particularly if physical tolerances are relaxed due to cost pressures. An alternative and orthogonal approach is the use of adaptive circuit fabrics that seek to guarantee and enforce a specified behavior in circuits even in the presence of underlying process variation. These techniques rely on sensing the process and environmental conditions and adjusting certain circuit properties to keep the design within its specifications. The area of adaptive circuit techniques is relatively new, and approaches to date have been ad hoc, not automated, and not sufficiently general. In particular, a formal approach is required that would provide automated circuit synthesis onto adaptive circuit fabrics. The desired outcome in this case would be a methodology that preserves the foundations of a traditional design flow, i.e., you get what you designed. The synthesis tools themselves would insert sufficient adaptivity to guarantee specified power/performance/yield levels. This adaptivity can take the form of known but largely unused techniques, such as ABB and adaptive supply voltages, or can leverage newly developed approaches (e.g., adaptive power gating [50] and sizing [51]).

[Figure: ElastIC array of processing elements (PEs: simple architecture, DVS, tunable FFs) with sensors/tuning structures, L1/L2/L3 memory, and a central DAP unit; PE states shown: nominal operation, boosted operation, timing yield enhanced, healing/recovery, sleep state, testing/diagnosis, failed element.]

Fig. 22. Overview of ElastIC architecture showing various PE operation modes [56].

¹ Since the performance requirements of the DAP unit itself are modest, it can operate at low voltage and frequency and use aggressive built-in redundancy to render the unit effectively immune to failure.
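The sense-and-adjust behavior of adaptive fabrics can be illustrated with adaptive body bias (ABB). The sketch below is hypothetical: it assumes first-order models in which body bias shifts Vth linearly, normalized path delay grows linearly with the Vth shift, and subthreshold leakage changes by one decade per 100 mV of Vth. Given a sensed per-die Vth shift, the controller picks the body-bias step that meets the delay target with the least leakage, so forward bias speeds up slow dies while reverse bias reins in leakage on fast ones.

```python
# Hypothetical first-order device models (assumptions, not data from this paper).
BODY_BIAS_STEPS_V = [-0.4, -0.3, -0.2, -0.1, 0.0, 0.1, 0.2, 0.3, 0.4]  # > 0 means forward bias
VTH_SHIFT_PER_VBB = 0.1     # assumed: each volt of forward bias lowers Vth by 100 mV
DELAY_PER_VTH_V = 2.0       # assumed: +10 mV of Vth slows the path by 2%
SUBTHRESHOLD_SWING_V = 0.1  # assumed: leakage changes one decade per 100 mV of Vth


def path_delay(dvth):
    """Critical-path delay normalized to nominal, as a replica-path sensor might report it."""
    return 1.0 + DELAY_PER_VTH_V * dvth


def leakage(dvth):
    """Leakage normalized to nominal; exponential in the Vth shift."""
    return 10 ** (-dvth / SUBTHRESHOLD_SWING_V)


def choose_body_bias(sensed_dvth, delay_target=1.0):
    """Return (body bias, meets_target), minimizing leakage subject to the delay target."""
    feasible = []
    for vbb in BODY_BIAS_STEPS_V:
        dvth = sensed_dvth - VTH_SHIFT_PER_VBB * vbb
        if path_delay(dvth) <= delay_target:
            feasible.append((leakage(dvth), vbb))
    if not feasible:
        return max(BODY_BIAS_STEPS_V), False  # strongest forward bias; die still too slow
    return min(feasible)[1], True


if __name__ == "__main__":
    for die, dvth in [("slow", 0.015), ("typical", 0.0), ("fast", -0.025), ("very slow", 0.1)]:
        print(die, choose_body_bias(dvth))
```

Exhaustive search over a handful of discrete bias steps is deliberate here: on-chip bias generators typically offer only a few levels, so a small lookup is cheaper than a continuous optimizer.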

In addition to CAD tools for adaptive design, different system architectures can help designers deal with rampant variability. Besides extreme parametric variations (e.g., due to random dopant fluctuations and line-edge roughness), aggressively scaled CMOS exhibits accelerated wear-out mechanisms (such as oxide breakdown and negative bias temperature instability, or NBTI [52,53]) and high manufacturing device failure rates (expected to reach one in thousands or even one in hundreds [54,55]). Such unpredictable silicon poses a fundamental barrier to reliable computing in future technologies. To address these challenges, a new architecture is needed that incorporates very aggressive runtime self-diagnosis, adaptivity, and self-healing.

One such architecture, dubbed ElastIC [56], combines circuit-level, microarchitectural, and system-level techniques and is conceptually depicted in Fig. 22. It consists of four key components. (1) A large number (tens to hundreds) of small, simple processing elements (PEs) that are extremely adaptive, equipped with reliability, performance, and power monitors, and individually tunable through voltage/frequency scaling, bias levels, and dynamic soft cycle boundaries. (2) A central diagnostic and adaptivity processing (DAP) unit. During runtime, the DAP unit takes each PE off-line in turn to perform detailed diagnostics of parametric variations and wear-out, using automatic test pattern generation (ATPG) methods coupled with in-situ reliability monitors in each core. The DAP unit tracks the degradation of individual devices or small clusters of devices due to wear-out mechanisms. It can then initiate active healing, taking advantage of the reversibility of several wear-out mechanisms, and tune the PE to optimally compensate for parametric shifts.¹ (3) A corresponding memory and interconnect system that uses integrated error codes and redundancy to address both functional failures and parametric shifts. (4) A system-level scheduler that uses up-to-date reliability degradation data and the frequency/power trade-offs of individual PEs and memory elements to maximize global system performance under workload constraints.

[Figure: yield (0 to 1) versus design rule dimension (spacing), marking S_nom and S_knee and contrasting a conventional design rule with a flexible design rule.]

Fig. 23. General concept of flexible design rules showing additional manufacturable design space in the shaded region.
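To make the division of labor between the ElastIC DAP unit and the system-level scheduler concrete, here is a small sketch. It is hypothetical and not the scheduler of [56]: the PE record fields, the round-robin diagnosis order, and the greedy throughput-per-watt heuristic (which is not guaranteed optimal) are all illustrative assumptions. Failed PEs and the PE currently off-line for diagnosis are excluded, and the remaining PEs are enabled at their DAP-certified frequency until a power budget is exhausted.

```python
from dataclasses import dataclass


@dataclass
class PE:
    """Hypothetical per-PE record maintained by the DAP unit."""
    name: str
    fmax_ghz: float   # latest frequency certified after diagnosis and tuning
    power_w: float    # power drawn at that frequency
    failed: bool = False


def dap_rotation(pes, epoch):
    """Round-robin choice of the PE that the DAP unit takes off-line in this epoch."""
    healthy = [p.name for p in pes if not p.failed]
    return healthy[epoch % len(healthy)] if healthy else None


def schedule(pes, power_budget_w, offline=None):
    """Greedily enable healthy, on-line PEs by throughput per watt within the budget."""
    candidates = [p for p in pes if not p.failed and p.name != offline]
    candidates.sort(key=lambda p: p.fmax_ghz / p.power_w, reverse=True)
    active, used_w = [], 0.0
    for p in candidates:
        if used_w + p.power_w <= power_budget_w:
            active.append(p.name)
            used_w += p.power_w
    return active


if __name__ == "__main__":
    pes = [PE("pe0", 1.0, 1.0), PE("pe1", 0.8, 0.5), PE("pe2", 1.2, 2.0),
           PE("pe3", 1.5, 1.0, failed=True)]
    for epoch in range(3):
        offline = dap_rotation(pes, epoch)
        print(epoch, offline, schedule(pes, power_budget_w=2.0, offline=offline))
```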

While redundancy is an important weapon against functional failure and wear-out, its overheads are too large for it to be applied blindly (e.g., triple modular redundancy, or TMR). Intelligent approaches such as [57] are being developed to greatly reduce this overhead while maintaining very high coverage of potential runtime faults. Significant further work is needed in this area to bring coverage to acceptable levels, i.e., >99.9%.
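The cost of blind redundancy is easy to quantify for TMR. Three replicas plus a 2-of-3 majority voter roughly triple area and power, and if module failures are independent and the voter is perfect, the system is correct whenever at least two modules are, giving R_TMR = 3R^2 - 2R^3 for module reliability R. The sketch below is a textbook illustration, not the approach of [57]; it implements the bitwise voter and this formula, and shows that TMR only helps when R > 0.5.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 majority of three replicated module outputs."""
    return (a & b) | (a & c) | (b & c)


def tmr_reliability(r: float) -> float:
    """P(system correct) for independent module reliability r and a perfect voter:
    all three modules correct, or exactly two correct."""
    return r ** 3 + 3 * r ** 2 * (1 - r)  # equals 3r^2 - 2r^3


if __name__ == "__main__":
    # The third replica has one flipped bit; the voter masks it.
    print(bin(majority_vote(0b1010, 0b1010, 0b1000)))
    for r in (0.99, 0.9, 0.5, 0.3):
        print(f"module R = {r:.2f} -> TMR R = {tmr_reliability(r):.4f}")
```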

5.3. Post-lithography simulation

Rising design and manufacturing costs for ICs are a key challenge to the semiconductor industry [1]. In particular, traditional design rules have become increasingly muddled as maintaining a balance between area/performance and yield becomes more difficult. Overly restrictive design rule sets with cryptic recommended rules limit the performance gains achieved in new technologies. Moreover, expensive masks make first-time-right silicon even more crucial. Design for manufacturability (DFM) is often broadly defined, but primarily pertains to tools and techniques that bring manufacturing awareness into the design flow, such as yield-aware routers [58] and placers that consider phase-shift mask restrictions [59]. Despite much recent DFM work, vital components of a true DFM-based design flow remain unaddressed. For instance, final timing analysis using drawn dimensions points to different critical paths than analysis that includes post-OPC printed image dimensions [60], yet no signoff capabilities based on post-lithography simulation data exist. Design optimization methods are also constrained by the delay-leakage tradeoffs of existing standard cells, leaving a large portion of the design space unexploited. Innovative methods for changing the length and shape of the channel are required; such methods can generate cells with a wide variety of delay-leakage tradeoffs and thus provide optimization algorithms with a large range of choices. Finally, real deployment of any research outcomes cannot rely on simulated results alone: silicon validation must be obtained.

Key components of a “true DFM” flow would include the ability to work with realistic shapes (i.e., non-rectangular transistors) in both the analysis and optimization domains (beyond what is described in Section 3.3 and [25–29,31]), and to move beyond the overly simplified 0/1 design rule paradigm (see Fig. 23). In addition, there is a clear need for new canonical test element groups that support these tools and enable new capabilities for characterizing and modeling process variability.
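The contrast between the 0/1 design rule paradigm and a flexible rule (Fig. 23) can be sketched as two checks on a single spacing value. In the sketch below, the 100 nm nominal rule, the logistic yield-versus-spacing curve, and the 99% acceptance threshold are hypothetical placeholders; in a real DFM flow the curve would come from lithography simulation and silicon characterization. The conventional check rejects everything below S_nom, while the flexible check accepts any spacing whose modeled yield stays above the threshold, exposing additional design space below S_nom.

```python
import math

S_NOM_NM = 100.0  # hypothetical conventional minimum spacing


def conventional_rule(spacing_nm):
    """0/1 paradigm: a layout either meets the minimum spacing or it is illegal."""
    return spacing_nm >= S_NOM_NM


def yield_model(spacing_nm, s50_nm=60.0, width_nm=4.0):
    """Hypothetical logistic yield-vs-spacing curve (stand-in for a litho-simulated model)."""
    return 1.0 / (1.0 + math.exp(-(spacing_nm - s50_nm) / width_nm))


def flexible_rule(spacing_nm, min_yield=0.99):
    """Accept any spacing whose modeled yield meets min_yield; also report the yield."""
    y = yield_model(spacing_nm)
    return y >= min_yield, y


if __name__ == "__main__":
    for s in (70.0, 80.0, 90.0, 100.0):
        ok, y = flexible_rule(s)
        print(f"{s:5.1f} nm  conventional={conventional_rule(s)}  flexible={ok} (yield {y:.4f})")
```

Multiplying such per-feature yields across all instances in a layout (assuming independent failures) would give a design-level estimate that a router or compactor could trade against area.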

References

[1] International Technology Roadmap for Semiconductors, www.itrs.net, 2007.

[2] S. Narendra, Leakage issues in IC design: trends, estimation, and avoidance, in: ACM/IEEE International Conference on Computer-Aided Design, 2003 (full-day tutorial).

[3] S. Borkar, T. Karnik, S. Narendra, J. Tschanz, A. Keshavarzi, V. De, Parameter variation and impact on circuit and micro-architecture, in: ACM/IEEE Design Automation Conference, 2003, pp. 338–342.

[4] R.M. Rao, K. Agarwal, A. Devgan, K. Nowka, D. Sylvester, R. Brown, Parametric yield analysis and constrained-based supply voltage optimization, in: ACM/IEEE International Symposium on Quality Electronic Design, 2005, pp. 284–290.

[5] D. Burnett, K. Erington, C. Subramanian, K. Baker, Implications of fundamental threshold voltage variations for high-density SRAM and logic circuits, in: IEEE Symposium on VLSI Technology, 1994, pp. 15–16.

[6] B. Cheng, S. Roy, A. Asenov, The impact of random doping effects on CMOS SRAM cell, in: IEEE European Solid State Circuits Conference, 2004, pp. 219–222.

[7] M. Pelgrom, A. Duinmaijer, A. Welbers, Matching properties of MOS transistors, IEEE J. Solid State Circuits (1989) 1433–1440.

[8] T. Mizuno, J. Okamura, A. Toriumi, Experimental study of threshold voltage fluctuation due to statistical variation of channel dopant number in MOSFETs, IEEE Trans. Electron Devices (1994) 2216–2221.

[9] K. Lakshmikumar, R. Hadaway, M.A. Copeland, Characterization