Papageorgiou SN. Meta-analysis for orthodontists: Part I – How to choose effect measure and...


Meta-analysis for orthodontists: Part I – How to choose effect measure and statistical model

Spyridon N. Papageorgiou¹,²

¹Department of Orthodontics; ²Department of Oral Technology, School of Dentistry, University of Bonn, Bonn, Germany

Systematic reviews ideally provide a comprehensive and unbiased summary of existing evidence from clinical studies, whilst meta-analysis combines the results of these studies to produce an overall estimate. Collectively, this makes them invaluable for clinical decision-making. Although the number of published systematic reviews and meta-analyses in orthodontics has increased, questions are often raised about their methodological soundness. In this primer, the first steps of meta-analysis are discussed, namely the choice of an effect measure to express the results of included studies, and the choice of a statistical model for the meta-analysis. Clinical orthodontic examples are given to explain the various options available, the thought process behind the choice between them and their interpretation.

Key words: Effect measure, orthodontics, meta-analysis, fixed-effect, random-effects

Received 2 March 2014; accepted 5 June 2014

Introduction

Systematic reviews qualitatively summarise the results of multiple studies, providing the highest grade of evidence for developing clinical guidelines. Meta-analysis goes one step further, to the quantitative synthesis of studies in order to provide an overall summary. Although the number of published systematic reviews and meta-analyses in orthodontics has increased, questions have been raised about the methodological soundness of some of them.

In this paper, the first steps of meta-analysis will be discussed in the context of: (1) the choice of an effect measure to express the results of included studies and (2) the selection of an appropriate model for subsequent statistical synthesis. For readers not familiar with meta-analysis, a short description of the forest plot and of between-study heterogeneity will also be given. The paper covers meta-analyses that compare two groups: either an intervention group versus a placebo/control group, or an intervention group versus another intervention group. Meta-analyses of data from a single group (i.e. event rates, means of groups, etc.), meta-analyses of diagnostic accuracy and network meta-analyses will not be covered. Furthermore, emphasis will be placed on basic principles, which can be easily applied in the freely available program Review Manager (RevMan) (Review Manager, 2012) from the Cochrane Collaboration.

It is beyond the scope of this article to describe in detail the stages of a systematic review and when it is sensible to perform a meta-analysis or not. Interested readers should refer to the Cochrane Handbook for Systematic Reviews of Interventions (Higgins and Green, 2011) and to the PRISMA statement (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) (Liberati et al., 2009), where further details can be found on how to conduct and report systematic reviews and meta-analyses, respectively.

Effect measures

The first step in meta-analysis is to identify all relevant studies evaluating the same measure in order to directly compare and eventually synthesise them. There is a wide array of effect measures that can be used, but this paper will focus on five of the most common:

(i) those for continuous outcomes (such as ANB angle): mean difference (MD) (also known as difference in means) and standardised mean difference (SMD),

(ii) those for binary (dichotomous) outcomes (such as bracket failure): odds ratio (OR), relative risk (RR) (also known as risk ratio) and risk difference (RD) (also known as absolute risk reduction).

Continuous outcomes

Effect measures. If all included studies measure the same continuous outcome and use the same measurement scale, then the obvious effect measure to use is the MD, which is the mean of the intervention group minus the mean of the control group in each study (or vice versa):

$$\mathrm{MD} = \mathrm{Mean}_{\text{intervention}} - \mathrm{Mean}_{\text{control}}$$

The MD has the same units as the outcome measured.

INVITATION TO SUBMIT Journal of Orthodontics, Vol. 41, 2014, 317–326

Address for correspondence: Spyridon N. Papageorgiou, DDS, Department of Orthodontics, School of Dentistry, University of Bonn, Welschnonnenstr. 17, 53111, Bonn, Germany.

Email: [email protected]

© 2014 British Orthodontic Society DOI 10.1179/1465313314Y.0000000111

If all included studies measure the same continuous outcome, but do not use the same measurement scale, then there are two options. The first is to convert the data, so that all results are on the same scale. If this is not possible, then the SMD can be used. This is the MD divided by the pooled standard deviation, so that it is expressed in standard-deviation units. There are many standardised effect measures (Cohen’s d, Hedges’ g, Glass’s d, etc.) (Borenstein et al., 2008), but this paper will focus on Hedges’ g, which includes an adjustment for small-sample bias and is used by default in RevMan. The SMD does not have the same units as any of the original scales, but is measured in standardised measurement units. Both the MD and SMD are absolute comparative measures and, if MD = 0 or SMD = 0, then there is no difference between the two compared groups.

$$\mathrm{SMD} = \frac{\mathrm{Mean}_{\text{intervention}} - \mathrm{Mean}_{\text{control}}}{\text{Pooled standard deviation of both groups}}$$
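As a rough illustration of these two formulas, the sketch below computes the MD and a small-sample-adjusted SMD (Hedges’ g) from group summary statistics. It assumes the commonly used correction factor J = 1 − 3/(4·df − 1) with df = n₁ + n₂ − 2 (as described by Borenstein et al., 2008); the function names and the trial numbers are hypothetical, and RevMan’s own implementation may differ in detail.

```python
import math


def mean_difference(mean_int: float, mean_ctrl: float) -> float:
    """MD = mean of the intervention group minus mean of the control group."""
    return mean_int - mean_ctrl


def hedges_g(mean_int: float, sd_int: float, n_int: int,
             mean_ctrl: float, sd_ctrl: float, n_ctrl: int) -> float:
    """SMD as Hedges' g: MD divided by the pooled SD, times a small-sample correction."""
    df = n_int + n_ctrl - 2
    sd_pooled = math.sqrt(((n_int - 1) * sd_int ** 2 + (n_ctrl - 1) * sd_ctrl ** 2) / df)
    d = mean_difference(mean_int, mean_ctrl) / sd_pooled  # unadjusted SMD
    correction = 1 - 3 / (4 * df - 1)                      # shrinks |d| slightly in small trials
    return d * correction


# Hypothetical VAS trial: wafer group mean 30 mm (SD 20, n 25), control 40 mm (SD 20, n 25)
md = mean_difference(30.0, 40.0)
g = hedges_g(30.0, 20.0, 25, 40.0, 20.0, 25)
print(md)           # -10.0
print(round(g, 3))  # -0.492
```

With 25 patients per group the correction is small (the unadjusted value would be −0.5); it matters more in very small trials.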

Interpretation. In order to illustrate the differences between these two measures, suppose we have a meta-analysis of four randomised controlled trials, where patients are allocated either to a bite-wafer group or to a non-wafer group during debonding, and perception of pain is measured by means of a questionnaire. Let us say that all the trials measured pain with a Visual Analogue Scale (VAS) (Huskisson, 1974), where a horizontal 100 mm line is drawn with ‘no pain’ on the left and ‘worst pain imaginable’ on the right, and the patients mark their pain experience on this line. If we find an MD (wafer minus control) of −10 mm, this means that ‘on average, patients allocated to the wafer group experienced 10 mm less pain on the VAS during debonding compared to patients allocated to the control group’ or alternatively ‘on average, the bite-wafer reduced pain during debonding by 10 mm on the VAS’. Note the words ‘on average’. They mean that we are talking about differences in means (Figure 1) and that not all the wafer patients experienced less pain than the control patients (hence the overlap between the curves).

However, if two of the four trials measured pain with a VAS (in mm) and the other two trials measured pain with a Likert scale (Likert, 1932) (where pain is rated on a 5-level scale with a maximum score of 5), then the MD cannot be used. In this case, the SMD can be used for the meta-analysis. If, by combining all four trials using the SMD as a measure, we find an SMD (wafer minus control) of −0.5, this means that ‘on average, patients allocated to the wafer group experienced less pain during debonding by 0.5 standardised measurement units compared to the patients allocated to the control group’.

The interpretation of the effect measure will depend upon several factors, including the direction of the scale (higher VAS = more pain), the length of the scale (for VAS, 0 to 100 mm), the minimally important difference (clinical judgement) and whether the outcome is beneficial (for example, mandibular growth) or harmful (for example, pain). The interpretation of the SMD is not straightforward and it often makes sense to back-transform the resulting SMD into one of the original scales to communicate the results (here, either the VAS or the Likert scale).
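One common way to back-transform, described in the Cochrane Handbook, is to multiply the SMD by a typical standard deviation of the chosen original scale (for example, the control-group SD of a representative trial). The sketch below assumes such an SD of 20 mm on the VAS and 1 point on the Likert scale; both values, and the function name, are hypothetical.

```python
def smd_to_original_units(smd: float, typical_sd: float) -> float:
    """Re-express an SMD in the units of a familiar scale by multiplying by that scale's SD."""
    return smd * typical_sd


pooled_smd = -0.5         # pooled SMD from the fictional wafer meta-analysis
vas_sd_mm = 20.0          # hypothetical typical SD of VAS scores (mm)
likert_sd_points = 1.0    # hypothetical typical SD of Likert scores (points)

print(smd_to_original_units(pooled_smd, vas_sd_mm))         # -10.0 (mm on the VAS)
print(smd_to_original_units(pooled_smd, likert_sd_points))  # -0.5 (points on the Likert scale)
```

The back-transformed value is only as good as the SD chosen, so that choice should be reported alongside the result.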

Figure 1 Illustration of the mean difference based on the described fictional bite-wafer trial. The left distribution depicts the pain experienced during debonding by the patients in the wafer group and the right distribution depicts the pain experienced during debonding by the patients in the control group

Data needed. For any continuous outcome, regardless of whether the MD or the SMD is to be used, the following data are needed: the sample size, the mean and a measure of variation (standard deviation or standard error) for both the intervention and the control group. Many other data formats can be used for data input in meta-analysis (P value, t value, median, interquartile range, MD, ratio of means, ratio of geometric means), but these go beyond the scope of this article. It is also important to know that the SMD does not correct for different directions among scales. If the scales used run in different directions, you have to align the direction of the results by multiplying the means of the reversed scale by −1. Finally, conventional meta-analysis methods rely on the broad assumption that the results are normally distributed. If concrete indications of skewness are found, it is advisable to get formal statistical advice before proceeding.
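Using exactly the data listed above (sample size, mean and SD per group), the sketch below derives the MD together with its standard error, SE = √(SD²ᵢₙₜ/nᵢₙₜ + SD²꜀ₜᵣₗ/n꜀ₜᵣₗ), and a normal-approximation 95% CI. The optional sign flip shows how results from a scale running in the opposite direction can be aligned before pooling. The function name, its parameters and the numbers are hypothetical illustrations, not RevMan’s interface.

```python
import math


def md_with_ci(mean_int, sd_int, n_int, mean_ctrl, sd_ctrl, n_ctrl,
               reverse_scale=False, z=1.96):
    """Return (MD, SE, 95% CI) from per-group summary data.

    reverse_scale=True multiplies the MD by -1, which is equivalent to multiplying
    both group means by -1 for a scale that runs in the opposite direction.
    The SE is unaffected by the sign flip.
    """
    md = mean_int - mean_ctrl
    if reverse_scale:
        md = -md
    se = math.sqrt(sd_int ** 2 / n_int + sd_ctrl ** 2 / n_ctrl)
    return md, se, (md - z * se, md + z * se)


md, se, ci = md_with_ci(30.0, 20.0, 25, 40.0, 20.0, 25)
print(md, round(se, 2), [round(x, 2) for x in ci])  # -10.0 5.66 [-21.09, 1.09]
```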

Binary outcomes

Effect measures. For binary outcomes, the three most widely used measures are the OR, the RR and the RD. In order to understand these three measures, we need to introduce the concepts of odds and risks. Suppose we have a fictional randomised controlled trial, where 100 patients with a Class II malocclusion and increased overjet are allocated either to early-phase Twin Block treatment or to an untreated control group (to be treated comprehensively later). The measured outcome is the incidence of new incisal trauma, recorded as trauma or no trauma. We can see the results of this trial in the form of a 2×2 contingency table, which provides the standard data needed to input binary outcomes for meta-analysis (Table 1) and includes the events and sample size of each group. According to the results, 12/50 (24%) of the treated patients and 24/50 (48%) of the control patients experienced some kind of incisal trauma during the follow-up.

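If we express the trauma experience in both groups with odds, then:

• The odds of trauma in the Twin Block group are:

$$\mathrm{Odds}_{\text{Twin Block}} = \frac{\mathrm{Events}_{\text{Twin Block}}}{\text{No-events}_{\text{Twin Block}}} = \frac{12}{38} = 0.316 \ \text{or}\ 31.6\%$$

• The odds of trauma in the control group are:

$$\mathrm{Odds}_{\text{control}} = \frac{\mathrm{Events}_{\text{control}}}{\text{No-events}_{\text{control}}} = \frac{24}{26} = 0.923 \ \text{or}\ 92.3\%$$

• And the OR is simply the ratio of the odds for the two groups:

$$\mathrm{OR} = \frac{\mathrm{Odds}_{\text{Twin Block}}}{\mathrm{Odds}_{\text{control}}} = \frac{0.316}{0.923} = 0.342$$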

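Expressed as risks (or probabilities), the same results will be:

• The risk of trauma in the Twin Block group is:

$$\mathrm{Risk}_{\text{Twin Block}} = \frac{\mathrm{Events}_{\text{Twin Block}}}{\mathrm{Sample}_{\text{Twin Block}}} = \frac{12}{50} = 0.240 \ \text{or}\ 24\%$$

• The risk of trauma in the control group is:

$$\mathrm{Risk}_{\text{control}} = \frac{\mathrm{Events}_{\text{control}}}{\mathrm{Sample}_{\text{control}}} = \frac{24}{50} = 0.480 \ \text{or}\ 48\%$$

• And the RR is simply the ratio of the risks for the two groups:

$$\mathrm{RR} = \frac{\mathrm{Risk}_{\text{Twin Block}}}{\mathrm{Risk}_{\text{control}}} = \frac{0.240}{0.480} = 0.500$$

• Finally, the RD is the difference of the risks for the two groups:

$$\mathrm{RD} = \mathrm{Risk}_{\text{Twin Block}} - \mathrm{Risk}_{\text{control}} = 0.240 - 0.480 = -0.240 \ \text{or}\ -24.0\%$$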
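The calculations above can be reproduced from the four cells of Table 1. The sketch below is a minimal illustration with a hypothetical helper name; it assumes no zero cells (meta-analysis software typically applies a continuity correction in that case).

```python
def binary_effects(events_int: int, n_int: int, events_ctrl: int, n_ctrl: int) -> dict:
    """OR, RR and RD from a 2x2 table (intervention vs control)."""
    odds_int = events_int / (n_int - events_int)      # events / no-events
    odds_ctrl = events_ctrl / (n_ctrl - events_ctrl)
    risk_int = events_int / n_int                     # events / sample size
    risk_ctrl = events_ctrl / n_ctrl
    return {
        "OR": odds_int / odds_ctrl,
        "RR": risk_int / risk_ctrl,
        "RD": risk_int - risk_ctrl,
    }


# Table 1: 12/50 with trauma in the Twin Block group, 24/50 in the control group
effects = binary_effects(12, 50, 24, 50)
for name in ("OR", "RR", "RD"):
    print(f"{name} = {effects[name]:.3f}")
# OR = 0.342
# RR = 0.500
# RD = -0.240
```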

Another illustrative measure for binary outcomes is the Number Needed to Treat (NNT); however, this cannot be used directly in the meta-analysis. Rather, the results from the other measures have to be converted into the NNT. The NNT gives the number of patients you would have to treat with the intervention (instead of leaving them untreated) to prevent one additional event and is, therefore, very helpful for the clinical translation of results.

• The NNT is traditionally calculated as the reciprocal of the absolute value of the RD, so in our example:

$$\mathrm{NNT} = \frac{1}{|\mathrm{RD}|} = \frac{1}{0.240} = 4.17,$$

which is always rounded up to the next whole number, so 5.

However, this assumes a constant RD (meaning that the absolute benefits/harms of a treatment are the same among both low-risk and high-risk patients), which is a big assumption (Sackett et al., 1997). The aim of evidence-based medicine is to individualise existing clinical knowledge in order to satisfy the needs and preferences of each individual patient (Glasziou et al., 1998).
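For completeness, the traditional calculation (reciprocal of the absolute RD, rounded up) can be sketched as follows. The function name is hypothetical, and the guard for RD = 0 reflects that the NNT is undefined when there is no difference between the groups.

```python
import math


def nnt_from_rd(rd: float) -> int:
    """Traditional NNT: 1 / |RD|, always rounded up to the next whole patient."""
    if rd == 0:
        raise ValueError("RD = 0: no difference between groups, NNT is undefined")
    return math.ceil(1 / abs(rd))


print(nnt_from_rd(-0.240))  # 5
```

This single number does not take the individual patient’s baseline risk into account.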

Table 1 Fictional data for a randomised controlled trial, where 100 patients are allocated to either Twin Block treatment or an untreated control group in order to avoid incisal trauma

| | Event (trauma) | No event (no trauma) | Total |
|---|---|---|---|
| Intervention (Twin Block) | 12 | 38 | 50 |
| Control (untreated) | 24 | 26 | 50 |
| Total | 36 | 64 | 100 |


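• Therefore, Furukawa et al. (2002) proposed the calculation of an individualised NNT based on the Patient’s Expected Event Rate (PEER) and either the RR or the OR:

$$\mathrm{NNT} = \frac{1}{\mathrm{PEER} \times (1 - \mathrm{RR})} \quad \text{or} \quad \mathrm{NNT} = \frac{1 - \mathrm{PEER} + \mathrm{OR} \times \mathrm{PEER}}{\mathrm{PEER} \times (1 - \mathrm{OR}) \times (1 - \mathrm{PEER})}$$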

Suppose we want to calculate the NNT, using the RR of 0.5 from our example, for two patients: (a) a quiet late-adolescent patient with increased overjet and a PEER for trauma of 0.3 (low-risk patient) and (b) a rather energetic pre-adolescent patient who plays rugby and has a PEER for trauma of 0.6 (high-risk patient).

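• For the low-risk group we would have:

$$\mathrm{NNT}_{\text{low risk}} = \frac{1}{0.3 \times (1 - 0.5)} = \frac{1}{0.3 \times 0.5} = \frac{1}{0.15} = 6.67, \ \text{rounded up to } 7$$

• And for the high-risk group we would have:

$$\mathrm{NNT}_{\text{high risk}} = \frac{1}{0.6 \times (1 - 0.5)} = \frac{1}{0.6 \times 0.5} = \frac{1}{0.3} = 3.33, \ \text{rounded up to } 4$$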
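The two formulas of Furukawa et al. (2002) translate directly into code. The sketch below reproduces the two worked examples with the pooled RR of 0.5; the function names are hypothetical, and both functions assume a beneficial effect (RR or OR below 1) so that the NNT comes out positive.

```python
import math


def nnt_from_rr(peer: float, rr: float) -> float:
    """Individualised NNT from the patient's expected event rate (PEER) and the RR."""
    return 1 / (peer * (1 - rr))


def nnt_from_or(peer: float, odds_ratio: float) -> float:
    """Individualised NNT from the PEER and the OR."""
    return (1 - peer + odds_ratio * peer) / (peer * (1 - odds_ratio) * (1 - peer))


for label, peer in (("low risk", 0.3), ("high risk", 0.6)):
    nnt = nnt_from_rr(peer, rr=0.5)
    print(label, round(nnt, 2), math.ceil(nnt))
# low risk 6.67 7
# high risk 3.33 4
```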

Interpretation. The interpretation of the four measures is quite different. First of all, one must bear in mind that the OR and the RR are relative measures, while the RD and NNT are absolute measures. This means that, to interpret the OR and the RR, we need to subtract the no-effect value (OR = 1 or RR = 1) from the calculated value:

• OR − 1 = 0.342 − 1.000 = −0.658 or −65.8%

This means that ‘the odds of trauma among patients treated with Twin Block were reduced by 65.8% compared to the odds among the control patients’ or ‘Twin Block treatment reduced the odds of trauma by 65.8% of the odds in untreated patients’.

• RR − 1 = 0.500 − 1.000 = −0.500 or −50.0%

This means that ‘the trauma risk of patients treated with Twin Block was reduced by 50% compared to the risk of untreated patients’ or that ‘Twin Block treatment reduced the risk of trauma by 50% of the trauma risk in untreated patients’.

For the interpretation of the RD, no subtraction is needed, so the interpretation would be that:

• ‘on average, patients treated with Twin Block had a risk of trauma 24 percentage points lower’ or ‘Twin Block treatment reduced the trauma risk by 24 percentage points’.

Finally, the interpretation of the individualised NNTs is that:

• ‘one would have to treat four high-risk patients or seven low-risk patients with increased overjet with a Twin Block, instead of leaving them untreated, in order to prevent one new case of incisal trauma’.

How to choose a summary measure? Ideally, a summary measure should have consistency, desirable mathematical properties and ease of interpretation (Higgins and Green, 2011). A summary of the introduced effect measures for binary outcomes is provided in Table 2. The choice of a summary measure for the meta-analysis of continuous outcomes is straightforward. In contrast, the choice of a measure for binary outcomes has been the focal point of much discussion. However, the selection of an effect measure for meta-analysis should be based on empirical evidence (Walter, 2000) and not solely on theoretical or statistical grounds. According to existing empirical studies (Engels et al., 2000; Deeks, 2002; Furukawa et al., 2002), the following recommendations can be made about the choice of effect measure for binary outcomes:

(i) the use of the RD should be avoided,

(ii) the RR for harm (e.g. incidence of incisal trauma) is preferable to the RR for benefit (e.g. avoidance of incisal trauma),

(iii) a convenient approach is to use the RR for the statistical pooling and then use the patient’s expected event rate to individualise the NNT for application in practice.

Many researchers prefer to use the OR as a measure in meta-analysis on the grounds that it is often reported as an adjusted OR resulting from a logistic regression. However, several methods also exist to calculate adjusted RRs [Mantel–Haenszel method, log-binomial regression, Poisson regression with robust standard error, etc. (McNutt et al., 2003; Greenland, 2004; Knol, 2012)]. In addition, statisticians argue that the OR might not be the most suitable measure to express the results of interventional trials or systematic reviews of such trials (Fleiss, 1981; Sinclair and Bracken, 1994; Feinstein, 1999). For case-control studies and logistic regression, the use of ORs is inevitable, as the OR is the best estimate of the RR that can be obtained (Deeks, 1998). However, the use of the OR should be avoided in cohort or randomised studies (Sackett et al., 1996; Knol, 2012). Grimes and Schulz (2008) advocate the use of RRs for epidemiological studies together with the corresponding confidence intervals (CIs), whenever possible. In both clinical trials and systematic reviews of trials, there is no reason for compromising interpretation by reporting results in odds rather than risks (Sinclair and Bracken, 1994; Sackett et al., 1996). Finally, it is crucial for the reader to understand that every estimate (continuous or binary) from any study is uncertain, and should always be presented with a CI.

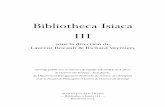

Reading a forest plot

Before advancing from expressing all studies in the same measure to the statistical pooling of measures, we need to take a look at the forest plot, which is the standard graphical output of a meta-analysis. A forest plot from a Cochrane systematic review (Thiruvenkatachari et al., 2013) is replicated in Figure 2, highlighting its various components. In this meta-analysis, three randomised controlled trials are pooled, which allocated patients to functional appliance treatment or to an untreated control group and measured the patients’ ANB angle post-treatment. As can be seen, the raw data from each study (left) are re-expressed with the effect measure (right) and then all effect measures are pooled across studies (middle). This summary estimate is depicted as a diamond at the bottom of the forest plot. In this data synthesis, weights are assigned to the studies according to the model that is used (here, a fixed-effect model).

Table 2 Summary of the three assessed effect measures for binary outcomes

| Category | Property | Odds ratio (OR) | Relative risk (RR) | Risk difference (RD) |
|---|---|---|---|---|
| Characteristics | Definition | Odds_a / Odds_b | Risk_a / Risk_b | Risk_a − Risk_b |
| | Range | 1/∞ to ∞ | 0 to ∞ | −1 to 1 |
| | No-effect value | 1 | 1 | 0 |
| | Consistency (similar despite variation in baseline risk) | (+) Yes | (+) Yes | (−) No |
| Mathematical properties | Bounded | (+) No | (−) Yes (as risks range 0 to 1); switching event and no-event can avoid this problem | (−) Yes (risks range 0 to 1) |
| | Collapsibility (calculated effect is unaffected by adjustments) | (−) No | (+) Yes | (−) No |
| | Constrained predictions | (−) Yes; absolute benefit when control group event rate is 0 and 100% | – | – |
| | Relatively homogeneous | (+) Yes | (+) Yes | (−) Less homogeneous than OR and RR (Engels et al., 2000; Deeks, 2002) |
| | Simple variance estimator | (+) Yes | (+) Yes | (−) No |
| | Symmetry (effect_event and effect_no-event are symmetrical) | (+) Yes (reciprocal) | (−) No | (+) Yes (change of sign) |
| | Other | (−) Does not approximate the RR well with event rates greater than 10–15% (Altman et al., 1998); (−) can behave paradoxically with chained or conditional probabilities, in which case the RR is more transparent (Newcombe, 2006) | – | – |
| Interpretation | Easily understood | (−) No; often misinterpreted as RR by authors and readers (Sackett et al., 1996) | (+) Yes | (+) Yes |

Under the fixed-effect model, the weight of each study is the reciprocal of its variance:

$$\mathrm{Weight}_{\text{fixed-effect}} = \frac{1}{\text{variance}}$$

Then the pooled summary estimate is:

$$\text{Pooled effect} = \frac{\sum (\text{estimate} \times \text{weight})}{\sum \text{weights}}$$
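These two formulas amount to a weighted average. The sketch below implements inverse-variance fixed-effect pooling and adds the usual standard error of the pooled estimate, √(1/Σweights), with a normal-approximation 95% CI. The study estimates and variances are hypothetical, not the data of Figure 2; ratio measures (OR, RR) would be pooled on the log scale and back-transformed.

```python
import math


def fixed_effect_pool(estimates, variances, z=1.96):
    """Inverse-variance fixed-effect meta-analysis: (pooled estimate, SE, 95% CI)."""
    weights = [1 / v for v in variances]                  # weight = 1 / variance
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return pooled, se, (pooled - z * se, pooled + z * se)


# Three hypothetical studies reporting mean differences (degrees) and their variances
mds = [-1.0, -0.5, -2.0]
variances = [0.25, 0.04, 1.0]
pooled, se, ci = fixed_effect_pool(mds, variances)
print(round(pooled, 3), round(se, 3))  # -0.617 0.183
```

The pooled value sits close to −0.5 because the second, most precise study receives 25 of the 30 weight units.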

Heterogeneity between studies

As we can see from the forest plot (Figure 2), even though the studies have similar intervention and control groups, the effect estimates (here the MDs) vary across them, a phenomenon termed between-study heterogeneity. Sources of this variation between the study results can include clinical diversity (regarding patients, interventions or outcomes), methodological differences (variation in study design, conduct, attrition, etc.) or statistical heterogeneity (variability that could be expected entirely from chance). Heterogeneity can be ‘eyeballed’ from the overlap of the 95% CIs across studies: if the 95% CIs of the studies overlap poorly, this generally indicates the presence of heterogeneity. There are more sophisticated ways to identify and quantify heterogeneity (Higgins and Green, 2011), but they will not be discussed here. However, it is important to know that heterogeneity plays a major role in meta-analysis. As can be logically expected, extreme heterogeneity between studies can make them incompatible with each other. When extreme heterogeneity exists, one can choose to:

1. check the data again (for mistakes in the data extraction or data input);

2. not pool the studies at all;

3. ignore the heterogeneity (not advisable);

4. exclude outlying studies from the analysis (not advisable);

5. change the effect measure (not advisable);

6. encompass heterogeneity with a random-effects model;

7. explore sources of heterogeneity that can explain the variability (subgroup analysis, meta-regression, etc.).

Figure 2 Example of a meta-analysis forest plot including its various components. CI, confidence interval; N, sample size; SD, standard deviation; UK, United Kingdom; H0, null hypothesis; MD, mean difference; SMD, standardised mean difference; RD, risk difference; RR, risk ratio; OR, odds ratio

Finally, the role of heterogeneity in the meta-analysis depends heavily on the statistical model that is used.

Statistical model for the meta-analysis

There are two main models used in meta-analysis: the fixed-effect model and the random-effects model. These two models rely on different assumptions and are fundamentally different, both in the synthesis and in their interpretation.

A fixed-effect model is based on the assumption that every study is evaluating a common (‘fixed’) treatment effect. This means that the effect of treatment is the same in all studies. To put it simply, the only difference between the identified studies is random error (sampling variation), and if all studies had an infinitely large sample of patients, they would yield identical results. The pooled diamond in the fixed-effect meta-analysis represents this one ‘fixed’ treatment effect and the 95% CI represents how uncertain we are about the estimate. In this model, our goal is to compute the common effect measure for the identified population, and not to generalise to other populations. It should be clear, however, that this situation is relatively rare. The vast majority of cases will more closely resemble those discussed immediately below.

A random-effects model does not assume that one ‘fixed’ treatment effect really exists. Under this model, proposed by DerSimonian and Laird (1986), the true treatment effects in the individual studies may be different from each other. Between-study heterogeneity is incorporated into the weight assigned to each study:

$$\mathrm{Weight}_{\text{random-effects}} = \frac{1}{\text{variance} + \text{heterogeneity parameter}}$$
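The ‘heterogeneity parameter’ in this weight is usually written τ² (the between-study variance). As a sketch, the DerSimonian–Laird moment estimator of τ², followed by random-effects pooling with the weight above, could look like this; the function name and the three study values are hypothetical, and RevMan’s output may differ in rounding or detail.

```python
import math


def dersimonian_laird(estimates, variances):
    """Random-effects pooling with the DerSimonian-Laird estimate of tau^2.

    Returns (pooled estimate, SE of pooled estimate, tau^2).
    """
    w = [1 / v for v in variances]                                   # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))  # Cochran's Q
    df = len(estimates) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                                    # truncated at zero
    w_re = [1 / (v + tau2) for v in variances]                       # 1 / (variance + tau^2)
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return pooled, se, tau2


pooled, se, tau2 = dersimonian_laird([-1.0, -0.5, -2.0], [0.25, 0.04, 1.0])
print(round(pooled, 3), round(se, 3), round(tau2, 3))
```

When the studies agree perfectly, τ² is truncated to zero and the result coincides with the fixed-effect estimate.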

In this case, the meta-analysis does not estimate a single effect, but rather a distribution of treatment effects (usually assumed to be normally distributed). That means that the diamond in the random-effects meta-analysis represents the mean of this distribution of treatment effects, and not a single ‘fixed’ treatment effect.

Additionally, 95% predictive intervals should always be calculated for random-effects meta-analyses (Higgins et al., 2009). These predictive intervals incorporate the identified heterogeneity and answer the question ‘based on existing evidence, what effect can I expect my treatment to have in a future application?’ by providing a range of plausible effects. However, the calculation of predictive intervals for the random-effects model is not currently available in RevMan (possibly to be incorporated in a forthcoming release), and one must use another statistical package or perform it manually.
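Performing it manually is not difficult. Following Higgins et al. (2009), an approximate 95% predictive interval is the pooled random-effects estimate ± t × √(τ² + SE²), where t is the 97.5th percentile of a t distribution with k − 2 degrees of freedom (k = number of studies). Because the Python standard library has no t quantile function, this sketch takes t as an argument (it could be obtained, for example, from `scipy.stats.t.ppf(0.975, k - 2)`); the function name and all input numbers are hypothetical.

```python
import math


def predictive_interval(pooled: float, se_pooled: float, tau2: float, t_crit: float):
    """Approximate 95% predictive interval for the effect in a new setting."""
    half_width = t_crit * math.sqrt(tau2 + se_pooled ** 2)
    return pooled - half_width, pooled + half_width


# Hypothetical random-effects result from k = 10 studies: t(0.975, df = 8) is about 2.306
low, high = predictive_interval(pooled=-1.0, se_pooled=0.3, tau2=0.16, t_crit=2.306)
print(round(low, 2), round(high, 2))  # -2.15 0.15
```

Even though the pooled estimate here would be clearly negative, the predictive interval crosses zero, because τ² contributes more to its width than the SE of the mean does.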

There are many methods to calculate and pool effect measures in RevMan. For the fixed-effect model, the inverse variance method is a straightforward method that can be used in most situations, weighting studies according to their precision (the reciprocal of the variance). The Mantel–Haenszel method (Mantel and Haenszel, 1959; Greenland and Robins, 1985) is a good method for reviews with few events or small studies (and is the default method in RevMan). For ORs, there is also the Peto method (Yusuf et al., 1985), which is a good method for studies with few events, small effects (OR close to 1) and similar numbers in the experimental and control groups. For the random-effects model there are two options (Mantel–Haenszel and inverse variance), but the difference between them is trivial.
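To show what the Mantel–Haenszel method does for the RR, the sketch below computes its pooled point estimate, Σ(aᵢ·n₂ᵢ/Nᵢ) / Σ(cᵢ·n₁ᵢ/Nᵢ), where aᵢ and cᵢ are the events in the intervention and control groups of study i, n₁ᵢ and n₂ᵢ the group sizes and Nᵢ the study total. Only the point estimate is shown (its CI requires the Greenland–Robins variance, omitted here); the function name and the second study are hypothetical.

```python
def mantel_haenszel_rr(studies):
    """Mantel-Haenszel pooled risk ratio (point estimate only).

    studies: iterable of (events_int, n_int, events_ctrl, n_ctrl) tuples.
    """
    numerator = 0.0
    denominator = 0.0
    for events_int, n_int, events_ctrl, n_ctrl in studies:
        total = n_int + n_ctrl
        numerator += events_int * n_ctrl / total
        denominator += events_ctrl * n_int / total
    return numerator / denominator


# Table 1 (12/50 vs 24/50) plus a hypothetical small trial (5/20 vs 5/20)
print(round(mantel_haenszel_rr([(12, 50, 24, 50), (5, 20, 5, 20)]), 3))  # 0.586
```

With a single study, the estimate reduces to that study’s own RR (0.5 for Table 1).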

Comparing the results of the two models, the fixed-effect model almost always gives narrower CIs for the pooled estimate than the random-effects model. Additionally, as random error is the only source of variability under the fixed-effect assumption, large and more precise studies are given more weight with this model than with the random-effects model. As stated before, the results of the two models are heavily influenced by the existence of heterogeneity. When no heterogeneity is identified, both models give relatively similar results. However, when heterogeneity is present, a random-effects model is much more conservative than a fixed-effect model (wider CIs and larger P values). The two models also differ when the meta-analysis results are related to study size (Papageorgiou et al., 2014a), as the random-effects model gives relatively more weight to smaller studies.

In practice, people tend to interpret both models simi-

larly, which is wrong. To illustrate the different interpreta-

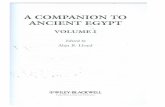

tion of the two models, the abovementioned meta-analysis

from Figure 2 is replicated in a simplified form in Figure 3,

both with fixed-effect and random-effects models. The

pooled diamonds for the two meta-analyses are graphi-

cally ‘augmented’ to illustrate the thought process behind

them. In the upper meta-analysis, where a fixed-effect

model is used, we would interpret the results as follows:

(i) functional appliance treatment has exactly the same effect on the ANB angle in every included clinical setting (one ‘fixed’ distribution),

(ii) our best estimate of this effect is an average reduction in ANB angle of 0.89° compared to untreated patients.

In the lower analysis, where a random-effects model is used, our interpretation would be the following:

(i) the effect of functional appliance treatment varies among the included clinical settings (different distributions),

(ii) the average of the various effects is a reduction in ANB angle of 1.35° compared to untreated patients.

Comparing the two models, we see that with the random-effects model:

(i) less weight is given to the biggest study (Florida 1998) than with the fixed-effect model,

(ii) the 95% CI of the overall estimate is wider than with the fixed-effect model,

(iii) the MD moves further to the left compared to the fixed-effect model, as the imprecise UK 2009 study influences the results more, and finally

(iv) the test for the overall effect is now less significant.
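Point (i) follows directly from the two weight formulas given earlier. In the sketch below, three hypothetical study variances are weighted once with τ² = 0 (fixed-effect) and once with an assumed τ² = 0.1 (random-effects); the function name and all values are illustrative only.

```python
def relative_weights(variances, tau2=0.0):
    """Percentage weight of each study; tau2 = 0 gives fixed-effect weights."""
    weights = [1 / (v + tau2) for v in variances]
    total = sum(weights)
    return [100 * w / total for w in weights]


variances = [0.04, 0.25, 1.0]  # hypothetical: large precise study first, small imprecise last
fe_weights = relative_weights(variances)
re_weights = relative_weights(variances, tau2=0.1)
print([round(x, 1) for x in fe_weights])  # [83.3, 13.3, 3.3]
print([round(x, 1) for x in re_weights])  # [65.5, 26.2, 8.3]
```

Adding the same τ² to every variance compresses the differences between studies, so the largest study loses share and the smallest gains it.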

The use of a fixed-effect model is not unproblematic, as many researchers argue that heterogeneity is inevitable in a meta-analysis. Especially in orthodontic clinical research, a random-effects model will in most cases be easier to justify than a fixed-effect model, as factors like different populations, different chronological/skeletal age and growth potential, different experimental settings, clinician preferences and experience, appliance design, patient compliance and biological response to treatment might come into play.

Figure 3 Forest plot of the meta-analysis from Figure 2 with either a fixed-effect model (above) or a random-effects model (below). IV, inverse variance; CI, confidence interval

A common mistake to be avoided is to test for heterogeneity and then select a fixed-effect or a random-effects model according to the test results (Borenstein et al., 2008; Higgins and Green, 2011; Papageorgiou, 2013; Papageorgiou et al., 2014b). This is problematic for a number of reasons. The choice of model should be pre-specified a priori (in the protocol) if possible, by considering the review question you have asked, the studies you intend to include, and whether you logically expect them to be very diverse. You either ‘believe’ in heterogeneity a priori or not at all. As an alternative, one can apply and present both fixed-effect and random-effects analyses and compare their results and the role of heterogeneity. This is also often performed to check the robustness of the results (sensitivity analysis). However, the interpretation of each model is different, as discussed earlier, and this could confuse the reader.

The biggest limitation of the fixed-effect model is that existing means for identifying heterogeneity are very imprecise (Hardy and Thompson, 1998), meaning that heterogeneity is very likely to exist, whether we detect it or not. In this case, ignoring heterogeneity might lead to false-positive results and threatens the validity of the meta-analysis. For example, an empirical study of meta-analyses in psychology found that the 95% CIs calculated with the fixed-effect model were considerably narrower than they should have been (Schmidt et al., 2009). It is generally ‘safer’ to use a random-effects model, as it gives similar results to the fixed-effect model in the case of homogeneity, but deals much better with heterogeneity. Finally, a random-effects model also improves the generalisability of the meta-analysis results, as it covers more clinical scenarios, and is therefore more useful for evidence-based decision-making in orthodontics.

Conclusions

Regarding the choice of effect measure:

• For continuous outcomes, either the mean difference or the standardised mean difference can be used, according to the measurement scale used.

• For binary outcomes, the use of the relative risk seems to be preferable to the use of the OR or RD on empirical and epidemiological grounds. Re-expressing the results as the individualised number needed to treat aids clinical translation.

Regarding the choice of a statistical model:

• The choice of model should be pre-specified, where possible.

• A fixed-effect model is by definition difficult to apply in orthodontic clinical research, as many factors can result in between-study variability and absolutely controlled experimental conditions are almost impossible.

• A random-effects model is easier to justify clinically in orthodontics and is generally more conservative than a fixed-effect model, reducing the risk of spurious findings.

• The results of a random-effects model must be interpreted accordingly, as the average of the various treatment effects among the included trials, and, if possible, be accompanied by 95% predictive intervals.

Disclaimer Statements

Contributors: Mr Papageorgiou wrote and revised the first manuscript and is the guarantor.

Funding: None

Conflicts of interest: None

Ethics approval: No ethical approval needed.

Acknowledgements

I would like to thank Martyn Cobourne for his helpful comments on an earlier version of this manuscript.

References

Altman DG, Deeks JJ, Sackett DL. Odds ratios should be avoided when events are common. BMJ 1998; 317: 1318.

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR. Introduction to Meta-analysis. Chichester: John Wiley & Sons. 2008.

Deeks J. When can odds ratios mislead? Odds ratios should be used only in case-control studies and logistic regression analyses. BMJ 1998; 317: 1155–1156.

Deeks JJ. Issues in the selection of a summary statistic for meta-analysis of clinical trials with binary outcomes. Stat Med 2002; 21: 1575–1600.

DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials 1986; 7: 177–188.

Engels EA, Schmid CH, Terrin N, Olkin I, Lau J. Heterogeneity and statistical significance in meta-analysis: an empirical study of 125 meta-analyses. Stat Med 2000; 19: 1707–1728.

Feinstein AR. Indexes of contrast and quantitative significance for comparisons of two groups. Stat Med 1999; 18: 2557–2581.

Fleiss J. Statistical Methods for Rates and Proportions. 2nd edn. New York: John Wiley & Sons. 1981.

Furukawa TA, Guyatt GH, Griffith LE. Can we individualize the ‘number needed to treat’? An empirical study of summary effect measures in meta-analyses. Int J Epidemiol 2002; 31: 72–76.

Glasziou P, Guyatt GH, Dans AL, Dans LF, Straus S, Sackett DL. Applying the results of trials and systematic reviews to individual patients. ACP J Club 1998; 129: A15–A16.

Greenland S. Model-based estimation of relative risks and other epidemiologic measures in studies of common outcomes and in case-control studies. Am J Epidemiol 2004; 160: 301–305.

Greenland S, Robins JM. Estimation of a common effect parameter from sparse follow-up data. Biometrics 1985; 41: 55–68.

Grimes DA, Schulz KF. Making sense of odds and odds ratios. Obstet Gynecol 2008; 111: 423–426.

Hardy RJ, Thompson SG. Detecting and describing heterogeneity in meta-analysis. Stat Med 1998; 17: 841–856.


Higgins JP, Thompson SG, Spiegelhalter DJ. A re-evaluation of random-effects meta-analysis. J R Stat Soc Ser A Stat Soc 2009; 172: 137–159.

Higgins JPT, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 (updated March 2011). The Cochrane Collaboration, 2011. Available from: www.cochrane-handbook.org

Huskisson EC. Measurement of pain. Lancet 1974; 2: 1127–1131.

Knol MJ. Down with odds ratios: risk ratios in cohort studies and randomised clinical trials. Ned Tijdschr Geneeskd 2012; 156: A4775.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol 2009; 62: e1–e34.

Likert R. A technique for the measurement of attitudes. Arch Psychol 1932; 140: 1–55.

Mantel N, Haenszel W. Statistical aspects of the analysis of data from retrospective studies of disease. J Natl Cancer Inst 1959; 22: 719–748.

McNutt LA, Wu C, Xue X, Hafner JP. Estimating the relative risk in cohort studies and clinical trials of common outcomes. Am J Epidemiol 2003; 157: 940–943.

Newcombe RG. A deficiency of the odds ratio as a measure of effect size. Stat Med 2006; 25: 4235–4240.

Papageorgiou SN. Meta-analysis 101. Am J Orthod Dentofacial Orthop 2013; 144: 497.

Papageorgiou SN, Antonoglou G, Tsiranidou E, Jepsen S, Jager A. Bias and small-study effects influence treatment effect estimates: a meta-epidemiological study in oral medicine. J Clin Epidemiol 2014a. Epub ahead of print: DOI: 10.1016/j.jclinepi.2014.04.002.

Papageorgiou SN, Papadopoulos MA, Athanasiou AE. Reporting characteristics of meta-analyses in orthodontics: methodological assessment and statistical recommendations. Eur J Orthod 2014b; 36: 74–85.

Review Manager (RevMan) [Computer program]. Version 5.2. Copenhagen: The Nordic Cochrane Centre, The Cochrane Collaboration, 2012.

Sackett DL, Deeks JJ, Altman D. Down with odds ratios! Evidence-Based Med 1996; 1: 164–167.

Sackett DL, Richardson WS, Rosenberg W, Haynes RB. Evidence-Based Medicine: How to Practice and Teach EBM. New York: Churchill Livingstone. 1997.

Schmidt FL, Oh IS, Hayes TL. Fixed- versus random-effects models in meta-analysis: model properties and an empirical comparison of differences in results. Br J Math Stat Psychol 2009; 62: 97–128.

Sinclair JC, Bracken MB. Clinically useful measures of effect in binary analyses of randomized trials. J Clin Epidemiol 1994; 47: 881–890.

Thiruvenkatachari B, Harrison JE, Worthington HV, O’Brien KD. Orthodontic treatment for prominent upper front teeth (Class II malocclusion) in children. Cochrane Database Syst Rev 2013; 11: CD003452.

Walter SD. Choice of effect measure for epidemiological data. J Clin Epidemiol 2000; 53: 931–939.

Yusuf S, Peto R, Lewis J, Collins R, Sleight P. Beta blockade during and after myocardial infarction: an overview of the randomised trials. Prog Cardiovasc Dis 1985; 27: 335–371.

