Operating Systems (Comp-231) (Theory) Level – 6


Academic Year 1437 – 38 Spring Semester

Compiled by: Mr. Syed Ziauddin (Course Coordinator)

(Feb 2017 - May 2017)

Department of Computer Science, Faculty of Computer and Information Science

Jazan University, Jazan, KSA

Contents

Chapter One: Operating System Overview

Objective and Functions

Services provided by OS, and Evolution of OS

Major Achievements

Developments Leading to Modern Operating Systems, and System Calls

Chapter Two: File Management

Files, File Systems, and File Management Systems

File Organization and Access

File Directories and Structure

File Sharing and Secondary Storage Management

Chapter Three: Processes and Threads

Process, PCB, Process States

Process Description and Process Control

Processes and Threads, Multithreading, Thread Functionality

ULT & KLT, Microkernel and Thread States

Chapter Four: CPU Scheduling

Types of Scheduling

Scheduling Algorithms (FCFS and SJF)

Scheduling Algorithms (Priority and RR)

Comparison of All

Chapter Five: Deadlocks

System Model, Deadlock Characterization

Deadlock prevention, avoidance

Deadlock detection using banker’s algorithm

Recovery from deadlock

Chapter Six: Memory Management

Address Binding, Logical Vs Physical Address, Dynamic Loading

Linking, Swapping, Fixed and Dynamic partitioning

Paging, Segmentation

Page Replacement Algorithms (FIFO, ORA, LRU)

Thrashing

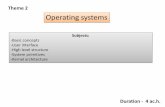

Chapter One: Operating System Overview

Objective and Functions

Services provided by OS, and Evolution of OS

Major Achievements

Developments Leading to Modern Operating Systems, and System Calls

CHAPTER 2 OPERATING SYSTEM OVERVIEW

2.1 Operating System Objectives and Functions: The Operating System as a User/Computer Interface; The Operating System as Resource Manager; Ease of Evolution of an Operating System

2.2 The Evolution of Operating Systems: Serial Processing; Simple Batch Systems; Multiprogrammed Batch Systems; Time-Sharing Systems

2.3 Major Achievements: The Process; Memory Management; Information Protection and Security; Scheduling and Resource Management; System Structure

2.4 Developments Leading to Modern Operating Systems

2.5 Microsoft Windows Overview: History; Single-User Multitasking; Architecture; Client/Server Model; Threads and SMP; Windows Objects

2.6 Traditional UNIX Systems: History; Description

2.7 Modern UNIX Systems: System V Release 4 (SVR4); BSD; Solaris 10

2.8 Linux: History; Modular Structure; Kernel Components

2.9 Recommended Reading and Web Sites

2.10 Key Terms, Review Questions, and Problems


We begin our study of operating systems (OSs) with a brief history. This history is itself interesting and also serves the purpose of providing an overview of OS principles. The first section examines the objectives and functions of operating systems. Then we look at how operating systems have evolved from primitive batch systems to sophisticated multitasking, multiuser systems. The remainder of the chapter looks at the history and general characteristics of the two operating systems that serve as examples throughout this book. All of the material in this chapter is covered in greater depth later in the book.

2.1 OPERATING SYSTEM OBJECTIVES AND FUNCTIONS

An OS is a program that controls the execution of application programs and acts as an interface between applications and the computer hardware. It can be thought of as having three objectives:

• Convenience: An OS makes a computer more convenient to use.

• Efficiency: An OS allows the computer system resources to be used in an efficient manner.

• Ability to evolve: An OS should be constructed in such a way as to permit the effective development, testing, and introduction of new system functions without interfering with service.

Let us examine these three aspects of an OS in turn.

The Operating System as a User/Computer Interface

The hardware and software used in providing applications to a user can be viewed in a layered or hierarchical fashion, as depicted in Figure 2.1. The user of those applications, the end user, generally is not concerned with the details of computer hardware. Thus, the end user views a computer system in terms of a set of applications. An application can be expressed in a programming language and is developed by an application programmer. If one were to develop an application program as a set of machine instructions that is completely responsible for controlling the computer hardware, one would be faced with an overwhelmingly complex undertaking. To ease this chore, a set of system programs is provided. Some of these programs are referred to as utilities. These implement frequently used functions that assist in program creation, the management of files, and the control of I/O devices. A programmer will make use of these facilities in developing an application, and the application, while it is running, will invoke the utilities to perform certain functions. The most important collection of system programs comprises the OS. The OS masks the details of the hardware from the programmer and provides the programmer with a convenient interface for using the system. It acts as mediator, making it easier for the programmer and for application programs to access and use those facilities and services.

Briefly, the OS typically provides services in the following areas:

• Program development: The OS provides a variety of facilities and services, such as editors and debuggers, to assist the programmer in creating programs. Typically, these services are in the form of utility programs that, while not strictly part of the core of the OS, are supplied with the OS and are referred to as application program development tools.

• Program execution: A number of steps need to be performed to execute a program. Instructions and data must be loaded into main memory, I/O devices and files must be initialized, and other resources must be prepared. The OS handles these scheduling duties for the user.

• Access to I/O devices: Each I/O device requires its own peculiar set of instructions or control signals for operation. The OS provides a uniform interface that hides these details so that programmers can access such devices using simple reads and writes.

• Controlled access to files: For file access, the OS must reflect a detailed understanding of not only the nature of the I/O device (disk drive, tape drive) but also the structure of the data contained in the files on the storage medium. In the case of a system with multiple users, the OS may provide protection mechanisms to control access to the files.

• System access: For shared or public systems, the OS controls access to the system as a whole and to specific system resources. The access function must provide protection of resources and data from unauthorized users and must resolve conflicts for resource contention.

• Error detection and response: A variety of errors can occur while a computer system is running. These include internal and external hardware errors, such as a memory error, or a device failure or malfunction; and various software errors, such as division by zero, an attempt to access a forbidden memory location, and inability of the OS to grant the request of an application. In each case, the OS must provide a response that clears the error condition with the least impact on running applications. The response may range from ending the program that caused the error, to retrying the operation, to simply reporting the error to the application.

• Accounting: A good OS will collect usage statistics for various resources and monitor performance parameters such as response time. On any system, this information is useful in anticipating the need for future enhancements and in tuning the system to improve performance. On a multiuser system, the information can be used for billing purposes.

[Figure 2.1 Layers and Views of a Computer System: the layers, from top to bottom, are application programs, utilities, operating system, and computer hardware; the views shown are those of the end user, the programmer, and the operating-system designer.]
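The uniform interface described under "Access to I/O devices" and "Controlled access to files" can be illustrated with a small sketch. It assumes a POSIX-style system and uses Python's `os.open`, `os.read`, `os.write`, and `os.close`, which are thin wrappers over the corresponding system calls; the function name `copy_via_syscalls` and the 4096-byte chunk size are illustrative choices, not something the text specifies. The program never issues device-specific commands: it asks the OS for generic reads and writes, and the OS deals with the disk.

```python
import os

# O_BINARY exists only on Windows; elsewhere it is absent, so fall back to 0.
_BINARY = getattr(os, "O_BINARY", 0)

def copy_via_syscalls(src: str, dst: str, chunk: int = 4096) -> int:
    """Copy src to dst using only open/read/write/close; return bytes copied."""
    in_fd = os.open(src, os.O_RDONLY | _BINARY)
    try:
        out_fd = os.open(dst, os.O_WRONLY | os.O_CREAT | os.O_TRUNC | _BINARY, 0o644)
        try:
            total = 0
            while True:
                data = os.read(in_fd, chunk)
                if not data:              # an empty read signals end of file
                    return total
                view = memoryview(data)
                while view:               # write() may accept fewer bytes than offered
                    n = os.write(out_fd, view)
                    view = view[n:]
                    total += n
        finally:
            os.close(out_fd)
    finally:
        os.close(in_fd)
```

For a 10,000-byte regular file, the loop typically makes three reads that return data (4096, 4096, and 1808 bytes) and one final empty read; the same code would work unchanged whether the file lives on a local disk or another storage device the OS supports.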

The Operating System as Resource Manager

A computer is a set of resources for the movement, storage, and processing of data and for the control of these functions. The OS is responsible for managing these resources.

Can we say that it is the OS that controls the movement, storage, and processing of data? From one point of view, the answer is yes: By managing the computer’s resources, the OS is in control of the computer’s basic functions. But this control is exercised in a curious way. Normally, we think of a control mechanism as something external to that which is controlled, or at least as something that is a distinct and separate part of that which is controlled. (For example, a residential heating system is controlled by a thermostat, which is separate from the heat-generation and heat-distribution apparatus.) This is not the case with the OS, which as a control mechanism is unusual in two respects:

• The OS functions in the same way as ordinary computer software; that is, it is a program or suite of programs executed by the processor.

• The OS frequently relinquishes control and must depend on the processor to allow it to regain control.

Like other computer programs, the OS provides instructions for the processor. The key difference is in the intent of the program. The OS directs the processor in the use of the other system resources and in the timing of its execution of other programs. But in order for the processor to do any of these things, it must cease executing the OS program and execute other programs. Thus, the OS relinquishes control for the processor to do some “useful” work and then resumes control long enough to prepare the processor to do the next piece of work. The mechanisms involved in all this should become clear as the chapter proceeds.

Figure 2.2 suggests the main resources that are managed by the OS. A portion of the OS is in main memory. This includes the kernel, or nucleus, which contains the most frequently used functions in the OS and, at a given time, other portions of the OS currently in use. The remainder of main memory contains user programs and data. The allocation of this resource (main memory) is controlled jointly by the OS and memory management hardware in the processor, as we shall see. The OS decides when an I/O device can be used by a program in execution and controls access to and use of files. The processor itself is a resource, and the OS must determine how much processor time is to be devoted to the execution of a particular user program. In the case of a multiple-processor system, this decision must span all of the processors.


Ease of Evolution of an Operating System

A major operating system will evolve over time for a number of reasons:

• Hardware upgrades plus new types of hardware: For example, early versions of UNIX and the Macintosh operating system did not employ a paging mechanism because they were run on processors without paging hardware.¹ Subsequent versions of these operating systems were modified to exploit paging capabilities. Also, the use of graphics terminals and page-mode terminals instead of line-at-a-time scroll mode terminals affects OS design. For example, a graphics terminal typically allows the user to view several applications at the same time through “windows” on the screen. This requires more sophisticated support in the OS.

• New services: In response to user demand or in response to the needs of system managers, the OS expands to offer new services. For example, if it is found to be difficult to maintain good performance for users with existing tools, new measurement and control tools may be added to the OS.

• Fixes: Any OS has faults. These are discovered over the course of time and fixes are made. Of course, the fix may introduce new faults.

[Figure 2.2 The Operating System as Resource Manager: a computer system whose memory holds the operating system software and user programs and data; one or more processors; storage holding OS programs, other programs, and data; and I/O controllers connecting I/O devices such as printers, keyboards, and digital cameras.]

¹ Paging is introduced briefly later in this chapter and is discussed in detail in Chapter 7.


The need to change an OS regularly places certain requirements on its design. An obvious statement is that the system should be modular in construction, with clearly defined interfaces between the modules, and that it should be well documented. For large programs, such as the typical contemporary OS, what might be referred to as straightforward modularization is inadequate [DENN80a]. That is, much more must be done than simply partitioning a program into modules. We return to this topic later in this chapter.

2.2 THE EVOLUTION OF OPERATING SYSTEMS

In attempting to understand the key requirements for an OS and the significance of the major features of a contemporary OS, it is useful to consider how operating systems have evolved over the years.

Serial Processing

With the earliest computers, from the late 1940s to the mid-1950s, the programmer interacted directly with the computer hardware; there was no OS. These computers were run from a console consisting of display lights, toggle switches, some form of input device, and a printer. Programs in machine code were loaded via the input device (e.g., a card reader). If an error halted the program, the error condition was indicated by the lights. If the program proceeded to a normal completion, the output appeared on the printer.

These early systems presented two main problems:

• Scheduling: Most installations used a hardcopy sign-up sheet to reserve computer time. Typically, a user could sign up for a block of time in multiples of a half hour or so. A user might sign up for an hour and finish in 45 minutes; this would result in wasted computer processing time. On the other hand, the user might run into problems, not finish in the allotted time, and be forced to stop before resolving the problem.

• Setup time: A single program, called a job, could involve loading the compiler plus the high-level language program (source program) into memory, saving the compiled program (object program), and then loading and linking together the object program and common functions. Each of these steps could involve mounting or dismounting tapes or setting up card decks. If an error occurred, the hapless user typically had to go back to the beginning of the setup sequence. Thus, a considerable amount of time was spent just in setting up the program to run.

This mode of operation could be termed serial processing, reflecting the fact that users have access to the computer in series. Over time, various system software tools were developed to attempt to make serial processing more efficient. These include libraries of common functions, linkers, loaders, debuggers, and I/O driver routines that were available as common software for all users.

Simple Batch Systems

Early computers were very expensive, and therefore it was important to maximize processor utilization. The wasted time due to scheduling and setup time was unacceptable.


Multiprogrammed Batch Systems

Even with the automatic job sequencing provided by a simple batch operating system, the processor is often idle. The problem is that I/O devices are slow compared to the processor. Figure 2.4 details a representative calculation. The calculation concerns a program that processes a file of records and performs, on average, 100 machine instructions per record. In this example, the computer spends over 96% of its time waiting for I/O devices to finish transferring data to and from the file. Figure 2.5a illustrates this situation, where we have a single program, referred to as uniprogramming. The processor spends a certain amount of time executing, until it reaches an I/O instruction. It must then wait until that I/O instruction concludes before proceeding.

Figure 2.4 System Utilization Example

| Step | Time |
|---|---|
| Read one record from file | 15 µs |
| Execute 100 instructions | 1 µs |
| Write one record to file | 15 µs |
| Total | 31 µs |

$$\text{Percent CPU Utilization} = \frac{1}{31} = 0.032 = 3.2\%$$

[Figure 2.5 Multiprogramming Example: Run and Wait periods along a time axis for (a) uniprogramming, with Program A alone; (b) multiprogramming with two programs, showing Programs A and B and their combined timeline; (c) multiprogramming with three programs, showing Programs A, B, and C and their combined timeline.]
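The arithmetic of Figure 2.4 can be checked in a few lines. The three timings (15 µs per read, 1 µs for 100 instructions, 15 µs per write) come from the figure; the function name `cpu_utilization` is a hypothetical choice for this sketch.

```python
def cpu_utilization(read_us: float, compute_us: float, write_us: float) -> float:
    """Fraction of each record's processing cycle spent executing instructions.

    Under uniprogramming the processor idles during both the read and the write.
    """
    cycle_us = read_us + compute_us + write_us
    return compute_us / cycle_us

util = cpu_utilization(read_us=15, compute_us=1, write_us=15)
print(f"CPU utilization: {util:.1%}")            # → CPU utilization: 3.2%
print(f"Time waiting for I/O: {1 - util:.1%}")   # → Time waiting for I/O: 96.8%
```

The second line is the "over 96%" idle time the text cites: 30 of every 31 microseconds are spent waiting on the file.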

This inefficiency is not necessary. We know that there must be enough memory to hold the OS (resident monitor) and one user program. Suppose that there is room for the OS and two user programs. When one job needs to wait for I/O, the processor can switch to the other job, which is likely not waiting for I/O (Figure 2.5b). Furthermore, we might expand memory to hold three, four, or more programs and switch among all of them (Figure 2.5c). The approach is known as multiprogramming, or multitasking. It is the central theme of modern operating systems.

To illustrate the benefit of multiprogramming, we give a simple example. Consider a computer with 250 Mbytes of available memory (not used by the OS), a disk, a terminal, and a printer. Three programs, JOB1, JOB2, and JOB3, are submitted for execution at the same time, with the attributes listed in Table 2.1. We assume minimal processor requirements for JOB2 and JOB3 and continuous disk and printer use by JOB3. For a simple batch environment, these jobs will be executed in sequence. Thus, JOB1 completes in 5 minutes. JOB2 must wait until the 5 minutes are over and then completes 15 minutes after that. JOB3 begins after 20 minutes and completes at 30 minutes from the time it was initially submitted. The average resource utilization, throughput, and response times are shown in the uniprogramming column of Table 2.2. Device-by-device utilization is illustrated in Figure 2.6a. It is evident that there is gross underutilization for all resources when averaged over the required 30-minute time period.

Table 2.1 Sample Program Execution Attributes

| Attribute | JOB1 | JOB2 | JOB3 |
|---|---|---|---|
| Type of job | Heavy compute | Heavy I/O | Heavy I/O |
| Duration | 5 min | 15 min | 10 min |
| Memory required | 50 M | 100 M | 75 M |
| Need disk? | No | No | Yes |
| Need terminal? | No | Yes | No |
| Need printer? | No | No | Yes |

Table 2.2 Effects of Multiprogramming on Resource Utilization

| Measure | Uniprogramming | Multiprogramming |
|---|---|---|
| Processor use | 20% | 40% |
| Memory use | 33% | 67% |
| Disk use | 33% | 67% |
| Printer use | 33% | 67% |
| Elapsed time | 30 min | 15 min |
| Throughput | 6 jobs/hr | 12 jobs/hr |
| Mean response time | 18 min | 10 min |


Now suppose that the jobs are run concurrently under a multiprogramming operating system. Because there is little resource contention between the jobs, all three can run in nearly minimum time while coexisting with the others in the computer (assuming that JOB2 and JOB3 are allotted enough processor time to keep their input and output operations active). JOB1 will still require 5 minutes to complete, but at the end of that time, JOB2 will be one-third finished and JOB3 half finished. All three jobs will have finished within 15 minutes. The improvement is evident when examining the multiprogramming column of Table 2.2, obtained from the histogram shown in Figure 2.6b.
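Several rows of Table 2.2 (elapsed time, throughput, mean response time, memory use) can be recomputed from Table 2.1. This is a minimal sketch under the same simplification the text makes for the multiprogrammed case: with little contention, each job finishes in its stand-alone duration. The `Job` class and function names are illustrative, and processor use is not recomputed because the text gives only qualitative processor demands for JOB2 and JOB3.

```python
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    duration_min: int   # run time with no competition from other jobs
    memory_mb: int      # memory held from the job's start until its completion

JOBS = [Job("JOB1", 5, 50), Job("JOB2", 15, 100), Job("JOB3", 10, 75)]
AVAILABLE_MEMORY_MB = 250

def completion_times(jobs, multiprogrammed):
    """Minutes from the common submission time (t = 0) until each job finishes."""
    if multiprogrammed:
        # Assumption from the text: little contention, so all jobs run
        # concurrently and each finishes in its stand-alone duration.
        return [job.duration_min for job in jobs]
    finished, clock = [], 0
    for job in jobs:                 # simple batch: strictly one after another
        clock += job.duration_min
        finished.append(clock)
    return finished

def summarize(jobs, multiprogrammed):
    done = completion_times(jobs, multiprogrammed)
    elapsed = max(done)
    memory_mb_minutes = sum(job.memory_mb * job.duration_min for job in jobs)
    return {
        "elapsed_min": elapsed,
        "throughput_jobs_per_hr": len(jobs) * 60 / elapsed,
        "mean_response_min": sum(done) / len(done),
        "memory_use": memory_mb_minutes / (AVAILABLE_MEMORY_MB * elapsed),
    }

for label, multi in (("Uniprogramming", False), ("Multiprogramming", True)):
    s = summarize(JOBS, multi)
    print(f"{label}: {s['elapsed_min']} min, {s['throughput_jobs_per_hr']:.0f} jobs/hr, "
          f"mean response {s['mean_response_min']:.0f} min, memory use {s['memory_use']:.0%}")
```

The printed values (30 min, 6 jobs/hr, 18 min, 33% versus 15 min, 12 jobs/hr, 10 min, 67%) agree with the corresponding rows of Table 2.2; the uniprogramming mean response time is 55/3 ≈ 18.3 minutes before rounding.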

As with a simple batch system, a multiprogramming batch system must rely on certain computer hardware features. The most notable additional feature that is useful for multiprogramming is the hardware that supports I/O interrupts and DMA (direct memory access). With interrupt-driven I/O or DMA, the processor can issue an I/O command for one job and proceed with the execution of another job while the I/O is carried out by the device controller. When the I/O operation is complete, the processor is interrupted and control is passed to an interrupt-handling program in the OS. The OS will then pass control to another job.

Multiprogramming operating systems are fairly sophisticated compared to single-program, or uniprogramming, systems. To have several jobs ready to run, they must be kept in main memory, requiring some form of memory management. In addition, if several jobs are ready to run, the processor must decide which one to run; this decision requires an algorithm for scheduling. These concepts are discussed later in this chapter.

Time-Sharing Systems

With the use of multiprogramming, batch processing can be quite efficient. However, for many jobs, it is desirable to provide a mode in which the user interacts directly with the computer. Indeed, for some jobs, such as transaction processing, an interactive mode is essential.

Today, the requirement for an interactive computing facility can be, and often is, met by the use of a dedicated personal computer or workstation. That option was not available in the 1960s, when most computers were big and costly. Instead, time sharing was developed.

Just as multiprogramming allows the processor to handle multiple batch jobs at a time, multiprogramming can also be used to handle multiple interactive jobs. In this latter case, the technique is referred to as time sharing, because processor time is shared among multiple users. In a time-sharing system, multiple users simultaneously access the system through terminals, with the OS interleaving the execution of each user program in a short burst or quantum of computation. Thus, if there are n users actively requesting service at one time, each user will only see on the average 1/n of the effective computer capacity, not counting OS overhead. However, given the relatively slow human reaction time, the response time on a properly designed system should be similar to that on a dedicated computer.

Both batch processing and time sharing use multiprogramming. The key differences are listed in Table 2.3.


One of the first time-sharing operating systems to be developed was the Compatible Time-Sharing System (CTSS) [CORB62], developed at MIT by a group known as Project MAC (Machine-Aided Cognition, or Multiple-Access Computers). The system was first developed for the IBM 709 in 1961 and later transferred to an IBM 7094.

Compared to later systems, CTSS is primitive. The system ran on a computer with 32,000 36-bit words of main memory, with the resident monitor consuming 5000 of that. When control was to be assigned to an interactive user, the user’s program and data were loaded into the remaining 27,000 words of main memory. A program was always loaded to start at the location of the 5000th word; this simplified both the monitor and memory management. A system clock generated interrupts at a rate of approximately one every 0.2 seconds. At each clock interrupt, the OS regained control and could assign the processor to another user. This technique is known as time slicing. Thus, at regular time intervals, the current user would be preempted and another user loaded in. To preserve the old user program status for later resumption, the old user programs and data were written out to disk before the new user programs and data were read in. Subsequently, the old user program code and data were restored in main memory when that program was next given a turn.
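The time-slicing policy described above can be sketched as a round-robin loop. This is an illustrative model, not CTSS code: it takes the approximately 0.2-second clock interrupt from the text as a 200 ms quantum, assumes each user's request needs a known amount of processor time, and ignores swapping cost and OS overhead. The user names and demands in the example are made up.

```python
from collections import deque

QUANTUM_MS = 200  # CTSS clock interrupt: roughly one every 0.2 s

def time_slice(demands_ms, quantum_ms=QUANTUM_MS):
    """Round-robin time slicing; return {user: finish time in ms}.

    demands_ms maps each user to the processor time (ms) their request needs.
    Swap time and OS overhead are ignored.
    """
    ready = deque(demands_ms.items())   # users in arrival order
    clock = 0
    finished = {}
    while ready:
        user, remaining = ready.popleft()
        ran = min(quantum_ms, remaining)
        clock += ran
        if remaining > ran:
            # Clock interrupt: preempt this user and send it to the back of the line.
            ready.append((user, remaining - ran))
        else:
            finished[user] = clock
    return finished

print(time_slice({"A": 500, "B": 200, "C": 300}))
# → {'B': 400, 'C': 900, 'A': 1000}
```

User B, which needs only one quantum, finishes at 400 ms even though A arrived first; under this policy short interactive requests are not stuck behind long ones, which is the point of preempting at each clock interrupt.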

To minimize disk traffic, user memory was only written out when the incoming program would overwrite it. This principle is illustrated in Figure 2.7. Assume that there are four interactive users with the following memory requirements, in words:

• JOB1: 15,000

• JOB2: 20,000

• JOB3: 5000

• JOB4: 10,000

Initially, the monitor loads JOB1 and transfers control to it (a). Later, the monitor decides to transfer control to JOB2. Because JOB2 requires more memory than JOB1, JOB1 must be written out first, and then JOB2 can be loaded (b). Next, JOB3 is loaded in to be run. However, because JOB3 is smaller than JOB2, a portion of JOB2 can remain in memory, reducing disk write time (c). Later, the monitor decides to transfer control back to JOB1. An additional portion of JOB2 must be written out when JOB1 is loaded back into memory (d). When JOB4 is loaded, part of JOB1 and the portion of JOB2 remaining in memory are retained (e). At this point, if either JOB1 or JOB2 is activated, only a partial load will be required. In this example, it is JOB2 that runs next. This requires that JOB4 and the remaining resident portion of JOB1 be written out and that the missing portion of JOB2 be read in (f).
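The write-out-only-what-is-overwritten rule can be sketched as a small simulation of the sequence (a) to (f). The modeling choices are assumptions for illustration: every job image starts at word 5000, a job that is partly overwritten keeps one contiguous upper portion of its image in memory, and every overwritten word is written to disk. The names `load`, `resident`, and `sizes` are hypothetical.

```python
MONITOR_WORDS = 5000    # the resident monitor occupies words 0..4999
MEMORY_WORDS = 32000    # total main memory, in 36-bit words

def load(resident, sizes, job):
    """Load `job` at word 5000 as CTSS did; return (words_written, words_read).

    `resident` maps each job to the (lo, hi) address range of its image that
    is still in memory. Every image starts at MONITOR_WORDS, so a partially
    overwritten job keeps only the upper part of its image, up to hi.
    """
    end = MONITOR_WORDS + sizes[job]
    if end > MEMORY_WORDS:
        raise ValueError(f"{job} does not fit in user memory")
    written = 0
    for other, (lo, hi) in list(resident.items()):
        if other == job:
            continue
        overlap_end = min(hi, end)
        if lo < overlap_end:                 # incoming image overwrites these words,
            written += overlap_end - lo      # so they are saved to disk first
            if end >= hi:
                del resident[other]          # nothing of `other` is left in memory
            else:
                resident[other] = (end, hi)  # upper portion survives
    lo, hi = resident.get(job, (end, end))   # (end, end) means nothing resident
    read = sizes[job] - (hi - lo)            # fetch only the missing portion
    resident[job] = (MONITOR_WORDS, end)
    return written, read

sizes = {"JOB1": 15000, "JOB2": 20000, "JOB3": 5000, "JOB4": 10000}
resident = {}
for step, job in zip("abcdef", ["JOB1", "JOB2", "JOB3", "JOB1", "JOB4", "JOB2"]):
    written, read = load(resident, sizes, job)
    print(f"({step}) load {job}: write {written} words, read {read} words")
```

In step (f), JOB2 needs only 15,000 of its 20,000 words read back, because the top 5000 words of its image survived in memory since step (c).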

Table 2.3 Batch Multiprogramming versus Time Sharing

| | Batch Multiprogramming | Time Sharing |
|---|---|---|
| Principal objective | Maximize processor use | Minimize response time |
| Source of directives to operating system | Job control language commands provided with the job | Commands entered at the terminal |


The CTSS approach is primitive compared to present-day time sharing, but it worked. It was extremely simple, which minimized the size of the monitor. Because a job was always loaded into the same locations in memory, there was no need for relocation techniques at load time (discussed subsequently). The technique of only writing out what was necessary minimized disk activity. Running on the 7094, CTSS supported a maximum of 32 users.

Time sharing and multiprogramming raise a host of new problems for the OS. If multiple jobs are in memory, then they must be protected from interfering with each other by, for example, modifying each other’s data. With multiple interactive users, the file system must be protected so that only authorized users have access to a particular file. The contention for resources, such as printers and mass storage devices, must be handled. These and other problems, with possible solutions, will be encountered throughout this text.

2.3 MAJOR ACHIEVEMENTS

Operating systems are among the most complex pieces of software ever developed. This reflects the challenge of trying to meet the difficult and in some cases competing objectives of convenience, efficiency, and ability to evolve. [DENN80a] proposes that there have been five major theoretical advances in the development of operating systems:

• Processes

• Memory management

• Information protection and security

• Scheduling and resource management

• System structure

[Figure 2.7 CTSS Operation: six snapshots of the 32,000-word main memory, with the monitor always in the first 5000 words and user jobs loaded from word 5000 upward; memory above the loaded job images is free. (a) JOB1 in words 5000 to 20000. (b) JOB2 in 5000 to 25000. (c) JOB3 in 5000 to 10000, with the rest of JOB2 retained in 10000 to 25000. (d) JOB1 in 5000 to 20000, with part of JOB2 retained in 20000 to 25000. (e) JOB4 in 5000 to 15000, with part of JOB1 retained in 15000 to 20000 and part of JOB2 in 20000 to 25000. (f) JOB2 in 5000 to 25000.]

Each advance is characterized by principles, or abstractions, developed to meet difficult practical problems. Taken together, these five areas span many of the key design and implementation issues of modern operating systems. The brief review of these five areas in this section serves as an overview of much of the rest of the text.

The Process

The concept of process is fundamental to the structure of operating systems. This term was first used by the designers of Multics in the 1960s [DALE68]. It is a somewhat more general term than job. Many definitions have been given for the term process, including

• A program in execution

• An instance of a program running on a computer

• The entity that can be assigned to and executed on a processor

• A unit of activity characterized by a single sequential thread of execution, a current state, and an associated set of system resources

This concept should become clearer as we proceed.

Three major lines of computer system development created problems in timing and synchronization that contributed to the development of the concept of the process: multiprogramming batch operation, time sharing, and real-time transaction systems. As we have seen, multiprogramming was designed to keep the processor and I/O devices, including storage devices, simultaneously busy to achieve maximum efficiency. The key mechanism is this: In response to signals indicating the completion of I/O transactions, the processor is switched among the various programs residing in main memory.

A second line of development was general-purpose time sharing. Here, the key design objective is to be responsive to the needs of the individual user and yet, for cost reasons, be able to support many users simultaneously. These goals are compatible because of the relatively slow reaction time of the user. For example, if a typical user needs an average of 2 seconds of processing time per minute, then close to 30 such users should be able to share the same system without noticeable interference. Of course, OS overhead must be factored into such calculations.
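The 30-user estimate is a simple division, and writing it out shows where the overhead caveat enters. Here $f$, the fraction of processor time consumed by the OS itself, is a symbol introduced only for illustration; the text gives no value for it.

$$N \approx \frac{60\ \text{s of processor time per minute}}{2\ \text{s per user per minute}} = 30, \qquad N_f \approx \frac{60\,(1-f)}{2}$$

With an assumed overhead of $f = 0.1$, for instance, the estimate falls to about 27 users.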

Another important line of development has been real-time transaction processing systems. In this case, a number of users are entering queries or updates against a database. An example is an airline reservation system. The key difference between the transaction processing system and the time-sharing system is that the former is limited to one or a few applications, whereas users of a time-sharing system can engage in program development, job execution, and the use of various applications. In both cases, system response time is paramount.

The principal tool available to system programmers in developing the early multiprogramming and multiuser interactive systems was the interrupt. The activity


we will see a number of examples where this process structure is employed to solvethe problems raised by multiprogramming and resource sharing.

Memory Management

The needs of users can be met best by a computing environment that supports mod-ular programming and the flexible use of data. System managers need efficient andorderly control of storage allocation. The OS, to satisfy these requirements, has fiveprincipal storage management responsibilities:

• Process isolation: The OS must prevent independent processes from interfer-ing with each other’s memory, both data and instructions.

• Automatic allocation and management: Programs should be dynamically allo-cated across the memory hierarchy as required.Allocation should be transpar-ent to the programmer. Thus, the programmer is relieved of concerns relatingto memory limitations, and the OS can achieve efficiency by assigning memoryto jobs only as needed.

Context

Data

Program(code)

Context

Data

i

Process index

PC

Baselimit

Otherregisters

i

bh

j

b

hProcess

B

ProcessA

Mainmemory

Processorregisters

Processlist

Program(code)

Figure 2.8 Typical Process Implementation

M02_STAL6329_06_SE_C02.QXD 2/22/08 7:02 PM Page 68

2.3 / MAJOR ACHIEVEMENTS 69

• Support of modular programming: Programmers should be able to defineprogram modules, and to create, destroy, and alter the size of modulesdynamically.

• Protection and access control: Sharing of memory, at any level of the memoryhierarchy, creates the potential for one program to address the memory spaceof another. This is desirable when sharing is needed by particular applications.At other times, it threatens the integrity of programs and even of the OS itself.The OS must allow portions of memory to be accessible in various ways byvarious users.

• Long-term storage: Many application programs require means for storing information for extended periods of time, after the computer has been powered down.

Typically, operating systems meet these requirements with virtual memory and file system facilities. The file system implements a long-term store, with information stored in named objects, called files. The file is a convenient concept for the programmer and is a useful unit of access control and protection for the OS.

Virtual memory is a facility that allows programs to address memory from a logical point of view, without regard to the amount of main memory physically available. Virtual memory was conceived to meet the requirement of having multiple user jobs reside in main memory concurrently, so that there would not be a hiatus between the execution of successive processes while one process was written out to secondary store and the successor process was read in. Because processes vary in size, if the processor switches among a number of processes, it is difficult to pack them compactly into main memory. Paging systems were introduced, which allow processes to be composed of a number of fixed-size blocks, called pages. A program references a word by means of a virtual address consisting of a page number and an offset within the page. Each page of a process may be located anywhere in main memory. The paging system provides for a dynamic mapping between the virtual address used in the program and a real address, or physical address, in main memory.
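The page-number/offset mapping described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any real memory-management unit works: the 4096-byte page size is assumed, and the page table is modeled as a plain dictionary from page number to frame number.

```python
from __future__ import annotations

PAGE_SIZE = 4096  # assumed page size in bytes; real systems vary


def split_virtual_address(vaddr: int, page_size: int = PAGE_SIZE) -> tuple[int, int]:
    """Split a virtual address into (page number, offset within the page)."""
    return divmod(vaddr, page_size)


def translate(vaddr: int, page_table: dict[int, int], page_size: int = PAGE_SIZE) -> int:
    """Map a virtual address to a physical address via a page table.

    page_table maps page numbers to frame numbers. Each page may sit in any
    frame, so consecutive pages need not occupy consecutive frames.
    """
    page, offset = split_virtual_address(vaddr, page_size)
    if page not in page_table:
        raise KeyError(f"page {page} has no frame mapping")
    return page_table[page] * page_size + offset


# Pages 0, 1, 2 of one process scattered across frames 7, 3, and 12.
page_table = {0: 7, 1: 3, 2: 12}
physical = translate(1 * PAGE_SIZE + 100, page_table)  # page 1, offset 100
print(hex(physical))  # 0x3064, i.e. frame 3 * 4096 + 100
```

The offset passes through unchanged; only the page number is replaced by a frame number, which is why pages can be placed anywhere in main memory.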

With dynamic mapping hardware available, the next logical step was to eliminate the requirement that all pages of a process reside in main memory simultaneously. All the pages of a process are maintained on disk. When a process is executing, some of its pages are in main memory. If reference is made to a page that is not in main memory, the memory management hardware detects this and arranges for the missing page to be loaded. Such a scheme is referred to as virtual memory and is depicted in Figure 2.9.
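A toy model of the demand-paging idea: every page of the process is assumed to live on disk, only a few fit in main memory at once, and referencing a non-resident page counts as a page fault that brings the page in. The class name, the three-frame memory, and the first-in, first-out eviction rule are illustrative assumptions chosen for simplicity, not the policy of any particular OS.

```python
from collections import deque


class DemandPager:
    """Toy demand-paging model with a fixed number of frames (FIFO eviction)."""

    def __init__(self, num_frames: int):
        self.num_frames = num_frames
        self.resident = deque()  # pages currently in main memory, oldest first
        self.faults = 0

    def reference(self, page: int) -> bool:
        """Reference a page; return True if the reference caused a page fault."""
        if page in self.resident:
            return False             # page already in main memory
        self.faults += 1             # the hardware would detect the missing page here
        if len(self.resident) == self.num_frames:
            self.resident.popleft()  # evict the oldest page to make room
        self.resident.append(page)   # "load" the page from disk
        return True


pager = DemandPager(num_frames=3)
for p in [0, 1, 2, 0, 3, 0, 4]:
    pager.reference(p)
print(pager.faults, list(pager.resident))  # 6 [3, 0, 4]
```

The process keeps running while only part of it is resident; faults occur only for pages that are referenced but absent.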

The processor hardware, together with the OS, provides the user with a “virtual processor” that has access to a virtual memory. This memory may be a linear address space or a collection of segments, which are variable-length blocks of contiguous addresses. In either case, programming language instructions can reference program and data locations in the virtual memory area. Process isolation can be achieved by giving each process a unique, nonoverlapping virtual memory. Memory sharing can be achieved by overlapping portions of two virtual memory spaces. Files are maintained in a long-term store. Files and portions of files may be copied into the virtual memory for manipulation by programs.
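Isolation and sharing can be pictured in terms of page tables: two processes are isolated when their mappings never reach the same physical frame, and they share memory when portions of their virtual spaces map to a common frame. The sketch below assumes a page table is a dictionary from page number to frame number and checks for that overlap; the three process tables are made up for illustration.

```python
from __future__ import annotations


def shared_frames(table_a: dict[int, int], table_b: dict[int, int]) -> set[int]:
    """Physical frames reachable from both processes' page tables."""
    return set(table_a.values()) & set(table_b.values())


def isolated(table_a: dict[int, int], table_b: dict[int, int]) -> bool:
    """Two processes are isolated when their mappings never meet in a frame."""
    return not shared_frames(table_a, table_b)


proc_a = {0: 5, 1: 9}
proc_b = {0: 2, 1: 6}
proc_c = {0: 4, 1: 9}  # C's page 1 maps the same frame as A's page 1

print(isolated(proc_a, proc_b))       # True
print(shared_frames(proc_a, proc_c))  # {9}
```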


Information Protection and Security

The growth in the use of time-sharing systems and, more recently, computer networks has brought with it a growth in concern for the protection of information. The nature of the threat that concerns an organization will vary greatly depending on the circumstances. However, there are some general-purpose tools that can be built into computers and operating systems that support a variety of protection and security mechanisms. In general, we are concerned with the problem of controlling access to computer systems and the information stored in them.

Much of the work in security and protection as it relates to operating systems can be roughly grouped into four categories:

• Availability: Concerned with protecting the system against interruption

• Confidentiality: Assures that users cannot read data for which access is unauthorized

• Data integrity: Protection of data from unauthorized modification

• Authenticity: Concerned with the proper verification of the identity of users and the validity of messages or data

Scheduling and Resource Management

A key responsibility of the OS is to manage the various resources available to it (main memory space, I/O devices, processors) and to schedule their use by the various active processes. Any resource allocation and scheduling policy must consider three factors:

• Fairness: Typically, we would like all processes that are competing for the use of a particular resource to be given approximately equal and fair access to that resource. This is especially so for jobs of the same class, that is, jobs of similar demands.

• Differential responsiveness: On the other hand, the OS may need to discriminate among different classes of jobs with different service requirements. The OS should attempt to make allocation and scheduling decisions to meet the total set of requirements. The OS should also make these decisions dynamically. For example, if a process is waiting for the use of an I/O device, the OS may wish to schedule that process for execution as soon as possible to free up the device for later demands from other processes.

• Efficiency: The OS should attempt to maximize throughput, minimize response time, and, in the case of time sharing, accommodate as many users as possible. These criteria conflict; finding the right balance for a particular situation is an ongoing problem for operating system research.

Figure 2.10 Virtual Memory Addressing

Scheduling and resource management are essentially operations-research problems, and the mathematical results of that discipline can be applied. In addition, measurement of system activity is important to be able to monitor performance and make adjustments.

Figure 2.11 suggests the major elements of the OS involved in the scheduling of processes and the allocation of resources in a multiprogramming environment. The OS maintains a number of queues, each of which is simply a list of processes waiting for some resource. The short-term queue consists of processes that are in main memory (or at least an essential minimum portion of each is in main memory) and are ready to run as soon as the processor is made available. Any one of these processes

Figure 2.11 Key Elements of an Operating System for Multiprogramming (service call handler, interrupt handler, short-term scheduler; long-term, short-term, and I/O queues)
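The queue structure in Figure 2.11 can be mimicked with a few first-in, first-out queues. In this simplified sketch the process names, the single disk queue, and the method names are hypothetical: a process moves from the long-term queue to the short-term (ready) queue, gives up the processor when it makes an I/O service call, and becomes ready again when the I/O-completion interrupt arrives.

```python
from collections import deque


class QueueModel:
    """Toy model of OS queues: long-term, short-term, and per-device I/O queues."""

    def __init__(self, devices):
        self.long_term = deque()                         # jobs waiting for admission
        self.short_term = deque()                        # ready processes in main memory
        self.io_queues = {d: deque() for d in devices}   # processes waiting on each device
        self.running = None

    def submit(self, proc):
        self.long_term.append(proc)

    def admit(self):
        """Long-term scheduling: move one job into main memory as ready."""
        if self.long_term:
            self.short_term.append(self.long_term.popleft())

    def dispatch(self):
        """Short-term scheduling: run the process at the head of the ready queue."""
        self.running = self.short_term.popleft() if self.short_term else None
        return self.running

    def request_io(self, device):
        """Service call from the running process: it waits on the device queue."""
        self.io_queues[device].append(self.running)
        self.running = None

    def io_complete(self, device):
        """Interrupt from I/O: the waiting process becomes ready again."""
        self.short_term.append(self.io_queues[device].popleft())


os_model = QueueModel(devices=["disk"])
for name in ["P1", "P2"]:
    os_model.submit(name)
    os_model.admit()
os_model.dispatch()           # P1 runs
os_model.request_io("disk")   # P1 waits for the disk
os_model.dispatch()           # P2 runs
os_model.io_complete("disk")  # P1 is ready again
print(os_model.running, list(os_model.short_term))  # P2 ['P1']
```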


2.4 DEVELOPMENTS LEADING TO MODERN OPERATING SYSTEMS

Over the years, there has been a gradual evolution of OS structure and capabilities. However, in recent years a number of new design elements have been introduced into both new operating systems and new releases of existing operating systems that create a major change in the nature of operating systems. These modern operating systems respond to new developments in hardware, new applications, and new security threats. Among the key hardware drivers are multiprocessor systems, greatly increased processor speed, high-speed network attachments, and increasing size and variety of memory storage devices. In the application arena, multimedia applications, Internet and Web access, and client/server computing have influenced OS design. With respect to security, Internet access to computers has greatly increased the potential threat, and increasingly sophisticated attacks, such as viruses, worms, and hacking techniques, have had a profound impact on OS design.

The rate of change in the demands on operating systems requires not just modifications and enhancements to existing architectures but new ways of organizing the OS. A wide range of different approaches and design elements has been tried in both experimental and commercial operating systems, but much of the work fits into the following categories:

• Microkernel architecture

• Multithreading

• Symmetric multiprocessing

• Distributed operating systems

• Object-oriented design

Most operating systems, until recently, featured a large monolithic kernel. Most of what is thought of as OS functionality is provided in these large kernels, including scheduling, file system, networking, device drivers, memory management, and more. Typically, a monolithic kernel is implemented as a single process, with all elements sharing the same address space. A microkernel architecture assigns only a few essential functions to the kernel, including address spaces, interprocess communication (IPC), and basic scheduling. Other OS services are provided by processes, sometimes called servers, that run in user mode and are treated like any other application by the microkernel. This approach decouples kernel and server development. Servers may be customized to specific application or environment requirements. The microkernel approach simplifies implementation, provides flexibility, and is well suited to a distributed environment. In essence, a microkernel interacts with local and remote server processes in the same way, facilitating construction of distributed systems.
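To make the decoupling of kernel and servers concrete, here is a toy sketch in which the "microkernel" does nothing but deliver messages and a file service runs as an ordinary registered server. The class names, message format, and operations are hypothetical, and everything runs in one Python process; a real microkernel enforces the user/kernel boundary with hardware protection.

```python
from __future__ import annotations

from typing import Callable


class Microkernel:
    """Toy microkernel whose only service here is message passing (IPC)."""

    def __init__(self) -> None:
        self.servers: dict[str, Callable[[dict], dict]] = {}

    def register(self, name: str, handler: Callable[[dict], dict]) -> None:
        """Attach a server's message handler under a name."""
        self.servers[name] = handler

    def send(self, dest: str, message: dict) -> dict:
        """Deliver a message to the named server and return its reply."""
        handler = self.servers.get(dest)
        if handler is None:
            return {"error": f"no server named {dest}"}
        return handler(message)


class FileServer:
    """Hypothetical user-mode file service that keeps files in a dict."""

    def __init__(self) -> None:
        self.files: dict[str, str] = {}

    def handle(self, message: dict) -> dict:
        if message.get("op") == "write":
            self.files[message["name"]] = message["data"]
            return {"ok": True}
        if message.get("op") == "read":
            return {"ok": True, "data": self.files.get(message["name"], "")}
        return {"error": "unknown op"}


kernel = Microkernel()
kernel.register("fs", FileServer().handle)  # servers can be replaced without changing the kernel
kernel.send("fs", {"op": "write", "name": "notes.txt", "data": "hello"})
print(kernel.send("fs", {"op": "read", "name": "notes.txt"}))
```

Because clients only know a server's name and message format, the kernel would treat a remote server exactly like a local one, which is the property the text highlights for distributed systems.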

Multithreading is a technique in which a process, executing an application, is divided into threads that can run concurrently. We can make the following distinction:

• Thread: A dispatchable unit of work. It includes a processor context (which includes the program counter and stack pointer) and its own data area for a stack (to enable subroutine branching). A thread executes sequentially and is interruptible so that the processor can turn to another thread.

• Process: A collection of one or more threads and associated system resources (such as memory containing both code and data, open files, and devices). This corresponds closely to the concept of a program in execution. By breaking a single application into multiple threads, the programmer has great control over the modularity of the application and the timing of application-related events.

Multithreading is useful for applications that perform a number of essentially independent tasks that do not need to be serialized. An example is a database server that listens for and processes numerous client requests. With multiple threads running within the same process, switching back and forth among threads involves less processor overhead than a major process switch between different processes. Threads are also useful for structuring processes that are part of the OS kernel, as described in subsequent chapters.
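The database-server example can be sketched with Python's threading module: several threads inside one process handle hypothetical client requests and update a table that all of them share, because threads of a process share its memory. The handler and the request counts are assumptions for illustration. Note that CPython threads interleave rather than run CPU-bound code truly in parallel, so this shows the shared structure, not a speedup.

```python
import threading

counts = {}                     # shared by every thread in the process
counts_lock = threading.Lock()  # threads share memory, so updates are guarded


def handle_request(client_id: int, n_queries: int) -> None:
    """Hypothetical request handler: record n_queries for one client."""
    for _ in range(n_queries):
        with counts_lock:
            counts[client_id] = counts.get(client_id, 0) + 1


threads = [threading.Thread(target=handle_request, args=(cid, 1000)) for cid in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sum(counts.values()))  # 4000
```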

Until recently, virtually all single-user personal computers and workstations contained a single general-purpose microprocessor. As demands for performance increase and as the cost of microprocessors continues to drop, vendors have introduced computers with multiple microprocessors. To achieve greater efficiency and reliability, one technique is to employ symmetric multiprocessing (SMP), a term that refers to a computer hardware architecture and also to the OS behavior that exploits that architecture. A symmetric multiprocessor can be defined as a standalone computer system with the following characteristics:

1. There are multiple processors.

2. These processors share the same main memory and I/O facilities, interconnected by a communications bus or other internal connection scheme.

3. All processors can perform the same functions (hence the term symmetric).

In recent years, systems with multiple processors on a single chip have become widely used, referred to as chip multiprocessor systems. Many of the design issues are the same, whether dealing with a chip multiprocessor or a multiple-chip SMP.

The OS of an SMP schedules processes or threads across all of the processors. SMP has a number of potential advantages over uniprocessor architecture, including the following:

• Performance: If the work to be done by a computer can be organized so that some portions of the work can be done in parallel, then a system with multiple processors will yield greater performance than one with a single processor of the same type. This is illustrated in Figure 2.12. With multiprogramming on a single processor, only one process can execute at a time; meanwhile all other processes are waiting for the processor. With multiprocessing, more than one process can be running simultaneously, each on a different processor.

• Availability: In a symmetric multiprocessor, because all processors can perform the same functions, the failure of a single processor does not halt the system. Instead, the system can continue to function at reduced performance.

• Incremental growth: A user can enhance the performance of a system by adding an additional processor.
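The performance advantage can be illustrated with a simple simulation instead of real hardware: independent jobs are handed to whichever processor becomes free first, and we compare when the last job finishes on one, two, and three processors. The job lengths and the greedy assignment rule are assumptions for illustration; real SMP schedulers also weigh priorities, cache affinity, and blocking.

```python
from __future__ import annotations

import heapq


def finish_time(job_lengths: list[int], n_processors: int) -> int:
    """Time when all jobs finish if each job goes to the earliest-free processor."""
    free_at = [0] * n_processors  # time at which each processor next becomes free
    heapq.heapify(free_at)
    for length in job_lengths:
        start = heapq.heappop(free_at)  # earliest available processor
        heapq.heappush(free_at, start + length)
    return max(free_at)


jobs = [4, 3, 3, 2, 2, 2]
print(finish_time(jobs, 1))  # 16: one processor runs the jobs one after another
print(finish_time(jobs, 2))  # 8
print(finish_time(jobs, 3))  # 6
```

Adding a processor shortens the finishing time only because the jobs are independent; work that must be serialized would not benefit.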


update for Server 2003, Microsoft introduced support for the AMD64 processor architecture for both desktops and servers.

In 2007, the latest desktop version of Windows was released, known as Windows Vista. Vista supports both the Intel x86 and AMD x64 architectures. The main features of the release were changes to the GUI and many security improvements. The corresponding server release is Windows Server 2008.

Single-User Multitasking

Windows (from Windows 2000 onward) is a significant example of what has become the new wave in microcomputer operating systems (other examples are Linux and MacOS). Windows was driven by a need to exploit the processing capabilities of today’s 32-bit and 64-bit microprocessors, which rival mainframes of just a few years ago in speed, hardware sophistication, and memory capacity.

One of the most significant features of these new operating systems is that, although they are still intended for support of a single interactive user, they are multitasking operating systems. Two main developments have triggered the need for multitasking on personal computers, workstations, and servers. First, with the increased speed and memory capacity of microprocessors, together with the support for virtual memory, applications have become more complex and interrelated. For example, a user may wish to employ a word processor, a drawing program, and a spreadsheet application simultaneously to produce a document. Without multitasking, if a user wishes to create a drawing and paste it into a word processing document, the following steps are required:

1. Open the drawing program.

2. Create the drawing and save it in a file or on a temporary clipboard.

3. Close the drawing program.

4. Open the word processing program.

5. Insert the drawing in the correct location.

If any changes are desired, the user must close the word processing program, open the drawing program, edit the graphic image, save it, close the drawing program, open the word processing program, and insert the updated image. This becomes tedious very quickly. As the services and capabilities available to users become more powerful and varied, the single-task environment becomes more clumsy and user unfriendly. In a multitasking environment, the user opens each application as needed, and leaves it open. Information can be moved around among a number of applications easily. Each application has one or more open windows, and a graphical interface with a pointing device such as a mouse allows the user to navigate quickly in this environment.

A second motivation for multitasking is the growth of client/server computing. With client/server computing, a personal computer or workstation (client) and a host system (server) are used jointly to accomplish a particular application. The two are linked, and each is assigned that part of the job that suits its capabilities. Client/server can be achieved in a local area network of personal computers and servers or by means of a link between a user system and a large host such as a mainframe. An


Windows is not a full-blown object-oriented OS. It is not implemented in an object-oriented language. Data structures that reside completely within one Executive component are not represented as objects. Nevertheless, Windows illustrates the power of object-oriented technology and represents the increasing trend toward the use of this technology in OS design.

2.6 TRADITIONAL UNIX SYSTEMS

History

The history of UNIX is an oft-told tale and will not be repeated in great detail here. Instead, we provide a brief summary.

UNIX was initially developed at Bell Labs and became operational on a PDP-7 in 1970. Some of the people involved at Bell Labs had also participated in the time-sharing work being done at MIT’s Project MAC. That project led to the development of first CTSS and then Multics. Although it is common to say that the original UNIX was a scaled-down version of Multics, the developers of UNIX actually claimed to be more influenced by CTSS [RITC78]. Nevertheless, UNIX incorporated many ideas from Multics.

Work on UNIX at Bell Labs, and later elsewhere, produced a series of versions of UNIX. The first notable milestone was porting the UNIX system from the PDP-7 to the PDP-11. This was the first hint that UNIX would be an operating system for all computers. The next important milestone was the rewriting of UNIX in the programming language C. This was an unheard-of strategy at the time. It was generally felt that something as complex as an operating system, which must deal with time-critical events, had to be written exclusively in assembly language. Reasons for this attitude include the following:

• Memory (both RAM and secondary store) was small and expensive by today’s standards, so effective use was important. This included various techniques for overlaying memory with different code and data segments, and self-modifying code.

• Even though compilers had been available since the 1950s, the computer industry was generally skeptical of the quality of automatically generated code. With resource capacity small, efficient code, both in terms of time and space, was essential.

• Processor and bus speeds were relatively slow, so saving clock cycles could make a substantial difference in execution time.

The C implementation demonstrated the advantages of using a high-level language for most if not all of the system code. Today, virtually all UNIX implementations are written in C.

These early versions of UNIX were popular within Bell Labs. In 1974, the UNIX system was described in a technical journal for the first time [RITC74]. This spurred great interest in the system. Licenses for UNIX were provided to commercial institutions as well as universities. The first widely available version outside Bell Labs was Version 6, in 1976. The follow-on Version 7, released in 1978, is the ancestor of most modern UNIX systems. The most important of the non-AT&T systems to be developed was done at the University of California at Berkeley, called UNIX BSD (Berkeley Software Distribution), running first on PDP and then VAX computers. AT&T continued to develop and refine the system. By 1982, Bell Labs had combined several AT&T variants of UNIX into a single system, marketed commercially as UNIX System III. A number of features were later added to the operating system to produce UNIX System V.

Description

Figure 2.14 provides a general description of the classic UNIX architecture. The underlying hardware is surrounded by the OS software. The OS is often called the system kernel, or simply the kernel, to emphasize its isolation from the user and applications. It is the UNIX kernel that we will be concerned with in our use of UNIX as an example in this book. UNIX also comes equipped with a number of user services and interfaces that are considered part of the system. These can be grouped into the shell, other interface software, and the components of the C compiler (compiler, assembler, loader). The layer outside of this consists of user applications and the user interface to the C compiler.

A closer look at the kernel is provided in Figure 2.15. User programs can invoke OS services either directly or through library programs. The system call interface is the boundary with the user and allows higher-level software to gain access to specific kernel functions. At the other end, the OS contains primitive routines that interact directly with the hardware. Between these two interfaces, the system is divided into two main parts, one concerned with process control and the other concerned with file management and I/O. The process control subsystem is responsible

Figure 2.14 General UNIX Architecture (layers from the inside out: hardware, kernel, system call interface, UNIX commands and libraries, user-written applications)


2.7 MODERN UNIX SYSTEMS

As UNIX evolved, the number of different implementations proliferated, each providing some useful features. There was a need to produce a new implementation that unified many of the important innovations, added other modern OS design features, and produced a more modular architecture. Typical of the modern UNIX kernel is the architecture depicted in Figure 2.16. There is a small core of facilities, written in a modular fashion, that provide functions and services needed by a number of OS processes. Each of the outer circles represents functions and an interface that may be implemented in a variety of ways.

We now turn to some examples of modern UNIX systems.

System V Release 4 (SVR4)

SVR4, developed jointly by AT&T and Sun Microsystems, combines features from SVR3, 4.3BSD, Microsoft Xenix System V, and SunOS. It was almost a total rewrite

Figure 2.16 Modern UNIX Kernel (common facilities surrounded by the exec switch, virtual memory framework, vnode/vfs interface, block device switch, Streams, and scheduler framework)


Table 2.7 Some Linux System Calls

Filesystem related

close Close a file descriptor.

link Make a new name for a file.

open Open and possibly create a file or device.

read Read from file descriptor.

write Write to file descriptor.

Process related

execve Execute program.

exit Terminate the calling process.

getpid Get process identification.

setuid Set user identity of the current process.

ptrace Provides a means by which a parent process may observe and control the execution of another process, and examine and change its core image and registers.

Scheduling related

sched_getparam Retrieves the scheduling parameters associated with the scheduling policy for the process identified by pid.

sched_get_priority_max Returns the maximum priority value that can be used with the scheduling algorithm identified by policy.

sched_setscheduler Sets both the scheduling policy (e.g., FIFO) and the associated parameters for the process pid.

sched_rr_get_interval Writes into the timespec structure pointed to by the parameter tp the round-robin time quantum for the process pid.

sched_yield A process can relinquish the processor voluntarily without blocking via this system call. The process will then be moved to the end of the queue for its static priority and a new process gets to run.

Interprocess Communication (IPC) related

msgrcv A message buffer structure is allocated to receive a message. The system call then reads a message from the message queue specified by msqid into the newly created message buffer.

semctl Performs the control operation specified by cmd on the semaphore set semid.

semop Performs operations on selected members of the semaphore set semid.

shmat Attaches the shared memory segment identified by shmid to the data segment of the calling process.

shmctl Allows the user to receive information on a shared memory segment, set the owner, group, and permissions of a shared memory segment, or destroy a segment.

• System calls: The system call is the means by which a process requests a specific kernel service. There are several hundred system calls, which can be roughly grouped into six categories: filesystem, process, scheduling, interprocess communication, socket (networking), and miscellaneous. Table 2.7 defines a few examples in each category.
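Python's os module provides thin wrappers over several of the filesystem and process calls in Table 2.7, which makes them easy to try on a POSIX system. A minimal sketch; the file names are arbitrary, and on modern Linux the C library may issue a related call under the hood (for example openat instead of open).

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "data.txt")

    fd = os.open(path, os.O_CREAT | os.O_WRONLY, 0o644)  # open (and create)
    os.write(fd, b"hello, kernel\n")                       # write
    os.close(fd)                                           # close

    alias = os.path.join(d, "alias.txt")
    os.link(path, alias)             # link: make a new name for the same file
    links = os.stat(path).st_nlink   # the file now has two names

    fd = os.open(alias, os.O_RDONLY)
    data = os.read(fd, 100)          # read through the new name
    os.close(fd)

    pid = os.getpid()                # getpid: identify the calling process

print(links, data, pid)
```

Both names refer to one underlying file, which is why the data written through the first name is read back through the second.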


Chapter Two:

File Management

Files, File Systems, and File Management Systems

File Organization and Access

File Directories and Structure

File Sharing and Secondary Storage Management

FILE MANAGEMENT

12.1 Overview: Files and File Systems; File Structure; File Management Systems

12.2 File Organization and Access: The Pile; The Sequential File; The Indexed Sequential File; The Indexed File; The Direct or Hashed File

12.3 File Directories: Contents; Structure; Naming

12.4 File Sharing: Access Rights; Simultaneous Access

12.5 Record Blocking

12.6 Secondary Storage Management: File Allocation; Free Space Management; Volumes; Reliability

12.7 File System Security

12.8 UNIX File Management: Inodes; File Allocation; Directories; Volume Structure; Traditional UNIX File Access Control; Access Control Lists in UNIX

12.9 LINUX Virtual File System: The Superblock Object; The Inode Object; The Dentry Object; The File Object

12.10 Windows File System: Key Features of NTFS; NTFS Volume and File Structure; Recoverability

12.11 Summary

12.12 Recommended Reading

12.13 Key Terms, Review Questions, and Problems


In most applications, the file is the central element. With the exception of real-time applications and some other specialized applications, the input to the application is by means of a file, and in virtually all applications, output is saved in a file for long-term storage and for later access by the user and by other programs.

Files have a life outside of any individual application that uses them for input and/or output. Users wish to be able to access files, save them, and maintain the integrity of their contents. To aid in these objectives, virtually all operating systems provide file management systems. Typically, a file management system consists of system utility programs that run as privileged applications. However, at the very least, a file management system needs special services from the operating system; at the most, the entire file management system is considered part of the operating system. Thus, it is appropriate to consider the basic elements of file management in this book.

We begin with an overview, followed by a look at various file organization schemes. Although file organization is generally beyond the scope of the operating system, it is essential to have a general understanding of the common alternatives to appreciate some of the design tradeoffs involved in file management. The remainder of this chapter looks at other topics in file management.

12.1 OVERVIEW

Files and File Systems

From the user’s point of view, one of the most important parts of an operating system is the file system. The file system provides the resource abstractions typically associated with secondary storage. The file system permits users to create data collections, called files, with desirable properties, such as

• Long-term existence: Files are stored on disk or other secondary storage and do not disappear when a user logs off.

• Sharable between processes: Files have names and can have associated access permissions that permit controlled sharing.

• Structure: Depending on the file system, a file can have an internal structure that is convenient for particular applications. In addition, files can be organized into hierarchical or more complex structure to reflect the relationships among files.

Any file system provides not only a means to store data organized as files, but a collection of functions that can be performed on files. Typical operations include the following:

• Create: A new file is defined and positioned within the structure of files.

• Delete: A file is removed from the file structure and destroyed.

• Open: An existing file is declared to be “opened” by a process, allowing the process to perform functions on the file.

• Close: The file is closed with respect to a process, so that the process no longer may perform functions on the file, until the process opens the file again.


• Read: A process reads all or a portion of the data in a file.

• Write: A process updates a file, either by adding new data that expands the size of the file or by changing the values of existing data items in the file.

Typically, a file system maintains a set of attributes associated with the file. These include owner, creation time, time last modified, access privileges, and so on.
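Both the basic operations and the attributes can be observed from Python on a POSIX system; in this sketch the file name is arbitrary. One caveat: POSIX stat reports the owner, the last-modification time, and the permission bits, but not portably a creation time (on UNIX systems st_ctime is the time of the last status change).

```python
import os
import stat
import tempfile

with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "report.txt")

    with open(path, "w") as f:   # create, then open for writing
        f.write("first line\n")  # write
    # leaving the with-block closes the file

    with open(path, "a") as f:   # open again; this write expands the file
        f.write("second line\n")

    with open(path) as f:        # open for reading
        lines = f.readlines()    # read

    info = os.stat(path)                  # attributes kept by the file system
    owner_uid = info.st_uid               # owner (numeric user id)
    modified = info.st_mtime              # time last modified, seconds since the epoch
    perms = stat.filemode(info.st_mode)   # access privileges, e.g. "-rw-r--r--"

    os.remove(path)                       # delete
    exists_after = os.path.exists(path)

print(lines, owner_uid, perms, exists_after)
```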

File Structure

Four terms are in common use when discussing files:

• Field

• Record

• File

• Database

A field is the basic element of data. An individual field contains a single value, such as an employee’s last name, a date, or the value of a sensor reading. It is characterized by its length and data type (e.g., ASCII string, decimal). Depending on the file design, fields may be fixed length or variable length. In the latter case, the field often consists of two or three subfields: the actual value to be stored, the name of the field, and, in some cases, the length of the field. In other cases of variable-length fields, the length of the field is indicated by the use of special demarcation symbols between fields.

A record is a collection of related fields that can be treated as a unit by some application program. For example, an employee record would contain such fields as name, social security number, job classification, date of hire, and so on. Again, depending on design, records may be of fixed length or variable length. A record will be of variable length if some of its fields are of variable length or if the number of fields may vary. In the latter case, each field is usually accompanied by a field name. In either case, the entire record usually includes a length field.
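Python's struct module can illustrate fixed-length versus variable-length layouts. The employee layouts below are hypothetical: a fixed-length record with a 20-byte name field and a 4-byte employee number, and a variable-length record in which each field carries a 2-byte length subfield and the whole record begins with a 2-byte length field, one of the designs mentioned above.

```python
import struct

# Fixed-length record: 20-byte name field + 4-byte unsigned employee number.
FIXED = struct.Struct("<20sI")


def pack_fixed(name: str, emp_no: int) -> bytes:
    return FIXED.pack(name.encode(), emp_no)  # name is null-padded or truncated to 20 bytes


def unpack_fixed(raw: bytes) -> tuple:
    name, emp_no = FIXED.unpack(raw)
    return name.rstrip(b"\0").decode(), emp_no


# Variable-length record: a 2-byte total length, then each field as
# (2-byte length subfield, value bytes).
def pack_variable(*fields: str) -> bytes:
    body = b"".join(struct.pack("<H", len(f.encode())) + f.encode() for f in fields)
    return struct.pack("<H", len(body)) + body


def unpack_variable(raw: bytes) -> list:
    (total,) = struct.unpack_from("<H", raw, 0)
    pos, end, fields = 2, 2 + total, []
    while pos < end:
        (n,) = struct.unpack_from("<H", raw, pos)  # length subfield
        fields.append(raw[pos + 2 : pos + 2 + n].decode())
        pos += 2 + n
    return fields


fixed = pack_fixed("Smith", 1024)
var = pack_variable("Smith", "Engineer", "2017-02-01")
print(len(fixed), unpack_fixed(fixed))  # 24 ('Smith', 1024)
print(len(var), unpack_variable(var))   # 31 ['Smith', 'Engineer', '2017-02-01']
```

Every fixed-length record occupies the same 24 bytes whatever the name, whereas the variable-length record grows with its contents and needs the length fields to be parsed.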

A file is a collection of similar records. The file is treated as a single entity by users and applications and may be referenced by name. Files have file names and may be created and deleted. Access control restrictions usually apply at the file level. That is, in a shared system, users and programs are granted or denied access to entire files. In some more sophisticated systems, such controls are enforced at the record or even the field level.

Some file systems are structured only in terms of fields, not records. In that case, a file is a collection of fields.

A database is a collection of related data. The essential aspects of a database are that the relationships that exist among elements of data are explicit and that the database is designed for use by a number of different applications. A database may contain all of the information related to an organization or project, such as a business or a scientific study. The database itself consists of one or more types of files. Usually, there is a separate database management system that is independent of the operating system, although that system may make use of some file management programs.


Users and applications wish to make use of files. Typical operations that must be supported include the following:

• Retrieve_All: Retrieve all the records of a file. This will be required for an application that must process all of the information in the file at one time. For example, an application that produces a summary of the information in the file would need to retrieve all records. This operation is often equated with the term sequential processing, because all of the records are accessed in sequence.

• Retrieve_One: This requires the retrieval of just a single record. Interac-tive, transaction-oriented applications need this operation.

• Retrieve_Next: This requires the retrieval of the record that is “next” insome logical sequence to the most recently retrieved record. Some interactiveapplications, such as filling in forms, may require such an operation. A pro-gram that is performing a search may also use this operation.

• Retrieve_Previous: Similar to Retrieve_Next, but in this case therecord that is “previous” to the currently accessed record is retrieved.

• Insert_One: Insert a new record into the file. It may be necessary that thenew record fit into a particular position to preserve a sequencing of the file.

• Delete_One: Delete an existing record. Certain linkages or other data struc-tures may need to be updated to preserve the sequencing of the file.

• Update_One: Retrieve a record, update one or more of its fields, and rewritethe updated record back into the file. Again, it may be necessary to preservesequencing with this operation. If the length of the record has changed, the up-date operation is generally more difficult than if the length is preserved.

• Retrieve_Few: Retrieve a number of records. For example, an applicationor user may wish to retrieve all records that satisfy a certain set of criteria.
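The operations above can be collected into a single interface. The sketch below is a hypothetical in-memory Python model. The class `RecordFile` and its method names are illustrative and do not belong to any real file system API. The model keeps records in key order, which gives Retrieve_Next and Retrieve_Previous a well-defined sequence.

```python
import bisect
from typing import Callable, Iterator, Optional

Record = dict  # a record is modelled as a dict of field name -> value

class RecordFile:
    """Hypothetical in-memory model of a file of records kept in key order.

    It only illustrates the listed operations; a real file system would
    carry them out on blocks in secondary storage.
    """

    def __init__(self, key_field: str):
        self.key_field = key_field
        self.keys: list = []                # sorted keys, parallel to self.records
        self.records: list[Record] = []
        self.current: Optional[int] = None  # index of the most recently retrieved record

    def retrieve_all(self) -> Iterator[Record]:
        # Sequential processing: every record, in key order.
        yield from self.records

    def retrieve_one(self, key) -> Optional[Record]:
        i = bisect.bisect_left(self.keys, key)
        if i < len(self.keys) and self.keys[i] == key:
            self.current = i
            return self.records[i]
        return None

    def retrieve_next(self) -> Optional[Record]:
        if self.current is None or self.current + 1 >= len(self.records):
            return None
        self.current += 1
        return self.records[self.current]

    def retrieve_previous(self) -> Optional[Record]:
        if self.current is None or self.current == 0:
            return None
        self.current -= 1
        return self.records[self.current]

    def insert_one(self, record: Record) -> None:
        # The new record must go into its key position to preserve the sequencing.
        key = record[self.key_field]
        i = bisect.bisect_left(self.keys, key)
        if i < len(self.keys) and self.keys[i] == key:
            raise KeyError(f"duplicate key {key!r}")
        self.keys.insert(i, key)
        self.records.insert(i, record)
        self.current = None  # positions shifted; forget the current record

    def delete_one(self, key) -> bool:
        i = bisect.bisect_left(self.keys, key)
        if i < len(self.keys) and self.keys[i] == key:
            del self.keys[i]
            del self.records[i]
            self.current = None
            return True
        return False

    def update_one(self, key, **changes) -> bool:
        if self.key_field in changes:
            raise ValueError("changing the key would break the sequencing; delete and reinsert")
        record = self.retrieve_one(key)
        if record is None:
            return False
        record.update(changes)  # rewrite the updated record in place
        return True

    def retrieve_few(self, predicate: Callable[[Record], bool]) -> list[Record]:
        return [r for r in self.records if predicate(r)]
```

Insert_One and Delete_One must both locate the correct position to preserve the key sequence. The file organizations surveyed in Section 12.2 each trade off this cost in a different way.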

The nature of the operations that are most commonly performed on a file will influence the way the file is organized, as discussed in Section 12.2.

It should be noted that not all file systems exhibit the sort of structure discussed in this subsection. On UNIX and UNIX-like systems, the basic file structure is just a stream of bytes. For example, a C program is stored as a file but does not have physical fields, records, and so on.

File Management Systems

A file management system is that set of system software that provides services to users and applications in the use of files. Typically, the only way that a user or application may access files is through the file management system. This relieves the user or programmer of the necessity of developing special-purpose software for each application and provides the system with a consistent, well-defined means of controlling its most important asset. [GROS86] suggests the following objectives for a file management system:

• To meet the data management needs and requirements of the user, which include storage of data and the ability to perform the aforementioned operations

• To guarantee, to the extent possible, that the data in the file are valid

• To optimize performance, both from the system point of view in terms of overall throughput and from the user’s point of view in terms of response time

• To provide I/O support for a variety of storage device types

• To minimize or eliminate the potential for lost or destroyed data

• To provide a standardized set of I/O interface routines to user processes

• To provide I/O support for multiple users, in the case of multiple-user systems

With respect to the first point, meeting user requirements, the extent of such requirements depends on the variety of applications and the environment in which the computer system will be used. For an interactive, general-purpose system, the following constitute a minimal set of requirements:

1. Each user should be able to create, delete, read, write, and modify files.
2. Each user may have controlled access to other users’ files.
3. Each user may control what types of accesses are allowed to the user’s files.
4. Each user should be able to restructure the user’s files in a form appropriate to the problem.
5. Each user should be able to move data between files.
6. Each user should be able to back up and recover the user’s files in case of damage.
7. Each user should be able to access his or her files by name rather than by numeric identifier.

These objectives and requirements should be kept in mind throughout our discussion of file management systems.

File System Architecture

One way of getting a feel for the scope of file management is to look at a depiction of a typical software organization, as suggested in Figure 12.1. Of course, different systems will be organized differently, but this

[Figure 12.1 File System Software Architecture: layers from the user program down through the file organizations (pile, sequential, indexed sequential, indexed, hashed), logical I/O, the basic I/O supervisor, and the basic file system, to the disk and tape device drivers]


12.2 FILE ORGANIZATION AND ACCESS

In this section, we use the term file organization to refer to the logical structuring of the records as determined by the way in which they are accessed. The physical organization of the file on secondary storage depends on the blocking strategy and the file allocation strategy, issues dealt with later in this chapter.

In choosing a file organization, several criteria are important:

• Short access time
• Ease of update
• Economy of storage
• Simple maintenance
• Reliability

The relative priority of these criteria will depend on the applications that will use the file. For example, if a file is only to be processed in batch mode, with all of the records accessed every time, then rapid access for retrieval of a single record is of minimal concern. A file stored on CD-ROM will never be updated, and so ease of update is not an issue.

These criteria may conflict. For example, for economy of storage, there should be minimum redundancy in the data. On the other hand, redundancy is a primary means of increasing the speed of access to data. An example of this is the use of indexes.

The number of alternative file organizations that have been implemented or just proposed is unmanageably large, even for a book devoted to file systems. In this brief survey, we will outline five fundamental organizations. Most structures used in actual systems either fall into one of these categories or can be implemented with a combination of these organizations. The five organizations, the first four of which are depicted in Figure 12.3, are as follows:

• The pile
• The sequential file
• The indexed sequential file
• The indexed file
• The direct, or hashed, file

Table 12.1 summarizes relative performance aspects of these five organizations.¹

¹ The table employs the “big-O” notation, used for characterizing the time complexity of algorithms. Appendix D explains this notation.

The Pile

The least-complicated form of file organization may be termed the pile. Data are collected in the order in which they arrive. Each record consists of one burst of data. The purpose of the pile is simply to accumulate the mass of data and save it. Records may have different fields, or similar fields in different orders. Thus, each field should be self-describing, including a field name as well as a value. The length of each field must be implicitly indicated by delimiters, explicitly included as a subfield, or known as default for that field type.

Because there is no structure to the pile file, record access is by exhaustive search. That is, if we wish to find a record that contains a particular field with a particular value, it is necessary to examine each record in the pile until the desired record is found or the entire file has been searched. If we wish to find all records that contain a particular field or contain that field with a particular value, then the entire file must be searched.
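Below is a minimal Python sketch of exhaustive search over a pile. It assumes each record is a dictionary of self-describing field name/value pairs, and the records need not share the same fields. The functions `find_first` and `find_all` and the sample data are hypothetical and serve only as illustration.

```python
from typing import Any, Optional

# A pile: records kept in arrival (chronological) order. Each record is a
# set of self-describing name/value pairs; records need not share fields.
Record = dict[str, Any]

pile: list[Record] = [
    {"name": "Sensor-7", "temp": 21.5},
    {"time": "08:00", "name": "Sensor-2"},
    {"name": "Sensor-7", "humidity": 40},
]

_ANY = object()  # sentinel: "any value, as long as the field is present"

def find_first(pile: list[Record], field: str, value: Any) -> Optional[Record]:
    """Return the first record whose `field` equals `value`, scanning from the start."""
    for record in pile:  # worst case: every record is examined
        if field in record and record[field] == value:
            return record
    return None

def find_all(pile: list[Record], field: str, value: Any = _ANY) -> list[Record]:
    """Return every record containing `field` (optionally with a given value).

    There is no way to stop early: the whole pile must always be searched.
    """
    return [r for r in pile if field in r and (value is _ANY or r[field] == value)]
```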

Pile files are encountered when data are collected and stored prior to processing or when data are not easy to organize. This type of file uses space well when the stored data vary in size and structure, is perfectly adequate for exhaustive searches, and is easy to update. However, beyond these limited uses, this type of file is unsuitable for most applications.

[Figure 12.3 Common File Organizations: (a) pile file: variable-length records, variable set of fields, chronological order; (b) sequential file: fixed-length records, fixed set of fields in fixed order, sequential order based on key field; (c) indexed sequential file: index levels 1 to n, main file, overflow file; (d) indexed file: exhaustive and partial indexes over a primary file of variable-length records]

The Sequential File

The most common form of file structure is the sequential file. In this type of file, a fixed format is used for records. All records are of the same length, consisting of the same number of fixed-length fields in a particular order. Because the length and position of each field are known, only the values of fields need to be stored; the field name and length for each field are attributes of the file structure.

One particular field, usually the first field in each record, is referred to as the key field. The key field uniquely identifies the record; thus key values for different records are always different. Further, the records are stored in key sequence: alphabetical order for a text key, and numerical order for a numerical key.
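The sketch below shows why fixed-length records stored in key sequence are convenient. It assumes a hypothetical fixed format: a 4-byte unsigned key followed by a 20-byte name. Record i then starts at byte offset i * RECORD.size. A file held in memory can therefore be binary-searched on the key field rather than scanned. The names `RECORD`, `pack_file`, `read_record`, and `find` are illustrative only.

```python
import struct
from typing import Optional

# Hypothetical fixed format (illustration only): a 4-byte unsigned key followed
# by a 20-byte, NUL-padded name. Field names and lengths belong to the file
# structure, so only the values are stored.
RECORD = struct.Struct(">I20s")

def pack_file(records: list[tuple[int, str]]) -> bytes:
    """Store the records in key sequence, one fixed-length slot after another."""
    out = bytearray()
    for key, name in sorted(records):
        out += RECORD.pack(key, name.encode("utf-8"))
    return bytes(out)

def read_record(data: bytes, i: int) -> tuple[int, str]:
    # Every record has the same length, so record i starts at i * RECORD.size.
    key, raw = RECORD.unpack_from(data, i * RECORD.size)
    return key, raw.rstrip(b"\x00").decode("utf-8")

def find(data: bytes, key: int) -> Optional[str]:
    """Binary search on the key field; valid only because records are in key order."""
    lo, hi = 0, len(data) // RECORD.size - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        mid_key, name = read_record(data, mid)
        if mid_key == key:
            return name
        if mid_key < key:
            lo = mid + 1
        else:
            hi = mid - 1
    return None
```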

Sequential files are typically used in batch applications and are generally optimum for such applications if they involve the processing of all the records (e.g., a billing or payroll application). The sequential file organization is the only one that is easily stored on tape as well as disk.

Table 12.1 Grades of Performance for Five Basic File Organizations [WIED87]

File method | Space: variable | Space: fixed | Update, record size: equal | Update, record size: greater | Retrieval: single record | Retrieval: subset | Retrieval: exhaustive
Pile | A | B | A | E | E | D | B
Sequential | F | A | D | F | F | D | A
Indexed sequential | F | B | B | D | B | D | B
Indexed | B | C | C | C | A | B | D
Hashed | F | B | B | F | B | F | E

A = Excellent, well suited to this purpose ≈ O(r)
B = Good ≈ O(o × r)
C = Adequate ≈ O(r log n)
D = Requires some extra effort ≈ O(n)
E = Possible with extreme effort ≈ O(r × n)
F = Not reasonable for this purpose ≈ O(n^k) with k > 1

where
r = size of the result
o = number of records that overflow
n = number of records in file

For interactive applications that involve queries and/or updates of individual records, the sequential file provides poor performance. Access requires the sequential search of the file for a key match. If the entire file, or a large portion of the file, can be brought into main memory at one time, more efficient search techniques are possible. Nevertheless, considerable processing and delay are encountered to access a record in a large sequential file. Additions to the file also present problems. Typically, a sequential file is stored in simple sequential ordering of the records within blocks. That is, the physical organization of the file on tape or disk directly matches the logical organization of the file. In this case, the usual procedure is to place new records in a separate pile file, called a log file or transaction file. Periodically, a batch update is performed that merges the log file with the master file to produce a new file in correct key sequence.
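The periodic batch update of a sequential file is essentially a merge of two key-ordered sequences. The Python sketch below assumes a hypothetical transaction format of (key, operation, data) tuples, where the operation is "add", "change", or "delete". The log file arrives in chronological order, so the sketch first sorts it by key. The function name `batch_update` is illustrative.

```python
from typing import Optional

Master = list[tuple[int, str]]                 # (key, data), sorted by key
Transaction = tuple[int, str, Optional[str]]   # (key, op, data); hypothetical format

def batch_update(master: Master, log: list[Transaction]) -> Master:
    """Merge a chronological log file into a sorted master file, producing a new master."""
    # The log is a pile in arrival order; sort it by key. sorted() is stable,
    # so transactions on the same key stay in chronological order.
    log = sorted(log, key=lambda t: t[0])
    new_master: Master = []
    i = j = 0
    while i < len(master) or j < len(log):
        # Copy master records whose keys precede the next transaction key.
        if j == len(log) or (i < len(master) and master[i][0] < log[j][0]):
            new_master.append(master[i])
            i += 1
            continue
        key = log[j][0]
        current: Optional[str] = None
        if i < len(master) and master[i][0] == key:
            current = master[i][1]
            i += 1
        # Apply every transaction for this key, in order.
        # (Validation such as "add" on an existing key is omitted for brevity.)
        while j < len(log) and log[j][0] == key:
            _, op, data = log[j]
            if op in ("add", "change"):
                current = data
            elif op == "delete":
                current = None
            else:
                raise ValueError(f"unknown operation {op!r}")
            j += 1
        if current is not None:
            new_master.append((key, current))
    return new_master
```

Both inputs are read strictly in key order and the output is written in key order. For this reason the procedure also suits tape, where only sequential access is practical.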

An alternative is to organize the sequential file physically as a linked list. One or more records are stored in each physical block. Each block on disk contains a pointer to the next block. The insertion of new records involves pointer manipulation but does not require that the new records occupy a particular physical block position. Thus, some added convenience is obtained at the cost of additional processing and overhead.
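The linked-list alternative can be sketched as follows. This hypothetical Python model treats a list index as a physical block position; the names `Block`, `LinkedSequentialFile`, and `BLOCK_CAPACITY` are illustrative. When a block overflows, its upper half moves to a newly allocated block at the end of the "disk". The new block is then spliced into the chain purely by changing pointers.

```python
import bisect
from dataclasses import dataclass, field
from typing import Optional

BLOCK_CAPACITY = 3  # records per block; an arbitrary illustrative value

@dataclass
class Block:
    keys: list[int] = field(default_factory=list)  # keys held in this block, in order
    next: Optional[int] = None                     # physical position of the next block

class LinkedSequentialFile:
    """Hypothetical sketch: a sequential file whose blocks are chained by pointers.

    `blocks` stands in for physical block positions on disk; the logical key
    order comes from following `next` pointers from `head`, not from position.
    """
    def __init__(self):
        self.blocks: list[Block] = [Block()]
        self.head = 0

    def insert(self, key: int) -> None:
        # Walk the chain to the last block whose first key is <= key (or the head).
        b = self.head
        while True:
            nxt = self.blocks[b].next
            if nxt is not None and self.blocks[nxt].keys[0] <= key:
                b = nxt
            else:
                break
        block = self.blocks[b]
        bisect.insort(block.keys, key)
        if len(block.keys) > BLOCK_CAPACITY:
            # Split: move the upper half to a block allocated at the end of the
            # "disk" and splice it into the chain by pointer manipulation only.
            half = len(block.keys) // 2
            new = Block(keys=block.keys[half:], next=block.next)
            block.keys = block.keys[:half]
            self.blocks.append(new)
            block.next = len(self.blocks) - 1

    def keys_in_order(self) -> list[int]:
        out: list[int] = []
        b: Optional[int] = self.head
        while b is not None:
            out += self.blocks[b].keys
            b = self.blocks[b].next
        return out
```

In this model, inserting 50, 10, 40, 20, 30, 60, 5 and then following the pointers returns the keys in order. The physical blocks, however, hold [5, 10], [40, 50, 60], and [20, 30], so the logical order no longer matches the physical order.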

The Indexed Sequential File

A popular approach to overcoming the disadvantages of the sequential file is the indexed sequential file. The indexed sequential file maintains the key characteristic of the sequential file: records are organized in sequence based on a key field. Two features are added: an index to the file to support random access, and an overflow file. The index provides a lookup capability to quickly reach the vicinity of a desired record. The overflow file is similar to the log file used with a sequential file but is integrated so that a record in the overflow file is located by following a pointer from its predecessor record.
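A simplified indexed sequential file can be sketched in Python with three parts: a sorted main file, a single-level sparse index, and an overflow file whose records are chained from their predecessors. The class `IndexedSequentialFile` and its `step` parameter are hypothetical. Two simplifying assumptions are not part of the text: there is only one index level, and every new key must follow the first main-file key.

```python
import bisect
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rec:
    key: int
    data: str
    overflow: Optional[int] = None  # pointer to the next record in the overflow file

class IndexedSequentialFile:
    """Hypothetical sketch: sorted main file + sparse index + overflow file.

    Assumptions (not from the text): one index level with an entry for every
    `step`-th main-file record, and inserted keys must exceed the first main key
    so that every new record has a predecessor.
    """
    def __init__(self, records: list[tuple[int, str]], step: int = 2):
        self.main = [Rec(k, d) for k, d in sorted(records)]
        self.overflow: list[Rec] = []
        positions = range(0, len(self.main), step)
        self.index_keys = [self.main[p].key for p in positions]
        self.index_pos = list(positions)

    def _predecessor(self, key: int) -> int:
        """Position of the last main-file record whose key is <= key."""
        # The index reaches the vicinity of the record quickly...
        i = bisect.bisect_right(self.index_keys, key) - 1
        if i < 0:
            raise KeyError(f"key {key!r} precedes the first record")
        pos = self.index_pos[i]
        # ...then a short sequential scan of the main file finishes the search.
        while pos + 1 < len(self.main) and self.main[pos + 1].key <= key:
            pos += 1
        return pos

    def find(self, key: int) -> Optional[str]:
        if not self.main or key < self.main[0].key:
            return None  # no predecessor, so the key cannot be present
        rec: Optional[Rec] = self.main[self._predecessor(key)]
        # Follow the key-ordered overflow chain hanging off the predecessor.
        while rec is not None and rec.key < key:
            rec = self.overflow[rec.overflow] if rec.overflow is not None else None
        return rec.data if rec is not None and rec.key == key else None

    def insert(self, key: int, data: str) -> None:
        prev = self.main[self._predecessor(key)]
        # Walk the chain so that overflow records stay in key order.
        while prev.overflow is not None and self.overflow[prev.overflow].key < key:
            prev = self.overflow[prev.overflow]
        nxt = prev.overflow
        if prev.key == key or (nxt is not None and self.overflow[nxt].key == key):
            raise KeyError(f"duplicate key {key!r}")
        self.overflow.append(Rec(key, data, overflow=nxt))
        prev.overflow = len(self.overflow) - 1
```

Start with main-file keys 10 to 50, then insert 35 and 32. The record with key 30 now points to overflow record 32, which points to 35. A search for 35 therefore visits 30, then 32, then 35.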