
Multi-sensor ATR and Identification of Friend or Foe Using MLANS


Pergamon


Neural Networks, Vol. 8, No. 7/8, pp. 1185-1200, 1995. Copyright © 1995 Elsevier Science Ltd

Printed in Great Britain. All rights reserved. 0893-6080/95 $9.50 + .00

1995 SPECIAL ISSUE

Multi-sensor ATR and Identification of Friend or Foe Using MLANS

LEONID I. PERLOVSKY,¹ JULIAN A. CHERNICK² AND WILLIAM H. SCHOENDORF¹

¹Nichols Research Corporation and ²U.S. Army Materiel Systems Analysis Activity

(Received 27 October 1994; revised and accepted 11 May 1995)

Abstract--Automatic target recognition (ATR) and the related problem of non-cooperative identification friend or foe (IFF) often require fusing information from multiple sensors into a unified battlefield picture. State-of-the-art approaches solve this problem in steps: first targets are detected, then target tracks are estimated; the tracks are used to correlate, or associate, information between multiple sensors; the associated information is combined into the unified picture; and targets are identified. A drawback of dividing the problem into smaller steps is that only partial information is utilized at each step. For example, a target in clutter may not be detectable on a single frame of a single sensor, so target motion information, and hence track estimation, may be needed; several steps then have to be performed concurrently. This paper describes such a concurrent solution of the multiple aspects of this problem based on the MLANS neural network, which utilizes internal world models. The internal models in MLANS encode a large number of neural weights in terms of relatively few model parameters, so MLANS learns from significantly fewer examples than unstructured neural networks require. We describe the model-based neural network paradigm, MLANS, and present results on MLANS concurrently performing detection and tracking, adaptively estimating background properties, and learning and classifying similar U.S. and foreign military vehicles. The MLANS performance is compared to that of the multiple hypothesis tracker, the classical statistical quadratic classifier, and the nearest neighbor classifier, demonstrating significant performance improvement.

Keywords--Neural networks, Model-based, ATR, Sensor fusion, Tracking, Detection, IFF, Classification.

1. INTRODUCTION

An important application of ATR technology is identification friend or foe (IFF), whose importance became evident during the Persian Gulf operation. According to the participants (Brown, 1994), the tragedy of friendly fire is the most devastating experience in contemporary military operations. In non-military situations, IFF is normally accomplished by using special radio frequency transponders. However, in certain cases, especially in certain military engagements, this cooperative procedure cannot be used, and the non-cooperative IFF issue has to be resolved as part of the ATR problem. This requires discriminating between similar targets in clutter. That is a complicated problem that stresses single sensor and human capabilities, and it is further complicated by the fact that image clutter often makes target detection very difficult. A potential solution utilizes the multiple sensors available on the contemporary battlefield. However, sensor fusion often involves combining multi-sensor information into a unified picture of a battle, which entails an additional set of complicated problems.

Acknowledgements: This work was partially supported by the U.S. Army. Robert Coons and David Tye contributed to specific aspects of this project.

Requests for reprints should be sent to Leonid I. Perlovsky, Nichols Research Corporation, 251 Edgewater Drive, Wakefield, MA 01880, USA; E-mail: [email protected]

State-of-the-art approaches to sensor fusion solve it in steps, often called surveillance functions, as follows. First, targets and other objects are detected using individual sensors. This is often complicated by changing clutter properties, which require adaptive detection capabilities. When clutter is strong, even adaptive detection techniques produce a number of false detections, or false alarms, on each sensor frame (or scan). Second, object tracks are estimated separately for each sensor, which requires correct association between objects detected in multiple frames; this function is called frame association or correlation. The track coordinates, or object positions in the case of stationary ground objects, are used to correlate, or associate, information between multiple sensors. In addition, the sensory information should be correlated, or associated, with available ground maps. This associated information is combined into the unified battle picture, and targets are identified by combining evidence from multiple sensors.

Not all these steps are necessarily performed every time, and other variations may occur. For example, objects might be preliminarily classified using individual sensor data, with sensor fusion used to combine the individual sensor decisions. Part of the problem is sometimes solved by using fused sensors, that is, sensors that have a fixed association pattern, for example, a common optical system for multiple spectral bands. (Nature employs similar solutions: owls' visual and hearing systems are fused to a significant degree in this way. However, higher animals and humans fuse their sensory systems in more cognitive ways.) Still, the remaining aspects of the problem are complicated and are usually solved in multiple steps.

A drawback of dividing the problem into smaller surveillance function steps is that only partial information is utilized at each step. For example, detection of an object in clutter may be considerably enhanced if object motion information is used, rather than only a single frame of single-sensor data. This requires frame association, so the detection, association, and tracking steps have to be performed concurrently. The fusion of these functions has been called concurrent detection and tracking, track-while-detect, or track-before-detect. The human visual system is good at this task, but this fusion problem had not been solved mathematically in the past. Concurrent detection, tracking, and fusion is sometimes called predetection or pretrack fusion. This concept of concurrent processing of diverse information can be carried further to concurrent detection, tracking, fusion, and identification, utilizing multiple sensors and other sources of information.

This paper describes such a concurrent solution of the multiple aspects of this problem based on the MLANS neural network, which utilizes internal world models. The internal models permit MLANS to organize diverse information coming from multiple sensors and other information sources into a unified picture (Perlovsky, 1992a). These models combine whatever a-priori information is available with adaptivity to varying or unknown properties of the data. Since any model-based approach can be no better than the models it utilizes, MLANS models are designed to combine deterministic and statistical aspects of the data. When little deterministic information is available, MLANS models can be purely statistical. These statistical models are very general: they are not limited to the usual Gaussian assumption and can model probability distributions of any shape. MLANS can use deterministic information in many ways:

- as simple functional relationships;
- as three-dimensional geometric models;
- as time-space dynamical models, ranging from simple track equations for point objects to complicated partial differential equations describing distributed spatio-temporal physical/chemical processes.

The internal models in MLANS encode a large number of neural weights in terms of relatively few model parameters. In general, the flexibility of adaptive neural networks comes from the large number of adaptive weights learned from the data (Carpenter, 1989). This often leads to a requirement for a large amount of training data (Perlovsky, 1991a, 1994a). MLANS can learn complicated decision regions from relatively few training samples because of its model-based architecture: all its weights are parameterized in terms of a few model parameters. Therefore, compared to unstructured neural networks, MLANS learns from significantly fewer examples, because only a small number of parameters have to be learned. These parameters are then used to derive a large number of weights, which gives MLANS the needed flexibility. MLANS has been shown to reach information-theoretic limits on the speed of learning and adaptation, as determined by the Cramér-Rao bound (Perlovsky, 1988a, 1989, 1990).
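The asymmetry between weights and parameters can be made concrete by counting both for a Gaussian-mixture MLANS model. The following Python sketch is a minimal illustration. It assumes one association weight $W_{nkm}$ per (observation, class, mode) triple, as in eqn (8), and full-covariance Gaussian modes, as in eqn (2). The function names and example sizes are hypothetical.

```python
def weight_count(n_obs: int, n_classes: int, n_modes: int) -> int:
    """Association weights W_nkm: one per observation per (class, mode) pair."""
    return n_obs * n_classes * n_modes


def parameter_count(n_classes: int, n_modes: int, dim: int) -> int:
    """Per mode: one rate r_km, a d-dimensional mean, and a symmetric d x d covariance."""
    per_mode = 1 + dim + dim * (dim + 1) // 2
    return n_classes * n_modes * per_mode


if __name__ == "__main__":
    # Hypothetical sizes: 1000 observations, 2 classes with 3 modes each, 2 features.
    print(weight_count(1000, 2, 3), parameter_count(2, 3, 2))
```

The weight count grows with the number of observations, while the parameter count does not. This is the sense in which only a few parameters have to be learned.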

The computational concept of MLANS can be contrasted with that of other algorithms and paradigms used in ATR, such as statistical classifiers, rule-based systems, and model-based vision (MBV). Statistical classifiers are usually limited by the assumption of a Gaussian distribution (or another simple parametric distribution) for the class-conditional probability density functions (pdfs). When applied in low- or medium-dimensional feature spaces, statistical classifiers can learn from a modest amount of data using standard statistical procedures, such as the maximum likelihood principle, and can be fairly adaptive. However, finding a few features that adequately characterize the data is often impossible. The number of features is then expanded, and as a consequence a large amount of training data is required. To avoid this requirement, rule-based systems and MBV-type algorithms have been proposed that utilize detailed a-priori information about the targets and therefore do not need much training data. These approaches work well when there is little variability and uncertainty; however, the need to account for uncertainties has led to difficulties with these systems. Rule-based and MBV-type systems perform multiple steps to match a-priori internal models (or information) to the data. At each step there are uncertainties that have to be resolved by evaluating multiple alternatives, which leads to a combinatorial explosion in the number of required computations. Thus, statistical algorithms can achieve adaptivity but cannot utilize complicated a-priori knowledge, while rule-based and MBV-type systems can utilize a-priori knowledge but have difficulty achieving adaptivity (Perlovsky, 1991a, 1994a).

The computational concept of MLANS can also be contrasted with that of multiple hypothesis testing and multiple hypothesis tracking (MHT) type algorithms. These also attempt to combine adaptivity and a-priori knowledge by utilizing adaptive a-priori models. MHT can combine diverse information by associating data with internal models. The association is performed by a combinatorial procedure that evaluates every possible way to associate the data with the models. This leads to a combinatorial explosion in the amount of required computation, similar to the difficulties encountered by rule-based and MBV systems in their attempts to combine adaptivity and a-priori knowledge. MLANS resolves this difficulty: it combines a-priori knowledge with adaptivity while requiring only a linear increase in computation with problem complexity (e.g., the number of radar scans). In this way, MLANS can be viewed as a linear computational complexity alternative to MHT.
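A back-of-the-envelope Python count illustrates the difference in scaling. The MHT figure is a simplified lower bound, assuming each candidate track takes exactly one detection from every scan; real MHT hypothesis counts, with missed detections and joint hypotheses, are larger. The MLANS figure counts the association weights evaluated in one iteration. The function names and sizes are hypothetical.

```python
def mht_candidate_tracks(detections_per_scan: int, scans: int) -> int:
    """Candidate tracks when each track picks one detection from every scan.

    Ignores missed detections and gating; the point is only that the count
    grows exponentially with the number of scans.
    """
    return detections_per_scan ** scans


def mlans_weights_per_iteration(detections_per_scan: int, scans: int, models: int) -> int:
    """Association weights evaluated per MLANS iteration: one per (detection, model) pair."""
    return detections_per_scan * scans * models


if __name__ == "__main__":
    # Hypothetical: 10 detections per scan, 3 models in MLANS.
    for scans in (2, 4, 8):
        print(scans, mht_candidate_tracks(10, scans), mlans_weights_per_iteration(10, scans, 3))
```

Doubling the number of scans doubles the MLANS work per iteration but squares the MHT candidate count.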

The following sections describe the model-based neural network paradigm MLANS and present results on its application to various aspects of the non-cooperative IFF problem. One of these is positive identification for fratricide reduction by fusing IR and MMW sensors. We present results on the adaptive estimation of background properties for improved detection. We also present results on the concurrent detection, tracking, and classification of U.S. and foreign military vehicles while performing adaptive learning. The MLANS performance is compared with that of the MHT tracker and classical statistical classifiers, including the quadratic and nearest neighbor classifiers, with MLANS demonstrating significant performance improvement.

2. MAXIMUM LIKELIHOOD ADAPTIVE NEURAL SYSTEM

MLANS was first introduced by Perlovksy in 1987. The architecture of MLANS was described in Perlovsky (1988b) and Perlovsky and McManus (1991) for classification applications, in Perlovsky (1994b) for signal processing applications, and in Perlovsky and Jaskolski (1994) for control applica- tions. A brief summary of MLANS follows with an emphasis on the modifications needed for the concurrent performance of multiple surveillance

F ~ ~ • CLASSES

INPUT DATA

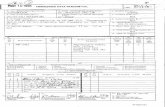

FIGURE 1. A general MLANS neural network architecture.

functions. A general, top level architecture of MLANS is shown in Figure 1. MLANS has as its inputs all the available data from multiple sensors within a specified time window. This includes metric data (such as aspect angles and ranges) and radio- metric information such as signal amplitudes or image pixel intensities. The two main MLANS subnetworks are shown as two blocks in this figure, the association subnetwork estimates data-to-model association weights and the model parameter subnet- work estimates model parameters.

The MLANS neuronal equations are determined by the likelihood structure of the MLANS models. According to the model-based concept of MLANS, all the information available for deterministic modeling is included in the MLANS models. The remaining variability in the observations is a random process with statistically independent samples. The total probability density function (pdf) for all observations $\{X_n, n = 1,\ldots,N\}$ is therefore a product of individual pdfs, $\mathrm{pdf}(X_n)$. Each of these is modeled as a mixture distribution of individual components or modes:

$$\mathrm{pdf}\{X_1,\ldots,X_N\} = \prod_{n=1}^{N} \mathrm{pdf}(X_n),$$

$$\mathrm{pdf}(X_n) = \sum_{k=1}^{K}\sum_{m=1}^{M} r_{km}\,\mathrm{pdf}(X_n \mid k,m). \qquad (1)$$

Modeling the pdf of $X_n$ as a sum of individual modes is equivalent to expanding the pdf in a set of basis functions. This can account for any pdf shape if the basis functions (the individual mode pdfs) form a complete set. For example, Gaussian functions form a complete set (note that the δ-function is the zero-width limit of a Gaussian). In addition, in many applications only a few Gaussian modes are sufficient to model the pdf. For these reasons individual modes are often modeled as Gaussian:

$$\mathrm{pdf}(X_n \mid k,m) = (2\pi)^{-d/2}\,(\det C_{km})^{-1/2}\exp\!\left(-0.5\,D_{nkm}^{T}\,C_{km}^{-1}\,D_{nkm}\right),$$

$$D_{nkm} = X_n - M_{nkm}. \qquad (2)$$
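Eqns (1) and (2) can be evaluated directly. The following is a minimal NumPy sketch. It assumes the (k, m) modes are flattened into one list of rates, means, and covariances, and that each mean does not depend on n (no motion model). The function names are illustrative, not from the paper.

```python
import numpy as np


def mode_pdf(x, mean, cov):
    """Gaussian mode pdf(X_n | k, m) of eqn (2)."""
    x = np.asarray(x, dtype=float)
    d = x.size
    diff = x - mean                                   # D_nkm = X_n - M_nkm
    inv = np.linalg.inv(cov)
    norm = (2 * np.pi) ** (-d / 2) * np.linalg.det(cov) ** (-0.5)
    return norm * np.exp(-0.5 * diff @ inv @ diff)


def mixture_pdf(x, rates, means, covs):
    """pdf(X_n) = sum over modes of r_km * pdf(X_n | k, m), eqn (1)."""
    return sum(r * mode_pdf(x, mu, c) for r, mu, c in zip(rates, means, covs))


def log_likelihood(X, rates, means, covs):
    """Logarithm of the product over observations n in eqn (1)."""
    return float(sum(np.log(mixture_pdf(x, rates, means, covs)) for x in X))


if __name__ == "__main__":
    # Two hypothetical 2-D modes with equal rates.
    means = [np.zeros(2), np.array([3.0, 3.0])]
    covs = [np.eye(2), 0.5 * np.eye(2)]
    print(log_likelihood([[0.1, -0.2], [2.9, 3.1]], [0.5, 0.5], means, covs))
```

Working with the log of the product avoids numerical underflow when N is large.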


Here $\mathrm{pdf}(X_n \mid k,m)$ is the pdf of the observation $X_n$, given that it is from mode $m$ of object-class $k$. The observation $X_n$ is a vector of sensor measurements. It includes quantities such as angles, ranges, Doppler velocities (if available), multispectral amplitudes, or features derived from sensor measurements. The index $n$ spans observations on multiple frames (scans) of multiple sensors. The weighted sum-of-Gaussians, or normal mixture, model is appropriate and efficient for a wide variety of problems. This is because the observations $X_n$ often include Gaussian noise, which tends to make each object-class nearly Gaussian. MLANS is robust with respect to small deviations of class distributions from Gaussian distributions, and large deviations are accounted for by the multiple modes. MLANS can utilize a maximum entropy neuron to arrive at an optimal number of modes, resulting in the best data association/classification for a finite number of observations.

The parameters of the statistical distributions (1) and (2) are the rates (prior probabilities) $r_{km}$ of the measurements from each class and mode, and the expected values of the observations for each class and mode:

$$M_{nkm} = E\{X_n \mid k,m\}, \qquad (3)$$

and the covariance matrices

$$C_{km} = E\{D_{nkm} D_{nkm}^{T} \mid k,m\}. \qquad (4)$$

Equations of motion relate the expected observed values (the distribution parameters) to the state parameters. For example, for linear trajectories, these equations relate the positional components $R_{nkm}$ of $M_{nkm}$ at time $t_n$ to the track state parameters at time $t$, $R(k,m)$ and $V(k,m)$:

$$R_{nkm} = R(k,m) + V(k,m)\,(t_n - t). \qquad (5)$$

Other parameters of eqns (1) and (2) determining the distributions of classification features, in this paper, will be assumed independent and not affected by the equations of motion or other models; this eliminates the difference between the state parameters and distribution parameters for the estimation purpose.

In order to write the ML estimation equations in a compact form, let us introduce a bracket notation for the weighted averaging operation performed by neurons:

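$$\langle f_n \rangle = \sum_{n} W_{nkm}\, f_n, \qquad (6)$$

where $W_{nkm}$ is the connecting weight of the synapse connecting the $n$th input to a neuron of the $k$th class and $m$th mode, and $f_n$ is the $n$th input. For example,

$$\langle 1 \rangle = \sum_{n} W_{nkm}, \qquad \langle t_n \rangle = \sum_{n} W_{nkm}\, t_n. \qquad (7)$$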

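The MLANS weights $W_{nkm}$ are the a-posteriori Bayes probabilities of measurement $n$ belonging to the $m$th mode of the $k$th class:

$$W_{nkm} = P(k,m \mid n) = \frac{r_{km}\,\mathrm{pdf}(X_n \mid k,m)}{\sum_{k'=1}^{K}\sum_{m'=1}^{M} r_{k'm'}\,\mathrm{pdf}(X_n \mid k',m')}. \qquad (8)$$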
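The association step of eqn (8) normalizes each observation's rate-weighted mode likelihoods over all modes. The following is a minimal sketch for scalar observations with Gaussian modes. It assumes the rates $r_{km}$ weight each mode pdf as in the mixture of eqn (1), and flattens (k, m) into a single column index. The function name is hypothetical.

```python
import numpy as np


def association_weights(X, rates, means, variances):
    """Posterior weights W[n, j] = P(mode j | X_n), cf. eqn (8), for 1-D data.

    Modes (k, m) are flattened to a single index j; each Gaussian mode pdf
    is multiplied by its rate r_j before normalizing over all modes.
    """
    X = np.asarray(X, dtype=float)[:, None]             # shape (N, 1)
    mu = np.asarray(means, dtype=float)[None, :]        # shape (1, J)
    var = np.asarray(variances, dtype=float)[None, :]
    pdf = np.exp(-0.5 * (X - mu) ** 2 / var) / np.sqrt(2 * np.pi * var)
    joint = np.asarray(rates, dtype=float)[None, :] * pdf
    return joint / joint.sum(axis=1, keepdims=True)     # each row sums to 1


if __name__ == "__main__":
    print(association_weights([-1.0, 0.0, 1.0], [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0]))
```

Every input is connected to every mode (the fully connected association subnetwork). The weights are soft, so no hard data-to-model assignment is ever enumerated.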

The ML estimation equations for the track state parameters $R(k,m)$ and $V(k,m)$ are derived by setting to zero the derivatives of the likelihood $\mathrm{pdf}\{X_1,\ldots,X_N\}$ in eqn (1) with respect to these parameters. Using the bracket notation, these equations can be written as follows:

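$$R(k,m) = \left[\langle R_{nkm}\rangle\langle t_n^2\rangle - \langle R_{nkm}\,t_n\rangle\langle t_n\rangle\right]/\mathrm{det},$$

$$V(k,m) = \left[\langle R_{nkm}\,t_n\rangle\langle 1\rangle - \langle R_{nkm}\rangle\langle t_n\rangle\right]/\mathrm{det}, \qquad (9)$$

where

$$\mathrm{det} = \langle 1\rangle\langle t_n^2\rangle - \langle t_n\rangle\langle t_n\rangle.$$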
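Eqn (9) is the closed-form solution of a weighted least-squares line fit. The following Python sketch applies it to one position coordinate; for vector positions it would be applied per coordinate. It assumes times are measured relative to the reference time $t$ (i.e., $t = 0$ in eqn (5)). It also assumes the bracketed positions are the observed positional components of $X_n$, weighted by the association weights for one (k, m). The function name is hypothetical.

```python
import numpy as np


def estimate_linear_track(times, positions, weights):
    """Weighted ML estimate of R(k, m) and V(k, m), following the form of eqn (9).

    times are relative to the reference time t; positions are one coordinate
    of the observations; weights are W_nkm for a single (k, m).
    """
    t = np.asarray(times, dtype=float)
    x = np.asarray(positions, dtype=float)
    w = np.asarray(weights, dtype=float)
    b1, bt, btt = w.sum(), (w * t).sum(), (w * t * t).sum()   # <1>, <t_n>, <t_n^2>
    bx, bxt = (w * x).sum(), (w * x * t).sum()                # <R_n>, <R_n t_n>
    det = b1 * btt - bt * bt
    R = (bx * btt - bxt * bt) / det
    V = (bxt * b1 - bx * bt) / det
    return R, V


if __name__ == "__main__":
    # A return with zero weight (e.g. clutter) has no influence on the estimate.
    print(estimate_linear_track([0, 1, 1.5, 2, 3], [2, 5, 100, 8, 11], [1, 1, 0, 1, 1]))
```

Because every return enters only through its weight, the same formula serves for track initiation from several scans and for maintenance in clutter.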

Similarly, for the parameters of the distributions of classification features we obtain:

$$r_{km} = N_{km}/N, \qquad N_{km} = \langle 1 \rangle, \qquad M_{km} = \langle X_n \rangle / N_{km}, \qquad C_{km} = \langle D_{nkm} D_{nkm}^{T} \rangle / N_{km}. \qquad (10)$$

Here $N_{km}$ can be interpreted as the expected number of objects (or observations) in class $k$, mode $m$.
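The parameter updates of eqn (10) are weighted sample statistics. The following minimal NumPy sketch computes them from an (N, J) weight matrix. It assumes the covariance average is normalized by $N_{km}$, consistent with the mean update, and flattens modes (k, m) into column index j. The function name is hypothetical.

```python
import numpy as np


def update_parameters(X, W):
    """Rates, means and covariances from association weights, cf. eqn (10).

    X has shape (N, d); W has shape (N, J), one column per flattened mode (k, m).
    """
    X = np.asarray(X, dtype=float)
    W = np.asarray(W, dtype=float)
    N = X.shape[0]
    Nkm = W.sum(axis=0)                                   # N_km = <1>
    rates = Nkm / N                                       # r_km = N_km / N
    means = (W.T @ X) / Nkm[:, None]                      # M_km = <X_n> / N_km
    covs = []
    for j in range(W.shape[1]):
        D = X - means[j]                                  # D_nkm
        covs.append((W[:, j, None] * D).T @ D / Nkm[j])   # <D D^T> / N_km
    return rates, means, np.array(covs)


if __name__ == "__main__":
    X = [[0, 0], [2, 0], [10, 10], [12, 10]]
    W = [[1, 0], [1, 0], [0, 1], [0, 1]]                  # hard weights, for illustration
    print(update_parameters(X, W))
```

With hard 0/1 weights these reduce to ordinary per-class sample statistics. With the soft weights of eqn (8), each observation contributes fractionally to every mode.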

The system of equations (1)-(10) defines MLANS as a non-linear, non-stationary system that exhibits stable learning in the face of a continuously changing flow of stimuli (it always converges). Its time evolution is determined by the flow of stimuli, the a-priori model equations, and the ML learning principle. The ML learning principle drives MLANS to maximize the likelihood of the internal model. In other words, MLANS possesses an internal ML drive to improve its internal representation of the world.

The MLANS time evolution continues after each new piece of data until an equilibrium state (convergence) is reached (Perlovsky & McManus, 1991). A fundamental issue in learning efficiency is how accurately model parameters, such as the position and velocity of the tracks, are estimated from a few scans. In the terminology of estimation theory, this measure is known as the efficiency of the neural network as a track parameter estimator. Track and target models within the neural network architecture, combined with ML estimation, lead to learning that approaches the information-theoretic limit established by the Cramér-Rao bound (Perlovsky & McManus, 1991). MLANS performs optimal estimation of track parameters using all the available data from several scans. This makes it ideal for track initiation as well as for track maintenance in heavy clutter. For maneuvering or accelerating targets, or when Doppler measurements are available, eqns (5) and (9) are modified (Perlovsky, 1992b). MLANS is applicable to both coherent (e.g., radar) and non-coherent (e.g., imaging sensor) measurements. This system performs concurrent detection, data association, tracking, and classification utilizing data from individual or multiple sensors of any type.

Sensor fusion is a difficult problem because the statistical distributions of the data are not known exactly, as standard optimal Bayesian inference requires. The absence of knowledge of the prior probabilities has long been recognized as a difficulty for the Bayesian approach. In reality, however, the problem is not limited to unknown priors: usually all the distribution parameters are unknown, and the common assumption of unimodal Gaussian distributions is rarely valid. MLANS solves this problem by adaptively estimating all the parameters of a general pdf specified by a weighted sum of basis functions. MLANS is also applicable in complicated cases where the information to be fused originates from sources of different types, with different coverages or fields of view, operating asynchronously. Data sets resulting from such observations are often incomplete: while many objects are observed by a single sensor, only a few may be observed by all the sensors. The modification to the above equations needed for fusing such incomplete data sets is described in Perlovsky and Marzetta (1992).

The MLANS operation described in detail in this section can be summarized simply and intuitively as follows. The MLANS association subsystem (Figure 1) associates every piece of input data with MLANS classes and modes using a fully connected architecture, eqn (8). In parallel, the MLANS modeling subsystem estimates the model parameters according to eqns (9) and (10). At each iteration these two subsystems use the parameters and weights computed at the previous iteration.

3. CLUTTER CHARACTERIZATION AND OBJECT DETECTION

Detection is usually the first step in the ATR process and is usually performed with relatively simple procedures such as statistical classifiers. The detection problem is often complicated by variable clutter, so a detection algorithm that works well under certain conditions may degrade catastrophically under others. Adaptive clutter characterization helps alleviate this problem. There is always enough clutter in the field of view for near real-time estimation of the current clutter, which leads to clutter-adaptive detection. Target signatures can also vary significantly from case to case, yet targets should usually be detected as soon as they appear in the field of view. This can be achieved with two types of detectors: detectors trained on previously collected target data, and detectors using no target data.

Detectors using no target data operate by estimating the clutter pdf from the clutter data. The outliers of this distribution, that is, the data points (or vectors) with the smallest pdf values (likelihoods of being clutter), are classified as targets. Often the detection threshold is set so that the number of incorrect detections per hour, called the false alarm rate (FAR), is constant; such a detector is called a constant false alarm rate (CFAR) detector. An alternative is to also use previously collected target data to estimate the target pdf. A two-class detector, a likelihood ratio test between clutter and target, is then designed. Both detectors require accurate adaptive clutter characterization, which includes feature selection and pdf estimation.
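The two detector types can be sketched in a few lines, given log-likelihoods from already-estimated pdfs. This Python sketch makes two assumptions. First, the CFAR threshold is set as an empirical quantile of clutter log-likelihoods, so it controls the false alarm probability per test sample; converting that to the per-hour FAR of the text requires the number of samples processed per hour. Second, the likelihood ratio threshold `log_eta` is a free, hypothetical parameter. All names are illustrative.

```python
import numpy as np


def cfar_threshold(clutter_loglik, pfa):
    """Log-likelihood threshold below which a fraction pfa of clutter samples fall."""
    return float(np.quantile(np.asarray(clutter_loglik, dtype=float), pfa))


def detect(test_loglik, threshold):
    """Single-class detector: declare a target where the clutter log-likelihood is an outlier."""
    return np.asarray(test_loglik, dtype=float) < threshold


def likelihood_ratio_detect(target_loglik, clutter_loglik, log_eta):
    """Two-class detector: declare a target when log pdf_T - log pdf_C exceeds log_eta."""
    return np.asarray(target_loglik, dtype=float) - np.asarray(clutter_loglik, dtype=float) > log_eta


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    clutter = rng.normal(size=1000)                        # hypothetical 1-D clutter feature
    loglik = -0.5 * clutter**2 - 0.5 * np.log(2 * np.pi)  # standard normal clutter pdf
    th = cfar_threshold(loglik, 0.01)
    print(th, detect(-0.5 * np.array([0.0, 4.0])**2 - 0.5 * np.log(2 * np.pi), th))
```

Re-estimating the clutter pdf as the scene changes, then recomputing the threshold, is what makes such a detector clutter-adaptive.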

In addition to target detection, clutter characterization is needed for the evaluation of sensors and ATR algorithms (Clark et al., 1992; Perlovsky et al., 1992). The development and evaluation of autonomous homing munitions (AHM) and automatic target recognition (ATR) algorithms require more sophisticated mechanisms for characterizing clutter than the qualitative methods used in the past. An approach to quantitative clutter description based on statistical pattern recognition has been developed (Chernick et al., 1991); however, it was limited to the Gaussian assumption. To overcome this limitation, MLANS was applied to the problem, and its clutter characterization results were compared with those of the conventional statistical pattern recognition quadratic classifier.

Each of the two examples presented below used an IR image of a target against a background of sky, forest, and trees, with the target on the treeline. The first image appears visually to have more clutter than the second, and this is confirmed by the clutter characterization procedure. In the results reported below, the comparison was made using two standard features, brightness and contrast. The improvement in target detection capability was taken as the quantitative measure of improved clutter characterization. The data in each image are divided into two classes: the background data set and the target data set.

- The background data set features are calculated by moving a 13 by 21 pixel box over the entire image with 50% overlap, excluding the target area.
- The target data set features are calculated by centering a 13 by 21 pixel box on the target and moving it ±3 by ±5 pixels around the target.

Brightness and contrast are computed for each box in the background and target regions. Using target signatures from the same image is adequate for the stated purposes of clutter characterization and of comparing neural network and classical quadratic detector performance. Absolute performance results should not be considered realistic because of two simplifications in this procedure: the use of two simple features, and the use of the same set of target signatures for training and testing the two-class classifier.
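The box-sampling procedure can be sketched as follows. The paper does not define its brightness and contrast features, so this sketch assumes, hypothetically, brightness as the mean pixel value in the box and contrast as the standard deviation. It also assumes 50% overlap means steps of half the box size rounded down (6 rows, 10 columns), the target area is given as a pixel rectangle, and the target box moves one pixel at a time. All names are illustrative.

```python
import numpy as np

BOX = (13, 21)  # box height x width in pixels, from the text


def box_features(patch):
    """Hypothetical features: brightness = mean, contrast = standard deviation."""
    return float(patch.mean()), float(patch.std())


def background_features(image, target_rect):
    """Slide the box with ~50% overlap, skipping boxes that overlap the target area."""
    h, w = BOX
    r0, c0, r1, c1 = target_rect                 # target area: rows [r0, r1), cols [c0, c1)
    feats = []
    for r in range(0, image.shape[0] - h + 1, h // 2):
        for c in range(0, image.shape[1] - w + 1, w // 2):
            overlaps = r < r1 and r + h > r0 and c < c1 and c + w > c0
            if not overlaps:
                feats.append(box_features(image[r:r + h, c:c + w]))
    return np.array(feats)


def target_features(image, center, dr=3, dc=5):
    """Box centered on the target, shifted by up to +/-dr rows and +/-dc columns."""
    h, w = BOX
    feats = []
    for i in range(-dr, dr + 1):
        for j in range(-dc, dc + 1):
            r = center[0] + i - h // 2
            c = center[1] + j - w // 2
            feats.append(box_features(image[r:r + h, c:c + w]))
    return np.array(feats)


if __name__ == "__main__":
    img = np.random.default_rng(0).normal(size=(120, 160))   # stand-in for an IR image
    print(background_features(img, (50, 65, 75, 100)).shape,
          target_features(img, (62, 82)).shape)
```

The resulting (brightness, contrast) pairs are the two-dimensional feature vectors whose clutter and target distributions are characterized below.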

Target detectability is characterized by the operating characteristic (OC), which plots the probability of false alarm $P_{fa}$ against the probability of detection $P_d$ (or the probability of leakage, $P_l = 1 - P_d$). The OCs for each image provide a quantitative measure of the adaptive image clutter characterization. The classifiers were trained on the data sets described above for each image. The quadratic classifier characterizes each class, target or clutter, under the Gaussian assumption. The MLANS results reported here use a single Gaussian mode for the target distribution and multimodal adaptive distributions for the background data set. The MLANS target characterization was limited to a single Gaussian mode to focus the results on the utility of improved clutter characterization. The operating characteristics are estimated using the Bayesian classifier (likelihood ratio test) calculated from the estimated distributions.
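An empirical operating characteristic can be traced by sweeping a decision threshold over classifier scores. This minimal Python sketch assumes the scores are likelihood ratios (or their logs) computed from the estimated distributions, and that a sample is declared a target when its score is at least the threshold. The function name is hypothetical.

```python
import numpy as np


def operating_characteristic(target_scores, clutter_scores):
    """(P_fa, P_d) pairs as the decision threshold sweeps over all observed scores.

    A sample is declared a target when its score is >= the threshold.
    """
    t = np.asarray(target_scores, dtype=float)
    c = np.asarray(clutter_scores, dtype=float)
    thresholds = np.sort(np.concatenate([t, c]))[::-1]    # strictest threshold first
    p_fa = np.array([(c >= th).mean() for th in thresholds])
    p_d = np.array([(t >= th).mean() for th in thresholds])
    return p_fa, p_d


if __name__ == "__main__":
    p_fa, p_d = operating_characteristic([3.0, 4.0], [1.0, 2.0])
    print(p_fa, p_d, 1.0 - p_d)   # the last array is the leakage P_l
```

The low false alarm region emphasized below corresponds to the strictest thresholds at the start of the sweep. That region is governed by the tails of the clutter score distribution.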

The distributions of features for the targets and background in the first image are shown in Figure 2a. This plot shows substantial overlap between the target and background distributions. The ellipses in the figure are the 2-sigma concentration ellipses of the distributions estimated under the Gaussian assumption. They correspond to the classical quadratic classifier data characterization. Figure 2b illustrates the MLANS fuzzy division of the clutter portion of the decision space. The solid target concentration ellipse in Figure 2b is the same as in Figure 2a, since MLANS characterizes the target by a single Gaussian mode only. The dotted ellipses correspond to the multimodal clutter distribution estimated by MLANS. Analysis of the clutter distribution modes indicates that the overlap between the target and clutter distributions is mostly due to the forest clutter modes. Figure 2c compares the performance of MLANS and the quadratic classifier as measured by the operating characteristics. MLANS outperforms the quadratic classifier, especially in the important region of low false alarm probability.

The distributions of the targets and background for the second image are shown in Figure 3a. The distribution plot in Figure 3a shows less overlap between the targets and the forest (the forest region causes most false alarms) than the plot in Figure 2a. Figure 3b illustrates the MLANS fuzzy division of the clutter portion of the decision space, with the solid target ellipse the same as before. The dotted ellipses correspond to the multimodal clutter distribution estimated by MLANS. Figure 3c compares the performance of MLANS and the quadratic classifier for this example as measured by the operating characteristics. Again, MLANS performs better in the region of low false alarm probability, making its performance superior to that of the quadratic classifier. A more accurate representation of the clutter becomes important when dealing with the tails of the clutter distributions. It is likely that applying MLANS to the target distribution as well as the clutter would improve performance throughout the OC. As mentioned above, however, this exercise was focused on a quantitative evaluation of the utility of adaptive clutter characterization.

[Figure 2 panels: (a) image metrics distribution; (b) distribution characterization; (c) operating characteristics. Axes include brightness in (a) and (b) and false alarm probability in (c).]
FIGURE 2. Image characterization, example No. 1: statistical pattern recognition (SPR) vs MLANS (NN).

[Figure 3 panels: (a) image metrics distribution; (b) distribution characterization; (c) operating characteristics, plotted against false alarm probability.]
FIGURE 3. Image characterization, example No. 2: statistical pattern recognition (SPR) vs MLANS (NN).

Comparing the MLANS OCs in Figures 2c and 3c, Figure 3c shows a better target detection operating characteristic than Figure 2c. This implies that the example in Figure 3 has less stressing clutter than the one in Figure 2, in agreement with visual inspection of the images. The two examples presented in this section illustrate the need for, and utility of, an adaptive multimodal representation of the clutter to achieve good target detection performance at low false alarm rates. MLANS provides this capability through its model-based, adaptive approach to data representation.

4. CONCURRENT TRACKING AND DETECTION

This section describes a novel approach to enhancing the performance of the surveillance functions by fusing several functions, so that all the available information can be used for each function. In the following examples, MLANS concurrently performs target detection, data correlation (association), and track estimation. It uses object models that combine statistical models of object feature distributions with dynamical models of object motion. The MLANS learning mechanism yields maximum likelihood estimates of the model parameters, and thus concurrent estimates of data association probabilities and track parameters. This approach results in a dramatic improvement of performance: MLANS exceeds existing algorithms because it optimally utilizes all the available data.

Historically, the intrinsic mathematical similarities between tracking and classification problems have not been explored. On the one hand, classification and pattern recognition problems have been characterized by an insufficient amount of data for unambiguous decisions, which has stimulated the development of Bayes classification algorithms that utilize all the available information. On the other hand, tracking problems have been characterized by an overwhelming amount of data, leading to the development of suboptimal algorithms based on Kalman filters, which are amenable to sequential implementation for handling high-rate data streams (Blackman, 1986). The Kalman filter is a model-based algorithm that combines a dynamical model of the target motion with a statistical model of sensor errors to estimate the track parameters. Kalman filters, however, were designed for tracking a single target. Generalizing them to multiple-object tracking in clutter has been difficult (Blackman, 1986), and optimal utilization of multi-object information has not been achieved. This has led to difficulties when tracking multiple objects or tracking in heavy clutter: as the number of clutter returns increases, it becomes increasingly difficult to maintain and to initiate tracks. Several algorithms have been suggested to extend the Kalman filter technique to this more complicated task. For example, the multiple hypothesis tracking (MHT) algorithm (Singer et al., 1974) initiates tracks by considering all possible associations, within a specific window, between objects detected on multiple scans (or frames) and all possible tracks. This problem is known to be NP-complete (nondeterministic polynomial-time complete); that is, its optimal solution requires too large an amount of computation (Parra-Loera et al., 1992). This is difficult to handle even with parallel processors when the number of clutter returns is large. Consequently, optimal solutions are not practically attainable. A partial solution to this problem is offered by the joint probabilistic data association (JPDA) tracking algorithm, which performs fuzzy associations of objects and tracks, eliminating the combinatorial search (Bar-Shalom & Tse, 1975). However, the JPDA algorithm performs associations only on the last frame using established tracks and is, therefore, unsuitable for the more difficult part of the problem, track initiation.
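To make the combinatorial growth concrete, the sketch below counts association hypotheses under a deliberately simplified model that we assume for illustration: on each scan every return is assigned either to clutter or to one of T existing tracks, with at most one return per track, and hypotheses on different scans multiply. This is not the exact hypothesis count of the MHT algorithm (which also enumerates new-track and track-deletion options), so it understates the real growth.

```python
from math import comb, perm


def hypotheses_per_scan(n_returns: int, n_tracks: int) -> int:
    """Count assignments of n_returns to n_tracks or clutter, at most one return per track.

    Choose j returns to be target returns (comb) and assign them to j distinct
    tracks in order (perm); every other return is labelled clutter.
    """
    return sum(comb(n_returns, j) * perm(n_tracks, j)
               for j in range(min(n_returns, n_tracks) + 1))


def hypotheses_over_scans(n_returns: int, n_tracks: int, n_scans: int) -> int:
    # Simplifying assumption: per-scan hypotheses combine independently across scans.
    return hypotheses_per_scan(n_returns, n_tracks) ** n_scans


# About 130 returns per scan and 3 tracks, roughly the density of the radar example below.
print(hypotheses_per_scan(130, 3))                 # 2197261
print(len(str(hypotheses_over_scans(130, 3, 10))))  # 64 (decimal digits after 10 scans)
```

Even this lower bound reaches a 64-digit number of joint hypotheses over 10 scans, which is why exhaustive multi-scan association is impractical and why soft (fuzzy) association is attractive.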

The MLANS application to tracking exploits the mathematical similarity between the tracking and classification problems by using combined dynamical and statistical models for multiple objects, thereby applying optimal Bayes methods to the problem of data association. By applying probabilistic, fuzzy classification to associating data across multiple scans, together with ML estimation of track parameters, MLANS combines the advantages of both the MHT and the JPDA approaches. In MLANS tracking, each object-track is a class characterized by the object-track model and by state parameters that include both classification and tracking parameters. For example, a polynomial track is characterized by state parameters such as the current object position, velocity, acceleration, etc., and by a model relating these state parameters to the expected values of the observed coordinates on each scan. In addition, it is characterized by a probabilistic model describing the statistical distribution of the observables and the classification features (such as the amplitude or shape of returned pulses), and by the state parameters of the probabilistic model, such as means and covariances. These state parameters are estimated adaptively, in real time (Perlovsky, 1991b; Perlovsky & Plum, 1991).
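The fuzzy association step rests on Bayes' rule: with prior rates r_km and class-mode densities pdf(X_n|k,m) (both defined in the Nomenclature), the a-posteriori probability is P(k,m|n) = r_km pdf(X_n|k,m) / Σ r_k'm' pdf(X_n|k',m'). A minimal sketch of that single step follows, assuming Gaussian densities for every class-mode (a broad Gaussian could stand in for clutter). It is not the full MLANS iteration, which also re-estimates the parameters from these probabilities.

```python
import numpy as np


def gaussian_pdf(x, mean, cov):
    """Multivariate normal density at each row of x (shape N x d).

    `mean` may be a single d-vector or an N x d array of per-sample expected
    values, e.g. track positions predicted for each return's scan time.
    """
    d = x.shape[1]
    diff = x - mean
    inv = np.linalg.inv(cov)
    mahal = np.einsum("ni,ij,nj->n", diff, inv, diff)
    norm = np.sqrt((2.0 * np.pi) ** d * np.linalg.det(cov))
    return np.exp(-0.5 * mahal) / norm


def association_probabilities(x, rates, means, covs):
    """Return P(k,m|n): row n, column j is the posterior of class-mode j for sample n."""
    weighted = np.column_stack([r * gaussian_pdf(x, mu, c)
                                for r, mu, c in zip(rates, means, covs)])
    # Normalize each row so the probabilities over all class-modes sum to one.
    return weighted / weighted.sum(axis=1, keepdims=True)


x = np.array([[0.0, 0.0], [5.0, 5.0]])
p = association_probabilities(x, [0.5, 0.5],
                              [np.zeros(2), np.full(2, 5.0)],
                              [np.eye(2), np.eye(2)])
print(p.round(3))
```

Because every sample keeps a probability for every class-mode rather than a hard label, no combinatorial search over assignments is needed.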

For maneuvering objects, each object-track class can be characterized by a multi-modal distribution, with different modes characterizing various dynamical models of maneuvers, so that the pdf for the object coordinates is a sum of these multiple modes. All these dynamical modes correspond to the same object and are therefore characterized by the same pdf for the object's classification features. If an object separates into two objects (due to breakup, or because two objects were initially unresolved), two dynamical modes will be initiated, indicating a need to form a new object-track class.

Concurrent detection, tracking, and classification uses classification features to improve tracking and uses tracking results to improve classification. This is illustrated in the four examples that follow. In the first example, MLANS is applied to tracking three objects in random clutter using pulse-Doppler search-mode radar data. The scatter plot in Figure 4 shows the distribution of returns from 10 scans in the Doppler-velocity, range coordinate space. About 1000 random clutter returns are distributed throughout the plot, while three clusters of approximately 100 returns each correspond to the moving objects. Because of the wide beam employed in search mode, there are approximately 10 returns from each object per scan. The scatter of object returns along the Doppler-velocity axis is determined by the radar accuracy, while the scatter along the range axis is due to both the radar accuracy and the motion of the objects. Although the targets are clearly visible as clusters of returns in this plot, this example is difficult for standard tracking procedures based on detection of strong returns, because the amplitudes of the target returns are below the clutter amplitude, and the clutter returns with Doppler velocities (relative to the ground) greater than the target velocities far outnumber the target returns. Our eyes can easily identify these targets because our visual system clusters these data, which is a step toward concurrent detection and tracking; if presented with a movie showing the time evolution of these data, our visual system would be able to perform concurrent detection and tracking (it usually performs this task well for linear motion of rigid bodies). However, the conventional multiple-object tracking schemes described above have considerable difficulty with this data set.

[Figure 4: scatter plot of radar returns in the range (km), Doppler-velocity plane, with regions labelled "Estimated state parameters", "Clutter", and "Linear trajectories".]

FIGURE 4. The MLANS combined detection and tracking. MLANS tracking results are shown as 10-σ ellipses.

In this example each return n is characterized by its time, t_n, and by its four coordinates: range R_n, Doppler velocity V_n (defined relative to the ground), and the elevation and azimuth angles θ_n, φ_n. A single mode with a linear track model was used for each object-class. MLANS initiated and estimated the track state parameters shown in Figure 4 (using the 10-σ ellipses). The accuracy of this estimation achieves the Cramer-Rao bound.
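With a linear track model and Gaussian measurement errors, the ML estimate of the track state for fixed association probabilities reduces to a weighted least-squares fit. The sketch below assumes (our assumptions, not details given in the paper) that range follows R_n ≈ R + V (t_n - t), that the Doppler measurement equals the range rate V, and that the weights are the association probabilities P(k,m|n) of one track. The function name and the noise parameters are hypothetical.

```python
import numpy as np


def fit_linear_track(t_n, range_n, doppler_n, weights, t_now,
                     sigma_range=1.0, sigma_doppler=1.0):
    """Weighted least-squares estimate of (R, V), position and velocity at t_now.

    Each return contributes a range row (range_n ~ R + V * dt) and a Doppler row
    (doppler_n ~ V), both scaled by sqrt(weight) / sigma.
    """
    sw = np.sqrt(weights)
    dt = t_n - t_now
    a_range = np.column_stack([np.ones_like(dt), dt]) * (sw / sigma_range)[:, None]
    b_range = range_n * sw / sigma_range
    a_dop = np.column_stack([np.zeros_like(dt), np.ones_like(dt)]) * (sw / sigma_doppler)[:, None]
    b_dop = doppler_n * sw / sigma_doppler
    a = np.vstack([a_range, a_dop])
    b = np.concatenate([b_range, b_dop])
    (r_hat, v_hat), *_ = np.linalg.lstsq(a, b, rcond=None)
    return r_hat, v_hat


# Ten scans of a noiseless object receding at 0.5 units per scan toward lower range.
t = np.arange(10.0)
ranges = 10.0 + (-0.5) * (t - 9.0)
dopplers = np.full_like(t, -0.5)
print(fit_linear_track(t, ranges, dopplers, np.ones_like(t), t_now=9.0))
```

A return with zero association weight drops out of the fit entirely, which is how soft association keeps clutter from biasing a track.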


[Figure 5: six panels (Track 1 to Track 5 and Clutter 6), each plotting association probability against return number.]

FIGURE 5. The MLANS probabilistic associations P(k, m|n) are shown for all returns from all 10 scans; the vertical axis shows the association probabilities P(k, m|n) for each (k, m) as a function of the return number n (horizontal axis); for this plot, returns are ordered so that each object's returns are adjacent to each other. This illustrates a near perfect association of all returns. (Note that an imperfect association probability, e.g., between track k = 2, m = 1 and a clutter return near n = 700, is not a false alarm, as track initiation and object declaration are based on all the data shown in each box.)

Let us now examine the MLANS association of tracks and radar returns. In this example, the maximum number of allowed tracks was taken to be five. MLANS performs fuzzy association by calculating a probability P(k, m|n) for each return n belonging to a track (k, m). The probabilities for all returns on 10 scans (n = 1, ..., 1300) are shown in Figure 5. For plotting this figure, all the returns have been rearranged so that each object's returns are adjacent to each other (n = 1, ..., 100; n = 101, ..., 200; n = 201, ..., 300). Three tracks (k = 1, 2, 3; m = 1) have nearly 100% probabilities for all returns from the three objects (n = 1, ..., 100; n = 101, ..., 200; n = 201, ..., 300), with very little clutter contribution; two tracks (k = 4, 5; m = 1) acquire just a few clutter returns and are terminated (discontinued); and nearly all the clutter returns (n = 301, ..., 1300) are identified as such with 100% probability. The three tracks acquiring real objects are declared detections, and their trajectory parameters are output by MLANS. The declaration decisions are based on the likelihood ratio test. Each of these tracks acquires ~1 misassociated clutter point (these are not false alarms, because track initiation and detection are based on 10 scans). Misassociated returns represent ~0.1% of the 1000 total clutter returns; this number corresponds to the best possible association, since it is the true overlap (the Bayes error) between the distributions of clutter and track returns for this case. MLANS thus achieved the information-theoretic performance limits: the Bayes error for the accuracy of association and the Cramer-Rao bound for the accuracy of track parameters.
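Summing each column of the association matrix gives N_km, the expected number of samples per class-mode listed in the Nomenclature, which shows at a glance why tracks 4 and 5 carry almost no support. The toy matrix below merely imitates the layout of Figure 5 (it is not the paper's data), and the minimum-count termination rule is a hypothetical stand-in: the paper's declaration decisions use a likelihood ratio test, which is not reproduced here.

```python
import numpy as np


def expected_counts(assoc: np.ndarray) -> np.ndarray:
    """N_km: expected number of samples per class-mode, i.e. column sums of P(k,m|n)."""
    return assoc.sum(axis=0)


def surviving_tracks(assoc, track_columns, min_count):
    """Hypothetical termination rule: keep tracks whose N_km reaches min_count."""
    n_km = expected_counts(assoc)
    return [j for j in track_columns if n_km[j] >= min_count]


# Toy matrix shaped like Figure 5: 1300 returns, columns = 5 tracks + 1 clutter class.
assoc = np.zeros((1300, 6))
assoc[0:100, 0] = 1.0             # object 1
assoc[100:200, 1] = 1.0           # object 2
assoc[200:300, 2] = 1.0           # object 3
assoc[300:, 5] = 1.0              # clutter
assoc[700, [3, 5]] = [0.6, 0.4]   # track 4 absorbs part of one clutter return
assoc[900, [4, 5]] = [0.5, 0.5]   # track 5 likewise

print(expected_counts(assoc))
print(surviving_tracks(assoc, range(5), min_count=10))  # [0, 1, 2]
```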

In the next example, Figure 6, MLANS is applied to tracking four objects in random clutter. Shown on the right is a cartoon of maneuvering object No. 4 and object No. 3. On the left, the radar return data are shown along with the MLANS results. Three objects have constant range rates, so their returns are clumped together in Doppler velocity, while the returns of maneuvering object No. 4 are spread in Doppler. MLANS results are shown by 10-σ ellipses at the current (last-scan) target positions: at the left end of the trajectories of the lower two objects, which move to the left, and at the right end of the trajectories of the upper two objects, which move to the right. Objects No. 3 and No. 4 initially occupy the same range and Doppler cells, making track initiation difficult for existing tracking algorithms. In this example, it is important that each trajectory is indexed by two indices: k, the object-class index, and m, the track-model index. This permits several types of track models for each object, including maneuvering targets and unresolved objects separating into two tracks. Our results indicate that MLANS successfully initiates tracks for initially unresolved (separating) and maneuvering objects.

The following example illustrates concurrent detection, tracking, and classification of an object at sub-clutter visibility. Here the object signal is 20 times smaller than the clutter. Detection of such a weak signal is based on a detailed clutter model and on concurrent processing of a large number of frames, which separates the object from the clutter on the basis of the motion of the object relative to the background clutter. A high-sampling-rate staring IR sensor with relatively large ground pixel footprints is used, so that the object occupies a small portion of a pixel and the clutter is produced by many subpixel variations in the background intensity. Several frames of these data are shown in Figure 7.

[Figure 6: (a) cartoon of objects No. 3 and No. 4 versus range; (b) returns in the range (km), Doppler-velocity plane, labelled "Estimated current state parameters", "Maneuvering target 4", and "Objects on linear trajectories".]

FIGURE 6. The MLANS tracking maneuvering and separating objects: (a) an exaggerated cartoon of the maneuvering object No. 4 and object No. 3 (the other two objects are not shown in (a)); (b) the data and MLANS results (shown using 10-σ ellipses).

Because of the subpixel variations, the often-used technique of frame-to-frame subtraction is not efficient: as the sensor moves, the same pixel on adjacent frames contains many different subpixel clutter objects, so frame-to-frame subtraction does not improve the signal-to-clutter ratio. The MLANS approach uses a detailed subpixel clutter model, which relates the expected values of the observed pixel intensities X_n to the intensities of subpixel clutter objects. Conceptually this model is very simple, because the sensor motion is known, and the majority of subpixel clutter objects are stationary on the ground and do not change over time. The object is modeled using eqns (9).

[Figure 7: six 40 × 40-pixel frames (frames 1, 18, 35, 52, 100, and 200), elevation vs. azimuth.]

FIGURE 7. IR data containing strong ground clutter, subclutter transient events, and a moving object that is 20 times below the clutter standard deviation. Several frames out of a total of 200 are shown.
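The contrast between frame subtraction and a subpixel clutter model can be shown in one dimension. The sketch below is a simplification we assume for illustration: clutter objects are stationary points on the ground (in pixel units), pixel j integrates the ground interval [j + offset, j + 1 + offset), and the sensor offset per frame is known. The function `expected_pixels` is hypothetical, not the paper's model code.

```python
import numpy as np


def expected_pixels(clutter_pos, clutter_int, offset, n_pixels):
    """Expected pixel intensities when the footprint is shifted by a known offset.

    Each stationary point-like clutter object adds its intensity to the pixel
    whose ground interval contains it; objects outside the frame are ignored.
    """
    idx = np.floor(np.asarray(clutter_pos) - offset).astype(int)
    inside = (idx >= 0) & (idx < n_pixels)
    weights = np.asarray(clutter_int, dtype=float)[inside]
    return np.bincount(idx[inside], weights=weights, minlength=n_pixels)


pos = np.array([0.2, 0.7, 1.3])    # clutter ground positions, in pixel units
inten = np.array([1.0, 2.0, 4.0])  # clutter intensities
frame_a = expected_pixels(pos, inten, offset=0.0, n_pixels=2)  # [3., 4.]
frame_b = expected_pixels(pos, inten, offset=0.5, n_pixels=2)  # [6., 0.]

# Frame subtraction leaves large residues although nothing moved on the ground.
print(frame_b - frame_a)  # [ 3. -4.]

# Subtracting the model prediction instead leaves only the weak object.
target = np.array([0.0, 0.05])
observed = frame_b + target
residual = observed - expected_pixels(pos, inten, offset=0.5, n_pixels=2)
print(residual)
```

In MLANS the subpixel clutter intensities are themselves unknown parameters estimated from many frames; here they are given, so the sketch isolates why a known-motion model removes clutter that differencing cannot.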

In addition to the moving object and stationary clutter in this example, there are clutter events that are stationary on the ground but whose intensities change over time, such as sun glints. These transient events are modeled by modes whose coordinates move relative to the sensor with the known ground-clutter velocity and whose mean intensities (M_I) change over time according to the predetermined functional shape

$$M_{Ikmn} = A \exp\{-(t_n - t_0)^2 / 2\tau^2\}. \qquad (11)$$

Here, k and m are the class and mode numbers (up to 20 transient event modes were allowed, numbered k = 2, m = 2, ..., 21, with k = 1, m = 1 used for the moving object, and k = 2, m = 1 used for the stationary ground clutter); t_n is the time of measurement n; A, t_0, and τ are parameters of these modes corresponding to the amplitude, mid-time, and duration of the event. τ is fixed at the known value τ = 0.5 s, while A and t_0 are estimated adaptively by solving the corresponding ML equations, which in this case result in the following equations, replacing eqn (10) for M_km:

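$$
\begin{aligned}
&\Big\langle \big(X_n - A \exp\{-(t_n - t_0)^2 / 2\tau^2\}\big)\, \exp\{-(t_n - t_0)^2 / 2\tau^2\} \Big\rangle = 0, \\
&\Big\langle \big(X_n - A \exp\{-(t_n - t_0)^2 / 2\tau^2\}\big)(t_n - t_0)\, \exp\{-(t_n - t_0)^2 / 2\tau^2\} \Big\rangle = 0. \qquad (12)
\end{aligned}
$$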
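Eqns (12) are the stationarity conditions of a weighted squared residual: differentiating Σ w_n (X_n - A g_n)^2 with g_n = exp{-(t_n - t_0)^2 / 2τ^2} with respect to A gives the first equation, and with respect to t_0 (for A ≠ 0) gives the second. For a fixed t_0 the first equation yields A = ⟨X g⟩ / ⟨g^2⟩ in closed form. The sketch below assumes the averages ⟨·⟩ are weighted by association probabilities w_n and searches t_0 on a grid; the grid search is our simplification, not the paper's solver, and `fit_transient` is a hypothetical name.

```python
import numpy as np


def fit_transient(t, x, w, tau=0.5, t0_grid=None):
    """Estimate A and t0 of A * exp(-(t - t0)^2 / (2 tau^2)) from weighted samples.

    For each candidate t0, the first equation of (12) gives A in closed form;
    t0 is then chosen to minimize the weighted squared residual, whose
    stationary points satisfy the second equation of (12).
    """
    if t0_grid is None:
        t0_grid = np.linspace(t.min(), t.max(), 2001)
    best = None
    for t0 in t0_grid:
        g = np.exp(-(t - t0) ** 2 / (2.0 * tau ** 2))
        denom = np.sum(w * g * g)
        if denom == 0.0:
            continue
        a = np.sum(w * x * g) / denom           # closed-form A for this t0
        sse = np.sum(w * (x - a * g) ** 2)      # weighted squared residual
        if best is None or sse < best[0]:
            best = (sse, a, t0)
    return best[1], best[2]


# Noiseless transient (tau = 0.5 s, as in the text) with amplitude 3 peaking at 1.2 s.
t = np.linspace(0.0, 4.0, 81)
x = 3.0 * np.exp(-(t - 1.2) ** 2 / (2 * 0.5 ** 2))
a_hat, t0_hat = fit_transient(t, x, np.ones_like(t))
print(round(a_hat, 3), round(t0_hat, 3))
```

A finer grid or a few Newton steps on the second equation would refine t_0; the grid keeps the sketch short and robust to multiple local minima.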

The MLANS operation consists of the fuzzy clustering of the sensor data into the moving object and the stationary and transient clutter models. This clustering and the parameter estimation for each model are the fundamental operations that provide for the concurrent detection, data association, classification and tracking of the object of interest as well as the ancillary classification of the transient clutter events.

[Figure 8: the frames of Figure 7 (frames 1, 18, 35, 52, 100, and 200), elevation vs. azimuth, with pixel classifications; legend: true position, target, other events, benign background.]

FIGURE 8. The results of concurrent detection, classification and tracking of a subclutter object and transient events are shown. The true positions of the moving object and transient events are marked by x, and pixel classifications are shown as grey for the stationary clutter background, as dark grey for the transient clutter and as black for the moving object. The moving object is correctly detected, classified and tracked, despite being 20 times below the clutter standard deviation.

[Figure 9: probability of detection (0 to 0.9) vs. scan number (1 to 20); curves labelled 2 dB (NRC Preliminary), 4 dB, and 13 dB.]

FIGURE 9. A comparison of the MLANS concurrent detection and tracking performance to that of the state-of-the-art implementation of the MHT algorithm. Probability of detection (vertical axis) is shown for a fixed value of the false alarm probability as a function of the number of scans (horizontal axis) for various values of the signal-to-clutter (S/C) ratio. MLANS outperforms MHT in this example by about 20 dB.

The results of concurrent detection, classification and tracking are shown in Figure 8, where the true positions of the moving object and transient events are marked by x, and pixel classifications are shown as gray for the stationary clutter background, as dark gray for the transient clutter and as black for the moving object. The moving object is correctly detected, classified and tracked, despite being 20 times below the clutter standard deviation.

A comparison of the MLANS concurrent detection and tracking performance to that of the state-of-the-art implementation of the MHT algorithm is illustrated in Figure 9, which shows the MLANS performance using sensor data and theoretical performance curves computed for the MHT algorithm. In this figure, the theoretical detection performance of the MHT algorithm is shown for a fixed value of the false alarm probability as a function of the number of scans for various values of the signal-to-clutter (S/C) ratio. MLANS outperforms MHT in this example by about 20 dB, which can be understood as follows: because of the computational complexity of the MHT algorithm, a pre-detection threshold has to be used, degrading performance at low S/C. Two qualifications have to be made. On the one hand, the MLANS result has to be considered preliminary because of the limited amount of data utilized, while the theoretical performance curves for the MHT algorithm have been verified in operations. On the other hand, the MHT limitations cannot be significantly relieved by an increase in the (already formidable) computer power, because of the exponential computational complexity of the MHT.

[Figure 10: low-resolution IR images of the friendly and hostile vehicles.]

FIGURE 10. Low resolution target images (without noise).

[Figure 11: MMW signatures of the friendly and hostile vehicles at aspect angles from 0° to 337.5° in 22.5° steps, plotted against range bins (1 to 64).]

FIGURE 11. MMW target data, 95 GHz MMW radar.
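Why a pre-detection threshold hurts at low S/C can be seen with a simple model that we assume purely for illustration (it is not the MHT performance analysis behind Figure 9): clutter amplitudes are unit-variance Gaussian, the threshold is set to give a chosen per-cell false alarm probability, and a target adds a constant amplitude of 10^(S/C/20) clutter standard deviations, S/C being a power ratio in dB (20 dB is a factor of 100 in power). `pass_probability` is a hypothetical helper.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal clutter amplitude (assumption)


def pass_probability(snr_db: float, p_fa: float) -> float:
    """Probability that a target return survives a pre-detection threshold.

    The threshold is placed so that clutter alone exceeds it with probability p_fa;
    the target shifts the amplitude by 10**(snr_db / 20) clutter standard deviations.
    """
    threshold = STD_NORMAL.inv_cdf(1.0 - p_fa)
    mean_shift = 10 ** (snr_db / 20)
    return 1.0 - STD_NORMAL.cdf(threshold - mean_shift)


# An object 20 times below the clutter standard deviation is about -26 dB.
for snr_db in (-26.0, 0.0, 20.0):
    print(snr_db, round(pass_probability(snr_db, p_fa=1e-3), 4))
```

At -26 dB barely more target returns than clutter returns pass the threshold, so a tracker fed only thresholded detections has almost nothing to work with, whereas a method that models all returns can still accumulate evidence over many scans.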

5. IDENTIFICATION FRIEND OR FOE

This section illustrates an application of MLANS to the problem of non-cooperative IFF, first using a single IR sensor and second by fusing IR and millimeter wave (MMW) sensors. In both cases, data for similar friendly and hostile military vehicles are used. The IR data consist of 16 images for each vehicle, over the same set of aspect angles (Figure 10). The image resolution corresponds to relatively long-range views. The MMW data shown in Figure 11 consist of target signatures taken at the same angles. MLANS was trained on every other aspect angle (i.e., 22.5°, 67.5°, 112.5°, 157.5°, 202.5°, 247.5°, 292.5°, and 337.5°). The MLANS generalization performance was then tested on the remaining intermediate views (i.e., 45°, 90°, 135°, 180°, 225°, 270°, 315°, and 360°). The MLANS generalization performance at identifying targets as friendly or hostile over previously unseen aspect angles is shown in Table 1, which gives results for three types of classifiers. The quadratic classifier was trained and tested on all 16 aspect angles (no generalization required). The nearest neighbor classifier was trained and tested in exactly the same way as MLANS, as described above. As can be seen from Table 1, MLANS outperformed both these methods by a substantial margin. For every algorithm we tested, the two-sensor suite performs better than the IR sensor alone, as theoretically expected for optimal or probabilistic fusion. MLANS again outperformed the classical algorithms because of the adaptive estimation of its internal statistical model; in fact, MLANS achieved the maximal performance level.

TABLE 1. MLANS IFF Performance Compared to the Nearest Neighbor and Quadratic Classifiers

| Sensors | MLANS generalization performance using 5 modes (templates) | SPR nearest neighbor classifier generalization performance using 8 templates | SPR quadratic classifier performance using same data for testing and training |
|---|---|---|---|
| IR alone | Friendly PCC = 100%; Hostile PCC = 75% | Friendly PCC = 100%; Hostile PCC = 62% | Friendly PCC = 81%; Hostile PCC = 69% |
| IR and MMW | Friendly PCC = 100%; Hostile PCC = 100% | Friendly PCC = 100%; Hostile PCC = 87% | Friendly PCC = 93%; Hostile PCC = 82% |

PCC: probability of correct classification.
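The paper does not spell out its fusion rule here, so the sketch below shows the standard probabilistic combination under an assumption we state explicitly: IR and MMW features are conditionally independent given the class, so per-sensor log-likelihoods simply add to the log prior. It also encodes the train/test protocol described above (train on odd multiples of 22.5°, test on the intermediate views). Both helper names are hypothetical, and the log-likelihood values are made-up numbers chosen only to show a case where the second sensor resolves an ambiguous IR decision.

```python
import numpy as np

ASPECTS = np.arange(16) * 22.5  # 0, 22.5, ..., 337.5 degrees


def alternate_aspect_split(aspects=ASPECTS):
    """Train on every other aspect angle; test on the intermediate views."""
    train = aspects[1::2]  # 22.5, 67.5, ..., 337.5
    test = aspects[0::2]   # 0 (= 360), 45, ..., 315
    return train, test


def fused_decision(loglik_ir, loglik_mmw, log_prior):
    """Index of the class maximizing log prior + IR log-likelihood + MMW log-likelihood.

    Assumes the two sensors' features are conditionally independent given the class.
    """
    score = np.asarray(log_prior) + np.asarray(loglik_ir) + np.asarray(loglik_mmw)
    return int(np.argmax(score))


train, test = alternate_aspect_split()
log_prior = np.log([0.5, 0.5])   # classes: 0 = friendly, 1 = hostile
ir = [-1.0, -1.1]                # IR slightly favors friendly (illustrative values)
mmw = [-5.0, -1.0]               # MMW strongly favors hostile (illustrative values)
print(fused_decision(ir, [0.0, 0.0], log_prior))  # 0: IR alone says friendly
print(fused_decision(ir, mmw, log_prior))         # 1: fused decision says hostile
```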

In addition, MLANS used fewer modes (templates) than the nearest neighbor classifier: 5 versus 8. In this example just one scenario parameter, the aspect angle, varies, and MLANS needs ~5/8 as many templates; for a large number of scenario parameters and for high dimensions, this saving is expected to grow exponentially, leading to significant operational advantages in terms of memory, processing speed, and training requirements.
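The "exponentially enhanced" saving can be made concrete under an assumption the paper does not state: that template counts multiply across independent scenario parameters, with 5 MLANS modes versus 8 nearest-neighbor templates per parameter as in this example. Under that assumption the template ratio for d parameters is (5/8)^d.

```python
from fractions import Fraction


def template_ratio(n_params: int, mlans_per_param: int = 5, nn_per_param: int = 8) -> Fraction:
    """Ratio of MLANS modes to nearest-neighbor templates, assuming counts multiply per parameter."""
    return Fraction(mlans_per_param, nn_per_param) ** n_params


for d in (1, 2, 3):
    print(d, template_ratio(d))  # 5/8, 25/64, 125/512
```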

6. DISCUSSION

A novel mathematical technique has been developed using MLANS for concurrently performing multiple surveillance functions, such as detection, tracking, sensor fusion, and classification. By using an internal model-based structure, MLANS concurrently associates data with appropriate models while estimating model parameters from the data. While other state-of-the-art techniques for concurrent performance of multiple surveillance functions often lead to a combinatorial explosion of the training or processing requirements, MLANS training and processing complexity is essentially linear. The concurrent processing allows the utilization of all the available information at every step, resulting in improvements over current approaches that perform surveillance functions in sequential steps. The improved utilization of information is especially needed at low signal-to-clutter ratios and at long ranges, and potentially extends the battlespace to the information-theoretic limits.

The problem of fusing multi-source information into a unified perception of the world is a fundamental problem in computational intelligence as well as in understanding human intelligence. Computational approaches to pattern recognition during the 1950s and 1960s, using early neural network paradigms and pattern recognition algorithms, were based on the concept of self-learning from examples without a need for complicated a-priori knowledge. These approaches led to difficulties related to exorbitant training requirements. In fact, training requirements for these paradigms are often exponential in the problem complexity (Perlovsky, 1994a). To overcome these difficulties, Minsky (1968) proposed utilizing extensive a-priori knowledge in the form of rule-based systems. Minsky's approach is similar to Plato's conception of mind based on a-priori ideas (Plato, Phaedrus). About 2300 years ago, Plato faced the very first question about the intellect: how is it possible at all? He concluded that our ability to think is founded on concepts, or abstract ideas (Eidos), being known to us a-priori, through a mystic connection with a world of ideas.

Although Minsky emphasized that his method does not solve the problem of learning (Minsky, 1975), attempts to add learning to Minsky's artificial intelligence have continued in various fields of modeling the mind, including linguistics and pattern recognition (Koster & May, 1981; Winston, 1984; Bonissone et al., 1991; Botha, 1991; Keshavan et al., 1993). In linguistics, Chomsky proposed building a self-learning system that could learn a language similarly to a human, using the symbolic mathematics of rule systems (Chomsky, 1972). In Chomsky's approach, learning a language is based on a language faculty, a genetically inherited component of the mind containing a-priori knowledge of language. This direction in linguistics, named the Chomskyan revolution, was about recognizing the two questions about the intellect (first, how is it possible? and second, how is learning possible?) as the center of linguistic inquiry and of a mathematical theory of mind (Botha, 1991). However, combining adaptive learning with a-priori knowledge proved difficult: variabilities in data required more and more detailed rules, leading to exponential complexity of logical inference (Winston, 1984), which is not physically realizable for complicated real-world problems.

Strikingly, the first to point out that learning cannot be achieved in Plato's theory of mind was Aristotle. Aristotle recognized that in Plato's formulation there is no learning, and the world of ideas is completely separated from the world of experience. Seeking to unite these two worlds, he developed a concept of form having a universal and higher reality and being a formative principle in individual experience (Metaphysics). In the Aristotelian theory of form, the adaptivity of the mind was due to a meeting between the a-priori form and matter, forming an individual experience. Parametric model-based approaches have been proposed to combine the adaptivity of parameters with the a-priori nature of models. However, the algorithms most widely used today to combine adaptivity and a-priori knowledge in a model-based paradigm are based on the concept of multiple hypothesis testing, such as the MHT algorithm discussed in Section 4. These algorithms lead to exponential computational complexity and are not suited for real-world problems. Accordingly, a recent National Science Foundation report concludes that "... much of our current models and methodologies do not seem to scale out of limited 'toy' domains" (Negahdaripour & Jain, 1991).

Closest to the Aristotelian concept of mind are the mathematical methods, begun by Grossberg, that are founded on the new intuition of the physics of mind as a neuron field theory. In the theory of adaptive resonance, perception is a resonance between afferent and efferent signals, that is, between signals coming from the outside, from sensory cells receiving external stimuli, and signals coming from the inside, generated by a-priori models (Grossberg, 1980). This concept of mind as a neuron field theory is further developed in MLANS, which permits utilizing a wide variety of a-priori models and leads to linear computational complexity that is physically realizable.

In this paper we have described the development and application of MLANS to adaptive image clutter characterization based on a statistical model, which leads to improved target detection in the practically important operational region of low false alarm rates and also provides a quantitative adaptive IR clutter metric for ATR system evaluation. Concurrent detection and tracking based on a combined statistical and dynamical model have been described, illustrating the performance of these functions in a high-clutter environment. A multi-sensor MLANS model has been applied to the noncooperative IFF problem using fusion of IR and MMW sensors, resulting in improved IFF compared with using the IR sensor alone. MLANS performance has been compared to that of classical algorithms, and improved performance has been demonstrated.

NOMENCLATURE

N: total number of observed samples
K: total number of classes
M: total number of modes within each class
n = 1, ..., N: observation (sample) index
k = 1, ..., K: class index
m = 1, ..., M: mode index
X_n: observation or measurement (or feature) vector for observation n
pdf(X): probability density function (pdf) for observations X_n
pdf(X_n|k, m): marginal probability density function for observations X_n given class k, mode m
Π_{n=1}^{N}: product over n = 1, ..., N
Σ_k: sum over k = 1, ..., K
E{·}: expected value
⟨·⟩: class and mode marginal averages
det: determinant

d: dimensionality of the vector X_n
r_km: expected values of rates (prior probabilities) for class k and mode m
N_km: expected number of samples for class k and mode m
M_nkm: mean or expected value of the nth observation for class k, mode m
C_nkm: covariance of the nth observation for class k, mode m
t_n: time of observation n
t: current time
R_nkm: positional components of M_nkm
R(k,m): track state parameters, the object position vector at the current time t
V(k,m): track state parameters, the object velocity vector at the current time t
W_nkm: neural association subsystem weights
P(k,m|n): a-posteriori Bayes probabilities for sample n belonging to class k, mode m
P_d: probability of detection
P_l: probability of leakage
probability of false alarm
θ_n: vector of elevation and azimuth angles
σ: standard deviation
M_Ikmn: pixel n marginal mean intensity, given class k, mode m
A: maximal intensity of a transient event
t_0: mid-time of a transient event
τ: duration of a transient event
S/C: signal to clutter ratio

REFERENCES

Aristotle (IV BC). Metaphysics. Trans. H.G. Apostle, 1966, Bloomington, IN: Indiana University Press.

Bar-Shalom, Y., & Tse, E. (1975). Tracking in a cluttered environment with probabilistic data association. Automatica, 11, 451-460.

Blackman, S. S. (1986). Multiple target tracking with radar applications. Norwood, MA: Artech House.

Bonissone, P. P., Henrion, M., Kanal, L. N., & Lemmer, J. F. (1991). Uncertainty in Artificial Intelligence 6. Amsterdam: North Holland.

Botha, R. P. (1991). Challenging Chomsky: The generative garden game. Oxford: Basil Blackwell.

Brown, J. S. (1994). Desert Storm experience. Combat Identification Systems Conference. Monterey, CA.

Carpenter, G. A. (1989). Neural network models for pattern recognition and associative memory. Neural Networks, 2, 243-257.

Chernick, J., Noah, P. V., Noah, M. A., & Schroeder, J. (1991). Background characterization techniques for target detection using scene metrics and pattern recognition. Optical Engineering, 30, 254-258.


Chomsky, N. (1972). Language and mind. New York: Harcourt Brace Jovanovich.

Clark, L. G., Perlovsky, L. I., Schoendorf, W. H., Plum, C. P., & Keller, T. L. (1992). Evaluation of forward-looking infrared sensors for automatic target recognition using an information-theoretic approach. Optical Engineering, 31(12), 2618-2627.

Grossberg, S. (1980). How does a brain build a cognitive code? Psychological Review, 87, 1-51.

Keshavan, H. R., Barnett, J., Geiger, D., & Verma, T. (1993). Introduction to the special section on probabilistic reasoning. IEEE Trans. PAMI, 15(3), 193-195.

Koster, J., & May, R. (1981). Levels of syntactic representation. Dordrecht: Foris Publications.

Minsky, M. L. (1968). Semantic information processing. Cambridge, MA: The MIT Press.

Minsky, M. L. (1975). A framework for representing knowledge. In P. H. Winston (Ed.), The Psychology of Computer Vision. New York: McGraw-Hill.

Negahdaripour, S., & Jain, A. K. (1991). Final Report of the NSF Workshop on the Challenges in Computer Vision Research; Future Directions of Research. National Science Foundation.

Parra-Loera, R., Thompson, W. E., & Akbar, S. A. (1992). Multilevel distributed fusion of multisensor data. Conference on Signal Processing, Sensor Fusion and Target Recognition, SPIE Proceedings, Vol. 1699.

Perlovsky, L. I. (1987). Multiple sensor fusion and neural networks. DARPA Neural Network Study. Lexington, MA: MIT/Lincoln Laboratory.

Perlovsky, L. I. (1988a). Neural networks for pattern recognition and Cramer-Rao bounds. Boston University Engineering Seminar, Boston, MA.

Perlovsky, L. I. (1988b). Neural networks for sensor fusion and adaptive classification. First Annual International Neural Network Society Meeting, Boston, MA.

Perlovsky, L. I. (1989). Cramer-Rao bounds for the estimation of normal mixtures. Pattern Recognition Letters, 10, 141-148.

Perlovsky, L. I. (1990). Self-learning ATR based on maximum likelihood artificial neural system. Research Conference on Neural Networks for Automatic Target Recognition. Boston University, Tyngsboro, MA.

Perlovsky, L. I. (1991a). Computational concepts in ATR: neural networks, statistical pattern recognition, and model based vision. ATR Working Group Meeting, Seattle, WA.

Perlovsky, L. I. (1991b). Model based target tracker with fuzzy logic. 25th Annual Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA.

Perlovsky, L. I. (1992a). Fuzzy decision sensor fusion. Fourth International Conference on Strategic Software Systems, Huntsville, AL.

Perlovsky, L. I. (1992b). Model based neural networks. Invited Lecture at the Applied Mathematics Seminar. University of Massachusetts, Lowell, MA.

Perlovsky, L. I. (1994a). Computational concepts in classification: neural networks, statistical pattern recognition, and model based vision. Journal of Mathematical Imaging and Vision, 4(1).

Perlovsky, L. I. (1994b). A model based neural network for transient signal processing. Neural Networks, 7(3), 565-572.

Perlovsky, L. I., & Jaskolski, J. V. (1994). Maximum likelihood adaptive neural controller. Neural Networks, 7(4), 671-680.

Perlovsky, L. I., & Marzetta, T. L. (1992). Estimating a covariance matrix from incomplete independent realizations of a random vector. IEEE Transactions on Signal Processing, 40(8), 2097-2100.

Perlovsky, L. I., & McManus, M. M. (1991). Maximum likelihood artificial neural system (MLANS) for adaptive classification and sensor fusion. Neural Networks, 4(1), 89-102.

Perlovsky, L. I., & Plum, C. P. (1991). Model based multiple target tracking using MLANS. Proceedings of Third Biennial ASSP Mini Conference, Boston, MA.

Perlovsky, L. I., Schoendorf, W. H., Clark, L. G., & Keller, T. L. (1992). Information-theoretic performance model for ATR. IRIS Meeting on Passive Sensors, Laurel, MD.

Plato (IV BC). Phaedrus. Translated in Plato, L. Cooper (Ed.). New York: Oxford University Press.

Singer, R. A., Sea, R. G., & Housewright, R. B. (1974). Derivation and evaluation of improved tracking filters for use in dense multitarget environments. IEEE Transactions on Information Theory, IT-20, 423-432.

Winston, P. H. (1984). Artificial Intelligence, 2nd edn. Reading, MA: Addison-Wesley.