Emoji2022 2022 The Fifth International Workshop on Emoji ...

-

Upload

khangminh22 -

Category

Documents

-

view

2 -

download

0

Transcript of Emoji2022 2022 The Fifth International Workshop on Emoji ...

Emoji2022 2022

The Fifth International Workshop on Emoji Understandingand Applications in Social Media

Proceedings of the Workshop

July 14, 2022

©2022 Association for Computational Linguistics

Order copies of this and other ACL proceedings from:

Association for Computational Linguistics (ACL)209 N. Eighth StreetStroudsburg, PA 18360USATel: +1-570-476-8006Fax: [email protected]

ISBN 978-1-955917-95-7

i

Organizing Committee

General Chair

Sanjaya Wijeratne, None

Program Chairs

Jennifer Lee, Emojination, USAHoracio Saggion, Department of Information and Communication Technologies, Universitat Pom-peu Fabra, SpainAmit Sheth, Artificial Intelligence Institute, University of South Carolina, USA

Publication Chair

Sanjaya Wijeratne, None

ii

Program Committee

Senior Program Committee

Sanjaya Wijeratne, NoneHoracio Saggion, Universitat Pompeu Fabra, Spain

Program Committee

Lakshika Balasuriya, Gracenote, Inc., USAZhenpeng Chen, Peking University, ChinaJoão Cunha, University of Coimbra, PortugalLaurie Feldman, University at Albany - State University of New York, USAYunhe Feng, Universtiy of Washington, USADimuthu Gamage, Google, USAManas Gaur, Samsung Research America, USAJing Ge-Stadnyk, University of California, Berkeley, USAKalpa Gunaratna, Samsung Research America, USASusan Herring, Indiana University - Bloomington, USAAnurag Illendula, New York University, USAXuan Lu, University of Michigan, USASeda Mut, Universitat Pompeu Fabra, SpainSwati Padhee, Wright State University, USASujan Perera, Amazon, USAAlexander Robertson, Google, USASashank Santhanam, University of North Carolina at Charlotte, USANing Xie, Amazon, USA

Keynote Speakers

Alexander Robertson, Google, USABenjamin Weissman, Rensselaer Polytechnic Institute, USA

iii

Table of Contents

Interpreting Emoji with EmojiJens Reelfs, Timon Mohaupt, Sandipan Sikdar, Markus Strohmaier and Oliver Hohlfeld . . . . . . . 1

Beyond emojis: an insight into the IKON languageLaura Meloni, Phimolporn Hitmeangsong, Bernhard Appelhaus, Edgar Walthert and Cesco Reale

11

Emoji semantics/pragmatics: investigating commitment and lyingBenjamin Weissman . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Understanding the Sarcastic Nature of Emojis with SarcOjiVandita Grover and Hema Banati . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Conducting Cross-Cultural Research on COVID-19 MemesJing Ge-Stadnyk and Lusha Sa . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Investigating the Influence of Users Personality on the Ambiguous Emoji PerceptionOlga Iarygina . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Semantic Congruency Facilitates Memory for EmojisAndriana Ge-Christofalos, Laurie Feldman and Heather Sheridan . . . . . . . . . . . . . . . . . . . . . . . . . . 63

EmojiCloud: a Tool for Emoji Cloud VisualizationYunhe Feng, Cheng Guo, Bingbing Wen, Peng Sun, Yufei Yue and Dingwen Tao . . . . . . . . . . . . 69

Graphicon Evolution on the Chinese Social Media Platform BiliBiliYiqiong Zhang, Susan Herring and Suifu Gan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

iv

Program

Thursday, July 14, 2022

09:00 - 09:10 Welcome and Opening Remarks

09:10 - 10:10 Keynote - The Next 5000 Years of Emoji Research, Alexander Robertson, Google,USA

10:10 - 10:30 Session A - Paper Presentations

Interpreting Emoji with EmojiJens Reelfs, Timon Mohaupt, Sandipan Sikdar, Markus Strohmaier and OliverHohlfeld

10:30 - 11:00 Morning Break

11:00 - 12:30 Session B - Paper Presentations

Understanding the Sarcastic Nature of Emojis with SarcOjiVandita Grover and Hema Banati

Investigating the Influence of Users Personality on the Ambiguous EmojiPerceptionOlga Iarygina

Beyond emojis: an insight into the IKON languageLaura Meloni, Phimolporn Hitmeangsong, Bernhard Appelhaus, Edgar Walthertand Cesco Reale

Graphicon Evolution on the Chinese Social Media Platform BiliBiliYiqiong Zhang, Susan Herring and Suifu Gan

Conducting Cross-Cultural Research on COVID-19 MemesJing Ge-Stadnyk and Lusha Sa

12:30 - 14:00 Lunch Break

14:00 - 15:00 Keynote - Processing Emoji in Real-Time, Benjamin Weissman, RensselaerPolytechnic Institute, USA

v

Thursday, July 14, 2022 (continued)

15:00 - 15:45 Session C - Paper Presentations

EmojiCloud: a Tool for Emoji Cloud VisualizationYunhe Feng, Cheng Guo, Bingbing Wen, Peng Sun, Yufei Yue and Dingwen Tao

Semantic Congruency Facilitates Memory for EmojisAndriana Ge-Christofalos, Laurie Feldman and Heather Sheridan

Emoji semantics/pragmatics: investigating commitment and lyingBenjamin Weissman

15:45 - 15:50 Closing Remarks

vi

Proceedings of the The Fifth International Workshop on Emoji Understanding and Applications in Social Media, pages 1 - 10July 14, 2022 ©2022 Association for Computational Linguistics

Interpreting Emoji with Emoji:⇒

Jens Helge Reelfs1, Timon Mohaupt∗2, Sandipan Sikdar3, Markus Strohmaier4, Oliver Hohlfeld1

1 Brandenburg University of Technology, 2 Talentschmiede Unternehmensberatung AG3 RWTH Aachen University, 4 University of Mannheim, GESIS, CSH Vienna

[email protected], [email protected], [email protected],[email protected], [email protected]

Abstract

We study the extent to which emoji can beused to add interpretability to embeddings oftext and emoji. To do so, we extend thePOLAR-framework that transforms word em-beddings to interpretable counterparts and ap-ply it to word-emoji embeddings trained onfour years of messaging data from the Jodelsocial network. We devise a crowdsourced hu-man judgement experiment to study six use-cases, evaluating against words only, whatrole emoji can play in adding interpretabil-ity to word embeddings. That is, we use arevised POLAR approach interpreting wordsand emoji with words, emoji or both accord-ing to human judgement. We find statisticallysignificant trends demonstrating that emoji canbe used to interpret other emoji very well.

1 Introduction

Word embeddings create a vector-space representa-tion in which words with a similar meaning are inclose proximity. Existing approaches to make em-beddings interpretable, e.g., via contextual (Subra-manian et al., 2018), sparse embeddings (Panigrahiet al., 2019), or learned (Senel et al., 2018) transfor-mations (Mathew et al., 2020)—all focus on textonly. Yet, emoji are widely used in casual com-munication, e.g., Online Social Networks (OSN),and are known to extend textual expressiveness,demonstrated to benefit, e.g., sentiment analysis(Novak et al., 2015; Hu et al., 2017).Goal. We raise the question if we can lever-age the expressiveness of emoji to make wordembeddings—and thus also emoji—interpretable.I.e., can we adopt word embedding interpretabilityvia leveraging semantic polar opposites (e.g., cold/ hot) to emoji (e.g., / , or / ) for inter-preting words or emoji w.r.t. human judgement.

*Timon Mohaupt performed this work during his masterthesis at Brandenburg University of Technology and RWTHAachen University.

Approach. Motivated and based upon POLAR(Mathew et al., 2020), we deploy a revised variantPOLARρ that transforms arbitrary word embed-dings into interpretable counterparts. The key ideais to leverage semantic differentials as a psychomet-ric tool to align embedded terms on a scale betweentwo polar opposites. Employing a projection-basedtransformation in POLARρ, we provide embed-ding dimensions with semantic information. I.e.,the resulting interpretable embedding space valuesdirectly estimate a term’s position on a-priori pro-vided polar opposite scales, while approximatelypreserving in-embedding structures (§ 2).

The main contribution of this work is the large-scale application of this approach to a social mediacorpus and especially its evaluation in a crowd-sourced human judgement experiment. For study-ing the role of emoji in interpretability, we create aword-emoji input embedding from on a large socialmedia corpus. The dataset comprises four yearsof complete data in a single country from the on-line social network provider Jodel (48M posts ofwhich 11M contain emoji). For subsequent mainevaluation, we make this embedding interpretablewith word and emoji opposites by deploying ouradopted tool POLARρ (§ 3).

Given different expressiveness of emoji, we askRQ1) How does adding emoji to POLARρ impactinterpretability w.r.t. to human judgement? I.e., dohumans agree on best interpretable dimensions fordescribing words or emoji with word or emoji oppo-sites? And RQ2) How well do POLARρ-semanticdimensions reflect a term’s position on a scale be-tween word or emoji polar opposites?Human judgement. We design a crowdsourcedhuman judgement experiment (§ 4) to study ifadding emoji to word embeddings and POLARρ

in particular increases the interpretability—whilealso answering how to describe emoji best. Our hu-man judgement experiment involves six campaignsexplaining Words (W/*) or Emoji (E/*) with Words,

1

(W/W) Words with Words

Beach0 5-5 10-10

sand stone

holiday home

mountain sea

swim sink

land sea

(W/M) Words with Words & Emoji, Mixed

Beach0 5-5 10-10

sand stone

holiday home

mountain sea

(W/E) Words with Emoji

Beach0 5-5 10-10

(E/W) Emoji with Words

0 5-5 10-10

land sea

night sun

work recreation

holiday home

rains dryspell

(E/M) Emoji with Words & Emoji, Mixed

0 5-5 10-10

land sea

night sun

(E/E) Emoji with Emoji (cf. paper title)

0 5-5 10-10

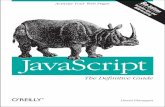

Figure 1: The POLAR-framework (Mathew et al., 2020) makes word embeddings interpretable leveraging polaropposites. It provides a new interpretable embedding subspace with systematic polar opposite scales: Along sixuse-cases, we evaluate which role emoji expressiveness plays in adding interpretability to word embeddings. I.e.,how well can our adopted POLARρ interpret (W/*) words or (E/*) emoji with words, emoji or both (*/M), Mixed.We test POLARρ alignment with human judgement as represented in shown semantic profiles above.

Emoji, or both Mixed. We evaluate two test con-ditions to answer both research questions: (RQ1)a selection test studies if human subjects agree tothe POLARρ identified differentials (e.g., how doemoji affect POLARρ interpretability?), and (RQ2)a preference test that studies if the direction ona given differential scale is in line with humanjudgement (e.g., how well does POLARρ interpretscales).Results. POLARρ identifies the best interpretableopposites for describing emoji with emoji, yet gen-erally aligning well with human judgement. Exceptinterpreting words with emoji only probably dueto lack of emoji expressiveness indicated by coderagreement. Further, POLARρ estimates an embed-ded terms’ position on a scale between oppositessuccessfully, especially for interpreting emoji.Broader application. Not all emoji have a univer-sally agreed on meaning. Prior work showed thatdifferences in the meaning of emoji exist betweencultures (Guntuku et al., 2019; Gupta et al., 2021).Even within the same culture, ambiguity and dou-ble meanings of emoji exist (Reelfs et al., 2020).Currently, no data-driven approach exists to inferthe meaning of emoji—to make them interpretable.Our proposed approach can be used to tackle thischallenge since it makes emoji interpretable.

2 Creating Interpretable Embeddings

We explain next our deployed tool for creat-ing interpretable word-emoji embeddings: PO-

LAR (Mathew et al., 2020); and provide detailon a revised POLAR extension via projection.

2.1 POLAR ApproachSemantic Differentials. Based upon the idea ofsemantic differentials as a psychometric tool toalign a word on a scale between two polar oppo-sites (Fig. 1), POLAR (Mathew et al., 2020) takesa word embedding as input and creates a new inter-pretable embedding on a polar subspace. This sub-space, i.e., the opposites used for the interpretableembedding are defined by an external source.

That is, starting with a corpus and its vocab-ulary V , a word embedding created by an algo-rithm a (e.g., Word2Vec or GloVe) assigns vectors−→Wav ∈ Rd on d dimensions to all words v ∈ V ac-

cording to an optimization function (usually wordco-occurrence). This pretraining results in an em-bedding D =

[−→Wav, v ∈ V

]∈ R|V|×d.

Such embedding spaces carry a semantic struc-ture between embedded words, whereas the dimen-sions do not have any specific meaning. How-ever, we can leverage the semantic structure be-tween words to transform the embedding spaceto carrying over meaning into the dimensions:POLAR uses N semantic differentials/oppositesthat are itself items within the embedding, i.e.,P =

{(piz, p

i−z), i ∈ [1..N ], (piz, p

i−z) ⊆ V2

}.

As shown in Fig. 2a, given two anchor points foreach polar opposite, a line between them representsa differential—which we name POLAR direction

2

( - )

( - )

( - )

(a) Polar Directions/Differentials.

( - ) ( - )

(b) Excerpt: Per Direction Orthogonal Projection.

0

0

0

(c) Interpretable Scales for .

Figure 2: POLAR (Mathew et al., 2020) with Projection in a nutshell: We showcase POLARρ interpreting emojiwith emoji (E/E) (cf. paper title). (a) We leverage polar opposites (here: / , / , / ) to provide em-bedding dimensions with semantic information. By using opposite differential directions (red dashed vectors), wecreate a new interpretable subspace. (b) Orthogonal projection (blue dotted vectors) of an embedded term (here:

) onto this subspace (e.g., left: / , right: / ) yields a direct scale measure between both opposites in theadjacent leg (green vectors, directed alike the differential). (c) The resulting interpretable embedding now containsa tangible position estimation along employed polar dimensions for each embedded term (here: ).

(red dashed vectors):−−→diri =

−−→Wapiz−−−−→Wa

pi−z∈ Rd

Base Change. Naturally, we can use these dif-ferentials as a new basis for the interpretable em-bedding E. Gathering all directions in a matrixdir ∈ RN×d, we obtain for all embedded termsv ∈ V : dirT

−→Ev =

−→Wav , and ultimately apply a base

change transformation−→Ev = (dirT )−1

−→Wav yield-

ing an interpretable subspace along the differentials−−→diri that carries over specific geometric semanticsfrom the input embedding. I.e., for each wordv ∈ V within the resulting interpretable embeddingE, its embedding vector

−→Ev now carries a measure

along each polar dimension’s semantics.Limitations. Polar opposites being very closein the original embedding space might tear apart.From a technical perspective, the used pseudo in-verse for the base change becomes numericallyill-conditioned if d ≈ N (Mathew et al., 2020).

2.2 POLARρ Extension: ProjectionWhile the base change approach seems natural,its given limitations lead us to propose a variantthat comes with several benefits. Instead of cre-ating a new interpretable vector space, we takemeasurements on the differentials dir defined asbefore (Fig. 2a, red dashed vectors). However,we now project each embedding vector

−→Wv for

v orthogonally onto the differentials as shown inFig. 2b (blue dotted vectors). This leads to a small-est distance between both lines w.r.t. the differen-tial, yet simultaneously allows for a direct scalemeasure on the differential vector as shown in

Fig. 2b & Fig. 2c (green vectors). Thereby, we alsodecouple the transformation matrix, which easeslater add-ins to the interpretable embedding.

Orthogonal projection (blue dotted vectors) ofeach input embedding vector

−→Wav onto a differential

i provides us the adjacent leg vector as follows:

oprojdiri(−→Wav) =

−→Wav ·−−→diri

|−−→diri|︸ ︷︷ ︸scalar

·−−→diri

|−−→diri|︸ ︷︷ ︸direction

As this adjacent leg (green vectors)’s directionnaturally equals the differential, we focus onlyon the scalar part representing a direct scale mea-sure. By normalizing the differential vector lengthsdir = dir · |dir|−1, the projected scale value con-

veniently results in: oprojscalardiri(−→Wav) =

−→Wav ·−−→diri.

This transformation allows to create a new inter-pretable embedding E ∈ R|V|×N for each embed-ding vector

−→Wav (exemplified in Fig. 1) as follows:

−→Ev = oprojscalardir (

−→Wav) = dir

T−→Wav ∈ RN

Computationally it requires an inital matrix mul-tiplication for each embedded term; Dimensionincrements require a dot product on each term.Downstream Tasks. Other experiments indicatePOLARρ downstream task performance being onpar with the input embedding, and an edge overbase change POLAR if d ≈ N (not shown).

2.3 Measuring Dimension Importance

There can be many possible POLAR dimensions,which requires to select the most suitable ones.

3

That is, we want to define a limited set of oppo-sites that best describes words or emoji w.r.t. inter-pretability across the whole embedding.Extremal Word Score (EWSO). We propose anew metric to measure the quality of polar di-mensions complementing heuristics from (Mathewet al., 2020). It measures the embedding confi-dence and consistency along available differentials.The idea of POLARρ is that directions representsemantics within the input embedding. We deter-mine embedded terms shortest distance to theseaxes via orthogonal projection; we use resultingintersections as the position w.r.t. the directions.

That is, as a new heuristic, for each of our dif-ferentials diri, we look out for k = 10 embeddedwords at the extremes (having the highest scores ineach direction) and take their average cosine dis-tance within the original embedding D to the differ-ential as a measure. This results in the average sim-ilarity of existing extremal words on our scale—aheuristic that represents the skew-whiffiness withinthe extremes on a differential scale.

3 Approach: Embedding & Polarization

We next propose an approach to improve the inter-pretability of word embeddings by adding emoji. Ituses our extended version POLARρ and adds emojito the POLAR space by creating word embeddingsthat include emoji.

3.1 Data Set

We create a word embedding out of a social mediatext corpus, since emoji are prominent in communi-cation within Online Social Networks. We decidedto use a corpus from the Jodel network, whereabout one out of four sentences contain emoji (see(Reelfs et al., 2020)).The Jodel Network. We base our study on acountry-wide complete dataset of posts in the on-line social network Jodel, a mobile-only messagingapplication. It is location-based and establisheslocal communities relative to the users’ location.Within these communities, users can anonymouslypost photos from the camera app or content of upto 250 characters length, i.e., microblogging, andreply to posts forming discussion threads.Corpus. The network operators provided us withdata of content created in Germany from 2014 to2017. It contains 48M sentences, of which 11Mcontain emoji (1.76 emoji per sentence on average).Ethics. The dataset contains no personal informa-

tion and cannot be used to personally identify usersexcept for data that they willingly have posted onthe platform. We synchronize with the Jodel opera-tor on analyses we perform on their data.

3.2 Semantic Differential Sources

POLARρ can create interpretable embeddings w.r.t.a-priori provided opposites. We next describe howwe select these opposites to make POLARρ appli-cable to our data. Most importantly, the approachrequires being part of or locating desired oppositeswithin the original embedding space.Words. As we extend the word embedding spacewith emoji, we still want to use words. We findcommon sources of polar opposites in antonymwordlists (Shwartz et al., 2017) as used in the orig-inal POLAR work. To fit our German dataset, wetranslated and manually checked all pairs keeping1275 items. From GermaNet (Hamp and Feldweg,1997), we extracted 1732 word pairs via antonymrelations leading to |Pwords| = 1832 word pairs.Emoji. Being not ideal, but due to lack of bet-ter alternatives, we ended up heuristically creatingsemantic opposites from emoji through qualitativesurveys across friends and colleagues resulting in|Pemoji| = 44 emoji pairs, cf. Tab. 3. While wecould use far more opposites especially of facialemoji, due to emoji clustering in the input embed-ding, spanned expressive space would arguably be-come redundant at similar EWSO scores for manydirections. Effectively it may bias interpretabilityover proportionally towards facial emoji.

3.3 Polarization

Preprocessing. We tokenize sentences withspaCy and remove stopwords. To increase am-ounts of available data, we remove all emoji mod-ifiers (skin tone and gender): { , , }→ .Due to German language, we keep capitalization.Original Embedding. We use gensim imple-mentation of Word2Vec (W2V). A qualitative in-vestigation suggests that skip-gram works betterthan CBOW (better word analogy). We kept train-ing parameters largely at defaults including nega-tive sampling, opting for d = 300 dimensions.Interpretable Embedding. The actual applica-tion of embedding transformation is simple. Wecreate the matrix of differentials dir, the POLARsubspace, according to our antonym-set Pwords ∪Pemoji (§ 3.2). After normalizing the subspace vec-tors, we create all embedding vectors via projec-

4

withinterpret W Mixed E

Words (W/W) (W/M) (W/E)Emoji (E/W) (E/M) (E/E)

(a) Campaigns Overview.We interpret Words & Emoji withlikewise Words, Emoji, and Mixed(both).

Please choose 5 Pairs thatcharacterize best!

2 -2 black - white2 female - male2 -2 slow - fast2 fork - spoon

...

(b) Selection Task for Emoji/Mixed (E/M).

Which term describes better?← = →

©©©©©©©black ©©©©©©© white

female ©©©©©©© male©©©©©©©

slow ©©©©©©© fastfork ©©©©©©© spoon

...

(c) Preference Task for Emoji/Mixed (E/M).

Figure 3: (a) We conduct six campaigns measuring human interpretability for including emoji to the POLARρ

embedding space. Exemplified with the Emoji Mixed campaign (E/M): interpreting emoji with emoji and words.(b) In the Selection test, coders choose suitable differentials for describing a given term. (c) In the Preference test,coders provide their interpretation of a given term to a differential scale.

tion−→Ev = dir

T −→Wv, ∀v ∈ V . Though normal-ization requires careful later additions to the PO-LAR space, we opted for standard normalization,Estdnrm = [E−mean(E)] · std(E)−1, to ensure thatthe whole embedding space aligns properly aroundthe center of gravity on each differential scale. Weselect the best suited opposites for a given em-bedding space by using the Extremal Word Score(§ 2.3) for d=500+44 dimensions (words + emoji).

4 Human Evaluation Approach

While we have now created a supposedly inter-pretable embedding, it remains to be seen how wellit is perceived by humans. That is, we next evaluateour two key RQs, discuss significance, and providefurther details: RQ1) How well does POLARρ withEWSO perform in selecting most interpretable di-mensions at varying expressiveness of words andemoji? RQ2) How well do POLARρ scalar val-ues reflect directions on the differential scales? i)Do humans prefer emoji to words? ii) How welldo human raters align w.r.t. interpretability? iii)What impact do demographic factors play in inter-pretability with or without emoji?

4.1 Evaluation design

To gather human judgement, we employ crowd-sourcing on the Microworkers platform.

4.1.1 Questions & Evaluation MetricsOur evaluation of the POLARρ approach includ-ing emoji to the differentials bases on two mainquestions next to demographics.Selection test. Analogous to the original work,we want to find out whether humans agree on bestinterpretability of POLARρ selected differentialswith a word intrusion task. The question asks our

coders to select five out of ten differentials thatdescribe a given word best as shown in Fig. 3b.We select half of these dimensions according tothe highest absolute projection scale values (mostextreme). The other half consists of a random selec-tion from the bottom half of available differentials.I.e., if the projection approach determines inter-pretable dimensions well, humans would chooseall five out of five POLARρ chosen differentials.

As any user might choose differently, we counthow often coders choose certain differentials. Theresulting frequencies immediately translate in aranking that we leverage for calculating the fractionof Top 1..5 being POLARρ chosen differentials.

Preference test. Additionally, we introduce thepreference test evaluating whether the directionon a given differential scale is in line with humanjudgement. That is, for the same words from the se-lection test, we display the same ten dimensions (5top-POLARρ, 5 random bottom) where coders se-lect their interpretation of the given word on scalesas shown in Fig. 3c. Typical for semantic differen-tial scales (Tullis and Albert, 2008; Osgood et al.,1957), we deliberately use a seven point scale repre-senting -3 to 3, allowing more freedom than 3 or 5points (Simms et al., 2019). Further, we specificallyallow a center point—being equal—as it might in-dicate both being equally well or not good at all.

Due to scale usage heterogeneity (Rossi et al.,2001), we normalize coder chosen directions(shift+scale according to mean) prohibiting dispro-portional influence of single coders. We evaluatethe coder agreement by counting direction (sign)non-/alignment with the POLARρ projection scale.

Demographics. There is a multitude of other ex-ternal factors that might have impact on coders’choices. To better understand participant back-

5

ground, we ask for their education, emoji usage(familiarity), smartphone platform (different emojipictograms), and if they had used Jodel before.

4.1.2 Evaluation SetupCrowdworker Campaigns We run a campaignfor each of the cross product between words only,emoji only, and mixed Tab. 3a and Fig. 2. (W/W)word/word sets a baseline comparison to resultsfrom the original POLAR work, albeit now usingthe projection approach. (W/M): word/mixed usesnot only words, but includes emoji to the POLARsubspace. (W/E): word/emoji uses only emoji todescribe words. (E/W): emoji/word provides an-other baseline as to how well emoji may be inter-preted with words only. (E/M): emoji/mixed usesboth, emoji and words to interpret emoji. (E/E):emoji/emoji may be the most interesting as we onlyuse the expressiveness of emoji to describe emoji.

For mixed cases (emoji and words within the PO-LAR subspace), we create rankings from absolutescale values on both types (words/emoji) separatelyand then select them equally often to achieve simi-lar amounts of word and emoji differentials.Used Words & Emoji. We selected 50 words andemoji to be described in each campaign. To ensurethat i) we only use common words that are verylikely known to our coders, and ii) these words arecaptured well within the underlying embedding,we pick them out of the upper 25% quantile byoccurrences in the corpus (n ≥1.6k). I.e., we choseemoji and words that appear frequently and shouldtherefore be well-known. For words, we ensuredthat they are part of the German dictionary Duden.Tasks Setup. Within our six campagins, we nowhave each 50 emoji or 50 words to be interpreted.We bundled this into 5 tasks each consisting of 10emoji/words—resulting in 30 different tasks. Eachof these tasks contains the Selection test, Prefer-ence test, and demographics.Subjects. Human judgement and crowdsourcedevaluations are noisy by nature. While it is usuallysufficient to employ few trusted expert coders, it issuggested to use more in the non-expert case (Snowet al., 2008). Thus, we assign 5 different annotatorsto each of the 30 tasks. At estimated 10-15min du-ration, we provide 3$ compensation for answeringa single task, above minimum wage in our country.Quality Assurance. Any crowdsourcing task of-fers an incentive to rush tasks for the money, whichrequires us to employ means of quality assurance(QA). As we have an uncontrolled environment and

thus untrusted coders, we handcraft test questionsfor the selection and preference test. This task isnon-trivial as we require unambiguity in correctanswers (we ensured this with multiple qualitativetests among friends and colleagues), while simul-taneously not being too obvious. We place onetest question for selection and one for preferencerandomly into each task (ending up in 11 words oremoji per task). This also means that each codercan only participate in up to 5 different tasks withina single campaign before re-seeing a test question.

We define acceptance thresholds of four out offive correct answers for both, the selection test andthe correct direction for the preference test.

4.2 Results

Within the crowdsourcing process, we rejectedabout 10% of all tasks according to our QA mea-sures, which then had to be re-taken. We ended upwith 6 campaigns each having 50 words/emoji an-swered by 5 coders; summing up to completed 150tasks. In total, 16 different coders accomplishedthis series of which 4 completed Σ ≥ 100 tasks.

4.2.1 Interpreting EmojiFirst we focus on the describing emoji cam-paigns (E/*). We present our main evaluation re-sults in Tab. 1. Within columns, we show results forrandom, original POLAR, and our six campaigns.We split the rows into results from the selection testacross Top1..5 entries and the preference test.Selection Test. We find very good results alongall emoji campaigns (E/*) being consistently bet-ter than any campaign describing words (W/*).The best performance was achieved for explain-ing emoji with emoji (E/E); others are on par.

We want to note however, that the small size ofused emoji-differential set may ease selection. E.g.,facial expression emoji regularly achieve higherembedding scores than others, which thus may biasthe bottom control half (§ 4.1.1). However, inter-preting emoji or words with words only, (E/W) and(W/W), achieve comparable performance.Preference Test. Here, we make the same obser-vation; The projected scales on the differentials aremostly well in line with human judgement.

4.2.2 Interpreting WordsAgain, we refer to Tab. 1, but now change our focusto describing words, campaigns (W/*).Selection Test. Albeit not being directly com-parable, using POLARρ in compaings: describing

6

Task Random POLAR (W/W) (W/M) (W/E) (E/W) (E/M) (E/E)

Selection

Top 1 0.500 0.876 0.79 0.83 0.60 0.81 0.79 0.88Top 2 0.222 0.667 0.62 0.61 0.35 0.67 0.68 0.77Top 3 0.083 0.420 0.45 0.42 0.15 0.54 0.57 0.67Top 4 0.024 0.222 0.30 0.18 0.07 0.37 0.37 0.59Top 5 0.004 0.086 0.14 0.08 0.01 0.22 0.19 0.38

Preference 0.500 - 0.740 0.672 0.576 0.800 0.848 0.832

Table 1: Campaign results. Random & original POLAR baseline. Selection and Preference results across cam-paigns. Words are better described by word dimensions, and emoji are better described by emoji dimensions.

words with words (W/W), or describing words withwords and emoji (W/M) achieved performance wellon par with POLAR. Noteworthy, describing wordswith emoji (W/E) yielded the worst results. Theprojection scale values for the emoji dimensionswere mostly lower compared to words. I.e., ac-cording to POLARρ, for words only few emojidifferentials would be among the top 5 opposites.Preference Test. As for the preference test, de-scribing words yield the best results using wordopposites only (W/W). Explaining words withemoji (W/E) performs particularly worse.

4.2.3 Result Confidence

Significance. To test for differences within thecoder alignment with POLARρ, we model both,the selection and preference test. With our primarygoal to understand the impact of including emojito a POLARρ interpretable word embedding, weanchor to the (W/W) campaign as a baseline.

For the selection test, we count if coders alignedwith POLARρ or chose any of the random alterna-tives across the Top 1..5 selection. For the prefer-ence test, we count whether coders aligned withPOLARρ’s scale direction. We apply double-sidedchi-square tests χ2 with p < 0.05 between the in-terpreting words with words (W/W) baseline andthe remaining five campaigns.

We identify significant differences in coder-POLARρ alignment to the (W/W) baseline whendescribing words with emoji (W/E) over Top1..5selection and preference. Counts from explain-ing emoji with emoji (E/E) signal significance forpreference and selection Top3..5. Coder-POLARρ

alignment in preferences is also significant for de-scribing emoji with emoji and words (E/M).

4.2.4 Observations

Emoji. As a byproduct, we also show if emojiopposites are preferred over words. That is, wefocus on the mixed campaigns describing wordsand emoji with words and emoji (*/M).

α (W/W) (W/M) (W/E) (E/W) (E/M) (E/E)

Selection 0.44 0.35 0.24 0.46 0.39 0.55Preference 0.57 0.41 0.34 0.61 0.54 0.60

Preference 0.65 0.52 0.40 0.70 0.64 0.68POLARρ onlyPreference 0.31 0.17 0.25 0.31 0.22 0.22random only

Table 2: Inter-rater agreement Krippendorff’s α acrosscampaigns. Coders achieve the best agreement in selec-tion test of emoji-based campaigns (E/*) and generallywithin the preference test measuring differential scales.

We establish a baseline by filtering the countsfor all non-POLARρ randomly chosen dimensionsbeing word or emoji representing a Bernoulli ex-periment. I.e., along the random dimensions, ourcoders chose 228 vs. 221 and 167 vs. 187 wordsover emoji. Applying chi-squared statistics indi-cates, that both types (words and emoji) are chosenequally often at least cannot be rejected.

We next analyze the POLARρ chosen dimen-sions in the mixed campaigns. Here, coders chosewords over emoji as follows: 465 vs. 336 in the(W/M), and 414 vs. 482 in the (E/M) campaign.We find statistically significant favors for words tointerpret words and emoji to describe emoji.Scale Usage. We find no evidence for any direc-tional biases within our preference test (cf. 3c).Coder Agreement. While the aggregate resultsare compelling, we use the Krippendorff-alpha met-ric to measure coder agreement along all six cam-paigns as shown Tab. 2; higher scores depict betteragreement. We split the overall results by test first(Selection & Preference), but also show additionalagreement results for preferences along POLARρ

chosen dimensions and their random counterpart.Most agreement is within the moderate regime.

This observation does not come unexpected fromour five non-expert classifiers per task. Overall, wefind that coders agree better for well-performingcampaigns. We identify the best agreement scoresfor interpreting emoji with emoji (E/E); codersagree least in the worst performing explainingwords with emoji campaign (W/E).

7

For the preference test, we subdivide our resultsinto POLARρ chosen differentials and comparethem to the randomly chosen ones. While theagreement on the random opposites is only fair,the agreement on POLARρ chosen opposites isconsistently better: Estimating differential scaledirections via POLARρ for words yields moderateagreement, whereas coders consistently align sub-stantially in interpreting emoji. We presume emojimay convey limited ideas, but are easier to grasp,have better readability; the campaings interpretingemoji (E/*) were generally accomplished faster.

4.2.5 DemographicsThough we are confident in applied QA measures,none of the demographics can be confirmed. Theannotator sample-size is small and thus most likelynot representative. Further, we find most work-ers providing contrasting answers across multipletasks they participated in, rendering collected de-mographic information unusable.

5 Related Work

No universal meaning of emoji. Priorwork showed that the interpretation of emojivaries (Miller et al., 2016; Kimura-Thollander andKumar, 2019), also between cultures (Guntukuet al., 2019; Gupta et al., 2021). Even within thesame culture, ambiguity and double meanings ofemoji exist (Reelfs et al., 2020) and differences ex-ists on the basis of an individual usage (Wisemanand Gould, 2018). These observations motivatethe need to better understand the meaning of emoji.Currently, no data-driven approach exists to makeemoji interpretable—a gap that we aim to close.Interpretable word embeddings. Word embed-dings are a common approach to capture mean-ing; they are a learned vector space representa-tion of text that carries semantic relationships asdistances between the embedded words. A richbody of work aims at making word embeddingsinterpretable, e.g., via contextual (Subramanianet al., 2018), sparse embeddings (Panigrahi et al.,2019), or learned (Senel et al., 2018) transforma-tions (Mathew et al., 2020)—all focus on text only.Recently, (Mathew et al., 2020) proposed the PO-LAR that takes a word embedding as input andcreates a new interpretable embedding on a po-lar subspace. The POLAR approach is similar toSEMCAT (Senel et al., 2018), but is based on theconcept of semantic differentials (Osgood et al.,1957) for creating a polar subspace. It measures

the meaning of abstract concepts by relying on op-posing dimensions associated (good vs. bad, hotvs. cold, conservative vs. liberal). In this work, weextend and use POLAR.Emoji embeddings. Few works focused on usingword embeddings for creating emoji representa-tions, e.g., (Eisner et al., 2016) or (Reelfs et al.,2020). (Barbieri et al., 2016) used a vector spaceskip-gram model to infer the meaning of emoji inTwitter data (Barbieri et al., 2016). Yet, the generalquestion if the interpretability of word embeddingscan be improved by adding emoji and if differentmeaning of emoji can be captured remains stillopen. In this work, we adapt the POLAR inter-pretability approach to emoji and study in a hu-man subject experiment if word embeddings canbe made interpretable by adding emoji and howemoji can be interpretated by emoji.

6 Conclusion

We raise the question whether we can leveragethe expressiveness of emoji to make word embed-dings interpretable. Thus, we use the POLARframework (Mathew et al., 2020) that creates in-terpretable word embeddings through semantic dif-ferentials, polar opposites. We employ a revisedPOLARρ method that transforms arbitrary wordembeddings to interpretable counterparts to whichwe added emoji. We base our evaluation on an offthe shelf word-emoji embedding from a large socialmedia corpus, resulting in an interpretable embed-ding based on semantic differentials, i.e., antonymlists and polar emoji opposites.

Via crowdsourced campaigns, we investigatethe interpretable word-emoji embedding qualityalong six use-cases (cf. Fig. 1): Using word- &emoji-polar opposites (or both Mixed), to interpretwords (W/W, W/E, W/M) and emoji (E/W, E/E,E/M), w.r.t. human interpretability. Overall, wefind POLARρ’s interpretations w/wo emoji beingwell in line with human judgement. We show thatexplaining emoji with emoji (E/E) works statisti-cally significantly best, whereas describing wordswith emoji (W/E) systematically yields the worstperformance. We also find good alignment to hu-man judgement estimating a term’s position ondifferential scales, using the POLARρ-projection.

That is, emoji can improve POLARρ’s capabilityin identifying most interpretable semantic differ-entials. We have demonstrated how emoji can beused to interpret other emoji using POLARρ.

8

Acknowledgements

We thank Felix Dommes, who was instrumentalfor this work by developing and implementing thePOLARρ projection approach and the ExtremalWord Score in his Master Thesis.

p−z pz p−z pz p−z pz p−z pz

Table 3: Used heuristically identified polar emoji oppo-sites (p−z, pz) ∈ Pemoji. We opted for a diverse set ofopposites selecting only few facial emoji differentials.

ReferencesF. Barbieri, F. Ronzano, and H. Saggion. 2016. What

does this Emoji Mean? A Vector Space Skip-GramModel for Twitter Emojis. In LREC.

Ben Eisner, Tim Rocktäschel, Isabelle Augenstein,Matko Bošnjak, and Sebastian Riedel. 2016.emoji2vec: Learning emoji representations fromtheir description. In Proceedings of The Fourth In-ternational Workshop on Natural Language Process-ing for Social Media, Austin, TX, USA. Associationfor Computational Linguistics.

Sharath Chandra Guntuku, Mingyang Li, Louis Tay,and Lyle H Ungar. 2019. Studying cultural differ-ences in emoji usage across the east and the west. InProceedings of the International AAAI Conferenceon Web and Social Media, volume 13, pages 226–235.

Mitali Gupta, Damir D Torrico, Graham Hepworth,Sally L Gras, Lydia Ong, Jeremy J Cottrell, andFrank R Dunshea. 2021. Differences in hedonic re-sponses, facial expressions and self-reported emo-tions of consumers using commercial yogurts: Across-cultural study. Foods, 10(6):1237.

Birgit Hamp and Helmut Feldweg. 1997. GermaNet - alexical-semantic net for German. In Automatic Infor-mation Extraction and Building of Lexical SemanticResources for NLP Applications.

T. Hu, H. Guo, H. Sun, T. T. Nguyen, and J. Luo. 2017.Spice up your chat: the intentions and sentiment ef-fects of using emojis. In ICWSM.

Philippe Kimura-Thollander and Neha Kumar. 2019.Examining the "global" language of emojis: Design-ing for cultural representation. In Proceedings ofthe 2019 CHI conference on human factors in com-puting systems, pages 1–14.

Binny Mathew, Sandipan Sikdar, Florian Lemmerich,and Markus Strohmaier. 2020. The polar framework:Polar opposites enable interpretability of pre-trainedword embeddings. In WWW, pages 1548–1558.

Hannah Miller, Jacob Thebault-Spieker, Shuo Chang,Isaac Johnson, Loren Terveen, and Brent Hecht.2016. "blissfully happy" or "ready to fight": Vary-ing interpretations of emoji.

P. . Novak, J. Smailovic, B. Sluban, and I. Mozetic.2015. Sentiment of emojis. PloS one.

C.E. Osgood, G.J. Suci, and P.H. Tannenbaum. 1957.The Measurement of Meaning. Illini Books, IB47.University of Illinois Press.

Abhishek Panigrahi, Harsha Vardhan Simhadri, andChiranjib Bhattacharyya. 2019. Word2sense: sparseinterpretable word embeddings. In ACL.

Jens Helge Reelfs, Oliver Hohlfeld, MarkusStrohmaier, and Niklas Henckell. 2020. Word-emoji embeddings from large scale messagingdata reflect real-world semantic associations ofexpressive icons. Workshop Proceedings of the 14thInternational AAAI Conference on Web and SocialMedia.

Peter E Rossi, Zvi Gilula, and Greg M Allenby. 2001.Overcoming scale usage heterogeneity. Journal ofthe American Statistical Association, 96(453).

Lutfi Kerem Senel, Ihsan Utlu, Veysel Yucesoy, AykutKoc, and Tolga Cukur. 2018. Semantic structure andinterpretability of word embeddings. In IEEE/ACMTASLP, 10.

Vered Shwartz, Enrico Santus, and DominikSchlechtweg. 2017. Hypernyms under siege:Linguistically-motivated artillery for hypernymydetection. In EACL, pages 65–75.

Leonard Simms, Kerry Zelazny, Trevor Williams, andLee Bernstein. 2019. Does the number of responseoptions matter? psychometric perspectives usingpersonality questionnaire data. Psychological As-sessment, 31.

Rion Snow, Brendan O’connor, Dan Jurafsky, and An-drew Y Ng. 2008. Cheap and fast–but is it good?evaluating non-expert annotations for natural lan-guage tasks. In EMNLP.

Anant Subramanian, Danish Pruthi, Harsh Jhamtani,Taylor Berg-Kirkpatrick, and Eduard Hovy. 2018.Spine: Sparse interpretable neural embeddings. InAAAI, volume 32.

9

Thomas Tullis and William Albert. 2008. Measuringthe User Experience: Collecting, Analyzing, andPresenting Usability Metrics. Morgan KaufmannPublishers Inc., San Francisco, CA, USA.

Sarah Wiseman and Sandy JJ Gould. 2018. Repur-posing emoji for personalised communication: Whymeans “i love you”. In Proceedings of the 2018 CHIconference on human factors in computing systems,pages 1–10.

10

Proceedings of the The Fifth International Workshop on Emoji Understanding and Applications in Social Media, pages 11 - 20July 14, 2022 ©2022 Association for Computational Linguistics

Beyond emojis: an insight into the IKON language

Laura Meloni1, Phimolporn Hitmeangsong2, Bernhard Appelhaus3, Edgar Walthert1, and Cesco Reale1

1KomunIKON, Neuchâtel, [email protected]@[email protected]

2Institute of Psychology, University of Pécs, [email protected]

3Department of Linguistics. University of Bremen, [email protected]

Abstract

This paper presents a new iconic language, theIKON language, and its philosophical, linguis-tic, and graphical principles. We examine somecase studies to highlight the semantic complex-ity of the visual representation of meanings.We also introduce the Iconometer test to vali-date our icons and their application to the med-ical domain, through the creation of iconic sen-tences.

1 Introduction

Since its introduction in the early 1970s, textualcomputer-mediated communication (CMC) hasbeen enriched by visual elements that expressemotion and attitude: emoticons (sideways facestyped in ASCII characters), emojis (designed, likeemoticons, to facilitate emotion expression in text-based conversation, but visually richer, more iconic,and more complex), stickers (larger, more elabo-rate, character-driven illustrations, or animationsto which text is sometimes attached) (Konrad et al.,2020).

Graphic symbols have been extensively utilizedin communication all over the globe, particularlyon social media and instant messaging services.More recently, studies have examined the use ofemojis in other dimensions. For example, considerthe usage of emojis or symbols to gauge consumersatisfaction with a product or service in the busi-ness field (Paiva, 2018). Emojis have been inves-tigated in the medical industry to assess patients’symptoms (Bhattacharya et al., 2019). Apart fromEmojis - that are not considered a language by mostlinguists - there are also visual languages, that werecreated to enable a full visual communication (e.g.

Bliss, Zlango, iConji, etc.). Nonetheless, severallimitations have been found in these visual com-munication tools. For example, some of them arebased on national languages reproducing their in-consistencies and difficulties; some were conceivedto be handwritten and so are very stylized and ab-stract; some have a too simple grammar, that doesnot allow sufficient precision in conveying complexmeanings. The IKON language was conceived toaddress these limitations.

IKON allows semantic compositionality by join-ing icons (as in Bliss, LoCoS and Piktoperanto),the use of grammar categories (Bliss), and the con-sistent use of iconemes (as in VCM), high iconicity(as in AAC languages and Emoji). IKON aims toreduce abstractness and language dependency.

Our contribution has multiple aims: i) to exam-ine IKON theoretical approach and its applicationto a few case studies based on semantic dimensionssuch as modality, verbs of motion, of perception,and of communication; ii) to present the Iconome-ter test, a crucial tool to understand how individualsinterpret IKON language; iii) To propose an appli-cation of IKON in the medical domain through abachelor’s thesis project developed by a memberof our team.

The remainder of the paper is structured as fol-lows: section 2 briefly discusses examples of iconiclanguages and their semantic approach. Section 3specifies IKON’s theoretical approach. Section 4brings a few case studies of our icons. Section5 presents the Iconometer, the next step to evalu-ate the designed icons. Section 6 describes IKONsentences in the dentist-patient frame. Finally, con-clusions are reported in section 7.

11

2 Semantic analysis in icon languages

Iconic languages have been used successfully inhuman-computer interfaces, visual programming,and human-human communication. They have, inmost cases, a limited vocabulary of icons and aspecific application. There are also “natural visuallanguages” that use logograms such as Chinese,Mayan, and Egyptian (Reale et al., 2021).

We will provide a short semantic analysis ofEmojis, Emojitaliano, and Augmentative and Al-ternative Communication (AAC).

The most popular icons today are emojis, whichroused discussions about to what extent they are alanguage and whether it is universal. Emojis arewidely used to express the user’s communicativeintent functioning as tone marking or as a word ina verbal cluster. However, emojis lack grammaticalfunction words and morphosyntax. In spite of that,some consider it an emergent graphical language(Ge and Herring, 2018).

Emojitaliano is an autonomous communicativeEmoji code born for the Italian language. It wascreated for the translation of Pinocchio (2017),launched on Twitter by F. Chiusaroli, J. Monti,F. Sangati within the Scritture Brevi community(https://www.scritturebrevi.it/). EmojitalianoBoton Telegram then supported the translation project.It contains the grammar and dictionary of the iconicsystem. Emojitaliano consists of a repertoire oflexical correspondences and a grammatical struc-ture predefined that reflects the content found inPinocchio. It respects linguistic principles such aslinearity, economy, and arbitrariness. Emojitalianodoes not have a high degree of iconicity (similar-ity between form and meaning of a sign) becausemany solutions are the result of an idiomatic orculturally marked decision not related to humanexperiences (Nobili, 2018). For example, emojital-iano represents the abstract concept of guilt withman + woman + apple, representing the referentusing a biblical and culture-specific metaphor.

The field of (AAC) has created various technolo-gies to facilitate communication for people whocannot communicate through language in the stan-dard way. Different approaches exist to developAAC iconic languages. From the semantic pointof view, these systems developed three ways torepresent language: i) single meaning pictures ii)alphabet-based methods are often subdivided to in-clude spelling, word prediction, and orthographicword selection iii) semantic compaction uses multi-

Figure 1: We dual inclusive icon (I-You). It is obtainedfrom the I pronoun (1.P.SG singular) (left) and Youpronoun (2. SG) (right).

meaning icons in sequences to represent language.Minspeak, for example, uses semantic compaction(Albacete et al., 1998; Tenny, 2016). Non-linearAAC has been proposed based on semantic rolesand verb valency (Patel et al., 2004).

The attention of researchers now focuses on theautomatic detection of icons’ meaning, using ma-chine learning and word embedding techniques (Aiet al., 2017). Nevertheless, in the creation of avisual language, it is also essential to empiricallyassess the degree of polysemy of a given icon, howits meaning is conveyed according to different lev-els of knowledge of users, evaluating the ambiguitywithin the system (Dessus and Peraya, 2005; Tijuset al., 2007).

3 Creation of an icon in IKON

3.1 Methodology

IKON follows philosophical, linguistic, and graph-ical principles. IKON language aims to create acompositional, iconic, international, and language-independent system (see Reale et al., 2021 for amore detailed analysis).

3.1.1 Philosphical frameworkThe philosphical framework determines the princi-ples and values at the core of the project. It theninforms both linguistic principles (e.g., by usinghyperonymic form to have a transcultual icon asin Figure 5 or representing semantically differentconcepts by different icons for language indepen-dence), and graphical guidelines (e.g., grey as skincolor as in the icons presented below).

IKON is human-centered. In designing a conceptor undefined events, generic humans are preferablyused as participants, creating a similarity betweenthe sign and our human experience.

IKON intends to be iconic and intelligible soas to be easily understandable by people of dif-ferent backgrounds. That is, taking into accountdifferent cultural and geographic realities creatingtranscultural icons. Pictographic, highly iconic rep-

12

resentations are favoured, while abstract symbolsare used only when no better alternative is identi-fied, or when it is already widely used (e.g. roadsigns).

IKON system aims to be inclusive, representingspecific identities through the use of no discrim-inating symbols or generalization (e.g. specificicons and symbols commonly used to represent var-ious genders), or by using unspecified and identity-neutral icons specified in the graphic guidelines.

3.1.2 Linguistic principlesLinguistic principles involve different linguisticdimensions: semantics, grammar and morphology,syntax.

The IKON lexicon is a core set of around 500icons covering basic concepts, used directly tocommunicate complex ideas and, indirectly, as“building blocks” to create new “compound words”(Reale et al., 2021). As we will see, the list iscontinuously growing as from each meaning othermeanings stem, if necessary, in a disambiguatingprocess. For instance, from a typological point ofview, there are languages that show more granular-ity than English within the number system. This isrevealed the most in the pronoun system (Corbett,2000). The dual inclusive pronoun - used to referstrictly to two people including the speaker - can befound all over the world and in different languagefamilies. It is common in Austronesian languages(e.g., in Maori taua ‘I and you’) but also found inUpper Sorbian, a West Slavonic language (mój ‘wetwo’) (Corbett, 2000). In light of that, we decidedto create an icon to represent the dual inclusivepronoun by using the icons for the pronouns I (firstperson singular) and you (second singular person)as shown in Figure 1.

IKON considers polysemy. Each semanticallydifferent concept found in our path has a differenticon (e.g., to smell can mean “to produce smell”or “to perceive smell”, and we decided to createtwo different icons for those meanings). Moreover,the main sense of a word is preferred, because amore specialized, metaphorical, or idiomatic senseis often culturally specific (e.g., to go away is rep-resented within its literal motion sense and doesnot involve other idiomatic usages such as stopbothering someone, leave someone alone. In thisway, language independence - a crucial value ofour philosphical framework - is increased.

At this point, we use a linear word order reflect-ing the linear syntax of natural spoken languages.

However, as previously mentioned, more flexiblesyntactic orders and even a bi-dimensional syntaxare conceivable.

3.1.3 Compositional rulesA graphical-semantic interface accounts for a finitenumber of pictorial forms so as to assure coherenceof the system. We go from the simplest icons to thecompound icons.Pictographic icons. When possible, icons are pic-tographic, that is a prototypical (Rosch et al., 1976;Croft et al., 2004) and conventional type of an item(e.g., the most telling representation of a window).Abstract symbol. Sometimes an abstract symbolis used if it is widespread and more comprehensible(e.g., traffic signs).Contextual icons. Some concepts and items mightbe easily recognized if represented within a givencontext. This kind of representation is called “con-textual”. Contextual icons are built as visual sceneswith several elements: graphic markers (such asarrows, circles, and color oppositions) pointing toone specific sub-element of the whole picture. Inthis case, what is highlighted is what it means.

Compound icons are more complex from a se-mantic point of view, obtaining meaning throughvarious strategies:Juxtaposition. Simple juxtaposition of two ormore elements, which seems the emergent use ofemojis (Ge and Herring, 2018).Contrastive form. Sometimes a meaning is betterunderstood in opposition to another meaning (e.g.,day as contrasted to night with yes-no symbols tosignal the intended meaning).Hyperonymic icons. As complex as they seem,serve to understand complex concepts as a set ofdifferent but related elements.Hyponymic icons. Hyponymic icons, on theopposite, highlight a specific member of thehyperonymic set. Ancient and modern visualsystems present these strategies (Reale et al., 2021).

3.1.4 Linguistic resourcesThe preset forms and strategies described aboveenable a flexible framework that allows us to graph-ically encode meanings according to the analysisof semantic, semiotic and cultural needs. For apractical example see Figure 5 (Hyperonymic iconfor to thank). Individuating the semantic frameof a lexical unit (Fillmore and Baker, 2010) - thecore elements of a word meaning - is particularlyessential if disambiguation of meanings is needed.

13

When it comes to verbs, it is also important toassess the semantic types and thematic role inthe argument structure to include the appropriateparticipants and frames in the related icon. Mostlexical resources contain a large amount oflinguistic information that can be exploited:Wordnet (Miller, 1998) (semantic lexicon withdefinitions and lexical relations), FrameNet (Boas,2005) (offers an extended amount of semantic andsyntactic phrase specifications), Sketch Engine(Kilgarriff et al., 2014) (a multilanguage annotatedcorpora resource). A limitation is that Wordnet andFrameNet are implemented only for the Englishlanguage. However, they are becoming availablein other languages. Other multilingual resourcesare also growing (Boas, 2005; Baisa et al., 2016).Thus, a more typological approach is needed andrecommended to confirm hypotheses with respectalso to our theoretical approach.

3.1.5 Graphic guidelines

The graphical guidelines are the the visible partof our project. For the most part they visually re-flect the linguistic principles, and the philosophicalframework, but also influence them due to graphicconstraints. The main points are:Vectorial. Readable at 30 px and at 4000 + px(vectorial).Text-Free. In general, the text is avoided as muchas possible, to keep language independence. Therecan be exceptions: letters, brands, proper names,sentences/words about phonetics, or linguistics.Background Independent. No background is ap-plied to the icons unless it is meaningful.Colors. Palette of 24 colors and Black-and-White.Each icon exists also in black and white. To keepicons racially neutral, we use gray as skin color.Pixel Perfect. All icons are aimed to be pixel-perfect on 48 by 48 pixels; diagonal lines are atslopes 1:1, 1:2, 1:3, or 1:6.Arrows and lines. Arrows are purple, normallyused to show one object inside one scene: solidarrows for emphasis; dashed arrows for movement.Lines dividing the two or more scenes are dottedlines, usually horizontal or vertical. Except whenanother angle makes more sense or is more practi-cal.Contrastive Icons. The contrast between 2 scenesis expressed by default through small symbols “yes-no” (green V or red X); the contrast between morethan 2 scenes is expressed by default by graying out

Figure 2: Initially proposed icons for the modal verbmust.

(or crossing out) the contrastive scenes and circlingthe signified scene.

In the following sections, we present a fewcase studies, providing concrete examples of theprocess and semantic considerations that precedethe design of complex concepts.

4 Case studies

A semantic criterion, namely the inherent concep-tual content of the event, is used to group meaningsand relative icons

4.1 Modality

Initially, the symbol of a traffic policeman in theposition of giving instruction was proposed to ex-press the modal verb must (Figure 2 (a)). Anotheroption was an obligatory road signaled with a redarrow - an idea inspired by the nobel pasigraphy(figure 2 (b)). However, these icons did not seemintuitive enough.

The World Atlas of Language Structure Online(WALS) provided typological information to ana-lyze how modality (situational and epistemic) isrealized cross-linguistically. Must can be used toexpress epistemic modality - a proposition is nec-essarily true - or situational modality - a situationof obligation in which the addressee’s action (e.g.,going home) is essential i.e., necessary. The fol-lowing analysis is focused on the latter. Must canbe decomposed in terms of the speaker’s inten-tion, in the sense of the speech acts theory. Theintention we focus on is the “speaker directives”(illocutionary force) which correspond to conceptslike “obligation”, or “advice”.

Non-verbal communication is a source of visuallanguage. A pointing gesture is a movement to-ward some region of space produced to direct atten-tion to that region. Scholars suggest that pointingremains a basilar communicative tool throughoutthe lifespan, deployed across cultures and settings,in both spoken and signed communication (Clark,

14

Figure 3: Proposal for must based on the index-fingergesture.

2003; Camaioni et al., 2004). The communica-tive role of hand gestures is evident in the factthat hand-based emojis are the third most usedtype of emoji (Gawne and Daniel, 2021). How-ever, there is limited literature on the diversityof forms and meanings, causing the exclusion ofnew culturally motivated encodings (Gawne andDaniel, 2021). To our knowledge, there are nostudies focused on the use of index finger or handpointing to express the abstract linguistic categoryof modality here discussed. Nevertheless, stud-ies on Chinese and English metaphors suggestthis possibility. As a matter of fact, in Chinese,the metaphors TO GUIDE OR DIRECT IS TOPOINT WITH A FINGER and THE POINT-ING FINGER STANDS FOR GUIDANCE ORDIRECTION are linguistically manifested. Thatis, compounds and idioms involving zhı ‘finger’express the metaphors above (among others) suchas zhı-shì (finger pointing–show) ‘indicate; pointout; instruct; directive; instruction; indication’, zhı-dao (finger pointing–guide) ‘guide; direct; super-vise; advice; coach’. These abstract senses relatedto performative language, guiding, directing, andadvising here have a bodily root (Yu, 2000). Con-cerning the emblematic open hand gesture shapedin various forms, these are shared across regionsand recognized as the verbal message to stop (Mat-sumoto and Hwang, 2013).

Finally, we hypothesize that the index fingerpointing can be an indexical non-deictic gesturethat has a general emphasis function in the dialogue(Allwood et al., 2007), which serves to give empha-sis to the speaker saying in a dialogue. This allowsthe expression of the obligation and the necessity ofan object or event. We developed the icons shownin Figure 3. The initial idea of an officer givingorders remained. The position of the index fingeris up at 45 degrees (not encoded in emoji). Thenext step will be to test these versions against otherproposals (Figure 2 or traffic signs-based icons).

Figure 4: Icon for to search (a) and icon for to find (b).

4.2 Verbs of perception

According to Wordnet (Miller, 1998), to find andto search are perceptual verbs in the sense of be-coming aware and establishing the existence of anobject through the senses. These verbs are in anon-factive causal relation because to search MAYcause to find (Ježek, 2016). Searching for some-thing has the purpose to find it even if one doesnot necessarily achieve the intended goal. To findindicates the result of discovering what that personis seeking. Therefore, we developed two similaricons (Figure 4). We chose to employ a magnify-ing glass to symbolize the process of searching andfinding, following the practice of user interfaces ofcomputers, smartphones, or websites. Payuk andZakrimal (2020) defined the magnifying glass sym-bol as "finding and searching without any characteror letter." It also signals the feature to zoom in andout on software or programs installed on a device(Ferreira et al., 2005). Both icons use a purpleball, which depicts an abstract object (often usedin IKON) that a person is looking for or has foundand that they have in mind, among other abstractobjects (gray balls). This permits the distinctionbetween searching and finding.

4.3 Verb of communication

As for words denoting communicative content, tothank is a verb of particular interest. There aremany different ways for people all around the worldto express gratitude or show appreciation to oneanother. Having analyzed the most widespread ges-tures used to thank, it was evident that there was nosingle gesture widespread enough to be understoodacross the world. For this reason, we decided toencode the cultural variation of thanking using ahyperonymic strategy shown in Figure 5. Usingbody language and hand gestures we depict theconcept of thanking in its different cultural forms.The hyperonymic icon merges four scenarios: aperson holding a hand on the chest, a common ges-ture for gratitude across cultures, referring to the

15

Figure 5: Hyperonymic icon for to thank.

Figure 6: Icon for the motion verb to go.

widespread metaphorical association of the heartas a container of emotions (Gutiérrez Pérez, 2008);the formal handshake gesture; a person bowing andthe hand-folded gesture, commonly used to greetand pay respect in South Asia and Southeast Asia.

4.4 Verb of motion

Wilkins and Hill (1995) define the verb to goas referring to a motion-away-from-the-speakeror motion-not-toward-speaker. IKON representsthe verb to go, as shown in Figure 6, in its gen-eral meaning: a person moving toward a direc-tion, signaled by the dashed purple arrow (follow-ing the graphic guidelines described above (Sect.3.1.5). (2005) described the semantics of the ar-row graphic. The arrow has three slots includinga tail, body, and head. If a person’s image or iconis behind the tail is able to interpret that a personmoves toward somewhere or someone. However,it is vice versa if it is put in the front of the headof an arrow. That should be something or someonemoving toward a person.

Languages lexicalize the various types of motionin different ways. For example, Russian motionverbs differ from English or Italian in how theylexicalize direction of movement (unidirectional orin the sense of back and forth but not limited tothat) and means of transportation (‘go-on-foot’ or‘go-by-vehicle’). Figure 6 represents the generalmeaning of movement toward an unknown destina-tion. Nevertheless, we can have specific icons thatencode direction, type of motion, and path.

5 Iconometer test

Iconometer is a software developed by the Univ.of Geneva (Peraya et al., 1999) to implement thetheoretical approach proposed by Leclercq (1992)to assess the degree of polysemy of a visual repre-sentation (icon, diagram, figurative image, photo-graph) and measure its adequacy to its prescribedmeaning.

Iconometer was previously used within theIKON language to evaluate icons from the familydomain and gender symbols used to signify gen-der. (Reale et al., 2021). Family icons representingfamily relationships with different gender signifierswere tested: only gender symbols, only haircuts, orboth gender signifiers. The results demonstrated nosignificant difference in accuracy or certainty whencomparing gender symbols, haircuts, or the com-bined gender signifiers. The research contributedin two ways: i) IKON language makes use of gen-der signifiers; ii) few family icons were subject toreconsideration due to low certainty in the test (e.g.,grandfather misunderstood for stepfather).

Our current objective is to compare the level ofcertainty that participants had on interpretationsof icons in different domains: family, modality(e.g., icons for must and can), operators, contrastiveicons, motion verbs. In some cases, we proposedtwo or more versions of the same icon; in others,only a single version was displayed to assess howit was perceived. The new Iconometer test presentsthe participant with 30 visual images and no text.Below the images are 8 possible meanings and theoption to write a personal answer.

The participant must distribute a total of 100points among the different meanings according tocertainty. The participant must give the most pointsto the meaning that seems most certain and has theoption to give 100 points to a single meaning.

Currently, we have not yet a sufficient populationto draw conclusions and we leave the discussion ofresults and consideration about the mechanism ofmeaning assignment for future work.

6 IKON in context: the medical domain

To get a useful set of iconic vocabulary for a typi-cal emergency dental treatment situation, a set ofquestions and sentences that are important in theanamnesis and treatment of patients were devel-oped, e.g., questions about previous illnesses ofvarious organs, diabetes, or medication allergies.The aim was to design unique pictograms in the

16

Figure 7: The iconic sentence Do you have toothachewith cold or hot food and drinks?

Figure 8: The simpler iconic sentence Does cold causeyour toothache? Does hot cause your toothache?

style of IKON accomplished with the help of agraphic designer and suggestions from providersof free pictograms on the internet.

The development of intuitive content is shownusing the example of the question sentence: Do youhave a toothache with hot or cold food or drinks?(Figure 7). The concept of contrastive representa-tion in a pictogram, like above for hot and cold, iscertainly helpful in isolated observation. However,grasping this principle and paying attention to theform requires an additional cognitive effort, thuscreating possibilities for misinterpretation. In thiscontext, it seems redundant. Also, the icons foreating and drinking seem redundant and confusing.

The and/or sign also caused difficulties for somerespondents. In light of that, it is easier to con-struct an iconic sentence that does not follow thesyntax of questions formulated in the modern Indo-European languages of Europe. Accordingly, Fig-ure 8 shows the same question being split into twosentences with a simplified syntax.

6.1 Online surveyAn online survey with the help of Google formswas conducted to verify the thesis of simplificationand to be able to make a statement about the com-prehensibility of iconic sentences and signs. Thesubjects selected were of different age groups andcultural backgrounds. The subjects were 52: 40Germans, 3 non-German Europeans, 4 Asians, 3

Iconic sentencesor icon

Correct an-swers

Rating scale(1=certain,5=veryuncertain)

Pregnancy 98% 1.94The tooth is de-stroyed, I will re-move it.

94% 2.1

Heart 90% 2.54Fever 90% 2.25Open your mouth 88% 2.08Endodontic treat-ment

83% 2.43

Dental filling 81% 2.64Are your lungsok?

69% 1.94

Do you take med-ications on a reg-ular basis?

69% 2.62

Do you havean allergy tomedicines?

65% 2.51

I’ll give you ananesthetic, so youwon’t be in pain.

63% 2.71

Diabetes 62% 2.82Do you have atoothache on pres-sure / on cold?

60% -

Dental x-ray 54% 2.96Where do youhave toothache?

38% -

Since when youhad toothache?

38% 2.76

You have a den-tal abscess, I willcut/open.

33% 3.18

Average under-standing rateof individualpictograms (8x)

81%

Average under-standing rate ofsentences (9x)

59%

Table 1: Percentages of correct answers and degree ofcertainty on comprehension of single icons and iconicsentences were in the survey.

17

Figure 9: Iconic sentence for You have dental abscess, Iwill cut to get the pus out.

Africans, 2 Mexicans. The age ranged from 15-30years (30 subjects), 31-50 (8 subjects) and over 50(14 subjects). The sample is unfortunately quiteunbalanced as there were great difficulty in recruit-ing people for the survey in countries where wehave no personal contacts. The original plan wasto survey 20 people from each of 5 continents.

The younger group consisted mainly of univer-sity students, the older group were mostly personalcontacts, who met the requirements of the study;overall, it can be assumed that the participants havean above-average level of education, although wedid not collect any data on this in the survey. How-ever, all participants spoke at least another languagein addition to their native language, showing a fur-ther indicator of an above-average educational levelof the test persons. Due to the small number ofcases and the uneven distribution of subjects byage group and origin, the results of the survey canonly be seen as a pilot study for further research. Itis easier to evaluate an online survey when optionshave to be ticked according to the multiple-choicemethod. On the other hand, it is not easy to findplausible alternative meanings with complete sen-tences. Thus, participants had to write their so-lutions following a more reliable approach. Thismethod is the opposite of the Iconometer test dis-cussed in section 5. In terms of age, size of placeof residence and use of emojis, the study showedthe following trends: the group 31-50 years gotthe best results, no significant difference betweenthe 15-30 and the >50 group exists; the smaller thecity in which the tester live, the better the result,suggesting that the degree of graphical stimuli inbigger places does not have a significant relevance;in contrast to the intuitive thesis that users of emo-jis have a better comprehension rate, the frequentuse of emojis as a pictorial method of communica-tion does not lead to a better comprehension of theicons. Due to the small number of cases and theuneven distribution of the test persons in terms ofage groups and origin, these results only serve as apilot study for further research.

Figure 10: Version A and version B of the iconic sen-tence Since when you have tootache?

The answers were 69% correct, but there weregreat differences. Table 1 reports the percentagesof correct answers for each icon or iconic sentence.Overall, single pictograms were 81% correct, whilesentences were 59% correct. Single icons for preg-nancy, fever, heart, open the mouth and the sen-tence the tooth is broken, I will remove it werecorrectly identified 90% of the time. The sentenceYou have a dental abscess, so I will cut to get thepus out had the worst result with 33% (Figure 9),perhaps due to the very specific medical treatment.The complex sentence involving time since whenyou have toothache? created difficulties (Figure 10.For this question two versions were proposed: 70%of participants preferred version B, which conveysthe core elements of the meaning (e.g., pronounshad been considered confusing by participants).Only 38% answered correctly. Overall, it was readas appointment at the dentist.

Generally, the difference in comprehensibilityrate was found much greater between the differenticons and sentences than between the groups. Thefuture task will be to work on semantic and syntac-tic concepts, especially in whole sentences, wherethe comprehension rate is still insufficient. In aclinical situation of patient-dentist discourse witha language barrier, the icons would be only partof the communication. Body language, pointinggestures, sounds, and demonstration material helpsfacilitate comprehension.

7 Conclusions

In this work, we presented the IKON language witha core set of about 500 core concepts. New mean-ings are semantically analyzed and then translatedinto a visual representation. In this process, IKONfollows defined criteria that assure coherence andflexibility within the system while continuously

18

growing its vocabulary. We examined conceptsgrouped according to the conceptual event encoded,such as modality (e.g., must), perception, motion,communication verbs. These are complex eventsor subject to cultural variation. Their examinationgave an insight into the semantic analysis requiredto design a visual correspondent: from the under-standing of the semantic frame of a word (descrip-tion of a type of event, relation, entity, participants)to semiotic and non-verbal language analysis. Wethen introduced modern linguistic resources thatcan be helpful for their depiction. However, onlytesting the different icons will tell us which oneperforms best among speakers of different back-grounds. Positively, cultural variation plays a sig-nificant role in our work, and IKON aims at givingequal representation.

We presented the Iconometer test, previouslyused to test the family icons and gender signifiers.The test is essential to assess the adequacy of theprescribed icons. The new test is ongoing as wedo not have a sufficient diversified population yet.Therefore, we plan to analyze and discuss results infuture work and review icons that do not performwell on the test.

Finally, we brought an example of the IKON lan-guage application in the dentist-patient discourseshowing that medical content can be transferredsuccessfully into an iconic language. Buildingiconic sentences is possible and beneficial, in thathelps people with language impairment or in a situ-ation of linguistic barrier to communicate in such acomplex domain as healthcare. However, the studydemonstrated that semantic considerations adoptedfor a single icon may not work in a more complexsyntax because of the cognitive effort required.

Aknowledgments

The authors address special thanks to the graphicdesigners of KomunIkon for the production of theshown icons, Esteban Quiñones and Esteban Ba-hamonde for their support on the Iconometer soft-ware, Marwan Kilani and Linda Sanvido for theirprecious suggestions.

ReferencesWei Ai, Xuan Lu, Xuanzhe Liu, Ning Wang, Gang

Huang, and Qiaozhu Mei. 2017. Untangling emojipopularity through semantic embeddings. In Pro-ceedings of the International AAAI Conference onWeb and Social Media, volume 11, pages 2–11.

Patricia L Albacete, Shi-Kuo Chang, and GiuseppePolese. 1998. Iconic language design for people withsignificant speech and multiple impairments. As-sistive Technology and Artificial Intelligence, pages12–32.

Jens Allwood, Loredana Cerrato, Kristiina Jokinen,Costanza Navarretta, and Patrizia Paggio. 2007. Themumin coding scheme for the annotation of feed-back, turn management and sequencing phenomena.Language Resources and Evaluation, 41(3):273–287.

Vít Baisa, Jan Michelfeit, Marek Medved’, and MilošJakubícek. 2016. European Union language re-sources in Sketch Engine. In Proceedings of theTenth International Conference on Language Re-sources and Evaluation (LREC’16), pages 2799–2803, Portorož, Slovenia. European Language Re-sources Association (ELRA).