
An Interactive Rough Set Attribute Reduction Using Great Deluge Algorithm


Najmeh Sadat Jaddi and Salwani Abdullah

Data Mining and Optimization Research Group (DMO),

Center for Artificial Intelligence Technology, Faculty of Information Science and Technology,

Universiti Kebangsaan Malaysia, 43600 Bangi, Selangor, Malaysia


Abstract. Dimensionality reduction in an information system is the problem of eliminating unimportant attributes from the original attribute set while avoiding loss of information in the data mining process. In this process, a subset of attributes that is highly correlated with the decision attribute is selected. In this paper, the performance of the great deluge algorithm for rough set attribute reduction is investigated by comparing it with other approaches in the literature in terms of the cardinality of the obtained reducts (subsets), the time required to obtain reducts, the number of dependency degree calculations, the number of rules generated from the reducts, and the classification accuracy. As an initial step toward visual data mining, an interactive interface is developed through which the user can easily select the parameters for reduction. The model has been tested on standard datasets from the UCI machine learning repository. Experimental results show the effectiveness of the method, especially with respect to running time and the classification accuracy of the generated rules. The method outperformed the other approaches on the M-of-N, Exactly, and LED datasets, achieving 100% accuracy.

Keywords: Interactive data mining, Great deluge algorithm, Rough set theory, Attribute reduction, Classification.

1 Introduction

Visual data mining is a new approach for dealing with the rapid growth of information. With this growth of high-dimensional data in many domains (e.g. machine learning, pattern recognition, customer relationship management and data mining), attribute reduction is considered a necessary preprocessing task for learning processes that extract rule-like knowledge from an information system [1]. In recent years, much research has aimed to combine data mining algorithms with information visualization methods in order to use the advantages of both approaches [2-6]. Interaction is important for useful visual data mining. The data analyst must be able to interact with the presented data and the mining parameters according to the requirements. Attribute reduction is the process of eliminating irrelevant and redundant attributes. This process uses metaheuristic algorithms to optimize the solution [7-11]. Attribute reduction helps to increase the speed and quality of the learning algorithm. The quality of the learning algorithm is normally measured by the accuracy of the classification [12]. Rough set theory [13] can solve this problem by discovering data dependencies using only the data. The advantage of rough set theory is that no additional information is required. The aim of rough set attribute reduction is to remove attributes that are highly related to other attributes (redundant) and to find attributes that are strongly relevant to the decision attribute, with minimal information loss.

Many computational intelligence tools have been developed for attribute reduction. Simulated annealing and genetic algorithm by Jensen and Shen [14], tabu search by Hedar et al. [15], scatter search by Wang et al. [16], ant colony by Jensen and Shen [14, 17] and by Ke et al. [18], and great deluge by Abdullah and Jaddi [19, 20] and by Mafarja and Abdullah [21] are some of the available and successful methods. This research investigates the performance of the great deluge algorithm for rough set attribute reduction (GD-RSAR) [19]. The investigation is performed by comparing the method with other available methods in the literature. Comparisons are based on the number of attributes in the reducts, the running time, the number of dependency degree calculations, and the classification accuracy obtained with the generated rules. The method is tested on 13 datasets available at http://www.ics.uci.edu/~mlearn.

An interactive interface is produced as an initial study toward visualization of the attribute reduction process. The interface helps analysts to interact easily with the data mining process. The user can select the class attribute for the attribute reduction. In a dataset, the class attribute may be the first or last attribute, or it may be placed among the conditional attributes. This is why it is important for the user to select the class attribute.

The next section summarizes the rough set attribute reduction concept and the basic structure of the standard great deluge algorithm. The attribute reduction method using GD-RSAR is described in Section 3. Section 4 presents the user interface in detail. The experimental results and their comparison with other approaches in the literature are given in Section 5. Section 6 provides final remarks and the conclusion.

2 Preliminaries

This section provides a brief description of the basics and concepts of rough set theory for attribute reduction as well as basic structure of the standard great deluge algorithm.

2.1 Rough Set Theory

Let $(U, A)$ be an information system, where $U$ (the universe) is a nonempty finite set of objects and $A$ is a nonempty finite set of attributes such that $\alpha: U \to V_\alpha$ for each $\alpha \in A$. In a decision system, $A$ is the union of the conditional attributes and the decision attribute ($A = C \cup D$, where $C$ is the set of conditional attributes and $D$ is the decision attribute). With any $P \subseteq A$ there is an equivalence relation $IND(P)$ as in (1).

$$IND(P) = \{(x, y) \in U^2 \mid \forall \alpha \in P,\ \alpha(x) = \alpha(y)\} \tag{1}$$

The partition of $U$ produced by $IND(P)$ is denoted $U/IND(P)$ and can be written as equation (2), where $A \otimes B = \{X \cap Y : X \in A,\ Y \in B,\ X \cap Y \neq \emptyset\}$.

$$U/IND(P) = \otimes\{U/IND(\{\alpha\}) : \alpha \in P\} \tag{2}$$

If $(x, y) \in IND(P)$, then $x$ and $y$ are indiscernible by the attributes in $P$. The equivalence class of $IND(P)$ that contains $x$ is denoted by $[x]_P$. Let $X \subseteq U$. The P-lower approximation $\underline{P}X$ of the set $X$ is defined as $\underline{P}X = \{x \mid [x]_P \subseteq X\}$, and the P-upper approximation $\overline{P}X$ is defined as $\overline{P}X = \{x \mid [x]_P \cap X \neq \emptyset\}$. Let $P$ and $Q$ be equivalence relations over $U$; then the positive region can be written as relation (3).

$$POS_P(Q) = \bigcup_{X \in U/Q} \underline{P}X \tag{3}$$

The positive region consists of all objects in $U$ that can be classified into classes of $U/Q$ using the attributes in $P$. An important issue in data analysis is finding dependencies among attributes. The dependency degree between $P$ and $Q$ is expressed by equation (4).

$$k = \gamma_P(Q) = \frac{|POS_P(Q)|}{|U|} \tag{4}$$

If $k = 1$, $Q$ depends totally on $P$; if $0 < k < 1$, $Q$ depends partially on $P$; and if $k = 0$, $Q$ does not depend on $P$. The attributes are selected using the dependency degree measure. A subset $R$ is a reduct if the condition $\gamma_R(D) = \gamma_C(D)$ is met ($R$ is the reduct set, $C$ is the conditional attribute set and $D$ is the decision attribute set). Therefore, the reduced set gives the same dependency degree as the original set. The set of all reducts is defined as relation (5).

$$Red = \{X : X \subseteq C,\ \gamma_X(D) = \gamma_C(D)\} \tag{5}$$

Normally, an attribute reduction process searches for the reduct with minimum cardinality, which is called the minimal reduct.
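The definitions in this subsection can be sketched in a few lines of Python. This is a minimal illustration only, assuming a decision table stored as a list of tuples; the function names and the toy table are hypothetical and are not taken from the GD-RSAR implementation.

```python
from collections import defaultdict

def partition(rows, attrs):
    """Group object indices into the equivalence classes of IND(attrs)."""
    blocks = defaultdict(set)
    for i, row in enumerate(rows):
        blocks[tuple(row[a] for a in attrs)].add(i)
    return list(blocks.values())

def positive_region(rows, P, Q):
    """POS_P(Q): union of the P-classes that lie wholly inside some Q-class."""
    q_blocks = partition(rows, Q)
    pos = set()
    for block in partition(rows, P):
        if any(block <= q for q in q_blocks):
            pos |= block
    return pos

def dependency_degree(rows, P, Q):
    """gamma_P(Q) = |POS_P(Q)| / |U|."""
    return len(positive_region(rows, P, Q)) / len(rows)

# Hypothetical toy decision table: columns 0-2 are conditional attributes,
# column 3 is the decision attribute.
table = [
    (0, 0, 1, "no"),
    (0, 1, 1, "yes"),
    (1, 0, 0, "no"),
    (1, 1, 0, "yes"),
    (0, 0, 0, "no"),
]

full = dependency_degree(table, [0, 1, 2], [3])
single = dependency_degree(table, [1], [3])
print(full, single)
```

In this toy table the decision is fully determined by attribute 1 alone, so the subset {1} reaches the same dependency degree as the full conditional set and satisfies the reduct condition.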

2.2 Standard Great Deluge Algorithm

The basic idea of the standard great deluge algorithm was presented by Dueck [22]. It is an alternative to, and an enhanced form of, the simulated annealing algorithm. The advantage of great deluge over simulated annealing is that it is less dependent on parameters. It requires only two easily understandable input parameters: the computational time the user is willing to spend and an estimate of a satisfactory value of the objective function.

A standard great deluge algorithm accepts a solution when its quality is improved. It also accepts worse solutions by comparing the quality of the solution with a given lower boundary, Level (for the case of maximization). A worse solution is accepted if its quality is greater than or equal to Level. At first, Level is set to the function value of the initial solution, but during the run Level is increased by a fixed increasing rate, denoted β. The value of β is an input parameter of this technique.

During the search, the value of Level makes part of the search space infeasible and pushes the current solution into the remaining feasible search area (as shown in Fig. 1, assuming the quality of the initial solution is equal to 0). Increasing the value of Level is a control process that drives the search toward a desired solution. The current solution therefore has many chances to make successful moves inside the remaining neighborhood and to improve its value before Level reaches the estimated quality.

Fig. 1. The neighborhood search area of GD-RSAR

The usefulness of the algorithm has been shown by successful applications on various optimization problems [23-26].
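The acceptance rule described in this subsection can be sketched generically as follows. This is a minimal sketch under stated assumptions, not Dueck's original formulation: the function signature, the fixed iteration budget standing in for computational time, and the toy objective are all hypothetical choices made for illustration.

```python
import random

def great_deluge(initial, quality, neighbor, beta, num_iterations, rng):
    """Maximize `quality` with a rising lower boundary (Level)."""
    current = best = initial
    q_current = q_best = quality(initial)
    level = q_current                      # Level starts at the initial quality
    for _ in range(num_iterations):
        trial = neighbor(current, rng)
        q_trial = quality(trial)
        # Accept improvements, and worse moves that are still at or above Level.
        if q_trial > q_current or q_trial >= level:
            current, q_current = trial, q_trial
            if q_current > q_best:
                best, q_best = current, q_current
        level += beta                      # fixed increasing rate
    return best, q_best

# Hypothetical toy problem: maximize -(x - 3)^2 over the integers.
def toy_quality(x):
    return -(x - 3) ** 2

def toy_neighbor(x, rng):
    return x + rng.choice([-1, 1])

best, q_best = great_deluge(0, toy_quality, toy_neighbor,
                            beta=0.09, num_iterations=100,
                            rng=random.Random(0))
print(best, q_best)
```

Because Level only rises, moves that were acceptable early in the run are rejected later, which is what narrows the search toward the higher-quality region.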

3 GD-RSAR

GD-RSAR presented by Abdullah and Jaddi [19] suggests a great deluge based algorithm for solving attribute reduction problems. This algorithm follows the standard great deluge algorithm (in maximization approach). However, it employs many components which are described in the following subsections. The pseudo code of GD-RSAR is shown in Fig. 2.

At each iteration of the GD-RSAR search process, the neighborhood structure, with the help of GDList, constructs a trial solution, and the quality of the trial solution is then evaluated with the solution quality measure. After that, based on the quality of the trial solution, the best solution and the current solution are updated. The GDList is updated whenever the best solution is updated. The algorithm stops if one of the defined termination conditions is satisfied.

    Set initial solution as Sol, obtained from constructive heuristic;
    Set best solution, Solbest ← Sol;
    Calculate the initial and best cost function, γ Sol and γ Solbest;
    Set estimated quality of final solution, EstimatedQuality ← 1;
    Set number of iteration, NumOfIte;
    Set initial Level: Level ← γ Sol;
    Set increasing rate β;
    Set iteration ← 0;
    do while (iteration < NumOfIte)
        Add or remove or replace one attribute to/from the current solution (Sol) to obtain a trial solution, Sol*;
        Evaluate trial solution, γ Sol*;
        if (γ Sol* > γ Solbest)
            Sol ← Sol*; Solbest ← Sol*;
            γ Sol ← γ Sol*; γ Solbest ← γ Sol*;
        else
            if (γ Sol* == γ Solbest)
                Calculate |Sol*|, |Solbest|;
                if (|Sol*| < |Solbest|)
                    Sol ← Sol*; Solbest ← Sol*;
                    γ Sol ← γ Sol*; γ Solbest ← γ Sol*;
                endif
            else
                if (γ Sol* ≥ Level)
                    Sol ← Sol*; γ Sol ← γ Sol*;
                endif
            endif
        endif
        Level = Level + β;
        iteration++;
    end do;
    Calculate |Solbest|;
    return |Solbest|, γ Solbest, Solbest;

Fig. 2. The pseudo code of the GD-RSAR

GD-RSAR represents a solution as a one-dimensional vector. Each cell in the vector contains the index of an attribute. The vector is initially constructed by a constructive heuristic. The length of the initial solution is set based on user experience or on results found in the literature. A number of solutions are generated randomly and the one with the highest quality is selected as the initial solution. The number of generated solutions is set to 10 percent of the number of conditional attributes.
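The constructive heuristic described above might be sketched as follows. This is an assumption-laden illustration: the function name, the `quality` callback, and the lower bound of one candidate (used so that small attribute sets still yield a solution) are hypothetical and not taken from the GD-RSAR source.

```python
import random

def initial_solution(cond_attrs, length, quality, rng):
    """Pick the best of several random attribute subsets of a given length.

    The number of candidates is 10 percent of the number of conditional
    attributes; the floor of one candidate is an assumption of this sketch.
    """
    n_candidates = max(1, len(cond_attrs) * 10 // 100)
    candidates = [frozenset(rng.sample(cond_attrs, length))
                  for _ in range(n_candidates)]
    return max(candidates, key=quality)

# Hypothetical usage: 20 conditional attributes, initial length 5, and a
# stand-in quality function in place of the dependency degree.
attrs = list(range(20))
start = initial_solution(attrs, 5, lambda s: len(s & {0, 1, 2}),
                         random.Random(0))
print(sorted(start))
```

In GD-RSAR the `quality` argument would be the dependency degree of the decision attribute on the candidate subset.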

GDList is used to keep the sequence (history) of the best solutions (attributes) found so far. This sequence is to assist the great deluge algorithm to employ different neighborhood structures when it is creating a trial solution.

GD-RSAR involves three processes to generate trial solutions in a neighborhood of a current solution: 1) If the current solution has been assigned as the best solution by the previous iteration (working on the best solution) then one attribute with the lowest occurrence in the GDList is removed from the current solution. 2) If the current solution has been assigned as the worse solution by the previous iteration (working on the worse solution) then one attribute with the highest occurrence in the GDList is added to the current solution. 3) Otherwise, an alteration of an attribute is done by replacing it with another one randomly.
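The three neighborhood moves can be sketched as below. The sketch assumes that solutions are sets of attribute indices, that GDList is a list of previous best solutions, and that a `status` flag records how the current solution was assigned in the previous iteration; these representations, the tie-breaking by lowest index, and the guards against empty sets are assumptions of the example rather than details given in the paper.

```python
import random
from collections import Counter

def trial_solution(current, all_attrs, gd_list, status, rng):
    """Build a trial solution in the neighborhood of `current`.

    status is "best", "worse", or any other value for the random swap.
    """
    counts = Counter(a for sol in gd_list for a in sol)
    trial = set(current)
    outside = sorted(set(all_attrs) - trial)
    if status == "best" and len(trial) > 1:
        # Move 1: remove the attribute that occurs least often in GDList.
        trial.remove(min(sorted(trial), key=lambda a: counts[a]))
    elif status == "worse" and outside:
        # Move 2: add the unused attribute that occurs most often in GDList.
        trial.add(max(outside, key=lambda a: counts[a]))
    elif outside and trial:
        # Move 3: replace a random attribute with a random unused one.
        trial.remove(rng.choice(sorted(trial)))
        trial.add(rng.choice(outside))
    return trial

gd_list = [{0, 1, 2}, {1, 2}, {1, 3}]
rng = random.Random(0)
print(trial_solution({0, 1, 2}, range(5), gd_list, "best", rng))
print(trial_solution({1, 2}, range(5), gd_list, "worse", rng))
```

Counting occurrences across GDList is one simple way to turn the history of best solutions into a preference over attributes.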

In GD-RSAR, the dependency degree $\gamma_{Sol}(D)$ of decision attribute D is used to measure the quality of a solution Sol. To compare two solutions Sol and Sol*, we say Sol* is better than Sol if one of the following conditions is met. Note that the cardinality (number of attributes) of set Sol is denoted as |Sol|.

$$\gamma_{Sol^*}(D) > \gamma_{Sol}(D)$$

$$|Sol^*| < |Sol| \quad \text{if } \gamma_{Sol^*}(D) = \gamma_{Sol}(D)$$

If the quality value of Sol* is greater than the quality value of Sol, Sol* is directly assigned as the best solution. When the quality values of Sol and Sol* are the same, the solution with the smaller number of attributes is assigned as the best solution.

The GD-RSAR algorithm starts with an initialization part and continues with a do-while loop whose iterations update the current and the best solutions. In the initialization, the number of iterations is denoted NumOfIte and is set to 250 (as used in Ke et al. [18]). In addition, the estimated quality of the final solution is denoted EstimatedQuality and is set to one (the maximum possible quality of a solution). Initially, Level is set to the quality of the initial solution, γ Sol, and it is increased by the increasing rate β at each iteration. The value of β is calculated by (6).

$$\beta = \frac{EstimatedQuality - \gamma_{Sol}}{NumOfIte} \tag{6}$$

First, in the do-while loop of the algorithm, the neighborhood structure employs GDList to generate a trial solution, Sol*. After that, the quality of the solution is calculated (γ Sol*). Then γ Sol* is compared with the quality of the best solution, γ Solbest. If there is an improvement in quality, the trial solution Sol* is accepted and the current and the best solutions are updated. However, if the quality of the two solutions is the same, the cardinalities of the solutions are compared. If |Sol*| < |Solbest|, then the current solution and the best solution are updated. A worse solution is accepted as the current solution if the quality of the trial solution, γ Sol*, is greater than or equal to Level. Whenever the best solution is updated, it is inserted into GDList, so GDList is updated as well. The process continues until one of the two stopping conditions is met: the number of iterations exceeds NumOfIte, or Level exceeds 1.
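A single acceptance step of the loop, together with the increasing rate β, could be sketched as follows. The `SearchState` container, the function names and the numeric values are hypothetical; the sketch only mirrors the comparison order described above and in Fig. 2 and is not the authors' Java code.

```python
from dataclasses import dataclass, field

@dataclass
class SearchState:
    sol: frozenset          # current solution
    q_sol: float            # quality of the current solution
    best: frozenset         # best solution found so far
    q_best: float           # quality of the best solution
    level: float            # current lower boundary
    gd_list: list = field(default_factory=list)  # history of best solutions

def increasing_rate(estimated_quality, q_initial, num_iterations):
    """beta = (EstimatedQuality - gamma_Sol) / NumOfIte."""
    return (estimated_quality - q_initial) / num_iterations

def update(state, trial, q_trial, beta):
    """Apply one acceptance step and raise Level by beta."""
    improves = q_trial > state.q_best
    ties_smaller = q_trial == state.q_best and len(trial) < len(state.best)
    if improves or ties_smaller:
        state.sol, state.q_sol = trial, q_trial
        state.best, state.q_best = trial, q_trial
        state.gd_list.append(trial)
    elif q_trial < state.q_best and q_trial >= state.level:
        # Worse than the best, but still at or above Level.
        state.sol, state.q_sol = trial, q_trial
    state.level += beta
    return state

# Hypothetical numbers: initial quality 0.4, 250 iterations.
beta = increasing_rate(1.0, 0.4, 250)
start = frozenset({0, 1, 2})
state = SearchState(start, 0.4, start, 0.4, 0.4)
update(state, frozenset({0, 1}), 0.4, beta)   # same quality, fewer attributes
print(sorted(state.best), round(state.level, 4))
```

With an initial quality of 0.4 and 250 iterations, β is about 0.0024, so Level climbs from 0.4 to 1 exactly when the iteration budget runs out.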

4 Visual Data Mining and Knowledge Discovery

The benefit of visual data mining is that the user is involved in the data mining process [27]. Visual data mining tries to integrate the human into the data mining procedure. Fig. 3 describes the knowledge discovery process with the visualization part. The dashed lines and elements show the visual analytics process. Note that the visual analytics procedure is interactive: it proceeds through interaction with the user of the system. Therefore an interactive user interface is required to make this interaction easy. In the following subsection we describe the interface implemented for this purpose. This user interface is a step toward interactive visual data mining and knowledge discovery.

Fig. 3. The overall process of visual data mining (taken from [28]).

4.1 Interactive User Interface

For any data mining algorithms, such as classification, cluster analysis, decision

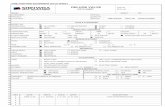

tree, etc., an interactive processing between system and user is required. Toward this, interactive interface is necessary especially in visual data mining. In this paper, an initial design of interactive attribute reduction as preprocessing technique for any data mining task is developed (Fig. 4(a)). In this interface a combo box is designed for selecting the algorithm for attribute reduction which in current study we use the great deluge algorithm. The class attribute number is asked from the user to ensure which attribute is considered as class attribute. The user can select the dataset file which is in CSV format using “Select File” button. When this button is clicked a file chooser window is opened that makes the user able to select the appropriate file (Fig. 4(b)).

Fig. 4. The user interface for the attribute reduction process: (a) main interface window, (b) file chooser window

When the CSV file is selected, the file path is shown so that the user can confirm it. If the selected file is not the one the user wants to reduce, another file can be chosen with the “Select File” button. When the confirm button is clicked, the “Start” button becomes active and a message is displayed that guides the user to click “Start” to begin the process. After the process has finished, the results of the reduction are shown in the text box.

If the user forgets to enter the class attribute number or to select a file, a message appears to notify the user of the mistake. In addition, if the file selected by the user is not in CSV format, the user is informed by a message.

The system is designed for high usability; it is simple and easy to use. By extending this system we can produce visual classification, clustering or any other data mining task. The system is a step toward a visual data mining approach.

5 Experimental Results

The algorithm was programmed in Java and the model was tested on 13 well-known datasets from UCI. With only two parameters, the algorithm is less dependent on parameter tuning than methods such as TSAR with seven parameters [15], GenRSAR and SimRSAR with three each [14], and SSAR with five [16], all of which must be tuned in advance. AntRSAR [17] and ACOAR [18] have two parameters each, the same number as great deluge.

5.1 Number of Attributes in Obtained Reducts

Table 1 provides a comparison of GD-RSAR results with the results of other works in the literature. We compare the presented approach with other attribute reduction methods that are available in the literature on the 13 instances from UCI machine learning repository. The records in Table 1 represent the number of attributes in the minimal reducts obtained by each method.

Table 1. Comparison results of number of reduced attributes

| Datasets | No. attributes | GD-RSAR | TSAR | SimRSAR | AntRSAR | GenRSAR | ACOAR | SSAR | MGDAR |
|---|---|---|---|---|---|---|---|---|---|
| M-of-N | 13 | 6(10) 7(10) | 6 | 6 | 6 | 6(6) 7(12) | 6 | 6 | 6 |
| Exactly | 13 | 6(7) 7(10) 8(3) | 6 | 6 | 6 | 6(10) 7(10) | 6 | 6 | 6 |
| Exactly2 | 13 | 10(14) 11(6) | 10 | 10 | 10 | 10(9) 11(11) | 10 | 10 | 10 |
| Heart | 13 | 9(4) 10(16) | 6 | 6(29) 7(1) | 6(18) 7(2) | 6(18) 7(2) | 6 | 6 | 6(14) 7(6) |
| Vote | 16 | 9(17) 10(3) | 8 | 8(15) 9(15) | 8 | 8(2) 9(18) | 8 | 8 | 8 |
| Credit | 20 | 11(11) 12(9) | 8(13) 9(5) 10(2) | 8(18) 9(1) 11(1) | 8(12) 9(4) 10(4) | 10(6) 11(14) | 8(16) 9(4) | 8(9) 9(8) 10(3) | 8(13) 9(3) 10(4) |
| Mushroom | 22 | 4(8) 5(9) 6(3) | 4(17) 5(3) | 4 | 4 | 5(1) 6(5) 7(14) | 4 | 4(12) 5(8) | 4(7) 5(13) |
| LED | 24 | 8(14) 9(6) | 5 | 5 | 5(12) 6(4) 7(3) | 6(1) 7(3) 8(16) | 5 | 5 | 5 |
| Letters | 25 | 8(7) 9(13) | 8(17) 9(3) | 8 | 8 | 8(8) 9(12) | 8 | 8(5) 9(15) | 8(18) 9(2) |
| Derm | 34 | 12(14) 13(6) | 6(14) 7(6) | 6(12) 7(8) | 6(17) 7(3) | 10(6) 11(14) | 6 | 6 | 6(11) 7(9) |
| Derm2 | 34 | 11(14) 12(6) | 8(2) 9(14) 10(4) | 8(3) 9(7) | 8(3) 9(17) | 10(4) 11(16) | 8(4) 9(16) | 8(2) 9(18) | 8(4) 9(12) 10(4) |
| WQ | 38 | 15(14) 16(6) | 12(1) 13(13) 14(6) | 13(16) 14(4) | 12(2) 13(7) 14(11) | 16 | 12(4) 13(12) 14(4) | 13(4) 14(16) | 12(1) 13(11) 14(8) |
| Lung | 56 | 4(5) 5(2) 6(13) | 4(6) 5(13) 6(1) | 4(7) 5(12) 6(1) | 4 | 6(8) 7(12) | 4 | 4 | 4(6) 5(11) 6(3) |

In Table 1, the superscripts in parentheses give the number of runs that achieved a reduct of that size. A number of attributes without a superscript means that the method obtained this number of attributes in all runs. Note that we used the same number of runs (20) as the other methods, except SimRSAR, which used 30, 30 and 10 runs for the Heart, Vote and Derm2 datasets, respectively. Although on some datasets the obtained reducts have slightly higher cardinality than those of other approaches (e.g. the Heart and Derm datasets), in general the results of GD-RSAR show the potential of this method. For a more detailed comparison, we extend the study to other criteria in the following subsections.

5.2 Running Time

The time taken for finding reducts by some methods (AntRSAR, SimRSAR, and GenRSAR) has been reported in Jensen and Shen [14]. Comparison of GD-RSAR with three reported methods is presented in Fig. 5.

Fig. 5. Comparison of running time

This graph shows that, on most of the datasets, the running time of GD-RSAR is less than that of the other methods, meaning that the method obtained the reducts in less time. In this comparison, only the Exactly, Exactly2 and Credit datasets needed slightly more time to discover the reducts. AntRSAR on the WQ dataset and GenRSAR on the LED dataset spent a long time discovering the reducts. This might be why they found reducts with lower cardinality than the GD-RSAR reducts.

As reported in Jensen and Shen [14], the rough order of the techniques based on time is SimRSAR ≤ AntRSAR ≤ GenRSAR. Comparing GD-RSAR with SimRSAR (the best known algorithm in terms of time) shows that GD-RSAR outperforms SimRSAR on most of the datasets. Therefore, the order of the algorithms can be extended to GD-RSAR ≤ SimRSAR ≤ AntRSAR ≤ GenRSAR. Although GD-RSAR could not obtain the best reducts on some datasets in comparison with other methods, it outperformed the others in the time taken to generate reducts on most of the datasets. The first reason for this advantage is that Level controls the search space in the great deluge algorithm. It makes the search space more promising and causes the solution to be found in a shorter time than with other algorithms. The second reason might be the number of dependency degree calculations. Computing the dependency degree is a time-consuming step for all of the algorithms, and the number of such computations is investigated in the next subsection.

5.3 Number of Computing Dependency Degree

Computing the dependency degree is costly, especially when the number of attributes or the number of objects in a dataset is large, so the number of dependency degree calculations is significant when attribute reduction is treated as an optimization problem. In this part, we investigate the computational cost of the method, as shown in Table 2 and Fig. 6.

Table 2. Comparison of number of computing dependency degree

| Datasets | No. attributes | GD-RSAR | AntRSAR | GenRSAR | TSAR |
|---|---|---|---|---|---|
| M-of-N | 13 | 251 | 12000 | 10000 | 702 |
| Exactly | 13 | 251 | 12000 | 10000 | 702 |
| Exactly2 | 13 | 251 | 28000 | 10000 | 702 |
| Heart | 13 | 251 | 13000 | 10000 | 702 |
| Vote | 16 | 251 | 27000 | 10000 | 864 |
| Credit | 20 | 252 | 31000 | 10000 | 1080 |
| Mushroom | 22 | 252 | 11000 | 10000 | 1188 |
| LED | 24 | 252 | 17000 | 10000 | 1296 |
| Letters | 25 | 252 | 38000 | 10000 | 1350 |
| Derm | 34 | 253 | 34000 | 10000 | 1836 |
| Derm2 | 34 | 253 | 50000 | 10000 | 1836 |
| WQ | 38 | 253 | 98000 | 10000 | 2052 |
| Lung | 56 | 255 | 28000 | 10000 | 3024 |

Fig. 6. Comparison of number of calculating dependency degree functions

In Hedar et al. [15], the number of dependency degree calculations used by GenRSAR, AntRSAR and TSAR is reported as follows (note that |C| is the cardinality of the conditional attribute set). The number of function evaluations in GenRSAR is about 10,000. The minimum number of function evaluations in AntRSAR is 250|C|(|Rmin| - 2). The maximum number of function evaluations in TSAR is 54|C|.

In comparison, GD-RSAR uses at most 250 iterations with one dependency degree calculation per iteration, plus the function evaluations needed to generate the initial solution, which number 10|C|/100. Therefore, the maximum number of function evaluations for GD-RSAR is 250 + (10|C|/100).
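The two closed-form counts above can be checked with a short script. The function names are hypothetical, and rounding 10|C|/100 down to an integer is an assumption of this sketch, chosen because it matches the GD-RSAR column of Table 2.

```python
def gd_rsar_max_evaluations(num_cond_attrs, num_iterations=250):
    """250 + 10|C|/100, with the initial-solution term rounded down."""
    return num_iterations + (10 * num_cond_attrs) // 100

def tsar_max_evaluations(num_cond_attrs):
    """54|C|, the maximum reported for TSAR."""
    return 54 * num_cond_attrs

for name, c in [("M-of-N", 13), ("Credit", 20), ("Lung", 56)]:
    print(name, gd_rsar_max_evaluations(c), tsar_max_evaluations(c))
# M-of-N 251 702
# Credit 252 1080
# Lung 255 3024
```

The GD-RSAR count grows by only a few evaluations across the datasets because the iteration budget, not the attribute count, dominates the total.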

Table 2 shows the results of applying these formulas to all tested datasets. Fig. 6 illustrates the estimated comparison of function evaluations in GD-RSAR with the other reported methods. The best known results are presented in bold. GD-RSAR requires the fewest dependency degree calculations on every dataset.

5.4 Number of Rules and the Accuracy of the Classification

The reducts of GD-RSAR were tested by importing them into the ROSETTA software to generate rules and to find the classification accuracy for all datasets. ROSETTA is a toolkit for analyzing data within the framework of rough set theory. It is available at http://www.lcb.uu.se/tools/rosetta/. The results of GD-RSAR were compared with the genetic algorithm (GA), Johnson’s algorithm and Holte’s 1R, which are available in ROSETTA. 10-fold cross validation [1] was performed to evaluate the classification accuracy. Each dataset was randomly divided into 10 subsamples. A single subsample was used as validation data for testing the model and the remaining 9 subsamples were used as the training set. The cross validation process was repeated 10 times (10 folds), and the 10 results were averaged to produce a single measure of predicted accuracy. Thus, in each fold, 10% of the data was used for testing and 90% for training. This process was performed for all 13 datasets to generate rules and the predicted classification accuracies. The detailed results are shown in Table 3. The best known results produced by GD-RSAR are presented in bold.
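The 10-fold procedure described above can be illustrated with a small index-splitting sketch. It is not ROSETTA's implementation; the function names and the `evaluate` callback, which stands in for rule generation and classification, are hypothetical.

```python
import random

def k_fold_indices(n, k, rng):
    """Yield (train, test) index lists for k-fold cross validation."""
    idx = list(range(n))
    rng.shuffle(idx)
    folds = [idx[i::k] for i in range(k)]       # k nearly equal subsamples
    for i in range(k):
        test = folds[i]
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, test

def cross_validated_accuracy(n, k, evaluate, rng):
    """Average the per-fold scores returned by `evaluate(train, test)`."""
    scores = [evaluate(train, test) for train, test in k_fold_indices(n, k, rng)]
    return sum(scores) / len(scores)

# With 100 objects and k = 10, each fold tests on 10 objects and trains on 90.
for train, test in k_fold_indices(100, 10, random.Random(0)):
    assert len(train) == 90 and len(test) == 10
```

Every object appears in exactly one test fold, so the averaged score uses each object once for testing.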

Table 3. Comparison of number of rules and classification accuracies

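| Datasets | Great Deluge: avg. no. of rules | Great Deluge: accuracy % | Genetic Algorithm: avg. no. of rules | Genetic Algorithm: accuracy % | Johnson’s Algorithm: avg. no. of rules | Johnson’s Algorithm: accuracy % | Holte’s 1R: avg. no. of rules | Holte’s 1R: accuracy % |
|---|---|---|---|---|---|---|---|---|
| M-of-N | 64 | 100 | 8658 | 95 | 45 | 99 | 26 | 63 |
| Exactly | 64 | 100 | 28350 | 73 | 271 | 94 | 26 | 68 |
| Exactly2 | 559 | 68 | 27167 | 74 | 441 | 79 | 26 | 73 |
| Heart | 195 | 50 | 1984 | 81 | 133 | 68 | 50 | 64 |
| Vote | 71 | 92 | 2517 | 95 | 54 | 95 | 48 | 90 |
| Credit | 527 | 58 | 47450 | 72 | 560 | 67 | 81 | 69 |
| Mushroom | 52 | 100 | 8872 | 100 | 85 | 100 | 112 | 89 |
| LED | 40 | 100 | 41908 | 98 | 316 | 99 | 48 | 63 |
| Letters | 19 | 0 | 1139 | 0 | 20 | 0 | 50 | 0 |
| Derm | 127 | 86 | 7485 | 94 | 92 | 89 | 129 | 48 |
| Derm2 | 196 | 64 | 8378 | 92 | 98 | 88 | 138 | 48 |
| WQ | 136 | 55 | 48687 | 69 | 329 | 58 | 94 | 51 |
| Lung | 18 | 66 | 1387 | 71 | 12 | 69 | 156 | 73 |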

The numbers of rules generated by the different algorithms are shown in Fig. 7. The genetic algorithm is shown separately in Fig. 7(a) because its numbers differ greatly from those of the other algorithms. As Table 3 and Fig. 7(a) show, the average number of rules generated by GD-RSAR is far smaller than the number generated by the genetic algorithm for all datasets without exception. Table 3 and Fig. 7(b) show that GD-RSAR outperformed Johnson’s algorithm on 6 datasets and, in comparison with Holte’s 1R, GD-RSAR did better on some datasets such as Mushroom, LED, Letters and Lung.

Fig. 7. Number of rules generated by GD-RSAR: (a) compared with genetic algorithm and (b) compared with Johnson’s and Holte’s 1R

Table 3 and Fig. 8 compare the classification accuracy of GD-RSAR, GA, Johnson’s and Holte’s 1R algorithms. On three datasets (M-of-N, Exactly, and LED), GD-RSAR reached 100% accuracy while the other algorithms did not. Compared with Holte’s 1R, GD-RSAR obtained better classification accuracy on most of the datasets, which compensates for its larger number of rules on those datasets.

Fig. 8. Comparison of classification accuracies

Although the reducts of GD-RSAR have a slightly higher number of attributes, they have high quality in terms of classification accuracy and are found at very low computational cost. In various real-world applications, low computational cost with only approximately optimal solutions is preferred. In such cases the examined method can be easily adapted to datasets whose size is the main problem.

6 Conclusion and Future Work

In this study, GD-RSAR was compared with other available approaches in terms of the number of parameters, the cardinality of the reducts, the running time required to find the reducts, the number of dependency degree calculations, the number of rules generated from the reducts, and the predicted classification accuracy. In these comparisons, experiments were carried out on UCI datasets. The cardinality of the reducts was competitive with that of other approaches, and the method outperformed the others in terms of running time and number of dependency degree calculations. The results for the number of rules and classification accuracy showed that the method obtained 100% accuracy on three datasets (M-of-N, Exactly, and LED) on which the other methods did not reach this accuracy. The rest of the datasets showed accuracies between 50% and 92%, not counting the Letters dataset, which has an accuracy of 0% due to its structure. On some of the datasets (Mushroom, LED, and Letters), these accuracies were achieved with fewer rules than the other approaches. In general, we conclude that these results confirm the effectiveness of the method, which generates reducts with high classification accuracy at low computational cost.

Moreover, the developed user interface for attribute reduction increases the productivity and usability of the method on real-world problems. An enhanced version of this user interface can be used for visual classification, visual clustering or, in general, visual data mining. This is the subject of our future work.

References

1. Han, J., Kamber, M.: Data Mining: Concepts and Techniques. Morgan Kaufmann Publishers, Oxford, England (2006)

2. Keim, D.A.: Visual exploration of large data sets. Commun. ACM 44, 38-44 (2001)

3. Havre, S., Hetzler, E., Whitney, P., Nowell, L.: ThemeRiver: visualizing thematic changes in large document collections. IEEE Transactions on Visualization and Computer Graphics 8, 9-20 (2002)

4. Stolte, C., Tang, D., Hanrahan, P.: Polaris: A System for Query, Analysis, and Visualization of Multidimensional Relational Databases. IEEE Transactions on Visualization and Computer Graphics 8, 52-65 (2002)

5. Abello, J., Korn, J.: MGV: a system for visualizing massive multidigraphs. IEEE Transactions on Visualization and Computer Graphics 8, 21-38 (2002)

6. Zhen, L., Xiangshi, R., Chaohai, Z.: User interface design of interactive data mining in parallel environment. In: Proceedings of the 2005 International Conference on Active Media Technology (AMT 2005), pp. 359-363 (2005)

7. Dash, M., Liu, H.: Consistency-based search in feature selection. Artificial Intelligence 151, 155-176 (2003)

8. Lihe, G.: A New Algorithm for Attribute Reduction Based on Discernibility Matrix. In: Cao, B.-Y. (ed.) Fuzzy Information and Engineering, vol. 40, pp. 373-381. Springer Berlin Heidelberg (2007)

9. Kudo, Y., Murai, T.: A Heuristic Algorithm for Attribute Reduction Based on Discernibility and Equivalence by Attributes. In: Torra, V., Narukawa, Y., Inuiguchi, M. (eds.) Modeling Decisions for Artificial Intelligence, vol. 5861, pp. 351-359. Springer Berlin Heidelberg (2009)

10. Li, H., Zhang, W., Xu, P., Wang, H.: Rough Set Attribute Reduction in Decision Systems. In: Wang, G.-Y., Peters, J., Skowron, A., Yao, Y. (eds.) Rough Sets and Knowledge Technology, vol. 4062, pp. 135-140. Springer Berlin Heidelberg (2006)

11. Liu, H., Li, J., Wong, L.: A comparative study on feature selection and classification methods using gene expression profiles and proteomic patterns. In: Lathrop, R., Nakai, K., Miyano, S., Takagi, T., Kanehisa, M. (eds.) Genome Informatics 2002, vol. 13, pp. 51-60. Universal Academy Press, Tokyo, Japan (2002)

12. Hu, Q., Li, X., Yu, D.: Analysis on Classification Performance of Rough Set Based Reducts. In: Yang, Q., Webb, G. (eds.) PRICAI 2006: Trends in Artificial Intelligence, vol. 4099, pp. 423-433. Springer Berlin Heidelberg (2006)

13. Pawlak, Z.: Rough sets. International Journal of Computer and Information Sciences 11, 341-356 (1982)

14. Jensen, R., Shen, Q.: Semantics-preserving dimensionality reduction: rough and fuzzy-rough-based approaches. IEEE Transactions on Knowledge and Data Engineering 16, 1457-1471 (2004)

15. Hedar, A.-R., Wang, J., Fukushima, M.: Tabu search for attribute reduction in rough set theory. Soft Comput 12, 909-918 (2008)

16. Wang, J., Hedar, A.-R., Zheng, G., Wang, S.: Scatter Search for Rough Set Attribute Reduction. In: International Joint Conference on Computational Sciences and Optimization (CSO 2009), pp. 531-535 (2009)

17. Jensen, R., Shen, Q.: Finding Rough Set Reducts with Ant Colony Optimization. UK Workshop on Computational Intelligence, UK (2003)

18. Ke, L., Feng, Z., Ren, Z.: An efficient ant colony optimization approach to attribute reduction in rough set theory. Pattern Recogn. Lett. 29, 1351-1357 (2008)

19. Abdullah, S., Jaddi, N.: Great Deluge Algorithm for Rough Set Attribute Reduction. In: Zhang, Y., Cuzzocrea, A., Ma, J., Chung, K.-i., Arslan, T., Song, X. (eds.) Database Theory and Application, Bio-Science and Bio-Technology, vol. 118, pp. 189-197. Springer Berlin Heidelberg (2010)

20. Jaddi, N.S., Abdullah, S.: Nonlinear Great Deluge Algorithm for Rough Set Attribute Reduction. Journal of Information Science & Engineering 29, 49-62 (2013)

21. Mafarja, M., Abdullah, S.: Modified great deluge for attribute reduction in rough set theory. In: Eighth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD 2011), pp. 1464-1469 (2011)

22. Dueck, G.: New optimization heuristics: The great deluge algorithm and the record-to-record travel. Journal of Computational Physics 104, 86-92 (1993)

23. Burke, E.K., Abdullah, S.: A Multi-start Large Neighbourhood Search Approach with Local Search Methods for Examination Timetabling. In: Long D, S.S., Borrajo D, McCluskey L (ed.) Proceedings of the International Conference on Automated Planning and Scheduling (ICAPS 2006), Cumbria, UK (2006)

24. Burke, E., Bykov, Y., Newall, J., Petrovic, S.: A time-predefined local search approach to exam timetabling problems. IIE Transactions 36, 509-528 (2004)

25. Landa-Silva, D., Obit, J.: Evolutionary Non-linear Great Deluge for University Course Timetabling. In: Corchado, E., Wu, X., Oja, E., Herrero, Á., Baruque, B. (eds.) Hybrid Artificial Intelligence Systems, vol. 5572, pp. 269-276. Springer Berlin Heidelberg (2009)

26. McMullan, P.: An Extended Implementation of the Great Deluge Algorithm for Course Timetabling. In: Shi, Y., Albada, G., Dongarra, J., Sloot, P.A. (eds.) Computational Science – ICCS 2007, vol. 4487, pp. 538-545. Springer Berlin Heidelberg (2007)

27. Migut, M., Worring, M.: Visual exploration of classification models for various data types in risk assessment. Information Visualization (2012)

28. Stahl, F., Gabrys, B., Gaber, M.M., Berendsen, M.: An overview of interactive visual data mining techniques for knowledge discovery. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 3, 239-256 (2013)