Resilient Energy-Constrained Microprocessor Architectures

-

Upload

khangminh22 -

Category

Documents

-

view

1 -

download

0

Transcript of Resilient Energy-Constrained Microprocessor Architectures

Resilient Energy-ConstrainedMicroprocessor Architectures

for obtaining the academic degree of

Doctor of Engineering

Approved

Dissertation

Department of Informatics

Karlsruhe Institute of Technology (KIT)

by

Anteneh Gebregiorgis

from Zalanbesa, Ethiopia

Oral Exam Date: 03 May 2019

Adviser: Prof. Dr. Mehdi Baradaran Tahoori, KIT

Co-adviser: Prof. Dr. Said Hamdioui, Delft University of Technology

Acknowledgments

First fo all, I would like to express my sincere gratitude to my adviser, Prof. Mehdi Tahoori,

who has helped me relentlessly, involved me in various research projects during my PhD

journey. Also, I would like to extend my gratitude to my co-adviser Prof. Said Hamdioui for

his support and motivation.

When I found myself experiencing the feeling of fulfillment, I realized though only my name

appears on the cover of this dissertation, my loving wife had an immense contribution for

the successful completion of my journey. Thus, I extend my earnest gratitude to my wife,

Tirhas, for her continued and unfailing love, support, and understanding during my pursuit

of Ph.D degree that made everything possible. She sacrificed most of her wishes to support

and encourage me unconditionally, which I am heavily indebted for. Finally, I acknowledge

the people who mean a lot to me, my family, for showing faith in me and giving me liberty to

follow my desire. I salute you all for the selfless love, care, pain and sacrifice you did to shape

my life.

I would like to thank my colleagues at the chair of dependable nano computing for their

thoughts, helps, and companionship. I would like to especially thank my friends, Rajendra

Bishnoi, Saber Golanbari, and Arun Kumar Vijayan which I have learned a lot from them,

not only in research, but also in other aspects of life.

v

Anteneh Gebregiorgis

Kaiserallee. 109

76185 Karlsruhe

Hiermit erklare ich an Eides statt, dass ich die von mir vorgelegte Arbeit selbststandig verfasst

habe, dass ich die verwendeten Quellen, Internet-Quellen und Hilfsmittel vollstandig angegeben

haben und dass ich die Stellen der Arbeit - einschlielich Tabellen, Karten und Abbildungen -

die anderen Werken oder dem Internet im Wortlaut oder dem Sinn nach entnommen sind, auf

jeden Fall unter Angabe der Quelle als Entlehnung kenntlich gemacht habe.

Karlsruhe, May 2019

©Anteneh Gebregiorgis

vi

ABSTRACT

In the past years, we have seen a tremendous increase in small and battery-powered devices

for sensing, wireless communication, data processing, classification, and recognition tasks.

Collectively referred to as the Internet of Things (IoT), all these devices have a huge impact on

several aspects of our day-to-day life. Since IoT devices need to be portable and lightweight,

they depend on battery or environmental harvested-energy as their primary energy source.

As a result, IoT devices have to operate on a limited energy envelope in order to increase

the supply duration of their energy source. In order to meet the stringent energy-budget of

battery-powered IoT devices, extreme-low energy design has become a standard requirement.

In this regard, supply voltage downscaling has been used as an effective approach for reducing

the energy consumption of Complementary Metal Oxide Semiconductor (CMOS) circuits and

enable ultra-low power operation. Although aggressive supply voltage downscaling is a popular

approach for extreme-low power operation, it reduces the performance significantly. In this

regard, operating in the near-threshold voltage domain (commonly known as NTC) could

provide a better trade-off between performance and energy saving, as it can achieve up to 10×energy-saving at the cost of linear performance reduction. However, the broad applicability

of NTC is hindered by several barriers, such as the increase in functional failure of memory

components, performance variation, and higher sensitivity to variation effects.

For NTC processors, wide variation extent and higher functional failure rate pose a daunting

challenge to assure timing certainty of logic blocks such as pipeline stages of a processor core

and stability of memory elements (caches and registers). Moreover, due to the reduction in

noise margin of memory components, susceptibility to runtime reliability issues, such as aging

and soft errors, is also increasing with supply voltage downscaling. These challenges limit NTC

potentials and force designers to use large timing margins in order to ensure reliable operation

of different architectural blocks, which leads to significant overheads. Therefore, analyzing and

mitigating variation induced timing failure of pipeline stages and memory failures during early

design phases plays a crucial role in the design of resilient and energy-efficient microprocessor

architectures.

This thesis provides cost-effective cross-layer solutions to improve the resiliency and energy-

efficiency of energy-constrained pipelined microprocessors operating in the near-threshold volt-

age domain. Different architecture-level solutions for logic and memory components of a

pipelined processor are presented in this thesis. The solutions provided in this thesis address

the three main NTC challenges, namely increase in sensitivity to process variation, higher

memory failure rate, and performance uncertainties. Additionally, this thesis demonstrates

how to exploit emerging computing paradigms, such as approximate computing, in order to

further improve the energy efficiency of NTC designs.

vii

ZUSAMMENFASSUNG

In den letzten Jahren wurde eine starke Zunahme an miniaturisierten und batterie-betriebenen

elektronischen Systemen beobachtet, welche zur kabellosen Kommunikation, Datenverarbeitung,

Mustererkennung oder fur Sensoranwendungen eingesetzt werden. Alles deutet darauf hin,

dass solche Internet of Things-Anwendungen (IoT) einen starken Einfluss auf unseren All-

tag haben werden. Da IoT-Systeme portabel und leichtgewichtig sein mussen, ist ein Bat-

teriebetrieb oder eine autarke Energiegewinnung aus der Umgebung Voraussetzung. Aus

diesem Grund ist es notwendig, den Leistungsverbrauch des IoT-Systems zu reduzieren und

die Energiequelle wenig zu belasten um eine moglichst lange Versorgung zu gewahrleisten.

Um diese stringenten Energie-Budgets von batteriebetriebenen IoT-Systemen einzuhalten, ist

eine Niedrigenergiebauweise ist Standardvorrausetzung geworden. In diesem Zusammenhang

wurden effiziente Verfahren entwickelt, welche die Versorgungsspannung reduzieren um den

Leistungsverbrauch von Complementary Metal Oxide Semiconductor (CMOS) Schaltungen zu

senken und eine ultra-low-power Operation zu ermoglichen. Obwohl diese Energieverbrauchs-

Reduzierung durch die starke Verringerung der Versorgungsspannung sehr effektiv ist, ver-

schlechtert sich auf der anderen Seite die Performance der Schaltung signifikant. Bei der Ab-

senkung der Versorgungsspannung in den nahen Schwellspannungsbereich (auch bezeichnet als

Near-Threshold-Computing (NTC)), wird jedoch der Leistungsverbrauch um das 10-fache ver-

ringert auf Kosten einer Performance-Reduzierung mit lediglich linearem Verlauf. Damit einher

gehen jedoch weitere Probleme, wie zum Beispiel funktionale Ausfalle von Speicherbausteinen,

Performance-Variationen und hohere Sensitivitat zu weiteren Variationen, welche eine breite

Anwendung dieses Verfahrens erschweren.

Fur NTC-Prozessoren fuhren diese erhohten Variationen und funktionalen Ausfalle zu un-

tragbaren Nebeneffekten, da zeitliche Anforderungen von Logikbausteinen wie zum Beispiel

Pipeline-Stufen des Prozessorkerns, sowohl als Stabilitat von Speicherbausteinen (Caches und

Register), nicht eingehalten werden. Des weiteren, da eine Reduzierung der Noise-Margin von

Speicherelementen auftritt, erhoht sich mit der Absenkung der Versorgungsspannung auch die

Anfalligkeit zu Alterungseffekten und Soft-Errors, welche den verlasslichen Betrieb wahrend

der Laufzeit beeintrachtigen. Diese Probleme limitieren das Potential von NTC-Verfahren

und zwingen Entwickler zeitliche Anforderungen aufzulockern um den verlasslichen Betrieb

von verschiedenen Komponenten der Gesamtarchitektur zu garantieren, welches zu einem

bedeutenden Mehraufwand fuhrt. Daher ist eine Analyse und Verbesserung der variations-

induzierten zeitlichen Verstoßen von Pipeline-Stufen und Speicherausfallen in fruhen Stadien

des Entwurfs entscheidend, um verlassliche und energieeffiziente Mikroprozessorarchitekturen

zu ermoglichen.

Diese Arbeit liefert einen schichtubergreifenden und kosteneffizienten Ansatz zur Verbesserung

der Verlasslichkeit und Energieeffizienz von energiebeschrankten Mikroprozessoren mit Pipeline,

welche im nahen Schwellspannungsbereich betrieben werden. Losungen auf verschiedenen Ar-

chitekturebenen fur Logik- und Speicherkomponenten eines Prozessors mit Pipeline werden

in der hier vorliegenden Arbeit behandelt. Die erarbeiteten Losungen adressieren die drei

viii

hauptsachlichen Herausforderungen von NTC-Anwendungen, namlich die Erhohung der Sensi-

tivitat zu Prozessvariationen, hohere Ausfallwahrscheinlichkeiten von Speicherbausteinen und

Performance-Schwankungen. Erganzend wird demonstriert, wie aufkommende Paradigmen

wie das Approximate Computing eingesetzt werden um die Energieeffizienz von NTC-Designs

weiter zu verbessern.

ix

Table of Contents

ABSTRACT x

Glossary xiii

Acronyms xv

List of Figures xvii

List of Tables xxi

1 Introduction 1

1.1 Problem statement and objective . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 Thesis contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2.1 Cross-layer memory reliability analysis and mitigation technique . . . . 4

1.2.2 Pipeline stage delay balancing and optimization techniques . . . . . . . 5

1.2.3 Exploiting approximate computing . . . . . . . . . . . . . . . . . . . . . 6

1.3 Thesis outline . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

2 Background and State-of-The-Art 7

2.1 Near-Threshold Computing (NTC) for energy-efficient designs . . . . . . . . . . 8

2.1.1 NTC basics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2.1.2 NTC application domains . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.2 Challenges for NTC operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

2.2.1 Performance reduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

2.2.2 Increase sensitivity to variation effects . . . . . . . . . . . . . . . . . . . 12

2.2.3 Functional failure and reliability issues of NTC memory components . . 14

2.3 Existing techniques to overcome NTC barriers . . . . . . . . . . . . . . . . . . . 18

2.3.1 Solutions addressing performance reduction . . . . . . . . . . . . . . . . 18

2.3.2 Solutions addressing variability . . . . . . . . . . . . . . . . . . . . . . . 19

2.3.3 Solutions addressing memory failures . . . . . . . . . . . . . . . . . . . . 21

2.4 Emerging technologies and computing paradigm for extreme energy efficiency . 24

2.4.1 Non-volatile processor design . . . . . . . . . . . . . . . . . . . . . . . . 24

2.4.2 Exploiting approximate computing for NTC . . . . . . . . . . . . . . . . 24

2.5 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3 Reliable Cache Design for NTC Operation 27

3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.2 Cross-layer reliability analysis framework for NTC caches . . . . . . . . . . . . 28

3.2.1 System FIT rate extraction . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.2.2 Cross-layer SNM and SER estimation . . . . . . . . . . . . . . . . . . . 30

3.2.3 Experimental evaluation and trade-off analysis . . . . . . . . . . . . . . 33

xi

Table of Contents

3.3 Voltage scalable memory failure mitigation scheme . . . . . . . . . . . . . . . . 41

3.3.1 Motivation and idea . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.3.2 Built-In Self-Test (BIST) based runtime operating voltage adjustment . 43

3.3.3 Error tolerant block mapping . . . . . . . . . . . . . . . . . . . . . . . . 45

3.3.4 Evaluation of voltage scalable mitigation scheme . . . . . . . . . . . . . 45

3.4 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

4 Reliable and Energy-Efficient Microprocessor Pipeline Design 49

4.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

4.2 Variation-aware pipeline stage balancing . . . . . . . . . . . . . . . . . . . . . . 50

4.2.1 Pipelining background and motivational example . . . . . . . . . . . . . 51

4.2.2 Variation-aware pipeline stage balancing flow . . . . . . . . . . . . . . . 52

4.2.3 Coarse-grained balancing for deep pipelines . . . . . . . . . . . . . . . . 55

4.2.4 Experimental results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.3 Fine-grained Minimum Energy Point (MEP) tuning for energy-efficient pipeline

design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.3.1 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.3.2 Fine-grained MEP analysis basics and challenges . . . . . . . . . . . . . 62

4.3.3 Motivation and problem statement for pipeline stage-level MEP assignment 64

4.3.4 Lagrange multiplier based two-phase hierarchical pipeline stage-level MEP

tuning technique . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.3.5 Implementation issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.3.6 Experimental results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

4.3.7 Comparison with related works . . . . . . . . . . . . . . . . . . . . . . . 80

4.4 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

5 Approximate Computing for Energy-Efficient NTC Design 85

5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

5.2 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

5.2.1 Embracing errors in approximate computing . . . . . . . . . . . . . . . 86

5.2.2 Related works . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

5.3 Error propagation aware timing relaxation . . . . . . . . . . . . . . . . . . . . . 87

5.3.1 Motivation and idea . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

5.3.2 Variation-induced timing error propagation analysis . . . . . . . . . . . 88

5.3.3 Mixed-timing logic synthesis flow . . . . . . . . . . . . . . . . . . . . . . 91

5.4 Experimental results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

5.4.1 Experimental setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

5.4.2 Energy efficiency analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 93

5.4.3 Application to image processing . . . . . . . . . . . . . . . . . . . . . . 96

5.5 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

6 Conclusion and Remarks 99

6.1 Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

6.2 Remarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Bibliography 101

xii

Glossary

3D three-dimensional integrated circuit made of vertical stacked silicon wafers.

AVF Architectural Vulnerability Factor is the probability that an error in memory structure

propagates to the data path. AVF = vulnerable period / total program execution period.

ECC Error Correction Code (ECC), enables error checking and correction of a data that is

being read or transmitted, when necessary.

FinFET Fin Field Effect Transistor is non planner three dimensional transistor.

FIT Failures in Time rate is a standard value defined as the Failure Rate per billion hours of

operation.

IoT Internet of Things is the network of devices that contain sensors, actuators, and connec-

tivity which allows the devices yo connect and interact.

LLC Last Level Cache is the lowers-level cache that is usually shared by all the functional

units on the chip.

SeaMicro Low-Power, High-Bandwidth Micro-server Solutions.

SP Signal Probability is the probability of storing logic 1 in the SRAM cell.

xiii

Acronyms

ABB Adaptive Body Bias.

AI Arteficial Inteligence.

BIST Built-In Self Test.

BTI Bias Temperature Instability.

CMOS Complementary Metal Oxide Semiconductor.

CMP Chip Multiprocessing.

CPU Central Processing Unit.

DCT Discrete Cosine Transform.

DSP Digital Signal Processing.

DVFS Dynamic Voltage and Frequency Scaling.

EDA Electronics Design Automation.

FBB Forward Body Bias.

GPU Graphics Processing Unit.

IPC Instruction Per Cycle.

ITRS International Technology Roadmap for Semiconductors.

LER Line Edge Roughness.

LUT Look-Up Table.

MEP Minimum Energy Point.

NMOS Negative-channel Metal Oxide Semiconductor.

NTC Near Threshold Computing.

PDP Power Delay Product.

PMOS Postive-channel Metal Oxide Semiconductor.

RBB Reverse Body Bias.

xv

Acronyms

RDF Random Dopant Fluctuations.

SER Soft Error Rate.

SNM Static Noise Margin.

SRAM Static Random Access Memory.

SSTA Statistical Static Timing Analysis.

VLSI Very Large Scale Integrated Circuits.

xvi

List of Figures

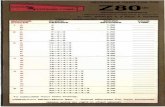

1.1 Intel Chips Transistor count per unit area, Millions of transistor per millimeter

square (MTr/mm2) and voltage scaling of different technology nodes. . . . . . . 2

1.2 Thesis contribution summary of solutions addressing memory failure, variability

effect on pipeline stages, and exploiting emerging computing paradigm. . . . . 5

2.1 Dynamic, leakage and total energy trends of supply voltage downscaling at

different voltage levels for an inverter chain implemented with saed 32nm library. 9

2.2 Supply voltage downscaling induced performance reduction (delay increase) of

an inverter chain implementation evaluated for wide operating voltage range. . 12

2.3 Process variation induced performance/ delay variation of b01 circuit across

wide supply voltage range. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

2.4 Energy-consumption characteristics of inverter chain implementation of three

different process corners; typical (TT) with regular Vth(RVT), slow (SS) with

high Vth( HVT=RVT + ∆Vth), and fast (FF) with low Vth (LVT=RVT -

∆Vth), where ∆Vth=25mV. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.5 Schematic diagram of 6T SRAM cell, where WL= word-line, BL=bit-line and

RL=read-line. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.6 Write margin (in terms of write latency) comparison of 6T and 8T SRAM cell

operating in near-threshold voltage domain (0.5V). . . . . . . . . . . . . . . . . 16

2.7 Interdependence of reliability failure mechanisms and their impact on the system

Failure In-Time (FIT) rate in NTC. . . . . . . . . . . . . . . . . . . . . . . . . 18

2.8 Variation-induce timing error detection and correction techniques for combina-

tional circuits (a) razor flip-flop based timing error detection and correction,

(b) shadow flip-flop for time borrowing, and (c) adaptive body biasing to adjust

circuit timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.9 Alternative bit-cell designs to improve read disturb, stability and yield of SRAM

cells in NTC domain (a) Differential 7-Transistor (7T) bit-cell deign, (b) Read/write

decoupled 8-Transistor (8T) bit-cell design, and (c) Robust and read/write de-

coupled 10-Transistor (10T) bit-cell design timing. . . . . . . . . . . . . . . . . 22

3.1 Cross-layer impact of memory system and workload application on system-level

reliability (Failure-In-Time (FIT rate)) of NTC memory components, and their

interdependence. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.2 Holistic cross-layer reliability estimation framework to analyze the impact of

aging and process variation effects on soft error rate. . . . . . . . . . . . . . . . 29

xvii

LIST OF FIGURES

3.3 SNM degradation in the presence of process variation and aging after 3 years

of operation, aging+PV-induced SNM degradation at NTC is 2.5× higher than

the super-threshold domain. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

3.4 SER rate of fresh and aged 6T and 8T SRAM cells for various Vdd values. . . . 33

3.5 SER of 6T and 8T SRAM cells in the presence of process variation and aging

effects after 3 years of operation. . . . . . . . . . . . . . . . . . . . . . . . . . . 34

3.6 Workload effects on aging-induced SNM degradation in the presence of process

variation for 6T and 8T SRAM cell based cache after 3 years of operation (a)

6T SRAM based cache (b) 8T SRAM based cache. . . . . . . . . . . . . . . . . 35

3.7 Workload effect on SER rate of 6T SRAM cell based cache memory for wide

supply voltage range. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

3.8 Impact of cache organization on SNM degradation in near-threshold (NTC) and

super-threshold (ST) in the presence of process variation and aging effect after

3 years of operation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

3.9 FIT rate and performance design space of various cache configurations in the

super-threshold voltage domain by considering average workload effect (the blue

italic font indicates optimal configuration). . . . . . . . . . . . . . . . . . . . . 38

3.10 FIT rate and performance design space of 6T and 8T designs for various cache

configurations in the near-threshold voltage domain by considering average

workload effect (the blue italic font indicates optimal configuration). . . . . . . 39

3.11 FIT rate and performance trade-off analysis of near-threshold 6T and 8T caches

for various cache configurations and average workload effect in the presence of

process variation and aging effects. . . . . . . . . . . . . . . . . . . . . . . . . . 40

3.12 Energy consumption profile of 6T and 8T based 4K 4-way cache for wide sup-

ply voltage value ranges averaged over the selected workloads from SPEC2000

benchmarks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.13 Error-free minimum operating voltage distribution of 8 MB cache, Set size =

128 Byte (a) block size=32 Bytes (4 blocks per set) and (b) block size=64 Bytes

(two blocks per set), the cache is modeled as 45nm node in CACTI. . . . . . . 42

3.14 Cache access control flowchart equipped with BIST and block mapping logic. . 44

3.15 Error tolerant cache block mapping scheme (mapping failing blocks to marginal

blocks). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.16 Comparison of voltage downscaling in the presence of block disabling and ECC

induce overheads for gzip, parser and mcf applications from SPEC2000 bench-

mark (a) energy comparison (b) Performance in IPC comparison . . . . . . . . 46

4.1 Variation induced delay increase in super and near threshold voltages for OpenSPARC

core (refer to Table 4.1 in Section 4.2.4 for setup of OpenSPARC). . . . . . . . 50

4.2 Impact of process variation on the delays of different pipeline stages in NTC. . 51

4.3 Variation-aware pipeline stage delay balancing synthesis flow for NTC. . . . . . 52

4.4 Time constraint modification using pseudo divide and conquer. . . . . . . . . . 54

xviii

LIST OF FIGURES

4.5 Pipeline merging and signal control illustration (a) before (b) after merging

where S2 = S2+S3 and S3 = S4. . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.6 Nominal and variation-induced delays of OpenSPARC pipeline stages (a) nomi-

nal delay balanced baseline design, (b) guard band reduction (delay optimized)

of nominal delay balanced, and (c) statistical delay balanced (power optimized). 57

4.7 Power (energy) improvement of variation-aware balancing over the baseline de-

sign for OpenSPARC core. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

4.8 Nominal and variation-induced Delays of FabScalar pipeline stages (a) base-

line design, the gray boxes indicate the stages to be merged, (b) merged and

optimized design. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

4.9 Power (energy) improvement of variation-aware FabScalar design over the base-

line design. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.10 Dynamic to leakage energy ratio of pipeline stages of FabScalar core under

different workloads synthesized using 0.5V saed 32nm library. . . . . . . . . . . 61

4.11 MEP supply voltage movement characteristics of Regular Vth (RVT), High Vth

(RVT+∆Vth) and Low Vth (RVT-∆Vth) inverter chain implementations for dif-

ferent activity rates in saed 32nm library where ∆Vth = 25mV. . . . . . . . . . 63

4.12 Energy vs MEP supply voltage for a 3-stage pipeline core with Regular Vth

(RVT), High Vth (RVT+∆Vth) and Low Vth in saed 32nm library, and ∆Vth =

25mV. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

4.13 Energy gain comparison of core-level vs stage-level MEP assignment for a 3-

stage pipeline core with Regular Vth (RVT), High Vth (RVT+∆Vth), and Low

Vth in saed 32nm library and ∆Vth = 25mV, target frequency = 67MHz. . . . 66

4.14 Algorithm for solving MEP of pipeline stages by using Lagrangian function and

linear algebra. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

4.15 Illustrative example for clustering of the MEP voltages of different pipeline

stages (a) MEP distribution on Vth, Vdd space (b) Clustering of the MEPs into

3 Vdd and 3 Vth groups. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

4.16 Dual purpose flip-flop (voltage level conversion and pipeline stage register), the

gates shaded in red are driven by VDDL. . . . . . . . . . . . . . . . . . . . . . . 74

4.17 Comparing the energy efficiency improvement of the proposed micro-block (pipeline

stage) level MEP [Proposed] and macro-block level MEP [Related work] over

baseline design of Fabscalar core. . . . . . . . . . . . . . . . . . . . . . . . . . . 76

4.18 Comparing the energy efficiency improvement of the proposed micro-block (pipeline

stage) level MEP [Proposed] and macro-block level MEPrelated work over base-

line design of OpenSPARC core. . . . . . . . . . . . . . . . . . . . . . . . . . . 77

4.19 Effect of Vdd scaling on the energy efficiency of different pipeline stages (a)

pipeline stage level MEP (Vth) (b) core-level MEPrelated work Vth (normalized

to one cycle. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

xix

LIST OF FIGURES

4.20 Energy-saving and area overhead trade-off for different voltage islands for Fab-

Scalar core (three voltage islands provides better energy-saving and overhead

trade-off for FabScalar core). . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

5.1 Example of FIR filter circuit showing path classification and timing constraint

relaxation of paths. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

5.2 Variation-induced path delay distribution with the assumption of normal dis-

tribution. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

5.3 Timing error generation, propagation and masking probability from error site

to primary output of a circuit. . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

5.4 Proposed timing error propagation aware mixed-timing logic synthesis framework. 91

5.5 Energy efficiency improvement for different levels of approximation (% of relaxed

paths). The curves correspond to the relaxation amount as a % of the clock. . 94

5.6 Effective system error for different levels of accuracy (% relaxed paths). The

curves correspond to the amount by which the selected paths are relaxed, as a

% of the clock. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

5.7 Energy efficiency improvement of different optimization schemes. . . . . . . . . 95

5.8 Post-synthesis Monte Carlo simulation flow. . . . . . . . . . . . . . . . . . . . . 96

5.9 PSNR distribution for different approximation levels for 35% relaxation slack. . 96

5.10 Comparison of output quality of different levels of approximation (% of relaxed

paths). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

xx

List of Tables

3.1 Experimental setup, configuration and evaluated benchmark applications . . . 34

3.2 ECC overhead analysis fo different block sizes and correction capabilities . . . . 43

3.3 Minimum scalable voltage analysis for different ECC schemes . . . . . . . . . . 46

4.1 Experimental setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.2 PDP of baseline, delay optimized, and power optimized designs . . . . . . . . . 58

4.3 Experimental setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

4.4 Energy efficiency comparison of the pipeline stage-level MEP assignment with

core-level MEP assignment and voltage over-scaling technique . . . . . . . . . . 82

xxi

1 Introduction

The number of Complementary Metal Oxide Semiconductor (CMOS) transistors on a chip has

increased exponentially for the past five decades, as predicted by Gordon Moore in the year

1965, commonly referred as Moore’s law [1]. The technology downscaling driven tremendous

increase in transistor count has served as a mainstay for the semiconductor industry’s relentless

progress in improving the performance of computing devices from generation to generation [1].

The rapid increase in computing power has touched almost all aspects of our day-to-day life

such as education, health care, and security systems. As a result, embedded devices such

as wearable and hand-held devices, smartphones, navigation systems, Artificial Intelligence

(AI) systems, and critical components in aerospace and automotive systems became abundant

at an affordable cost [2]. If Moore’s law continues to hold, we can expect further exciting

developments for all segments of society. For example, it can facilitate the availability of large

health monitoring devices, such as diagnostic imaging devices, that presently are limited in use

due to their immense cost and size [2]. With scaling to the nanoscale era, atomic scale processes

become extremely important and small imperfections have a large impact on both device

performance and reliability which limits the scaling extent. In order to sustain the benefits

of technology downscaling, new silicon-based technologies, such as FinFET devices [3, 4] and

three-dimensional (3D) integration [5], are providing new paths for increasing the transistor

count per chip area. However, due to different barriers, the increase in integration density no

longer translate into a proportionate increase in the performance or energy efficiency [6].

Although microprocessors have enjoyed significant performance improvement while main-

taining constant power density, the conventional supply voltage downscaling has slowed down

in recent years. The slower pace on supply voltage downscaling limits the processor frequency

in order to meet the power-density constraint of Very Large Scale Integrated (VLSI) chips.

This supply voltage downscaling stagnation phenomenon is commonly referred as Dennard

scaling [7]. Figure 1.1 shows the technology scaling driven increase in transistor count and

supply voltage downscaling potential of different technology nodes in the nanometer regime.

As shown in the figure, the supply voltage stagnates around 1V for all technology nodes be-

yond 90nm node. As a consequence, the dynamic energy remains constant across different

technology nodes while leakage energy continues to increase. The supply voltage stagnation

leads to higher power density which restricts further technology downscaling and integration

density due to the thermal and cooling limits. Hence, FinFET and 3D based improvements

in the integration density has a minimal impact on the performance and energy efficiency

improvement [3, 5]. Moreover, the emergence of Internet of Things (IoT) applications has

increased the need for extremely-low power design, which in turn, increases the pressure for

supply voltage downscaling.

To overcome these challenges, processor designers have added more cores without a signifi-

cant increase in the operating frequency, leading to a prevalence of chip multiprocessing (CMP)

known as dark-silicon [7]. However, because the number of cores has been increasing geomet-

1

1 Introduction

250 180 130 90 65 45 32 22Technology node [nm]

0

1

2

Supply

volt

age [

V]

0

4

8

12

16

Tra

nsi

stor

denis

ty (

MTr/

mm

2)

Figure 1.1: Intel Chips Transistor count per unit area, Millions of transistor per millimeter square

(MTr/mm2) and voltage scaling of different technology nodes.

rically with each process node while die area has remained fixed, the total chip power has

again started to increase, despite the relatively flat core operating frequencies [8]. In practice,

the power dissipation of chip multiprocessing is constrained by thermal cooling limits forcing

some cores to be idle and underutilized. Hence, the underutilized cores must be powered off

to satisfy the energy budget, limiting the number of cores that can be active simultaneously

and reduces the achievable throughput of modern chip multiprocessing [7].

Therefore, a computing paradigm shift has become a crucial step to overcome these chal-

lenges and unlock the potentials of energy-constrained wearable and hand-held devices for

different application segments [9]. In this regard, aggressive supply voltage scaling down to

the near-threshold voltage domain commonly referred as Near-Threshold Computing (NTC),

in which the supply voltage (Vdd) is set to be close to the transistor threshold voltage, has

emerged as a promising paradigm to overcome the power limitation and enable energy-efficient

operation of nanoscale devices [9, 6]. Since the dynamic power decreases quadratically with

a decrease in the supply voltage, operating in the near-threshold domain reduces the energy

consumption exponentially. The exponential energy reduction makes NTC a promising design

approach for extremely-low energy domains such as mobile devices, energy-harvested embed-

ded devices, particularly in the scope of IoT applications [6, 10].

In comparison to the conventional super-threshold voltage operation, computations in the

near-threshold voltage domain are performed in a very energy-efficient manner. Unfortunately,

the performance drops linearly in the near-threshold domain [6, 11]. However, the performance

reduction at NTC can be compensated by taking advantage of the available resource (e.g.,

multi-core chips) in combination with the inherent application parallelism [12]. In addition

to performance reduction, however, NTC comes with its own set of challenges that affects

the energy efficiency and hinder its widespread applicability. NTC faces three key challenges

that must be addressed in order to harness its benefits [6, 10, 11]. These challenges include

2

1.1 Problem statement and objective

1) performance loss, 2) increase in sensitivity to variation effects as well as increased timing

failure of logic units, and 3) higher functional failure rate of memory components. Moreover,

the contribution of leakage energy is increasing significantly with supply voltage downscaling,

which makes it a significant part of the energy consumed by NTC designs. Therefore, over-

coming these challenges and improving the energy efficiency of NTC designs is a formidable

challenge requiring a synergistic approach combining different architectural and circuit-level

design techniques addressing storage elements and logic units [10, 12, 13].

1.1 Problem statement and objective

Although performance degradation is an essential issue in the near-threshold domain, variety

of circuit and architecture-level solutions, such as parallel architectures and voltage boosting,

have been proposed to fully or partially regain the performance reduction of NTC opera-

tion [12, 14]. For processors operating in the near-threshold domain, wide variation extent

and higher functional failure rate are posing a daunting challenge for guaranteeing timing cer-

tainty of logic blocks and stability of storage elements (caches and registers) [10]. Moreover,

due to the reduction in the noise margin of memory components, susceptibility to runtime

reliability issues, such as transistor aging and soft errors, is also increasing with supply volt-

age downscaling [13]. These challenges limit NTC potentials and force designers to add large

timing margins to ensure reliable operation of different architectural blocks, which imposes

significant performance and power overheads [15]. Therefore, analyzing and mitigating varia-

tion induced timing failure of pipeline stages and memory failures during early design phases

plays a crucial role in the design of resilient and energy-efficient microprocessor architectures.

Historically process variation has always been a critical aspect of semiconductor fabrica-

tion [16]. However, the lithography process of nanoscale technology nodes is worsening the

impact of process variation [17]. As a result, systematic and random process variations are pos-

ing a significant challenge [17]. Traditional methods to deal with variability issue mainly focus

on adding design margins [10]. Although such approaches are useful in the super-threshold

domain, they are wasteful and inadequate when the supply voltage is scaled down to the

near-threshold voltage domain [6]. As a result, such methods have significant performance

and power overheads when applied to NTC designs [6, 10]. Therefore, an effective variabil-

ity mitigation technique for NTC should address the variability effects at different levels of

abstraction, including device, circuit, and architecture-levels with the consideration of the

running workload characteristics.

Variation sensitivity of NTC also increases the functional failure rate, particularly it com-

promises the state of Static Random Access Memory (SRAM) cells by making them incline for

one state over the other [18, 15, 19]. Moreover, process variation increases the susceptibility

of SRAM cells to runtime failures such as aging and soft errors [18]. As a result, variation

induced functional failure of SRAM cells is increasing exponentially with supply voltage down-

scaling [18, 20]. For instance, a typical 65nm SRAM cell has a failure probability of ≈10−7

in the super-threshold voltage domain, and it is easily addressed using simple error correction

(ECC) mechanisms [21]. However, the failure rate increases by five orders of magnitude in the

near-threshold voltage domain (e.g., 500mV) [21, 20], in which ECC based solutions become

expensive. Therefore, robust cross-layer approaches, ranging from the architecture to circuit-

levels, are crucial to address the variation-induced functional failure of memory components,

3

1 Introduction

and improve the resiliency and energy efficiency of NTC designs [18].

The goal of this thesis is to improve the resiliency and energy efficiency of energy-constrained

pipelined NTC microprocessors by using cost-effective cross-layer solutions. This thesis presents

different circuit and architecture-level solutions for logic and memory components of a pipelined

processor addressing the three main NTC challenges, namely increase in sensitivity to process

variation, higher memory failure rate, and performance uncertainties. Additionally, this thesis

demonstrates how to exploit emerging computing paradigms, such as approximate computing,

in order to further improve the energy efficiency of NTC designs.

1.2 Thesis contributions

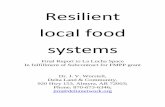

Different reliability and energy efficiency challenges of NTC designs are explored in this thesis.

To address the reliability and energy efficiency issues, a cross-layer NTC memory reliability

analysis framework consisting of accurate circuit-level models of aging, process variation, and

soft error is developed. The framework integrates the circuit-level models with architecture-

level memory organization, and all the way to the system level workload effects. The cross-

layer framework is useful to explore the impact of the reliability failure mechanisms, their

interdependence, and workload effects on the reliability of memory arrays. The framework

is also applicable for design space exploration as it helps to understand how the reliability

issues change from the super-threshold to the near-threshold voltage domain. In addition

to the framework, different architecture-level solutions to improve the resiliency and energy

efficiency of the logic units (pipeline stages) of NTC processors are also presented in this thesis.

These solutions include variation-aware pipeline stage balancing and fine-grained minimum

energy point operation. Moreover, this thesis explores the potentials of emerging computing

paradigms, such as approximate computing, for further energy efficiency improvement of NTC

designs. As shown in Figure 1.2, the overall contributions of this thesis are classified into three

main categories; 1) cross-layer memory reliability analysis and mitigation, 2) variation-aware

pipeline stage optimization, and 3) exploiting approximate computing for energy-efficient NTC

design.

1.2.1 Cross-layer memory reliability analysis and mitigation technique

It has been widely studied that functional failure of memory components is a crucial issue

in the design of resilient energy-constrained processors. In order to address this issue, a

cross-layer reliability analysis, and mitigation framework is developed in this thesis [18]. The

framework first determines the combined effect of aging, soft error, and process variation on the

reliability of NTC memories (e.g., caches and registers). Then, a voltage scalable mitigation

scheme is developed to design resilient and energy-efficient memory architecture for NTC

operation [22, 20]. The cross-layer reliability analysis framework is applicable to:

• To study the combined effect of aging, soft error, and process variation at different levels

of abstraction. Additionally, the framework is useful for circuit and architecture-level

design space exploration.

• To understand how the impact of reliability issues change from the super-threshold to

the near-threshold voltage domain.

4

1.2 Thesis contributions

• To estimate the memory failure rate, and error-free supply voltage downscaling potentials

of memory arrays for different organizations.

• To develop error-tolerant mitigation techniques addressing aging, and variation induced

failures of memory arrays operating in the near-threshold voltage domain.

1.2.2 Pipeline stage delay balancing and optimization techniques

To address variation-induced timing uncertainty of pipelined NTC processor, different architecture-

level optimization, and delay balancing techniques are presented in this thesis [23, 24].

• Variation-aware pipeline balancing [24]: variation-aware pipeline balancing tech-

nique is a design-time solution to improve the energy efficiency and performance of

pipelined processors operating in the near-threshold voltage domain. This technique

adopts an iterative variation-aware synthesis flow in order to balance the delay of pipeline

stages in the presence of extreme delay variation.

• Pipeline stage level Minimum Energy Point (MEP) design [23]: The increas-

ing demand for energy reduction has motivated various researchers to investigate the

optimum supply voltage for minimizing power consumption while satisfying the spec-

ified performance constraints. For this purpose, a fine-grained (pipeline stage-level)

energy-optimal supply and threshold voltage (Vdd, Vth) pair assignment technique for

an energy-efficient microprocessor pipeline design is developed in this thesis. The pipeline

stage-level MEP assignment is a design-time solution to optimize the pipeline stages in-

dependently by considering their structure and activity rate variation.

Gate delay analysis

Device level

Circuit level

Architecture level

System level

Aging modeling

and Vth shift

characterization

Vth variation

modeling and

characterization

Transistor model

SRAM modeling and characterization:

- SNM

- Critical charge

- SER

- Cache organization

- AVF analysis

- Signal probability analysis

- FIT rate analysis Ca

ch

e

Approximate circuit

synthesis and

optimization

- Pipeline stage balancing

- Synthesis and optimization

- Statistical timing analysis

Running workload

characterization and

trace extraction

Processor model and workload

characterstics

Memory reliability analysis

and mitigation

Pipeline stage delay balancing

and optimization

Emerging computing

paradigm

Figure 1.2: Thesis contribution summary of solutions addressing memory failure, variability effect on

pipeline stages, and exploiting emerging computing paradigm.

5

1 Introduction

1.2.3 Exploiting approximate computing

Approximate computing has emerged as a promising alternative for energy-efficient designs [25,

26]. Approximate computing exploits the inherent application error resiliency, to achieve a de-

sirable trade-off between performance/ energy efficiency, and output quality [27, 25, 28]. In

order to exploit the advantages of approximate computing, a framework leveraging the error

tolerance potential of approximate computing is developed to improve the energy efficiency of

NTC designs. In the framework, the control logic portion of a design is identified first and

is protected from approximation. Then, the approximable and non-approximable portions

of the data-flow portion are identified with the help of error propagation analysis tool. Af-

terward, a mixed-timing logic synthesis flow which applies a tight timing constraint for the

non-approximable portion, and a relaxed timing constraint for the approximable part is used

to synthesize the design.

1.3 Thesis outline

The remainder of the thesis is organized in five chapters. A short introduction of each chapter

is given as follows:

Chapter 2 presents a detailed analysis of near-threshold computing. The chapter motivates

the need for NTC operation first. Then, the merits and challenges of NTC are discussed in

detail. Besides, the chapter discusses the strengths and shortcomings of the state-of-the-art

techniques addressing NTC challenges.

Chapter 3 presents the reliability analysis and mitigation framework for near-threshold mem-

ories. The chapter first discusses the main reliability challenges of memory elements operating

at different supply voltage levels (from super-threshold to the near-threshold voltage domain).

Then, modeling and cross-layer analysis of the reliability failure mechanisms, and their in-

terdependence is presented. Based on the analysis an energy-efficient mitigation scheme is

presented to address the reliability failures of NTC memories. Finally, the chapter summary

is presented towards the end of the chapter.

Chapter 4 presents different architecture-level optimization techniques developed to improve

the energy efficiency, and balance the delay of the pipeline stages of pipelined NTC processors.

First, the chapter discusses the impact of process variation on the delay of pipeline stages.

Then, a variation-aware balancing technique is presented to balance the delay of the pipeline

stages in the presence of extreme variation effect. Additionally, since variation has a negative

impact on the leakage power of NTC designs, the chapter presents an analytical Minimum

Energy Point (MEP) operation technique that determines optimal supply and threshold voltage

pair assignment of pipeline stages.

Chapter 5 presents the framework to exploit the potentials of approximate computing for

energy-efficient NTC designs. The chapter first discusses the error tolerance nature of ap-

proximate computing for timing relaxation of NTC designs. Then, a mixed-timing synthesis

framework is presented to exploit the inherent error tolerance nature of approximate com-

puting. Finally, Chapter 6 presents the conclusions of the thesis, and points out potential

directions for future research.

6

2 Background and State-of-The-Art

Power consumption is one of the most significant roadblocks of technology downscaling ac-

cording to a recent report by the International Technology Roadmap for Semiconductors

(ITRS) [29]. Power delivery and heat removal capabilities are already limiting the perfor-

mance improvement of modern microprocessors, and will continue to restrict the performance

severely [30]. Although the dynamic power of transistors is decreasing with technology down-

scaling, the overall power density goes up due to the increase in leakage power leading to an

increase in the overall energy consumption of modern circuits.

The most effective knob to reduce the energy consumption of nanoscale microprocessors

is by lowering their supply voltage (Vdd). Although supply voltage downscaling results in

a quadratic reduction in the dynamic power consumption, the operating frequency is also

reduced and hence, the task completion latency increases significantly [31, 32]. Despite its

performance reduction, supply voltage downscaling has been widely adopted in various Dy-

namic Voltage and Frequency Scaling (DVFS) techniques. However, since it impedes the

execution time, it is not generally applicable for high-performance applications [32]. In order

to address this issue, parallel execution is used to counteract the downside of supply voltage

downscaling by increasing the instruction throughput. In the parallel execution approach, the

user program (task) is parallelized in order to run on multiple cores (CMP) [7]. However, the

increase in power density and heat removal complexity limits the number of cores that can be

active simultaneously, which eventually limits the maximum attainable throughput of modern

CMPs. Therefore, it is necessary for a paradigm shift in order to improve the performance and

energy efficiency of microprocessor designs in the nanoscale era [7]. To this end, aggressive

downscaling of the supply voltage to the near-threshold voltage domain has emerged as an

effective approach to improve the energy efficiency of nanoscale processors with an acceptable

performance reduction.

This chapter defines and explores near-threshold computing (aka NTC), a design paradigm in

which the supply voltage is set to be close to the threshold voltage of the transistor. Operating

in the near-threshold voltage domain retains much of the energy savings of supply voltage

downscaling with more favorable performance and variability characteristics. This energy

and performance trade-off makes near-threshold voltage operation applicable for broad range

of power-constrained computing segments from sensors to high-performance servers. This

chapter first presents a detailed discussion of NTC operation. Then, the main challenges

of NTC operation are discussed in detail followed by the discussion of the state-of-the-art

solutions to overcome the challenges of NTC operation.

7

2 Background and State-of-The-Art

2.1 Near-Threshold Computing (NTC) for energy-efficient designs

2.1.1 NTC basics

The power consumption of CMOS circuits has three main components; that is, dynamic power,

leakage power, and short circuit power [33]. Equation (2.1) shows the contribution of the three

power components to the overall power consumption of CMOS circuits.

Ptotal = Pdynamic + Pshort + Pleakage (2.1)

Dynamic power (Pdynamic) of CMOS devices mainly steams from the charging and discharging

of the internal node capacitance when the output of a CMOS gate is switching [34]. The

switching is a strong function of the input signal switching activity and the operating clock

frequency [35, 36]. Therefore, the dynamic power of CMOS circuits is modeled as shown in

Equation (2.2) [35, 36].

Pdynamic = α× Cload × V 2dd × f (2.2)

where α is the input signal switching activity, Cload is the load capacitance, Vdd is the supply

voltage, and f is the operating clock frequency.

Leakage power, the power dissipated due to the current leaked when CMOS circuits are

in an idle state, is also another critical component of the total power consumption of CMOS

circuits [37]. The leakage power is also dependent on the supply voltage of CMOS circuit and

it is expressed as shown in Equation (2.3) [37].

Pleakage = Ileak × Vdd (2.3)

where Ileak is the leakage current during the idle state of CMOS circuits.

Although dynamic and leakage powers are the dominant components of the CMOS circuit

power consumption, the short circuit power is also important component as it has a strong

dependency on the supply voltage [34]. However, since the dynamic power, which contributes

almost 80% of the total CMOS power consumption, has a quadratic dependency on the supply

voltage, reducing the supply voltage reduces the power consumption quadratically as shown

in Equation (2.2) [21, 24, 38]. Moreover, since the other components of total power (leakage

and short circuit powers) have a linear relation to the supply voltage, they are also reduced

linearly with supply voltage downscaling [10, 38].

Therefore, supply voltage downscaling is the most effective method to improve the energy ef-

ficiency of CMOS circuits. Since CMOS circuits can function properly at very low voltages even

when the Vdd drops below the threshold voltage (Vth), they provide huge voltage downscaling

potential to reduce the energy consumption. With such broad voltage downscaling potential,

it has become an important issue to determine the optimal operation region in which the power

consumption of CMOS circuits is reduced significantly with minimal impact on other circuit

characteristics [39]. Thus, scaling the supply voltage down to the near-threshold voltage regime

(Vdd ≈ Vth), known as NTC, provides more than 10× energy reduction at the expense of linear

performance reduction. However, further scaling the supply voltage down to the sub-threshold

8

2.1 Near-Threshold Computing (NTC) for energy-efficient designs

0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1.0 1.1Supply voltage [V]

0.0

0.2

0.4

0.6

0.8

1.0

Norm

alize

d en

ergy

per

cyc

le

LeakageDynamicTotal

Figure 2.1: Dynamic, leakage and total energy trends of supply voltage downscaling at different voltage

levels for an inverter chain implemented with saed 32nm library.

voltage domain (Vdd << Vth) reduces the energy saving potential due to the rapid increase

in the leakage power and delay of CMOS circuits. Therefore, in the sub-threshold voltage

domain, the increase in leakage energy eventually dominates any reduction in dynamic energy

which increases the overall energy consumption when compared to the near-threshold voltage

domain.

To demonstrate the benefits and drawbacks of supply voltage downscaling, the dynamic

energy and leakage energy characteristics of an inverter chain implemented using the saed

32nm library is extracted for different supply voltage values as shown in Figure 2.1. the figure

shows that the dynamic energy of the inverter chain decreases quadratically with supply voltage

downscaling. As shown in the figure, the leakage energy has a rather minimal contribution to

the total energy in the super-threshold voltage domain. However, its contribution increases

when the supply voltage is scaled down to the near-threshold voltage domain, and becomes

dominant in the sub-threshold voltage domain. As a result, the leakage increase in the sub-

threshold voltage domain nullifies the energy reduction benefits of supply voltage downscaling.

An essential consideration for operating in the near-threshold domain is that the optimal

operating point is usually set to be close the transistor threshold voltage; however, the exact

optimal point varies from design to design depending on several parameters. Determining

the exact optimal point is referred to as Minimum Energy Point (MEP) operation, and it is

discussed in detail in Chapter 4 of this thesis.

2.1.2 NTC application domains

Graphics based workload applications are among the primary beneficiaries of NTC operation.

Since graphics processors (e.g., image processing units, and DSP accelerators commonly found

9

2 Background and State-of-The-Art

in hand-held devices such as tablets and smart-phones) are inherently throughput focused, indi-

vidual thread performance has rather minimal importance [40]. Thus, highly parallel ultra-low

power processing units and DSP accelerators operating in the near-threshold voltage domain

can deliver the required performance and throughput with a limited energy budget [41]. As a

result, the battery supply duration of those hand-held devices can be improved significantly by

taking advantage of NTC operation. Similarly, Graphics Processing Units (GPUs) for mobile

devices which usually run at a relatively low frequency benefits from NTC operation [24, 6].

Moreover, since GPU’s are mostly power limited, an improvement in energy efficiency with the

help of NTC operation can be directly translated into performance gain, as NTC enables to

power on multiple GPU units at the same time, and improve the throughput without exceeding

the thermal constraints [7, 42].

Additionally, the inherent error tolerance nature of various workload applications can be ex-

ploited to further improve the energy efficiency with the help of emerging computing paradigms

such as approximate computing [25, 43, 27, 44]. Floating point intensive workload applications

are inherently tolerant to various inaccuracies [25, 43]. The inherent error tolerance nature of

float point intensive workload applications makes them the best fit for NTC operation with

less emphasis on computation accuracy in order to improve the energy efficiency [43]. The

issue of exploiting inherent error tolerance nature of applications with the help of approximate

computing for energy-efficient NTC designs is discussed in detail in Chapter 5 of this thesis.

With the increasing demand for Internet of Things (IoT) applications, sensor-based systems

consisting of single or multiple nodes are becoming abundant in our day-to-day life [45]. A

sensor node typically consists of data processing and storage unit, off-chip communication,

sensing elements, and a power source [46]. These devices are usually placed in remote areas

(eg., to collect weather data) or implanted in the human body, such as pacemakers, designed

to sense and adjust the rhythm of the heart. Since these devices are mainly powered through

a battery or harvested energy, energy reduction is crucial while performance is not a major

constraint [46, 45]. Therefore, energy efficiency is the critical limiting constraint in the design

of IoT based battery-powered sensor node devices. As a result, those devices benefits from

energy-efficient NTC design techniques.

The energy efficiency achieved by near-threshold voltage operation techniques could vary

from one application to another application. For instance, general purpose processors for laptop

and desktop computers are less likely to benefit from NTC operation due to their demand

for higher performance. Thus, while energy efficiency is essential for longer battery supply

duration of laptop computers, sacrificing performance is not desirable from their overall design

purpose [32]. In such application domains user programs are executed in a time sliced manner,

and hence, the responsiveness of the system is mostly determined by the latency [15, 32].

Therefore, NTC is not beneficiary for such application domains as the modern CPUs designed

for laptop and desktop applications usually need to run at higher frequencies (e.g., 2-4GHz).

One potentially way of adopting NTC for these application domains is basically by increasing

the throughput via parallel execution while the frequency is sacrificed [7, 12]. However, due to

the limitation in the parallel portion of workload applications (commonly knowns as Amdahl’s

law) the throughput improvement of parallel execution cannot fully regain the performance

reduction imposed by NTC operation. Moreover, these systems are extremely cost sensitive,

and hence, the extra area and power overheads of the additional units can nullify the benefits

gained through NTC based parallel execution.

10

2.2 Challenges for NTC operation

Similarly, high-performance processors designed for high-end server applications are not best

fit for NTC operation, mainly because several applications require high single-threaded per-

formance with a limited response time [47]. Massive server farms are often power hungry, and

if single thread performance is not critical, then the performance is traded-off for energy effi-

ciency improvement by using controlled supply voltage downscaling [48, 49]. Hence, massively

parallel workloads, like those targeted by low-power cloud servers (e.g., SeaMicro), benefits

from NTC operation [50]. However, they have several challenges, such as the need for software

redesign to handle clustered configurations, to be addressed in order to harness the full-fledged

NTC benefits.

2.2 Challenges for NTC operation

Although NTC is a promising way to provide better trade-off for performance and energy

efficiency, its widespread applicability is limited by the challenges that come along with it. The

main challenges for NTC operation are 1) performance reduction, 2) increase in sensitivity to

variation effects, and 3) higher functional failure rate of storage elements. Therefore, these

three key challenges must be addressed adequately in order to get the full NTC benefits.

2.2.1 Performance reduction

In smaller technology nodes, the transistor threshold voltage is scaled down slowly in order to

reduce the leakage power of the transistor. Therefore, it necessitates for the supply voltage

to be considerably higher than the transistor threshold voltage in order to achieve better

performance. The dwindling threshold voltage scaling slowed down the pace of supply voltage

downscaling, which eventually leads to energy inefficiency [51]. As a consequence, energy-

efficient operation in the NTC regime comes at the cost of performance reduction. To study the

performance reduction of NTC operation, it is crucial to investigate the delay characteristics of

CMOS circuits operating in the near-threshold voltage domain. For this purpose, the voltage

downscaling induced delay increase of an inverter chain implemented using the saed 32nm

library is studied for different supply voltage values (from super-threshold down to the sub-

threshold voltage domain) given in Figure 2.2. As shown in the figure, the delay increases

linearly when the supply voltage is scaled down to the near-threshold regime (1.1V to 0.5V).

With further downscaling to the sub-threshold domain, however, the circuit delay increases

exponentially due to the exponential dependence of the transistor drain current on the node

voltages (VGS and VDS) as shown in Equation (2.4) [52].

IDS = IS × eVGS−Vth

nVT ×(

1− e−VDSVT

)(2.4)

where Vth is the threshold voltage, VT is the thermal voltage, and n is a process dependent term

called slope factor, and it is typically in the range of 1.3-1.5 for modern CMOS processes [52].

VGS and VDS parameters are the gate-to-source and drain-to-source voltages, respectively.

The parameter IS is the specific current which is given by Equation (2.5) [52].

IS = 2nµCoxV2T

W

L(2.5)

11

2 Background and State-of-The-Art

0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1.0 1.1Supply voltage [V]

100

101

102

103

104

100

102

104

Dela

y in

[ns]

Figure 2.2: Supply voltage downscaling induced performance reduction (delay increase) of an inverter

chain implementation evaluated for wide operating voltage range.

where µ is the carrier mobility, Cox is the gate capacitance per unit area, and WL is the transistor

aspect ratio [52].

Although the performance reduction observed in NTC is not as severe as the reduction in the

sub-threshold voltage domain, it is still one of the formidable challenges for the widespread ap-

plicability of NTC designs. There have been several recent advances in circuit and architecture-

level techniques to regain some of the loss in performance [6, 15, 21]. Most of these techniques

mainly focus on massive parallelism with an NTC oriented memory hierarchy design. The

data transfer and routing challenges in these architectures is addressed by the use of 3D inte-

gration [15, 5]. Additionally, the use of deeply pipelined processor architectures can effectively

regain the performance loss of NTC design as it enables to increase the operating frequency [53].

However, the data dependency among instruction streams as well as conditional and uncon-

ditional branch instructions results in frequent pipeline flushing which eventually reduces the

overall throughput.

2.2.2 Increase sensitivity to variation effects

Another primary challenge for operating at reduced supply voltage values is the increase in

sensitivity to process variation which affects the circuit delay and energy efficiency signifi-

cantly [54, 55]. As a result, NTC designs display a dramatic increase in performance uncer-

tainty. Based on the nature of manufacturing, process variation is classified into two main

categories; local (intra-die) and global (inter-die) variations [17, 16, 56]. Local variation is

defined as the change (difference) in the parameters of the transistors in a single die, and it

can be systematic or random [17]. Random local variation due to Random Dopant Fluctua-

tions (RDF) and Line Edge Roughness (LER) results in variation in the transistor threshold

12

2.2 Challenges for NTC operation

voltage [16]. For nanoscale designs operating in the near-threshold voltage domain, the impact

of random local variation on circuit performance is becoming increasingly important [57, 58].

The primary reasons behind this trend are the reduction in transistor gate dimensions, reduced

pace of gate oxide thickness scaling, as well as dopant-ion fluctuations [17, 58].

To illustrate the impact of local process variation on the performance uncertainty of NTC

circuits, a Monte Carlo simulation based circuit delay analysis is performed for the b01 circuit

from ITC’99 benchmark suite [59]. The circuit has been synthesized with the Nangate 45nm

Open Cell Library [60] characterized for different supply voltages, ranging from 0.4V to 0.9V,

with Cadence Liberate Variety statistical characterization tool [61]. Monte Carlo analysis is

done using 1000 samples by considering local variation induced threshold voltage shift as shown

in Figure 2.3.

Figure 2.3 shows local process variation has minimum impact on the circuit delay when

operating at higher supply voltage values. However, the impact of local variation increases

exponentially when the supply voltage is scaled down to the near/sub-threshold voltage do-

mains. For example, the delay variation due to process variation alone increases by 6× from

≈20% in the super-threshold voltage domain (0.8V and above) to 120% at NTC (0.5V). The

delay variation even increases by 8× when the supply voltage is further scaled down to the

sub-threshold voltage domain.

Similar to the local variation, global (inter-die) variation affects the performance of dif-

ferent chips. Global variation is usually related to the process corners often called process

Monte Carlo [62]. Process corners are provided by the foundry, and are typically determined

by library characterization data [63]. Process corner is represented statistically (e.g., ±3σ) to

designate Fast (FF), Typical (TT), and Slow (SS) corners. These corners are used to represent

global process variation that designers must consider in their designs. Global (process corner)

0.4 0.5 0.6 0.7 0.8 0.9Vdd (V)

0

20

40

60

80

100

120

140

dela

y (n

s)

Mean ( delay)Standard deviation (3 delay)

Figure 2.3: Process variation induced performance/ delay variation of b01 circuit across wide supply

voltage range.

13

2 Background and State-of-The-Art

0.5

1

1.5

2

2.5

3

3.5

4

0.4 0.45 0.5 0.55 0.6 0.65 0.7 0.75 0.8 0.85 0.9

En

erg

y (

µJ)

Vdd

High VthRegular Vth

Low Vth

Figure 2.4: Energy-consumption characteristics of inverter chain implementation of three different pro-

cess corners; typical (TT) with regular Vth(RVT), slow (SS) with high Vth( HVT=RVT +

∆Vth), and fast (FF) with low Vth (LVT=RVT - ∆Vth), where ∆Vth=25mV.

variation causes significant change in the duty cycle and signal slew rate, and significantly

affect the energy efficiency of chips [63, 62]. In order to illustrate this scenario, the impact of

global process variation (process corner) on the energy consumption of inverter chain imple-

mented using three different process corners of the saed 32nm library is evaluated as shown

in Figure 2.4. The three process corners of the inverter chain are implemented using the SS,

TT, and FF process corners. In the TT corner implementation, both NMOS and PMOS tran-

sistor types are in a typical (T) process corner (Regular Vth). The FF corner implementation

represents the condition where both NMOS and PMOS transistors are faster, with lower Vth

value (Low Vth) when compared to the typical process corner (TT). Similarly, the SS process

corner implementation represents the condition where both NMOS and PMOS transistors are

slower, with higher Vth value (High Vth) than the TT process corner.

The energy consumption result given in Figure 2.4 shows that for a given activity rate, the

energy consumption varies for different process corners with different threshold voltage values.

For all implementations, the overall energy consumption reduces with a decrease in the supply

voltage (e.g., Vdd range of 0.9-0.65 V for SS corner (HVT)). When the supply voltage is reduced

further (e.g., Vdd≤0.6V for HVT), the propagation delay increases rapidly which increases the

overall energy consumption.

The increased local and global variation induced performance and energy fluctuation of

NTC circuits looms a daunting challenge that forces designers to passover low voltage design

entirely. In the super-threshold voltage domain, those variation issues are easily addressed by

adding conservative margins. For NTC designs, however, local and global process variations

reduce the performance of chips by up to 10×. Hence, conservative margin approaches are

inefficient for NTC operation due to the wide variation extent.

2.2.3 Functional failure and reliability issues of NTC memory components

The increase in sensitivity to process variation of NTC circuits affects not only the performance

but also functionality. Notably, the mismatch in device strength due to process variation

14

2.2 Challenges for NTC operation

affects the state of positive feedback loop based storage elements (SRAM cells) [21, 64, 65].

The mismatch in the transistors makes SRAM cells to incline for one state over the other, a

characteristic that leads to hard functional failure or soft timing failure [22, 13]. The variation-

induced functional failure rate of SRAM cells is more pronounced in the nanoscale era as highly

miniaturized devices are used to satisfy the density requirements [66]. SRAM cells mainly

suffer from three main unreliability sources: 1) aging effects, 2) radiation-induced soft error,

and 3) variation-induced functional failures [18]. The SRAM cell susceptibility to these issues

increases with supply voltage downscaling.

A) Aging effects in SRAM cells

Accelerated transistor aging is one of the main reliability concerns in CMOS devices. Among

various mechanisms, Bias Temperature Instability (BTI) is the primary aging mechanism in

nanoscale devices [67]. BTI gradually increases the threshold voltage of a transistor over a

long period, which in turn increases the gate delay [67]. BTI-induced threshold voltage shift

is a strong function of temperature as it has an exponential dependency. Hence, BTI-induced

aging rate is higher at high operating voltage and temperature values. In SRAM cells, BTI

reduces the Static Noise Margin (SNM)1 of an SRAM cell, and makes it more susceptible to

failures. BTI-induced SNM degradation is higher when the cell stores the same value for a

longer period (e.g., storing ‘0’ at node ‘A’ of the SRAM cell shown in Figure 2.5). Hence, the

effect of BTI on an SRAM cell is a strong function of the cell’s Signal Probability (SP)2.

VDD

WL

BLBL

P1

N1 N2

P2

GND

WL

VL = 1

VR = 0

NR

NLB

A

Figure 2.5: Schematic diagram of 6T SRAM cell, where WL= word-line, BL=bit-line and RL=read-line.

B) Process variation in SRAM cells

Variation in transistor parameters such as channel length, channel width, and threshold voltage

results in a mismatch in the strength of the transistors in an SRAM cell, and in extreme cases

it makes the cell to fail [6]. The variation-induced memory failure rate increases significantly

with supply voltage downscaling, for instance, SRAM cells operating at NTC (0.5V) have 5×higher failure rate than the cells operating at a nominal voltage [6]. Process variation affects

several aspects of SRAM cells, and the main variation-induced SRAM cell failures are:

Read failure: Read failure/ disturb is a phenomenon where the stored value is distorted

during read operation. For example, when reading the value of the cell shown in Figure 2.5,

1SNM is the minimum amount of DC noise that leads to a loss of the stored value2Probability of storing logic ‘1’ in the SRAM cell

15

2 Background and State-of-The-Art