
Thinking Skills and Creativity 13 (2014) 195–205

Contents lists available at ScienceDirect

Thinking Skills and Creativity

journal homepage: http://www.elsevier.com/locate/tsc

Objective measure of scientific creativity: Psychometric validity of the Creative Scientific Ability Test

M. Bahadir Ayas, Ugur Sak ∗

Gifted Education, Faculty of Education, Anadolu University, Eskisehir, Turkey

Article info

Article history:
Received 20 January 2014
Received in revised form 14 May 2014
Accepted 3 June 2014
Available online 16 June 2014

Keywords:
Scientific creativity
Creative Scientific Ability Test

Abstract

This article presents an overview of a new test of scientific creativity, “the Creative Scientific Ability Test (C-SAT)”, and of research carried out on its psychometric properties. The C-SAT measures potential for scientific creativity with fluency, flexibility and creativity components in hypothesis generation, experiment design and evidence evaluation tasks in five areas of science. In the current study, the test was administered to 693 sixth-grade students. The internal consistency reliability was found to be good (.87) and the interscorer reliability was excellent (.92). Confirmatory Factor Analysis confirmed the one-factor model solution for the C-SAT scores. The test had medium to high-medium correlations with math and science grades and with a mathematical ability test. Mathematically talented students scored higher on the C-SAT than did average students. The findings show that the C-SAT can be used as an objective measure of scientific creativity both in research and in the identification of scientifically creative students.

© 2014 Elsevier Ltd. All rights reserved.

1. Introduction

The idea of novelty, along with relevance, effectiveness, importance, and surprise, is central to the definition of creativity. Aligned with this idea, creativity usually is defined as the ability to generate ideas or products that are novel and useful (Boden, 2004; Cropley, 1999; Mayer, 1999; Piffer, 2012; Plucker, Beghetto, & Dow, 2004; Sak, 2004; Sternberg & Lubart, 1995). We would like to present, at the outset, the definition of scientific creativity that has guided our work on the Creative Scientific Ability Test (C-SAT). Our definition is inspired by general definitions of creativity: scientific creativity may be defined as the ability to generate novel ideas or products that are relevant to the context and have scientific usefulness or importance. According to this definition, a scientific idea that is extremely original but does not fit the context or is not useful at all cannot be considered creative. Thus, for a scientific idea to be accepted as creative, it needs to present some degree of originality and usefulness, and the degree of originality and usefulness determines the level of creativity of the idea.

The two constructs, scientific creativity and general creativity, have both similarities and differences. As the above definitions show, both processes should result in novel and useful products to be accepted as creative; that is, the definitions of the two constructs largely overlap. However, the two constructs differ in their theoretical foundations. The main difference between them lies in the knowledge component and domain-relevant skills. In general creativity, commonsense knowledge, which is simple, general and relatively unstructured, plays a major role. In contrast, in domain-specific creativity, domain-specific and technical knowledge is the foundation of creativity. In addition to being useful and novel, new ideas in science should be consistent with existing knowledge. The eminent physicist Feynman, for example, claims that “whatever we are allowed to create in science must be consistent with everything else we know. The problem of creating something new and consistent with everything is one of extreme difficulty” (Feynman, Leighton, & Sands, 1964, as cited in Tweney, 1996). Furthermore, creativity is considered to be domain general for everyday skills and to develop into domain-specific forms as domain-relevant skills and knowledge are acquired and used (Amabile, 1983; Kaufman & Baer, 2005; Plucker & Beghetto, 2004). Therefore, scientific creativity can be conceptualized as a domain-specific form of general creativity.

∗ Corresponding author. Tel.: +90 222 335 05 80/3591.
E-mail addresses: [email protected] (M.B. Ayas), [email protected] (U. Sak).

http://dx.doi.org/10.1016/j.tsc.2014.06.001
1871-1871/© 2014 Elsevier Ltd. All rights reserved.

In this article, we first discuss the need for a domain-specific test of scientific creativity and the construct of scientific creativity; we then describe the C-SAT for measuring scientific creativity and report research carried out on its validity. The C-SAT was developed based on a theoretical framework (Sak & Ayas, 2013) that draws on the Scientific Discovery as Dual Search model proposed by Klahr and Dunbar (1988) and Klahr (2000) and on research in the assessment of general creativity. The model consists of both domain-specific creativity skills and content-general creativity skills. We limited the skills included in the framework to the theoretically most important ones: because both general creativity and scientific creativity involve many thinking and problem-solving processes, assessing all of them would be practically impossible.

2. The need for domain-specific tests of creativity

Research on the assessment of creativity has been criticized for using creativity tests whose items are trivial and theoretically too general to measure such a multidimensional construct (Baer, 1994; Frederiksen & Ward, 1978; Hocevar, 1979a; Kaufman, Plucker, & Baer, 2008). Evidence for the domain specificity of creativity is found both in broadly defined cognitive domains (e.g., mathematical, linguistic, and musical) and in narrowly defined tasks or content domains (e.g., poetry writing, story writing, and collage making) (Baer, 1998). Some studies also found microdomain differences in creativity assessments even within the same domain (Baer, 1991, 1993, 1994; Runco, 1989). In their construct validity study of the Torrance Test of Creative Thinking, for example, Almeida, Prieto, Ferrando, Oliveira, and Ferrandiz (2008) found that the specific demands of each task were better identifiers than the cognitive processes related to creativity. Baer (1994) and Kaufman et al. (2008) further criticized the inefficiency of general creativity tests in predicting real-life creativity and suggested the use of domain-specific tests of creative ability. Baer (1991, 1993), for example, carried out a series of studies to investigate the generality and specificity of creativity, using poem, story, equation, mathematical word problem and collage tasks. The results of these studies showed correlations ranging from −0.05 to 0.08, supporting a task-specific view of creativity.

The apparent domain generality or specificity of creativity can also be a result of the types of assessment used to measure it. Plucker (1998) and Runco (1987), for example, argued that performance assessments produce evidence of task specificity, while creativity checklists and similar assessments suggest evidence of general creativity. Likewise, Kaufman and Baer (2002) used the term “garden-variety” creativity to highlight findings that self-report and personality studies suggest domain-general factors that influence creative performance in all domains, whereas performance assessments of creativity in different domains show little or no evidence of general factors. After reviewing studies of the content generality and task specificity of creativity, Kaufman and Baer concluded that the evidence for general creativity-relevant skills is rather weak.

Supporting the idea of domain-specific assessment of creativity, Frederiksen and Ward (1978) and Hu and Adey (2002) developed prototype tests of scientific creativity as criterion measures. Both tests were found to have satisfactory reliability and validity evidence in preliminary studies. Frederiksen and Ward used the situational tests approach, drawing on important aspects of a scientist's job, such as formulating hypotheses and evaluating proposals. Hu and Adey took a different approach, using the Scientific Structure Creativity Model to develop a scientific creativity test for secondary school students. The model includes both general creativity skills, such as fluency and flexibility, and science-related skills, such as imagination and thinking. Although this model has a strong theoretical background in general creativity, its framework lacks a theory of scientific creativity. For example, imagination and thinking are used in all kinds of creativity, and thinking is a very general psychological process that needs to be specified further for psychological measurement. Also, as both tests were prototypes, further studies were needed before they could be used in research and identification practices; no such studies have been reported. The C-SAT differs from both tests in its theoretical background and its scoring method, as discussed later.

3. Scientific creativity

Scientific creativity is viewed as the result of a convergence of a number of cognitive and noncognitive variables, such as intelligence, creativity-related skills, science-related skills, personality characteristics and motivation, interest, concentration, search for knowledge, and chance permutation of mental elements (Dunbar, 1999; Heller, 2007; Klahr, 2000; Puccio, 1991; Roe, 1952, 1961; Simonton, 1988; Subotnik, 1993; Torrance, 1992). Some researchers have even used the term scientific giftedness alongside scientific creativity (Innamorato, 1998; Shim & Kim, 2003). Scientific giftedness can be thought of as a domain-specific form of giftedness, like other domain-specific forms such as mathematical giftedness and giftedness in music and the arts. Emerging conceptions of giftedness also support the construct of domain-specific giftedness (see Conceptions of giftedness, Sternberg & Davidson, 2004). Gardner (1999), for example, postulated the existence of a number of intelligences, each associated with a domain, including a scientific or natural one. Renzulli (1986) also proposed that giftedness consists of an interaction of a cluster of above-average general or domain-specific skills, creativity-related skills and task commitment. That is, scientific creativity may be a form or subset of scientific giftedness. Because the scope of this study is scientific creativity, we do not discuss scientific giftedness further. As previous research suggests that creative potential is rather domain specific (Lubart & Guignard, 2004), the focus of this paper is the mental processes leading to scientific creativity that could be used as psychological constructs in measuring potential for scientific creativity.

The process of knowledge construction in science differs from that of other disciplines in that creative scientific ideas are built on vast theoretical, technical and experimental knowledge (Dunbar, 1999). More specifically, scientific creativity involves a whole array of activities, such as designing and performing experiments, inferring theories from data, modifying theories, inventing instruments (Kulkarni & Simon, 1988), formulating hypotheses, solving problems (Klahr & Dunbar, 1988; Klahr, 2000; Newell & Simon, 1972), working on the unexpected (Dunbar, 1993) and working with opposite ideas (Rothenberg, 1971, 1996).

Simon (1977) proposed that scientific creativity can be thought of as problem solving involving search in various problem spaces. A problem space includes all the states of a problem and all the operations people use to get from one state to another. Therefore, it is possible to understand scientific creativity by analyzing the types of representations and procedures people use to get from one state to another (Dunbar, 1999). Along similar lines, Klahr (2000) proposed a two-dimensional taxonomy of the major components of scientific creativity. One dimension represents domain-specific and domain-general knowledge. The other dimension includes the major processes involved in scientific discovery: generating hypotheses, designing experiments, and evaluating evidence. The acquisition of domain-specific knowledge is important because it influences not only the knowledge structure in the domain but also the processes used to generate and evaluate new hypotheses in that domain. During scientific discovery, domain-specific knowledge and domain-general heuristics guide the design of experiments, the selection or formulation of new hypotheses, and the verification of evidence.

3.1. Scientific creativity as dual search

Building on Simon's (1977) proposition mentioned previously, Klahr and Dunbar (1988) proposed that scientific creativity involves two primary spaces: a space of hypotheses and a space of experiments. Regarding search in the two spaces, they identified two different strategies for generating new hypotheses: (a) searching memory for possible hypotheses (hypothesis space) and (b) conducting experiments until a new hypothesis can be generated from the data (experiment space). Using this framework, Klahr and Dunbar (1988) and Klahr (2000) proposed that scientific discovery could be understood as a dual search (SDDS): search in the hypothesis space and search in the experiment space. The SDDS consists of basic components that guide search within and between the two spaces. The problems to be solved in each space, as well as the spaces themselves, differ considerably from each other in that they require different representations and operators. Search in the two spaces requires three major processes that work interdependently: hypothesis space search, experiment space search, and evidence evaluation. These three processes guide the entire process of scientific creativity, from the formulation of hypotheses, through experimental evaluation, to decisions to accept or reject hypotheses.

3.1.1. Hypothesis space (hypothesis generation)

The process of generating new hypotheses is a kind of problem solving (Klahr, 2000). Scientific creativity starts with an initial state that consists of some knowledge about a problem and hypotheses associated with the problem. Similarly, the initial state of a hypothesis space consists of some knowledge, while the goal state is a hypothesis that could explain that knowledge. After hypotheses are generated, they are checked for plausibility. New hypotheses stem from two different sources: prior knowledge and experimental data. The output of search in the hypothesis space is a completely specified hypothesis, which becomes an input for experiment design.

3.1.2. Experiment space (hypothesis testing)

Scientists search the experiment space to design and carry out appropriate experiments that could prove or disprove the hypotheses under consideration. According to the SDDS, testing a hypothesis involves designing an experiment appropriate to the hypothesis, making predictions, running the experiment, and matching the outcomes of the experiment to the predictions (Klahr, 2000). That is, hypothesis testing produces evidence in the experiment space for or against the hypothesis under test. This evidence is used as an input to the evidence evaluation process.

3.1.3. Evidence evaluation (verification)

In the evidence evaluation process, predictions articulated in hypotheses are compared with results obtained in experiments (Klahr, 2000). Evidence evaluation determines whether the evidence obtained from experiments is sufficient to accept or reject hypotheses. If the evidence is not sufficient, the process restarts from hypothesis space search or hypothesis testing. That is, the evidence evaluation process mediates search in both the hypothesis space and the experiment space and assesses the fit between theory and evidence.
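To make the control flow of the SDDS more concrete, the toy sketch below alternates the three processes described above: a dictionary of candidate rules stands in for the hypothesis space, a scan over possible experimental settings stands in for experiment space search, and evidence evaluation discards hypotheses whose predictions fail. The hidden rule, the candidate hypotheses and the function names (`run_experiment`, `choose_experiment`, `dual_search`) are hypothetical illustrations; this is not Klahr and Dunbar's implementation and is not part of the C-SAT.

```python
from typing import Callable, Dict, Iterable, List, Optional

Hypothesis = Callable[[int], bool]


def run_experiment(setting: int) -> bool:
    """Hypothetical toy world: the hidden regularity the 'scientist' must discover."""
    return setting % 3 == 0


def choose_experiment(live: Dict[str, Hypothesis],
                      candidates: Iterable[int]) -> Optional[int]:
    """Experiment space search: find a setting on which live hypotheses disagree."""
    for setting in candidates:
        predictions = {hypothesis(setting) for hypothesis in live.values()}
        if len(predictions) > 1:  # this experiment can discriminate
            return setting
    return None


def dual_search(hypotheses: Dict[str, Hypothesis], candidates: List[int]) -> List[str]:
    """Alternate experiment design, prediction and evidence evaluation."""
    live = dict(hypotheses)  # hypothesis space: rules not yet rejected
    while len(live) > 1:
        setting = choose_experiment(live, candidates)
        if setting is None:
            # No discriminating experiment is left. A fuller model would return to
            # hypothesis generation here; this toy simply stops.
            break
        outcome = run_experiment(setting)
        # Evidence evaluation: keep only hypotheses whose prediction matched the outcome.
        live = {name: h for name, h in live.items() if h(setting) == outcome}
    return list(live)


hypotheses: Dict[str, Hypothesis] = {
    "even": lambda s: s % 2 == 0,
    "multiple_of_3": lambda s: s % 3 == 0,
    "greater_than_4": lambda s: s > 4,
}
print(dual_search(hypotheses, list(range(1, 10))))  # ['multiple_of_3']
```

Choosing only experiments on which the surviving hypotheses disagree mirrors the SDDS point that an experiment is designed with the hypotheses under consideration in mind, rather than run at random.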

198 M.B. Ayas, U. Sak / Thinking Skills and Creativity 13 (2014) 195–205

C-SAT

Areas of Science

Hypothesis Generation

Fluency Flexibility Creativity

Areas of Science

Hypothesis Testing (E. Design)

Fluency Flexibility Creativity

Areas of Science

Evidence Evaluation

Fluency Flexibility Creativity

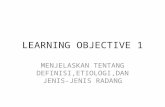

Fig. 1. The theoretical structure of the C-SAT.

In conclusion, according to the SDDS, scientific creativity involves an interaction of hypothesis generation, experiment design, and evidence evaluation. These three processes coordinate search in the hypothesis and experiment spaces. Furthermore, Dunbar (1993) found that one of the key aspects of making a scientific discovery is switching from the goal of testing a favored hypothesis to the goal of accounting for unexpected findings. Being able to focus on unexpected findings is thus a key component of scientific discovery. Conversely, focusing on only one hypothesis while ignoring other possible hypotheses can produce a kind of creativity block. This type of thinking can mislead the design of experiments, the formulation of theories and the interpretation of data (Dunbar, 1999). That is, the ability to produce many hypotheses for a problem situation can be one of the key characteristics of scientific creativity.

4. An overview of the Creative Scientific Ability Test

The literature discussed so far shows that scientific creativity can be viewed as a process of interaction among general-creativity skills, science-related skills and scientific knowledge in one or more areas of science. Pioneering work on divergent thinking and its measurement (Guilford, 1950, 1956, 1967; Torrance, 1962, 1988) and the Scientific Discovery as Dual Search model (Klahr & Dunbar, 1988; Klahr, 2000) provided a general framework for the development of the Creative Scientific Ability Test (C-SAT). The C-SAT has three dimensions: general-creativity skills, science-related skills, and areas of science (Sak, 2010; Sak & Ayas, 2013). Specifically, general-creativity skills include ideational fluency, ideational flexibility, and composite creativity. Science-related skills are composed of hypothesis generation, experiment design, and evidence evaluation. Science areas cover biology, chemistry, physics, ecology, and interdisciplinary science. The dimensions of the C-SAT are shown in Fig. 1. We did not include a distinct ideational originality score in the C-SAT because its scoring has been found to be rather problematic and because originality has been found to correlate very highly with fluency (Mouchiroud & Lubart, 2001).

4.1. Subtests of the C-SAT

The purpose of the C-SAT is to measure potential for scientific creativity in 6th through 8th grade students. It measures fluency, flexibility and creativity in hypothesis generation, experiment design and evidence evaluation in five areas of science, with one subtest from each area: the fly experiment (biology), the change graph (interdisciplinary), the sugar experiment (chemistry), the string experiment (physics), and the food chain (ecology). Each subtest consists of one open-ended problem. Two subtests contain hypothesis generation problems, two contain experiment design problems, and one contains an evidence evaluation problem.
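The subtest layout described above can be summarized as a small lookup table. The sketch below uses a hypothetical `Subtest` record type; the subtest names, areas and processes are taken from the text, and each subtest yields the three score types listed.

```python
from collections import Counter
from typing import NamedTuple


class Subtest(NamedTuple):
    """Hypothetical record type describing one C-SAT subtest."""
    number: int
    name: str
    area: str
    process: str


# The five subtests as described in Sections 4.1.1 to 4.1.5.
SUBTESTS = [
    Subtest(1, "fly experiment", "biology", "hypothesis generation"),
    Subtest(2, "change graph", "interdisciplinary", "hypothesis generation"),
    Subtest(3, "sugar experiment", "chemistry", "experiment design"),
    Subtest(4, "string experiment", "physics", "experiment design"),
    Subtest(5, "food chain", "ecology", "evidence evaluation"),
]
SCORE_TYPES = ("fluency", "flexibility", "creativity")

# Two hypothesis generation, two experiment design, one evidence evaluation subtest.
process_counts = Counter(s.process for s in SUBTESTS)
print(process_counts)
print(len(SUBTESTS) * len(SCORE_TYPES))  # 15 subtest-level scores
```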

4.1.1. Subtest 1: the fly experiment

The purpose of this task is to measure fluency, flexibility and creativity in hypothesis generation in the area of biology. This problem presents a figure of an experiment designed by a researcher. Students are required to generate as many hypotheses as they can think of that the researcher might test.

4.1.2. Subtest 2: the change graph

This task measures fluency, flexibility, and creativity in hypothesis generation in interdisciplinary science. The subtest presents a graph of changes in the amounts of two variables and an effect that starts these changes. Students are asked to think of three variables that fit the graph.

4.1.3. Subtest 3: the sugar experiment

This task measures fluency, flexibility, and creativity in experiment design (hypothesis testing) in the area of chemistry. A figure of an experiment designed by a researcher and a graph showing the researcher's hypothesis are presented in this problem. Students are required to think of changes that should be made in the experiment in order for the researcher to prove the hypothesis.


4.1.4. Subtest 4: the string experiment

The purpose of this task is to measure fluency, flexibility, and creativity in experiment design (hypothesis testing) in the area of physics. A figure of a force experiment is presented in this problem. Students are asked to think of changes that should be made in the experiment to achieve a goal.

4.1.5. Subtest 5: the food chain

This subtest measures fluency, flexibility, and creativity in evidence evaluation in the area of ecology. The problem presents a figure of a food chain and a graph of the change in this food chain. Students are asked to think of reasons for the change.

4.2. Scoring

Three types of scores are obtained from the hypothesis generation, experiment design and evidence evaluation subtests. That is, the test yields a fluency, flexibility, and creativity score for each subtest and a total fluency, total flexibility and total creativity score for the whole test. Fluency is the number of correct responses generated for each problem. Flexibility is the number of conceptual categories among the responses. Contrary to traditional practices that sum fluency and flexibility scores to calculate total creativity, the C-SAT creativity score is calculated using the Creativity Quotient (CQ) formula proposed by Snyder, Mitchell, Bossomaier and Pallier (2004, p. 416):

$$CQ = \log_2\{(1 + u_1)(1 + u_2)\cdots(1 + u_c)\}$$

where $c$ is the number of conceptual categories among the correct responses and $u_i$ is the number of correct responses in category $i$.

Scoring the fluency component of creativity is a long-standing problem. In fact, adding one point to the total creativity score for each correct answer as a fluency score has been strongly critiqued, and, as a result, fluency is sometimes criticized as a confounding variable (Mouchiroud & Lubart, 2001). A strong belief is that ideas in distinctly different categories should be weighted more than those that fall in the same category (Getzels & Jackson, 1962; Guilford, 1959). The C-SAT scoring procedures generate distinct scores for fluency, flexibility, and creativity. The fluency and flexibility scores are used for research purposes, whereas the composite creativity score can be used both for research and for the identification of students with high potential for scientific creativity.
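The scoring rules above can be sketched as follows, assuming a trained scorer has already judged which responses are correct and assigned each one to a conceptual category (the answer and category labels below are hypothetical). Because the log of a product equals the sum of the logs, CQ is computed as the sum of log2(1 + u_i). Summing subtest scores into totals is an assumption inferred from Table 1, where the total estimated maxima (267, 42 and 120.60) equal the sums of the subtest values; the aggregation rule is not stated explicitly in the text.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple

Scores = Tuple[int, int, float]  # (fluency, flexibility, creativity)


def score_subtest(responses: Iterable[Tuple[str, str]]) -> Scores:
    """Score one subtest from (answer, category) pairs already judged correct."""
    per_category = Counter(category for _, category in responses)  # u_i per category
    fluency = sum(per_category.values())  # number of correct responses
    flexibility = len(per_category)       # number of conceptual categories
    # CQ = log2[(1 + u_1)(1 + u_2)...(1 + u_c)], computed as a sum of logs
    creativity = sum((math.log2(1 + u) for u in per_category.values()), 0.0)
    return fluency, flexibility, creativity


def total_scores(subtests: List[Scores]) -> Scores:
    """Assumed aggregation: each total is the sum of the subtest scores."""
    fluency = sum(s[0] for s in subtests)
    flexibility = sum(s[1] for s in subtests)
    creativity = sum(s[2] for s in subtests)
    return fluency, flexibility, creativity


# Four correct answers in one category vs. four correct answers in four categories
same = score_subtest([("a1", "c1"), ("a2", "c1"), ("a3", "c1"), ("a4", "c1")])
spread = score_subtest([("a1", "c1"), ("a2", "c2"), ("a3", "c3"), ("a4", "c4")])
print(same)    # fluency 4, flexibility 1, CQ = log2(5), about 2.32
print(spread)  # (4, 4, 4.0)
```

Both response sets have the same fluency, but the set spread over four categories earns a higher CQ. This is how the formula weights ideas in distinct categories more heavily than ideas in the same category, without adding a separate point for every correct answer.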

4.3. Research on the Creative Scientific Ability Test

Psychometric properties of the C-SAT were investigated first in a number of pilot studies and then in a larger study before the current study (Sak & Ayas, 2013; Sak, 2010). In the first pilot study, the test was administered to 71 students who were identified as gifted in mathematics (40 sixth graders, 31 seventh graders). In this study, the internal consistency of the test was found to be .76 and the interscorer reliability for the subtests ranged from .91 to .97. Subtest-total test correlations ranged from .50 to .61. Also, seventh-grade gifted students scored significantly higher than sixth-grade gifted students. In the second pilot study, the C-SAT was administered to 128 sixth-grade students. In this study, interscorer reliability was found to be .95 and internal consistency was .86. Test–retest reliability for the subtests ranged from .86 to .89 (N = 24 gifted students). In the larger study (288 sixth-grade students), exploratory factor analysis showed that a one-factor solution best explained the factorial structure of the C-SAT. The interscorer reliability of the test ranged from .94 to .96 and internal consistency was found to be .85. Students' performance on the C-SAT correlated significantly with their performance on a mathematical ability test.

Although the C-SAT theoretically has three dimensions, previous research found only one factor. This is not an unexpected finding, because the dimensions of the C-SAT are overlapping constructs used together in the scientific creativity process. Prior studies yielded some evidence that the C-SAT could be a psychometrically sound measure of scientific creativity (Sak & Ayas, 2013). This evidence encouraged us to carry out further research on the C-SAT with a larger sample. We were particularly motivated to replicate some of the prior research, to confirm the one-factor structure (scientific creativity) of the test using Confirmatory Factor Analysis, and to extend our research program on the C-SAT to obtain stronger evidence for the validity and reliability of the test, so that it could be used in research, identification and decision-making practices. Such work required a larger and more heterogeneous sample than those used in prior studies and more criterion variables against which the C-SAT could be compared. In the rest of this article, we present this study in detail.

5. Method

5.1. Participants

Participants included 693 sixth-grade students (female = 326; male = 367) who applied to an education program for gifted students at a university in a major city in central Turkey. Of the participants, approximately the top 3% (N = 22) were identified as talented in mathematics based on their scores on the Test of Mathematical Talent during the identification phase of the education program. Having an average group and a mathematically talented group allowed us to compare their performance on the C-SAT to examine its discriminant validity. Students talented in mathematics were purposefully selected for the discriminant analysis because science and mathematics are thought to be related disciplines; mathematical physics is a prime example of this relationship. Some of the participants were attending public schools, whereas others were attending private schools at the time of testing. Application to the program was free, and no prescreening criteria or nomination were required to take the program's admission tests; therefore, any student who was willing to participate in the program had the opportunity to apply.

5.2. Instruments

5.2.1. Mathematics and science grades

Students' fall-semester grades in science and mathematics courses in 6th grade were used as two criteria. Students' science grades ranged from 1 to 5 out of 5, with a mean of 4.67 and a standard deviation of .62. Their mathematics grades also ranged from 1 to 5 out of 5, with a mean of 4.62 and a standard deviation of .73.

5.2.2. The Test of Mathematical Talent (TMT)

The purpose of the TMT is to identify 6th grade students who have high ability in mathematics. It is a multiple-choice, paper-and-pencil test. Its psychometric properties were investigated in a number of studies, and it was found to have good validity and reliability evidence (Sak et al., 2009; Sengil, 2009). The test has a mean of 100 and a standard deviation of 15. In the current study, students' scores on the test ranged from 68 to 158, with a mean of 99, so the group was rather heterogeneous in mathematical ability. Students' scores on the TMT were used to examine the C-SAT's criterion and discriminant validity.

5.3. Procedure

Students' science and mathematics grades were collected when they applied to the education program. The Test of Mathematical Talent (TMT) and the C-SAT were administered to all students in two separate sessions on the university's campus on the same day. The TMT was administered first; this session lasted 80 min. After a 40-min break, students took the C-SAT in a session that lasted 40 min. The TMT answers were scored by a computer program, and the C-SAT answers were scored using the C-SAT standard scoring procedures.

6. Results

6.1. Item analysis

Item analysis was carried out to determine the maximum correct scores generated by students for fluency, flexibility and creativity for each subtest and for the totals, and to examine inter-item and item-total test correlations, as well as the means and standard deviations of the test scores. Further, the group was divided into high (top 27%), middle (middle 46%) and low (bottom 27%) scorers based on their total creativity scores, and the groups' subtest scores were compared to obtain evidence of item discrimination.
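The 27/46/27 split used for item discrimination can be sketched as below. The rounding of the group size and the handling of tied scores at the cut points are assumptions, since the text does not describe them; `discrimination_groups` is a hypothetical helper that returns student indices for each group.

```python
from typing import Dict, List, Sequence


def discrimination_groups(scores: Sequence[float], fraction: float = 0.27) -> Dict[str, List[int]]:
    """Split students into top, middle and bottom groups by total creativity score.

    Group size is round(n * fraction); ties at the cut points are broken by the
    original order of the students (Python's sort is stable), an assumption.
    """
    n = len(scores)
    k = round(n * fraction)  # size of the top and bottom groups
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    return {
        "top": order[:k],
        "middle": order[k:n - k],
        "bottom": order[n - k:],
    }


groups = discrimination_groups([float(i) for i in range(693)])
print({name: len(members) for name, members in groups.items()})
```

With 693 students this rule gives groups of 187, 319 and 187, that is, about 27%, 46% and 27% of the sample.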

6.1.1. Descriptive findings

Table 1 shows means, standard deviations, and maximum scores for the group, as well as the mean scores of the top 10% scorers. The maximum score that could possibly be generated for each item is presented in the column Estimated maximum correct score. The maximum possible number of correct answers (fluency) across the five subtests is 267, whereas the student with the highest fluency score generated 35 different correct answers. The maximum possible number of categories for total flexibility is 42, and the maximum possible total creativity score is 120.60. The student with the highest flexibility score generated 18 correct categories, and the student with the highest creativity score received 23.24 points for total creativity. These results show that the C-SAT items are not too easy and are sufficiently open-ended and challenging for 6th graders.
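The estimated maximum creativity scores in Table 1 appear to follow from the CQ formula if the estimated maximum number of correct responses is spread as evenly as possible over the estimated maximum number of categories; for a fixed total, an even spread maximizes the product of (1 + u_i). This is our reading of the reported numbers, not a procedure stated by the authors. The reported values for subtests 1, 3 and 4 (28, 25.20, 22.40) also look as if log2 7 was rounded to 2.8, so the exact values computed below differ slightly in the decimals. `max_cq` and `ESTIMATED` are hypothetical names.

```python
import math


def max_cq(responses: int, categories: int) -> float:
    """Largest CQ reachable with `responses` correct answers in `categories` categories.

    Responses are spread as evenly as possible (the remainder one per category),
    which maximizes the product of (1 + u_i) for a fixed sum of u_i.
    """
    base, extra = divmod(responses, categories)
    counts = [base + 1] * extra + [base] * (categories - extra)
    return sum(math.log2(1 + u) for u in counts)


# (estimated maximum fluency, estimated maximum flexibility) per subtest, from Table 1
ESTIMATED = {1: (60, 10), 2: (56, 8), 3: (54, 9), 4: (48, 8), 5: (49, 7)}

for subtest, (responses, categories) in ESTIMATED.items():
    print(subtest, round(max_cq(responses, categories), 2))
print("total", round(sum(max_cq(r, c) for r, c in ESTIMATED.values()), 2))
```

Subtests 2 and 5 reproduce the reported 24 and 21 exactly, since 56/8 and 49/7 divide evenly and log2 8 = 3.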

6.1.2. Item correlations

Pearson product-moment correlation analysis was used to examine inter-item and item-total test correlations. Inter-item correlations among subtests ranged from .11 to .34 (Table 2). The lowest correlation was found between subtest 1 flexibility and subtest 4 flexibility, and the highest between subtest 4 fluency and subtest 5 fluency. Inter-item correlations within subtests ranged from .80 to .99. Item-total test correlations ranged from .49 to .70. All correlations were significant at the .01 level.

6.1.3. Item discrimination

A one-way between-groups multivariate analysis of variance (MANOVA) was conducted to investigate item discrimination, with the subtest scores combined as the dependent variables. The preliminary analysis indicated unequal error variances across the variables. Therefore, we set an initial alpha level of .01 for statistical significance, as recommended by Tabachnick & Fidell (1999); the multivariate result, however, was significant at p < .001, well below this threshold. The analysis indicated a statistically significant difference among the groups (top 27%, middle 46%, and bottom 27%) on the combined score: F(10, 683) = 190.61, p < .001; Wilks’ Lambda = .18; partial eta squared = .58. In addition, follow-up pairwise comparisons showed that the top group scored significantly higher than the middle and the bottom groups on all the subtests, and that the middle group scored significantly higher than the bottom group on all the subtests (p < .001).

Table 1 Descriptive findings by subtest and subscore.

Subtest     Subscore      Max.*    Mean    SD      Est. max.**   Mean of top 10%***
Subtest 1   Fluency       12       1.45    1.84    60            3.72
            Flexibility   5        .79     .87     10            1.68
            Creativity    7.32     1.11    1.25    28            2.61
Subtest 2   Fluency       7        1.04    1.27    56            2.39
            Flexibility   4        .75     .83     8             1.54
            Creativity    5        .91     1.02    24            1.96
Subtest 3   Fluency       9        2.01    2.08    54            4.62
            Flexibility   7        1.48    1.45    9             3.12
            Creativity    7.16     1.77    1.76    25.20         3.93
Subtest 4   Fluency       10       2.08    1.80    48            4.47
            Flexibility   6        1.52    1.14    8             2.97
            Creativity    7.16     1.81    1.43    22.40         3.72
Subtest 5   Fluency       13       2.63    2.57    49            5.98
            Flexibility   5        1.35    1.15    7             2.61
            Creativity    6.90     1.93    1.69    21            4.04
Total       Fluency       36       9.24    6.14    267           21.19
            Flexibility   18       5.91    3.33    42            11.93
            Creativity    23.24    7.54    4.55    120.60        16.28

* Maximum correct score generated by students.
** Estimated maximum correct score that can be generated for each item.
*** Students who scored in the top 10% on the C-SAT.
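Two steps of the item-discrimination analysis can be sketched in a few lines. The grouping function assumes the 27/46/27 split is made on the total C-SAT score, with ties broken by input order; the text does not specify either detail, and the function names are hypothetical. The effect-size helper uses one common definition of multivariate partial eta squared, 1 − Λ^(1/s) with s = min(number of dependent variables, groups − 1), which reproduces the reported .58 from Wilks' Λ = .18 with five subtests and three groups.

```python
def split_27_46_27(total_scores):
    """Split students into bottom 27%, middle 46% and top 27% by total score.

    Returns three lists of student indices. Ties at a cut-off are broken by
    input order, an arbitrary choice made for this sketch.
    """
    n = len(total_scores)
    order = sorted(range(n), key=lambda i: total_scores[i])
    k = round(0.27 * n)
    return order[:k], order[k:n - k], order[n - k:]


def partial_eta_squared_from_wilks(wilks_lambda, n_dependent, n_groups):
    """Multivariate partial eta squared: 1 - Lambda ** (1 / s), s = min(p, g - 1)."""
    s = min(n_dependent, n_groups - 1)
    return 1.0 - wilks_lambda ** (1.0 / s)


# Placeholder scores only, to show the group sizes for n = 693.
bottom, middle, top = split_27_46_27(list(range(693)))
print(len(bottom), len(middle), len(top))  # 187 319 187
print(round(partial_eta_squared_from_wilks(0.18, n_dependent=5, n_groups=3), 2))  # 0.58
```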

6.2. Reliability

Internal consistency (Cronbach’s alpha) and interscorer reliability were investigated. The Cronbach’s alpha coefficient was .87. For interscorer reliability, approximately 14% of the students’ papers (n = 164) were randomly selected. Two independent scorers trained in the C-SAT scoring procedures scored these papers using the C-SAT standard scoring procedures. Interscorer reliability ranged from .87 to .96 for the five subtests and for total fluency, total flexibility and total creativity, with a mean of .92 (see Table 3). Alpha levels from .80 to .89 are considered good, and those of .90 and above excellent (George & Mallery, 2003).

Table 2 Inter-item and item-total test correlations.

Score                       1    2    3    4    5    6    7    8    9    10   11   12   13   14   15   16   17
Subtest 1 fluency (1)       –
Subtest 1 flexibility (2)   .79
Subtest 1 creativity (3)    .96  .92
Subtest 2 fluency (4)       .24  .20  .24
Subtest 2 flexibility (5)   .22  .17  .22  .87
Subtest 2 creativity (6)    .24  .19  .24  .98  .96
Subtest 3 fluency (7)       .21  .16  .20  .25  .25  .26
Subtest 3 flexibility (8)   .17  .15  .17  .24  .25  .25  .93
Subtest 3 creativity (9)    .20  .16  .19  .25  .25  .26  .99  .98
Subtest 4 fluency (10)      .19  .12  .16  .20  .17  .19  .27  .24  .27
Subtest 4 flexibility (11)  .18  .11  .15  .20  .18  .20  .27  .25  .27  .89
Subtest 4 creativity (12)   .19  .12  .16  .20  .19  .20  .28  .26  .28  .98  .96
Subtest 5 fluency (13)      .26  .24  .27  .28  .27  .28  .27  .25  .26  .34  .31  .33
Subtest 5 flexibility (14)  .21  .22  .22  .23  .21  .23  .19  .18  .19  .30  .30  .31  .80
Subtest 5 creativity (15)   .25  .24  .26  .27  .26  .27  .25  .23  .25  .33  .31  .33  .96  .93
Total fluency (16)          .59  .47  .56  .54  .49  .54  .64  .59  .63  .62  .58  .62  .74  .60  .71
Total flexibility (17)      .47  .49  .50  .52  .54  .55  .67  .69  .69  .59  .63  .62  .62  .64  .66  .91
Total creativity (18)       .55  .49  .55  .55  .52  .55  .67  .65  .68  .62  .61  .63  .70  .63  .70  .98  .97

** p < .001 (applies to every coefficient in the table).


Table 3 Interscorer reliability coefficients (n = 164).

Subtests                 Agreement coefficient
Subtest 1 creativity     .90
Subtest 2 creativity     .87
Subtest 3 creativity     .96
Subtest 4 creativity     .92
Subtest 5 creativity     .91
Total fluency            .93
Total flexibility        .92
Total creativity         .93
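The reliability figures in Section 6.2 can be illustrated with a short sketch. The Cronbach's alpha function uses the textbook formula α = k/(k − 1) · (1 − Σ item variances / total-score variance); it is a generic implementation, not the authors' software, and the helper names are hypothetical. The second part transcribes Table 3 and checks the reported range (.87 to .96) and mean (.92), labelling coefficients only with the two George and Mallery (2003) bands quoted in the text.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha for k lists of item scores from the same n respondents.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def reliability_label(coefficient):
    """Encodes only the two bands quoted in Section 6.2 (George & Mallery, 2003)."""
    if coefficient >= 0.90:
        return "excellent"
    if coefficient >= 0.80:
        return "good"
    return "below .80 (no label given in the text)"


# Interscorer agreement coefficients transcribed from Table 3.
table_3 = {
    "Subtest 1 creativity": 0.90,
    "Subtest 2 creativity": 0.87,
    "Subtest 3 creativity": 0.96,
    "Subtest 4 creativity": 0.92,
    "Subtest 5 creativity": 0.91,
    "Total fluency": 0.93,
    "Total flexibility": 0.92,
    "Total creativity": 0.93,
}
values = list(table_3.values())
print(min(values), max(values), round(mean(values), 2))  # 0.87 0.96 0.92
print(reliability_label(0.87))  # good (the reported alpha)
```

Sample variances are used throughout; because the same definition appears in the numerator and denominator, switching to population variances would not change alpha.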

Table 4 Correlations between the C-SAT and the test of mathematical ability, mathematics grade and science grade.

C-SAT scores        Test of Mathematical Talent   Mathematics grade   Science grade
Total fluency       .59*                          .31*                .35*
Total flexibility   .50*                          .31*                .36*
Total creativity    .52*                          .31*                .36*

* p < .001 (2-tailed).

6.3. Validity

6.3.1. Construct validity

In prior research, the C-SAT was found to have one factor. In this study, Confirmatory Factor Analysis (CFA) with maximum likelihood estimation was conducted to test the fit of the one-factor model. Because the fluency, flexibility and creativity subscores are obtained from the same set of responses in each subtest, the five subtest scores of the C-SAT were used in the analysis. The results showed a non-significant χ² value (χ² = 10.76; df = 5; p > .05). Because χ² is sensitive to sample size and the sample was large (n = 693), the ratio χ²/df = 2.15 was also examined; this value is accepted as indicating a good fit (Kline, 2005). The root mean square error of approximation (RMSEA) was 0.041 and the standardized root mean square residual (SRMR) was 0.024. These values also met the good-fit criterion (≤ 0.05) (Brown, 2006). The Goodness-of-Fit Index (GFI) was 0.99 and the Adjusted Goodness-of-Fit Index (AGFI) was 0.98. These values also met the good-fit criteria (0.95 ≤ GFI and AGFI ≤ 1.00) (Hooper, Coughlan, & Mullen, 2008). Further, the relative fit indexes were obtained, and all were between 0.95 and 1.00, meeting the criteria for good fit (Hu & Bentler, 1999). Overall, the CFA findings confirmed the one-factor model solution for the C-SAT scores.
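The χ²-based fit statistics reported above can be recomputed from χ² = 10.76, df = 5 and n = 693. The sketch below uses the standard RMSEA formula with N − 1 in the denominator (some programs use N instead) and a closed-form chi-square tail probability for integer degrees of freedom, so no statistics library is needed. It is an illustrative check under those assumptions, not the software the authors used; SRMR, GFI and AGFI require the full covariance matrices and are not reproduced.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df >= 1.

    Uses Q(nu + 2) = Q(nu) + (x/2)**(nu/2) * exp(-x/2) / Gamma(nu/2 + 1),
    starting from Q(1) = erfc(sqrt(x/2)) or Q(2) = exp(-x/2).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        q, a = math.exp(-half), 1.0
    else:
        q, a = math.erfc(math.sqrt(half)), 0.5
    while a <= df / 2.0 - 1.0:
        q += math.exp(a * math.log(half) - half - math.lgamma(a + 1.0))
        a += 1.0
    return q


def rmsea(chi2, df, n):
    """Root mean square error of approximation (N - 1 in the denominator)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


chi2, df, n = 10.76, 5, 693
print(round(chi2 / df, 2))           # 2.15
print(round(rmsea(chi2, df, n), 3))  # 0.041
print(round(chi2_sf(chi2, df), 3))   # tail probability, just above .05
```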

6.3.2. Criterion validity

Pearson product-moment correlations were calculated to examine relationships between students’ C-SAT total fluency, total flexibility and total creativity scores and their mathematics and science grades and TMT scores. Findings are presented in Table 4. Correlation coefficients ranged from .31 to .59, with the highest correlation between total fluency and the TMT, and all the correlations were significant (p < .01).
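With a sample this large, even the smallest Table 4 coefficient (.31) is far from zero in a significance test. The sketch below applies the usual test of H0: ρ = 0, t = r·√(n − 2)/√(1 − r²), and, as a simplifying assumption, takes the two-tailed p-value from the standard normal, which is a close approximation when df is in the hundreds. It also assumes n = 693 for every correlation, which the text does not confirm (some grades may be missing).

```python
import math


def t_for_correlation(r, n):
    """t statistic for H0: rho = 0; it has n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def two_tailed_p_normal(t):
    """Two-tailed p-value from the standard normal (a large-df approximation)."""
    return math.erfc(abs(t) / math.sqrt(2.0))


n = 693  # assumes every student contributed to every Table 4 correlation
for r in (0.31, 0.50, 0.59):
    t = t_for_correlation(r, n)
    print(f"r = {r:.2f}: t({n - 2}) = {t:.2f}, p < .001: {two_tailed_p_normal(t) < 0.001}")
```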

6.3.3. Discriminant validity

As scientific ability is related to mathematical ability to some extent, we hypothesized that students with high ability in mathematics would have significantly higher C-SAT scores than students with average or below-average ability in mathematics. We investigated this hypothesis by comparing the C-SAT scores of students identified as gifted and nongifted in mathematics by the TMT. Out of the total sample (n = 693), 22 students were identified as gifted in mathematics by the TMT. Because the C-SAT total fluency, total flexibility and total creativity scores correlated very highly with each other (correlations exceed .90; see Table 2), only the total creativity score was used as the dependent variable. An independent-samples t-test was conducted to compare the C-SAT scores of gifted and nongifted students. There was a significant difference between the means, favoring the gifted group [M1 = 12.41, SD = 5.63; M2 = 7.38, SD = 4.43; t(286) = 5.33, p < .001]. The magnitude of the difference between the means was large (Cohen’s d = 1.00).
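Cohen's d divides the mean difference by a standard deviation, and the text does not say which standardizer was used. The sketch below computes d from the reported summary statistics in two common ways; it assumes the nongifted group is the remaining 693 − 22 = 671 students, which the text does not state explicitly. With these inputs the unweighted version, √((s₁² + s₂²)/2), gives about .99, and the n-weighted pooled SD gives about 1.12; both are large effects by the usual conventions.

```python
import math


def cohens_d(m1, s1, n1, m2, s2, n2, pooled=True):
    """Standardized mean difference (m1 - m2) / SD.

    pooled=True  -> SD = sqrt(((n1-1)s1^2 + (n2-1)s2^2) / (n1 + n2 - 2))
    pooled=False -> SD = sqrt((s1^2 + s2^2) / 2), which ignores group sizes
    """
    if pooled:
        sd = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    else:
        sd = math.sqrt((s1 ** 2 + s2 ** 2) / 2.0)
    return (m1 - m2) / sd


# Summary statistics from Section 6.3.3; n2 = 693 - 22 is an assumption.
gifted = dict(m=12.41, s=5.63, n=22)
nongifted = dict(m=7.38, s=4.43, n=693 - 22)
for pooled in (False, True):
    d = cohens_d(gifted["m"], gifted["s"], gifted["n"],
                 nongifted["m"], nongifted["s"], nongifted["n"], pooled=pooled)
    print("pooled" if pooled else "unweighted", round(d, 2))
```

The two versions diverge here because the groups are very unequal in size (22 vs. 671), so the pooled SD is dominated by the smaller nongifted SD.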

7. Discussion and conclusions

In this article, we discussed scientific creativity and reviewed the Creative Scientific Ability Test (C-SAT), and then presented research carried out with 693 sixth-grade students to investigate its psychometric properties. The C-SAT measures fluency, flexibility and creativity in hypothesis generation, experiment design and evidence evaluation in five areas of science. Overall, the findings provided empirical evidence for its reliability and validity, supporting its use as a criterion variable in research and in identification practices for scientifically creative students.

The C-SAT was found to be sufficiently difficult for sixth-grade students, even for the mathematically gifted sample: the highest-scoring student in the sample generated 36 correct responses (fluency score) across the five subtests, for which 267 possible correct responses could be generated. This finding provides strong evidence for the usefulness of the C-SAT scoring. One of the most serious drawbacks of divergent thinking tests currently in use is related to their open-endedness and difficulty level. One can generate thousands of responses, or even more, for a single item in these tests, such as thinking of uses of things and making changes in objects. Because items are too easy and too open-ended in most creativity tests, scoring responses for these tests has been found to be labor intensive and impractical (Kaufman et al., 2008; Kim, 2006). Indeed, we think that this problem is one of the major barriers preventing the use of creativity tests in the identification of gifted and creative students. The descriptive findings for the C-SAT items (Table 1) show that we overcame this problem in the construction of the C-SAT.
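The difficulty argument can be made concrete with the fluency figures from Table 1. The sketch below sums the estimated maximum fluency scores (267) and expresses the best observed total (36) as a share of it, then repeats the comparison per subtest. Note that the per-subtest maxima may come from different students, so they need not add up to the best total.

```python
# Fluency figures transcribed from Table 1.
max_observed = {"Subtest 1": 12, "Subtest 2": 7, "Subtest 3": 9, "Subtest 4": 10, "Subtest 5": 13}
estimated_max = {"Subtest 1": 60, "Subtest 2": 56, "Subtest 3": 54, "Subtest 4": 48, "Subtest 5": 49}

total_possible = sum(estimated_max.values())
best_total = 36  # highest total fluency score in the sample (Table 1)

print(total_possible)                        # 267
print(f"{best_total / total_possible:.1%}")  # 13.5%
for name, ceiling in estimated_max.items():
    print(f"{name}: best score reached {max_observed[name] / ceiling:.0%} of the estimate")
```

No subtest comes close to its estimated ceiling, which is the sense in which the items are neither too easy nor unbounded.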

The internal consistency reliability of the C-SAT was found to be good, while the interscorer reliability was high, mostly exceeding .90, an excellent level. Similarly, the criterion validity of the C-SAT was found to be at desirable levels, neither too high nor too low, although the correlations between the C-SAT and math and science grades were lower than the correlation between the C-SAT and the Test of Mathematical Talent (TMT). This difference could, hypothetically, stem from the fact that the TMT is an objective measure, whereas grades are not objective in the same way. Students’ math and science grades cannot be considered totally objective measures because the students attended different schools, where teachers had different criteria for performance assessment. If objective measures had been used for grading in math and science classes in all the schools, higher correlations would likely have been found between students’ C-SAT scores and their math and science grades.

In prior research, we found that a one-factor solution best fitted the data, implying a general factor (scientific creativity) (Sak & Ayas, 2013). In the current study we conducted Confirmatory Factor Analysis (CFA) to test the fit of the one-factor model. The findings of the CFA confirmed the one-factor model solution. The one-factor structure of the C-SAT obtained in the study deserves further discussion, because the theory underlying the C-SAT (Dual Search) has three dimensions: hypothesis generation, experiment design and evidence evaluation. According to the Dual Search model (Klahr & Dunbar, 1988; Klahr, 2000), the three processes work interdependently rather than independently in hypothesis space and experiment space. This postulation has important implications for measuring these processes in psychological investigations. That is, one of the processes, say experiment design, requires not only a design of the experiment but also an evaluation of the fit between the experiment design and the related hypothesis, and even the evidence obtained from the experiment. Further, searches in hypothesis space and experiment space follow one after another, because designing an experiment is impossible without generating a related hypothesis. While some cases or tasks may require minimal use of hypothesis generation and maximal use of experiment design, other tasks can involve experiment design at a minimum level and hypothesis generation at a maximum level. An inspection of all the subtests of the C-SAT shows that all of them require both hypothesis space search and experiment space search, as either a major or a minor process. For instance, although the fly experiment and the change graph subtests include hypothesis generation tasks, a respondent needs to evaluate the experiment space or problem space first and then generate hypotheses that could be tested in these experiments. That is, the major process used in these subtests is hypothesis generation, while the minor one is search in experiment space. The main difference among the subtests is the output: for example, developing a hypothesis through an evaluation of hypothesis space, experiment space, or both, versus designing an experiment through an evaluation of hypothesis space.

Moreover, creativity tests usually show a one-factor structure. For example, in prior studies a one-factor solution was recommended for the Torrance Tests of Creative Thinking (TTCT) (Clapham, 1998; Hocevar, 1979b), even though it is a test of general creativity composed of figural and verbal tests. In their studies of a scientific creativity test for secondary school students, Hu and Adey (2002) also reported that a single general factor was the best solution, although the test was three-dimensional in theory. We suggest that domain-specific creativity could be more unidimensional than general creativity unless the domain-specific knowledge needed to perform each task is radically specialized in subdomains. In the Dual Search model, for example, Klahr (2000) classified knowledge as domain-general scientific knowledge, such as design of an experiment, and domain-specific or specialized scientific knowledge, such as theories of motion. According to Klahr, the scientific reasoning process is influenced by both domain-specific and domain-general knowledge. However, the acquisition of domain-specific knowledge not only changes the schematic knowledge in the domain but also directs the processes used to produce and evaluate new hypotheses in that domain. Klahr further claims that expert scientists in a field show specific reasoning processes that are characteristic of their fields and that differ from those used by experts of other fields, by nonexperts, and even by children. Even in simple contexts that do not require specialized knowledge, expert scientists do not differ from lay people (Mahoney & DeMonbreun, 1978, as cited in Klahr, 2000). In short, we suggest that most students possess only general scientific knowledge, whereas experts possess both. Therefore, in childhood scientific creativity could be unidimensional, whereas in experts it could be multidimensional (for example, theorists versus experimentalists in physics or biology).

What is most needed to further validate the C-SAT are predictive and multitrait-multimethod studies. Further research may be necessary to investigate the predictive validity of the C-SAT, using criterion variables based on students’ long-term academic and creative performance in science-related areas. Other validity studies may focus primarily on questions of criterion validity: is performance on the C-SAT related to, for example, other personal variables in theoretically expected ways? Test–retest reliability was not the subject of this study, although it was investigated in a pilot study mentioned earlier and the findings were good, even with a small sample. The research reported in this article may also be replicated with a different sample to obtain additional evidence for the psychometric properties of the C-SAT. Its psychometric properties can be investigated with seventh and eighth graders as well.


Research findings obtained in this study provide empirical evidence that the C-SAT can be used as an objective measure of scientific creativity in sixth-grade students. It can be used for two purposes: (1) as a criterion in research and (2) as a supplement in the identification of gifted and creative students in science. Regarding the former purpose, it can be used to examine the effects of training programs in scientific creativity, to examine gender, age and grade differences in scientific creativity, to study scientific creativity in cross-cultural settings, to examine the development of scientific creativity in longitudinal studies, to study creativity in its general and domain-specific forms, to examine the relationship between process and product in science, and to investigate relationships of scientific creativity with other variables.

Finally, the C-SAT can be used in the identification of gifted and creative students. Most well-known theories, models and definitions of giftedness include creativity as an essential component of the giftedness construct (e.g., the three-ring model, Renzulli, 1986; successful intelligence, Sternberg, 2003; WICS, Sternberg, 2005; the sea star model, Tannenbaum, 1986; the differentiated model of giftedness and talent, Gagne, 2003; the Marland report, Marland, 1972). In line with their models, these researchers suggest the use of creativity assessment in the identification of gifted students. However, identification practices in gifted education rely mostly on IQ and achievement tests (Davis, Rimm, & Siegle, 2011; Feldhusen, Asher, & Hoover, 1984; Johnsen, 2004; Renzulli & Delcourt, 1986). This method of identification can identify high-ability students in different areas but can miss many students who have high creative potential. Torrance (1962), for example, found that creatively gifted students who miss cut-off points such as an IQ of 130 achieve as well as their classmates with IQs in excess of 130 who would be classified as creatively gifted by similar standards. He further stated that identifying gifted students solely on the basis of IQ and scholastic aptitude tests could eliminate about 70% of the top 20% of creative students from consideration. This limitation of IQ- and achievement-oriented practices has led researchers to advocate and use multiple criteria (Fraiser, 1997). In some cases, practitioners use creativity tests as a supplementary tool in identification procedures. The C-SAT can also be used in combination with other instruments in sample-based identification practices, as one of the criteria to identify gifted students in science. It can also serve as an additional source of information in norm-based identification.

Acknowledgement

This study was partly supported by a research grant (Grant # 107K059) from the Scientific and Technological Research Council of Turkey (TUBITAK).

References

Almeida, L. S., Prieto, L. P., Ferrando, M., Oliveira, E., & Ferrandiz, C. (2008). Torrance Test of Creative Thinking: The question of its construct validity. Thinking Skills and Creativity, 3(1), 53–58.

Amabile, T. M. (1983). The social psychology of creativity. New York: Springer-Verlag.

Baer, J. (1991). Generality of creativity across performance domains. Creativity Research Journal, 4, 23–39.

Baer, J. (1993). Creativity and divergent thinking: A task-specific approach. Hillsdale, NJ: Lawrence Erlbaum Associates.

Baer, J. (1994). Why you shouldn’t trust creativity tests. Educational Leadership, 51, 80–83.

Baer, J. (1998). The case for domain specificity of creativity. Creativity Research Journal, 11(2), 173–177.

Boden, M. A. (2004). The creative mind: Myths and mechanisms. London: Routledge.

Brown, T. (2006). Confirmatory Factor Analysis for applied research. New York, NY: Guilford Press.

Clapham, M. M. (1998). Structure of figural forms A and B of the Torrance tests of creative thinking. Educational and Psychological Measurement, 58, 275–283.

Cropley, A. (1999). Definitions of creativity. In S. R. Pritzker, & M. A. Runco (Eds.), Encyclopedia of creativity (Vol. 2) (pp. 511–524). San Diego, CA: Academic Press.

Davis, G. A., Rimm, S. B., & Siegle, D. (2011). Education of the gifted and talented (6th ed.). New Jersey: Pearson.

Dunbar, K. (1993). Concept discovery in a scientific domain. Cognitive Science, 17, 397–434.

Dunbar, K. (1999). Science. In M. A. Runco, & S. R. Pritzker (Eds.), Encyclopedia of creativity (Vol. 2) (pp. 525–531). San Diego, CA: Academic Press.

Feldhusen, J. F., Asher, J. W., & Hoover, S. M. (1984). Problems in the identification of giftedness, talent, or ability. Gifted Child Quarterly, 4, 149–151.

Feynman, R. P., Leighton, R. B., & Sands, M. (1964). The Feynman lectures on physics (Vol. 2). Reading, MA: Addison-Wesley.

Fraiser, M. M. (1997). Multiple criteria: The mandate and the challenge. Roeper Review, 20, A4–A6.

Frederiksen, N., & Ward, W. C. (1978). Measures for the study of creativity in scientific problem solving. Applied Psychological Measurement, 2(1), 1–24.

Gagne, F. (2003). Transforming gifts into talents: The DMGT as a developmental theory. In N. Colangelo, & G. A. Davis (Eds.), Handbook of gifted education (3rd ed., Vol. 2, pp. 60–74). Boston, MA: Pearson Education.

Gardner, H. (1999). Intelligence reframed: Multiple intelligences for the 21st century. Basic Books.

George, D., & Mallery, P. (2003). SPSS for Windows step by step: A simple guide and reference, 11.0 update (4th ed.). Boston: Allyn & Bacon.

Getzels, J. W., & Jackson, P. W. (1962). Creativity and intelligence. New York: John Wiley and Sons, Inc.

Guilford, J. P. (1950). Creativity. American Psychologist, 5, 444–454.

Guilford, J. P. (1956). The structure of intellect. Psychological Bulletin, 53, 267–293.

Guilford, J. P. (1959). Personality. New York: McGraw Hill.

Guilford, J. P. (1967). The nature of human intelligence. New York: McGraw-Hill.

Heller, K. A. (2007). Scientific ability and creativity. High Ability Studies, 2, 209–234.

Hocevar, D. (1979a). A comparison of statistical infrequency and subjective judgment as criteria in the measurement of originality. Journal of Personality Assessment, 3, 297–299.

Hocevar, D. (1979b). The unidimensional nature of creative thinking in fifth grade children. Child Study Journal, 9, 273–278.

Hooper, D., Coughlan, J., & Mullen, M. (2008). Structural Equation Modelling: Guidelines for determining model fit. Electronic Journal of Business Research Methods, 6(1), 53–60.

Hu, W., & Adey, P. (2002). A scientific creativity test for secondary school students. International Journal of Science Education, 24(4), 389–403.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55.

Innamorato, G. (1998). Creativity in the development of scientific giftedness: Educational implications. Roeper Review, 1, 54–60.

Johnsen, S. K. (2004). Identifying gifted students: A practical guide. Waco, TX: Prufrock Press.


Kaufman, J. C., & Baer, J. (2002). Could Steven Spielberg manage the Yankees? Creative thinking in different domains. The Korean Journal of Thinking and Problem Solving, 12(2), 5–14.

Kaufman, J. C., & Baer, J. (2005). The amusement park theory of creativity. In J. C. Kaufman, & J. Baer (Eds.), Creativity across domains: Faces of the muse (pp. 321–328). Mahwah: Lawrence Erlbaum Associates.

Kaufman, J. C., Plucker, J. A., & Baer, J. (2008). Essentials of creativity assessment. New Jersey: John Wiley & Sons, Inc.

Kim, K. H. (2006). Can we trust creativity tests? A review of the Torrance Tests of Creative thinking (TTCT). Creativity Research Journal, 1, 3–14.

Klahr, D. (2000). Exploring science: The cognition and development of discovery processes. Cambridge: The MIT Press.

Klahr, D., & Dunbar, K. (1988). Dual space search during scientific reasoning. Cognitive Science, 12, 1–48.

Kline, R. B. (2005). Principles and practice of structural equation modeling (2nd ed.). New York: The Guilford Press.

Kulkarni, D., & Simon, H. A. (1988). The processes of scientific theories: The strategy of experimentation. Cognitive Science, 12, 139–175.

Lubart, T., & Guignard, J. H. (2004). The generality–specificity of creativity: A multivariate approach. In R. J. Sternberg, E. Grigorenko, & J. L. Singer (Eds.), Creativity: From potential to realization (pp. 43–56). Washington: A.P.A.

Mahoney, M. J., & DeMonbreun, B. G. (1978). Psychology of the scientist: An analysis of problem-solving bias. Cognitive Therapy and Research, 1(3), 229–238.

Marland, S. P. (1972). Education of the gifted and talented. Report to the Congress of the United States by the U. S. Commissioner of Education. Washington, DC: U.S. Government Printing Office.

Mayer, R. E. (1999). Fifty years of creativity research. In R. J. Sternberg (Ed.), Handbook of creativity (pp. 449–460). Cambridge: University Press.

Mouchiroud, C., & Lubart, T. (2001). Children’s original thinking: An empirical examination of alternative measures derived from divergent thinking tasks. The Journal of Genetic Psychology, 4, 401–882.

Newell, A., & Simon, H. A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice-Hall.

Piffer, D. (2012). Can creativity be measured? An attempt to clarify the notion of creativity and general directions for future research. Thinking Skills and Creativity, 7, 258–264.

Plucker, J. A. (1998). Beware of simple conclusions: The case for the content generality of creativity. Creativity Research Journal, 11, 179–182.

Plucker, J., & Beghetto, R. (2004). Why creativity is domain general, why it looks domain specific and why the distinction does not matter. In R. J. Sternberg, E. L. Grigorenko, & J. L. Singer (Eds.), Creativity: From potential to realization (pp. 153–167). Washington, DC: American Psychological Association.

Plucker, J., Beghetto, R. A., & Dow, G. (2004). Why isn’t creativity more important to educational psychologists? Potential, pitfalls, and future directions in creativity research. Educational Psychologist, 39, 83–96.

Puccio, G. J. (1991). William Duff’s Eighteenth Century examination of original genius and its relationship to contemporary creativity research. Journal of Creative Behaviour, 25(1), 1–10.

Renzulli, J. S. (1986). The three-ring conception of giftedness: A developmental model for creative productivity. In R. J. Sternberg, & J. Davidson (Eds.), Conceptions of giftedness (pp. 51–92). Cambridge, England: Cambridge University Press.

Renzulli, J. S., & Delcourt, M. A. B. (1986). The legacy and logic of research on the identification of gifted persons. Gifted Child Quarterly, 1, 20–23.

Roe, A. (1952). A psychologist examines 64 eminent scientists. Scientific American, 185(5), 21–25.

Roe, A. (1961). The psychology of a scientist. Science, 134, 456–459.

Rothenberg, A. (1971). The process of janusian thinking in creativity. Archives of General Psychiatry, 24, 195–205.

Rothenberg, A. (1996). The janusian process in scientific creativity. Creativity Research Journal, 9, 207–209.

Runco, M. A. (1987). The generality of creative performance in gifted and nongifted children. Gifted Child Quarterly, 31, 121–125.

Runco, M. A. (1989). The creativity of children’s art. Child Study Journal, 19, 177–190.

Sak, U. (2004). About giftedness, creativity and teaching the creatively gifted in the classroom. Roeper Review, 26(4), 216–222.

Sak, U. (2010). Assessment of creativity: Focus on math and science. Paper presented at the 12th ECHA Conference, Paris, France.

Sak, U., & Ayas, M. B. (2013). Creative Scientific Ability Test (C-SAT): A new measure of scientific creativity. Psychological Test and Assessment Modeling, 55(3), 316–329.

Sak, U., Turkan, Y., Sengil, S., Akar, A., Demirel, S., & Gucyeter, S. (2009). Matematiksel Yetenek Testi (MYT)’nin gelişimi ve psikometrik özellikleri [Development and psychometric properties of the Test of Mathematical Talent (MYT)]. Paper presented at the Second National Conference on Talented Children, Eskisehir, Turkey.

Sengil, S. (2009). İlköğretim 6. ve 7. sınıf öğrencilerine yönelik matematik yetenek testi’nin kapsam geçerliği [Content validity of the Test of Mathematical Talent] (Unpublished master’s thesis). Eskisehir, Turkey: Anadolu University.

Shim, J. Y., & Kim, O. J. (2003). A study of the characteristics of the gifted in science based on implicit theory. The Korean Journal of Educational Psychology, 17, 241–255.

Simon, H. A. (1977). Models of discovery. Dordrecht: Reidel.

Simonton, D. K. (1988). Scientific genius: A psychology of science. Cambridge, England: Cambridge University Press.

Snyder, A., Mitchell, J., Bossomaier, T., & Pallier, G. (2004). The Creativity Quotient: An objective scoring of ideational fluency. Creativity Research Journal, 16(4), 415–420.

Sternberg, R. J. (2003). Giftedness according to the theory of successful intelligence. In N. Colangelo, & G. A. Davis (Eds.), Handbook of gifted education (3rd ed., pp. 88–99). Boston, MA: Pearson Education.

Sternberg, R. J. (2005). WICS: A model of giftedness in leadership. Roeper Review, 28, 37–45.

Sternberg, R. J., & Davidson, J. (2004). Conceptions of giftedness. Cambridge, England: Cambridge University Press.

Sternberg, R. J., & Lubart, T. I. (1995). Defying the crowd: Cultivating creativity in a culture of conformity. New York: The Free Press.

Subotnik, R. F. (1993). Adult manifestations of adolescent talent in science. Roeper Review, 15(3), 164–169.

Tabachnick, B. G., & Fidell, L. S. (1996). Using multivariate statistics (2nd ed.). New York: HarperCollins.

Tannenbaum, A. J. (1986). Giftedness: A psychosocial approach. In R. J. Sternberg, & J. E. Davidson (Eds.), Conceptions of giftedness (pp. 21–52). New York, NY: Cambridge University Press.

Torrance, E. P. (1962). Guiding creative talent. Englewood Cliffs, NJ: Prentice-Hall.

Torrance, E. P. (1988). The nature of creativity as manifest in its testing. In R. J. Sternberg (Ed.), The nature of creativity (pp. 43–75). New York: Cambridge Univ. Press.

Torrance, E. P. (1992). A national climate for creativity and invention. Gifted Child Today, 15(1), 10–14.

Tweney, R. D. (1996). Presymbolic processes in scientific creativity. Creativity Research Journal, 9(2–3), 163–172.