Measuring Parallelism in Computation-Intensive Scientific/Engineering Applications


1088 IEEE TRANSACTIONS ON COMPUTERS, VOL. 37. NO. 9. SEPTEMBER 1988


Abstract: A software tool for measuring parallelism in large scientific/engineering applications is described in this paper. The proposed tool measures the total parallelism present in programs, filtering out the effects of communication/synchronization delays, finite storage, a limited number of processors, the policies for management of processors and storage, etc. Although an ideal machine that can exploit the total parallelism is not realizable, such measures would aid the calibration and design of various architectures/compilers. The proposed software tool accepts ordinary Fortran programs as its input; therefore, parallelism can be measured easily on many fairly big programs. Some measurements of parallelism obtained with the help of this tool are also reported. It is observed that the average parallelism in the chosen programs is in the range of 500-3500 Fortran statements executing concurrently in each clock cycle in an idealized environment.

Index Terms: Maximum/total parallelism, measurement of parallelism, parallelism in Fortran programs, parallelism in scientific/engineering applications, upper bound on parallelism.

I. INTRODUCTION

THE VIABILITY of a parallel processing system depends heavily on the presence of parallelism in the application programs expected in the workload of that system. Since the scientific/engineering applications domain has been designated as the primary beneficiary of parallel processing, there have been several attempts to build parallel machines targeted at scientific/engineering applications [1]-[3], [6], [7]. In each case, the claim for the viability/optimality of the machine design (and often for its superiority over all other machine designs) is based on assumptions about the amount of parallelism present in the applications (the number of concurrently performable operations, averaged over time) and its nature (fine grain, coarse grain, vectorial, etc.).

The ensuing debate on the advantages, disadvantages, and efficiency of these different machine designs has been inconclusive, and often confusing, because researchers differ on the extent and nature of parallelism found in scientific/engineering applications. The differences have arisen for several reasons, some of which are addressed below.

Traditionally, the investigation of parallelism in large scientific/engineering applications has been restricted to measuring the parallelism that can be exploited by some particular machine. Studies have been conducted to measure parallelism exhibited by vector machines such as Cray and Cyber, SIMD machines such as ILLIAC-IV and MPP, and MIMD machines such as Intel-Hypercube and RP3 [2], [8], [9]. Usually these experiments report only part of the parallelism present in the program: the part which can be exploited effectively by the machine on which the program is executed. For example, studies conducted on the vector machines ignore the parallelism present in the unstructured portions of the program, which cannot be vectorized. Similarly, the MIMD machines do not exploit, and the experiments run on these machines do not report, the fine-grained parallelism which cannot be exploited effectively on MIMD machines.

Manuscript received July 18, 1986; revised December 18, 1986. The author is with the IBM Thomas J. Watson Research Center, Yorktown Heights, NY 10598. IEEE Log Number 8717777.

To add to the reigning confusion, when parallelism is measured on a particular machine, the reported parallelism is diluted with the synchronization, communication, and resource management overheads required to exploit it. Furthermore, measurements are usually taken on a few small programs because, in the absence of good tools, the measurement of parallelism is quite difficult and time consuming. Finally, no serious attempt has been made to characterize parallelism. Most measurements report only the reduction in finish time (for a few programs) as a function of the number of processors used.

A more reliable measure of parallelism is needed to aid the development and evaluation of future parallel processors: a measure that captures the parallelism in a program irrespective of whether this parallelism can be detected by any existing/promised compiler, and whether it can be effectively exploited by any available/promised parallel processing system. Machine-dependent overheads incurred in moving the data, scheduling the computation, and managing the processor and memory resources should be filtered out of the measurements because these overheads change from machine to machine. For similar reasons, machine-dependent limitations such as the effect of a finite number of processors and finite storage should also be filtered out. Parallelism measured according to these guidelines will be called total parallelism.

Some early measurements of parallelism in Fortran programs are reported by Kuck et al. in [4]. These measurements were obtained by analyzing the programs (statically) and determining which statements can execute in parallel. Since the programs were not executed and the actual execution path of the programs could not be determined, the parallelism available in a program was estimated by taking the average of the parallelism found on each trace in that program. Parallelism was measured at the level of primitive operations. Compiler transformations such as tree height reduction and forward substitution were used to expose additional parallelism in the program, beyond what was actually present.

0018-9340/88/0900-1088$01.00 © 1988 IEEE

KUMAR: PARALLELISM IN COMPUTATION-INTENSIVE APPLICATIONS 1089

As noted by Kuck et al. in [4], taking the average of the parallelism in each trace gives a conservative estimate for the total parallelism. Although many programs were analyzed in [4], each individual program was relatively small. Larger computation-intensive problems might have more parallelism than that observed in [4]. Furthermore, static analysis of programs tends to give a conservative estimate for available parallelism because flow of a value between two operations has to be assumed whenever the absence of this flow cannot be proved analytically.

A software tool developed for measuring the total parallelism in Fortran programs and characterizing it is described in this paper. It was named COMET, an abbreviation for “concurrency measurement tool.” Parallelism in a program is obtained by actually executing the program, monitoring the statements executed and the flow of values between them, and then deducing the maximum concurrency possible. This approach avoids most of the shortcomings inherent in the approaches mentioned earlier.

The measurements obtained from COMET would aid the evaluation of various parallel processing systems by providing the right set of assumptions for the extent of parallelism present in applications. These measurements can also be used to gauge the efficiency of various compilers and machine architectures. The characterization of parallelism is a more difficult task and is handled at a cursory level at present. In the current implementation of COMET, concurrency in any chosen section(s) of a computation can be monitored over desired intervals. However, work is in progress to refine this characterization.

To measure the parallelism in a Fortran program, the program is modified/extended by including a set of shadow variables (one for each variable in the original program) and a set of statements that initialize and manipulate the shadow variables. The statements of the original program are called the core statements and the newly added statements are called tracking statements. The shadow variables and the tracking statements are inserted automatically by COMET. Therefore, there is no need to understand the application or change it in any other way.

The modified/extended program is compiled and executed (using the available input data sets). During the execution of the program, the tracking statements trace the dynamic execution sequence of the core statements. For each core statement in this sequence, the corresponding tracking statements also compute the earliest time at which this core statement would execute if the program were executed on a machine capable of exploiting all the parallelism present in the program without incurring any overheads, i.e., an ideal parallel machine.

The shadow of a variable (storage cell) is used to record the time at which the current value of this variable was computed. The earliest time at which a core statement can execute is determined by using the values of the appropriate shadow variables. This “earliest time” is used to update a table which, at the termination of the program, contains a profile of the parallelism observed in the program.
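The shadow mechanism can be sketched in a few lines of Python. This is a hypothetical model for illustration, not COMET's implementation (COMET rewrites Fortran source): the class name `ShadowTracker`, the `(target, inputs)` trace format, and the use of a `Counter` as the profile are assumptions. Each statement starts when its latest input becomes available, takes one unit of time, and its completion time is tallied in the profile; control dependences are deliberately left out of this sketch.

```python
from collections import Counter


class ShadowTracker:
    """Hypothetical model of shadow tracking (not COMET's actual code).

    shadow[v] holds the time at which the current value of v became available;
    profile[T] counts the statements whose execution completes at time T.
    """

    def __init__(self, initial_time=1):
        self.initial_time = initial_time  # initial data assumed available at t = 1
        self.shadow = {}
        self.profile = Counter()

    def available(self, var):
        # A variable that was never assigned still holds initial data.
        return self.shadow.get(var, self.initial_time)

    def execute(self, target, inputs):
        """Record one executed statement 'target = f(inputs)'; return its start time."""
        start = max((self.available(v) for v in inputs), default=self.initial_time)
        finish = start + 1                # unit execution time per statement
        self.shadow[target] = finish      # new value of target exists from 'finish' on
        self.profile[finish] += 1         # tally the completion time, like PROFILE(T)
        return start


t = ShadowTracker()
t.execute("A", ["X"])        # starts at 1
t.execute("B", ["Y"])        # independent of A: also starts at 1
t.execute("C", ["A", "B"])   # waits for A and B: starts at 2
print(dict(t.profile))       # {2: 2, 3: 1}
```

Because the shadow records when a value was produced, not which processor produced it, the model charges nothing for communication or scheduling, which matches the idealization described above.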

The programs analyzed for parallelism are fairly large applications. Under ideal conditions, the average parallelism observed in the chosen programs is in the range of 500-3500 Fortran statements executing concurrently in each clock cycle. Furthermore, parallelism is fairly uneven in most applications. There are phases during the execution of the program when the instantaneous parallelism is several orders of magnitude above/below the average parallelism.

While modifying/extending a program to monitor the parallelism, the original computation is not transformed in any way. The operations performed and the flow of operands between them (the computation graph) remain unchanged. Various compiler transformations (such as scalar expansion/renaming, tree height reduction, and various loop-oriented optimizations) that can increase the amount of parallelism exhibited by the program were not used. In fact, COMET can measure the effectiveness/importance of these transformations by comparing the parallelism observed in the original and the transformed program.

The next section of this paper states the metric used for measuring parallelism. A hypothetical machine called the “ideal parallel machine” is defined to simplify this discussion. A brief overview of the approach used for measuring parallelism is given in Section III. In this approach, the program to be analyzed is modified; the required modifications/extensions are illustrated in Section IV. The measurements taken on some large programs with the help of COMET are reported in Section V. In Section VI, extensions needed in COMET to allow the measurement of parallelism in storage-oriented architectures (where write after read/write synchronizations must be observed) are described. The impact of assuming a priori knowledge of how the data flow between various statements of a program (a characteristic of the ideal parallel machine) is discussed in Section VII. Suggestions for further research in this direction are outlined in Section VIII, and finally, the summary and conclusions of this paper are given in Section IX.

II. THE IDEAL PARALLEL MACHINE

Our objective is to measure the total parallelism present in a Fortran program. This total parallelism can be observed if the program is executed on a machine which has unlimited processors and memory, does not incur any overhead in scheduling tasks and managing machine resources, does not incur any communication and synchronization overheads, and detects and exploits all the parallelism present in the program. The ideal parallel machine defined in this section is a hypothetical machine which possesses the above mentioned qualities. Parallelism in a program is defined as the activity observed in this hypothetical machine when it is executing that program.

In the following discussion, it is important to differentiate the terms “variables” and “values.” A variable is a storage cell or an array of storage cells accessible by a program. A value is a particular scalar intermediate result computed during the execution of the program. Values are assigned to (stored in) variables. Thus, the sequence of statements “A = 5; A = B; A = cos(B)” assigns three different values to the same variable.



The ideal parallel machine exhibits the following behavior.

1) It executes one Fortran statement in unit time. All the memory access and logic/arithmetic operations involved in executing this statement are completed within this unit time.

2) A statement S is executed as soon as all the conditional branches that precede S in the sequential execution of the Fortran program, and whose outcome affects the decision whether to execute S or not, have been resolved, and all the input data values required by S are available.

3) It has complete knowledge of how the data flow between the statements of a program during the program’s execution. When a data value is produced by some statement, the ideal parallel machine can infer which successor statements will ultimately use that value.

4) The output value produced by a Fortran statement is available forever after the execution of that statement (even after the variable to which that value was assigned has been reassigned a different value). Therefore, there is no need for write after read/write synchronization in the ideal machine.

The last two points perhaps need further clarification. In Fortran, if a statement A assigns a value to a variable V and a successor statement B requires the value associated with variable V as an input, one cannot conclude that the value produced by A and the value required by B are the same until one verifies that the sequence of statements executed between A and B does not modify the variable V. However, we will assume that the ideal parallel machine can foresee the sequence of statements to be executed in the future for the restricted purpose of enumerating the successor statements that will use the currently computed result as one of the required inputs.

The above mentioned situation is illustrated in Fig. 1. A subcomputation consisting of four statements is shown in this figure. Assume that the subcomputation is initiated at time (t = 1), that the value of Y is 2, i.e., the true branch of the conditional statement is taken, and that the value of Y is available at time (t = 10). The value of t shown in parentheses next to each statement in Fig. 1 indicates the time at which the statement can be executed. Statements 1 and 2 will execute at times 1 and 10, respectively, according to the first two rules mentioned above. In practice, one would expect the execution of statement 4 to be delayed at least until the condition in statement 2 is evaluated, because until then it is not known whether the value of variable A to be used by statement 4 is the value created by statement 1 or the one created by statement 3. Unfortunately, there is no simple method for determining all the necessary delays of this kind without being overrestrictive. So the ideal machine will ignore delays of this kind and execute statement 4 at time (t = 2). By observing/respecting all the true data dependencies [5], one can incorporate all the necessary delays at the cost of including some unnecessary ones. This approach and its limitations are discussed further in Section VII.
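The timing argument can be replayed on a hypothetical four-statement trace that fits the description above. The actual statements of Fig. 1 are not reproduced in this text, so the trace and the function name `fig1_style_start_times` are assumptions. With the ideal machine's foresight (rule 3), statement 4 needs only the value of A produced by statement 1; if it must also wait for the branch in statement 2 to resolve, it slips behind the late-arriving Y.

```python
def fig1_style_start_times(y_ready=10, begin=1, wait_for_branch=False):
    """Start times for a hypothetical trace in the spirit of Fig. 1:

        1: A = X
        2: IF (Y .EQ. 2) GO TO 4   (Y = 2, so the branch is taken)
        3: A = Z                   (skipped on this path)
        4: B = A + 1

    X is available at 'begin'; Y only at 'y_ready'.
    """
    times = {}
    times[1] = begin                      # X ready, statement 1 unconditional
    shadow_a = times[1] + 1               # value of A produced by statement 1
    times[2] = max(begin, y_ready)        # the test needs Y
    branch_resolved = times[2] + 1
    # Rule 3: the ideal machine already knows that statement 4 reads the A
    # produced by statement 1, so only that value's availability matters.
    times[4] = max(begin, shadow_a)
    if wait_for_branch:
        # A realistic machine must first learn which A reaches statement 4.
        times[4] = max(times[4], branch_resolved)
    return times


print(fig1_style_start_times())                      # {1: 1, 2: 10, 4: 2}
print(fig1_style_start_times(wait_for_branch=True))  # {1: 1, 2: 10, 4: 11}
```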

Fig. 1. Assumption about the advance knowledge of how the data flows between the statements of a program.

There was a motive for ignoring the write after read/write synchronizations. The need for such synchronizations arises primarily because Fortran presents a storage-oriented model of computation, and application programmers have a tendency to minimize the storage requirement by reusing storage. Thus, the need for write after read/write synchronizations is usually not inherent in the application, but imposed by the programmer. This need can often be avoided by renaming the variables that create the need.

The repercussions of ignoring the write after read/write synchronizations are illustrated in Fig. 2. Once again, assume that the subcomputation consisting of four statements is initiated at time (t = 1), and that the value of Y is available at time (t = 10). Then the first three statements of the subcomputation will execute at times (t = 1), (t = 10), and (t = 11), respectively. In storage-oriented machines, statement 4 (which assigns a new value to variable A) cannot execute until statement 3 (which uses the previous value of A) completes execution. Thus, the earliest time at which the execution of statement 4 can be initiated is (t = 12).

In machines that allow several values associated with the same variable to coexist (such as dynamic data flow machines [3]), a new value can be created for a variable before all the uses of the previous value assigned to the same variable are complete. Thus, statement 4 can define a new value for variable A before statement 3 completes execution, and therefore it can be executed at time (t = 2). Although the ideal parallel machine ignores the write after read/write synchronizations, they can be incorporated easily, and one method for incorporating them is discussed in Section VI.
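The contrast between the two kinds of machine can be sketched by tracking, besides each variable's shadow, the completion time of the latest read of the variable. This is one possible approach chosen for illustration, not necessarily the method of Section VI; the function name `start_times` and the four-statement trace are hypothetical, picked so that the start times reproduce the ones quoted for Fig. 2.

```python
def start_times(trace, ready, storage_oriented=False):
    """Start time of each statement of a straight-line trace.

    trace: list of (target, inputs) pairs; ready: availability time of the
    input data. Only true (flow) dependences are honored unless
    storage_oriented is set, in which case a write to V also waits until
    earlier reads of V (write after read) and the previous write of V
    (write after write) have completed.
    """
    shadow = dict(ready)        # variable -> time its current value is available
    last_read_done = {}         # variable -> completion time of its latest read
    starts = []
    for target, inputs in trace:
        start = max((shadow[v] for v in inputs), default=1)
        if storage_oriented:
            start = max(start,
                        last_read_done.get(target, 0),  # write after read
                        shadow.get(target, 0))          # write after write
        finish = start + 1
        for v in inputs:
            last_read_done[v] = max(last_read_done.get(v, 0), finish)
        shadow[target] = finish
        starts.append(start)
    return starts


# Hypothetical trace: 1: A = X, 2: B = Y, 3: C = A + B, 4: A = A + 1
trace = [("A", ["X"]), ("B", ["Y"]), ("C", ["A", "B"]), ("A", ["A"])]
ready = {"X": 1, "Y": 10}
print(start_times(trace, ready))                         # [1, 10, 11, 2]
print(start_times(trace, ready, storage_oriented=True))  # [1, 10, 11, 12]
```

The only difference between the two runs is the extra `max` over the write-after-read and write-after-write terms, which is why such synchronizations are easy to add to shadow-based tracking.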

III. METHOD FOR MEASURING PARALLELISM

To measure the parallelism that a Fortran program P will exhibit while executing on the ideal parallel machine, the program P is extended/modified to produce another Fortran program P′. The program P′ thus obtained is compiled and executed, using one or more input data sets intended to be used with the original program P. The output obtained on executing P′ consists of the results expected from the execution of P and a histogram of the parallelism observed in the program. The modifications/extensions are used only for monitoring the computation; the original computation is not altered or transformed in any way. The extensions/modifications required in P, and their role in the measurement of parallelism, are discussed in this section. The key extensions are the following.

Shadow variables: For each variable accessible in P, a shadow variable is added in P′. The shadow of an N-dimensional array is an N-dimensional array with matching dimensions. Thus, for each scalar element of an array, there is a scalar shadow element in the shadow array. The shadow of a scalar variable or an array element holds the time at which the value of the associated scalar element (a storage cell which can be a scalar variable or an array element) was computed.

Fig. 2. Repercussions of ignoring the write after read and write after write dependencies.

Control variables: An array CVAR is added to P′ so that for each statement S in P, there is a control variable CVAR(S) defined in P′. CVAR(S) marks the time at which one can conclude that S must execute. Tracking statements associated with the conditional branch and loop control statements keep the values of the CVAR() variables consistent. For example, if a statement S is executed only if a particular branch is taken from a conditional branch statement C, then one cannot decide whether S must execute or not until C is executed. In fact, the execution of S has to be delayed until the last conditional branch statement C′, which precedes S and controls its execution in the above mentioned manner, has been executed. The CVAR variable for statement S is set by the tracking statements associated with C′.

Statements for updating shadow variables: For each assignment statement S in P, tracking statements are added to P′ to update the shadow of the scalar element being modified by S. The time at which the execution of S can be initiated is determined by taking the maximum of CVAR(S) and the shadows of the scalar elements required to execute S. This is the earliest time at which we know that S must execute and that all the input values required by S are available. The time required to execute S is assumed to be one cycle, but one can substitute more realistic values for this time, such as the height of the expression tree being evaluated or the number of nodes in the expression tree. The time at which the new value of the scalar element being updated by S is available is obtained by adding the time required to execute S to the time at which the execution of S can be initiated. The shadow of the updated variable is modified to reflect this time.

Statements for updating control variables: Conditional branch statements (arithmetic and logical “IF”s, “DO” loops, and computed and assigned “GO TO”s) are split into two statements. The first statement evaluates the condition and updates a Boolean variable, and is treated like an assignment statement; a tracking statement is generated for it as mentioned in the previous paragraph. The second statement makes the branch based on the value of this Boolean variable. Tracking statements are inserted on each target of the branch. For each branch target, these tracking statements modify the CVAR variables of the successor statements which are reachable from this branch target but are not reachable from all the targets of this branch statement.

The array PROFILE: A one-dimensional array “PROFILE” is added in P′. It is initialized at the beginning of P′. Every time a core statement S executes in P′, the time T at which its execution completes (determined by the associated tracking statements) is used to increment PROFILE(T) by one. For each statement in P, a tracking statement is added in P′ to perform this increment operation. At the termination of P′, the contents of this array are printed out. The array “PROFILE” essentially gives the parallelism that will be observed in the program if the program is executed on the ideal parallel machine.
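The rule for choosing which CVAR variables a branch target must update can be sketched as a set difference over control-flow reachability. This is an illustrative Python sketch under stated assumptions: the control flow graph is a hypothetical dictionary from statement number to successors, the region is assumed acyclic (loops are handled separately, as described in Section IV), and at run time the selected CVARs would presumably be set to the time the branch's Boolean value becomes available.

```python
def reachable(cfg, start):
    """All statements reachable from 'start' (inclusive) in a control flow graph."""
    seen, stack = set(), [start]
    while stack:
        s = stack.pop()
        if s not in seen:
            seen.add(s)
            stack.extend(cfg.get(s, []))
    return seen


def cvar_updates(cfg, targets):
    """For each target of one branch, the statements whose CVAR is set on that path:
    those reachable from this target but not from every target of the branch."""
    reach = {t: reachable(cfg, t) for t in targets}
    common = set.intersection(*reach.values())
    return {t: reach[t] - common for t in targets}


# Hypothetical acyclic CFG around a logical IF at statement 5:
# branch taken -> 8, not taken -> 6; statement 7 jumps past 8 to 9.
cfg = {5: [6, 8], 6: [7], 7: [9], 8: [9], 9: [10], 10: [11]}
print(cvar_updates(cfg, [8, 6]))   # {8: {8}, 6: {6, 7}}
```

Statements 9-11 are reachable from both targets, so their execution does not depend on this branch and their CVARs are left alone.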

The conversion from P to P′ is automatic. The Fortran program is scanned (lexically) to produce the symbol table and the control flow graph. The symbol table is used to generate the declarations of the shadow variables. (Special consideration is given to “COMMON” declarations and EQUIVALENCE statements.) The tracking statements that modify the CVAR variables are generated by analyzing the control flow graph, and those associated with the assignment statements are generated by enumerating all the scalar elements accessed by the assignment statement.

IV. CREATING MODIFIED/EXTENDED FORTRAN PROGRAMS

This section illustrates how the modifications/extensions mentioned in the previous section can be incorporated in Fortran programs. A small program consisting of 11 Fortran statements is shown in Appendix A. The extended/modified version of the same program is shown in Appendix B. These two programs will serve as an example to show the required modifications/extensions. These extensions/modifications can be placed in three broad categories, namely, the declaration and initialization of shadow variables, the addition of tracking statements (associated with assignment statements) to update the shadow variables and the histogram for parallelism, and the addition of tracking statements (associated with conditional branch and loop control statements) to update the CVAR variables.

Declaration and initialization of shadow variables: The names of shadow variables are obtained by prefixing the variable names with “$.” Thus, the shadow for A(I) is $A(I). All shadow variables are declared implicitly as integers (line 1). However, the shadow variables for arrays have to be declared explicitly, and this is done at the beginning of the program (line 3). Next, all shadow variables are initialized to 1 (lines 4-6), since it is assumed that the initial values of all variables are available at time (t = 1). DO loops are inserted to initialize the shadows of arrays (lines 7-8). The variables in COMMON blocks are initialized in a separate subroutine created specifically for this purpose, and called at the beginning of the main program.
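Generating these declarations from a symbol table is mechanical, as the following Python sketch suggests. It is not the text of Appendix B: the function name `shadow_declarations`, the loop indices `K$1, K$2, ...`, the statement labels starting at 9001, and the form `IMPLICIT INTEGER ($)` are all assumptions (names containing `$` are a compiler extension rather than standard Fortran 77), and COMMON and EQUIVALENCE handling is omitted.

```python
def shadow_declarations(symbols, first_label=9001):
    """Emit hypothetical fixed-form Fortran lines declaring and initializing shadows.

    symbols: variable name -> tuple of array extents (empty tuple for scalars).
    Every shadow is set to 1 because initial values are available at t = 1.
    """
    lines = ["      IMPLICIT INTEGER ($)"]             # '$' names default to INTEGER
    arrays = {n: d for n, d in symbols.items() if d}
    if arrays:
        decls = ", ".join(f"${n}({','.join(map(str, d))})" for n, d in arrays.items())
        lines.append(f"      INTEGER {decls}")         # array shadows need dimensions
    label = first_label
    for name, dims in symbols.items():
        if not dims:
            lines.append(f"      ${name} = 1")          # scalar shadow
            continue
        idx = [f"K${k + 1}" for k in range(len(dims))]
        for var, extent in zip(idx, dims):              # one DO loop per dimension
            lines.append(f"      DO {label} {var} = 1, {extent}")
        lines.append(f"      ${name}({','.join(idx)}) = 1")
        lines.append(f" {label} CONTINUE")             # shared terminal statement
        label += 1
    return lines


print("\n".join(shadow_declarations({"A": (100,), "N": (), "I": ()})))
```

Nested DO loops sharing one labeled CONTINUE as their terminal statement is permitted in Fortran 77, which keeps the generator to one label per array.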

Updating the shadow variables and the histogram for parallelism: Line 25 in Appendix B illustrates how shadows are updated. To execute statement 6, the array element A(N-I) is required. However, to compute the index of the array element, we need the variables I and N. Variable I is also needed to index the location where the result computed by statement 6 is stored. The variables A(N-I), N, and I are available at (t = $A(N-I)), (t = $N), and (t = $I), respectively. Furthermore, CVAR(6), set by the tracking statements of statement 5 (in line 20), indicates the earliest time at which it is known that statement 6 must execute. Therefore, the maximum of CVAR(6), $A(N-I), $N, and $I indicates the earliest time at which statement 6 can execute. The time at which the new value for A(I) is available is obtained by adding 1 (the time to compute statement 6) to the time at which execution of statement 6 is initiated. The shadow of A(I), which is $A(I), is updated to reflect this time.
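The arithmetic for statement 6 is a single maximum plus the execution time. The Python sketch below uses hypothetical shadow values (the actual values depend on the run), and the helpers `tree_height` and `tree_nodes` illustrate the two alternative execution-time estimates mentioned in Section III; representing expressions as nested `(op, operand, ...)` tuples and counting only operator nodes are assumptions of this sketch.

```python
def assignment_times(cvar, input_shadows, cost=1):
    """Start time and result-availability time of one assignment statement.

    start = max(CVAR(S), shadows of every scalar element S reads);
    the result is available 'cost' time units later.
    """
    start = max([cvar, *input_shadows])
    return start, start + cost


def tree_height(expr):
    """Operator levels in an expression tree of nested (op, operand, ...) tuples."""
    if not isinstance(expr, tuple):
        return 0                       # a leaf (variable or constant)
    return 1 + max(tree_height(e) for e in expr[1:])


def tree_nodes(expr):
    """Number of operator nodes in the same representation."""
    if not isinstance(expr, tuple):
        return 0
    return 1 + sum(tree_nodes(e) for e in expr[1:])


# Hypothetical shadow values for statement 6 of the example.
cvar_6 = 4                                   # CVAR(6), set after statement 5
shadows = {"A(N-I)": 7, "N": 1, "I": 3}      # $A(N-I), $N, $I
start, ready = assignment_times(cvar_6, shadows.values())
print(start, ready)                          # 7 8 -> $A(I) becomes 8

rhs = ("+", ("*", "B", "C"), ("*", "D", "E"))  # B*C + D*E
print(tree_height(rhs), tree_nodes(rhs))      # 2 3
```

With `cost=tree_height(rhs)` a statement computing B*C + D*E would take two units instead of one; a statement with no operators would need a floor such as `max(1, ...)` to keep a nonzero cost.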

Every time a new value is assigned to some variable, in addition to updating the shadow of that variable, a subroutine is called (subroutine MK1$ in this example) to update the histogram for parallelism (lines 17, 26, and 30). This histogram is initialized at the beginning of the program by the subroutine MKINIT (line 13). At the termination of the program, this histogram is printed out by the subroutine PRINT$ (line 38).

Modifying the CVAR array: It is assumed that the main program is started at time step 1. Therefore, on entry to the main program, the CVAR values of all statements that are guaranteed to execute on invocation of the main program are initialized to 1 as shown in lines 9-12 of Appendix B. The CVAR values of statements that are enclosed within loops or conditional sections, and thus may be executed repeatedly or not at all, are set within the program itself as discussed next.

Lines 19-24 illustrate how a conditional branch introduced by a “logical IF” statement (statement 5 in Appendix A) is handled. Statement 8 is executed if and only if the branch is taken. Statements 6 and 7 are executed if and only if the branch is not taken. All other statements reachable from the conditional branch statement are executed irrespective of whether the branch is taken or not. Therefore, if the branch is taken, then before jumping to the target of the branch (statement 8) the CVAR variable for statement 8 is updated. If the branch is not taken, then before executing statements 6 and 7, their CVAR variables are updated.

DO loops are viewed (thought of) as two assignment statements and a logical branch statement. One assignment statement is needed to initialize the loop induction variable, and the other is needed to update and check the loop induction variable in each loop iteration. Lines 14 and 32 in Appendix B are the tracking statements required to update the shadow of the loop induction variable on each update of the loop induction variable. Lines 34-36 update the CVAR variables of those statements within the loop whose execution is guaranteed in each new iteration.
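The following Python sketch models this treatment of a DO loop under assumptions that are not spelled out in Appendix B: the body's CVAR in each iteration is taken to be the time the current value of the induction variable is available, and the loop body is independent across iterations. The function name `loop_body_start_times` is hypothetical. In this model, because every update of the induction variable reads its previous value, successive iterations start one cycle apart even though their bodies do not depend on each other.

```python
def loop_body_start_times(trip_count, cvar_loop=1, data_ready=1):
    """Start times of an independent loop body in each iteration (hypothetical model).

    The DO loop is modeled as one assignment initializing I, then, in every later
    iteration, one assignment that updates and checks I. The body's CVAR for an
    iteration is taken to be the time the current value of I is available.
    """
    shadow_i = cvar_loop + 1           # I = 1 executes at the CVAR of the DO
    starts = []
    for k in range(trip_count):
        if k > 0:
            shadow_i += 1              # I = I + 1 (and test) reads the previous I
        # The body needs its CVAR (= $I in this model) and its other inputs.
        starts.append(max(shadow_i, data_ready))
    return starts


print(loop_body_start_times(4))        # [2, 3, 4, 5]
```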

When there are multiple exits from a loop, the CVAR variables of statements that can be reached from the normal exit (the loop closing statement) but not from all loop exits are updated immediately after the loop closing statement.

The loop statements are treated differently in programs written in Fortran-66 and in Fortran-77: the fact that zero-trip DO loops are not allowed in the former version is taken into account. The method of handling arithmetic IF statements and computed and assigned “GO TO” statements is similar to that of handling logical IF statements; the essential change is in the number of branch targets allowed.

The execution of a subroutine or a function subprogram is started as soon as it is known that the subroutine call statement or the statement invoking a user-defined function (say statement x) must execute, i.e., at the time indicated by CVAR(x).

(It is assumed that system-defined functions return their results in unit time.) On entering a subroutine or a function subprogram, the CVAR values of all statements that are guaranteed to execute on entry are initialized to CVAR(x). The CVAR values of the remaining statements (those enclosed by “DO” loops or conditional sections) are set within the called routine in the same manner as for the main program. The value of CVAR(x) is passed from the calling routine to the called routine as a parameter. The interprocedural true data dependencies are observed by adding to the parameter list the shadows of all the values to be exchanged between the calling and the called routine. Thus, the called routine can infer when it can start executing and when the values of its global variables and formal parameters become available.
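A minimal Python sketch of the interprocedural rule, assuming a hypothetical subroutine `SCALE(X, Y)` whose body is `Y = 2.0*X`. The tracked version receives CVAR(x) and the shadow of its input as extra arguments and hands back the shadow of its output; in the Fortran setting described above, that shadow would travel back through an added argument rather than a return value.

```python
def tracked_scale(cvar_call, shadow_x):
    """Tracked form of a hypothetical SUBROUTINE SCALE(X, Y) whose body is Y = 2.0*X.

    cvar_call is CVAR(x) of the CALL statement, passed in as an extra argument;
    shadow_x is the shadow of the actual argument X. The new shadow of Y is
    returned (in Fortran it would come back through an added shadow argument).
    """
    cvar_body = cvar_call              # statements run on entry inherit CVAR(x)
    start = max(cvar_body, shadow_x)   # Y = 2.0*X also needs X
    return start + 1                   # time the new Y is available


print(tracked_scale(cvar_call=5, shadow_x=9))   # 10: waits for X
print(tracked_scale(cvar_call=5, shadow_x=2))   # 6: waits for the call decision
```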

As a result of the above mentioned modifications, the number of statements in the Fortran program increases by a factor of 4-5. The storage required to hold shadow variables, control variables, and other information required to track the execution of the program is between 1 and 1.5 times the data storage required for the original program. The execution time of the program increases by a factor of roughly 10.

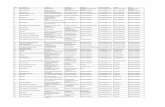

V. EXPERIMENTS

Some measurements taken with the help of COMET are discussed in this section. Different aspects of parallelism were observed in four large Fortran programs. The programs analyzed are SIMPLE, VA3D, Discrete Ordinate Transport (SNEX), and Particle-in-Cell (WAVE). In this section, the parallelism in a program is expressed as a function of time over the interval between the start time and the finish time of the program (when executed on the ideal parallel machine). For a given time instance in this interval, parallelism is equal to the number of Fortran statements that would be executing concurrently on the ideal parallel machine at that time instance.¹ Average parallelism, obtained by dividing the total number of Fortran statements executed in the program by the execution time of the program on the ideal parallel machine, is also reported. This number can also be interpreted as the speedup of the ideal parallel machine over an ideal sequential machine which executes a Fortran program sequentially at the rate of one statement per cycle.
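Both quantities can be derived from a PROFILE-like histogram, as in the Python sketch below. Assumptions: the profile is a dictionary from completion time T to the number of statements completing at T, execution starts at t = 1 with unit-time statements (so the run lasts max(T) - 1 cycles), and the subintervals used to keep plots under 3000 points have equal width except possibly the last; the function names are hypothetical.

```python
def average_parallelism(profile, begin=1):
    """Statements executed divided by ideal execution time.

    profile maps completion time T -> statements completing at T (like PROFILE);
    with unit-time statements started no earlier than 'begin', the ideal run
    lasts max(T) - begin cycles. The ratio equals the speedup over a sequential
    machine executing one statement per cycle.
    """
    total = sum(profile.values())
    return total / (max(profile) - begin)


def plot_points(profile, max_points=3000, begin=1):
    """Average statements/cycle in equal-width subintervals (last one may be shorter)."""
    times = range(begin + 1, max(profile) + 1)   # all completion times of the run
    width = -(-len(times) // max_points)         # ceiling division
    points = []
    for i in range(0, len(times), width):
        chunk = times[i:i + width]
        points.append(sum(profile.get(t, 0) for t in chunk) / len(chunk))
    return points


profile = {2: 4, 3: 2, 4: 1, 5: 1}
print(average_parallelism(profile))           # 2.0
print(plot_points(profile, max_points=2))     # [3.0, 1.0]
```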

The SIMPLE code models the hydrodynamic and thermal behavior of plasma. The bulk of the computation in this code is performed in the single outermost loop. Each iteration of this loop calculates the changes in the hydrodynamic and thermal properties of the plasma over an incremental time step. Each iteration of this loop consists of two main parts, the hydrodynamic calculations and the heat-conduction calculations. The hydrodynamic equations are solved using an explicit method and the heat-conduction equations are solved using an alternating direction implicit (ADI) method. The mesh size is 32 × 32.

The parallelism observed in a single iteration of the outermost loop of the SIMPLE program (one pass through the hydro and conduction phases) is illustrated in Fig. 3. The activity outside the loop has been filtered out of the measurements so that the one-time computation performed for setting up the program (noniterative part) is not reflected in them. This was accomplished by initializing the PROFILE array just before the loop was entered, changing the loop test so that precisely one iteration was performed, and printing the contents of the PROFILE array immediately after the loop exit. The parallelism in four consecutive iterations of SIMPLE was measured in a similar way, and the results are shown in Fig. 4.

Fig. 3. Parallelism observed within a single iteration of the SIMPLE program (parallelism plotted against time, 0-1600 cycles).

¹The number of points on each parallelism plot is under 3000 (a limitation of the plotting software). For programs whose execution time exceeds 3000 cycles, the execution time is divided into fewer than 3000 subintervals, and the average number of statements executed per cycle within each subinterval is plotted.

It can be readily seen that the bulk of the computation in each iteration is performed in the first 200 time steps of the iteration, and substantial parallelism is present in this phase. The average parallelism observed in these 200 cycles is about 2350 Fortran statements/cycle. However, a small amount of computation (roughly 800 statements) is strictly sequential. This part of the program computes the total internal energy by summing the internal energy of each grid element. A single variable is used to accumulate the partial sums, hence the sequential behavior (32 x 32 numbers are added in sequence). Thus, each iteration spans a little over 1000 time steps. The average parallelism over the entire iteration is about 475 Fortran statements/cycle.
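Why a single accumulator serializes the energy sum can be seen by applying the shadow-variable rule (a statement completes one cycle after the latest of its inputs) to the statement S = S + E(i). The Python sketch below assumes that all 32 x 32 element energies are ready at time 0 and that each addition takes one cycle. The pairwise-tree variant is a standard reassociation shown only for contrast; it is not what the measured program does, and in floating point it can change rounding. The last line checks the reported averages: about 2350 statements/cycle for 200 cycles plus roughly 800 sequential statements, spread over about 1000 cycles.

```python
def single_accumulator_time(ready_times):
    """Completion time of S = S + E(i), i = 1..n, executed with one accumulator.
    Each addition must wait for the previous value of S (shadow-variable rule)."""
    s_written = 0  # time at which S was last written (assumed initialized at time 0)
    for e_ready in ready_times:
        s_written = 1 + max(s_written, e_ready)
    return s_written


def tree_reduction_time(ready_times):
    """Completion time if the same additions are reassociated into a pairwise tree."""
    level = list(ready_times)
    while len(level) > 1:
        nxt = [1 + max(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # an unpaired value moves up a level unchanged
        level = nxt
    return level[0]


energies_ready = [0] * (32 * 32)
print(single_accumulator_time(energies_ready))  # a chain of 1024 dependent additions
print(tree_reduction_time(energies_ready))      # log2(1024) levels of additions
print(round((2350 * 200 + 800) / 1000))         # close to the reported ~475 statements/cycle
```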

One can readily see in Fig. 4 that most of the activities of two consecutive iterations of the SIMPLE program do not overlap in time. Still, the second iteration starts well before the first iteration is over, because the second iteration does not depend on the total internal energy computed in the first iteration. In other words, the sequential part of the computation within an iteration of the outer loop can be overlapped with the sequential computations of preceding and subsequent iterations, but the parallel computation within one iteration cannot be overlapped with the parallel computation of other iterations.
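The overlap pattern in Fig. 4 can be reproduced with a toy timing model. The sketch assumes, as a simplification, that each iteration consists of a parallel phase of about 200 cycles that depends on the previous iteration's parallel phase, followed by a sequential energy sum of about 800 cycles on which nothing later depends. The durations come from the measurements above; the dependence structure is an idealization, not a trace of SIMPLE.

```python
def iteration_schedule(iterations, parallel_cycles=200, sequential_cycles=800):
    """Toy model: parallel phase k waits for parallel phase k-1;
    the sequential energy sum of iteration k waits only for its own parallel phase."""
    schedule = []
    parallel_end = 0
    for k in range(iterations):
        parallel_start = parallel_end          # no wait for the previous energy sum
        parallel_end = parallel_start + parallel_cycles
        energy_end = parallel_end + sequential_cycles
        schedule.append((k, parallel_start, parallel_end, energy_end))
    return schedule


for k, p_start, p_end, e_end in iteration_schedule(4):
    print(f"iteration {k}: parallel phase {p_start}-{p_end}, energy sum done at {e_end}")
```

In this model successive iterations start 200 cycles apart and four iterations finish at about 1600 cycles; the sequential tails overlap one another while the parallel phases do not, which matches the qualitative behavior described above.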

The breakup of parallelism between the hydro and conduction phases within a single iteration is shown separately in Fig. 5. A substantial part of the computation performed in the conduction phase cannot be overlapped with the hydro phase because the former uses the velocities, pressures, and densities computed in the latter. Furthermore, the conduction phase has less parallelism than the hydro phase, perhaps because the former uses an implicit technique for solving the PDEs while the latter uses an explicit method.
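One plausible reason for the gap, consistent with the hedged explanation above, shows up in a simple work/depth count on the 32 x 32 mesh. The sketch assumes one statement per grid point for the explicit update and, for the implicit ADI half-step, independent tridiagonal solves along each grid line using the Thomas algorithm (a forward sweep and a back substitution, each a chain of about n dependent steps). The paper does not state which solver SIMPLE uses, so these counts are illustrative only.

```python
def explicit_update(n):
    """Explicit scheme: every point is updated from old values only."""
    work = n * n   # one statement per grid point
    depth = 1      # no point depends on another point's new value
    return work, depth


def adi_half_step(n):
    """Implicit ADI half-step: n independent tridiagonal solves, one per grid line,
    each done with the (assumed) Thomas algorithm."""
    work = n * 2 * n   # about n forward-sweep plus n back-substitution statements per line
    depth = 2 * n      # each sweep is a chain of about n dependent statements
    return work, depth


for name, (work, depth) in (("explicit", explicit_update(32)), ("ADI half-step", adi_half_step(32))):
    print(f"{name}: work={work}, depth={depth}, average parallelism={work / depth:.0f}")
```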

Fig. 4. Parallelism observed in four consecutive iterations of SIMPLE. (Time axis 0 to 1600 cycles.)

Fig. 5. Parallelism in the hydrodynamic and heat-conduction phases of SIMPLE, within one iteration. (Three panels, including "Total parallelism" and "Parallelism in Hydro phase"; time axis 0 to 1000 cycles.)

The VA3D program solves the 3-D Euler equations, which are thin layer approximations to the compressible Navier-Stokes equations, on a small three-dimensional grid of size 30 x 21 x 30. Viscous effects are simulated only along one direction.

The parallelism observed in a single iteration of the outermost loop of the VA3D program is shown in Fig. 6. The average parallelism in this case is about 2200 Fortran statements/cycle. How this parallelism overlaps with the parallelism in subsequent iterations of this loop can be observed in Fig. 7, which shows the parallelism in three consecutive iterations of the loop. It can be seen in Fig. 7 that the work done in the outermost loop can be divided into two parts. The computation belonging to the first part can be overlapped with the same computation from other iterations of this loop, while the computation belonging to the second part cannot be overlapped with the same computation in preceding iterations of this loop. However, substantial parallelism exists within each iteration of the loop.

Fig. 6. Parallelism observed in a single iteration of the outermost loop of the VA3D program. (Time axis 0 to 5000 cycles.)

Fig. 7. Parallelism in three consecutive iterations of the VA3D program. (Time axis 0 to 5000 cycles.)

The program SNEX provides a numerical solution of the monoenergetic discrete ordinate equations arising in hydrodynamics, plasma, radiative transfer, and neutral and charged particle transport applications. It generates the exact solution of the linear transport equations in one-dimensional plane, cylindrical, and spherical geometries. The overall parallelism observed in this application is shown in Fig. 8. The average parallelism exhibited by this program is about 3500 Fortran statements/cycle.

Finally, WAVE is a two-dimensional particle-in-cell code used primarily to study the interaction of intense laser light with plasmas. It solves the Maxwell equations and the particle equations of motion on a Lagrangian mesh. The overall parallelism observed in this program is shown in Fig. 9, and the average parallelism is about 1180 Fortran statements/cycle.

In almost all measurements, there is an initial burst of a very high level of parallelism. This happens because the control structures of the computation, especially the loops, unfold rather rapidly, while the computation within the loops does not proceed as rapidly because of data dependencies. The initial burst of parallelism is created by this unfolding of the loops.

VI. INCORPORATING THE EFFECT OF WRITE AFTER READ/WRITE SYNCHRONIZATIONS

In the preceding discussion, the write after read and write after write synchronizations were ignored. However, in storage-oriented architectures and in machines with restricted storage, these synchronizations are necessary. The parallelism present in the programs (or the loss of parallelism incurred) when these synchronizations are observed can be measured by modifying COMET in the following manner.

Two shadow variables are now associated with each program variable instead of one: the read shadow and the write shadow. The read shadow keeps track of the time at which the associated variable was last read after being written. The write shadow keeps track of the time at which the associated variable was last written. The tracking statements associated with each assignment statement of the original program are changed, and new tracking statements are added, as shown in Fig. 10. In this figure, $AW, $BW, $CW and $AR, $BR, $CR are the write and read shadow variables for A, B, and C.

The earliest time at which a statement S can be executed is the earliest time at which both 1) the variable being updated by S has been written and read by all statements that precede S in the sequential execution of the program and access this variable, and 2) S can be executed on the ideal parallel machine. The earliest time at which both these requirements are met is found by taking the maximum of CVAR(S), the write shadows of all the variables accessed by S (on both the left- and right-hand sides of S), and the read shadow of the scalar variable or array element being updated.

The read shadow of each variable that S reads as an input value is modified by the tracking statements associated with S to reflect the latest time at which this value is accessed by S or its sequential predecessors. It is assumed that an input value required by S must remain available until the execution of S completes. Therefore, the latest time at which S and its predecessors read this input value is the maximum of the time at which S reads the input value (its completion time) and the latest time at which the predecessors of S read this input value.
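The two-shadow rule can be sketched in Python for straight-line code. In this sketch each statement is a hypothetical (target, sources) pair, every statement takes one cycle, and control dependences (CVAR) are ignored; setting track_war_waw=False reduces it to the single-shadow scheme in which only true data dependencies delay a statement.

```python
from collections import defaultdict


def simulate(program, track_war_waw, compute_time=1):
    """program: (target, sources) assignments in sequential order.
    Returns the completion time of each statement on the ideal machine."""
    write = defaultdict(int)  # write shadow: time the variable was last written
    read = defaultdict(int)   # read shadow: latest time its current value is still needed
    times = []
    for target, sources in program:
        ready = max((write[s] for s in sources), default=0)  # true (flow) dependences
        if track_war_waw:
            # also wait for the previous write of the target (write after write)
            # and for every read of its old value (write after read)
            ready = max(ready, write[target], read[target])
        t = compute_time + ready
        write[target] = t
        if track_war_waw:
            for s in sources:
                read[s] = max(read[s], t)  # S holds its inputs until it completes
        times.append(t)
    return times


program = [("T", ["X", "Y"]), ("Z", ["T", "X"]), ("T", ["U", "V"]), ("Q", ["T"])]
print(simulate(program, track_war_waw=False))
print(simulate(program, track_war_waw=True))
```

Reusing T in the third statement forces it to wait until the second statement has finished reading the old value, so the finish time grows from 2 to 4 cycles.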

For the SIMPLE program, the loss in parallelism incurred on incorporating the effect of write after read/write synchronizations is shown in Fig. 11. These synchronizations were observed for all variables except the loop control variables (the variables occurring in the DO statements). The consecutive iterations of the outer loop in the program start with a stride of roughly 200 cycles when the write after read/write synchronizations are ignored. The stride increases to roughly 5000 cycles when these synchronizations are incorporated. However, it should be recalled that an old and unmodified Fortran program is being monitored. By using compiler transformations such as scalar/array renaming/expansion, and clever storage management policies, most of the parallelism lost because of write after read/write synchronizations can be recovered.
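The recovery of parallelism by renaming/expansion can be illustrated on a hypothetical loop body that reuses a scalar temporary: T = X(i)*2 followed by Y(i) = T + 1. The sketch uses a compact version of the two-shadow rule (unit compute time, control dependences and loop control variables ignored) and compares the scalar T against an expanded array T(i).

```python
from collections import defaultdict


def finish_time(program):
    """Two-shadow rule (true, write-after-read, and write-after-write dependences).
    program: (target, sources) pairs in sequential order; returns the last completion time."""
    write, read = defaultdict(int), defaultdict(int)
    last = 0
    for target, sources in program:
        t = 1 + max([write[target], read[target]] + [write[s] for s in sources])
        write[target] = t
        for s in sources:
            read[s] = max(read[s], t)
        last = max(last, t)
    return last


def loop(n, expand):
    """Loop body T = X(i)*2; Y(i) = T + 1, with T optionally expanded to T(i)."""
    body = []
    for i in range(n):
        tmp = f"T{i}" if expand else "T"
        body.append((tmp, [f"X{i}"]))
        body.append((f"Y{i}", [tmp]))
    return body


print(finish_time(loop(8, expand=False)))  # iterations serialized through the shared T
print(finish_time(loop(8, expand=True)))   # iterations run concurrently
```

With a shared scalar, each iteration must wait until the previous iteration has read T, so 8 iterations take 16 cycles; after expansion all iterations finish in 2 cycles.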

Fig. 8. Parallelism observed in the “Discrete Ordinates Transport” program. (Time axis 0 to 25 000 cycles.)

Fig. 9. Parallelism observed in the “Particle-in-Cell” program. (Time axis 0 to 100 000 cycles.)

VII. RELAXING THE ASSUMPTION ABOUT A PRIORI KNOWLEDGE OF DATA FLOW

As discussed in Section II, by assuming complete knowledge of the data flow between statements, the ideal parallel machine can initiate the execution of a statement much earlier than a realistic machine. Hence, the parallelism reported in this paper is an upper bound, or an optimistic estimate, of the parallelism exploitable by any realistic machine. This inaccuracy can be reduced to a certain extent by analyzing the data dependencies in the program [5] and using the information to delay the execution of statements when necessary. This section briefly discusses an approach that avoids the assumption that the ideal parallel machine has complete knowledge of the data flow between statements, and the limitations of this approach.

The true data dependencies can be used to enumerate, for each statement A in the program, the list L(A) of all predecessor statements which can possibly provide an input value for A. This list can be used to determine the set S(A) of statements such that the result of executing any statement in the set either provides the last input value required by A or determines that no other statements from L(A) will execute before A. Then the execution of A in a parallel machine can be initiated as soon as some statement in S(A) is executed.
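The cost of relying on compile-time dependence information instead of the actual data flow can be seen in a deliberately simplified model. Suppose statement A reads a variable that two statements in L(A) might write: W1, which actually executes and supplies the value, and W2, which sits on a branch that is resolved later and turns out not to execute. With complete knowledge, A waits only for W1; with only L(A), A must also wait until the branch shows that W2 will not execute before A. The times are hypothetical, unit compute time is assumed, and the function names are illustrative rather than COMET's.

```python
def start_with_actual_flow(producer_time):
    """Ideal machine: A waits only for the statement that actually produced its input."""
    return producer_time + 1


def start_with_compile_time_flow(candidates):
    """candidates: one (name, time) entry per statement in L(A); time is when the
    statement executed, or when it was determined that it will not execute before A.
    In this simplified model A fires after the last of these events, which is the
    member of S(A) that settles where A's input comes from."""
    return max(time for _name, time in candidates) + 1


candidates = [("W1 executes", 3), ("branch rules out W2", 10)]
print(start_with_actual_flow(3))
print(start_with_compile_time_flow(candidates))
```

Here A could start at cycle 4 with complete knowledge but only at cycle 11 with compile-time information, which is the kind of delay that makes the resulting measurement a conservative estimate.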

The difficulty with this approach is that the true data dependencies generated at compile time are usually conservative (a superset of the arcs that indicate the actual flow of data during the execution of the program), because techniques for interprocedural data flow analysis, analysis of complex subscript expressions, etc., have not been perfected yet. Hence, the parallelism observed in the program, when the above-mentioned approach is used to initiate the execution of statements, will be a conservative estimate of the total parallelism available.

Fig. 10. Modifications required in COMET to incorporate the costs of write after read/write synchronizations. Tracking statements for an assignment that updates A and reads B and C:

      $AW = Compute Time + MAX(CVAR(i), $BW, $CW, $AW, $AR)
      $BR = MAX($BR, $AW)
      $CR = MAX($CR, $AW)

Fig. 11. Effect of the write after read/write synchronizations on the parallelism observed in the SIMPLE program (four consecutive iterations of the outermost loop monitored). (a) Write after read/write synchronization ignored. (b) Write after read/write synchronization incorporated (time axis up to 20 000 cycles).

The parallelism observed in the SIMPLE program when the approach discussed in this section is used is shown in Fig. 12(b). For comparison, the parallelism observed when complete knowledge of the data flow between statements is available a priori is shown in Fig. 12(a). It should be remembered that the algorithms used for analyzing data dependencies in this experiment are perhaps less sophisticated than those available with the leading vendors of vectorizing compilers.

Fig. 12. Effect of the knowledge about data flow between statements on observed parallelism. (a) All knowledge about data flow between statements known a priori. (b) Knowledge about data flow generated at compile time (superset of actual data flow that occurs during execution). (Time axis 0 to 1200 cycles.)

VIII. SUGGESTIONS FOR FURTHER RESEARCH

The main objectives of implementing the COMET software are to measure the total parallelism present in Fortran programs, to characterize this parallelism, and to evaluate the efficiency of various parallel machine organizations. While the paper has focused primarily on the measurement of total parallelism, this section summarizes the efforts underway to characterize the parallelism, to evaluate various machine organizations, and to improve COMET's usability and applicability.

Since COMET actually executes a program and monitors the flow of operands between various Fortran statements, it can be used to detect storage/network bottlenecks and processor idling in various machine organizations with a finite number of processors and a specific memory organization. If the algorithms that assign computation to processors and values/variables to memory modules are specified, the number of operations performed on each processor, the number of memory accesses to each storage module, and the number of operands moved on each network link can be counted to determine whether a processor, memory module, or network link is overutilized. COMET can also serve as a testbed for evaluating these resource management algorithms and various scheduling algorithms in the finite-processor case.
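The counting described above can be sketched as follows. The trace format, the assignment maps proc_of and module_of, and the overutilization threshold are hypothetical stand-ins for the resource-management algorithms under evaluation; a link is identified here by a (module, processor) pair and counted in both directions.

```python
from collections import Counter


def count_usage(trace, proc_of, module_of):
    """trace: executed statements as (stmt_id, target, sources).
    proc_of: stmt_id -> processor; module_of: variable -> memory module."""
    ops, accesses, links = Counter(), Counter(), Counter()
    for stmt_id, target, sources in trace:
        p = proc_of[stmt_id]
        ops[p] += 1                         # operation performed on processor p
        for var in sources + [target]:      # operand fetches and the result store
            m = module_of[var]
            accesses[m] += 1                # access to memory module m
            links[(m, p)] += 1              # operand moved over the m-p link
    return ops, accesses, links


def overutilized(counter, factor=2.0):
    """Resources whose count exceeds `factor` times the mean over used resources."""
    mean = sum(counter.values()) / len(counter)
    return sorted(r for r, c in counter.items() if c > factor * mean)


trace = [(1, "A", ["B", "C"]), (2, "D", ["B"]), (3, "E", ["B", "A"])]
proc_of = {1: 0, 2: 1, 3: 0}
module_of = {"A": 0, "B": 1, "C": 0, "D": 0, "E": 0}
ops, accesses, links = count_usage(trace, proc_of, module_of)
print(ops, accesses, links)
```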

The characterization of parallelism, which was handled only at a cursory level in this paper, is perhaps the topic most deserving of further investigation. COMET can be used to determine whether the parallelism present in programs is fine grained, coarse grained, or both. Measurements of parallelism at the level of operations, statements, and subroutines have been obtained with the help of the COMET software. However, further refinements and measurements are required before a meaningful characterization of parallelism can be provided.

The characterizations of parallelism that have a bearing on the resource management algorithms for parallel machines are of special interest. Given a partitioning of the program into tasks, the parallelism exhibited by each task and how the parallel execution of two tasks overlaps in time can be observed. This could lead to more effective partitioning of the program. Facilities are provided in COMET to allow dynamic specification of tasks (at run time).
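Observing how two tasks overlap in time can be sketched as below, assuming (hypothetically) that each statement execution is tagged with the task it was dynamically assigned to and that all tasks share one time base. The task names are illustrative.

```python
from collections import defaultdict


def task_profiles(events, length):
    """events: (cycle, task_id) for every statement execution.
    Returns task_id -> list of statement counts per cycle."""
    profiles = defaultdict(lambda: [0] * length)
    for cycle, task in events:
        profiles[task][cycle] += 1
    return dict(profiles)


def overlap_cycles(profile_a, profile_b):
    """Cycles in which both tasks have at least one statement executing."""
    return sum(1 for a, b in zip(profile_a, profile_b) if a > 0 and b > 0)


events = [(0, "hydro"), (0, "hydro"), (1, "hydro"), (1, "conduction"), (2, "conduction")]
profiles = task_profiles(events, length=4)
print(profiles["hydro"], profiles["conduction"])
print(overlap_cycles(profiles["hydro"], profiles["conduction"]))
```

The per-task profiles give the parallelism exhibited by each task, and the overlap count shows how much of their execution could proceed concurrently under a given partitioning.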

Another useful characterization of parallelism is its dependence on the size of the input problem and internal data structures. For a given problem, such dependence could be observed by simply running the problem repeatedly with different input data (grid size, convergence limit, etc.).

COMET generates a tremendous amount of information during the execution of a large program, which in its totality is definitely not fit for human consumption. During the development of COMET and its subsequent use in measuring parallelism, the need for user-friendly editing facilities to digest the raw information and reproduce it in a more palatable form became quite obvious. These editing facilities are needed for taking a detailed look at the parallelism observed in some part of a big program, or at that observed in the entire program during a small time window.

COMET is geared to analyze source programs written in Fortran. This choice was made because most major scientific/engineering applications are written in this language. One can easily reimplement COMET to accept another source language. This would require rewriting the scanner that creates the program graph and symbol table from the input program, and the output routines that generate the modified/extended program. Such a reimplementation would perhaps be substantially easier than the original effort because most languages are better structured than Fortran.

IX. SUMMARY AND CONCLUSIONS

In this paper, we described a software tool for measuring parallelism in large programs and reported some measurements obtained with the help of this tool. An important feature of this tool is its ability to accept ordinary Fortran programs (without any modifications) as its input. Thus, parallelism can be measured over many large programs to get a reasonably accurate idea of the presence of parallelism in scientific/engineering applications.

While only four programs were analyzed for parallelism, each of these programs is a complete and fairly large application. A few hundred million Fortran statements are executed in one run of each application (except SIMPLE, where the number is about 500 000). Under ideal conditions (the environment of the ideal parallel machine), the average parallelism observed in the chosen programs is in the range of 500-3500 Fortran statements executing concurrently in each clock cycle.

An important conclusion to be drawn from this paper is that parallelism is fairly uneven in most applications, i.e., there are phases during the execution of the program when the instantaneous parallelism is several orders of magnitude higher than the average parallelism, and there are times when the instantaneous parallelism is several orders of magnitude lower than the average.
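One way to quantify this unevenness from a parallelism profile is to compare the peak with the average and to measure how much of the run is spent far below the average. The metrics and the factor-of-10 threshold in this sketch are illustrative choices, not measures defined in the paper, and the profile is synthetic.

```python
def unevenness(profile, factor=10):
    """Return (average, peak/average, fraction of cycles below average/factor)."""
    average = sum(profile) / len(profile)
    peak_ratio = max(profile) / average
    low_fraction = sum(1 for n in profile if n < average / factor) / len(profile)
    return average, peak_ratio, low_fraction


# Synthetic profile: a short burst of 5000 statements/cycle, then a long sequential tail.
profile = [5000] * 10 + [1] * 990
average, peak_ratio, low_fraction = unevenness(profile)
print(f"average {average:.2f}, peak/average {peak_ratio:.1f}, time far below average {low_fraction:.0%}")
```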

APPENDIX A

The program to be analyzed:

      IMPLICIT LOGICAL (F)
      INTEGER A(10), I, N
      DO 2 I = 1, N
      FV1 = I.LE.N/2
      IF (FV1) GO TO 1
      A(I) = A(N - I)
      GO TO 2
    1 A(I) = 1
    2 CONTINUE
      STOP
      END

APPENDIX B

Extended/modified version of the program in Appendix A:

      IMPLICIT INTEGER ($), LOGICAL (F)                  (Line 1)
      INTEGER CVAR(1000)
      INTEGER $A(10), A(10), I, N
      $I = 1
      $N = 1
      $FV1 = 1
      DO 9997 IV1$ = 1, 10
 9997 $A(IV1$) = 1
      DO 9996 IV1$ = 3, 5
 9996 CVAR(IV1$) = 1
      DO 9995 IV1$ = 9, 10
 9995 CVAR(IV1$) = 1
      CALL MKINIT
      $I = 1 + MAX0(CVAR(3),$N)
      DO 9998 I = 1, N
      $FV1 = 1 + MAX0(CVAR(3),$N,$I)
      CALL MK1$(4, $FV1)
      FV1 = I.LE.N/2
      IF (FV1) GO TO 9994
      DO 9993 IV1$ = 6, 7
 9993 CVAR(IV1$) = $FV1
      GO TO 9999
 9994 CVAR(8) = $FV1
      GO TO 1
 9999 $A(I) = 1 + MAX0(CVAR(6),$N,$A(N - I),$I)
      CALL MK1$(6, $A(I))
      A(I) = A(N - I)
      GO TO 2
    1 $A(I) = 1 + MAX0(CVAR(8),$I)
      CALL MK1$(8, $A(I))
      A(I) = 1
    2 $I = 1 + MAX0(CVAR(9),$N,$I)
      CALL MK1$(9, $I)
      DO 9992 IV1$ = 3, 5
 9992 CVAR(IV1$) = $I
      CVAR(8) = $I
 9998 CONTINUE
      CALL PRINT$
      STOP
      END

ACKNOWLEDGMENT

I would like to thank K. Ekanadham and R. Cytron for their valuable assistance and helpful suggestions. The algorithm used to find which control variables are modified by a conditional statement was implemented by K. Ekanadham.

REFERENCES

[1] J. Beetem, M. Denneau, and D. Weingarten, “The GF11 supercomputer,” in Proc. 12th Annu. Symp. Comput. Architecture, June 1985, pp. 108-113.

[2] W. Crowther et al., “Performance measurements on a 128-node butterfly parallel processor,” in Proc. 1985 Conf. Parallel Processing, Aug. 1985, pp. 531-540.

[3] J. R. Gurd, C. C. Kirkham, and I. Watson, “The Manchester prototype dataflow computer,” Commun. ACM, vol. 28, pp. 34-52, Jan. 1985.

[4] D. J. Kuck et al., “Measurements of parallelism in ordinary FORTRAN programs,” Computer, vol. 7, pp. 37-46, Jan. 1974.

[5] D. A. Padua, D. J. Kuck, and D. H. Lawrie, “High-speed multiprocessors and compilation techniques,” IEEE Trans. Comput., vol. C-29, pp. 763-776, Sept. 1980.

[6] G. F. Pfister et al., “The IBM research parallel processor prototype (RP3): Introduction and architecture,” in Proc. 1985 Conf. Parallel Processing, Aug. 1985, pp. 764-771.

[7] C. L. Seitz, “The cosmic cube,” Commun. ACM, vol. 28, pp. 22-33, Jan. 1985.

[8] K. So, F. Darema-Rogers, D. A. George, V. A. Norton, and G. F. Pfister, “PSIMUL-A system for parallel simulation of the execution of parallel programs,” IBM Res. Rep. RC-11674 (52414), Jan. 1986.

[9] E. Williams and F. Bobrowicz, “Speedup predictions on large scientific parallel programs,” in Proc. 1985 Conf. Parallel Processing, Aug. 1985, pp. 541-543.

Manoj Kumar (S’79-M’83) received the B. Tech. degree from the Indian Institute of Technology, Kanpur, in 1979, and the M.S. and Ph.D. degrees in electrical engineering from Rice University, Houston, TX, in 1981 and 1984, respectively.

Since 1983 he has been a Research Staff Member at the IBM T. J. Watson Research Center, Yorktown Heights, NY. His research interests include computer architecture, parallel processing systems, and interconnection networks for parallel processing systems.
