Human factors in system reliability: lessons learnt from the Maeslant storm surge barrier in the...

Transcript of Human factors in system reliability: lessons learnt from the Maeslant storm surge barrier in the...

418 Int. J. Critical Infrastructures, Vol. 4, No. 4, 2008

Human factors in system reliability: lessons learnt from the Maeslant storm surge barrier in the Netherlands

Jos Vrancken*, Jan van den Berg and Michel dos Santos Soares Faculty of Technology, Policy and Management Delft University of Technology P.O. Box 5015 2600 GA Delft, The Netherlands E-mail: [email protected] E-mail: [email protected] E-mail: [email protected] *Corresponding author

Abstract: The Maeslant storm surge barrier in the Netherlands is an interesting case in system reliability, first because of the great effort that has been put into making its operation reliable and into assessing its reliability, and second, because it has characteristics that make reliability assessment extremely hard. From its history a number of interesting conclusions can be drawn, of which the most important one is that there is no straightforward, definitive solution to reliability, but reliability is obtained and maintained in a continuous process of improvement. Other conclusions are that humans cannot be excluded from the operation or decision-making in systems such as the Maeslant barrier, that all methods for improving system reliability are most effective when the people involved are sharply aware of each method’s limitations and that a continuous, open process of consulting a variety of experts is crucial to obtain the best possible reliability.

Keywords: system reliability; software reliability; human factors; IEC 61508; formal methods; fall-back options.

Reference to this paper should be made as follows: Vrancken, J., van den Berg, J. and dos Santos Soares, M. (2008) ‘Human factors in system reliability: lessons learnt from the Maeslant storm surge barrier in the Netherlands’, Int. J. Critical Infrastructures, Vol. 4, No. 4, pp.418–429.

Biographical notes: Jos Vrancken received his BS and MS degrees in Mathematics from the University of Utrecht, the Netherlands and his PhD in Computer Science from the University of Amsterdam, the Netherlands in 1979, 1982 and 1991 respectively. Currently he is a Policy Consultant at the Dutch Ministry of Transport and Public Works and an Assistant Professor in the Use of IT in Infrastructures at the Delft University of Technology, the Netherlands.

Jan van den Berg received his BS and MS degrees in Mathematics from the Delft University of Technology, the Netherlands and his PhD in Computer Science from Erasmus University Rotterdam, the Netherlands. Currently he is an Associate Professor of IT at the Delft University of Technology.

Copyright © 2008 Inderscience Enterprises Ltd.

Human factors in system reliability 419

Michel dos Santos Soares received his BS and MS in Computer Science from the Federal University of São Carlos and the Federal University of Uberlândia, both in Brazil, in 2000 and 2004 respectively. Currently he is a PhD student in Software Engineering at the Delft University of Technology, the Netherlands.

1 Introduction

After the catastrophic flood in the province of Zeeland in the Netherlands in 1953, the Delta plan1 for thorough and lasting protection against floods was drawn up and carried out by Rijkswaterstaat, the organisation within the Dutch government that is responsible for the main flood protection infrastructure. The essence of the plan was to reduce the probability of sea water flooding to once in 10 000 years by increasing the height and robustness of the protective constructions. Somewhat less stringent requirements were formulated for river water flooding.

The requirement of once in 10 000 years can be translated into a sea water level of 4.8 m above NAP (the Dutch height reference, approximating mean sea level) that the flood protection works should be able to withstand. A flood in the area surrounding Rotterdam would endanger some 1.3 million people and would destroy an economic production capacity of some 50 billion euros a year (about 10% of the Dutch annual GNP).

The closing piece of the Delta plan was the so-called Maeslantkering (kering is the Dutch word for barrier), the movable storm surge barrier in the Nieuwe Waterweg, which is the entrance to the port of Rotterdam and one of the estuaries of the main rivers (Rhine and Meuse) in the Netherlands. The movable barrier was designed to solve the problem of complying with the Delta plan requirement, while, under normal circumstances, keeping the port of Rotterdam open and allowing the outflow of river water. Current dykes in the area offer protection to sea water levels up to 3.6 m above NAP. The Maeslantkering was preferred over increasing the height of the dykes in the whole area by 1.2 m, mainly because it was considered less intrusive, cheaper and technologically more appealing.

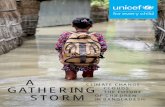

The barrier, shown in Figure 1, consists of two doors, each with a height of 22 m and a length of 210 m. By means of two horizontal steel arms, both comparable in size with the Eiffel tower, they are connected to two ball joints, each anchored in 52 000 tonnes of concrete that is able to withstand a horizontal force of 35 000 tonnes.2 When not in use, the doors are stored in two dry docks in the banks of the Nieuwe Waterweg.

The closing and opening of the barrier are fairly complex processes, which, quite justifiably, have been automated: these automated processes can be tested and are the only means of handling the many events that have to take place on time and in the proper order. The decision to close the barrier is also automated. The so-called BOS (a Dutch acronym for Beslis en Ondersteunend Systeem, which means Decision and Support System) makes the decision, based on the expected sea water level near the barrier, and executes it. Alarming water levels can only be caused by a combination of spring tide and a northwest storm. Closure height has been determined at an expected water level of 3.2 m above NAP (recently changed to 3 m in order to protect an area that was first

420 J. Vrancken, J. van den Berg and M. dos Santos Soares

allowed to be flooded). The BOS is partly new, but partly also relies on the so-called Buiten-BOS (external BOS), software for weather and water-level prediction that was already in existence before the development of BOS.

Figure 1 The Measlant storm surge barrier

The barrier is designed to prevent the inflow of sea water. Failing to close would obviously make the barrier fail its purpose, but a prolonged failure to reopen could also cause floods, due to the accumulation of river water.

The water system in the Rotterdam area is a complex system in which the Nieuwe Waterweg is not the only outlet to the sea. The Maeslantkering is interrelated with two other movable barriers, the Hartel barrier in the Hartel canal and the Haringvliet barrier. These latter two barriers can allow the outflow of river water in case the Maeslantkering fails to open after a closure. This means that the probability of catastrophic flooding, resulting from a failure of one of the three barriers to open, cannot be considered per barrier, but can only be determined by considering the complete system. The three barriers are operated independently, thus considerably reducing the probability of catastrophic flooding by river water due to the failure to open of a barrier. This is a fine example of the importance of fall-back options in system reliability.

The decision to build the Measlantkering was made in 1988. Rijkswaterstaat commissioned a design-and-construct contract to a consortium of private building companies. During the design phase in the mid-1990s, the Delta requirement of not more than one flood in 10 000 years was translated into a rate of failure to close or open of one in a 1000 requests. While considerably better than strictly necessary, this failure rate was considered feasible at the time. Later it became clear that this judgement was based on insufficient grounds (van Akkeren, 2006).

The failure rate of one in a 1000 closure requests led to the conclusion that human operators would be too unreliable. Therefore the decision was made that the barrier should operate completely automatically. This decision, too, turned out to be insufficiently justified.

Human factors in system reliability 421

The BOS subsystem was separately commissioned to a software contractor. This

contractor introduced the Safety Integrity standard IEC 61508 (IEC, 1996) and thus introduced formal methods in the design and construction of the software, because of recommendations in the IEC standard. Nevertheless, as pointed out above, part of the BOS system consisted of existing systems that were not built by means of formal methods and that were far too complex and too specialised to rebuild within the given time span and budget.

The history of the Maeslantkering shows that it is an interesting case in system reliability. Extensive effort was put, and is still being put, into improving and estimating its reliability. It is also a rather extreme case: it is a unique specimen of a kind of system that is unique in the world. It cannot be tested under the conditions for which it was designed (water levels of above 3.6 m), but its failure under such conditions would be truly catastrophic. The difference with, for instance, the automotive industry, in which reliability also plays a key role, but in which systems are produced and used en masse, could not be bigger.

Such extreme cases have the advantage of enabling us to put a magnifying glass over certain aspects of the reliability problem, thus giving us better insight into the limits of the methods applied.

In the next sections, we will further detail and analyse the history of the Maeslantkering. From this analysis a number of conclusions can be drawn regarding the reliability of software systems and the human factor in reliability. Finally, some recommendations will be given for the Maeslantkering.

2 Improving and assessing software system reliability

This article focuses on the software part of the Maeslantkering, because that is the part which turns out to be the most problematic in the various documents about the reliability assessment of the barrier (Arcadis, 2005; Brinkman and Schüller, 2003). Moreover, the software part, and especially the BOS system, is the part most closely related to the human factor in the system. BOS was intended to replace humans in the operation of the barrier.

In the life cycle of software, a number of phases can be distinguished, each of which has its own approach to the reliability problem. We distinguish a design phase, a construction phase, a testing phase and a simultaneous use and maintenance phase. Practice shows, time and again, that the maintenance phase is gravely underestimated and the Maeslantkering is no exception (van Akkeren, 2006). In the evolutionary approach to software development, a system goes through all phases repeatedly and grows incrementally, not only in functionality but also in other aspects such as reliability. With virtually no exceptions, this pattern applies to all technical artefacts, even if, originally, the project was conceived as a waterfall approach. That is why technical artefacts always have version numbers. The only difference between the waterfall approach and the evolutionary approach is that the latter is consciously evolutionary and special attention is paid to the design of the most suitable development process (things like step-size, size of the first version, etc.).

422 J. Vrancken, J. van den Berg and M. dos Santos Soares

2.1 Formal methods

In the design phase, formal methods can play an important role. Formal methods for software development are in essence mathematical methods. They consist of languages that have their meaning expressed in mathematics and that allow statements about properties of specifications, expressed in such a language, to be proven with mathematical rigour. The software development in the BOS was a combination of formal and informal methods. The formal methods Z and Promela (Spivey, 1992; Holzman, 1997) were mainly applied to the technical design. The coding, in a safe subset of C++, and code testing were not formal but supported by formal means (Tretmans et al., 2001).

Formal methods are no exception to the rule that a method is most effective when applied with the right expectations and with full awareness of the method’s limitations (Bowen and Hinchey, 1995; Hall, 1990). Formal methods have been shown to be very effective in preventing a certain type of error, in BOS and in numerous other projects. They are usually an intermediate step between an informal user specification or a requirements document and the realisation of a system in a programming language. Formal methods can strongly reduce the errors that can occur in the translation from initial document to executable source code. In addition, there are methods that apply to the source code and that allow one to prove the correctness of those parts whose functionality can be expressed in a formal way. What formal methods cannot do is to make sure that the initial document does not contain errors, serious omissions or features that later on turn out to be undesirable. The reason is that we have no formal specification of the real world, which makes the initial document formally unverifiable. Moreover, translating the result of a formal verification into a statement about the correct operation of the software, in reality, requires making many assumptions about the environment in which the software runs and the behaviour of the environment of the system. For instance, the correct behaviour of the software is also dependent on the correctness of the compiler and the platform on which the software runs, both of which usually have not been verified formally. More importantly, the model of the system’s environment is inevitably a simplification of reality: “all models are wrong, some are useful for a while” (Box, 1979). In the case of the Maeslantkering, for instance, the behaviour of the water was at first oversimplified, ignoring an important effect called seiche (de Jong, 2004). A seiche is a standing wave in the estuary, which can have the effect that the water level at the inland side of the barrier rises 2 m higher than the seaside level, which may result in the barrier’s doors being drawn out of their ball joints. The problem was already detected in the development phase in 1997, but its solution caused a year of delay in the delivery of the barrier (van Akkeren, 2006). There is no way to prove that there are no other unknown problems in the water’s behaviour.

Other drawbacks of formal methods are that their application is usually heavily time consuming and dependent on scarce knowledge. There are not that many people who have adequate experience with the application of formal methods in practice. Moreover, it makes little sense to apply formal methods in the initial design and construction, if they are not applied throughout the whole life cycle of a system. The result of a period of informal maintenance of a formally designed system would be a false impression of high reliability.

There is also a negative effect of formal methods on a system’s flexibility, but in the case of the barrier, reliability is far more important than flexibility, so this is not a real disadvantage in this specific case.

Human factors in system reliability 423

Formal methods are indispensable to achieve a required reliability level, yet cannot

guarantee the system’s correct behaviour in reality, due to the fact that the starting document is necessarily not formal or not formally verifiable and due to the many assumptions that have to be made in translating the results of formal verifications into statements about correct behaviour in practice. Actually, it is even impossible to make a complete list of all assumptions. That too would require a correct and complete model of the system’s environment. In practice, many assumptions will even remain implicit.

2.2 Testing

Testing is indispensable, as every engineer will confirm. The opinion among software engineers that formal methods could eliminate the need for testing was abandoned long ago (Bowen and Hinchey, 1995; Hall, 1990). Actually, formal methods and testing can be considered complementary. A test can vary in the degree to which it approaches real usage, but in general a test applies to far more things than a formal method. It makes far fewer assumptions, but a test is only a single case in a large space of different states in which a system and its environment can be. Extensive testing can contribute considerably to the confidence in a system, but drawing conclusions about a system’s reliability under operation again depends on assumptions, especially regarding the degree to which testing circumstances and operational circumstances do not differ in any relevant way.

Given all the assumptions that have to be made, both in the case of formal methods as in the case of testing, it can be concluded that real use is crucial in order to gain confidence in a system. This is the point were the Maeslantkering is obviously an extreme case. The real circumstances for which it was designed are extremely rare, otherwise the city of Rotterdam would have been swallowed by the waves long ago. The barrier cannot possibly be tested in real life for its behaviour at the water levels and under the weather conditions for which it is really needed. Any statement about its proper functioning under those circumstances is an extrapolation from milder circumstances.

2.3 The probabilistic approach to reliability

The probabilistic approach to reliability means that the desired reliability is expressed as a numerical probability (‘at most one failure in 1000 trials’ or ‘at most one flood in 10 000 years’). This is the approach applied in the case of the Maeslantkering. The required reliability was expressed as a failure probability, and subsequently, the evaluation of the reliability was in essence an attempt to determine this failure probability.

Probabilities are the common way to express reliability, so there seems to be no better way. Yet one should be well aware, again, of the limitations of the probabilistic approach. The limitations can be summarised in one statement: real things do not have probabilities, only models do. The difficult part when drawing conclusions about real things from probabilistic statements, is the question to what extent the model involved reflects the real thing in all relevant aspects. The big difference between models and real things is that models do not have parts that are not made explicit. Real things do. A model is completely known, real things are not. Models do not change without notice, real things do.

424 J. Vrancken, J. van den Berg and M. dos Santos Soares

Even if the probabilities are determined from experiments with the real system, there is still a factor called ‘operational profile’, i.e., the circumstances in which the system operates. If the operational profile changes during the experiments, something that may very well happen without the experimenters knowing it, the probabilistic results become disputable. But even if the operational profile were constant during the experiments, it may not be so in the future. In the case of the Maeslantkering, the probabilities that could be determined from real use (which has not yet been done to date) would not cover the operational profile of water levels above 3.6 m, owing to the rareness of this condition. Such experiments would be useful (if it fails under normal circumstances, it would probably not do better under heavy circumstances), but certainly not decisive.

The probabilistic approach points at an important method to make systems reliable: fall-back options. If several mechanisms are in place for the same function, and if these systems operate independently, which should be carefully considered, the failure rates (expressed as the failure probability per occasion that the function is needed) can be multiplied. That is a very powerful means to obtain high reliability: three mediocre mechanisms for the same function, each with failure rate of 1 in 10, result in a high reliability of 1 in 1000.

3 Eight years of maintenance

Not long after the delivery of the barrier, a number of serious problems surfaced, in itself perfectly normal in a system as innovative and complex as the barrier, but in conspicuous contrast with the reliability claims made at the time of its delivery. There were problems with the ball joints and other mechanical and electromechanical parts, and there were also problems with the software, especially with the interfacing between the BOS and the BES (the operational system for closing and opening the barrier). The two parts being made by different parties, using quite different methods and standards, this should not have been a surprise.

The BOS was delivered as a SIL4 certified system. This term was introduced in 1996 by a new standard for reliability in software-intense systems: the standard IEC 61508 (IEC, 1996). This standard distinguishes four levels of reliability: the Safety Integrity Levels SIL1 through 4. Benchmarks for the manpower required to build a system with SIL4 reliability of the size of the BOS indicated that the contractor would have worked a near-miracle for the claim to be justified (Jones, 2000). What probably happened is that the contractor followed the recommendations for software development that are part of each SIL in the standard. Each SIL is expressed as a failure rate (e.g., SIL4 is approximately a failure rate of at most once in 10 000 years). Certification for one of the SILs only means that the recommendations have been followed, not that the system at hand has the prescribed failure rate. There is absolutely no sound justification for the assumption that following the recommendations for each SIL is enough to obtain the corresponding reliability.3

The maintenance phase revealed yet another important problem: after delivery, the system was handed over to the commissioner’s maintenance organisation, which initially was in no way adequately trained for maintaining such a novel system (van Akkeren, 2006). In combination with the many teething problems of the new system, it became

Human factors in system reliability 425

clear that a fundamental rethinking of the system was necessary, in which, among other things, the role of humans would also have to be reconsidered. The software was apparently not as reliable as the SIL4 certificate suggested.

Several evaluations were carried out in the period 2001 through 2005 (Arcadis, 2005; Brinkman and Schüller, 2003). The evaluations brought to light a number of defects of varying degrees of importance, the majority of which could be mended. The evaluations thus resulted in a substantial improvement of the reliability of the barrier. The most elaborate evaluation (Brinkman and Schüller, 2003) also made an assessment of the failure rate and the resulting figure was quite disappointing: the failure rate of the barrier was estimated at 1 in 10, far worse than the initial goal of 1 in 1000. The method applied in the failure rate assessment was questionable in quite a few aspects. For instance, the failure rates of basic components and the probabilities of various kinds of circumstances were little more than rough estimates. A second criticism was that the failure rate was related too much to the original functional specification and not to the prevention of catastrophic flooding. If closing the doors would take 5 min longer than specified, this was considered a failure, while such an event would obviously not cause a flood.

Nevertheless, the report led to a further programme of improvement of the barrier, which currently is still at hand. Most notably, as a result of the report, it was concluded that very low, provable failure rates of software were not feasible, so humans were brought back into the operational process (Schultz van Haegen, 2006).

4 Human factors

During the design, construction and maintenance of the Maeslant barrier, special attention was given to humans in one specific role: that of operator. Humans were excluded from this role because of the initial, extremely low failure rate requirement. Strangely enough, the question of whether it would be possible to meet that requirement without humans was answered affirmatively, obviously on insufficient grounds. The one question that should have been asked, i.e., how can we achieve the best possible failure rate in combining humans and machines, was not asked, nor was the question studied on how the role of humans in the operational process could be made more reliable. As we saw above, fall-back options can achieve excellent failure rates from rather unreliable components, if properly applied.

The maintenance phase made it clear that the barrier was not perfect: it had to be maintained. That pointed at a second role for humans: the maintenance role. We will see below that the two roles are related.

Humans and computers are actually complementary in a number of ways. Humans are slow and unreliable, computers are fast and reliable. Humans can handle the unforeseen, computers are totally incapable of doing that. The role of humans is therefore determined in large part by the degree to which the system and its environment are known and predictable. In the case of the Maeslantkering, the long period for which it was designed (100 years) guarantees many unforeseen events. In addition, the environment, the atmosphere and the water system (the North Sea, Nieuwe Waterweg, Haringvliet, the Rhine and Meuse rivers) are highly complex, if not chaotic, systems, whose behaviour (especially long-term behaviour) is only very partially known, as illustrated by the delay during construction that was caused by the seiche phenomenon;

426 J. Vrancken, J. van den Berg and M. dos Santos Soares

reason enough for a firm role for humans in operating the barrier, even though the humans themselves are one of the most important sources of unforeseen conditions. A maintenance crew has access to all parts of a system. It can leave the system behind in any possible state. In principle, all the confidence built up during years of usage is no longer justified after a maintenance overhaul. Only humans can handle the unforeseen situations caused by humans. Various rumours about maintenance errors with the barrier, whether they are true or not, form evidence that this applies to the barrier as much as to any other system.

There is yet another role for humans in systems such as the Maeslantkering. That role has to do with the policy-makers and authorities responsible to society for the proper functioning of the system. In essence, a statement about reliability is a statement about the future. That means that it cannot be verified and not even falsified (refuted if it is not true). From the point of view of the people directly involved in the construction, maintenance and operation of the barrier, making it reliable in essence means convincing themselves and convincing the authorities responsible. This pattern can be observed in many other situations: the future does not exist; the only thing that matters are the views that people currently have of the future. Making a system reliable means convincing people of its reliability. This applies especially to the probabilistic approach to reliability. It may sound absurd, but we should realise that this is what actually happens. It shows that these authorities have a very important and difficult role. If they are not demanding enough or if they were to stress the wrong aspects, this would seriously endanger the barrier’s reliability. It goes without saying that the authorities responsible cannot be experts in all of the many disciplines involved in the design, construction, operation and maintenance of the barrier. Here too, software, which is the product of a relatively young engineering discipline, stands out as the most problematic area. There are civil engineers and jurists among the pertinent authorities, but still very few, if any, computer scientists or software engineers. It means they have to rely on experts’ advice. This is where all the well-known problems of consulting experts enter the reliability problem: not all people claiming to be experts do so justifiably; experts do not always agree; experts are fallible; experts are themselves in a learning process (what they say today is not necessarily the same as what they will say tomorrow); and experts have interests, like all other human beings. There is no easy solution here. The only two things we can suggest to counter this problem are, first, that the commissioning organisation, Rijkswaterstaat in this case, must make sure that it is as competent as possible, and, second, that a very open process of consulting experts from various backgrounds, academia as well from private corporations, national as well as international, should take place and should take place continuously. The first item, the commissioning organisation’s competence, is currently a topical discussion in national politics. The Raad van State, the highest advisory body of the Dutch government, recently stated that the level of specialist knowledge is seriously decreasing within the various governmental organisations (Raad van State, 2006; Tjeenk Willink, 2006). They mentioned only one example by name: Rijkswaterstaat. When the commissioning organisation is insufficiently competent, the second item, the open consultation process, cannot be properly executed.

Human factors in system reliability 427

5 Conclusions and recommendations

The above analysis of the history of the Maeslant barrier can be summarised in the following conclusions and recommendations.

5.1 Reliability is a process

There is a famous maxim about security: “security is a process, not a product” (Schneier, 2000). The primary conclusion from the history of the Maeslantkering is that this maxim applies equally well to reliability. Reliability is obtained in a never-ending process of improvement. The most important distinction between security and reliability is that security also deals with malice, i.e., intentional actions by humans to thwart a system’s proper functioning. Reliability usually only deals with defects by coincidental circumstances, not purposeful actions by humans. Nevertheless, reliability is not necessarily easier than security. Reliability requirements are usually much more stringent than security requirements (SIL requirements would be ridiculously infeasible for security aspects) and inanimate objects can still be very resourceful in making a system deviate from its intended behaviour (“Murphy was an optimist” – O’Toole).4

Several causes contribute to making reliability a continuous process. Not only do systems and circumstances (or operational profiles) change in unforeseen ways, one can also observe that requirements change, for instance by the accumulation of experience with the system, and especially that our knowledge of building reliable systems and of assessing reliability increases.

5.2 All methods have limitations

A method will be most effective if the user of the method is thoroughly aware of the method’s limitations. In the case of the Maeslantkering, we observe that all the types of methods applied (probabilistic failure rates, formal software development methods, testing, real use, systematic fault analysis) have limitations of a common origin: our incomplete knowledge of a system and its environment, resulting in many unconscious, implicit assumptions. Nevertheless, the methods applied are very valuable or even necessary, otherwise the system would most undoubtedly fail through simple causes that could easily have been eliminated.

5.3 Humans are needed in operating the barrier

Only systems that operate in predictable, well-known circumstances, that have been tested extensively and that have been massively used, that do not need maintenance, and that have no catastrophic consequences in the case of failure may operate without humans. For the Maeslant barrier, none of these requirements hold.

If a system is regularly maintained, this fact alone already implies that a system needs to be operated, or at least supervised, by humans.

428 J. Vrancken, J. van den Berg and M. dos Santos Soares

5.4 The barrier is an extreme case from the point of view of reliability

The operational circumstances for which the barrier was designed occur only very rarely (about once in 70 years). It is therefore not tested or used under these circumstances. This is an essential problem in any solution to extreme circumstances. Dykes have the same problem.

5.5 Recommendations for the maintenance of the barrier

One of the most effective means to improve the barrier’s reliability can be found in fall-back options for all essential functions. If it can be argued that the different mechanisms for the same function operate independently, then their failure rates can be multiplied. Moreover, the method of adding fall-back options can be combined with any other method for improving reliability.

Now that humans are back in the operational process, it should be studied how they can be made most effective, and how the inherent unreliability of humans can be reduced. Fall-back options seem to apply here as well.

Once it is understood that making the barrier reliable is a continuous process, in which specialised knowledge plays a crucial role, the question should be studied how this process can take place most effectively. A very open process of consultation, managed by a competent and knowledgeable organisation, seems to be an important part of the continuous improvement process.

Acknowledgements

We express our gratitude for useful discussions to Koos van Akkeren and Hetty Klavers of Rijkswaterstaat. This research was supported by the Delft Research Centre Next Generation Infrastructures and the Next Generation Infrastructures Foundation.

References Arcadis (2005) Toetsing dijkringverbindende kering 8, Maeslantkering & Europoortkering I,

Rijkswaterstaat Directie Zuid-Holland & Directie Weg-en Waterbouw, 7 December.

Bowen, J. and Hinchey, M. (1995) ‘Seven more myths of formal methods’, IEEE Software, Vol. 12, No. 4, pp.34–41.

Box, G.E.P. (1979) ‘Robustness in the strategy of scientific model building’, in R.L. Launer and G.N. Wilkinson (Eds.) Robustness in Statistics, New York: Academic Press, http://www .anecdote.com.au/archives/2006/01/all_models_are.html.

Brinkman, J.L. and Schüller, J.C.H. (2003) ‘Betrouwbaarheidsanalyse OKE’, Internal report Rijkswaterstaat, Dutch.

De Jong, M.P.C. (2004) ‘Origin and prediction of Seiches in the Rotterdam harbour basins’, PhD thesis, Delft University of Technology.

Hall, A. (1990) ‘Seven myths of formal methods’, IEEE Software, September.

Holzman, G. (1997) ‘The model checker SPIN’, IEEE Transactions on Software Engineering, Vol. 23, No. 5, pp.279–295.

IEC (1996) Safety Related Systems, International Standard IEC 61508, International Electrotechnical Commission, Geneva, Switzerland.

Human factors in system reliability 429

Jones, C. (2000) ‘Software assessments, benchmarks and best practices’, Information Technology

Series, Addison Wesley.

Raad van State (2006) Jaarverslag 2005, www.raadvanstate.nl.

Schneier, B. (2000) ‘Crypto-Gram newsletter’, 15 May, http://www.schneier.com/crypto -gram-0005.html.

Schultz van Haegen, M.H. (2006) State Secretary of the Ministry of Public Works: Letter to Parliament, 20 February, RWS/SDG/NW 2006/332/23875, www.keringhuis.nl.

Spivey, J. (1992) The Z Notation: A Reference Manual, New York: Prentice Hall.

Tjeenk Willink, H. (2006) ‘De overheid is uit de rails gelopen’, NRC Handelsblad, 29 April, p.13.

Tretmans, J., Wijbrans, K. and Chaudron, M. (2001) ‘Software engineering with formal methods: the development of a storm surge barrier control system’, Formal Methods in System Design, Vol. 19, pp.195–215.

Van Akkeren, K. (2006) Interview by Vrancken with Koos van Akkeren on 5 April 2006.

Notes

1 The Delta plan was expressed in a law, De Delta Wet (the Delta Law), published in De Staatscourant, 08 May 1958.

2 Maeslantkering website: http://www.keringhuis.nl/.

3 Bishop, P.G., SILs and Software, http://www.adelard.co.uk/resources/papers/pdf/SCSC _Newsletter_Software_SILs.pdf.

4 O’Toole’s comment on Murphy’s Law: http://userpage.chemie.fu-berlin.de/diverse/murphy/ murphy2.html.