Columbia Digital News System. An environment for briefing and search over multimedia information

Transcript of Columbia Digital News System. An environment for briefing and search over multimedia information

Columbia Digital News SystemAn Environment for Brie�ng and Search over MultimediaInformationAlfred Ahoy, Shih-Fu Chang�, Kathleen McKeowny,Dragomir Radevy, John Smith�, and Kazi ZamanyyDepartment of Computer ScienceColumbia UniversityNew York, NY 10027faho, kathy, radev, [email protected] �Department of Electrical EngineeringandCenter for Telecommunications ResearchColumbia UniversityNew York, NY 10027fsfchang, [email protected] this paper we describe an ongoing researchproject called the Columbia Digital News System. Thegoal of this project is to develop a suite of e�ectiveinteroperable tools with which people can �nd relevantinformation (text, images, video, and structured doc-uments) from distributed sources and track it over aperiod of time. Our initial focus is on the develop-ment of a system with which researchers, journalists,and students can keep track of current news events inspeci�c areas.1 IntroductionOur research focuses on the development of tech-nologies to aid people in �nding and tracking theinformation they need to keep current in their jobsand lives. We are developing a system, the ColumbiaDigital News System (CDNS), that provides up-to-the-minute brie�ngs on news of interest, linking theuser into an integrated collection of related multimediadocuments. Depending on the user's pro�le or query,events can be tracked over time with a summary givenof the most recent developments. A representative setof images or videos can be incorporated into the sum-mary. The user can follow up with multimedia queriesto obtain more details and further information.Our research is directed at producing a collection ofinteroperable multimedia tools with which users canmanage knowledge, search for and track events, andsummarize and present information. Our focus is onthe development of e�cient algorithms, modular com-ponents, and e�ective presentation methods. In thispaper we outline our system architecture and describethe salient components of our system.2 System ArchitectureThe architecture of the system that we are devel-oping is shown in Figure 1. Using a combination of

textual keywords and visual features, the user speci-�es the type of information he or she is interested intracking. The request may be stored in a user pro�lefor long-term gathering and tracking of information.The event-searching and tracking module looks formatching information on the requested topics, usingpattern-matching techniques over multiple represen-tations (e.g., text, metadata) and multimedia searchtechniques to �nd relevant text, images and multime-dia documents. The documents thus retrieved can bethen sent to the summarizer and the results can thenbe presented and viewed.The summary information extracted from the doc-uments can be stored in database templates. In addi-tion, the user may search online for related databases(e.g., in the current news domain, the CIA World FactBook[1] contains relevant information) from which ad-ditional information can be gleaned and merged withinformation from the set of articles for the summary.At the same time, representative images and videos il-lustrating new information or common content can beselected to be included in the summary. On present-ing the summary and related images or video, the usercan request to see additional information or speci�csources of interest. This is accomplished through aviewing and manipulation component that may rein-voke multimedia search using image and/or textualfeatures.Our system also contains tools for knowledge man-agement. The novel aspect of these tools is their abil-ity to collect, categorize, and classify image and videoinformation.Several screens from a scenario of interaction withour existing system are shown below in Figure 2. Afterproviding a few keywords specifying the area of inter-est, the user can produce a summary of several arti-cles on the World Trade Center bombing, including

Knowledge Management

Collection and Categorization

Multimedia SearchUserProfile

SummarizerEventSearching&Tracking

Viewing&Manipula-tion

TextualKeys

VisualFeatures

Users

Relevance Feedback

Figure 1: System Architecturetwo representative images revealing what maps of thearea and what photographs of the event are available.Currently, summarization is done using templates rep-resenting information extracted from the news articleusing a system developed for the DARPA message un-derstanding conference (MUC) [2]. Since the MUCsystems only handle South American terrorist news,we also store some hand-constructed templates in thesame format for testing purposes. Following receipt ofthe summary, the user then can search for additionalimages, here choosing to search for images similar tothe map. The image/video search engine uses content-based techniques to retrieve images with similar visualcontent (e.g., color and spatial structure in this case).This results in a set of 30 most relevant images (notshown) retrieved from our catalog of approximately48,000 images. The user then re�nes the image searchby adding in textual keywords to specify the topic,resulting in the 3 images shown in the second screenof Figure 2. Finally, the user can request additionaltextual documents relating to one of the retrieved im-ages, receiving again a summary of the new documentsretrieved.Our current implementation is an early prototypeillustrating our vision and therefore many open re-search issues remain. For example, we need to expandthe kinds of media handled as well as interaction be-tween media. By adding multimedia documents (newsplus images), we can begin using the text within adocument to aid in image searches. We can also useresults from automated image subject classi�cation toprovide multimedia illustration in text summary. Inorder to facilitate scaling the system, our work in-cludes development of tools for building resources thatcan be used for searching, tracking, and summariza-tion. We are developing tools to annotate and catalogimages using image features and associated text, toolsto extract lexical resources from on-line corpora [3],tools to scan corpora to identify patterns in text notfound by information extraction (e.g., role of a per-son), and are collecting domain ontologies and tools,such as the hierarchies of locations and companies de-veloped for use in the DARPA (Defense Advanced Re-search Projects Agency) TIPSTER e�ort (e.g., [4]).3 Knowledge ManagementThe Columbia Digital News System contains anumber of tools to help collect, categorize, and clas-sify information. In order to track events of interest,CDNS must be able to identify new information thatis related to the user's interests. We have tools for au-tomatic information categorization to aid in this task

Figure 2: (a) Illustrated summary generated by prototype (b) Results of image search using both visual featuresand textual keysas well as in improving the accuracy of any follow-upsearch and exploration that the user may wish to carryout. When a new image arrives, it can be automati-cally catalogued in the image taxonomy and the usercan be noti�ed if its category matches the user pro�le.In addition to including an incoming image tothe growing taxonomy, our work also features semi-automatic development of the image taxonomy usingboth image and textual features, active search of theWorld Wide Web to categorize images as part of ourlocal collection, and use of the image taxonomy to im-prove the accuracy of follow-up search. While othergroups have used image collections that were manu-ally annotated with textual key words [5], our workfocuses on semi-automatic cataloging through incre-mental classi�cation into and extension of the taxon-omy. This classi�cation can then be subsequently usedas an additional constraint on search to improve ac-curacy. In this section, we describe our current proto-types for image and video categorization.3.1 Cataloging Images and VideoAiming at a truly functional image/video searchengine for online information, we have developed atool called WebSeek [6] that issues a series of soft-ware agents (called spiders) to traverse the Web, au-tomatically detect visual information, and collect itfor automated cataloging and indexing. Taxonomiesof knowledge are very useful for organizing, searching,and navigating through large collections of informa-tion. However, existing taxonomies are not well suitedfor handling dynamic online visual information such asimages on the Web. We have developed a new work-ing taxonomy for organizing image and video subjectclasses. Our WWW image/video cataloging system isunique in that it integrates both the visual featuresand text keys in visual material detection and clas-si�cation. There are a few independently developedsystems with similar goals [7, 5], but they do not usemultimedia features to construct a comprehensive tax-onomy like ours.

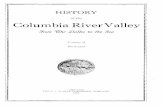

Our system uses both textual information and vi-sual features to derive image/video type and subjectclasses. Type information indicates di�erent formsof object content, such as \gif" and \jpeg" for im-ages; \mpeg", \qt", and \avi" for video; and \htm"for html documents. Subject classes represent the se-mantic content of an image or video. They providea valuable interpretation of the visual information,such as \astronomy/planets" and \sports/soccer". Wehave developed fully and semi-automatic proceduresfor type/subject mapping.We use two methods for image type mapping. The�rst method examines the type of the hyperlink andthe �lename extensions of the URLs. Mappings be-tween �lename extensions and object type are given bythe MIME content type labels. This method providesreliable mapping when correct �lename extensions aregiven. The second method is to use the visual fea-tures of the images/video for type assessment. Thisautomated procedure involves training on samples ofthe color histograms of images and videos. Fisher dis-criminant analysis is used to construct uncorrelatedlinear weightings of the color histograms that providefor maximum separation between training classes.Mapping subject classes involves building an im-age/video subject taxonomy. Figure 3 shows a por-tion of a working taxonomy. It is built incrementallythrough the process of inspecting the key-terms associ-ated with the visual information. For example, whennew, descriptive terms such as \basketball" are au-tomatically detected, a corresponding subject class ismanually added to the taxonomy if it does not alreadyexist, i.e., \sports/basketball".Subject mapping for new image and video infor-mation involves a sequence of steps. First, key-termsare extracted automatically by examining textual in-formation from hyperlinks (URLs and hyperlink tag)and directory names. Then, mapping between key-terms and the corresponding subject classes is doneby using a key-term dictionary, which is derived ina semi-automatic process. Based on the term his-

animals art

astronomy

entertainment

food

plants

science

travel

dogs

cats

whales

paintings

sketching

sculpture

comets

planets

nasa

humor

music

movies

television fruits

vegetables drinks

cactus

flowers

asia

europe

biology

sports

chemistry

baseball

soccer

lions

tigers da vinci

renoir van gogh france

germanyearth

jupiter

saturn

cartoons

pop

rock

programs

apples

bananas

roses

sunflowers

neurons

proteins

paris

berlinmoon

elvis

beatles

friends

seinfeld

star trekmona lisa Figure 3: Image taxonomytogram, terms with high frequency are presented formanual assessment. Terms are grouped into \descrip-tive" and \non-descriptive" classes. Only descriptiveterms, such as \aircraft" and \texture" are added tothe key-term dictionary. New images and videos asso-ciated with key-terms in the dictionary are automati-cally mapped to the corresponding subject classes.3.2 Current DirectionsIn the area of image/video cataloging, currently weuse only limited textual information from URLs, di-rectory, and hyperlink tags. Visual features are usedin automated type mapping, but not in subject map-ping. We are investigating new techniques using so-phisticated combination of visual features and textfeatures. In [8], we used feature clustering to gener-ate a hierarchical view of a series of video scenes andto detect video scenes with speci�c semantic content(e.g., anchorperson scenes). Several clustering tech-niques, such as self-organization maps, isodata, andk-mean, have been applied over various visual features(color, texture, and motion). We are augmenting ourprior work with two new approaches. First, the work-ing image taxonomy will be used to provide initialclusters of visual features. Consistency relations overvarious features within each cluster will be measuredto assess the association strength of visual featuresand the semantic classes. Best representative featuresfor di�erent subject classes will be identi�ed througha learning process [9]. Mapping of feature clustersto semantic objects will be derived through trainingand learning. For example, detection of salient ob-jects (people, animals, logos, skylines) and activitiesmay be achieved by optimal selection of features andtheir dynamic clustering based on the training dataand user interaction.While we have achieved remarkably good resultswith the techniques described here, accuracy of clas-si�cation using textual keys depends on the qualityof the URLs, directory names, and type extensionsassociated with the images. We are currently inves-

tigating how best to use text associated with imagesin multimedia documents or in related web pages toidentify good textual keys. We are evaluating the useof statistical natural language tools we have developedfor identifying key phrases in a textual documents, in-cluding tools for extracting technical terms [10], for ex-tracting commonly occurring collocations [11, 12], andfor identifying semantically related groups of words[13]. By extracting phrases from texts that repeatedlyoccur with the same image, we hope to �nd terms thatreliably provide information about image topics.4 Searching and TrackingTwo signi�cant features of CDNS are its facilitiesfor searching for visual and textual information andits capabilities for tracking changes and related in-formation. The multimedia search facility in CDNSallows users to �nd speci�c information by specifyingquery keys including both textual and visual proper-ties. Tracking modules allow a user to produce aninitial set of multimedia information relevant to hisor her interest. The summarizer can then be invokedto produce brie�ngs, possibly containing representa-tive images for illustration. Scanning these multime-dia brie�ngs, users can examine the source documentsand pursue an active process of in-depth informationexploration.4.1 Content-Based Visual Query andSearchContent-based visual query (CBVQ) techniquesprovide for the automated assessment of salient visualfeatures such as color, texture, shape, motion, spa-tial and temporal information contained within visualscenes [14]. By computing the similarities between im-ages and videos using these extracted visual features,new powerful functionalities are added to the system:� query based on features of visual information� classi�cation of images and video into meaningfulsemantic classes

� browsing and navigation by content through im-age and video archives [15]� automated grouping of visual scenes into visuallyhomogeneous clusters [8]Much work in computer vision and pattern recog-nition has dealt with related problems, such as objectsegmentation and recognition. Some techniques aresuited for generalization to tasks for visual query, suchas texture analysis, shape comparison, and face recog-nition. However, CBVQ has new characteristics andrequirements. Content analysis and feature extractionneed to be fast for real-time database processing. Mul-tiple features, including those from other media (e.g.,text, audio), should be explored for object detectionand indexing. Relevance feedback from user interac-tion is useful for adaptively choosing optimal featuresfor di�erent domains.With these in mind, we are focusing on the follow-ing fundamental research issues in this area:� fully automated extraction of visual features fromboth compressed and uncompressed images� e�ective functions for measuring visual contentsimilarity� e�cient indexing data structure enhancing fastfeature matching� fusion of multiple features and support of spatialqueries� linking low-level visual features to high-level se-mantics through intelligent clustering and learn-ingOur unique approach to processing compressed im-ages is worth more extensive discussion. Due totheir huge size, images and video are typically storedin compressed forms. A unique feature of our ap-proach is the use of compressed data for feature ex-traction. Given compressed visual information (suchas jpeg, wavelet images, or mpeg video), localizedcolor regions, texture regions, scenes, or video ob-jects are extracted without decoding the compresseddata. Fundamental processes used in typical compres-sion techniques, such as spatial-frequency decomposi-tion for still image compression and motion analysisused in video compression, provide very useful cluesfor extracting visual features directly from the com-pressed images and video. Content-based queries areperformed based on the extracted features. Uncom-pressed visual information is needed for display of �-nal selected images or video only. The compressed-domain approach provides great bene�ts in reducingthe resource requirements in computation, storage,and communication bandwidth. Data requirementsare usually reduced by orders of magnitude (e.g., 10times reduction in jpeg, 30 times reduction in mpeg).Required space and computation for decoding can bereduced as well.Various visual similarity measurements have beeninvestigated, including quadratic correlation distance

and intersection of color histograms [14, 16], Ma-halanobis distance of transformed vectors of texture[17, 18], and distance of absolute spatial orientations[19, 20]. Spatial indexing methods have been used toavoid exhaustive search of the entire collection andcomputing distance of each feature of every image inthe collection [21]. Initial work has been reportedfor joint CBVQ search and spatial query [22]. Wehave developed a powerful technique, binary featuremap, in combining feature representation and index-ing. Color and texture image regions are representedby a uni�ed binary vector. E�cient indexing meth-ods (similar to �le inversion) and fast distance com-parison can be derived with binary feature vector togreatly reduce computation. We are developing tech-niques to combine this approach with spatial indexingstructures like modi�ed 2D strings and quadtrees forsupporting fast processing of visual query includingboth localized content and spatial orientation.Figure 4 shows the system architecture of a CBVQsystem. Images stored in the archive are processedwith automated content analysis tools to extract theprominent image regions with coherent features. Theinput query (either drawing or example image) is pro-cessed on the y to obtain descriptions of its promi-nent regions. The associated visual features and theirspatial information are compared against the image re-gions and features in the archive to �nd the matchedresults.We have developed e�cient algorithms and sev-eral CBVQ prototypes. Our early tool, VisualSEEk[15], is a fully automated image query system whichsupports both localized content query and spatialquery. It allows users to specify visual features usinga WWW/JAVA graphic interface. Users may specifyarbitrarily-shaped regions with di�erent features (e.g.,color and texture) and their spatial relationship. Im-ages matching the speci�ed content are returned tousers for viewing or further manipulation. The JAVAuser interface for VisualSEEK is shown in Figure 5.An example of visual query using VisualSEEK isas follows. A user wants to �nd all images containingsunsets in the archive. By specifying a golden yellowcircle in the foreground and a light brown rectangle inthe background, he �nds several sunset images withinjust seconds. The matches in terms of color regionsand their spatial layout can be exact or best. Fromthe returned images, he may choose one or more andask the system to return other images similar to theselected one(s). Through multiple iterations of thesetwo types of queries (query by example vs. query bysketch), the user can navigate through the entire col-lection. Relevance feedback is provided through theselection of relevant images in the returned batch.In a follow-up more advanced CBVQ tool calledWebSEEk, we have integrated the search methods toencompass both visual features and text keys. Tosearch for images and videos, the user issues a queryby entering terms or selecting subjects directly. Thetool then extracts matching items from the catalog.The overall search process and model for user-interaction is depicted in Figure 6. As illustrated, aquery for images and videos produces a search results

Attributes

VisualFeatures/Objects

Indexing

QueryServer

Search byExamples

FeatureExtraction

Image/VideoArchive

MetaData

Image/VideoServer

Annotation

InformationExtractor

CBVQ Interface

ReducedRepresentationViewer

Text

Visual FeatureSpec. GUI

InputImages/

Off-line

On-line

Subject/TopicClassifier

Text/Summaries

Video

Figure 4: CBVQ architecturelist, list A, which is presented to the user. For exam-ple, Figure 7(a) illustrates the search results list for aquery for images and videos related to \nature", thatis, A = Query(SUBJECT = \nature"). The user maymanipulate, search or view A.After possibly searching and/or viewing the searchresults list, the user can feed back to the manipula-tion module the list as another list C, as illustratedin Figure 6. The user manipulates C by adding or re-moving records. This is done by issuing a new querythat creates a second, intermediate list from which theuser can generate a new search results listB using var-ious list-manipulation operations, such as union andintersection.The user may browse and search the list B usingboth content-based and text-based tools. In the caseof content-based searching, the output is a list C,where C � B gives an ordered subset of B, and Cis ordered by highest similarity to the user's selecteditem. In the current system, list C is truncated toN = 60 records, where N may be adjusted by theuser.For example, C = B ' Bsel,where ' means visual similarity, ranks list B in or-der of highest similarity to a selected item from B.The following content-based visual queryC = Query(SUBJECT = \nature") 'Bsel(\mountain scene image");ranks the \nature" images and videos in order of high-est visual similarity to the selected \mountain sceneimage." Alternatively, the user can select one of theitems in the current search results list B to searchthe entire catalog for similar items. For example,C = A ' Bsel ranks list A, where A is the full cata-log, in order of highest visual similarity to the selecteditem fromB. In the example illustrated in Figure 7(b),the query C = A ' Bsel(\red race car"), retrieves

the images and videos from the full catalog that aremost similar to the selected image of a \red race car."4.2 Tracking changesChanges and follow-ons to a document may be ofeven more interest than the original document it-self. A news story, for example, may have subse-quent follow-up stories containing additional informa-tion (e.g., suspect was now caught) or corrections toprevious stories (e.g., additional victims were found).Ideally, the user would like to track the changes inan ongoing story and have the results reported in ameaningful and understandable way.Our approach to this problem is to develop toolsthat identify and track changes in multimedia docu-ments. The changes can then be fed into the summa-rization modules for presentation to the user. Sinceour current application is journalism, we are inves-tigating di�erence measures that work well on newsstories. A number of statistical and structural dif-ferencing approaches have been recently reported, sowe are interested in evaluating the relative e�cacy ofthese measures on news stories. We have developed aWebDi� tool that uses combined statistical and struc-tural techniques to measure and display the di�erencesamong Web documents.We use statistical natural-language-processingtechniques to focus on semantic di�erences betweentwo documents. In information retrieval variousvector-based techniques have been developed to esti-mate the similarity of the content of documents. Sincenews stories are typically shorter in length and we donot wish to go through the extensive computations re-quired for training, we �nd simpler metrics preferable.We characterize a document by its content words, thatis, by its nouns, adjectives, and verbs; other parts ofspeech, such as articles, adverbs, and prepositions, areignored. We use a part-of-speech tagger to identify thecontent words in the documents under consideration.The statistical metrics we currently use are based onthe number of content words common to the docu-

Figure 5: VisualSEEk user interface: (a) Users specify color regions to �nd sunsets images (b) ResultsUser

User

ViewingRecord

Extraction Manipulation

Search/Ranking

User

A

C

B C

WebSEEkcatalogFigure 6: Search, retrieval and search results manipulation processes.ments.For structural di�erences, we are building on the re-cent work done at Stanford [23] for measuring changedetection in hierarchies of objects. The structuralproperties we employ are based on the grammaticalstructure of an HTML page as well as other kinds ofmetadata associated with a document. Although wedescribe the speci�c problem of dealing with HTMLpages, it should be noted that our techniques are ap-plicable to other domains and formats as well.Our approach consists of making use of the inher-ent hierarchical structure of an HTML document. Weparse the HTML documents and construct a formalinternal representation for each document. This rep-resentation is an annotated tree consisting of nodes ofdi�erent types including lists (both ordered and un-ordered), text strings, and anchors. We then �nd theminimal sequence of transformations required to mapone tree into another. Typical transformations are in-sertions, deletions, and updates of nodes. Often, there

are restrictions on some of the transformations { forexample, it may not be possible to change one type ofnode to another. Once we have the minimal sequenceof transformations, the di�erences between web pagescan be highlighted in a variety of ways. One approachis to display the changes at the level of the source webpages with insertions, deletions, and changes repre-sented in di�erent colors. Alternative methods includeusing appropriate symbols to mark up the changes inthe original document.4.3 Current DirectionsOur di�erencing algorithms are based on dynamicprogramming techniques, which allow various kinds oftransformations to be weighted di�erently. Our goal isto �nd the appropriate weights for tracking meaningfuldi�erences in news stories. The research challenge is to�nd metrics that work well on documents containingimages as well as text.We are also advancing content-based visual query

(a) (b)Figure 7: (a) Search results for SUBJECT = \nature", (b) content-based visual query results forimages/videos ' \red race car".by developing e�ective query interfaces that support exible combinations of visual features. For example,we are integrating image features (e.g., color, texture,and spatial relationships) with video features (e.g.,motion, trajectory, and temporal relationships). In fu-ture applications, we can link our image search enginesto video browsing/editing systems, which we believewill result in a more powerful environment for visualinformation access and manipulation.5 SummarizationThe goal of summarization within CDNS is to briefthe user on information within the documents re-trieved by tracking. In many cases, the summary mayprovide enough information for the user to skip read-ing the original document. In others, the user maywant to check the original documents to verify infor-mation contained within the summary, to follow up onan item of interest, or to resolve con icting informa-tion between sources. In a user focus group, journal-ists indicated that the summary can provide adequateinformation to determine reliability of the informationpresented and whether it is worth following up on theoriginal sources.Summary generation is strongly shaped by thecharacteristics of the tracking environment. Trackingof documents on the same event happens over time. Atany particular point in time, the summarization com-ponent will be given a set of documents on the sameevent from a speci�c time period and at later points,will receive additional later breaking news on the same

event. To meet the demands of this environment, ourwork on summarization includes the following key fea-tures:� Summaries are generated over multiple articles,merging information from all relevant sources intoa concise statement of the facts. This is in con-trast to most previous work that summarizes sin-gle articles [24, 25, 26, 27].� Summaries must identify how perception of theevent changes over time, distinguishing betweenaccounts of the event and the event itself [28].� Given access to live news, the summarizer mustprovide an update since the last generated sum-mary, identifying new information and linking itspresentation to earlier summaries.Given the multimedia nature of the tracking en-vironment, presentation of tracked information mustinclude more than just textual summaries. While ourwork does not include methods for summarizing a setof images returned from search, we do make use of ourcategorization tools to select a representative sampleof retrieved images that are relevant to, or part of, thetextual news. If images are an item of interest, jour-nalists can follow up on the summary by requestingimages similar to one or more of the representativeimages.In the remainder of this section, we present themain phases of summary generation in CDNS. Unlike

previous work on summarization, CDNS does not ex-tract sentences from the input articles to serve as thesummary. Instead, we use natural language processingtechniques to extract structured information from thedocument and language generation techniques [29] tomerge information extracted from di�erent documentsand form the language of the summary. Our researchfocus is on problems in the language generation stage.In the following sections, we describe the tools we havedeveloped and use for information extraction, selec-tion of summary content (conceptual summarization),and determination of summary wording and phrasing(linguistic summarization).5.1 Information ExtractionInput to summarization is the set of articles and im-ages related to a speci�c event found by event tracking.Our approach to information extraction is to use andextend existing natural language tools, allowing us tofocus on research issues in the language generationcomponent. We describe three tools we are using: asystem for extracting information speci�c to terroristevents, a tool for extracting names and their descrip-tions, and tools for connecting into online structureddata.Currently we are using an information extractionsystem for the domain of terrorist news articles devel-oped under the DARPA human language technologyprogram, NYU's Proteus system [2]. Proteus �ltersirrelevant sentences from the input articles, and usesparsing and pattern matching techniques to identifyevent type, location, perpetrator, and victim, amongothers, building a template representation of the eventas shown in Table 1. Coverage of Proteus is limited toSouth American terrorist activities. In order to handlecommon events in current news (e.g., terrorism in theMid East or the US), we are enhancing this approachusing �nite-state techniques [30] for extraction of the�elds in the templates.We have also developed separate pattern match-ing techniques to extract speci�c types of informationfrom input text, building domain knowledge sourcesfor use in text generation. We have built a pro-totype tool, called PROFILE, which uses news cor-pora available on the Internet to identify companyand organization names, person names along with de-scriptions (e.g., South Africa's main black oppositionleader, Mangosuthu Buthelezi or Sinn Fein, the po-litical arm of the Irish Republican Army), building alarge-scale lexicon and knowledge source. Descriptionsof entities are collected over a period of time to ensurelarge lexical coverage. Our database contains auto-matically retrieved descriptions of 2,100 entities at thismoment.Once the descriptions of entities have been ex-tracted, they are converted automatically into Func-tional Descriptions (FD) [31] which are fed to the nat-ural language generation component. FDs generatedin this way can be reused in summaries and can be ma-nipulated using linguistic techniques. Descriptions canbe used to augment the output produced by the infor-mation extraction component in several ways; it canbe used to provide more accurate descriptions of enti-

ties found in the input articles or it can be used to �nddescriptions of entities where the input news does notcontain adequate descriptions. By searching throughpro�les from past news, a description suitable for thesummary can be selected. Using language generationtechniques such as aggregation (e.g., [32, 33, 34]), theexact phrasing of the description may be modi�ed toproduce more concise wordings for the summary. Forexample, two descriptions may merged into a shorterdescription conveying the same meaning (e.g., \presi-dents Clinton and Chirac" instead of \president Clin-ton and president Chirac").In order to tap into nontextual sources, we arebuilding facilitators using KQML as a communica-tion language [35] that monitor on-line databases thatare available through the World-Wide Web, provid-ing an implementation independent view of the source.These will provide noti�cation when new informationarrives and will extract information from remote sitesfor summarization. CDNS currently includes tools foraccess to the CIA Fact Book [1] and the George Masondatabase of terrorist organizations [36], both of whichprovide information relevant to terrorism and currenta�airs in general.5.2 Conceptual SummarizationConceptual summarization requires determiningwhich information from the set of possible extractedinformation to include in the summary, how to com-bine information from multiple sources, and how toorganize it coherently.Our work builds on our prototype system, SUM-MONS [28], which generates summaries of a seriesof news articles using the set of templates producedby DARPA message understanding systems as input(SUMMONS architecture is shown in Figure 10). Out-put is a paragraph describing how perception of anevent changes over time, using multiple points of viewover the same event or series of events. We collecteda corpus of newswire articles, containing threads ofarticles on the same event where later articles oftencontain summaries of earlier articles. Analysis of thecorpus informed development of both conceptual andlinguistic summarization.Conceptual summarization uses planning opera-tors, identi�ed through corpus analysis, to combineinformation from separate templates into a single tem-plate. The summarizer includes seven planning op-erators, which identify di�erences and parallels be-tween templates such as contradictions or agreementbetween sources, addition of new information, or re-�nement of existing information. Each planning op-erator is written as a rule, containing preconditionswhich must be met for the rule to apply, and actionswhich extract or modify information from the inputtemplates creating a new template.Anexample of a planning operator is generalization.It uses an ontology developed for the DARPA infor-mation extraction systems to determine when valuescontained in the same slot of di�erent templates fallunder the same node in the ontology. The precondi-tion looks for attributes of the same name in di�erent

0. MESSAGE: ID TST2-MUC4-00481. MESSAGE: TEMPLATE 12. INCIDENT: DATE 19 APR 893. INCIDENT: LOCATION EL SALVADOR: SAN SALVADOR (CITY)4. INCIDENT: TYPE ATTACK5. INCIDENT: STAGE OF EXECUTION ACCOMPLISHED6. INCIDENT: INSTRUMENT ID -7. INCIDENT: INSTRUMENT TYPE -8. PERP: INCIDENT CATEGORY TERRORIST ACT9. PERP: INDIVIDUAL ID -10. PERP: ORGANIZATION ID "FMLN"11. PERP: ORGANIZATION CONFIDENCE SUSPECTED OR ACCUSED: "FMLN"12. PHYS TGT: ID -13. PHYS TGT: TYPE -14. PHYS TGT: NUMBER -15. PHYS TGT: FOREIGN NATION -16. PHYS TGT: EFFECT OF INCIDENT -17. PHYS TGT: TOTAL NUMBER -18. HUM TGT: NAME "ROBERTO GARCIA ALVARADO"19. HUM TGT: DESCRIPTION "ATTORNEY""ATTORNEY": "ROBERTO GARCIA ALVARADO"20. HUM TGT: TYPE ACTIVE MILITARY: "ATTORNEY"LEGAL OR JUDICIAL: "ROBERTO GARCIA ALVARADO"21. HUM TGT: NUMBER 1: "ATTORNEY"1: "ROBERTO GARCIA ALVARADO"22. HUM TGT: FOREIGN NATION -23. HUM TGT: EFFECT OF INCIDENT DEATH: "ATTORNEY"DEATH: "ROBERTO GARCIA ALVARADO"24. HUM TGT: TOTAL NUMBER -Table 1: Sample template.templates, with values that can be generalized. All�elds that cannot be generalized must not have con- icting values. The action merges the input templatesand creates a new template with the generalized valuesfor the attributes. In the example shown, three gen-eralizations are performed. Bogot�a and Medellin areboth identi�ed as cities in the same country, Colom-bia. In addition, both hijacking and bombing appearin the ontology as hyponyms of terrorist act. Tuesdayand Wednesday are also generalized to week. Thesemore general values are used in a new template. Fig-ure 8 shows excerpts from two templates to which thegeneralization operator can be applied. The combinedtemplate will be used as the input to the summarizerto generate the summary sentence shown in (Figure 9).5.3 Linguistic SummarizationLinguistic summarization is concerned with ex-pressing selected information in the most concise waypossible. Input to linguistic summarization is one ormore templates created by merging the templates foreach article. Each template (both these extracted bythe MUC system and those generated by the summa-rizer using planning operators) will be used as inputto generate a sentence. This requires �rst creatinga case frame representation for each template, select-ing which �eld will be realized as the verb of the sen-tence and mapping other �elds to the arguments of theverb. During this process, words are selected to real-ize the values of the �elds. The resulting case frameis passed through syntactic generation, where a fullsyntactic tree of the sentence is created, grammati-cal constraints are enforced, and morphological agree-ment is carried out. We use the FUF/SURGE pack-age [31, 37], a robust grammar of English (SURGE)along with a uni�cation interpreter (FUF) and some

text manipulation tools written in Perl as the basis forboth word choice and syntactic generation.Since the FUF/SURGE package provided a com-plete domain independent module for syntactic gener-ation, our focus was in creating a lexicon representingconstraints on how words are to be selected. We iden-ti�ed commonly occurring phrases in the corpus thatare used to mark summarized material. For example,if two messages contain information about two sepa-rate bombings, then the summary will refer to a totalof two bombings. In another example, an update sen-tence will be started by later reports show that ....We also are examining using and modifying phras-ing from the original article to aid in developing arobust lexical chooser. By creating functional descrip-tions for extracted phrases, they can be modi�ed andre-used in novel ways in the output.5.4 Current DirectionsOur current system does not yet incorporate in-formation extracted by PROFILE or from remotedatabases. We are developing new planning opera-tors that determine when such information is neededand retrieve it from one of these sources. Such infor-mation can be used to �ll gaps, either in the user'sown knowledge or in the template information pro-vided to the generator. It may also be used to connectpeople, organizations, events or companies mentionedin di�erent articles by providing the relation betweenthem. For example, we might identify the \FMLN"as the \Farabundo Marti National Liberation Front"and include its leader, if the user has not seen any pre-vious summaries about the FMLN. We will use corpusanalysis to identify when and how background infor-mation is provided in a summary. Yet another issuein this task will be how to combine information at the

template �elds message 1 message 2MESSAGE:ID TST-S03-0014 TST-S02-0011SECSCOURCE:SRC UPI ReutersINCIDENT:DATE Tuesday WednesdayINCIDENT:LOC Bogot�a MedellinPERPETRATOR:NUM 1 2INCIDENT:TYPE "bombing" "hijacking"Figure 8: Two templates to be combined using the generalization operator.A total of three terrorist acts were reported in Colombia last week.Figure 9: Generated sentence using the generalization operator.semantic level (e.g., from the templates) with phrasaland lexical information (e.g., collocations, technicalterms). At one level, collocations and technical termscould augment the HTML links to the original articles(see Figure 2) by serving as subject indices. Alterna-tively, full phrases could be used to refer to a slotin a template or a relation between slots. For exam-ple, if one template has \Garcia Alvarado" as victimand another template has \Attorney General" as thevictim, then an extracted phrase such as \Garcia Al-varado, Attorney General" may be used to merge thetemplates and refer to the slots of both.As we move to live news feeds, we must modify oursummarizer so that it can generate a summary thatupdates a user on the latest breaking news, withoutunnecessarily repeating information provided earlier.In order to provide an update, the content plannermust compare new information against its model ofpast sessions with the same user, identifying contra-dictions and additions. The planning operators will beextended to identify links, similarities and di�erencesbetween new incoming information and information inthe discourse model. In addition to combining infor-mation, the planning operators must also be able to�lter and order information according to importance.We will experiment with a variety of techniques forthis task, ranging from repetition across sources, userranking of information importance, and empiricallyderived strategies for ordering information based ona corpus of summaries.6 ConclusionsIn the CDNS project, we have developed tools anda system architecture with which users can manageknowledge, summarize information, and search for andtrack events. Our work on summarization is matureand unique in several aspects | we have shown that itis possible to obtain highly readable summaries frommultiple sources but in relatively restricted domains.In the area of content-based visual query, we havedeveloped e�ective and e�cient techniques for search-ing unconstrained images based on selected visual fea-tures. Our Web image/video search and cataloging en-gine is among the �rst of its kind and demonstrates in-novative ways of integrating multimedia features. Wehave built large-scale online prototypes for evaluation,and we are integrating our content-based search toolswith a real-time Web video editing/browsing system

which can be used as an interactive visual informa-tion system. We have also developed a platform forevaluating methods for measuring di�erences in Webdocuments.As we move to the future, we will focus on enhanc-ing access to live data, integrating multiple media,and incorporating feedback from various users. Oneof our next steps is to track new information from avariety of sources including live feeds. Although thetools that we have developed can facilitate access tolive news sources, CDNS currently runs primarily overstored local collections. One of our current e�orts isto incorporate the individual tools we have developedfor the separate components into an integrated systemthat supports live feeds.A primary focus in CDNS is the integration of mul-tiple media at all levels of the system. In our ini-tial research, we have developed an architecture thatprovides a model for interaction between componentsthat summarize and track text and components thatprimarily search image data. This model providesa paradigm for user interaction with multiple mediaand illustrates how feedback between components en-hances overall interaction with the system. We havebegun integrating textual and image processing tech-niques within individual components of the system aswell, as demonstrated by our use of both textual fea-tures provided by URLs and directory names and im-age features to constrain search over images. We arecontinuing in this direction by developing algorithmsthat utilize more sophisticated natural language pro-cessing techniques for identifying textual features withfurther image processing. We are also exploring meth-ods for incorporating multimedia processing of video,using both image clues and textual clues from a tran-script accompanying the video.Given our focus on the domain of news, journalistsare target users of CDNS. We are interacting with thefaculty and students at the Graduate School of Jour-nalism at Columbia University to collaborate bothin content development and in developing technologythat is tailored to journalists' needs. In an initial fo-cus group, journalists provided feedback on currentsummarization and search techniques, noting that ifa summary clearly attributes information to sources,identi�es contradictions and agreements between un-derlying articles, and points out di�erences between

C O M B I N E R

P A R A G R A P H P L A N N E R

Content Planner

Linguistic Generator

S E N T E N C E G E N E R A T O R

L E X I C A L C H O O S E R

A d d i t i o n

T r e n d

R e f i n e m e n t

A g r e e m e n t

Operators

M U C

T e m p l a t e s

Figure 10: (a) SUMMONS System Architecture.articles being summarized, this will aid the journalistin determining whether the original sources are impor-tant. Our future work includes planned user studiesfor formative and summative evaluation, and using thereal-time video editing/browsing prototype describedabove as a testbed of an interactive visual informa-tion system. These interactions will help us tune oursystem and algorithms to this important domain ofapplication.References[1] CIA. The CIA world factbook. URL: http://www.odci.gov/cia/publications/95fact, 1995.[2] Ralph Grishman, Catherine Macleod, and JohnSterling. New York University: Description ofthe PROTEUS system as used for MUC-4. InProceedings of the Fourth Message UnderstandingConference, June 1992.[3] Dragomir R. Radev and Kathleen R. McKeown.Building a generation knowledge source usinginternet-accessible newswire. In Proceedings ofthe 5th Conference on Applied Natural LanguageProcessing, Washington, DC, April 1997.[4] New Mexico State UniversityCRL. Products and prototypes. URL: http://www.nmsu.edu/o�er.html, 1996.[5] Interpix. Image Surfer. Technical Reporthttp://www.interpix.com/.[6] J. R. Smith and S.-F. Chang. Search-ing for images and videos on the World-Wide Web. submitted to IEEE multime-dia magazine, also cu-ctr technical report 459-96-25, 1996. Demo accessible from URLhttp://www.ctr.columbia.edu/webseek.[7] C. Frankel, M. Swain, and V. Athitsos. Web-Seer: An image search engine for the World WideWeb. Technical Report TR-96-14, University ofChicago, July 1996.

[8] D. Zhong, H. Zhang, and S.-F. Chang. Clusteringmethods for video browsing and annotation. InIS&T/SPIE Symposium on Electronic Imaging:Science and Technology { Storage & Retrieval forImage and Video Databases IV, San Jose, CA,February 1996.[9] T. P. Minka and R. W. Picard. An imagedatabase browser that learns from user interac-tion. Technical Report 365, MIT Media Lab-oratory and Modeling Group Technical Report,1996.[10] John S. Justeson and Slava M. Katz. Technicalterminology: some linguistic properties and analgorithm for identi�cation in text. Natural Lan-guage Engineering, 1:9{27, 1995.[11] Frank Smadja and Kathleen R. McKeown. Usingcollocations for language generation. Computa-tional Intelligence, 7(4), December 1991.[12] Frank Smadja. Retrieving collocations from text:Xtract. Computational Linguistics, 19(1):143{177, March 1993.[13] Vasileios Hatzivassiloglou and Kathleen R. McK-eown. Towards the automatic identi�cation ofadjectival scales: Clustering adjectives accordingto meaning. In Proceedings of the 31st AnnualMeeting of the ACL, pages 172{182, Columbus,Ohio, June 1993. Association for ComputationalLinguistics.[14] W. Niblack, R. Barber, W. Equitz, M. Flick-ner, E. Glasman, D. Petkovic, P. Yanker, andC. Faloutsos. The QBIC project: Querying im-ages by content using color, texture, and shape.In Storage and Retrieval for Image and VideoDatabases, volume SPIE Vol. 1908, February1993.[15] J. R. Smith and S.-F. Chang. VisualSEEk: a fullyautomated content-based image query system. InProc. ACM Intern. Conf. Multimedia, Boston,MA, May 1996. ACM. Demo accessible fromURLhttp://www.ctr.columbia.edu/VisualSEEk.

[16] M. J. Swain and D. H. Ballard. Color index-ing. International Journal of Computer Vision,7:1 1991.[17] F. Liu and R. W. Picard. Periodicity, direc-tionality, and randomness: Wold features for im-age modeling and retrieval. Technical Report320, MIT Media Laboratory and Modeling GroupTechnical Report, 1994.[18] T. Chang and C.-C. Kuo. Texture analysis andclassi�cation with tree-structured wavelet trans-form. IEEE Trans. Image Processing, 3(4), Oc-tober 1993.[19] H. Samet. The quadtree and related hierarchi-cal data structures. ACM Computing Surveys,16(2):187 { 260, 1984.[20] S.-K. Chang, Q. Y. Shi, and C. Y. Yan. Iconicindexing by 2-D strings. IEEE Trans. PatternAnal. Machine Intell., 9(3):413 { 428, May 1987.[21] C. Faloutsos and K.-I. Lin. FastMap: A fast algo-rithm for indexing, data mining and visualizationof traditional and multimedia datasets. In ACMProc. Int. Conf. Manag. Data (SIGMOD), pages163 { 174, 1995.[22] E. G. M. Petrakis and C. Faloutsos. Similaritysearching in large image databases. TechnicalReport 3388, Department of Computer Science,University of Maryland, 1995.[23] S. Chawathe, S. Rajaraman, H. Garcia-Molina,and Widom J. Change detection in hierarchicallystructured information. In Proceedings ACMSIGMOD Symposium. ACM, 1996.[24] Lisa F. Rau, R. Brandow, and K. Mitze. Domain-independent summarization of news. In Summa-rizing Text for Intelligent Communication, pages71{75, Dagstuhl, Germany, 1994.[25] Julian M. Kupiec, Jan Pedersen, and FrancineChen. A trainable document summarizer. In Ed-ward A. Fox, Peter Ingwersen, and Raya Fidel,editors, Proceedings of the 18th Annual Interna-tional ACM SIGIR Conference on Research andDevelopment in Information Retrieval, pages 68{73, Seattle, Washington, July 1995.[26] Chris D. Paice and Paul A. Jones. The iden-ti�cation of important concepts in highly struc-tured technical papers. In Proceedings of the 16thAnnual International ACM SIGIR Conference onResearch and Development in Information Re-trieval, pages 69{78, 1993.[27] Hans P. Luhn. The automatic creation of lit-erature abstracts. IBM Journal, pages 159{165,1958.

[28] Kathleen R. McKeown and Dragomir R. Radev.Generating summaries of multiple news articles.In Edward A. Fox, Peter Ingwersen, and RayaFidel, editors, Proceedings of the 18th Annual In-ternational ACM SIGIR Conference on Researchand Development in Information Retrieval, pages74{82, Seattle, Washington, July 1995.[29] Kathleen R. McKeown. Text Generation: Us-ing Discourse Strategies and Focus Constraints toGenerate Natural Language Text. Studies in Nat-ural Language Processing. Cambridge UniversityPress, Cambridge, England, 1985.[30] Darrin Duford. CREP: a regular expression-matching textual corpus tool. Technical ReportCUCS-005-93, Columbia University, 1993.[31] Michael Elhadad. Using Argumentation to Con-trol Lexical Choice: A Functional Uni�cation Im-plementation. PhD thesis, Department of Com-puter Science, Columbia University, New York,1993.[32] James Shaw. Conciseness through aggregationin text generation. In Proceedings of the 33rdAnnual Meeting of the ACL (Student Session),pages 329{331, Cambridge, Massachusetts, June1995. Association for Computational Linguistics.[33] Kathleen McKeown, Jacques Robin, and KarenKukich. Generating concise natural lan-guage summaries. Information Processing andManagement, Special Issue on Summarization,31(5):703{733, September 1995.[34] Jacques Robin and Kathleen McKeown. Empir-ically designing and evaluating a new revision-based model for summary generation. Arti�cialIntelligence, 85, August 1996. Special Issue onEmpirical Methods.[35] TimFinin, Rich Fritzson, DonMcKay, and RobinMcEntire. KQML | a language and protocolfor knowledge and information exchange. Tech-nical Report CS-94-02, Computer Science De-partment, University of Maryland and ValleyForge Engineering Center, Unisys Corporation,Computer Science Department, University ofMaryland, UMBC, Baltimore, MD 21228, 1994.Accessible from ftp://gopher.cs.umbc.edu/-pub/ARPA/kqml/papers/kbks.ps.[36] The Terrorist Pro�le Weekly. The TPW terroristgroup in-dex. URL: http://www.site.gmu.edu/~cdibona/-grpindex.html, 1996.[37] Jacques Robin. Revision-Based Generation ofNatural Language Summaries Providing Histori-cal Background. PhD thesis, Department of Com-puter Science, Columbia University, New York,1994.