A search engine for QoS-enabled discovery of semantic web services


A Search Engine for QoS-enabled Discovery of Semantic Web Services

Le-Hung Vu, Manfred Hauswirth, Fabio Porto, and Karl Aberer
School of Computer and Communication Sciences, Ecole Polytechnique Federale de Lausanne (EPFL), CH-1015 Lausanne, Switzerland

Abstract: Directory services are a genuine constituent of any distributed architecture: they facilitate binding attributes to names and then querying this information, i.e., announcing and discovering resources. In such contexts, especially in a business environment, quality of service (QoS) and non-functional properties are usually the most important criteria to decide whether a specific resource will be used. To address this problem, we present an approach to the semantic description and discovery of Web services which specifically takes into account their QoS properties. Our solution uses a robust trust and reputation model to provide an accurate picture of the actual QoS to the user. The search engine is based on an algebraic discovery model and uses adaptive query-processing techniques to parallelize expensive operators. Architecturally, the engine can be run as a centralized service for small-scale environments or can be distributed among any number of cooperating registry providers.

Keywords: Semantic Web service; service discovery; QoS; trust; reputation

Reference to this paper should be made as follows: Vu, L.-H., Hauswirth, M., Porto, F., and Aberer, K. (200x) ‘A Search Engine for QoS-enabled Discovery of Semantic Web Services’, Special Issue of the International Journal on Business Process Integration and Management (IJBPIM), Vol. x, No. x, pp.xxx–xxx.

Biographical notes: Le-Hung Vu is a PhD student at the Distributed Information Systems Laboratory of the Swiss Federal Institute of Technology Lausanne (EPFL), Switzerland. His research interests include Semantic Web service discovery, QoS for Web services, and trust and reputation management in decentralized environments.

Manfred Hauswirth is a senior researcher at the Distributed Information Systems Laboratory, EPFL, Switzerland. His main research interests are large-scale distributed systems, peer-to-peer systems, sensor networks, self-organizing systems, Web services, e-commerce systems, and publish/subscribe systems.

Fabio Porto is a senior researcher at the Database Laboratory of EPFL, Switzerland. His research domain includes distributed query processing systems, query optimization, reasoning systems and the Semantic Web.

Karl Aberer is a full professor and head of the Distributed Information Systems Laboratory, EPFL, Switzerland. His main research interests are distributed information management, peer-to-peer computing, Semantic Web and self-organizing information systems.

1 INTRODUCTION

High-quality means for resource discovery are an essential prerequisite for service-oriented architectures. Developers need to be able to discover Web services and other resources for building their distributed applications. In the upcoming Semantic Web, discovery becomes even more important: Assuming that Web services are semantically annotated, the tasks of choreographing and orchestrating semantic Web services are supposed to be delegated to the computer. This not only requires expressive means to describe Web services semantically, which is a major ongoing research effort, for example, WSMO (http://www.wmso.org), but also puts more complex requirements onto discovery.

Most of the ongoing efforts address functional aspects. However, in a business environment, the non-functional and QoS properties, such as price, performance, throughput, reliability, availability, and trust, are usually the first ones to be applied in deciding whether a specific resource will be used; only if they are considered satisfactory are the functional requirements looked at. In this paper we address this problem by providing an approach to semantic description and discovery of Web services which specifically takes into account QoS. Our service discovery framework includes a semantic QoS description model which is suitable for many application domains, is simple yet expressive, and can be used for semantically describing the QoS requirements of the users and the advertised Service Level Agreements (SLAs) of the providers, taking into account the semantic modeling of environmental factors on QoS parameter values and the relationships among them.

In contrast to functional specifications, which can easily be verified, the assessment of non-functional properties and QoS such as performance, throughput, reliability, availability, and trust is much more complicated. Basically, the QoS described in a specification has to be seen as a claim. To assess the QoS actually provided, further evaluation infrastructures need to be in place which enable online monitoring, feedback mechanisms, or a combination of these. Both have to address specific technical intricacies: Online monitoring requires modifications of the participating software components to provide the required data along with proofs. User feedback, on the other hand, must take into account that users may provide false feedback, collude to raise or lower QoS properties, or try other attacks to get wrong data into the feedback system. This requires a carefully devised model to filter out wrong data, but requires only minimal changes to the existing infrastructures. In the approach presented in this paper we include a feedback mechanism which is based on user feedback and feedback from (typically a few) trusted third parties, e.g., rating agencies, which exist in many application and business domains. Based on this data and a robust statistical trust and reputation model, which takes into account attacks such as bad-mouthing and collusion to filter out dishonest behavior, we can provide an accurate picture of the actual QoS.

The suggested framework is general and facilitates the application of different matchmaking algorithms to the discovery process in the form of discovery algebra operators, as well as the parallelization of the semantic discovery to minimize the total discovery time. More precisely, we categorize services based on the indexing of their characteristic vectors, as originally proposed in Vu et al (2005a), and then use this information to filter out irrelevant services during the matchmaking steps. Our framework also sketches a solution for dealing with heterogeneous and distributed ontologies using mediation services during the semantic reasoning. The search engine can be run as a centralized service for small-scale environments or can be distributed among any number of cooperating providers. To enable scalable discovery we devise a peer-to-peer-inspired distribution strategy which enables fault-tolerance and allows registries to maintain full control and confidentiality over the information they provide.

The Web Service Modeling Ontology (WSMO) model is used as a proof-of-concept of our proposed discovery approach. However, the approach is generally applicable to other models, e.g., OWL-S (http://www.w3.org/submission/owl-s). To be able to concentrate on discovery, our search engine exploits several components available in the WSMO eXecution environment (WSMX), which is a reference implementation of the WSMO model (Burstein et al (2005)). Without constraining general applicability, we assume in the following that Web services are described semantically, including QoS properties, using the WSMO conceptual model. We focus on the discovery component and assume that other functionalities such as publishing of services, managing of service advertisements and ontologies, invocation of services, monitoring of service QoS, etc., are handled by existing (WSMX) components, and that the interaction between our discovery component and other components is done via pre-defined APIs.

This paper is organized as follows: In Section 2 we present our semantic QoS description model, followed by a description of how discovery works in a centralized setting in Section 3, which we subsequently extend to a distributed environment in Section 4. Section 5 presents the details of the architecture of our QoS-enabled service discovery framework. An analysis of our solution is provided in Section 6, Section 7 discusses it in the context of related research efforts, and Section 8 rounds out the paper with our conclusions.

2 QUALITY OF SERVICE MODEL

2.1 Modeling QoS Parameters

In our perspective, a Web service is a web-based interface providing an electronic description of a concrete service, which may offer the functionality of a software component, for example, a data backup service, or a real-life (business) activity such as book shipping. Therefore, the notion of QoS in our work is generic, ranging from application-level QoS properties to network or software-related performance features that a user expects from the service execution. Examples of application-level QoS parameters are the quality of the food delivered by an online-order pizza service or the timely delivery of a book. Typical network or software-related QoS parameters are the availability, response-time, execution-time, etc., of Web services.

In this context, selecting a service includes agreeing on the function it delivers and on at least one of the QoS parameters (criteria) offered by the provider. Thus, we model a Web service description as $F \wedge Q$, where $F$ is a functional criterion and $Q$ is a QoS criterion. Based on real-world requirements and the most relevant QoS specification standards such as WSLA (Ludwig et al (2003)), QML (Frolund and Koistinen (1998)), and WSOL (Tosic (2004)), we need to provide a semantic description for the following important information of a QoS criterion:

• The description of the QoS parameters and the associated required level of QoS, e.g., parameter names and textual descriptions, possible values of the parameters, respective measurement units, associated evaluation methods, etc. We model this part as $C'(\mathit{qi})$, in which $C'$ is a concept expression that constrains the instance $\mathit{qi}$ of a QoS concept in the QoS domain ontology.

• The necessary and sufficient conditions under which the provider commits to offering the QoS instances specified by the above QoS level $C'(\mathit{qi})$, for instance, the price of the service, the minimum network connection and system requirements, the maximal number of requests per time unit, etc. This part is expressed by $\mathit{cnd}$, an axiom over instances of a set of concepts specifying a real-world scenario.

Thus, a QoS criterion is modeled as a set of tuples, each of which has the form $\langle C'(\mathit{qi}), \mathit{cnd} \rangle$. For brevity, we henceforth refer to $\mathit{cnd}$ as the context in which the QoS level $C'(\mathit{qi})$ is achieved. As an example, the constraint $C'$ could be used to describe the average response-time value of a Web service, while $\mathit{cnd}$ could be used to describe the contextual conditions, e.g., network connection speed, that a client must have in order to get the specified average response-time.

Symmetrically to a Web service description, a service discovery request (also called a user query, a service query, or a user’s goal in the descriptions to follow) consists of the description of the functional and QoS criteria a Web service should offer to fulfill a user’s needs. In this context, a user query is specified as $F \wedge Q$, where $F$ is a functional criterion and $Q$ is a QoS criterion. A QoS criterion in user queries is similar to its counterpart in Web service descriptions. Web service consumers indicate by $C'(\mathit{qi})$ the QoS level they are seeking and provide information $\mathit{cnd}$ regarding the state of the real world they are able to agree to when searching for a service with that specified QoS level.

2.2 Feedback on QoS of Web Services

To facilitate the discovery of services with QoS information, we must evaluate how well a service can fulfill a user’s quality requirements from the service’s past performance. Therefore, the discovery component provides an interface for the service users to submit their feedback on the perceived QoS of the consumed services. The following information should be provided in a user’s QoS report:

• the QoS parameter and its observed value;

• the context in which the user obtained this specific value of QoS. More precisely, this would include: the time when the user observed this value and reported it to the discovery component, and, if possible, the detailed information of the user’s environmental factors related to the QoS of the transaction between the user and the service provider.

Supposing that the corresponding QoS criteria of the user are $Q = \{\langle C'_1(\mathit{qi}_1), \mathit{cnd}_1 \rangle, \ldots, \langle C'_n(\mathit{qi}_n), \mathit{cnd}_n \rangle\}$, where $C'_j(\mathit{qi}_j)$ and $\mathit{cnd}_j$ are respectively the required instance constraints and the respective real-world condition of the ontological QoS concept $q_j$, $1 \le j \le n$, a corresponding QoS report is represented formally as a tuple $\langle u, S, t, \mathit{qs}_1, \ldots, \mathit{qs}_n \rangle$, where $u$, $S$, and $t$ denote the identifier of the user, the identifier of the corresponding service, and the report timestamp. Each term $\mathit{qs}_j = \langle \mathit{qi}_j, \mathit{ucnd}_j \rangle$, $1 \le j \le n$, includes $\mathit{qi}_j$ as the instance of a QoS concept $q_j$ and $\mathit{ucnd}_j$ as an axiom over the set of ontological instances describing the real-world scenario of the user submitting the report. The service is considered as fulfilling all QoS requirements of the user if $\mathit{KB} \models C'_1(\mathit{qi}_1), \ldots, C'_n(\mathit{qi}_n)$, with $\mathit{KB}$ being the knowledge base of the system constructed by composing the ontologies referenced in the descriptions of the service and the user query.
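The report structure above can be sketched as a small data model. This is only an illustration under strong simplifying assumptions: the class names `QoSTerm` and `QoSReport`, the function `fulfils_all`, and the representation of a concept expression $C'_j$ as a plain Python predicate are all hypothetical, and a real system would ask a reasoner over $\mathit{KB}$ instead.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: a concept expression C'_j is approximated by a predicate over
# the property values of one QoS instance, instead of a reasoner query.
Constraint = Callable[[Dict[str, float]], bool]


@dataclass
class QoSTerm:
    """One term qs_j = <qi_j, ucnd_j> of a report."""
    concept: str                   # name of the QoS concept q_j
    instance: Dict[str, float]     # observed instance qi_j (property -> value)
    user_context: Dict[str, str]   # ucnd_j, simplified to attribute/value pairs


@dataclass
class QoSReport:
    """A report <u, S, t, qs_1, ..., qs_n>."""
    user: str
    service: str
    timestamp: float
    terms: List[QoSTerm]


def fulfils_all(report: QoSReport, constraints: Dict[str, Constraint]) -> bool:
    """True if every constrained concept was observed and satisfies its constraint."""
    observed = {term.concept: term.instance for term in report.terms}
    return all(
        concept in observed and check(observed[concept])
        for concept, check in constraints.items()
    )
```

With a constraint such as "mean data freshness of at most 10 minutes", a report carrying an observed mean of 20 minutes would be classified as not fulfilling the requirement.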

2.3 Modeling the QoS Semantic Matchmaking

The above definitions lead to a symmetric representation of the Web service description and the user query, expressed as $F \wedge Q$. Nevertheless, $F$ and $Q$ have different meanings. As a result, the semantic matchmaking follows different models when considering functional and quality of service properties.

Matching of functional descriptions as presented here has been discussed in Keller et al (2005) and Li and Horrocks (2003). A knowledge base $\mathit{KB}$ is constructed by composing ontologies referenced in both descriptions. Given a Web service’s functional description specified by a concept $C_a$ and its user query counterpart $C_b$, a match $\mu_F(C_a, C_b)$ occurs iff there exists an instance $i$ such that $i \in (C_a \sqcap C_b)$ in $\mathit{KB}$, or $\mathit{KB} \models \mu_F(C_a, C_b)$. Matchmaking of quality criteria $\mu_Q$ is slightly different. Given a pair of QoS criteria in a Web service description $Q_{ws}$ and in a user query $Q_{uq}$, where, for example, $Q_{ws} = \langle C'_{ws}(\mathit{qi}_{ws}), \mathit{cnd}_{ws} \rangle$ and $Q_{uq} = \langle C'_{uq}(\mathit{qi}_{uq}), \mathit{cnd}_{uq} \rangle$, $\mu_Q(Q_{ws}, Q_{uq})$ represents a match iff, given the above knowledge base $\mathit{KB}$: (1) $\mathit{cnd}_{uq} \sqsubseteq \mathit{cnd}_{ws}$; (2) $C'_{ws}(\mathit{qi}_{ws}) \sqsubseteq C'_{uq}(\mathit{qi}_{uq})$; and (3) $\mathit{KB} \models C'_{uq}(\mathit{qi}_{ws})$. Here $\mathit{qi}_{ws}$ is the QoS instance representing the actual QoS capability of a service in the context $\mathit{cnd}_{ws}$, which is estimated by our reputation-based trust management model based on the set of QoS instances collected from the feedback of users under similar environmental conditions, i.e., those QoS reports of the form $\langle u, ws, t, \mathit{qs} \rangle$, where $\exists \langle \mathit{qi}, \mathit{ucnd}_j \rangle \in \mathit{qs}$ such that $\mathit{ucnd}_j \sqsubseteq \mathit{cnd}_{ws}$. Note that the subsumption in (1) follows an inverse direction with respect to (2). This reflects the fact that the real-world scenario (or client-side conditions) offered by the user must meet the requirements defined by the provider, whereas with respect to the QoS concept instance the requirements are stated inversely. Condition (3) states that our QoS-enabled service discovery is also based on the historical performance values of the Web services.
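The three conditions of $\mu_Q$ can be illustrated with a deliberately simplified sketch. It assumes (these are not part of the model above) that a context is a set of required attribute values, so that subsumption reduces to "the more specific context satisfies every attribute the more general one demands", and that a QoS constraint is an upper bound on a mean value, so that constraint subsumption reduces to comparing bounds. The names `QoSCriterion`, `context_subsumed`, and `matches_qos` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class QoSCriterion:
    max_mean: float           # C'(qi), simplified to "mean value <= max_mean"
    context: Dict[str, str]   # cnd, simplified to attribute -> required value


def context_subsumed(specific: Dict[str, str], general: Dict[str, str]) -> bool:
    """Approximates specific ⊑ general: every demand of `general` holds in `specific`."""
    return all(specific.get(attr) == value for attr, value in general.items())


def matches_qos(ws: QoSCriterion, uq: QoSCriterion, estimated_mean: float) -> bool:
    """Checks conditions (1)-(3); `estimated_mean` stands in for qi_ws."""
    cond1 = context_subsumed(uq.context, ws.context)  # (1) cnd_uq ⊑ cnd_ws
    cond2 = ws.max_mean <= uq.max_mean                # (2) C'_ws ⊑ C'_uq
    cond3 = estimated_mean <= uq.max_mean             # (3) KB ⊨ C'_uq(qi_ws)
    return cond1 and cond2 and cond3
```

Under these assumptions, a provider promising a bound of 10 in a given context matches a user asking for a bound of 15 in the same context, as long as the reputation-based estimate also stays within 15; a user in a different context fails condition (1) regardless of the bounds.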

2.4 WSMO QoS Modeling Example

The usefulness of this representation model in practical applications is illustrated in the following scenario, which we adapted from a real-world case study of our DIP project (http://dip.semanticweb.org). A financial-information service provider offers a Web service to retrieve the most important index information of the European stock market. We suppose that this service is accessible via an interface named subscribedServiceInterface. The produced information is assured to be as fresh as the actual values in the stock market, i.e., at most 10 minutes old. The service is only accessible in the TechGate building in Vienna, Austria, and to use the service via this interface, a valid credit card number of the user is required to pay for using the service. Figure 1 shows how we encode QoS parameters and their associated QoS conditions for this service in the WSMO conceptual model, using the WSML-Rule language syntax for ontological modeling. The axiom providedDataFreshness is the realization of the QoS constraint $C'(\mathit{qi})$ on instances of the QoS concept DataFreshness. Similarly, the axiom dataFreshnessContext corresponds to the context $\mathit{cnd}$ under which the Web service provider commits to offering the values claimed in the above axiom providedDataFreshness.

WebService EUStockMarketMainIndexes
  importsOntology StockMarketOntology
  capability EUStockMarketMainIndexesCapability
  ...
  Interface subscribedServiceInterface
    importsOntology StockMarketQoSOntology, UserInformationOntology
    nonFunctionalProperties
      dc#relation hasValue providedDataFreshness, dataFreshnessContext
    endNonFunctionalProperties
    ...

axiom providedDataFreshness
  definedBy
    ?serviceDataFreshness[
      hasMeanValue hasValue ?mean
      hasStandardDeviation hasValue ?std
      hasMeasurementUnit hasValue StockMarketQoSOntology#minute
    ] memberOf StockMarketQoSOntology#DataFreshness
    and (?mean <= 10.0).

axiom dataFreshnessContext
  definedBy
    ?userData[
      userLocation hasValue loc#Techgate,
      hasCreditCardNumber hasValue ?credNo
    ] memberOf UserInformationOntology#userInformation
    and StockMarketOntology#validCreditCard(?credNo).

Figure 1: Representation of QoS in a WSMO Web Service description (a more complete description and related ontologies for this example are provided at http://lsirpeople.epfl.ch/lhvu/ontologies/)

Let us now assume a user who is currently at Vienna International Airport and would like to obtain statistics on the European stock market which should not be older than 15 minutes. The WSMO representation of the corresponding user query for such a service (formulated with the help of GUI tools) is shown in Figure 2. In this query the required QoS level $C'(\mathit{qi})$ of the user and his environment $\mathit{cnd}$ are respectively expressed by the axioms requestedDataFreshness and userDataFreshnessContext. It is important to note that the description of the client environment, i.e., the user’s location and credit card number, which could be necessary for the service discovery process, is also incorporated into the query. This is done by using appropriate GUI tools to query the QoS domain ontology and derive the related environmental concepts for the parameter DataFreshness. With respect to the above EUStockMarketMainIndexes service description, this query does not match the Web service’s requirements, as the user is not in the appropriate location to be able to use the service.

Goal EUStockMarketMainIndexesGoal
  importsOntology StockMarketOntology
  capability EUStockMarketMainIndexesRequestedCapability
  ...
  Interface EUStockMarketMainIndexesRequestedInterface
    importsOntology StockMarketQoSOntology, UserInformationOntology
    nonFunctionalProperties
      dc#relation hasValue requestedDataFreshness, dataFreshnessContext,
    endNonFunctionalProperties
    ...

axiom requestedDataFreshness
  definedBy
    ?requiredDataFreshness[
      hasMeanValue hasValue ?mean
      hasStandardDeviation hasValue ?std
      hasMeasurementUnit hasValue StockMarketQoSOntology#minute
    ] memberOf StockMarketQoSOntology#DataFreshness
    and (?mean <= 15.0).

axiom userDataFreshnessContext
  definedBy
    ?userData[
      userLocation hasValue loc#viennaIntAirport,
    ] memberOf UserInformationOntology#userInformation.

Figure 2: Representation of QoS information in a WSMO goal description

In another situation, a user working in the TechGate building uses the web interface of a discovery component, e.g., a well-known financial information portal on the Internet, to search for such a service and obtains the link to the service described in Figure 1. While testing this service (before integrating it into her company’s data analysis workflow engine), she finds out, by comparison with other information sources, that the provided information values are not as fresh as the current statistics in the market. With the help of the portal, she can submit a complaint about this problem to the service provider via a QoS report. An example of the semantic description being part of such a report is shown in Figure 3.

instance observedDataFreshness memberOf StockMarketQoSOntology#DataFreshness
  nonFunctionalProperties
    dc#relation hasValue qosDataFreshnessContext
  endNonFunctionalProperties
  hasMeanValue hasValue 20.0

axiom qosDataFreshnessContext
  definedBy
    ?userData[
      userLocation hasValue loc#Techgate,
      accessDay hasValue _gday("Feb 02 2006"),
    ] memberOf UserInformationOntology#userInformation.

Figure 3: Example of a QoS Report

3 CENTRALIZED DISCOVERY APPROACH

Our search engine can be run both in a centralized and in a distributed configuration. For the centralized discovery solution, a service query of a user (or a program interacting with the discovery component via the API), which includes both functional and QoS properties of the required service, is submitted to the system. The basic discovery operation is the evaluation of a user’s goal against all available Web service descriptions in the central (in terms of distribution, local) repository. This semantic matchmaking chooses only those services satisfying all user requirements, in terms of both service functionality and QoS, ranks the preliminary list according to the relevance to the query, and then returns it to the user. To do this step efficiently, we apply some simple techniques: First, all services which obviously do not satisfy the query are filtered out. Then, the discovery process can be done in parallel by any number of available semantic query processors, which compare the functional and the QoS properties of each service description to the query, and rank them to produce the final result for the user. The QoS-based service matchmaking and service ranking operators also use the actual QoS values as estimated by our reputation-based trust management system, which will be discussed in Section 3.2.
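The filter, parallel match, and rank steps described above can be sketched as a small pipeline. This is a minimal sketch, not the engine's implementation: the function `discover` and its callback parameters `prefilter`, `matches`, and `score` are hypothetical placeholders for the cheap filtering step, the expensive semantic matchmaking, and the ranking function, and a thread pool merely stands in for the pool of semantic query processors.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List, Sequence


def discover(
    query: Any,
    services: Sequence[Any],
    prefilter: Callable[[Any, Any], bool],
    matches: Callable[[Any, Any], bool],
    score: Callable[[Any, Any], float],
    workers: int = 4,
) -> List[Any]:
    """Filter obviously irrelevant services, match the rest in parallel, then rank."""
    # Step 1: cheap filtering of services that obviously cannot satisfy the query.
    candidates = [s for s in services if prefilter(query, s)]

    # Step 2: expensive matchmaking, spread over a pool of workers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flags = list(pool.map(lambda s: matches(query, s), candidates))
    matched = [s for s, ok in zip(candidates, flags) if ok]

    # Step 3: rank the surviving services, best score first.
    return sorted(matched, key=lambda s: score(query, s), reverse=True)
```

Keeping the three steps as separate callbacks mirrors the idea that different matchmaking and ranking algorithms can be plugged into the same discovery process.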

3.1 Semantic Categorization of Web Services

The core of any semantic Web service discovery solution is a logic-based matchmaking between a user request and the available Web service descriptions, which is a very expensive task. Therefore, it should be performed only on those service descriptions which potentially match the query. To achieve this, we perform a categorization of service descriptions and user queries into different classes based on the similarity (both in terms of functionality and QoS) among them. This semantic categorization scheme is an extension of our previous work (Vu et al (2005a)). Given this categorization information, the semantic matchmaking is only applied to the user query and the set of service descriptions belonging to the same class. Note that the categorization itself is an expensive process but does not affect the performance of the system since it can be done off-line and incrementally.

For each service description and user request, we generate a corresponding characteristics vector using the available information in its inputs, outputs, precondition, postcondition, and QoS specification (in the WSMO model, these are the service capability and service interface description). All service descriptions and queries are then classified into different categories, depending on their characteristics vectors. In the first step we partition all concepts in the knowledge base into different concept groups based on their similarity. Algorithms for determining the semantic similarity between two concepts are widely available; they can be based on the affinity, structural similarity, contextual similarity, etc., between two concepts, for example, Castano et al (2001). The similarity threshold used to decide whether two concepts are in the same group depends on the level of matching the system should provide (the usual trade-off between precision and recall). For example, we could simply set the threshold to 0.0 and use only parental relationships to compute the similarity between concepts. In this case each concept group would be a partition of its corresponding ontology. For instance, in the StockMarketOntology of the Stock Market use case described in the previous section, the concepts Stock, StockVariations, StockMarket, Index, Dividend, etc., could be put into one concept group of terminologies related to the Stock Market domain, whereas other concepts like creditCard, masterCard, visaCard, eCash, and paymentModel should be put into another concept group.
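One way to picture the partitioning of concepts into groups is a connected-components computation over a pairwise similarity function. The sketch below is an assumption-laden illustration, not the categorization scheme of Vu et al (2005a): the function `group_concepts`, the strict comparison against the threshold, and the use of union-find are all choices made for this example.

```python
from itertools import combinations
from typing import Callable, Dict, Iterable


def group_concepts(
    concepts: Iterable[str],
    similarity: Callable[[str, str], float],
    threshold: float,
) -> Dict[str, int]:
    """Map each concept to a group id; concepts linked by similarity > threshold share a group."""
    concepts = list(concepts)
    parent = {c: c for c in concepts}

    def find(c: str) -> str:
        # Union-find lookup with path halving.
        while parent[c] != c:
            parent[c] = parent[parent[c]]
            c = parent[c]
        return c

    for a, b in combinations(concepts, 2):
        if similarity(a, b) > threshold:
            parent[find(a)] = find(b)

    # Number the groups in order of first appearance.
    group_ids: Dict[str, int] = {}
    return {c: group_ids.setdefault(find(c), len(group_ids)) for c in concepts}
```

With a similarity that is positive only for directly related concepts and a threshold of 0.0, every chain of related concepts collapses into one group, which corresponds to the coarse grouping described above.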

Given the classification of all ontological concepts in the local repository, we perform another step of categorization of Web services as shown in pseudo-code in Algorithm 1.

Algorithm 1 ClassifyServices()
for each Web service description S do
    Create a Bloom key K_S;
    for each concept C_i in the capability and QoS description of S do
        Find the concept group CG_i containing C_i;
        Add CG_i to the Bloom key K_S;
    end for
    Classify the service S based on the Bloom key K_S;
end for
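Algorithm 1 can be sketched in Python as follows. The key width `BLOOM_BITS`, the number of hash functions `NUM_HASHES`, and the use of SHA-256 to derive bit positions are assumptions made for this illustration; the paper does not specify them. Services are represented simply as a mapping from a service name to the concepts used in its description.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List

BLOOM_BITS = 64   # assumed width of a Bloom key
NUM_HASHES = 3    # assumed number of hash functions


def bloom_positions(item: str) -> Iterator[int]:
    """Yield the bit positions that `item` sets in a Bloom key."""
    for seed in range(NUM_HASHES):
        digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
        yield int.from_bytes(digest[:8], "big") % BLOOM_BITS


def bloom_key(group_ids: Iterable[int]) -> int:
    """Build a Bloom key (as an integer bit mask) from concept group identifiers."""
    key = 0
    for gid in group_ids:
        for pos in bloom_positions(str(gid)):
            key |= 1 << pos
    return key


def classify_services(
    services: Dict[str, Iterable[str]],
    concept_group: Dict[str, int],
) -> Dict[int, List[str]]:
    """Group services into classes keyed by the Bloom key of their concept groups."""
    classes: Dict[int, List[str]] = defaultdict(list)
    for name, concepts in services.items():
        key = bloom_key({concept_group[c] for c in concepts})
        classes[key].append(name)
    return dict(classes)
```

Two services that use different concepts from the same concept groups end up in the same class, because only the group identifiers contribute to the key.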

As a result of this algorithm, all Web services are categorized based on the concept groups they operate on. Here we utilize Bloom filters (Bloom (1970)) to determine the membership of a certain concept group to a service class efficiently. More precisely, all services $S$ belonging to the same service class $SC$ are described by a Bloom key $K_S$ which is generated using the identifiers of the concept groups that those services operate on. Therefore, the necessary condition for a service $S$ to match a query $G$ is that its description must contain at least all concepts related to those specified in the query. Analogously, the Bloom filter representation $K_S$ of a service class $SC$ must contain the Bloom filter representation $K_G$ of the query, i.e., $K_S = K_S \oplus K_G$, where $\oplus$ denotes bit-wise OR. The pseudo-code for this step is shown in Algorithm 2.

For example, in the StockMarket use case, all services whose descriptions do not contain those concepts related to Stocks and StockIndexes, and do not have any claims for the QoS parameter DataFreshness, would be classified as irrelevant for the query and be filtered out.

Algorithm 2 FindMatchedServiceClasses(UserQuery G): ListOfServiceClasses L
Create a Bloom key K_G;
for each concept C_i in the capability and QoS description part of G do
    Find the concept group CG_i containing C_i, using a hash table of concepts;
    Add CG_i to the Bloom key K_G;
end for
Initialize the list of service classes L as empty;
for each service class with Bloom key K_S do
    if K_S = K_S ⊕ K_G then
        Add service class K_S to L;
    end if
end for
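The containment test of Algorithm 2 reduces to a single bit-wise operation per service class. In the sketch below, Bloom keys are plain integers, and, purely to keep the example easy to follow, each concept group sets exactly one bit (bit g for group g); this identity mapping is an assumption of the example, whereas a real Bloom filter would hash each group identifier to several bit positions and could therefore return false positives.

```python
from typing import Dict, Iterable, List


def query_key(concepts: Iterable[str], concept_group: Dict[str, int]) -> int:
    """Build the key K_G of a query from the concept groups it refers to."""
    key = 0
    for concept in concepts:
        key |= 1 << concept_group[concept]  # simplified: one bit per group
    return key


def contains(k_s: int, k_g: int) -> bool:
    """K_S contains K_G iff OR-ing K_G into K_S changes nothing."""
    return k_s == (k_s | k_g)


def find_matched_service_classes(k_g: int, class_keys: Iterable[int]) -> List[int]:
    """Return the keys of all service classes whose Bloom key contains K_G."""
    return [k_s for k_s in class_keys if contains(k_s, k_g)]
```

Because the test is only a necessary condition, the service classes returned here still have to go through the full semantic matchmaking.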

Note that the implementation of partial matchmaking is straightforward: Appropriate GUI tools can help the user to specify only the set of the most important concepts in the query from which to generate the key $K_G$. Since service providers as well as users can use different ontologies for their descriptions, our categorization scheme may use available ontology mediators to homogenize different ontologies in the service repository if necessary. An ontology mediator is a set of rules mapping between two ontologies, a functionality which has already been realized in many ontology mapping frameworks. The architecture of our discovery component is designed to be able to interact with and exploit the available ontology mediation services in the system and thus addresses ontology heterogeneity appropriately.

Given a user request $G$ and the list of its corresponding matching service classes $L$, we need to compare $G$ against each Web service $S$ in $L$ to choose only those services satisfying all user requirements, which include functionality and QoS requirements. Furthermore, we also need to perform another step of selection and ranking of results based on the actual QoS properties of the Web services. This step can be parallelized to reduce the total search time, as presented in Section 3.3.

3.2 Reputation-based QoS Management Model

The discovery of services with QoS information requires an accurate evaluation of how well a service can fulfill a user’s quality requirements from the service’s past performance. For this estimation, we use a reputation-based model which exploits data from many information sources: (1) We use the QoS values promised by providers in their service advertisements. (2) We provide an interface for the service users to submit their feedback on the perceived QoS of consumed services. (3) We also use similar reports produced by a few trustworthy QoS monitoring agents, e.g., rating agencies, to observe the QoS statistics of a number of Web services. (4) In a distributed setting, the different discovery components in the network may periodically exchange the reputation information and performance statistics of the service users, services, providers and of the discovery nodes themselves.

For each QoS parameter $C_j$ (a concept in a QoS ontology) provided by a service $S$, we evaluate the real capability of this service in providing this QoS parameter to the users as follows: For every context $\mathit{cnd}_{jk}$, i.e., a set of real-world conditions as in Section 2.1, in which the service $S$ advertises $C_j$, the related QoS instances $q_{jkt}$ are collected. Specifically, we gather all user reports which are on $S$ and refer to $C_j$ in the corresponding context $\mathit{cnd}_{jk}$. Such a report by a user $u$ at time $t$ has the form $\langle u, S, t, \mathit{qs} \rangle$ where $\exists \langle q_{jkt}, \mathit{ucnd}_{jkt} \rangle \in \mathit{qs}$ and $\mathit{ucnd}_{jkt} \sqsubseteq \mathit{cnd}_{jk}$. The reputation-based estimation of the actual quality of $S$ in providing the QoS parameter $C_j$ in context $\mathit{cnd}_{jk}$ is an instance $q_{jk}$ of $C_j$ computed as follows: Since each QoS instance $q_{jkt}$ consists of a list of property-value pairs $\langle p_l, v_{lt} \rangle$, each property $p_l$ of the QoS instance $q_{jk}$ would then have the value $v_l$. The estimation of a $v_l$ based on its historical statistics $\langle t, v_{lt} \rangle$ is then done using the time-based regression methods which we proposed in Vu et al (2005b).
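To make the idea of estimating $v_l$ from its history $\langle t, v_{lt} \rangle$ concrete, the following sketch fits an ordinary least-squares line through the reported values and evaluates it at the time of interest. This is a generic stand-in chosen for illustration and is not the regression method of Vu et al (2005b); the function name `estimate_value` is hypothetical.

```python
from typing import List, Tuple


def estimate_value(history: List[Tuple[float, float]], at_time: float) -> float:
    """Estimate a property value at `at_time` from (timestamp, value) pairs.

    Uses a simple least-squares linear trend; falls back to the mean when all
    reports share the same timestamp.
    """
    if not history:
        raise ValueError("at least one report is required")
    n = len(history)
    mean_t = sum(t for t, _ in history) / n
    mean_v = sum(v for _, v in history) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in history)
    if var_t == 0:
        return mean_v
    slope = sum((t - mean_t) * (v - mean_v) for t, v in history) / var_t
    return mean_v + slope * (at_time - mean_t)
```

In such a scheme, only the reports that survive the credibility filtering would be passed in as `history`.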

In order to improve the accuracy of this QoS evaluation, the feedback mechanism has to ensure that false ratings by malicious autonomous agents, for example, bad-mouthing a competitor’s service or pushing one’s own rating level by fake reports or collusion with other malicious parties, can be detected and dealt with. Additionally, it is also necessary to create incentives for users to submit feedback on their consumed services and to produce such reports truthfully.

For dealing with the first problem, we have developed a reputation-based trust management model under two realistic assumptions. First, we assume probabilistic behavior of services and users. This implies that the differences between the real quality conformance which users obtained and the QoS values they report follow certain probability distributions. These differences vary depending on whether users are honest or cheating as well as on the level of changes in their behaviors. Secondly, we presume that there exist a few trusted third parties. These well-known trusted agents always produce credible QoS reports and are used as trustworthy information sources to evaluate the behaviors of the other users. In reality, companies managing the discovery components at each peer can deploy special applications themselves to obtain their own experience on the QoS of some specific Web services. Alternatively, they can also hire third-party companies to do these QoS monitoring tasks for them. In order to detect possible fraud in user feedback, we use the reports of trusted agents as reference values to evaluate the behaviors of other users by applying a trust-distrust propagation method and a clustering algorithm. Reports that are considered not credible will not be used in the QoS evaluation process. The proposed dishonesty detection algorithm has been shown to yield very good results under various cheating behaviors of users, although we only deploy the trusted third parties to monitor the QoS of a relatively small fraction of services (Vu et al (2005b)).
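The role of trusted reports as reference values can be illustrated with a much cruder filter than the trust-distrust propagation and clustering approach described above. In this sketch, which is an assumption of this example and not the published algorithm, a user is distrusted as soon as one of their reports on a monitored service deviates from the trusted reference by more than a tolerance, and all reports of distrusted users are discarded, including those on services that no trusted agent monitors.

```python
from typing import Dict, List, Tuple

Report = Tuple[str, str, float]  # (user, service, reported value)


def filter_reports(
    reports: List[Report],
    trusted_values: Dict[str, float],
    tolerance: float,
) -> List[Report]:
    """Drop every report of users who contradict a trusted reference value."""
    distrusted = {
        user
        for user, service, value in reports
        if service in trusted_values
        and abs(value - trusted_values[service]) > tolerance
    }
    return [report for report in reports if report[0] not in distrusted]
```

Even this simple variant shows why monitoring a small fraction of services can help: a user caught misreporting on a monitored service loses credibility for unmonitored services as well.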

The second problem has been studied extensively by economists using game theory as their major tool. In our environment, to give incentives for users to participate and produce reports truthfully, side-payment schemes with appropriate scoring rules could well be applicable (Miller et al (2005)). That is, users who give truthful feedback on the Web services they consume should get certain benefits, either from the discovery component or from the service providers, e.g., reduced subscription prices when using or searching for Web services.

3.3 An Execution Model for QoS-enabled Semantic Web Service Discovery

One may envisage a single discovery component managing a large number of Web service descriptions and being targeted by numerous user queries with completely unpredictable arrival rates. In this context, the performance of the discovery process becomes of paramount importance, as does its ability to respond to variations in query arrival rates while keeping the discovery time of each query at an acceptable level.

In order to provide such guarantees, we model the discovery process as a cost-based adaptive parallel query processing system (Porto et al (2005)). Within the discovery process we distinguish independent operators with clear semantics, to each of which one may associate an estimated evaluation cost. A discovery query is modeled as an operator tree, in which nodes represent discovery operators and edges denote the dataflow between each pair of them. Potentially, a single discovery query may be modeled by a number of different operator trees that are equivalent in terms of the results they produce. Thus we derive the operator tree with the smallest estimated cost for a given discovery query.
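The cost-based choice among equivalent operator trees can be illustrated with a minimal Python sketch. It is not the optimizer of Porto et al (2005): the linear plan representation, the `Operator` fields `cost_per_item` and `selectivity`, and all numbers are assumptions made only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    name: str
    cost_per_item: float  # hypothetical estimated cost per input description
    selectivity: float    # hypothetical fraction of inputs passed downstream


def plan_cost(plan, n_inputs):
    """Estimated cost of a linear plan: each operator pays for its own inputs."""
    total, n = 0.0, float(n_inputs)
    for op in plan:
        total += op.cost_per_item * n
        n *= op.selectivity
    return total


def cheapest_plan(candidate_plans, n_inputs):
    """Pick the plan with the smallest estimated cost among equivalent plans."""
    return min(candidate_plans, key=lambda plan: plan_cost(plan, n_inputs))


# Illustrative numbers only: a cheap filter and an expensive matchmaking step.
restriction = Operator("restriction", cost_per_item=0.001, selectivity=0.05)
matching = Operator("match", cost_per_item=10.0, selectivity=0.5)

plan_a = (restriction, matching)  # filter first, then reason
plan_b = (matching, restriction)  # reason first, then filter
best = cheapest_plan([plan_a, plan_b], n_inputs=1000)
```

With these assumed costs, running the cheap restriction before the expensive matchmaking is by far the cheaper plan, which is the intuition behind ordering operators by estimated cost.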

We have identified a set of discovery operators that together form a discovery algebra. Each operator represents a particular function within the discovery process and may be implemented using different algorithms:

• restriction σ - reduces the set of Web service description candidates for matching with the user query. Our current implementation uses Bloom filtering as described in Section 3.1. Web service descriptions whose Bloom keys do not match those of the user query are filtered out.

• match µ - applies a semantic matchmaking algorithm to assess the similarity between a Web service description and a user query. Matchmaking is implemented as described in Section 2.3.

• rank ρ - orders matched Web service descriptions based on the results of the match operation and according to the user’s preferences, as we will discuss later.

• project π - delivers results to the user.

In addition to ordering operators into an operator tree, our execution model extends traditional query execution by supporting reasoning and introducing some dynamic optimization techniques. The reasoning task is invoked as part of the match operator and deserves special attention as it can become a bottleneck for the execution. Thus an efficient evaluation of a discovery query must target three main issues: (1) reduce the number of reasoning tasks; (2) reduce the elapsed time for each individual Web service description semantic matchmaking evaluation; (3) adapt to variations in execution environment conditions. We cope with these three issues by introducing control operators into the operator tree that manage data transfer, data materialization, reasoning task parallelization and scheduling, etc. For brevity, we refer the interested reader to our previous work (Porto et al (2005)), which describes the parallelization and adaptive execution strategies in detail.
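As a rough illustration of how the four operators compose, the following Python sketch chains restriction, match, rank, and project over a toy registry. Everything concrete in it is an assumption: the `CONCEPT_BITS` table stands in for the Bloom keys of Section 3.1, the rule that a service key must cover all query bits is one possible reading of "match", and the Jaccard score is only a placeholder for the logic-based matchmaking of Section 2.3.

```python
# Hypothetical concept-to-bit table standing in for the Bloom key construction.
CONCEPT_BITS = {"Flight": 0, "Hotel": 1, "Payment": 2, "Weather": 3}


def key_of(concepts):
    """Build a bit-vector key with one bit set per concept."""
    key = 0
    for concept in concepts:
        key |= 1 << CONCEPT_BITS[concept]
    return key


def restrict(services, query):
    """sigma: keep only services whose key covers every bit of the query key."""
    query_key = key_of(query)
    return [(name, concepts) for name, concepts in services
            if (key_of(concepts) & query_key) == query_key]


def match(candidates, query):
    """mu: placeholder similarity (Jaccard), not real semantic reasoning."""
    return [(name, len(concepts & query) / len(concepts | query))
            for name, concepts in candidates]


def rank(matched):
    """rho: order by descending match score."""
    return sorted(matched, key=lambda pair: pair[1], reverse=True)


def project(ranked):
    """pi: deliver only the service names to the user."""
    return [name for name, _ in ranked]


def discover(services, query):
    return project(rank(match(restrict(services, query), query)))


SERVICES = [
    ("BookFlight", {"Flight", "Payment"}),
    ("BookHotel", {"Hotel", "Payment"}),
    ("Forecast", {"Weather"}),
    ("TravelPack", {"Flight", "Hotel", "Payment"}),
]
QUERY = {"Flight", "Payment"}
results = discover(SERVICES, QUERY)
```

The point of the ordering is that the expensive `match` step only sees the candidates that survive the cheap bitwise `restrict` step.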

The ranking operator ρ is realized as follows. Let us consider a user query $G$ with QoS requirements $Q_G = \{\langle C'_1(q_1), cnd_1\rangle, \ldots, \langle C'_n(q_n), cnd_n\rangle\}$, where $C'_k(q_k)$, $1 \le k \le n$, represents the required QoS level of a QoS concept $q_k$ in a QoS ontology, and $cnd_k$ is the user’s associated context to achieve $C'_k$. Suppose that the list of services that match the above query is $L_G = \{S_1, S_2, \ldots, S_m\}$. As described in Section 2.3, the QoS semantic matchmaking $\mu_Q$ requires that for every $S_i$, all QoS concepts $q_k$ that are specified in $Q_G$ must appear in the description of $S_i$. Moreover, the corresponding context for $S_i$ to achieve the QoS level $C'_k$ would be $cnd_{ik}$, where $cnd_k \sqsubseteq cnd_{ik}$.

Let $\hat{q}_{ik}$ be the estimate of the actual QoS capability of $S_i$ in providing the QoS concept $q_k$ under the context $cnd_{ik}$. This is already computed based on the historical performance statistics of $S_i$, as described in Section 3.2. We write $\rho(S_i) \succeq \rho(S_j)$, or $S_i$ has a partially higher rank than $S_j$ in terms of QoS and with respect to the user query $G$, iff

$$\Phi(i, j) = \sum_{k=1}^{n} w_k \cdot 1_{\hat{q}_{ik} \ge \hat{q}_{jk}} \ge \xi_G,$$

where $0 \le w_k \le 1$ is the level of importance of the quality $q_k$ to the user, $\sum_{k=1}^{n} w_k = 1$, and $\xi_G$ is a threshold which is chosen according to the $w_k$. $\hat{q}_{ik}$ and $\hat{q}_{jk}$ are the estimated instances of $q_k$ provided by $S_i$ and $S_j$, with the meaning explained above. The $w_k$ and $\xi_G$ are collected from the user’s preferences in the system. The global rank of a service depends on the number of higher ranks it gets when compared to all other services. Thus, the global rank score of the service $S_i$ is

$$T_i = \sum_{S_j \in L_G,\, j \ne i} 1_{\rho(S_i) \succeq \rho(S_j)}.$$

The ranking operator ρ is a sort of the list $L_G$ in descending order of $T_i$. In other words, we give higher ranks to services which actually offer the more important QoS concepts at the higher levels to the user. The semantics of the comparison $\hat{q}_{ik} \ge \hat{q}_{jk}$ is defined as the ordering relation of two QoS instances in the corresponding QoS domain ontology.
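The pairwise comparison and the global rank score described above can be sketched in a few lines of Python. This is a simplified sketch: it assumes each estimated QoS instance is a plain number for which larger means better, whereas the paper defines the comparison through the ordering relation of the QoS domain ontology. The service names, weights, and threshold are illustrative values only.

```python
def partially_outranks(q_i, q_j, weights, xi):
    """True iff Phi(i, j) = sum of w_k over concepts where q_ik >= q_jk reaches xi."""
    phi = sum(w for w, a, b in zip(weights, q_i, q_j) if a >= b)
    return phi >= xi


def global_rank(estimates, weights, xi):
    """Return services sorted by T_i (number of services they outrank) and the scores."""
    scores = {}
    for s_i, q_i in estimates.items():
        scores[s_i] = sum(
            1 for s_j, q_j in estimates.items()
            if s_j != s_i and partially_outranks(q_i, q_j, weights, xi)
        )
    ordered = sorted(scores, key=lambda s: scores[s], reverse=True)
    return ordered, scores


# Illustrative estimates for two QoS concepts (e.g. availability, reliability).
ESTIMATES = {"S1": (0.9, 0.8), "S2": (0.7, 0.9), "S3": (0.5, 0.5)}
WEIGHTS = (0.7, 0.3)   # user-assigned importance, summing to 1
XI = 0.6               # threshold chosen according to the weights

order, scores = global_rank(ESTIMATES, WEIGHTS, XI)
```

Here S1 outranks S2 because it wins on the concept carrying weight 0.7, which alone exceeds the threshold, even though S2 is better on the less important concept.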

4 DECENTRALIZED DISCOVERY APPROACH

In scenarios with independent providers of service directories, a centralized solution is infeasible. Here, a distributed strategy for searching in a network of providers is required which still provides the same functionalities and guarantees as the centralized version. To achieve good scalability, high flexibility, and self-maintenance, and additionally to avoid unwanted dependencies among the providers, we apply a P2P-based approach for distributed discovery. However, in reality, any distributed infrastructure could be used to realize our solution.

4.1 Semantic Query Routing

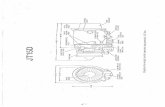

To route queries in a distributed setting, we first need to identify all relevant discovery locations and forward the query to these nodes. Moreover, the distribution of queries should be efficient in terms of message cost and bandwidth consumption in the network. To meet these requirements, we propose to use a structured overlay network on top of the network of peer discovery engines based on the indexing of the data stored at each peer (Figure 4).

Figure 4: Structured overlay on top of discovery nodes. (Diagram labels: Peers 1–9 forming the P-Grid structured overlay; discovery engines 1–9 forming the P2P network of discovery engines; legend: network link, overlay link.)

Search is done via the overlay routing algorithm to locate the responsible peers holding the requested information. The key idea of our solution to the identification of relevant discovery locations is the indexing of the ontologies used in the service descriptions of the service repositories. This indexing scheme is beneficial for several reasons: (1) A semantic service query using certain ontologies in its description should be forwarded only to those peers with related ontologies, since these peers are likely to store matching services. (2) This solution preserves the privacy of nodes, as it uses only the basic and not the detailed information about the services in a provider’s repository, the latter being generally unavailable for indexing in the network. (3) This basic information is less likely to change than the detailed descriptions of the services, so the update cost in a large-scale network, if updates are necessary, would be lower.

Our semantic query routing also takes into account the trust and reputation of the discovery locations, in the sense that a node would not forward queries to those with which it had bad experiences. The evaluation of a peer’s reputation is based on the QoS of the services it has provided and will be discussed in more detail in the next section. At each peer receiving a query, a local service discovery process takes place in parallel. After collecting all partial results, which are already ranked by the respective peers, we perform a final ranking before returning the results to the user. In this step, we simply assign higher ranks to services provided by the peers with higher reputation and to those services with higher QoS levels.
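A minimal Python sketch of this routing and merging logic is given below. It does not model the P-Grid overlay; the ontology index is a plain dictionary, and the names `ONTOLOGY_INDEX`, `REPUTATION`, and `THRESHOLD` as well as the lexicographic merge order (peer reputation first, then QoS level) are assumptions introduced for illustration, since the text does not fix how the two criteria are combined.

```python
def route(query_ontologies, ontology_index, reputation, threshold):
    """Select peers that index an ontology used by the query and are still trusted."""
    targets = set()
    for ontology in query_ontologies:
        targets |= ontology_index.get(ontology, set())
    return sorted(p for p in targets if reputation.get(p, 0.0) >= threshold)


def merge_results(partial_results, reputation):
    """Final ranking: higher peer reputation first, then higher QoS level."""
    merged = [
        (reputation[peer], qos, service)
        for peer, results in partial_results.items()
        for service, qos in results
    ]
    merged.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [service for _, _, service in merged]


# Illustrative data: which peers hold services described with which ontologies.
ONTOLOGY_INDEX = {"travel": {"p1", "p2"}, "payment": {"p2", "p3"}}
REPUTATION = {"p1": 0.9, "p2": 0.4, "p3": 0.7}
THRESHOLD = 0.5  # peers below this value are not sent queries

peers = route({"travel", "payment"}, ONTOLOGY_INDEX, REPUTATION, THRESHOLD)
final = merge_results({"p1": [("A", 0.6)], "p3": [("B", 0.9), ("C", 0.8)]}, REPUTATION)
```

In this example peer p2 indexes both ontologies but is skipped because its reputation has fallen below the threshold.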

4.2 Decentralized Reputation-based Trust Management

In a distributed environment, a provider can have its own service registry for its Web services and hence may have incentives to give feedback biased towards its own services. Therefore, we also have to estimate the trustworthiness of the various partial search results returned from the different discovery nodes. Our proposal to deal with this problem is as follows. After the invocation of a Web service $S$ which is provided by a node $q$, the user may report a QoS assessment of this service through a QoS report $R$. The report submission mechanism should ensure that $R$ is sent to all nodes (or peers) $p_i$ in the list $L_P$ of peers involved in the semantic query routing algorithm. This enables the fair sharing of QoS experience among the peers who are likely to work in the same domain, since they are destinations of the semantic query route. It also helps to avoid the case where some $p_i$ and $q$ collude to suppress negative feedback. Available cryptographic techniques could be applied to ensure that the feedback is authentic; for example, QoS reports could be digitally signed by both the service provider and the service user. Digital signatures also ensure that nodes can only read but not tamper with the contents of the QoS reports. The reputation of a peer $q$ is updated periodically by each node $p_i \in L_P$. To do this, each $p_i$ first needs to apply certain dishonesty detection techniques, such as the trust-distrust propagation algorithm we proposed in Vu et al (2005b), to filter out possibly cheating reports. Then, $p_i$ evaluates the general reputation value of the peer $q$ according to the set of reports it has about the QoS of the services provided by $q$. Moreover, the peers $p_i$ can exchange information about the reputation of $q$ among each other and compute an aggregated reputation value. A node $q$ whose reputation (in the view of $p_i$) falls below a certain threshold $rt_i$ is put on a blacklist $L^i_D$ of nodes that $p_i$ distrusts. That means that $p_i$ will not forward service queries to $q$ anymore.

The reputation value of a node $q$ should reflect its capability of providing good services in the past, which is expressed in the corresponding reports of the users on the QoS of these services. A discovery node $p_i$ assigns the default reputation for a newly joined peer $q$ as the minimal reputation value in the list of its accepted peers, i.e., $r^i_q = \max\{rt_i, \min_{p_j \in L^i_D} r_j\}$. Such a default value of reputation would create chances for new discovery nodes to join the system and cause the least harm to the reputation of the judging peer $p_i$. Otherwise, $p_i$ computes the reputation of peer $q$ as

$$r^i_q = \frac{\sum_{\forall R \in R_q} e^{-\lambda t}\, 1_{Q_d \ge Q_a}}{\sum_{\forall R \in R_q} e^{-\lambda t}},$$

where $R_q$ is the set of QoS reports in the data repository of $p_i$ that relate to the services recommended by $q$, and $Q_d$ and $Q_a$ denote the QoS level delivered by the service to the user and the respective level advertised by the provider at time $t$. $\lambda$ is a constant reflecting the decay of the reports over time. The indicator function $1_{Q_d \ge Q_a}$ evaluates to 1 if the user considers all QoS requirements to be fulfilled by the service and to 0 otherwise. Its computation is straightforward: the values in the reports are compared with those in the service advertisements.
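The decayed reputation ratio above can be sketched in Python as follows. The sketch makes several assumptions that the text leaves open: $t$ is interpreted as the age of a report (current time minus report time), each report carries a single numeric delivered and advertised QoS level rather than a full set of requirements, and the helper `default_reputation` takes the reference reputation values as an explicit list. All function and parameter names are hypothetical.

```python
import math


def reputation(reports, decay, now):
    """Decay-weighted share of reports whose delivered QoS met the advertised QoS.

    Each report is (report_time, delivered_level, advertised_level).
    Returns None when there are no reports to judge from.
    """
    numerator = denominator = 0.0
    for report_time, delivered, advertised in reports:
        weight = math.exp(-decay * (now - report_time))  # older reports count less
        denominator += weight
        if delivered >= advertised:                       # indicator 1_{Qd >= Qa}
            numerator += weight
    return numerator / denominator if denominator else None


def default_reputation(threshold, reference_reputations):
    """Default for a newly joined peer: max(threshold, smallest reference value)."""
    if not reference_reputations:
        return threshold
    return max(threshold, min(reference_reputations))


# One recent satisfied report and one old unsatisfied report.
REPORTS = [(10, 0.9, 0.8), (0, 0.5, 0.8)]
r_q = reputation(REPORTS, decay=0.1, now=10)
```

With these values the old negative report is discounted by a factor of $e^{-1}$, so the resulting reputation is $1/(1+e^{-1})$, roughly 0.73, rather than the unweighted 0.5.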

5 SOFTWARE ARCHITECTURE

Figure 5 shows the high-level software architecture of our search engine. Each participating node hosts the same basic functionalities and cooperates with other sites over the network, i.e., the individual discovery nodes form a discovery overlay network.

Figure 5: The search engine architecture. (Diagram labels: Service User, Service Provider, QoS Reporters, Network, Discovery engine 1 to Discovery engine n; within an engine: Semantic Web Service Discovery Interface, Query Analyzer, Query Optimizer and Execution Manager, Semantic Query Engines 1 to n, Service Management and QoS Reputation Mechanism, QoS Data Repository, Service Index Repository, Communication Manager, Web Service Registry Interface, Mediation Service Interface.)

The architecture consists of the following components hosted by each node:

Semantic Web Service Discovery Interface: This is the central access point for all available functionalities (searching, querying reputation information, providing feedback, system management, etc.).

Query Analyzer: Upon receiving a user request, e.g., a WSMO goal, this component extracts the relevant information, i.e., the required functional and QoS specifications, and generates an internal representation for the query optimizer.

Query Optimizer and Execution Manager: This component uses the output of the query analyzer and produces an execution plan for the semantic matchmaking, using the algebraic operators defined in Section 3.3. The key idea is that we use the query optimizer to parallelize the semantic matchmaking (and other operators) over different semantic query engines. The execution manager part of the component is responsible for controlling query execution and collecting the final results.

Semantic Query Engine: These components run in parallel the parts of the query execution plan assigned to them by the query optimizer / execution manager. All query engines have access to the service index repository, the QoS data repository, and the service registry of the local node via standardized interfaces to get the data required for their execution. They can also use the mediation service to translate among different QoS ontologies during the matchmaking processes.

Service Index Repository: This repository stores the indexes of the web service descriptions obtained from the service registry. During the QoS-enabled service discovery process, information in this database helps the semantic query engines to filter out service descriptions irrelevant to the query by comparing the category index of a service with that of the user’s query. This frees us from doing expensive reasoning on those services.

Service Management and QoS Reputation Mechanism: This component performs the semantic categorization of the semantic web services in the service index repository. It is also responsible for evaluating the QoS of different services, given the data collected from various QoS reporters.

QoS Data Repository: This repository stores the feedback of QoS reporters, the reputation information of all service providers, and the evaluated QoS information of all QoS-aware web services, i.e., those services with QoS information in their descriptions. The QoS data repository acts as an additional data source for the semantic query engines during query resolution.

Communication Manager: This component enables communication among the nodes of the search engine, e.g., query routing to and result collection from remote nodes.

While these components already provide the core functionalities required to perform the discovery task, they depend on the following two external components: an optional mediation service and a mandatory service repository.

Mediation Service Interface: This is the access point to the mediation services possibly provided by other components, e.g., the mediation service of the WSMX framework. Mediation is incorporated into our design via a standardized interface.

Web Service Registry Interface: This interface provides access to the web service registry to be used, which provides us with functionalities to manage the storage and retrieval of web service descriptions, ontologies and associated mediators.

6 DISCUSSION OF OUR APPROACH

6.1 Discovery Cost Analysis

We anticipate that the main cost of the discovery process is local service discovery for two reasons: (1) A discovery component is able to pre-compute and maintain destinations for distributed queries to accelerate the search for relevant discovery locations. (2) The distributed discovery is done in parallel at these locations and the main cost would then be that of the slowest local discovery process. Therefore, in this section we focus on the analysis of the efficiency of the centralized discovery.

We adopted a parallel cost model to represent the cost of evaluating a discovery query. It considers the costs of individual operators as well as a global function that models the complete query execution. The global execution model supports two modes: intra-operator and inter-operator. The former instantiates multiple copies of the same operator on different execution nodes, whereas the latter provides a pipelined execution of operators in a branch of the operator tree. In addition, we consider an initialization cost $I_c$ that encompasses the cost of instantiating fragments of the operator tree on different nodes, as well as the cost of transferring initial data to remote nodes. For the initialization cost we have the estimation $I_c = t_B + t_{Opt} + t_{CI}$, where $t_B$ is the cost of computing the Bloom key for the user query, $t_{Opt}$ is the cost of the scheduling algorithm for finding the set of most suitable nodes, and $t_{CI}$ is the time for initializing the appropriate nodes for the corresponding operators, which is negligible in a centralized environment with well-known operators.

Once the query execution plan has been produced and the system has been initialized, the time of checking whether the first web service description $S_1$ matches the user query $G$ is $T_1 \simeq t_{CL} + t_\mu 1_{S_1 \sqcap G}$, where $t_{CL}$ is the time of checking whether the service class of $S_1$ matches that of the query, and $t_\mu$ is the time for doing the semantic matchmaking $\mu_F \wedge \mu_Q$ of the query with the service if their classes match each other, i.e., $1_{S_1 \sqcap G} = 1$.

As our semantic query engines work as a parallel pipeline processing system, and the most expensive stage is the matchmaking µ, which is scheduled to run in parallel on $N_e$ different nodes, the time of doing the semantic matching of $N_S$ web service descriptions against the query is

$$\Psi = I_c + T_1 + t_\mu\left(\frac{k N_S}{K_S N_e} - 1\right) + t_R \simeq t_B + t_{Opt} + t_{CL} + t_\mu 1_{S_1 \sqcap G} + t_\mu\left(\frac{k N_S}{K_S N_e} - 1\right) + t_R,$$

where $N_e$ is computed according to the scheduling algorithm, $K_S$ is the number of service classes, $k$ is the number of service classes matched with the query, and $t_R$ is the cost of the ranking operator.

Suppose each ontology has an average of $N_p$ partitions, each of which has $N_c$ concepts. Furthermore, let the average number of concepts in a web service description and in a user query be $l_s$ and $l_g$, respectively. For the estimation of the execution time of the different algorithms, in the following we also use $\tau_z$ to denote the approximate time for doing one operation of the corresponding algorithm $z$. With the use of a hash table, finding the respective concept groups for each concept in the service query can be done in constant time $O(1)$. Hence we have $t_B = l_g \tau_b$. Since checking whether the query matches a service class is a bitwise OR, $t_{CL} = 1\tau_c$. The time $t_\mu$ is that of a logic-based reasoning operation, which usually has exponential complexity in terms of the number of concepts in the ontology and in the service and query descriptions (Baader et al (2003), Ch. 9), so we could envisage $t_\mu \simeq e^{N_p N_c l_g l_s}\tau_\mu$ in the worst case. The cost $t_{Opt}$ of the scheduling algorithm in our scenario is $O(P \log P)$, where $P$ is the number of all available nodes in the system (Porto et al (2005)). Given a limited number of nodes in our local environment, $t_{Opt}$ is also negligible with respect to the actual execution time of the whole discovery process. The time $t_R$ of the ranking algorithm, given the length $l_r$ of the result list, depends mostly on the pair-wise comparison of services. Thus $t_R \approx (l_r l_g)^2 \tau_r$.

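Therefore, we have

$$\Psi \simeq l_g\tau_b + t_{Opt} + \tau_c + e^{N_p N_c l_g l_s}\tau_\mu 1_{S_1 \sqcap G} + e^{N_p N_c l_g l_s}\tau_\mu\left(\frac{k N_S}{K_S N_e} - 1\right) + (l_r l_g)^2\tau_r.$$

This could be further reduced to

$$\Psi \simeq e^{N_p N_c l_g l_s}\tau_\mu \frac{k N_S}{K_S N_e} \simeq t_\mu \frac{k N_S}{K_S N_e},$$

since this is the most significant cost compared with the other terms such as $l_g\tau_b$, $\tau_c$, $e^{N_p N_c l_g l_s}\tau_\mu 1_{S_1 \sqcap G}$ and $(l_r l_g)^2\tau_r$. On the other hand, the time cost of the discovery process without any enhancement techniques is $\Omega = N_S t_\mu$. The estimated speed-up of our solution is therefore

$$S_p = \frac{\Omega}{\Psi} \simeq \frac{N_S t_\mu}{\dfrac{N_S t_\mu k}{K_S N_e}} = \frac{K_S N_e}{k}.$$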
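To make the dominant-term estimate concrete, the short Python sketch below evaluates the simplified discovery time, the unoptimized baseline, and the resulting speed-up for one set of numbers. The numbers (10,000 descriptions, 0.5 s per matchmaking, 100 service classes of which 2 match, 4 execution nodes) are invented for illustration and are not measurements from the paper; only the dominant matchmaking term is modeled.

```python
def discovery_time(n_services, t_match, k, k_s, n_e):
    """Dominant term of the optimized cost: t_mu * k * N_S / (K_S * N_e)."""
    return t_match * k * n_services / (k_s * n_e)


def baseline_time(n_services, t_match):
    """Cost without categorization or parallelism: N_S * t_mu."""
    return n_services * t_match


def speed_up(k, k_s, n_e):
    """Estimated speed-up: K_S * N_e / k."""
    return k_s * n_e / k


# Illustrative parameters only.
N_S, T_MU = 10_000, 0.5      # number of descriptions, seconds per matchmaking
K_S, K, N_E = 100, 2, 4      # service classes, matched classes, execution nodes

psi = discovery_time(N_S, T_MU, K, K_S, N_E)
omega = baseline_time(N_S, T_MU)
sp = speed_up(K, K_S, N_E)
```

For these assumed values the optimized estimate is 25 s against a 5,000 s baseline, a speed-up of 200, which illustrates why a high class selectivity (large $K_S$, small $k$) matters more than the number of nodes alone.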

As the concept definitions are actually the core of the functional and QoS criteria in a user query, with the semantic categorization of services based on the groups of concepts they operate on, we can achieve a high selectivity when choosing the matched service classes for a certain request. That is, $K_S$ is expected to be high and $k$ to be of much smaller magnitude. Thus the discovery time for one query can be significantly reduced. Moreover, in an environment where there is an unexpectedly high number of queries, our solution also takes into account the load and throughput statistics of each node in the system and optimizes the execution via the node-scheduling algorithm. Therefore, the efficiency gain covers not only the reduction in the response time of each individual query but also the overall performance of the system.

6.2 Effectiveness of the Reputation-based Trust Management Model

We have implemented the QoS-based selection and ranking algorithm with the application of our reputation-based trust management model and then studied its effectiveness under various settings. We observed the dependency between the quality of selection and ranking results and other factors, such as the percentage of trusted users and reports, the rate of cheating users in the user society and the various behaviors of users. Details of these experiments are discussed in Vu et al (2005b). In these experiments, our QoS-based service selection and ranking algorithm yields very accurate and reliable results even in extremely hostile environments (up to 80% cheating users with varying cheating behaviors). This is because the use of trusted third parties monitoring a relatively small fraction of the services (3%–5%) can significantly improve the detection of dishonest behavior. The experiments suggest deploying trusted agents to monitor the QoS of the most important and most widely-used services in order to get a “high impact” effect when estimating behaviors of users. Additionally, we can pre-select the important services to monitor, keep the identities of those specially chosen services secret and change them periodically to make the model even more robust. Thus, cheaters will not know on which services they should report honestly in order to become high-reputation recommenders and have to pay a very high cost if they want to achieve great influence in the system. In reality, this also helps us to reduce the cost of setting up and maintaining trusted agents, as we only need to deploy them to monitor changing sets of services at certain time periods.

7 RELATED WORK

The traditional UDDI standard (http://uddi.org/pubs/uddi-v3.0.2-20041019.htm) does not mention QoS for Web services, but many proposals have been devised to extend the original model and describe Web services’ quality capabilities, e.g., Ludwig et al (2003), Frolund and Koisten (1998), and Tosic (2004). Chen et al (2003) suggest using dedicated servers to collect the feedback of consumers and then predict the future performance of published services. Bilgin and Singh (2004) propose an extended implementation of the UDDI standard to store QoS data submitted by either service providers or consumers and suggest a query language (SWSQL) to manipulate, publish, rate and select those QoS data from the repository. According to Kalepu et al (2004), the reputation of a service should be computed as a function of three factors: different ratings made by users, service quality compliance, and its verity, i.e., the changes of service quality conformance over time. However, these solutions have not yet addressed the trustworthiness of QoS reports produced by various users, which is important to assure the accuracy of the QoS-based selection and ranking results. Liu et al (2004) rate services in terms of their quality with QoS information provided by monitoring services and users. The authors also employ a simple approach to reputation management by identifying every requester to avoid report flooding. In Emekci et al (2004), services are allowed to vote for the quality and trustworthiness of each other, and the service discovery engine utilizes the concept of distinct sum count in sketch theory to compute the QoS reputation for every service. Other solutions such as Tian et al (2003); Ran (2003); Ouzzani and Bouguettaya (2004); Patel et al (2003); Maximilien and Singh (2002) mainly use third-party service brokers or specialized monitoring agents to collect performance data of all available services in registries, which would be expensive in reality.

Regarding decentralized solutions for service discovery, Verma et al (2005) and Schlosser et al (2002) propose a distribution of Semantic Web service descriptions based on a classification system expressed in service or registry ontologies. Kashani et al (2004) use an unstructured P2P network as the service repository, which would not be very scalable or efficient in terms of search and update costs. Schmidt and Parashar (2004) index service description files (WSDL files) by a set of keywords and use a Hilbert space-filling curve to map the n-dimensional service representation space to a one-dimensional indexing space and hash it onto the underlying DHT-based storage system. Emekci et al (2004) suggest the discovery of services based on the process structure of the services, but we consider process structures less important in our case, since they are difficult to use in queries and unlikely to be the primary selection criteria in searches, and thus not critical in terms of indexing.

8 CONCLUSIONS

In this paper we have proposed a semantic description model for the QoS of Web services, whose expressivity facilitates modeling a wide range of QoS requirements. Our framework includes a solution for dynamic assessment and management of QoS values of Web services based on user feedback and performs QoS-enabled discovery and ranking of Web services based on their QoS compliance. The ranking is based on a reputation-based trust management mechanism to evaluate the actual QoS of the services, which increases the robustness and accuracy of our solution. We have introduced a scalable and efficient QoS-enabled Semantic Web service discovery framework, which can be used both as a centralized discovery component and as a decentralized registry system consisting of cooperating discovery nodes (discovery overlay network). Additionally, our discovery architecture is based on a query processing architecture in which operations are modeled algebraically, enabling query optimization and parallelization of operator execution. The decentralized discovery approach in our framework prescribes a realistic solution for large-scale distributed discovery of Web services and addresses the issue of heterogeneous and distributed ontologies.

ACKNOWLEDGMENTS

The work presented in this paper was (partly) carried out in the framework of the EPFL Center for Global Computing and was supported by the Swiss National Funding Agency OFES as part of the European project DIP (Data, Information, and Process Integration with Semantic Web Services) No 507483. Le-Hung Vu is supported by a scholarship of the Swiss federal government for foreign students.

REFERENCES

Baader, F., Calvanese, D., McGuinness, D., Nardi, D., Patel-Schneider, P. (2003) ‘The Description Logic handbook’, Cambridge University Press, 2003.

Bilgin, A. S. and Singh, M. P. (2004) ‘A DAML-based repository for QoS-aware semantic Web service selection’, Proceedings of the ICWS’04, page 368, USA, 2004.

Bloom, B.H. (1970) ‘Space/Time trade-offs in hash coding with allowable errors’, Communications of the ACM, 13(7), pp. 422–426, 1970.

Burstein, M. et al (2005) ‘A Semantic Web Services Architecture’, IEEE Internet Computing, Vol. 9, No. 5, September/October 2005.

Castano, S., De Antonellis, V., and di Vimercati, S. D. C. (2001) ‘Global Viewing of Heterogeneous Data Sources’, IEEE Transactions on Knowledge and Data Engineering, vol. 13, no. 2, pp. 277–297, Mar/Apr, 2001.

Chen, Z., Liang-Tien, C., Silverajan, B., Bu-Sung, L. (2003) ‘UX - an architecture providing QoS-aware and federated support for UDDI’, Proceedings of ICWS’03, 2003.

Ding, H., Solvberg, I. T., and Lin, Y. (2004) ‘A vision on semantic retrieval in P2P network’, Proceedings of AINA’04, USA, 2004.

Emekci, F., Sahin, O. D., Agrawal, D., Abbadi, A. E. (2004) ‘A peer-to-peer framework for Web service discovery with ranking’, Proceedings of the ICWS’04, page 192, USA, 2004.

Frolund, S., Koisten, J. (1998) ‘QML: A Language for Quality of Service Specification’, http://www.hpl.hp.com/techreports/98/HPL-98-10.html.

Kalepu, S., Krishnaswamy, S., Loke, S. W. (2004) ‘Reputation = f(user ranking, compliance, verity)’, Proceedings of ICWS’04, page 200, USA, 2004.

Kashani, F. B., Chen, C. C., Shahabi, C. (2004) ‘WSPDS: Web services peer-to-peer discovery service’, Proceedings of the International Conference on Internet Computing, pp. 733–743, 2004.

Keller, U., Lara, R., Lausen, H., Polleres, A. and Fensel, D. (2005) ‘Automatic location of services’, Proceedings of ESWC’05, 2005.

Li, L. and Horrocks, I. (2003) ‘A software framework for matchmaking based on Semantic Web technology’, Proc. of the Twelfth Intl. WWW’03, Hungary, 2003.

Liu, Y., Ngu, A., Zheng, L. (2004) ‘QoS computation and policing in dynamic Web service selection’, Proceedings of the WWW conference on Alternate track papers & posters, pp. 66–73, USA, 2004.

Ludwig, H., Keller, A., Dan, A., King, R.-P., Franck, R. (2003) ‘Web Service Level Agreement (WSLA) Language Specification’, available online at http://www.research.ibm.com/wsla/WSLASpecV1-20030128.pdf.

Maximilien, E. M. and Singh, M. P. (2002) ‘Reputation and endorsement for Web services’, SIGecom Exch., 3(1):24–31, 2002.

Miller, N., Resnick, P., and Zeckhauser, R. (2005) ‘Eliciting Informative Feedback: The Peer-Prediction Method’, Forthcoming in Management Science, 2005.

Ouzzani, M., Bouguettaya, A. (2004) ‘Efficient access to Web services’, IEEE Internet Computing, pp. 34–44, March/April 2004.

Patel, C., Supekar, K., Lee, Y. (2003) ‘A QoS oriented framework for adaptive management of Web service based workflows’, Proceedings of DEXA’03, pp. 826–835, 2003.

Porto, F., Silva, V. F. V., Dutra, M. L., Bruno, S. (2005) ‘An adaptive distributed query processing grid service’, Proceedings of the Workshop on Data Management in Grids, VLDB2005, Trondheim, Norway, 2005.

Ran, S. (2003) ‘A model for Web services discovery with QoS’, SIGecom Exch., 4(1):1–10, 2003.

Schlosser, M., Sintek, M., Decker, S., Nejdl, W. (2002) ‘A scalable and ontology-based P2P infrastructure for semantic Web services’, Proceedings of P2P’02, page 104, Washington, DC, USA, 2002.

Schmidt, C. and Parashar, M. (2004) ‘A peer-to-peer approach to Web service discovery’, Proceedings of the WWW’04, 7(2):211–229, 2004.

Tang, C., Xu, Z., and Dwarkadas, S. (2003) ‘Peer-to-peer information retrieval using self-organizing semantic overlay networks’, Proceedings of ACM SIGCOMM’03, USA, 2003.

Tian, M., Gramm, A., Naumowicz, T., Ritter, H., Schiller, J. (2003) ‘A concept for QoS integration in Web services’, Proceedings of Fourth International Conference on Web Information Systems Engineering Workshops, Vol. 00, pp. 149–155, Italy, 2003.

Tosic, V. (2004) ‘Service Offerings for XML Web Services and Their Management Applications’, Ph.D. dissertation, Department of Systems and Computer Engineering, Carleton University, Canada, 2004.

Verma, K., Sivashanmugam, K., Sheth, A., Patil, A., Oundhakar, S., Miller, J. (2005) ‘METEOR-S WSDI: A scalable P2P infrastructure of registries for semantic publication and discovery of Web services’, Inf. Tech. and Management, 6(1):17–39, 2005.

Vu, L.-H., Hauswirth, M., Aberer, K. (2005a) ‘Towards P2P-based Semantic Web Service Discovery with QoS Support’, Proceedings of Workshop on Business Processes and Services (BPS), Nancy, France, 2005.

Vu, L.-H., Hauswirth, M., Aberer, K. (2005b) ‘QoS-based service selection and ranking with trust and reputation management’, Proceedings of OTM’05, R. Meersman and Z. Tari (Eds.), LNCS 3760, pp. 466–483, 2005.