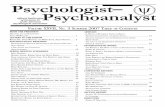


Science of the Total Environment 538 (2015) 102–116


Science of the Total Environment

Journal homepage: www.elsevier.com/locate/scitotenv

A Multi-Criteria Decision Analysis based methodology for quantitatively scoring the reliability and relevance of ecotoxicological data

Panagiotis Isigonis b,c, Philippe Ciffroy a, Alex Zabeo b,c, Elena Semenzin b,c, Andrea Critto b,c, Silvio Giove d, Antonio Marcomini b,c,⁎

a EDF R&D, Département LNHE, 6 Quai Watier, 78400 Chatou, France
b Consorzio Venezia Ricerche, Via della Libertà 5–12, 30175, Marghera, Venice, Italy
c Department of Environmental Sciences, Informatics and Statistics, University Ca' Foscari Venice, Calle Larga S. Marta 2137, 30123 Venice, Italy
d Department of Economics, University Ca' Foscari of Venice, Venice, Italy

Highlights

• Quantitative scoring of the reliability and relevance of ecotoxicological data

• MCDA based methodology, which handles uncertainty and uses fuzzy logic

• Innovative Weight of Evidence (WoE) assessment framework

• Assessment framework based on three solid Lines of Evidence (LoEs)

⁎ Corresponding author at: Department of Environmental Sciences, Informatics and Statistics, University Ca' Foscari Venice, Calle Larga S. Marta 2137, 30123 Venice, Italy. E-mail address: [email protected] (A. Marcomini).

http://dx.doi.org/10.1016/j.scitotenv.2015.06.016
0048-9697/© 2015 Elsevier B.V. All rights reserved.

Article info

Article history: Received 29 April 2015; Received in revised form 3 June 2015; Accepted 3 June 2015; Available online 22 August 2015

Editor: D. Barcelo

Keywords: Ecotoxicological data; Ecological risk assessment; Multi-Criteria Decision Analysis; Fuzzy logic

Abstract

Ecotoxicological data are highly important for risk assessment processes and are used for deriving environmental quality criteria, which are enacted to assure the good quality of waters, soils or sediments and to achieve desirable environmental quality objectives. Evaluating the reliability of the available data is therefore essential before they are used in these processes. A thorough analysis of the currently available frameworks for the assessment of ecotoxicological data has identified significant flaws but also various opportunities for improvement. In this context, a new methodology based on Multi-Criteria Decision Analysis (MCDA) techniques has been developed with the aim of analysing the reliability and relevance of ecotoxicological data (which are produced through laboratory biotests for individual effects) in a transparent, quantitative way, through the use of expert knowledge, multiple criteria and fuzzy logic. The proposed methodology can be used for the production of Species Sensitivity Weighted Distributions (SSWD), as a component of the ecological risk assessment of chemicals in aquatic systems. The MCDA aggregation methodology is described in detail and demonstrated through examples in the article, and the hierarchically structured framework that is used for the evaluation and classification of ecotoxicological data is briefly discussed. The methodology is demonstrated for the aquatic compartment but it can be easily tailored to other environmental compartments (soil, air, sediments).

1. Introduction

Ecological risk assessment (ERA) is defined as the estimation of both the magnitude and the probability of environmental harm caused by human activities (Barnthouse and Suter, 1986). It usually focuses on estimating negative effects on specific ecosystems (Breitholtz et al., 2006) and, according to the European Commission (2003), is completed in four steps: hazard identification, dose–response assessment (effect assessment), exposure assessment, and risk characterisation. Many international organisations have developed frameworks for ERA, such as the US Environmental Protection Agency (US EPA, 1998), the World Health Organisation (WHO, 2001), the European Commission (EC, 2003) and others, including the Organisation for Economic Co-operation and Development (OECD), the European and Mediterranean Plant Protection Organisation (EPPO) and the European Centre for Ecotoxicology and Toxicology of Chemicals (ECETOC). These frameworks have been evaluated, advanced and adapted to meet the needs of assessors in various countries (e.g. European Union, United States, Japan, Canada, South Africa, Australia and New Zealand), as identified by Suter (2006) and Bradbury et al. (2004).

ERA can be divided into two main tiers: screening ERA and site-specific ERA (Critto and Suter, 2009). Screening risk assessment aims at identifying chemicals and agents that do not pose hazards to the ecosystem under analysis, and thus can be excluded from the assessment process, whereas site-specific risk assessment aims at providing estimations of risks to support decision-making processes (Critto and Suter, 2009). The definition of Environmental Quality Criteria (EQC) is included in the context of screening ERA. EQ criteria (or standards) are threshold numerical values that indicate a level beyond which there is a significant risk that the associated environmental quality objective has not been achieved and for which the assessors should adopt actions for the preservation of the ecosystems, including the development of a site-specific risk assessment (EPA, 2005). The way environmental standards are derived, and the frameworks within which they are used, differ between countries and regions. The EQ criteria can be derived through either deterministic or probabilistic approaches, the latter being preferred in recent advances in the field because they allow uncertainty, as well as the spatial and temporal variability of the data, to be taken into consideration (Verdonck et al., 2002). In recent years, various international frameworks and pieces of legislation have been developed to tackle important issues regarding water-related EQC, such as their establishment, derivation methods and implementation. These include the Water Framework Directive (EC, 2000), followed by the REACH regulation (EC, 2006), the Environmental Quality Standards Directive (EC, 2008) of the European Commission and the related Technical Guidance Document (TGD-EQS) for Deriving Environmental Quality Standards (EC, 2011), the standards of the European Chemicals Agency (ECHA, 2008b), the Water Quality Standards Regulation of the US Environmental Protection Agency (US EPA, 1983) and the related Water Quality Standards Handbook (US EPA, 1994). The advances in the field of risk assessment have urged scientists to develop methodologies that are strongly connected with decision-making processes and, specifically, to develop high-quality assessments that address the needs of decision makers. Because ecotoxicological data are used both in the derivation of EQC and in risk assessment processes, analysing their reliability and relevance is of high interest: it allows the derivation of more meaningful and relevant EQ criteria, as well as more reliable risk assessments.

Ecotoxicity data can be obtained through many different approaches and conditions: the protocol can be standardised or not; for non-standard tests, the experimental design and/or analytical methods can vary among laboratories; the duration can vary among experiments, leading to chronic or acute data; different physiological endpoints can be observed (e.g. mortality, growth, reproduction); and the statistics used for interpreting the data can differ, leading to, e.g., NOEC or ECx values. Each ecotoxicity datum must therefore be evaluated to rank its acceptability for use in the subsequent risk assessment process. This is possible through the incorporation of ecotoxicity data in the building of Species Sensitivity Weighted Distributions (SSWD; Duboudin et al., 2004), which can be used as a component of the ecological risk assessment of chemicals in aquatic systems. So far, such evaluations of individual data have often relied on case-by-case expert judgement. This results in poor transparency, reproducibility and predictability of the risk assessment process, because each expert may have his or her own implicit set of criteria and rankings for accepting or rejecting an ecotoxicity datum.

To improve ecotoxicity data evaluation, several structured frameworks based on lists of pre-defined criteria have been proposed. A first attempt to classify ecotoxicological data according to a systematic approach, and to harmonise data evaluation processes, was made by Klimisch et al. (1997), who proposed four qualitative reliability categories (i.e. Reliable without restriction, Reliable with restriction, Not reliable and Not assignable). To help assign a study to these categories, Klimisch et al. (1997) proposed to screen several elements, such as the description of the test procedure, the data on the measured parameters, the test species, the exposure period and the statistical evaluations. Warne et al. (1998) proposed a more detailed scheme for assessing the quality of aquatic ecotoxicological data. It is based on a series of questions, and a score is given to the answer to each question; the scores of all questions are then summed to obtain a 'total score' for each datum, expressed as a percentage of the maximum possible score. The data are classified as unacceptable, acceptable or high quality, depending on whether the quality score is <50%, between 51 and 79%, or >80%, respectively. Hobbs et al. (2005) submitted Warne's scheme to a panel of experts and refined the set of questions in order to modify or clarify ambiguous or poorly written questions, to reduce assessor variation and thus to improve the consensus level among experts. Similarly, Schneider et al. (2009) developed a tool (called ToxRTool) for assessing the reliability of toxicological data (both in vitro and in vivo data, and mainly dedicated to human health risk assessment). The process followed by Schneider et al. (2009) is similar to that of Hobbs et al. (2005), i.e. based on a set of questions refined after consultation of a panel of experts. One innovation of Schneider's framework is the introduction of 'red criteria': non-compliance with at least one red criterion leads to the 'Not reliable' category, irrespective of the total score achieved. Breton et al. (2009) developed a Quality Assurance system (called eco-QESST) specifically dedicated to three of the most common tests used in ecotoxicology, i.e. the fish acute toxicity test (OECD, 1992), the Daphnia acute immobilization and reproduction toxicity test (OECD, 2004) and the algae growth and inhibition effects test (OECD, 2002). The eco-QESST system is based on a set of questions, most of which are answered by 'Yes', 'No', 'Not applicable' or 'Not reported'. A scoring process is included in the eco-QESST system: a 'Yes' answer is given a specific weight, depending on the relative importance of the factor addressed by the question, while a 'No' or a 'Not reported' answer is given a zero weight. The overall study quality score (OSQS) is calculated as a percentage of the maximum sum of weights. Finally, Ågerstrand et al. (2011) reviewed criteria for reporting and evaluating ecotoxicological tests dedicated to pharmaceuticals. A framework allowing a comparative assessment of standard and non-standard tests was then developed. A main innovation of Ågerstrand's framework was the explicit subdivision of the analysis criteria into reliability and relevance criteria.
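The scoring rules summarised above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the handling of the boundary values (exactly 50% or 80%, which the quoted thresholds leave open) is a choice made here, and excluding 'Not applicable' questions from the maximum is likewise an assumption rather than a documented eco-QESST rule.

```python
from typing import Mapping, Sequence


def percentage_score(scores: Sequence[float], max_scores: Sequence[float]) -> float:
    """Total score as a percentage of the maximum possible score (Warne-style)."""
    return 100.0 * sum(scores) / sum(max_scores)


def classify(pct: float) -> str:
    """Three-class quality label derived from a percentage score."""
    # Assumption: boundary values (exactly 50% or 80%) are assigned upward.
    if pct < 50.0:
        return "unacceptable"
    if pct < 80.0:
        return "acceptable"
    return "high quality"


def osqs(answers: Mapping[str, str], weights: Mapping[str, float]) -> float:
    """eco-QESST-style score: 'Yes' earns the question weight, other answers earn zero."""
    # Assumption: 'Not applicable' questions do not count towards the maximum.
    applicable = [q for q, a in answers.items() if a != "Not applicable"]
    earned = sum(weights[q] for q in applicable if answers[q] == "Yes")
    maximum = sum(weights[q] for q in applicable)
    return 100.0 * earned / maximum


def with_red_criteria(category: str, red_criteria_met: Sequence[bool]) -> str:
    """ToxRTool-style override: failing any red criterion forces 'Not reliable'."""
    return category if all(red_criteria_met) else "Not reliable"
```

The override function makes the difference between the schemes visible: a purely additive score can compensate a serious flaw with many minor strengths, whereas a red criterion cannot be compensated.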


Such frameworks improve our understanding of risk assessment decision processes because they are based on objective criteria selected a priori, and they help to rank the acceptability of individual datasets against strict specifications. Despite their obvious positive input, existing assessment schemes nevertheless present some flaws: (i) the initial schemes proposed by Klimisch are based on rather poorly written questions that can be interpreted differently by risk assessors, leading to significant variations among experts; less ambiguous questions are needed to improve consensus and reproducibility among experts; (ii) for most of the frameworks, the qualitative 'summary' result appears 'poor' compared to the information collected during the assessment process, since data are assigned to only three (or four) qualitative categories. Concretely, the first categories (Reliable without restriction, Reliable with restriction) are not, or only poorly, distinguished in the subsequent risk process (e.g. they are generally used equally for SSD construction), while data belonging to the last one (Not reliable) are completely ignored. Only the eco-QESST system proposes a quantitative scoring system, but rules for the assignment of data to Klimisch categories were eventually defined (from 'Reliable without restriction' if Score > 90% to 'Not assignable' if Score < 60%) and the quantitative scores are hidden; (iii) Schneider et al. (2009) rightly identified that “a source of heterogeneity among experts is the degree to which they include elements of relevance and adequacy into their rating and how they weighted those against reliability. The concepts of reliability and relevance and their discrimination need to be discussed more thoroughly”. However, except in Ågerstrand's scheme, reliability and relevance are not explicitly distinguished, leading to a biased weighting process.

In order to tackle the abovementioned issues, the French-funded research project 'AMORE' aimed at the development of a risk assessment tool which incorporates innovative methodologies for the analysis of ecotoxicological data.

The objective of the present study was: (i) to develop an assessment framework for individual ecotoxicity data that provides quantitative scores; (ii) to include sources of heterogeneity among experts in the scoring system; and (iii) to build an operational methodology able to help assessors rank ecotoxicity data quantitatively. For this purpose, the assessment methodology was built on a hierarchical structure: the information required for calculating a Quality score is structured successively according to Lines of Evidence (at the highest level of the hierarchy), categories, criteria groups and questions (at the lowest level of the hierarchy). This structured framework was submitted to a panel of experts in ecotoxicology, which made it possible to define veto criteria and preference rules according to a Multi-Criteria Decision Analysis (MCDA)-based Weight of Evidence approach. The proposed methodology can be used for the production of Species Sensitivity Weighted Distributions (SSWD), as a component of the ecological risk assessment of chemicals in aquatic systems.

2. Background

2.1. Weight of evidence and MCDA

The term 'Weight of Evidence' constitutes neither a scientifically well-defined term nor an agreed formalised concept characterised by defined tools and procedures (Weed, 2005). An evidence-based approach involves an assessment of the relative values/weights of different pieces of the available information that have been retrieved and gathered in previous steps (ECHA, 2010). To this end, a value needs to be assigned to each piece of information. These weights/values can be assigned either in an objective way, by using a formalised procedure, or by using expert judgement. The weight given to the available evidence will be influenced by factors such as the quality of the data, the consistency of results, the nature and severity of effects, and the relevance of the information for the given regulatory endpoint.

Weight of Evidence (WoE) refers to a large family of methods and is applied in various scientific projects, being mainly known for its applications in human health and ecological risk assessments. Weed (2005) and Linkov et al. (2009, 2011) have provided comprehensive critical reviews of the concept and the uses of Weight of Evidence, both in an exploratory way and in an effort to categorise the available qualitative and quantitative WoE methods and their use in environmental assessments.

Multi-Criteria Decision Analysis includes a wide variety of methods for the evaluation and ranking, or selection, of different alternatives that consider all the aspects of a decision problem involving many actors (Giove et al., 2009). As such, it has been considered ideal for handling the needs of the proposed research.

Using Multi-Criteria Decision Analysis methods in WoE approaches makes it possible to:

• classify available information according to a hierarchical structure based on different 'Lines of Evidence', each of them being subdivided into several levels of criteria;

• normalise information, i.e. assign common units to qualitative (e.g. originating from expert judgement), semi-quantitative (e.g. Boolean information) or quantitative information;

• assign different weights and relations to the selected criteria in order to rank and compare criteria-based alternatives through an integrated approach;

• define decision indices integrating all the selected criteria on the basis of experts' judgements and decision makers' insights.
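The normalisation step in the list above can be illustrated with a small Python sketch that maps Boolean, ordered qualitative and quantitative information onto a common [0, 1] scale. The function names, the linear mappings and the example labels are assumptions made for illustration only; they are not the value functions of the methodology.

```python
from typing import Sequence


def normalise_boolean(value: bool) -> float:
    """Semi-quantitative (Boolean) information: False -> 0.0, True -> 1.0."""
    return 1.0 if value else 0.0


def normalise_ordinal(label: str, ordered_labels: Sequence[str]) -> float:
    """Qualitative information: position of a label on a scale ordered worst to best."""
    position = list(ordered_labels).index(label)
    return position / (len(ordered_labels) - 1)


def normalise_quantity(value: float, worst: float, best: float) -> float:
    """Quantitative information: linear rescaling between worst and best, clipped to [0, 1]."""
    # Works whether larger values are better (best > worst) or worse (best < worst).
    fraction = (value - worst) / (best - worst)
    return min(1.0, max(0.0, fraction))
```

Once every piece of information sits on the same scale, weights and relations between criteria can be applied without regard to the original units.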

3. Material and methods

3.1. Participatory process

One of the main innovative aspects of the proposed methodology is the strong focus that has been given to the participation of experts in the design and creation of the assessment process. A total of 23 experts and senior researchers in the fields of ecotoxicology and environmental chemistry, from prestigious research centres, universities and companies in France (EDF Energy; Veolia Environmental Services; INERIS, the French National Institute for the Industrial Environment and Risks; IRSN, the Institute for Radiological Protection and Nuclear Safety) and Europe (KTH Royal Institute of Technology; University of Basel; UNICATT, the Catholic University of the Sacred Heart; UFZ, the Helmholtz Centre for Environmental Research; BPI, the Benaki Phytopathological Institute), have been involved throughout the course of the project in a strongly interactive participatory process.

The participatory process has been structured in such a way that it would allow the extraction of valuable information regarding the criteria that are used in the assessment of ecotoxicological data, as well as the possible interactions among those criteria and the importance of the elements used in the Weight of Evidence methodology. In addition, it enabled the research team to gather the necessary information for the creation of the knowledge database of the research project, upon which the MCDA methodology has been designed. The process included the use of two different questionnaires and the organisation of a workshop that brought the experts together and gave them the possibility to interact in real time.

In the next sections, the process that has been followed is described in further detail.

3.1.1. Questionnaire for the evaluation of the criteria hierarchy

The assessment methodology is based on a hierarchical structure, which relates the different aspects of ecotoxicological data in a clear and solid fashion. The hierarchy is based on four levels, starting from the Lines of Evidence (LoE). Each LoE is subdivided into several categories, which are further subdivided into criteria groups, and finally into specific assessment criteria, which are evaluated with the use of detailed questions. Criteria-questions are the lowest level of the hierarchy, the one which must be filled in by the user.
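The four-level hierarchy described above maps naturally onto a tree. The sketch below is one possible representation in Python; the class name, the level identifiers and the example element names are all hypothetical, and the real elements are those listed in Table A.1 of the Supporting Information.

```python
from dataclasses import dataclass, field
from typing import Iterator, List

# The four levels of the hierarchy, from top to bottom.
LEVELS = ("line_of_evidence", "category", "criteria_group", "criterion_question")


@dataclass
class Node:
    name: str
    level: str
    children: List["Node"] = field(default_factory=list)

    def add(self, name: str) -> "Node":
        """Create a child one level below this node and return it."""
        child_level = LEVELS[LEVELS.index(self.level) + 1]
        child = Node(name, child_level)
        self.children.append(child)
        return child

    def questions(self) -> Iterator["Node"]:
        """Yield the leaves, i.e. the criteria-questions the user must answer."""
        if self.level == LEVELS[-1]:
            yield self
        for child in self.children:
            yield from child.questions()


# Hypothetical fragment of a hierarchy, for illustration only.
loe = Node("Example LoE", "line_of_evidence")
group = loe.add("Example category").add("Example criteria group")
group.add("Example question 1")
group.add("Example question 2")
```

Iterating over `loe.questions()` returns the two example questions, which is the set a user would have to answer for this fragment.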

As a basis for defining such a hierarchical structure, the frameworks developed by US EPA (1991), Klimisch et al. (1997), Warne et al. (1998), Hobbs et al. (2005), Schneider et al. (2009), Breton et al. (2009) and Ågerstrand et al. (2011) were used. The criteria evaluation questions and weighting rules suggested in these publications were reviewed in detail to provide a first hierarchical structure, which included 24 criteria-questions organised in 10 categories. This structure was submitted for evaluation to a panel of eight experts in the field of ecotoxicology, who had to answer the following five (5) questions for each criterion/question:

• In your opinion, is this criterion potentially relevant in the quality assessment of eco-toxicological data generated through lab bio-test?

• In your opinion, is the question unambiguous?

• Would you split the question into several questions because you consider that it ambiguously merges several issues?

• How would you change the sentence?

• In your opinion, is this criterion in the right Category/Criteria Group?

In addition, experts were asked if they would add new Categories/Criteria groups/Criteria-Questions.

This set of questions aimed at: (i) defining whether the hierarchical structure was well designed (i.e. whether criteria-questions are placed in the right group/category) and shared by a significant panel of experts; (ii) detecting potentially missing LoEs/Categories/Criteria groups/Criteria-Questions; and (iii) guaranteeing that questions were unambiguous.

Based on the results of the first part of the experts' participatory process, the first version of the criteria-questions hierarchy has been significantly expanded to include a total of 57 assessment criteria-questions, organised in 23 criteria groups and 11 categories.

In the paper, only the final WoE hierarchical structure resulting from this process of expert consultation is presented (see Table A.1 in the Supporting Information).

3.1.2. Questionnaire for expert consultation

Once the WoE hierarchical framework had been established and the evaluation basis had been set up, the next step of the participatory process consisted in designing a process for exploiting the aggregation operators to be used in the MCDA methodology. In cooperation with the existing panel of experts, an analysis has been performed in order to identify which parameters and characteristics of the criteria hierarchy are important and should be explored further. The procedure included various steps and continuously involved the members of the expert panel. Initially, a draft of the second evaluation questionnaire was designed, with the main purposes of exploring the relations among criteria (e.g. identifying criteria which are interconnected and whose simultaneous positive/negative evaluation influences the assessment) and identifying the different types of importance of criteria (e.g. prerequisite criteria, very important criteria, not relevant criteria and so on). The questionnaire included six main points, as described below, and was presented to 12 members of the panel of experts during the dedicated workshop, with the aim of evaluating the clarity of the questionnaire, testing the procedure and identifying possible improvements that could be implemented in the final version of the questionnaire. Reaching a consensus among experts, when possible, was a priority throughout the procedure.

The first version of the questionnaire included the following points, which were defined as important for the evaluation of the criteria hierarchy:

• Identification of criteria whose evaluation overrules (positively or negatively) other criteria belonging to the same criteria group ('Over' and 'Veto' criteria).

• Identification of synergic or redundant effects of criteria on the evaluation.

• Identification of the importance (ranking) of the 'Optimum and Worse evaluation' of each criterion.

• Identification of the effects on data degradation of a criterion being 'Applicable but not reported' for a given ecotoxicological datum.

• Identification of the credibility and plausibility of a criterion.

• Identification of the robustness of the evaluation and the possible existence of disputable conditions.

During the workshop, the complete process was tested and each expert present had the possibility to express his/her insights on the six (6) aforementioned points/questions for each element of the assessment criteria hierarchy (see Table A.1). Based on the fruitful discussions, the feedback provided by the experts present and the outcomes of the workshop, the questionnaire for expert consultation was slightly redesigned and adjusted to fit the characteristics of ecotoxicological data more closely. Specifically, the questions regarding (1) the 'synergic/redundant effects' of criteria and (2) the credibility of criteria were omitted as not applicable in the context of ecotoxicology, and the question regarding the 'importance of criteria' was rephrased to include only the ranking regarding the 'Worse evaluation' of a criterion.

The final version of the 'Questionnaire for expert consultation' includes the following four points, for which the related questions and outputs are reported:

1. Identification of elements whose evaluation overrules (positively or negatively) other elements belonging to the same element group ('Over' and 'Veto' criteria).
Q: “Does an optimum (i.e. green answer), or conversely, worst (i.e. red answer) evaluation of one of the following criteria make all/some of the other criteria within the same category irrelevant?”
The output of the question can be a set of causal relations, in the form of 'IF–THEN' rules, or a null set in the cases where the expert does not define any relation.

2. Identification of the importance (ranking) of the 'Worse evaluation' of each element.
Q: “Rank the importance of each criterion by assigning each of them to the appropriate category. Each criterion should be ranked, based on your judgment for its effects on data generation. For example, think about one test where all the criteria are optimum except for the criterion you are considering here. How would this worst answer degrade the test?”
The output of the question is a classification of each element into five (5) predefined classes (i.e. Prerequisite, Highly important, Moderately important, Slightly important, Not relevant).

3. Identification of the effects on data degradation of an element being 'Applicable but not reported' for a given ecotoxicological datum.
Q: “Supposing a criterion is applicable for the type of test under assessment but not reported in the paper or not specified by the person evaluating the test's quality, which action would you take? Each criterion should be assigned, based on your judgment for its effects on data generation, in the right answer.”
The output of the question is a classification of each element into three (3) predefined classes (i.e. Substituted by optimum, No idea on how to substitute, Substituted by worst).

4. Identification of the robustness of the evaluation and the possible existence of disputable conditions.
Q: “Evaluate if the Optimum/Worst answer is disputable (i.e. highly dependent on the data assessor) or consensus-based (i.e. based on largely recognized assumptions/desired conditions). Each criterion should be assigned in the right answer, based on your judgment for its effects on data generation.”
The output of the question is a classification of each element into two (2) predefined classes (i.e. Disputable, Undisputable).
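The four outputs listed above lend themselves to a compact data structure. The following Python sketch shows one way a single expert's answers for one element of the hierarchy could be recorded. The class and field names are hypothetical, and the numeric encoding of 'optimum' and 'worst' as 1.0 and 0.0 is an assumption introduced here for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class Importance(Enum):
    PREREQUISITE = "Prerequisite"
    HIGHLY = "Highly important"
    MODERATELY = "Moderately important"
    SLIGHTLY = "Slightly important"
    NOT_RELEVANT = "Not relevant"


class NotReported(Enum):
    OPTIMUM = "Substituted by optimum"
    NO_IDEA = "No idea on how to substitute"
    WORST = "Substituted by worst"


class Robustness(Enum):
    DISPUTABLE = "Disputable"
    UNDISPUTABLE = "Undisputable"


@dataclass
class ExpertAnswer:
    element: str
    importance: Importance
    not_reported: NotReported
    robustness: Robustness
    # IF-THEN rules as (condition, consequence) pairs; empty if the expert defines none.
    rules: List[Tuple[str, str]] = field(default_factory=list)


def substitute_unreported(choice: NotReported) -> Optional[float]:
    """Value used for an 'Applicable but not reported' criterion, or None if unknown."""
    # Assumption: optimum and worst evaluations are encoded as 1.0 and 0.0.
    if choice is NotReported.OPTIMUM:
        return 1.0
    if choice is NotReported.WORST:
        return 0.0
    return None  # 'No idea on how to substitute' leaves the value undetermined
```

Keeping the 'No idea' case as an explicit `None` rather than a default number preserves the distinction between unreported information and a genuinely poor evaluation.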

It is important to note that the final questionnaire design includes the possibility for a user to skip the four questions for a given node of the criteria hierarchy, in case the user does not possess sufficient information or knowledge for evaluating the elements included in that node. This feature is designed to exclude, as far as possible, any existing lack of knowledge from the evaluation. For the completion of the questionnaire, a bottom-up approach is followed; the user therefore starts the evaluation from the criteria-questions level, continues with the criteria groups, the categories and the ecotoxicological categories, and ends with the evaluation of the Lines of Evidence (LoEs).

The questionnaire was made available to the expert panel through a specifically developed online web application, so that it could be submitted to and answered by any member of the panel. A total of 14 experts have provided their answers to the questionnaire, with the aim of: (i) identifying the possible existing relations among criteria, (ii) identifying the relative importance of each criterion and (iii) identifying the possible inherent uncertainty. The uncertainty could be expressed in the form of: (i) unreported information, (ii) disputable information and (iii) possible lack of knowledge of the experts.

The outputs that were gathered from the criteria ranking process are used as part of the MCDA-based aggregation procedure, with the purpose of identifying and scoring the reliability and relevance of the ecotoxicological data under assessment. The elaborated outputs constitute the knowledge base of the proposed methodology, which is used in the implementation of the methodology for the quantitative scoring of ecotoxicological data. The knowledge base is the driving force of the MCDA based aggregation process, as described in the following Section (3.2), and allows the insights and opinions of all the involved experts to be taken into consideration. Furthermore, the methodology allows the addition or removal of experts' input from the knowledge base, as it is designed to be modular, flexible and adjustable to the needs of the user/decision maker. Thus, the knowledge base can be expanded to be even more reliable in the future.

Fig. 1. Information flow and MCDA based aggregation methodology: conceptual representation.

3.2. Introduction to the MCDA based aggregation methodology (Scoring system)

The proposed MCDA methodology is a vital part of the WoE framework that connects the different elements of the ecotoxicological data assessment process. It serves as the main connector between the analysed ecotoxicological data and the knowledge base, and thus allows the quantification of all the available information, firstly for the analysis of the reliability and relevance of ecotoxicological data and secondly for the ranking of the data. Three figures are used to describe the proposed methodology in further detail. Specifically, they illustrate the information flow and the process that is followed for the implementation of the methodology (Fig. 1), the connections between the various elements used in the methodology and the various actors (Fig. 2), and the way the hierarchical aggregation of information is performed mathematically (Fig. 3). In Fig. 1, the blue part represents the process followed for the creation of the WoE assessment framework and the knowledge base, the grey part the process for the assessment of ecotoxicological data by a user, based on the WoE framework, and the light green part the process for the application of the MCDA methodology. The background colours correspond to the ones used in Fig. 2, so that the reader can connect the various instances of the methodology and better understand the involvement of the various actors in it. Fig. 3, in turn, shows a graphical representation of the hierarchical aggregation techniques used (WoE assessment hierarchy), in which specific functions (F) are used for coupling the information stored in the knowledge base (w) with the answers to criteria for a specific evaluated dataset (x).

Fig. 2. Connections between elements and actors in the application of the MCDA methodology.

The methodology allows a systematic analysis of the diverse types of available information to be performed, using various complex algorithms for the calculation of a final reliability and relevance index for every ecotoxicological datum under assessment. It is an innovative process that has been designed and tailored to fit the characteristics of the available information in the context of ecotoxicology, as well as to fill in the gaps in the state of the art of the analysis of ecotoxicological data, as identified in Section 1. Specifically, the methodology makes use of all the available information and provides a transparent scoring system based on unambiguous multiple criteria, while the existence of hierarchical aggregations allows the detailed analysis and identification of the elements that influence the ranking of ecotoxicological data in a clear way.

The WoE framework and the proposed MCDA methodology have been developed based on the notions of Multi-Attribute Value Theory (Keeney and Raiffa, 1976): the concept of ‘value functions’ is used for handling the criteria values and transforming them onto defined scales, and a ‘decision tree’ has been designed and used as the foundation of the assessment process. The methodology combines value theory with elements of fuzzy logic. Fuzzy logic (Zadeh, 1965) introduces the concepts of ‘partial membership’ and ‘degree of truth’. Namely, ‘partial membership’ refers to the possibility that an element x belongs only partially to a fuzzy set, and the ‘degree of truth’ of x is the value in [0,1] that the membership (characteristic) function of the fuzzy set assigns to x.

In order to use the proposed MCDA methodology, the assessor has to analyse each ecotoxicological datum by answering each of the 57 criteria-questions (multiple answer questions) of the WoE framework regarding the conditions and methods under which each ecotoxicological datum was created, as seen in the grey section of Figs. 1 and 2. The methodology allows coupling the answers to the criteria-questions with the contents of the knowledge base (as described in Section 3.1.2 and seen in Fig. 2) by the application of a set of aggregation algorithms and functions for the calculation of the reliability and relevance score, as depicted in Figs. 2 and 3.

Fig. 3. Illustration of the hierarchical mathematical aggregations.

As mentioned in Section 3.1.2, each of the questions used in the questionnaire for expert consultation provides a specific type of output:

1. the relations among elements,
2. the relative importance of elements,
3. the disputability of elements, and
4. information on the handling of unreported data.

Outputs are expressed in different forms:

• relations among elements: a priority ordered set of causal relations, i.e. rules of the ‘IF–THEN’ form,

• the rest: unique element scores in the interval [0,1].

It is useful to describe the main way each output is incorporated and processed in the aggregation methodology for the analysis of one ecotoxicological datum. In Fig. 4, an example of a priority ordered set of causal relations is given and the concepts of a criterion and its evaluation, a rule block, a rule outcome, a rule and a set of rule blocks are presented. For the evaluation of a set of rules, the following steps are followed. Firstly, the causal relations are divided into smaller blocks and evaluated for their validity, based on the answers to each criterion for the given datum. This is done by calculating the degree of truth of each rule block and then combining all the degrees of truth to calculate the degree of truth of each specific rule. Since the set of causal relations is priority ordered, the degree of truth of each rule receives a specific priority in the aggregation procedure.

The outputs of the other three questions are expressed as scores and presented in numerical form. They are all used for evaluating how much each element evaluation could affect, or not, the production of the ecotoxicological datum and therefore how much it reduces the reliability and relevance of the datum.

The MCDA aggregation methodology is based on various types of inputs and 10 distinct functions. Below only the five main functions are presented, which are used in the methodology for the calculation of the score for one node j (j = 1:38) of the criteria hierarchy. In an identical way, the scores are calculated for all the nodes of the hierarchy, in a bottom-up approach that concludes with the calculation of the total reliability and relevance score for every datum. Interested readers can find the complete mathematical notation and details in the supplementary material.

The replies to the criteria-questions for a given ecotoxicological datum are represented in the aggregation methodology as $CA_i \in \{Y, N, NA, NR, DK\}$, for each criterion-question $i$ ($i = 1{:}57$). The corresponding answers are Y: ‘Yes’, N: ‘No’, NA: ‘Not Applicable’, NR: ‘Applicable but Not Reported’, and DK: ‘I don't know’. In the framework, there is a specific correspondence between the answer to each criterion's question ($CA_i \in \{Y, N, NA, NR, DK\}$) and the numerical evaluation of each criterion, represented as $CA_i \in [0,1] \cup \emptyset$. ‘Yes’ and ‘No’ are mapped to 1 and 0 respectively, while ‘Not applicable’ is mapped to $\emptyset$, ‘I don't know’ to 0.5 and ‘Applicable but not reported’ to a number that is derived from the knowledge base.

Fig. 4. Example of a prioritised set of IF–THEN rules.

Moreover, the disputability score from the knowledge base is used to update the criteria evaluations $CA_i$ into $CA_i^{disp}$, which take into account the disputability of the elements' evaluations, through a dedicated function $F_8 : F_8(CA_i, disp_i) \to [0,1] \cup \emptyset$. For each Node $j$ the evaluation is based on these disputability-aware criteria evaluations $CA_i^{disp}$ through the application of three steps: i) evaluation of the priority ordered list of causal relations, in the form of ‘IF–THEN’ rules; ii) evaluation of the relative importance of the node components; and iii) integration of the causal and importance evaluations. In the following sections the definitions of the three aforementioned steps are reported for the 2nd-lowest level of the hierarchy, namely criteria groups.

3.2.1. Evaluation of priority ordered list of causal relations, in the form of ‘IF–THEN’ rules

A causal relation, as described in Fig. 4, denoted as $R_{j,r}$ and hereafter referred to as a Rule (R), is related to a specific Node $j$ and identified through the subscript $r$, for $r \ge 1$. Each Rule defined by the experts consists of a set of blocks $B_{j,r}$, paired with the respective rule evaluation $S_r \in \{O, W\}$ (where O is Optimum and W is Worse). Specifically, $R_{j,r} = \{B_{j,r}, S_r\}$ for criteria causal relations. Formally, a set of blocks $B_{j,r}$ is described as the collection of blocks $B_{j,r,k}$, therefore $B_{j,r} = \{B_{j,r,k} \mid k \ge 1\}$.

Each block is composed of a criterion $i$ and the respective criterion evaluation $S_i$ (e.g. criterion $i$ is Yes), therefore $B_{j,r,k} = \{i, S_i\}$ for $i = 1{:}57$, $j = 1{:}38$, $r \ge 1$ and $k \ge 1$.

The evaluation of blocks, denoted as $BE_{j,r,k}$, is defined through the function $F_1 : F_1(B_{j,r,k}, CA_i^{disp}) \to [0,1] \cup \emptyset$, whose inputs are the blocks $B_{j,r,k}$ and the disputability-aware criteria evaluations $CA_i^{disp}$. The formal implementation is defined by:

$$
BE_{j,r,k} =
\begin{cases}
\emptyset & \text{when } CA_i^{disp} = \emptyset \\
CA_i^{disp} & \text{when } S_i = O \\
1 - CA_i^{disp} & \text{when } S_i = W
\end{cases}
\qquad (5)
$$

Subsequently, the evaluation of the set of blocks of a given Rule $R_{j,r}$, called Rule evaluation, is denoted as $R_{j,r}$ and defined through the function $F_3(R_{j,r}) \to ([0,1], \{0,1\}) \cup \emptyset$.

Formally,

$$
R_{j,r} =
\begin{cases}
\emptyset, & \forall\, BE_{j,r,k} = \emptyset \\
\left(\min\left(BE_{j,r,k}\right),\, 1\right), & \exists\, BE_{j,r,k} \ne \emptyset \wedge S_r = O \\
\left(\min\left(BE_{j,r,k}\right),\, 0\right), & \exists\, BE_{j,r,k} \ne \emptyset \wedge S_r = W
\end{cases}
\qquad (6)
$$

The described function is used for the evaluation of all the causal relations present, as $r \ge 1$.
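The answer mapping and Eqs. (5) and (6) can be sketched in a few lines of Python. This is only an illustrative reading of the definitions above, not the authors' VBA implementation. The function names are hypothetical, `None` stands in for $\emptyset$, the inputs are assumed to be already disputability-aware (the update function $F_8$ is not reproduced), and taking the minimum only over the non-empty blocks is an assumption about how Eq. (6) treats empty blocks.

```python
from typing import List, Optional, Tuple

# None stands in for the empty evaluation (the "empty set" symbol in the text).
Evaluation = Optional[float]


def answer_to_value(answer: str, not_reported_value: float) -> Evaluation:
    """Map a criterion answer (Y, N, NA, NR, DK) to its numerical evaluation.

    not_reported_value is the number used for 'Applicable but Not Reported';
    in the paper it is derived from the knowledge base, here it is a parameter.
    """
    mapping = {"Y": 1.0, "N": 0.0, "NA": None, "DK": 0.5, "NR": not_reported_value}
    return mapping[answer]


def evaluate_block(ca_disp: Evaluation, expected: str) -> Evaluation:
    """Eq. (5): degree of truth of one block {i, S_i}, with S_i in {'O', 'W'}."""
    if expected not in ("O", "W"):
        raise ValueError("expected must be 'O' (Optimum) or 'W' (Worse)")
    if ca_disp is None:
        return None
    return ca_disp if expected == "O" else 1.0 - ca_disp


def evaluate_rule(block_values: List[Evaluation], outcome: str) -> Optional[Tuple[float, int]]:
    """Eq. (6): (degree of truth, outcome) of a rule, or None if all blocks are empty."""
    if outcome not in ("O", "W"):
        raise ValueError("outcome must be 'O' (Optimum) or 'W' (Worse)")
    defined = [v for v in block_values if v is not None]  # assumption: skip empty blocks
    if not defined:
        return None
    return (min(defined), 1 if outcome == "O" else 0)


# A rule with two blocks: criterion a expected Optimum, criterion b expected Worse.
block_a = evaluate_block(answer_to_value("Y", 0.3), "O")   # 1.0
block_b = evaluate_block(answer_to_value("DK", 0.3), "W")  # 1 - 0.5 = 0.5
print(evaluate_rule([block_a, block_b], "W"))              # (0.5, 0)
```

The value 0.3 passed for ‘Applicable but Not Reported’ is a placeholder; any expert-specific number in [0,1] could take its place.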

3.2.2. Evaluation of relative importance of the node components

The second important part of the knowledge base is the information regarding the relative importance of criteria. For the calculation of the relative importance of a criterion, the methodology takes into consideration two variables: the disputability-aware criteria evaluation ($CA_i^{disp}$) and the importance score of a criterion. The importance score of a criterion, $im_i \in [0,1]$, is an input provided by the expert panel through the knowledge base.

As such, the relative importance of criteria, which are part of a specific Node $j$, is denoted as $Q_{2,j}$ and is calculated through the function $F_7 : F_7(CA_i^{disp}, im_i) \to [0,1] \cup \emptyset$. Formally:

$$
Q_{2,j} =
\begin{cases}
\emptyset, & \forall\, CA_i^{disp} = \emptyset \\
\displaystyle\sum_{i=1}^{n} \frac{CA_i^{disp} + \left(1 - CA_i^{disp}\right) \cdot \left(1 - im_i\right)}{n}, & \text{else}
\end{cases}
\qquad (7)
$$
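A short Python sketch may help in reading Eq. (7). The function name is hypothetical and `None` again stands in for $\emptyset$. The equation does not state how a node with some, but not all, empty criteria is averaged, so skipping the empty criteria and letting $n$ count only the remaining ones is an assumption made here.

```python
from typing import List, Optional


def relative_importance(ca_disp: List[Optional[float]],
                        importance: List[float]) -> Optional[float]:
    """Eq. (7): mean of CA + (1 - CA) * (1 - im) over the criteria of one node.

    Returns None (the empty evaluation) when every criterion evaluation is empty.
    Assumption: empty criteria are skipped and n counts the non-empty ones.
    """
    pairs = [(ca, im) for ca, im in zip(ca_disp, importance) if ca is not None]
    if not pairs:
        return None
    return sum(ca + (1.0 - ca) * (1.0 - im) for ca, im in pairs) / len(pairs)


# One satisfied criterion (importance 0.9) and one failed criterion (importance 0.8):
# the terms are 1.0 and 0.2, so the result is approximately 0.6.
print(relative_importance([1.0, 0.0], [0.9, 0.8]))
```

Read this way, a failed criterion of importance 1 contributes 0 to the average, while a failed criterion of importance 0 contributes 1 and therefore carries no penalty.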

3.2.3. Integration of causal and importance evaluations

The values $R_{j,r}$ for each rule and the relative importance of criteria $Q_{2,j}$ have been defined; therefore we can proceed with the definition of the function describing the calculation of the node score ($SC_j$).

Specifically, given the Sufficiency level $Sl_j$, which is taken from the knowledge base and represents whether or not an expert has provided feedback on the ‘Questionnaire for expert consultation’ for the Node $j$ under evaluation, the score of a Node $j$ is calculated through the application of function $F_4 : F_4(R_{j,r}, Q_{2,j}, Sl_j) \to [0,1] \cup \emptyset$, defined as:

$$
SC_j =
\begin{cases}
\emptyset, & Sl_j = 0 \\
\displaystyle\sum_{i=1}^{n} a_i \cdot R^{2}_{j,i} + a_{n+1} \cdot Q_{2,j}, & Sl_j = 1
\end{cases}
\qquad (8)
$$

where for each Rule evaluation $R_{j,r}$, which has an output of the form $([0,1], \{0,1\})$, its two elements are denoted as $R^{1}_{j,r}$ and $R^{2}_{j,r}$ (the former identifies the degree of truth of the rule while the latter identifies the associated outcome), $r = 1{:}n$, $a_0 = 0$, $R^{1}_{j,n+1} = 1$ and $a_i = \left[1 - \sum_{k=0}^{i-1} a_k\right] \cdot R^{1}_{j,i}$, with $i > 0$. The idea behind this formula is that rule scores and relative importance are aggregated by a weighted average, with weights based on the degree of truth and on decreasing priority, with relative importance having the lowest weight.
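The recursive weights $a_i$ of Eq. (8) are easier to follow when written as a running "remaining weight". The sketch below is a hypothetical Python rendering of that idea, not the authors' code. It assumes that all rule evaluations and $Q_{2,j}$ passed in are non-empty, since the treatment of empty inputs inside the sum is not spelled out in this section.

```python
from typing import List, Optional, Tuple


def node_score(rule_evals: List[Tuple[float, int]],
               q2: float,
               sufficiency: int) -> Optional[float]:
    """Eq. (8): score of one node.

    rule_evals: priority-ordered (degree_of_truth, outcome) pairs, outcome in {0, 1}.
    q2:         relative importance Q_{2,j} of the node's criteria.
    sufficiency: Sl_j, 1 if the expert gave feedback on this node, else 0.
    """
    if sufficiency == 0:
        return None  # the empty score
    remaining = 1.0  # 1 minus the sum of the weights assigned so far
    score = 0.0
    for truth, outcome in rule_evals:
        weight = remaining * truth  # a_i = [1 - sum_{k<i} a_k] * R1_{j,i}
        score += weight * outcome
        remaining -= weight
    # R1_{j,n+1} = 1, so the relative importance receives all remaining weight.
    return score + remaining * q2


# Two rules: (truth 0.5, Worse) then (truth 0.8, Optimum), with Q2 = 0.6.
# Weights: a1 = 0.5, a2 = 0.5 * 0.8 = 0.4, a3 = 0.1; score is about 0.46.
print(node_score([(0.5, 0), (0.8, 1)], 0.6, 1))
```

A fully true top-priority rule therefore fixes the node score on its own: with `rule_evals = [(1.0, 0)]` the score is 0 whatever the value of `q2`.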

Based on the proposed aggregation methodology, it is possible to calculate the overall reliability score of a given ecotoxicological datum, denoted as $SC_{38} \in [0,1]$. It is important to clarify that this reliability score is associated with a specific member of the expert panel, as the score is extracted by using the input provided by that expert during the creation of the knowledge base. By following the same procedure, it is possible to calculate as many reliability scores for a given ecotoxicological datum as there are members of the expert panel.

For a given number of experts $M = 1{:}m$, we denote with $X = \{SC_{38}^{1}, SC_{38}^{2}, \dots, SC_{38}^{m}\}$ the set of reliability scores of an ecotoxicological datum and with $W = \{w_1, w_2, \dots, w_m\}$ the set of the respective weights, each associated with an expert. Each weight is in [0,1].

The calculation of the total reliability score of an ecotoxicological datum is based on the application of the weighted average and is defined as:

$$
TSC = \frac{\sum_{M=1}^{m} SC_{38}^{M} \cdot w_M}{\sum_{M=1}^{m} w_M}
\qquad (12)
$$
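As a quick check of Eq. (12), the sketch below applies an equal-weight average to the per-expert scores of Test 1 reported in Table 1 and, for the ‘Experimental reliability’ node, in Table 2 (Section 4.3). The function name is hypothetical, and skipping experts whose score is Null (`None`) is an assumption, although it is consistent with the average reported in Table 2.

```python
from typing import List, Optional


def total_score(scores: List[Optional[float]], weights: List[float]) -> Optional[float]:
    """Eq. (12): weighted average of per-expert scores.

    Assumption: experts with an empty (None) score are left out of both sums.
    """
    pairs = [(s, w) for s, w in zip(scores, weights) if s is not None]
    total_weight = sum(w for _, w in pairs)
    if total_weight == 0:
        return None
    return sum(s * w for s, w in pairs) / total_weight


# Test 1, overall score per expert (Table 1).
test1_overall = [0.893, 0.160, 1.000, 0.997, 0.490, 0.604, 0.629,
                 0.991, 0.884, 0.767, 0.995, 0.657, 0.617, 0.766]
# Test 1, 'Experimental reliability' score per expert (Table 2); Exp. 6 is Null.
test1_experimental = [0.971, 0.504, 1.000, 0.985, 1.000, None, 0.832,
                      0.996, 0.942, 0.989, 0.995, 0.988, 0.829, 0.989]
equal_weights = [1.0] * 14

print(total_score(test1_overall, equal_weights))
print(total_score(test1_experimental, equal_weights))
```

With the rounded table entries the first value comes out at about 0.746, close to the reported overall score of 0.747, and the second rounds to the reported average of 0.925.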

4. Results and discussion

4.1. Definition of Lines of Evidence (LoEs)

At the first level of the hierarchical structure, it is proposed to evaluate ecotoxicological data according to three Lines of Evidence:

1. Experimental Reliability LoE. Experimental reliability covers the inherent quality of a test relating to test methodology and the way the performance and results of the test are described.

2. Statistical Reliability LoE. Statistical reliability covers the inherent quality of the statistical methodology and assumptions chosen for interpreting experimental results and the way the performance and results of the statistical analysis are described.

3. Biological relevance LoE. Biological relevance covers the extent to which a biological endpoint is appropriate for a particular risk assessment. This category includes the extent to which a test is appropriate for a particular substance, given prior knowledge about its mode of action, and for a particular site, given prior knowledge about physico-chemical conditions and biological characteristics (e.g. habitat typology).

These three LoEs are the ‘mandatory’ block of the Weight-of-Evidence (WoE). They can be informed once and then reused for each assessment made under the same conditions (e.g. if more than one substance is assessed during the same experiment). Some criteria can be informed or not, according to the assessment context (i.e. prior knowledge or not on site conditions). At the lowest level of the hierarchical structure, specific questions were defined and used for the weighting process developed further on. The questions can be answered by ‘Yes’, ‘No’, ‘Not applicable’, ‘Applicable but Not reported’ or ‘I don't know’.

The complete set of LoE, categories, criteria groups and associated criteria-questions is reported in Table A.1 and discussed briefly below.

4.1.1. The experimental reliability LoE

It is proposed to structure information related to the ‘Experimental Reliability’ LoE into four categories: 1. ‘Quality assurance’ category; 2. ‘Substance identification and monitoring’ category; 3. ‘Organisms culture’ category; and 4. ‘Test design’ category.

4.1.1.1. ‘Quality assurance’ category. ‘Quality assurance’ first has to be defined because its meaning differs considerably between frameworks. For example, according to Breton et al. (2009), ‘Quality Assurance’ refers to all the criteria included in their system. Most authors define ‘Quality assurance’ in a more restricted sense, i.e. as compliance with standards and/or Good Laboratory Practices (GLP). Some frameworks do not include ‘Quality assurance’ in their list of criteria. For example, Schneider et al. (2009) indicated that the ToxRTool developers preferred to treat all kinds of data equally and to base the decision on data reliability using only the information provided in the study report. Thus, the ToxRTool does not specifically consider whether data were obtained in compliance with recent guidelines and under GLP conditions. Similarly, Hobbs' scheme does not refer to guidelines, norms or GLP. In contrast, the eco-QESST system (Breton et al., 2009) poses detailed questions related to standards and GLP, and the weights assigned in case of a positive answer are the maximum weights of the system, showing that the eco-QESST developers consider this criterion one of the most important. As stated by Schneider et al. (2009), this latter option however introduces bias in reliability assessment because, for old data, guidelines and/or GLP were not necessarily available at the time the experiment was conducted. Considering this (contradictory) background, we proposed two criteria for the Quality Assurance category, related to guidelines/standards and to GLP respectively.

Fig. 5. The presence of IF–THEN rules per criteria hierarchy level.

4.1.1.2. ‘Substance identification and monitoring’ category. Many of the schemes analysed (Klimisch et al., 1997; US EPA, 1991; Hobbs et al., 2005; Schneider et al., 2009) defined a set of questions related to substance identification/characterisation. Schneider's scheme is the most detailed one and it is thus proposed to build a similar structure for our methodology. Chemical monitoring during the test period is also mentioned in Hobbs' scheme (‘Was the chemical concentration measured?’), but the scoring process is purely dichotomous (i.e. maximum score if the substance is measured and minimum score if it is not measured), while the relevance of measuring the substance during the test period can also depend on the substance itself, e.g. on its ability to be degraded or lost by any other process (e.g. volatilization). Such interactions between criteria have been considered in the process of extracting the experts' knowledge.

4.1.1.3. ‘Organisms’ category. The schemes of Schneider et al. (2009), Breton et al. (2009) and Ågerstrand et al. (2011) included significant criteria related to organisms (i.e. species identification and organisms' physiology). Those were proposed to be included in the developed criteria hierarchy together with the criteria related to the culture design, as well as the acclimation and feeding of organisms.

Fig. 6. Number of elements per IF–THEN rule.

4.1.1.4. ‘Test design’ category. ‘Test design’ refers to all the experimental conditions that must be satisfied for guaranteeing the reliability of the test, i.e. the occurrence of negative and/or positive controls, the monitoring of important physico-chemical characteristics (e.g. temperature), and the mode and route of contamination. The proposed framework includes three different criteria groups for test design, i.e. the ‘Controls’, ‘Physico-chemical conditions’ and ‘Exposure conditions’ sub-categories.

4.1.2. The statistical reliability LoE

It is proposed to structure information related to the ‘Statistical Reliability’ LoE into three categories: 1. ‘Test design’; 2. ‘Assumptions’; and 3. ‘Estimation quality’.

4.1.2.1. ‘Test design’ category. ‘Concentration design’, ‘Replicates’ and ‘Replicates Individual numbers’ are the aspects of the test design that are considered important and relevant for the statistical reliability of an ecotoxicological test. Criteria related to the aforementioned characteristics have been identified and included in the assessment framework. In detail, the choice of concentrations, both their number and their values, affects the precision of LC/EC or NOEC/LOEC estimates. Guidelines currently often require four or five concentrations that are geometrically spaced, in addition to an untreated control. Even if these recommendations are conditional upon certain aspects of the tests, criteria are proposed to check that data actually respect them. Furthermore, criteria from the Breton et al. (2009) and Ågerstrand et al. (2011) frameworks regarding the replicates have been identified as important and taken into consideration.

Fig. 7. Experimental reliability: appearance of elements in IF–THEN rules.

4.1.2.2. ‘Assumptions’ category. Ecotoxicological data can be analysed either through hypothesis-testing or regression techniques. Assumptions are required in both cases and related criteria are proposed in this category. Information related to power and precision is important for assessing the statistical reliability of hypothesis-testing methods. For hypothesis testing, several assumptions need to be considered: independence of errors, normality of errors, and homogeneity of variance between treatments. Homogeneity of variance is the main assumption which must be satisfied. On the other hand, for the regression techniques, the model selection, the model comparison and the prior assumptions are evaluated and assessed.

4.1.2.3. ‘Estimation quality’ category. An advantage of the regression approaches is the capability to provide a confidence interval for the calculated summary statistics (e.g. LC50). To be able to check the precision of the calculation, it is necessary that this information is available in the report/publication under analysis. The ‘estimation quality’ category includes criteria that are selected for assessing if an appropriate model has been selected for adequately fitting the set of data and if the quality of fit and/or comparison of models and/or justification of a given model are useful for assessing the quality of the summary statistics.

Fig. 8. Statistical reliability: appearance of elements in IF–THEN rules.

4.1.3. The biological relevance LoE

It is proposed to structure information related to the ‘Biological Relevance’ LoE into four categories: 1. ‘Duration’; 2. ‘Test design’; 3. ‘Biological endpoint’; and 4. ‘Organism relevance’.

4.1.3.1. ‘Duration’ category. According to the REACH guidance published by ECHA (ECHA, 2008a), ‘Chronic toxicity’ related to waterborne exposure refers to the potential or actual properties of a substance to cause adverse effects to aquatic organisms during exposures which are determined in relation to the life-cycle of the organism. Duration is thus a key factor for determining whether a test can be considered as chronic or not and for identifying potential bias. Four criteria were proposed to check whether the test duration is optimal or not, for acute and chronic tests respectively.

4.1.3.2. ‘Test design’ category. This category combines criteria regarding the physicochemical conditions of the tests under assessment, which were not included in the existing frameworks found in the literature, with an updated version of the relevance criterion of the Ågerstrand et al. (2011) framework.

4.1.3.3. ‘Biological endpoint’ category. For some biotests, several biological endpoints can be followed (e.g. for fish long term toxicity: hatching success, growth and/or survival; for fish embryo-larval test: several development effects like abnormally coiled tail or flexed tail with reduced length, small and disorganised trunk or inhibition of trunk morphogenesis, absence or malformation of ocellus, etc.; for invertebrates: long term toxicity, reproduction, growth and/or lethality; for macrophytes: fresh weight change, yield of energy conversion at photosystem; etc.). When several endpoints can be estimated, it would be necessary to justify whether the reported endpoint is actually appropriate for the risk assessment or not. In this context, seven criteria were proposed, related to the summary statistics, the sensitivity of endpoints and the population relevance (as expressed also in Ågerstrand's framework).

Fig. 9. Biological relevance: appearance of elements in IF–THEN rules.

4.1.3.4. ‘Organism relevance’ category. The criteria included in this category answer some questions related to species relevance in case of site-specific assessments. Species that are tested in the laboratory are indeed generally selected mainly for their ease of breeding and handling (species should be readily available year-round and tolerate handling and laboratory conditions) and their ability to be used as usual standards (allowing a good reproducibility of biological responses to toxicants). However, such test species do not necessarily relate, either phylogenetically or ecologically, to the organisms that dominate natural ecosystems. Species can be described by a list of biological and ecological traits (e.g. life-cycle patterns, reproductive features, food/feeding habits, habitat preferences, etc.). Those traits are related to exposure (routes and levels) and toxicity mechanisms (contamination and elimination kinetics as well as bioaccumulation) and they can thus be used for evaluating the relevance of lab species for a given risk assessment context.

4.2. Statistics of the expert database and discussion

An analysis of the input provided by the experts allows the extraction of interesting statistics and information regarding the insights of the experts on which criteria are important for the analysis of ecotoxicological data and which elements of the criteria hierarchy are considered the most influential. A wealth of information can be extracted from the database; thus in the following paragraphs the most relevant graphs and statistics are presented and briefly discussed.

Firstly, an overview of the IF–THEN rules provided by the experts is presented. Fig. 5 shows the percentage of experts that have provided rules for each level of the criteria hierarchy. From this information we can identify which nodes of the criteria hierarchy are more influential, either positively or negatively, in the evaluation of ecotoxicological data according to the experts. In detail, the most influential nodes are ‘1.2.1 Substance identification’, ‘1.4.1 Controls’, ‘1.2 Substance identification and monitoring’ and ‘1.4 Test design’, followed by nodes ‘1.3.1 Organisms identification and physiology’, ‘2.1.2 Replicates’, ‘1 Experimental Reliability’ and ‘0 Laboratory biotests’.

Fig. 10. Importance of assessment criteria.

Fig. 11. Importance of assessment criteria groups.

Furthermore, Fig. 6 presents the number of elements in each provided rule. The majority of experts have identified rules with up to two elements (therefore including up to two rule blocks) as the most representative for the performed evaluation, since 104 rules with a single element and 38 with two elements have been provided. The use of rules with up to two elements indicates a slightly conservative approach by the expert panel, because single elements can then significantly influence the performed evaluation.

The following figures (Figs. 7, 8 and 9) provide a concise analysis of the influence of specific criteria (and nodes) in the analysis of ecotoxicological data, through their appearance in rules provided by the expert panel. Each graph provides a summary of the number of times each element (i.e. criterion or node) appears in the provided IF–THEN rules, and shows how many times the element has a positive/negative influence, as well as how many times each element has been included in single element rules. Fig. 7 shows the statistics for the ‘Experimental reliability’ LoE, Fig. 8 the ‘Statistical reliability’ LoE and Fig. 9 the ‘Biological relevance’ LoE. Based on these graphs, we identify the following criteria as the most influential ones:

• 1.2.1.1 Substance identity
• 1.4.1.1 No toxicant control
• 2.1.2.1 Replicates
• 2.1.1.2 Concentration spacing
• 2.1.2.2 Individual numbers
• 3.1.1.1 Acute duration
• 3.3.3.1 Population dynamics.

Secondly, an overview of the importance of the criteria hierarchy elements is provided in Fig. 10 (criteria), Fig. 11 (criteria groups) and Fig. 12 (ecotoxicological categories and LoE). The figures provide an overview of the classification of elements into the five predefined categories (from ‘Prerequisite’ to ‘Not relevant’) for every element and the importance a non-optimum evaluation may have in the assessment of ecotoxicological data. As can be seen, the criteria with the highest number of classifications as ‘Prerequisite’ (in light red colour) and ‘Highly important’ (in yellow colour) are ‘1.2.1.1 Substance identity’, ‘1.2.2.2 Concentration monitoring’, ‘1.2.2.3 Loss acceptability’, ‘1.3.1.1 Species identity’, ‘1.4.1.1 No toxicant control’, ‘1.4.1.2 No toxicant control’, ‘1.4.2.1 Temperature’, ‘1.4.2.2 pH’, ‘1.4.3.1 Exposure system’, ‘1.4.3.2 Exposure route’, ‘2.1.1.1 Concentration number’, ‘2.1.2.1 Replicates’ and ‘3.1.1.1 Acute duration’.

Fig. 12. Importance of assessment ecotoxicological categories and LoE.

Table 1. Reliability and relevance scores of the analysed laboratory tests per expert.

| Test/overall score | Exp. 1 | Exp. 2 | Exp. 3 | Exp. 4 | Exp. 5 | Exp. 6 | Exp. 7 | Exp. 8 | Exp. 9 | Exp. 10 | Exp. 11 | Exp. 12 | Exp. 13 | Exp. 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Test 1 | 0.893 | 0.160 | 1.000 | 0.997 | 0.490 | 0.604 | 0.629 | 0.991 | 0.884 | 0.767 | 0.995 | 0.657 | 0.617 | 0.766 |
| Test 2 | 0.000 | 0.000 | 0.002 | 0.211 | 0.021 | 0.064 | 0.083 | 0.000 | 0.002 | 0.134 | 0.000 | 0.049 | 0.064 | 0.094 |
| Test 3 | 0.178 | 0.019 | 0.931 | 0.959 | 0.404 | 0.434 | 0.445 | 0.977 | 0.811 | 0.623 | 0.985 | 0.580 | 0.406 | 0.651 |

Similarly, for the criteria groups the most important according to experts' inputs are ‘1.2.1 Substance identification’, ‘1.2.2 Substance loss and monitoring’, ‘1.3.1 Organisms identification and physiology’, ‘1.4.1 Controls’, ‘1.4.3 Exposure conditions’, ‘2.1.1 Concentration design’, ‘3.1.1 Acute test relevance’ and ‘3.1.2 Chronic test relevance’.

Lastly, the most important ecotoxicological categories are ‘1.2 Substance identification and monitoring’, ‘1.3 Organisms’, ‘1.4 Test design’ and ‘3.1 Duration’ and the most important LoE are ‘1 Experimental reliability’ and ‘2 Statistical reliability’.

4.3. The MCDA aggregation methodology: demonstration through examples

For the application of the developed MCDA methodology, a software program was developed in the MS Excel environment, using macros and Visual Basic for Applications (VBA) code. The program performs the analysis of the reliability and relevance of ecotoxicological data and implements the complete methodology, as it is described in Section 3.2 (and in detail in the supplementary material).

A set of three fictional laboratory tests has been selected for the demonstration of the functionalities of the methodology and the way the MCDA methodology performs. Specifically, for the assessment criteria:

1. All the 57 criteria for the 1st test have been answered as ‘Yes’, therefore simulating a test performed under optimum conditions.

2. All the 57 criteria for the 2nd test have been answered as ‘No’, therefore simulating a test performed under non-optimum conditions.

3. The answers to the 57 criteria vary for the 3rd test, therefore simulating a test performed under realistic laboratory conditions. 40 criteria have been answered as ‘Yes’, 12 as ‘No’, 2 as ‘Applicable but not reported’, 1 as ‘Not applicable’ and 2 as ‘I don't know’. The response sheet of this test can be found as an example in the Supporting Information, Table A.2.

In the simulation, the following aspects have been taken intoconsideration:

• The complete set of available experts' answers has been used (14experts).

Table 2Scores for the experimental reliability LoE per expert and average score per test.

Experimental reliability Exp. 1 Exp. 2 Exp. 3 Exp. 4 Exp. 5 Exp. 6 Exp. 7

Test 1 0.971 0.504 1.000 0.985 1.000 Null 0.832Test 2 0.000 0.000 0.000 0.025 0.040 Null 0.058Test 3 0.430 0.179 1.000 0.967 1.000 Null 0.826

• All experts have been considered of equal importance for the evalua-tion and thus equal weights have been assigned to them.

• Thedisputability scores have been set as 0 for undisputable conditionsand 0.3 for disputable conditions.

As expected, the 1st test, whichwas performed under optimum con-ditions, scores (0.747) much higher in comparison with the 2nd test(0.052), which was performed under non-optimum conditions. Thescores of the 1st and 2nd tests would be expected to be ‘1’ and ‘0’ respec-tively, as they are performed under ‘optimum’ and ‘non-optimum’ condi-tions. Though, as explained in Section 3, theMCDAmethodology is takinginto consideration the possible existing uncertainty in the evaluation ofecotoxicological data and specifically, the presence of disputable condi-tions, as described in Section 3.1.2. This element is evaluated in themeth-odology based on the feedback of the expert panel, which results inoverall scores not equal to 1 or 0 for the aforementioned tests. Thedisputability scores are user defined and therefore can be adjusted ac-cording to the individual preferences of the decision maker. The 3rdtest, whose conditions varied significantly between optimum and non-optimum, received a middle level reliability and relevance score (0.558).

In Table 1, the reliability scores of the three tests are presented sepa-rately for each of the involved experts. The variability that is observedamong experts for the scores of an individual test is based on the differentinputs provided by each expert, regarding the importance of the as-sessment criteria and the relations among criteria, as those areexpressed in the questionnaire for expert consultation and storedin the knowledge base. In practice, the variability is one of the maincores of the methodology, as it allows evaluating and assessing eco-toxicological data on the basis of different experts' opinions based onthe same criteria hierarchy.

Themethodology allows extracting further information regarding theanalysis of each specific test for every single node of the criteria hierarchy.As an example, we can see in Table 2 howwell the different tests performfor the ‘Experimental Reliability’ Line of Evidence per expert and the aver-age score of each test (The score ‘null’means that the 6th expert did notpossess enough information or knowledge for evaluating the ‘Experimen-tal reliability’ node of the hierarchy through the ‘Questionnaire for expertconsultation’, as explained in Section 3.1.2). In a similar way, we can an-alyse and compare every assessed test and ecotoxicological datumbased on every criteria group, ecotoxicological category and line ofevidence of the WoE framework. As it can be seen in Table 3, Test 3scores very well in the ecotoxicological categories ‘Quality Assurance’,‘Substance identification and monitoring’, ‘Test design’ whereas itscores poorly on the categories ‘Estimation quality’ and ‘Duration’.In this way, apart from the overall reliability score, different testscan be quantitatively evaluated, based on their various differentcharacteristics.

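| Exp. 8 | Exp. 9 | Exp. 10 | Exp. 11 | Exp. 12 | Exp. 13 | Exp. 14 | Average |
|---|---|---|---|---|---|---|---|
| 0.996 | 0.942 | 0.989 | 0.995 | 0.988 | 0.829 | 0.989 | 0.925 |
| 0.061 | 0.099 | 0.077 | 0.014 | 0.000 | 0.044 | 0.038 | 0.035 |
| 0.988 | 0.904 | 0.978 | 0.985 | 0.963 | 0.822 | 0.974 | 0.847 |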
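The per-expert rows above, together with the ‘null’ score described in the text, suggest a simple way of summarising a test across the panel. The following is a minimal sketch, not the authors' implementation: it assumes that an expert who returns ‘null’ is simply skipped when averaging (the text does not state how ‘null’ enters the average), and the function name `summarise_expert_scores` is hypothetical. Only the seven scores visible in the first row above are used, so the resulting mean is a partial one and is not expected to match the ‘Average’ column, which covers the full panel.

```python
from statistics import mean, pstdev
from typing import Optional, Sequence


def summarise_expert_scores(scores: Sequence[Optional[float]]) -> dict:
    """Summarise per-expert scores for one test.

    Assumption (not stated in the paper): experts who returned
    'null' (None here) are left out of the average.
    """
    valid = [s for s in scores if s is not None]
    if not valid:
        return {"n": 0, "mean": None, "spread": None}
    return {
        "n": len(valid),            # number of experts who gave a score
        "mean": mean(valid),        # average over those experts
        "spread": pstdev(valid),    # population standard deviation
    }


# Scores of Experts 8 to 14, copied from the first row of the table above.
row_experts_8_to_14 = [0.996, 0.942, 0.989, 0.995, 0.988, 0.829, 0.989]

# Hypothetical: prepend one expert who returned 'null' to show it is skipped.
with_null = [None] + row_experts_8_to_14

summary = summarise_expert_scores(with_null)
print(summary)
```

The spread value gives a quick indication of how strongly the experts disagree on a given test, which is the variability the methodology deliberately preserves.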

Table 3. Average scores of Test 3 for each of the ecotoxicological categories of the hierarchy (14 experts).

| Ecotoxicological category | Test 3 average |
|---|---|
| 1.1 Quality assurance | 0.986 |
| 1.2 Substance identification and monitoring | 0.973 |
| 1.3 Organisms | 0.762 |
| 1.4 Test design | 0.963 |
| 2.1 Statistical test design | 0.660 |
| 2.2 Assumptions | 0.791 |
| 2.3 Estimation quality | 0.082 |
| 3.1 Duration | 0.057 |
| 3.2 Test design relevance | 0.762 |
| 3.3 Biological endpoint | 0.792 |
| 3.4 Organism relevance | 0.599 |
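The category-level reading of Table 3 can be reproduced with a few lines of code. The sketch below is illustrative only: it ranks the Table 3 averages and flags the categories that fall below a cut-off. The cut-off of 0.5 and the name `weak_categories` are assumptions made for this example; the paper does not define a threshold for a ‘poor’ score.

```python
# Average scores of Test 3 per ecotoxicological category (values from Table 3).
TABLE_3 = {
    "1.1 Quality assurance": 0.986,
    "1.2 Substance identification and monitoring": 0.973,
    "1.3 Organisms": 0.762,
    "1.4 Test design": 0.963,
    "2.1 Statistical test design": 0.660,
    "2.2 Assumptions": 0.791,
    "2.3 Estimation quality": 0.082,
    "3.1 Duration": 0.057,
    "3.2 Test design relevance": 0.762,
    "3.3 Biological endpoint": 0.792,
    "3.4 Organism relevance": 0.599,
}


def weak_categories(scores: dict, threshold: float = 0.5) -> list:
    """Return category names scoring below `threshold`, weakest first.

    The threshold is a hypothetical cut-off chosen for illustration.
    """
    below = [(score, name) for name, score in scores.items() if score < threshold]
    return [name for score, name in sorted(below)]


# Categories ordered from lowest to highest average score.
ranked = sorted(TABLE_3, key=TABLE_3.get)
weak = weak_categories(TABLE_3)

for name in ranked:
    flag = "  <- below cut-off" if name in weak else ""
    print(f"{TABLE_3[name]:.3f}  {name}{flag}")
```

With the assumed cut-off, the flagged categories are the two singled out in the text, ‘Duration’ and ‘Estimation quality’.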


5. Conclusions

A new methodology has been developed for the quantitative and systematic assessment of ecotoxicological data. The assessment framework on which the methodology is based was built upon a review of the state of the art in frameworks for assessing the quality of ecotoxicological data and, at the same time, has been expanded to allow ecotoxicological data to be explored thoroughly.

The methodology is based on the use of Multi-Criteria Decision Analysis and fuzzy logic methods, can handle heterogeneous criteria and integrates the evaluation provided by different experts through a solid participatory process.

The developed methodology incorporates the gathered knowledge and expertise from the expert panel and provides the user the possibility to use an extensive source of knowledge, which would not be available in individual decision making contexts.

The methodology allows the number of experts, as well as the predefined numerical values (i.e. disputability scores), to be easily modified or extended according to the users' demands. It has been designed for assessing ecotoxicological laboratory biotests for individual effects but can be easily applied to other types of experimental or modelling approaches (i.e. laboratory biotests for population effects, multi-species pseudo-field tests and modelling data), once the criteria hierarchical structure has been updated and evaluated by the specific expert panel.

Therefore, the methodology is suggested for use in the production of reliable weighted Species Sensitivity Distributions, a vital component of the probabilistic ERA.
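To make the link between the scores and a weighted Species Sensitivity Distribution concrete, the sketch below builds a weighted empirical cumulative distribution in which each toxicity value counts in proportion to its reliability and relevance score. This is an assumption-laden illustration, not the SSWD procedure referred to in the article: the weighting scheme, the function name `weighted_ecdf` and the EC50 values are all hypothetical. Only the three weights (0.747, 0.052, 0.558) are taken from the overall scores reported for Tests 1 to 3.

```python
from typing import List, Sequence, Tuple


def weighted_ecdf(values: Sequence[float],
                  weights: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Weighted empirical CDF.

    Returns the sorted values and, for each one, the fraction of the
    total weight carried by values less than or equal to it.
    """
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    xs, ps = [], []
    cumulative = 0.0
    for value, weight in sorted(zip(values, weights)):
        cumulative += weight
        xs.append(value)
        ps.append(cumulative / total)
    return xs, ps


# Hypothetical EC50 values (mg/L), invented purely for illustration.
ec50 = [1.2, 0.4, 3.5]
# Overall reliability and relevance scores reported for Tests 1, 2 and 3.
scores = [0.747, 0.052, 0.558]

xs, ps = weighted_ecdf(ec50, scores)
for x, p in zip(xs, ps):
    print(f"EC50 = {x:.1f} mg/L  cumulative weight fraction = {p:.3f}")
```

In this sketch the low-scoring datum (0.4 mg/L, weight 0.052) barely moves the distribution, which is the intended effect of weighting data by quality instead of discarding them.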

Acknowledgements

The authors acknowledge the contribution and support for this study by the French National Research Agency, within the research project AMORE (contract 2009 CESA 15 01).

The authors would like to thank each member of the expert panel for their outstanding contribution to the project: Dr. Agerstrand Marlene, Dr. Andres Sandrine, Dr. Beaugelin Karine, Dr. Bisson Michele, Dr. Casas Stellio, Dr. Cauzzi Nicolas, Dr. Ciffroy Philippe, Dr. Emmanouil Christina, Dr. Geoffroy Laure, Dr. Gilbin Rodolphe, Dr. Grasso Paola, Dr. Grote Matthias, Dr. Hayaud Nathalie, Dr. James-Casas Alice, Dr. Marliere Maryse, Dr. Maurau Sylvaine, Dr. Paschke Albrecht, Dr. Pery Alexandre, Dr. Pucheux Nicolas, Dr. Richard Bertille, Dr. Roth Nicolas, Dr. Troise Adrien, Dr. Vincent Jean-Martin and Dr. Vivier Stephanie.

Appendix A. Supplementary data

Supplementary data to this article can be found online at http://dx.doi.org/10.1016/j.scitotenv.2015.06.016.

References

Ågerstrand, M., Küster, A., Bachmann, J., Breitholtz, M., Ebert, I., Rechenberg, B., Rudén, C., 2011. Reporting and evaluation criteria as means towards a transparent use of ecotoxicity data for environmental risk assessment of pharmaceuticals. Environ. Pollut. 159 (10), 2487–2492. http://dx.doi.org/10.1016/j.envpol.2011.06.023.

Barnthouse, L.W., Suter II, G.W. (Eds.), 1986. User's Manual for Ecological Risk Assessment. ORNL-6251. Oak Ridge National Laboratory, Oak Ridge, Tennessee.

Bradbury, S.P., Feijtel, T.C.J., Van Leeuwen, C.J., 2004. Meeting the scientific needs of ecological risk assessment in a regulatory context. Environ. Sci. Technol. 38 (23), 463A–470A. http://dx.doi.org/10.1021/es040675s.

Breton, R.L., Gilron, G., Thompson, R., Rodney, S., Teed, S., 2009. A new quality assurance system for the evaluation of ecotoxicity studies submitted under the new substances notification regulations in Canada. Integr. Environ. Assess. Manag. 5 (1), 127–137. http://dx.doi.org/10.1897/IEAM_2008-026.1.

Critto, A., Suter II, G.W., 2009. Environmental risk assessment. In: Marcomini, A., et al. (Eds.), Decision Support Systems for Risk-Based Management of Contaminated Sites. Springer Science & Business Media.

Duboudin, C., Ciffroy, P., Magaud, H., 2004. Effects of data manipulation and statistical methods on species sensitivity distribution. Environ. Toxicol. Chem. 23 (2), 489–499. http://dx.doi.org/10.1897/03-159.

ECHA, 2008a. Guidance on information requirements and chemical safety assessment. Chapter R.7b: Endpoint Specific Guidance.

ECHA, 2008b. Guidance on information requirements and chemical safety assessment. Chapter R.10: Characterisation of Dose [Concentration]-Response for Environment.

ECHA, 2010. Practical Guide 2: How to Report Weight of Evidence (Helsinki, Finland).

EPA, 2005. Environmental quality criteria reference document for Cockburn Sound (2003–2004). Environmental Protection Authority Report 20, Australia.

European Commission, 2000. Directive 2000/60/EC of the European Parliament and of the Council of 23 October 2000 Establishing a Framework for Community Action in the Field of Water Policy. pp. 1–73 (OJ L 327, 22/12/2000).

European Commission, 2003. Technical Guidance Document in Support of Commission Directive 93/67/EEC on Risk Assessment for New Notified Substances and Commission Regulation (EC) No 1488/94 on Risk Assessment for Existing Substances (Luxembourg).

European Commission, 2006. Regulation (EC) No 1907/2006 of the European Parliament and of the Council of 18 December 2006 concerning the Registration, Evaluation, Authorisation and Restriction of Chemicals (REACH), establishing a European Chemicals Agency. Off. J. Eur. Union L396, 1–849.

European Commission, 2008. Directive 2008/105/EC of the European Parliament and of the Council of 16 December 2008 on Environmental Quality Standards in the Field of Water Policy, Amending and Subsequently Repealing Council Directives 82/176/EEC, 83/513/EEC, 84/156/EEC, 84/491/EEC, 86/280/EEC and amending Directive 2000/60/EC of the European Parliament and of the Council. pp. 84–97 (OJ L 348, 24/12/2008).

European Commission, 2011. Common Implementation Strategy for the Water Framework Directive (2000/60/EC), Guidance Document No. 27: Technical Guidance for Deriving Environmental Quality Standards. Tech. Rep, pp. 2011–2055.

Giove, S., Brancia, A., Satterstrom, F.K., Linkov, I., 2009. Decision support systems and environment: role of MCDA. In: Marcomini, A., et al. (Eds.), Decision Support Systems for Risk-Based Management of Contaminated Sites. Springer Science & Business Media.

Hobbs, D.A., Warne, M., Markich, S.J., 2005. Evaluation of criteria used to assess the quality of aquatic toxicity data. Integr. Environ. Assess. Manag. 1 (3), 174–180. http://dx.doi.org/10.1897/2004-003R.1.

Keeney, R., Raiffa, H., 1976. Decisions with Multiple Objectives: Preferences and Value Trade-offs. Wiley, New York.

Klimisch, H.J., Andreae, M., Tillmann, U., 1997. A systematic approach for evaluating the quality of experimental toxicological and ecotoxicological data. Regul. Toxicol. Pharmacol. 25, 1–5. http://dx.doi.org/10.1006/rtph.1996.1076.

Linkov, I., Loney, D., Cornier, S., Satterstrom, K.F., Bridges, T., 2009. Weight-of-evidence evaluation in environmental assessment: review of qualitative and quantitative approaches. Sci. Total Environ. 407, 5199–5205. http://dx.doi.org/10.1016/j.scitotenv.2009.05.004.

Linkov, I., Welle, P., Loney, D., Tkachuk, A., Canis, L., Kim, J.B., Bridges, T., 2011. Use of multicriteria decision analysis to support weight of evidence evaluation. Risk Anal. 31 (8), 1211–1225. http://dx.doi.org/10.1111/j.1539-6924.2011.01585.x.

Breitholtz, M., Rudén, C., Ove Hansson, S., Bengtsson, B.E., 2006. Ten challenges for improved ecotoxicological testing in environmental risk assessment. Ecotoxicol. Environ. Saf. 63 (2), 324–335. http://dx.doi.org/10.1016/j.ecoenv.2005.12.009 (February).

OECD, 1992. Guideline for Testing of Chemicals - Fish, Acute Toxicity Test (Method 203).

OECD, 2002. Guideline for Testing of Chemicals - Draft Revised Guideline 201: Freshwater Alga and Cyanobacteria, Growth and Inhibition Test.

OECD, 2004. Guideline for Testing of Chemicals. Daphnia sp., Acute Immobilization Test and Reproduction Test Method 202.

Schneider, K., Schwarz, M., Burkholder, I., Kopp-Schneider, A., Edler, L., Kinsner-Ovaskainen, A., Hartung, T., Hoffmann, S., 2009. “ToxRTool”, a new tool to assess the reliability of toxicological data. Toxicol. Lett. 189, 138–144. http://dx.doi.org/10.1016/j.toxlet.2009.05.013.

Suter II, G.W., 2006. Ecological Risk Assessment. CRC Press.

US EPA, 1983. Water Quality Standards Regulation (40 CFR 131).

US EPA, 1991. Technical Support Document for Water Quality-Based Toxics Control. US Environmental Protection Agency, Washington, DC (505/2-90-001).

US EPA, 1994. Water Quality Standards Handbook. Second edition.

US EPA, 1998. Guidelines for ecological risk assessment. EPA/630/R-95/002F. Risk Assessment Forum. US Environmental Protection Agency, Washington, DC.

Verdonck, F.A.M., Jaworska, J., Janssen, C.R., Vanrolleghem, P.A., 2002. Probabilistic Ecological Risk Assessment Framework for Chemical Substances. Integrated Assessment and Decision Support. Proceedings of the First Biennial Meeting of the International Environmental Modelling and Software Society (IEMSS) vol. 1, pp. 144–149.

Warne, M.St.J., Westbury, A.M., Sunderam, R.I.M., 1998. A compilation of data on the toxicity of chemicals to species in Australasia Part 1: pesticides. Australas. J. Ecotoxicol. 4, 93–144.

Weed, D.L., 2005. Weight of evidence: a review of concept and methods. Risk Anal. 25 (6), 1545–1557. http://dx.doi.org/10.1111/j.1539-6924.2005.00699.x.

WHO (World Health Organization), 2001. Report on Integrated Risk Assessment. WHO/IPCS/IRA/01/12. World Health Organization, Geneva, Switzerland.

Zadeh, L.A., 1965. Fuzzy sets. Inf. Control. 8, 338–353. http://dx.doi.org/10.1016/S0019-9958(65)90241-X.