Online Urdu Handwritten Character Recognition System ...

-

Upload

khangminh22 -

Category

Documents

-

view

3 -

download

0

Transcript of Online Urdu Handwritten Character Recognition System ...

Online Urdu Handwritten

Character Recognition System

Quara-tul-Ain Safdar

2019

Department of Electrical Engineering

Pakistan Institute of Engineering and Applied Sciences

Nilore, Islamabad 45650, Pakistan

Reviewers and Examiners

Reviewers and Examiners Name, Designation & Address

Foreign Reviewer 1

Dr. Jian Yang,ProfessorDeptt. of Electronic Engineering, Tsinghua University,Bejing, 100084, China

Foreign Reviewer 2

Dr. Choon Ki Ahn,ProfessorRoom 506, Engineering Building, School of ElectricalEngineering, Korea University,Seoul, Korea

Foreign Reviewer 3

Dr. Liangrui Peng,Associate ProfessorDeptt. of Electronic Engineering, Tsinghua University,Beijing, 100084, China

Internal Examiner 1

Dr. Abdul JalilProfessorDeptt. of Electrical Engineering, International IslamicUniversity,Islamabad, Pakistan

Internal Examiner 2

Dr. Mutawarra HussainProfessorDepartment of Computer and Information Sciences,Pakistan Institute of Engineering and Applied Sciences,Islamabad, Pakistan

Internal Examiner 3

Dr. Ijaz Mansoor QuereshiProfessorDeptt. of Electrical Engineering, Air University,Sector E-9, Islamabad

Head of the Department (Name):

Signature with Date:

Certificate of Approval

This is to certify that research work presented in this thesis titled Online Urdu

Handwritten Character Recognition System was conducted by Ms. Quara-

tul-Ain Safdar under the supervision of Dr. Kamran Ullah Khan.

No part of this thesis has been submitted anywhere else for any other degree. This

thesis is submitted to PhD in partial fulfillment of the requirements for the degree

of Doctor of Philosophy in the field of Electrical Engineering.

Student Name: Quara-tul-Ain Safdar Signature:

Examination Committee:

Examiners Name, Designation & Address Signature

Internal Examiner 1Dr. Abdul JalilProfessorDEE, IIU, Islamabad

Internal Examiner 2Dr. Mutawarra HussainProfessorDCIS, PIEAS, Islamabad

Internal Examiner 3Dr. Ijaz Mansoor QuereshiProfessorDEE, Air University, Islamabad

Supervisor Dr. Kamran Ullah KhanPE, DEE, PIEAS, Islamabad

Department Head Dr. Muhammad ArifDCE, DEE PIEAS, Islamabad

Dean Research PIEASDr. Naeem IqbalDCE, DEE PIEAS, Islamabad

Thesis Submission Approval

This is to certify that the work contained in this thesis entitled Online Urdu

Handwritten Character Recognition System was carried out by Quara-tul-

Ain Safdar under my supervision and that in my opinion, it is fully adequate,

in scope and quality, for the degree of PhD Electrical Engineering from Pakistan

Institute of Engineering and Applied Sciences (PIEAS).

Supervisor:

Name: Dr. Kamran Ullah Khan

Date: February 14, 2019

Place: PIEAS, Islamabad

Head, Department of Electrical:

Name: Dr. Muhammad Arif

Date: February 14, 2019

Place: PIEAS, Islamabad

Online Urdu Handwritten

Character Recognition System

Quara-tul-Ain Safdar

Submitted in partial fulfillment of the requirements

for the degree of Ph.D.

2019

Department ofElectrical Engineering

Pakistan Institute of Engineering and Applied Sciences

Nilore, Islamabad 45650, Pakistan

Acknowledgements

At last, I have traveled the (pro)long(ed) thoroughfare of writing PhD thesis. It

seems like walking on a never ending road. It looks like wandering from room to

room hunting for the diamond necklace that is already around the neck but you

are unaware of its presence. However, the whole endeavoring led to a beautiful

destination.

It started from PIEAS, in Nilore. Well, right before the beginning began,

the Higher Education Commission of Pakistan advertised the Indigenous Scholar-

ship, I applied, got selected, my parents encouraged and made me reach at PIEAS.

Let me take you there for a stroll.

Clear blue sky, picturesque hills, wild greenery, twittering birds, and tran-

quillity.... it is PIEAS! (There are jackals, oxen and pigs too, but do not look at

them). ‘Pleasant’, ‘Cooperative’, ‘Nice’, ‘very Nice’... three persons, four words!

they made the long lasting impression.

In the beginning, I was afraid of the ‘Giants of knowledge’ in the depart-

ment of Electrical Engineering, and my PhD supervisor is one of them. Fortu-

nately, they were kind enough to teach me the skills they learnt through out their

lives. And they were sensible enough to make me realize the difficulties coming

along the journey. You know, the most benevolent Allah favored me with a high

quality man as PhD supervisor. With very clear concepts and deep knowledge, my

PhD supervisor polished my learning skills and illuminated my research-avenue

with his intellectual proficiency. He never refused to answer my questions. As

a human being, giving respect to my space, he guided me deciding between ‘ap-

propriate and inappropriate’, and ‘right and wrong’. Like my parents, he always

made me decide independently. It is him who made me how to acknowledge the

things worth to be acknowledged.

Along the way, there were many faces; some turned into well-wishers, some

into friends, and a few into family. There were (uncountable) helping hands as

well and shoulders to whom I could rest on. I may not forget the ‘Golden Girls’ of

session 2009-2011 who filled the blanks with valuable moments. I will remember

ii

the ‘Caring Agglomerates’ of session 2012-2014 for the respect I was endowed with.

And then there were especial ones! The days we had walking, talking, laughing and

laughing, and once again laughing with hurting jaws. The messages of “bhookun

laggiun veryun shadeedun” (I am very very hungry) for lunch and dinners. The

prathas we literally made together and that birthday cake too. The arguments,

counter-arguments, counter counter-arguments, and never seconded each other’s

opinion yet still sang (screamed is a true word for our singing) the songs together.

This is all the love I am holding on forever.

The road was getting longer than usual and time was getting harder on me

because I had got stuck somewhere on the track of publishing my research work.

Mornings met up with the evenings, the evenings transformed into nights, and

the given time was getting over. But the ‘courage’ did not lost me because of the

prayers. The prayers and true support of my family, friends and well-wishers, my

diamonds!, never left me in the darkness of disappointment. Rather my home-

trips always made me fresh and more energetic to carry on the journey in forward.

I owe them all.

From the starting block to the finish line many ups and downs has been

passed. At this moment, I am thankful for the nights that turned into morn-

ings; I am thankful for the friends who turned into family; I am thankful for the

family who turned into absolute prayers and never-ending source of courage and

determination; and I am thankful for the dreams that turned into reality.

The people who deserve to be thanked in the most are the tax-payers of my

country. Because these are them who paved the way for an ordinary girl to take

the course of her dreams. These are them who helped me finding the diamonds of

my necklace. Thanks, sir/madam; all the rest is mute.

I am thankful to the Higher Education Commission of Pakistan (HEC) for

providing scholarship under the Indigenous PhD 5000 Fellowship Program (Phase-

V) for my PhD studies.

iii

Author’s Declaration

I, Quara-tul-Ain Safdar hereby declare that my PhD Thesis Titled Online

Urdu Handwritten Character Recognition System is my own work and

has not been submitted previously by me or anybody else for taking any degree

from Pakistan Institute of Engineering and Applied Sciences (PIEAS) or any other

university / institute in the country / world. At any time if my statement is found

to be incorrect (even after my graduation), the university has the right to withdraw

my PhD degree.

(Quara-tul-Ain Safdar)

February 14, 2019

PIEAS, Islamabad.

iv

Plagiarism Undertaking

I, Quara-tul-Ain Safdar solemnly declare that research work presented in the

thesis titled Online Urdu Handwritten Character Recognition System is

solely my research work with no significant contribution from any other person.

Small contribution / help wherever taken has been duly acknowledged or referred

and that complete thesis has been written by me.

I understand the zero tolerance policy of the HEC and Pakistan Institute of En-

gineering and Applied Sciences (PIEAS) towards plagiarism. Therefore, I as an

author of the thesis titled above declare that no portion of my thesis has been

plagiarized and any material used as reference is properly referred / cited.

I undertake that if I am found guilty of any formal plagiarism in the thesis titled

above even after the award of my PhD degree, PIEAS reserves the rights to with-

draw / revoke my PhD degree and that HEC and PIEAS has the right to publish

my name on the HEC / PIEAS Website on which name of students are placed

who submitted plagiarized thesis.

(Quara-tul-Ain Safdar)

February 14, 2019

PIEAS, Islamabad.

v

Copyright Statement

The entire contents of this thesis entitled Online Urdu Handwritten Char-

acter Recognition System was carried out by Quara-tul-Ain Safdar are an

intellectual property of Pakistan Institute of Engineering and Applied Sciences

(PIEAS). No portion of the thesis should be reproduced without obtaining ex-

plicit permission from PIEAS.

vi

Contents

Acknowledgements ii

Author’s Declaration iv

Copyright Statement vi

Contents vii

List of Figures xi

List of Tables xv

Abstract xxi

List of Publications and Patents xxii

List of Abbreviations and Symbols xxiii

1 Introduction 1

1.1 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Place of Handwriting in Digital Age . . . . . . . . . . . . . . . . . . 4

1.3 Word Processing Software . . . . . . . . . . . . . . . . . . . . . . . 4

1.4 Integrating Handwriting with Technology . . . . . . . . . . . . . . . 6

1.5 Difficulties Involved in Handwriting Recognition . . . . . . . . . . . 7

1.6 Online and Offline Handwriting Recognition . . . . . . . . . . . . . 9

1.6.1 Dynamic Information acquired through Online Hardware . . 10

1.6.2 Advantages of Online Handwriting Recognition over the Of-

fline . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.6.3 Available Handwriting Recognition Software . . . . . . . . . 12

1.7 Problem Statement: Online Handwritten Urdu Character Recognition 14

vii

1.8 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.9 Literature Review . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.10 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.11 Thesis Organization . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2 Urdu 22

2.1 Urdu Character-Set . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.1.1 Urdu Diacritics . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.1.2 Single and Multi-Stroke Characters in Urdu . . . . . . . . . 24

2.1.3 Word-Breakdown Structure in Urdu . . . . . . . . . . . . . . 24

2.1.4 Half-Forms of Urdu Alphabets . . . . . . . . . . . . . . . . . 25

2.2 Urdu Fonts: Where do these Half-Forms come from? . . . . . . . . 27

2.2.1 The Nastalique Font . . . . . . . . . . . . . . . . . . . . . . 28

2.2.1.1 Characteristics of Nastalique Font . . . . . . . . . . 31

2.3 Idiosyncrasies of Urdu-Writing . . . . . . . . . . . . . . . . . . . . . 33

3 System Description 38

3.1 Data Acquisition . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

3.1.1 GUI: Writing Canvas . . . . . . . . . . . . . . . . . . . . . . 38

3.1.2 Information in Handwritten Character-Signal . . . . . . . . 39

3.1.3 About the Data . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.1.4 Instructions for writing . . . . . . . . . . . . . . . . . . . . . 42

3.2 Character Database . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

3.2.1 Handwritten Samples . . . . . . . . . . . . . . . . . . . . . . 44

3.3 Preprocessing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.3.1 Re-Sampling . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.3.2 Smoothing . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

4 Pre-Classification 50

4.1 Pre-Classification of Half-Forms . . . . . . . . . . . . . . . . . . . . 50

4.2 Results of Pre-Classification . . . . . . . . . . . . . . . . . . . . . . 52

4.3 Further Reflections of Pre-Classification . . . . . . . . . . . . . . . . 56

5 Features Extraction 58

viii

5.1 Wavelet Transform . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

5.1.1 Daubechies Wavelets . . . . . . . . . . . . . . . . . . . . . . 63

5.1.2 Discrimination Power of Wavelets . . . . . . . . . . . . . . . 63

5.1.3 Biorthogonal Wavelets . . . . . . . . . . . . . . . . . . . . . 65

5.1.4 Discrete Meyer Wavelets . . . . . . . . . . . . . . . . . . . . 65

5.2 Structural Features . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

5.3 Sensory Input Values . . . . . . . . . . . . . . . . . . . . . . . . . . 67

6 Final Classification 72

6.1 Final Classifiers with Pre-Classification . . . . . . . . . . . . . . . . 72

6.2 Final Classifiers without Pre-Classification . . . . . . . . . . . . . . 74

6.3 Artificial Neural Networks . . . . . . . . . . . . . . . . . . . . . . . 74

6.4 Support Vector Machines (SVMs) . . . . . . . . . . . . . . . . . . . 75

6.5 Recurrent Neural Networks: Long Short-Term Memory . . . . . . . 77

6.6 Deep Belief Network . . . . . . . . . . . . . . . . . . . . . . . . . . 79

6.7 AutoEncoders . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

6.8 Results and Discussions . . . . . . . . . . . . . . . . . . . . . . . . 82

6.9 Results with Pre-Classification . . . . . . . . . . . . . . . . . . . . . 82

6.10 Results without Pre-Classification . . . . . . . . . . . . . . . . . . . 82

6.11 Maximum Recognition Rate . . . . . . . . . . . . . . . . . . . . . . 85

6.11.1 Overall Accuracy . . . . . . . . . . . . . . . . . . . . . . . . 86

6.11.2 Polling or Subset-wise Accuracy . . . . . . . . . . . . . . . . 87

6.11.3 Character-wise Accuracy . . . . . . . . . . . . . . . . . . . . 87

6.12 Error Analysis using Confusion Matrices . . . . . . . . . . . . . . . 87

6.12.1 Confusing Characters . . . . . . . . . . . . . . . . . . . . . . 91

7 Conclusion 95

7.1 Future Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Appendices 98

Appendix A Confusion Matrices 99

A.1 Confusion Matrices of Support Vector Classifier with db2 -Wavelet-

Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

ix

A.2 Confusion Matrices of Support Vector Classifier with Sensory Input

Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Appendix B Handwritten Urdu Character Samples 115

References 122

x

List of Figures

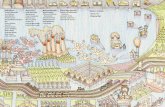

Figure 1.1 Ancient symbols for alphabets [7] . . . . . . . . . . . . . . . 2

Figure 2.1 Urdu alphabets (fundamental) . . . . . . . . . . . . . . . . . 23

Figure 2.2 Alphabets added to fundamental Urdu alphabets to cope

with phonetic peculiarities . . . . . . . . . . . . . . . . . . . 23

Figure 2.3 Examples of Urdu (fundamental) alphabets with major and

(none, one-, two-, or three-) minor strokes . . . . . . . . . . 25

Figure 2.4 Constructing the Urdu-words . . . . . . . . . . . . . . . . . 26

Figure 2.5 All Urdu characters in all half-forms. . . . . . . . . . . . . . 26

Figure 2.6 Single- and multi-strokes half-forms of Urdu Characters . . . 28

Figure 2.7 Examples of words composed of half-form characters. . . . . 29

Figure 2.8 Examples of words composed from (segmented) handwritten

half-form characters . . . . . . . . . . . . . . . . . . . . . . 29

Figure 2.9 Different Urdu fonts . . . . . . . . . . . . . . . . . . . . . . 30

Figure 2.10 Context dependency . . . . . . . . . . . . . . . . . . . . . . 33

Figure 2.11 Distinct features of Nastalique font . . . . . . . . . . . . . . 34

Figure 2.12 Idiosyncrasies of Urdu writing emphasizing ligature overlap,

writing directions, and placement of diacritics . . . . . . . . 35

Figure 2.13 Idiosyncrasies of Urdu writing emphasizing presence and

characteristics of loops in different writing styles . . . . . . 36

Figure 2.14 Idiosyncrasies of Urdu writing emphasizing presence or ab-

sence of loop in the same character penned by different hands 36

Figure 3.1 Block diagram of the proposed Online Urdu character recog-

nition system: from data acquisition to preprocessing to pre-

classification to feature extraction to final classification. . . . 39

Figure 3.2 Writing interface for digitizing tablet . . . . . . . . . . . . . 40

xi

Figure 3.3 An Urdu word is written on the canvas with the help of a

stylus and tablet . . . . . . . . . . . . . . . . . . . . . . . . 41

Figure 3.4 Examples of handwritten character using stylus and digitiz-

ing tablet . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Figure 3.5 Examples of handwritten character using stylus and digitiz-

ing tablet . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

Figure 3.6 Examples of handwritten character using stylus and digitiz-

ing tablet . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Figure 3.7 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet . . 46

Figure 3.8 Re-sampling and Down-sampling of characters . . . . . . . . 48

Figure 3.9 Smoothing of Urdu Handwritten Samples . . . . . . . . . . . 49

Figure 4.1 Pre-classification of initial half-forms on the basis of stroke

count, position and shape of diacritics . . . . . . . . . . . . . 51

Figure 4.2 Pre-classification of medial half-forms on the basis of stroke

count, position and shape of diacritics . . . . . . . . . . . . . 52

Figure 4.3 Pre-classification of terminal half-forms on the basis of

stroke count, position and shape of diacritics . . . . . . . . . 53

Figure 5.1 Wavelet coefficients for ‘sheen’ and ‘zwad’ . . . . . . . . . . 59

Figure 5.2 Wavelet coefficients for different Urdu characters in their

half-forms. Top row shows the character, and x(t) and y(t)

of its major stroke. Second and third rows show level-2

db2 wavelet approximation, and level-4 db2 wavelet detail

coefficients of x(t) and y(t) respectively . . . . . . . . . . . . 61

Figure 5.3 Wavelet coefficients for ‘Tay’ . . . . . . . . . . . . . . . . . . 62

Figure 5.4 Wavelet coefficients for ‘kaafI’ . . . . . . . . . . . . . . . . . 64

Figure 5.5 Wavelet coefficients for ‘hamza’ . . . . . . . . . . . . . . . . 66

Figure 5.6 Wavelet coefficients for ‘ghain’ and ‘fay’ . . . . . . . . . . . . 68

Figure 5.7 Wavelet coefficients for ‘daal’ and ‘wao’. Due to flow of

writing by the users ‘daal’ has included a loop which makes

its wavelets transform similar to ‘wao’ . . . . . . . . . . . . . 69

xii

Figure 5.8 Wavelet coefficients for three different handwritten samples

of ‘meem’ in initial form. Top row shows the character

‘meem’ in initial form written differently by different users,

and x(t) and y(t) of its major stroke. Second and third

rows show level-2 db2 wavelet approximation, and level-4

db2 wavelet detail coefficients of x(t) and y(t) respectively . 70

Figure 5.9 Wavelet coefficients for Urdu character ‘hay ’ in their half-

forms. Top row shows character ‘hay ’, and x(t) and y(t)

of its major stroke. Second and third rows show level-2

db2 wavelet approximation, and level-4 db2 wavelet detail

coefficients of x(t) and y(t) respectively . . . . . . . . . . . . 71

Figure 6.1 Multi-layer perceptron neural network . . . . . . . . . . . . 75

Figure 6.2 Bidirectional multi-layer recurrent neural network . . . . . . 77

Figure 6.3 A simple recurrent neural network. Along solid edges acti-

vation is passed as in feed-forward network. Along dashed

edges a source node at each time t is connected to a target

node at each following time t+1 . . . . . . . . . . . . . . . . 78

Figure 6.4 Confusing pair of ‘fay ’ and ‘ghain’ in medial forms . . . . . 93

Figure 6.5 Confusing pair of ‘Tay ’ and ‘hamza’ in medial forms . . . . 94

Figure 6.6 Confusing pair of ‘ain’ and ‘swad ’ in medial forms . . . . . . 94

Figure 6.7 Confusing pair of ‘meem’ and ‘swad ’ in initial forms . . . . . 94

Figure 6.8 Confusing pair of ‘daal ’ and ‘wao’ in terminal forms . . . . . 94

Figure B.1 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Figure B.2 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

Figure B.3 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

xiii

Figure B.4 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-4 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Figure B.5 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Figure B.6 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-6 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

Figure B.7 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-7 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

Figure B.8 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-8 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Figure B.9 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-9 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Figure B.10 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-10 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Figure B.11 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-11 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Figure B.12 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-12 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Figure B.13 A handwritten ensemble of all Urdu characters written on

the canvas with the help of a stylus and digitizing tablet by

writer-13 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

xiv

List of Tables

Table 1.1 Comparison of the proposed online Urdu handwritten char-

acter recognition method with Arabic work . . . . . . . . . . 18

Table 1.2 Comparison of the proposed online Urdu handwritten char-

acter recognition method with Persian work . . . . . . . . . . 18

Table 1.3 Comparison of online Urdu handwritten character recognition 19

Table 4.1 Pre-classification of Urdu character-set. The encircled num-

bers indicate the cardinality of final stage subsets that could

be obtained with the help of the proposed pre-classifier . . . . 54

Table 4.2 Characters recognized at pre-classification stage and don’t

require any further classification . . . . . . . . . . . . . . . . 55

Table 6.1 Features-classifier Summary . . . . . . . . . . . . . . . . . . . 73

Table 6.2 ANN configurations (trained using wavelet db2 approxima-

tion and detailed coefficients). . . . . . . . . . . . . . . . . . 76

Table 6.3 RNN configurations (trained using sensory input values). . . . 80

Table 6.4 DBN configurations (trained using wavelet dmey approxima-

tion and detailed coefficients). . . . . . . . . . . . . . . . . . 81

Table 6.5 Recognition rates for each subset of handwritten half-form

Urdu characters obtained from the pre-classifier. Results ob-

tained with using ANNs, and SVMs using different features

are presented for comparison. . . . . . . . . . . . . . . . . . . 83

Table 6.6 Recognition rates for each subset of handwritten Urdu char-

acters obtained from the pre-classifier. Results obtained with

DBN, AE-DBN, AE-SVM and RNN using different features

are presented for comparison. . . . . . . . . . . . . . . . . . . 84

xv

Table 6.7 Recognition rates for half-form Urdu characters without go-

ing through pre-classification. Results are obtained using

SVMs, DBN, AE-DBN, AE-SVM and RNN using different

features. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Table 6.8 Characters accuracy chart . . . . . . . . . . . . . . . . . . . . 88

Table 6.9 Confusion matrix for 4-stroke characters (initial half-form)

with dot diacritic above the major stroke . . . . . . . . . . . 89

Table 6.10 Confusion matrix for initial half-forms 2-stroke characters

with other-than-dot diacritic above the major stroke. Overall

accuracy for this subset is 91.9% . . . . . . . . . . . . . . . . 90

Table 6.11 Confusion matrix for medial half-form 2-stroke characters

with dot diacritic above the major stroke. Overall accuracy

for this subset is 93.6% . . . . . . . . . . . . . . . . . . . . . 90

Table 6.15 Confusion matrix for medial half-forms 2-stroke characters

with other-than-dot diacritic above the major stroke. Overall

accuracy for this subset is 93.3% . . . . . . . . . . . . . . . . 92

Table 6.16 Confusion matrix for terminal half-forms 2-stroke characters

with dot diacritic above the major stroke. Overall accuracy

for this subset is 96.7% . . . . . . . . . . . . . . . . . . . . . 92

Table 6.17 Confusion matrix for terminal half-forms 4-stroke characters

with dot diacritic above the major stroke. Overall accuracy

for this subset is 99.6% . . . . . . . . . . . . . . . . . . . . . 93

Table A.1 Confusion matrix for single-stroke characters (initial half-

form). It contains 7 characters. Overall accuracy: 94.7% . . . 99

Table A.2 Confusion matrix for 2-stroke characters (initial half-form)

with dot diacritic above the major stroke. It contains 6 char-

acters. Overall accuracy: 99.1% . . . . . . . . . . . . . . . . . 100

Table A.3 Confusion matrix for 2-stroke characters (initial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 6 characters. Overall accuracy: 91.9% . . . . . . . . . . 100

xvi

Table A.4 Confusion matrix for 2-stroke characters (initial half-form)

with dot diacritic below the major stroke. It contains 3 char-

acters. Overall accuracy: 97.2% . . . . . . . . . . . . . . . . . 100

Table A.5 Confusion matrix for 2-stroke characters (initial half-form)

with other-than-dot diacritic below the major stroke. It con-

tains 2 characters. Overall accuracy: 98.3% . . . . . . . . . . 101

Table A.6 Confusion matrix for 3-stroke characters (initial half-form)

with dot diacritic above the major stroke. It contains 3 char-

acters. Overall accuracy: 94.4% . . . . . . . . . . . . . . . . . 101

Table A.7 Confusion matrix for 3-stroke characters (initial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 2 characters. Overall accuracy: 100% . . . . . . . . . . 101

Table A.8 Confusion matrix for 4-stroke characters (initial half-form)

with dot diacritic above the major stroke. It contains 3 char-

acters. Overall accuracy: 88.8% . . . . . . . . . . . . . . . . . 101

Table A.9 Confusion matrix for 4-stroke characters (initial half-form)

with dot diacritic below the major stroke. It contains 3 char-

acters. Overall accuracy: 92.7% . . . . . . . . . . . . . . . . . 102

Table A.10 Confusion matrix for single-stroke characters (medial half-

form). It contains 8 characters. Overall accuracy: 89.1% . . . 102

Table A.11 Confusion matrix for 2-stroke characters (medial half-form)

with dot diacritic above the major stroke. It contains 8 char-

acters. Overall accuracy: 93.6% . . . . . . . . . . . . . . . . . 102

Table A.12 Confusion matrix for 2-stroke characters (medial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 4 characters. Overall accuracy: 93.3% . . . . . . . . . . 103

Table A.13 Confusion matrix for 2-stroke characters (medial half-form)

with dot diacritic below the major stroke. It contains 2 char-

acters. Overall accuracy: 98.3% . . . . . . . . . . . . . . . . . 103

Table A.14 Confusion matrix for 3-stroke characters (medial half-form)

with dot diacritic above the major stroke. It contains 2 char-

acters. Overall accuracy: 95.0% . . . . . . . . . . . . . . . . . 103

xvii

Table A.15 Confusion matrix for 3-stroke characters (medial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 2 characters. Overall accuracy: 95.8% . . . . . . . . . . 103

Table A.16 Confusion matrix for 4-stroke characters (medial half-form)

with dot diacritic above the major stroke. It contains 2 char-

acters. Overall accuracy: 95.8% . . . . . . . . . . . . . . . . . 104

Table A.17 Confusion matrix for 4-stroke characters (medial half-form)

with dot diacritic below the major stroke. It contains 2 char-

acters. Overall accuracy: 100% . . . . . . . . . . . . . . . . . 104

Table A.18 Confusion matrix for single-stroke characters (terminal half-

form). It contains 16 characters. Overall accuracy: 94.7% . . 104

Table A.19 Confusion matrix for 2-stroke characters (terminal half-form)

with dot diacritic above the major stroke. It contains 9 char-

acters. Overall accuracy: 96.7% . . . . . . . . . . . . . . . . . 105

Table A.20 Confusion matrix for 2-stroke characters (terminal half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 7 characters. Overall accuracy: 99.0% . . . . . . . . . . 105

Table A.21 Confusion matrix for 3-stroke characters (terminal half-form)

with dot diacritic above the major stroke. It contains 3 char-

acters. Overall accuracy: 99.4%. . . . . . . . . . . . . . . . . 105

Table A.22 Confusion matrix for 4-stroke characters (terminal half-

forms) with dot diacritic above the major stroke. It contains

4 characters. Overall accuracy: 99.6% . . . . . . . . . . . . . 106

Table A.23 Confusion matrix for single-stroke characters (initial half-

form). It contains 7 characters. Overall accuracy: 95.4% . . . 107

Table A.24 Confusion matrix for 2-stroke characters (initial half-form)

with dot diacritic above the major stroke. It contains 6 char-

acters. Overall accuracy: 99.0% . . . . . . . . . . . . . . . . . 107

Table A.25 Confusion matrix for 2-stroke characters (initial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 6 characters. Overall accuracy: 89.3% . . . . . . . . . . 108

xviii

Table A.26 Confusion matrix for 2-stroke characters (initial half-form)

with dot diacritic below the major stroke. It contains 3 char-

acters. Overall accuracy: 98.6% . . . . . . . . . . . . . . . . . 108

Table A.27 Confusion matrix for 2-stroke characters (initial half-form)

with other-than-dot diacritic below the major stroke. It con-

tains 2 characters. Overall accuracy: 96.0% . . . . . . . . . . 108

Table A.28 Confusion matrix for 3-stroke characters (initial half-form)

with dot diacritic above the major stroke. It contains 3 char-

acters. Overall accuracy: 94.6% . . . . . . . . . . . . . . . . . 109

Table A.29 Confusion matrix for 3-stroke characters (initial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 2 characters. Overall accuracy: 100% . . . . . . . . . . 109

Table A.30 Confusion matrix for 4-stroke characters (initial half-form)

with dot diacritic above the major stroke. It contains 3 char-

acters. Overall accuracy: 86.6% . . . . . . . . . . . . . . . . . 109

Table A.31 Confusion matrix for 4-stroke characters (initial half-form)

with dot diacritic below the major stroke. It contains 3 char-

acters. Overall accuracy: 94.0% . . . . . . . . . . . . . . . . . 109

Table A.32 Confusion matrix for single-stroke characters (medial half-

form). It contains 8 characters. Overall accuracy: 93.7% . . . 110

Table A.33 Confusion matrix for 2-stroke characters (medial half-form)

with dot diacritic above the major stroke. It contains 8 char-

acters. Overall accuracy: 90.0% . . . . . . . . . . . . . . . . . 110

Table A.34 Confusion matrix for 2-stroke characters (medial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 4 characters. Overall accuracy: 93.0% . . . . . . . . . . 110

Table A.35 Confusion matrix for 2-stroke characters (medial half-form)

with dot diacritic below the major stroke. It contains 2 char-

acters. Overall accuracy: 100% . . . . . . . . . . . . . . . . . 111

Table A.36 Confusion matrix for 3-stroke characters (medial half-form)

with dot diacritic above the major stroke. It contains 2 char-

acters. Overall accuracy: 97.0% . . . . . . . . . . . . . . . . . 111

xix

Table A.37 Confusion matrix for 3-stroke characters (medial half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 2 characters. Overall accuracy: 98.0% . . . . . . . . . . 111

Table A.38 Confusion matrix for 4-stroke characters (medial half-form)

with dot diacritic above the major stroke. It contains 2 char-

acters. Overall accuracy: 96.0% . . . . . . . . . . . . . . . . . 111

Table A.39 Confusion matrix for 4-stroke characters (medial half-form)

with dot diacritic below the major stroke. It contains 2 char-

acters. Overall accuracy: 100% . . . . . . . . . . . . . . . . . 112

Table A.40 Confusion matrix for single-stroke characters (terminal half-

form). It contains 16 characters. Overall accuracy: 96.3% . . 112

Table A.41 Confusion matrix for 2-stroke characters (terminal half-form)

with dot diacritic above the major stroke. It contains 9 char-

acters. Overall accuracy: 91.5% . . . . . . . . . . . . . . . . . 113

Table A.42 Confusion matrix for 2-stroke characters (terminal half-form)

with other-than-dot diacritic above the major stroke. It con-

tains 7 characters. Overall accuracy: 99.4% . . . . . . . . . . 113

Table A.43 Confusion matrix for 3-stroke characters (terminal half-form)

with dot diacritic above the major stroke. It contains 3 char-

acters. Overall accuracy: 100%. . . . . . . . . . . . . . . . . . 113

Table A.44 Confusion matrix for 4-stroke characters (terminal half-

forms) with dot diacritic above the major stroke. It contains

4 characters. Overall accuracy: 98.5% . . . . . . . . . . . . . 114

xx

Abstract

This thesis presents an online handwritten character recognition system for Urdu

handwriting. The main target is to recognize handwritten script inputted on the

touch screen of a mobile device in particular, and other touch input devices in

general. Urdu alphabets are difficult to recognize because of inherent complexities

of the script. In a script, Urdu alphabets appear in full as well as in half-forms:

initials, medials, and terminals. Ligatures are formed by combining two or more

half-form characters. The character-set in half-forms has 108 elements. The whole

character-set of 108 elements is too difficult to be classified accurately by a single

classifier.

In this work, a framework for development of online Urdu handwriting

recognition system for smartphones has been presented. A pre-classifier is de-

signed to segregate the large Urdu character-set into 28 smaller subsets, based on

the number of strokes in a character and the position and shape of the diacrtics.

This pre-classification allows to cope with the demand of robust and accurate

recognition on processors having relatively low computational power and limited

memory available to mobile devices, through banks of computationally less com-

plex classifiers. Based on the decision of the pre-classifier, the appropriate classi-

fier from the bank of classifiers is loaded to the memory to achieve the recognition

task. A comparison of different classifier-feature combinations is presented in this

study to exhibit the features’ discrimination capability and classifiers’ recognition

ability. The subsets are recognized with different machine learning algorithms

such as artificial neural networks, support vector machines, deep belief networks,

long short-term memory recurrent neural networks, autoencoders-support vector

machines, and autoencoders-deep belief networks. These classifiers are trained

with wavelet transform features, structural features, and with sensory input val-

ues. Maximum overall classification accuracy of 97.2% has been achieved. A large

database of handwritten Urdu characters is developed and employed in this study.

This database contains 10800 samples of the 108 Urdu half-form characters (100

samples of each character) acquired from 100 writers.

xxi

List of Publications and Patents

Journal Publication:

• Safdar, Quara tul Ain, Khan, Kamran Ullah, and Peng, Lianguri, “A Novel

Similar Character Discrimination Method for Online Handwritten Urdu

Character Recognition in Half Forms”, Scientia Iranica, vol. , pp. , 2018.

ISSN=“1026-3098”, DOI=“10.24200/sci.2018.20826”

Conference Publication:

• Q. Safdar and K. U. Khan, “Online Urdu Handwritten Character Recog-

nition: Initial Half Form Single Stroke Characters”, in 12th International

Conference on Frontiers of Information Technology, Dec 2014, pp. 292–297.

xxii

List of Abbreviations and

Symbols

AE AutoEncodersANN Artificial Neural NetworkBPNN Back Propagation Neural Network

BRNN Bidirectional Recurrent Neural NetworkDBN Deep Belief Network

GUI Graphical User Interface

IHF Initial Half-FormLSTM Long Short-Term Memory

MHF Medial Half-FormMLP Multi-Layer Perceptron

NLPD National Language Promotion Department

OCR Optical Character Recognition

OS Operating System

PDA Personal Digital Assistant

POS Point of SaleRBF Radial Basis FunctionRBM Restricted Boltzmann MachineRNN Recurrent Neural NetworkSC Stroke CountSVC Support Vector Classifier

SVM Support Vector Machine

THF Terminal Half-FormUK United Kingdom

USA United Staes of America

xxiii

Chapter 1

Introduction

Online handwritten character recognition is a process in which data-stream for

handwritten characters is collected, recognized and converted to editable text as

the writer writes on a digital surface [1], [2]. The digital surface may be a tablet or

any hand-held device (like personal digital assistant, smartphone etc.) that allows

handwriting on its surface either by an electronic pen/stylus or with a finger-tip.

1.1 Background

Writing by hand, an illustration of a synchronization of mind and body, is one of

the most mesmerizing and influential inventions of human beings. It is seeded in

artistic depictions engraved on rocks, etched in sand, and marked on walls that at

last morphed into alphabets [3] (see Figure 1.1), ligatures, graphemes, and words.

Each hand-drawn shape, each handwritten word is not mere a scribbling expres-

sion but the most natural way of information exchange. It reminds us that we

are still conducting the ancient act of using hands to transcribe what rests in our

minds. Reading and writing play a vital role to develop a civilized society. Since

its early days thereabouts 5000 years ago in Mesopotamia and Egypt different

symbols (alphabets) were coined [4] in order to save thoughts and facts. Symbols

were imprinted or scratched in clay, or drawn on parchment, wax tablets, and

papyrus with the help of quill pens and reed pens. They also made use of thin

metal sticks called stylus (pl. styluses or styli) for writing in wax tablets and for

palm-leaf manuscripts. With the passage of time, interaction among individuals

1

Introduction

and tribes increased. Kingdoms expanded with which keeping track of historical

and environmental events became the calendrical and political necessity to survive

and rule. The complexity of administrative actions and trade transactions out-

grew human memory. It required the administrators and traders to keep record

of administrative affairs and transactions in some permanent form [5]. The obser-

vance of this substantial requirement helped writing to evolve as a more reliable

method for registering and presenting the matters and events, deals and deeds,

actions and transactions, and many other goings-on. Earlier the implements or

instruments used to write something were quills, reeds, and metallic sticks. To

speedup the writing process the writing implements were gradually complemented

by letterpress, stamps, chalks, split-nib pens, dip pens, graphite pencils etc. [6].

With the development of pen and paper handwriting became prevailing mode for

documentation. Afterwards, the handwriting turned to be the part of literacy

culture, qualified as a rudiment of academics and considered imperative to pro-

fessional life. Nowadays, the mode of writing is going through a dramatic change.

Early

Semitic

1800

Phoenician

1100 1100 900Greek

800 700Roman

100 CE

Ox

House

Throwing

Stick

Egyptian

2000 BCE

Early

Semitic

1800

Phoenician

1100

Roman

100 CE

Greek

600

Egyptian

2000

Sumerian

4000 BCE

Early

Semitic

1800

Phoenician

1100

Roman

100 CE

Greek

600

Early

Latin

300

Early

Hebrew

1000

Egyptian

2000 BCE

Figure 1.1: Ancient symbols for alphabets [7]

With the emergence of smart IT equipment and digital writing devices it is be-

ing observed that writing by hand is getting less and less in our daily lives [8].

These days it is just to use a keypad or a touch-sensitive screen to do number

of jobs with a single keypress or tap, like operating machines, withdrawing cash,

2

Introduction

filling forms, searching a book or an article in an online repository, posting mes-

sages, uploading images, adding animations and much more. As we type merrily

on keypads or signal to touch-screens, handwriting certainly seems like a dying

form. In this scenario one of the two questions should be addressed; whether this

withering away of handwriting is really a setback or it is inexorable evolution of

language-forms unfolded over the centuries from oral to written to printed, and

now in the form of electronic-ink [9]. Sometimes, at some places it’s just signing

naively on a credit-card-pay-screen using mere a finger or scribbling a signature

with an electronic-pen at the grocery store which tells us that handwriting needs

not fold up and die. Moreover, the desire to coalesce/fuse the convenience of

handwriting with the need to use, maintain, and communicate digital information

requires the digital industry to embed handwritten-input into hand-held devices,

smart boards and smartphones, tablet PCs, personal digital assistants (PDAs),

and other ubiquitous computing devices. From mainframes to ubiquitous devices,

shaping of personal computers and miniature devices have taken an intellectual

leap. Undoubtedly, invention of transistors and ICs revolutionized the technologi-

cal means, however mere the availability of instruments and devices cannot ensure

the breakthroughs. Computing for portable devices and smart environments en-

hanced the human-machine interaction and becomes the key turning point in the

modern world. Looking back, we see that primarily the mainframes were the ma-

chines shared by lots of people. Afterwards, in personal computers era people were

put into a computer generated virtual reality while the user and machine were star-

ing at each other across the desktop. In the current time, the mobile computing

made the machines to live out here in the physical world with the users. Mo-

bile devices which simply started as portable telephones evolved into smartphones

and smart computing devices. Undoubtedly, this evolution of portable computing

devices reshaped the world of personal computers. An important change that hap-

pened with this development is the change in mode of input to portable devices.

Soft(ware)-keyboard-replicas replaced the hard keyboarding which led the atten-

tion towards non-keyboard-based interfaces. An interface which interacts with

the device by taking input either through a pen or a finger-tip(s) is said to be a

non-keyboard-based interface. Input through a pen moving on a tablet or through

3

Introduction

a finger-tip tapping on a touch-screen swayed the research communities to design

and develop the interfaces that could realize the handwritten inputs efficiently.

1.2 Place of Handwriting in Digital Age

Handwriting represents a person’s identity and forms a unique part of a civiliza-

tion. It is less restrictive, more functional, and creative as compared to keyboard-

ing which is a digital counterpart of handwriting. Handwriting of an individual

and handwritten scripts of a society show evolution of text not only for a per-

son but more importantly for a civilization. Written languages either made up of

letters like Latin, English, German, Devanagari, Arabic, Urdu etc. or consist of

characters like Mandarin, Japanese etc. are the examples for evolution of text.

One can see through handwritten documents what went before. Writing by hand

is an integral part of not only our daily life but also a learning tool in any edu-

cational system. It is developed as a functional skill because of majority of our

academic examinations are still handwritten. Even a good handwriting can serve

as a benefit in scholastics. Usually the students who can write legible gets an ad-

vantage over those who cannot. Although technological means are becoming part

of our class rooms yet students’ ability to write clearly is still the center of atten-

tion. We all know that writing by hand is less restrictive. It gives the writer a free

hand to write things and thoughts in any style, draw any kind of shapes, connect

different sections together, scribble side notes, encircle important information and

much more wherever and whenever it makes sense. Besides retaining creative flow

use of pen also comes up with cognitive benefits. Writing and rewriting notes and

information by hand makes it more likely to remember it.

1.3 Word Processing Software

On the other hand, with the development of word processing software, creation,

updating and maintenance of documents can be viewed on a different level. Doc-

ument is typed up, saved with a single click, gone through editing as many times

as necessary. Pictures, shapes and diagrams are allowed to add although graphics

made in word processing softwares are often not as sophisticated as those created

4

Introduction

with specialized programs. Spelling and grammatical mistakes can be corrected

using in-built spell and grammar checking option. Text formatting, margin ad-

justments, and page layout settings are available to make the document look more

appealing, easy to read, and above all in a standard format. Generating multiple

copies and keeping older to newer versions of a document becomes an easy task

with the help of word processors. Converting the document from soft-form to

hard-form, that is to say, taking a printout is nothing but mere a story of a click

(if a printer is already installed). Moreover, the availability of the document files

on various platforms, and their synchronization across multiple devices have made

the documents handling somewhat an easier job.

However, the other side of the picture narrates that typing up in a lan-

guage which uses alphabets different from English (Latin script) is not a trivial

exercise. In fact, there are a number of languages which do not follow Latin

script and therefore have different character-sets. For example, Bulgarian, Be-

larusian, Russian, Ukrainian, Macedonian, Serbian, Old Church Slavonic, Church

Slavonic use Cyrillic alphabets. Bengali, Devanagari, Gurmukhi, Gujarati, and

Tibetan belong to Brahmic family of scripts. Urdu follows Arabic and Persian

like scripts. Chinese, Japanese, Korean, Hebrew, Greek, Armenian, Georgian,

each has its own set of alphabets/characters not matching with Latin alphabets

normally found on a standard keyboard. Moreover, Latin characters with diacritic

(circumflex or umlaut), part of some Latin script based languages (e.g. German,

French, Swedish, Finnish, Spanish, Italian etc.) are not easily accessible on a key-

board. Similarly taking the example of Japanese language used in daily life, there

are more than 3000 Kanji and Kana (Chinese ideographs (Kanji) and Japanese

syllabaries (Kana) where each of the syllabaries appears for one consonant-vowel

pair) characters and digits. Even though designating a nominal subset from larger

character-set (of 3000 characters), there would be at least 100 characters in the

subset. This subset is even too large to input through a keyboard for an ordinary

user [10]. Furthermore, incorporating complex mathematical symbols and equa-

tions in a document is not that much straight forward as that of typing a simple

English sentence. It is quite easier to hand write an equation (on a hard copy of

the document) than using some equation typing software.

5

Introduction

1.4 Integrating Handwriting with Technology

From a technology user’s point of view, such machines are receiving a warm wel-

come in which ease of human-machine interaction is well focused. Input through

handwriting is one such example of convenience that is being tried to provide in

smart machines. So what if we merge the convenience of handwriting with the

smartness of machines?

Earlier, personal computers and machines were provided with keyboards

and keypads. On a keyboard there are two ways to type, either by using two fingers

(Hunt and Peck Method, also called Eagle Finger Typing) or using both hands

where the fingers are set down on A, S, D, F and J, K, L keys and thumbs are used

to access the space bar (Touch-Typing or Touch-Keyboarding). In touch-typing, a

string of keys is typed pressing the keys one finger down at a time without looking

at the keyboard. A typed sentence is obtained through a series of coordinated and

automatized movements of fingers. However in touch-typing, to press the right

key with the right finger requires some beginner’s knowledge. Moreover, typing

rehearsal becomes necessary so that brain can learn coordination of fingers and

intricate movements of fingers could be executed easily at first and at last speedily.

Touch-keyboarding has already been replaced by touch-sensitive screens, panels

and interfaces in writing pads, smartphones, tablets, phablets and many other

portable and functional common electronics, and even in non-portable machines.

A touch-sensitive screen is a device which acts not only as an input device but also

as an output device. Displayed options on a touchscreen (output) can be chosen by

touching the screen (input) with the help of finger(s) or a special stylus (however,

for most modern touch-screens stylus has become an optional choice). The use of

touch-screens is established in various fields like heavy industry, medical, commu-

nication etc.; especially in those areas where keyboard and mouse may not permit

a suitably intuitive, instantaneous, or precise and accurate interaction between

the user and displayed content like kiosks, ATMs, point of sale (POS) systems,

electronic voting machines etc. Varying from machine to machine either a menu

driven interface or ‘app-icons’ are provided with touch-screens to access different

options or applications. Certainly, the technology with embedded touch screens

and intuitive user interfaces has brought great convenience to human-machine in-

6

Introduction

teraction. However, well effective interfacing is not an easy task. Moreover, there

are scenarios, like note taking, drawing/painting or electronic document annota-

tion, where a significant amount of data is taken as input, for which mere touch

interaction is not enough. To make these tasks easier and more natural there

should be other input methods. Today, for natural writing, note taking and draw-

ing, a pen or an active stylus can be viewed as the most potential device among

all input devices. Being precise and more intuitive, a stylus/pen can brush up

the user’s experience of touch-devices. Styluses/Pens are portable and have ex-

tendable functions of pressure sensitivity measurement and auxiliary customizable

buttons for different tasks. Instead of going through menus via touch or click it

is easier to write using a stylus and the required activity will be done. However,

‘from handwritten-command to task-done’ requires logically rich and an efficient

interfacing.

1.5 Difficulties Involved in Handwriting Recognition

As stated above, developing an interface that could recognize and respond effi-

ciently to handwritten input(s) is a non-trivial job. The task of efficient interfacing

is difficult mainly because of two reasons. At first, ‘handwriting’ by itself and at

second ‘hardware’ resources for processing in portable devices. Writing by hand

either by using a simple led-pencil on a paper or with the help of a pen/stylus on

a smart-screen inherits complications from versatile nature of handwriting(s). It

also owes complexities of the language in which the input command has been writ-

ten. Each writable language follows a particular script and each script has its own

alphabets and writing standards. Some scripts allow cursive style of writing, gen-

erally intended for making handwriting faster while some others are non-cursive,

in which writing style follows a ‘printscript’ where letters of a word are not con-

nected to each other. Certainly, the very nature of the language script propounds

difficulties to the interface development.

Nature of the script and versatility in writing by hand are not the only

challenges that make the handwriting recognizable interfacing a tough job. There

are other factors also to which developers would have to deal with. Speed of

writing is one such factor. Humans write things more quickly than they type up

7

Introduction

on a touch-keyboard or on a touch-screen. It requires that the technology used for

integrating handwriting should have fast response-rate so that it could reproduce

the shapes drawn in accordance to the speed of the writer. The technology should

also has to respond according to various delicate aspects of handwriting like the

force with which writing instrument has been used, the tilt of the nib at varying

angles, the quick or might be a slow rotation of the pen at various degrees. While

writing humans are habitual of resting their palm/wrist on writing surface or even

fingers other than used for holding the pen/stylus might touch the writing surface.

If this habit continues to go on a pen-tablet or on a stylus-touchscreen then the

display must be smart enough to distinguish between writing (stylus) function

and touch function. Another important aspect of handwriting is the first-touch-

latency or touch lag. A real pen does not leave a time gap (first-touch-latency or

touch lag) between inking and writing. A touch-latency for touch surfaces is how

fast a touch is registered on the surface. In other words, there is a delay between

actual physical input occurrence and that input being processed electronically

and displayed on an output device. For any interaction, according to Robert B.

Miller [11], the minimum just noticeable time difference related to the response

time of the system is 100 milliseconds. Humans are quicker and can respond even

in few milliseconds. Therefore, to replicate the function of handwriting, there is a

need to devise such devices which could catch up with human response.

Digging further into technology means and measures, we see that there are

limitations in capability of hardware resources available for portable devices. Two

main limitations, in respect of hardware resources, are coherent to smartphones,

tablets and other portable devices. On one side, for processing purposes, relatively

less speedy processors are available in smart devices. While on the other side ran-

dom access memory and auxiliary storage space available for or attached to these

devices cannot be enhanced to more than a certain limit. These lacks in resource-

fulness does not allow the developer to opt for some quick but resource consuming

techniques but that may open the new horizons of logics for the developer in which

these challenges could be coped efficiently.

8

Introduction

1.6 Online and Offline Handwriting Recognition

All of the above discussion is regarding online handwriting recognition. The terms

dynamic, and real time handwriting recognition are also used in place of online

handwriting recognition. It is a system in which handwriting is converted to text

as it is registered on special digitizer, smartphone, PDAs, or any other appro-

priate hand-held device(s). In simple words, the machine recognizes the writing

while the writing process is in progress [12]. In this type of recognition system,

a transducer (e.g. PDAs, smartphones, etc.) records pen-tip movements and

pen-up/pen-down events. The data generated against pen-tip movements and

pen-up/pen-down events is known as digital ink. This is nothing but digital rep-

resentation of handwriting. The elements of online handwriting recognition system

include a stylus/pen, a touch sensitive surface either embedded in, or attached to

some output display and a software that could translate pen movements across

the touch surface into writing strokes and digital text. The input through pen

is dynamic and stated as a function of time and order of the pen-stroke. The

digital representation of the input (pen movements actually) is a time dependent

sequential data based on pen trajectory. It gives not only the two dimensional in-

formation about position, velocity, and acceleration but also recounts the pressure

values, number of strokes, stroke order, and stroke direction.

Offline handwriting recognition or optical character recognition (OCR), in

contrast to online handwriting recognition, is conducted after the writing activity

is completed. In offline handwriting recognition, a raster image of the typed,

printed, or handwritten text is taken from an optical scanner or any other digital

input source (e.g. digital camera). The text might be typed, printed, or written

by hand on a document, on a sign board, on a billboard etc. It might be a

caption superimposed on some picture, photograph, figure etc. It may also be

subtitles embedded into a video or movie. The digital devices like optical scanners

or digital cameras yield the bit pattern of the image of the typed, printed or

handwritten text. After the handwriting is made available in the form of an

image, the recognition task can be performed at some later time, for example,

days, months, or even after years. The image obtained for offline recognition is

converted to a binary or colored image. Binary image is an image in which image

9

Introduction

pixels are either 0 or 1. To acquire a binary version of an image threshold technique

is used. The technique is applicable to both colored and gray scale images.

1.6.1 Dynamic Information acquired through Online Hardware

Today, with the technological development, we are able to get real time informa-

tion for a given process. One such example of this ascent can be seen for online

handwriting devices. Online handwriting hardware has risen up to that matu-

rity level that first hand information can be obtained instantaneously. It is not

just that but also this first hand information proves to be helpful to extract and

compute further information very easily. Instantaneously acquired information

include:

• Precise loci of the pen as a function of time. This also includes retraces of

the stroke made by the writer

• Pen inclination as a function of time. It reflects the trend of the pen/stylus

movement

• Pen pressure value for each pen locus

• A portrait of the full -stroke. It comprehends pen-down and pen-up events

listing all intermediary points between each pen-down and pen-up event

• Temporal connection between major and minor strokes to from a character

Above information can further be processed to yield the following:

• Velocity and acceleration with which a stroke is penned down

• Direction of the pen stroke

• Number of strokes with which a character is drawn

• Order of the strokes to form a character

• Variations at beginnings and endings of the strokes

• Variations in stroke length and width

10

Introduction

All the dynamic information associated to how a character has been written is lost

for scanned version of that handwritten character. That is why offline images of

handwritten scripts are referred to as static images. It is hard to acquire dynamic

features from static images. However, with today’s available online hardware,

dynamic attributes can be obtained and drawn with quite reasonable accuracy.

1.6.2 Advantages of Online Handwriting Recognition over the Offline

Handwriting input is natural and appealing style of input. That is why it is more

acceptable over keyboarding. As the information is immediately available and

processed for online handwriting signal therefore the work-flow is improved. The

main difference between online and offline is handwriting data capturing method.

In online recognition systems, machine/handwritten data is captured at the instant

the person writes on a writing surface. In offline recognition, data is captured at

some later time after the writing is created. The advantage of online data recording

is that online devices also capture temporal information of handwritten stroke

which is not available in offline images. Temporal information helps to keep track

of stroke order and direction. Such information may not seem much beneficial

for languages, like English, where stroke order does not matter. However, the

languages, like Chinese, Arabic, Urdu etc., in which writing a character is stroke-

order dependent, temporal information becomes more favorable to recognition

process. Temporal difference of writing can be used to identify and discard the

overlapping of strokes in a written character.

Another advantage of online system over offline is that online system ren-

ders interactivity between the writer and the device at the time of writing. This

interaction also allows the user to edit and/or rectify the mistakes immediately

which let the recognition errors be corrected at the spot. On the other hand, in of-

fline systems, writer-machine interaction does not occur when the writing activity

is in process. In fact, as stated above, in offline recognition system the interaction

with machine/device happens only after the writing is materialized. The result

of this script-machine interaction is a scanned or digital image which is further

dispensed to some recognition process.

11

Introduction

Adaptation is another advantage of online recognition system. Two possi-

bilities of adaptation can be observed for online recognition systems. One is writer

to machine adaptation and the other is machine to writer adaptation. Writer to

machine adaptation brings advantage where the writer sees that some of the writ-

ten characters are not correctly recognized he may modify his drawings to improve

the recognition. Machine to writer adaptation benefits when the recognizer has

the ability of adapting to the writer. Such recognizers have capability of storing

writer’s samples of handwritten stroke for subsequent recognition process.

On the other hand, to produce more respectable results, offline character

recognition systems are subject to some constraints. For example, the scanned or

digital image fed to recognition engine should have clear contrast between image

colours with even lighting exposure. For a good chance of recognition, specific

image resolution is another important factor. For example, for text recognition in

a document with Google’s OCR software built into Google Drive, the resolution

of the document in height should be at least 10 pixels to increase recognition

probability. Good recognition results might also be font dependent. With Google’s

OCR for best results the document should be prepared in Arial or Times New

Roman font (English script). Offline recognition engines also put a constraint on

file format (jpg, tiff, png, pdf etc.), text layout (single/multicolumn), skewness,

brightness and other layouts of the scanned image/document. For example, earlier

versions of Tesseract engine, originally developed by Hewlett Packard Labs, were

not able to process images for text in two columns and other than TIFF format.

1.6.3 Available Handwriting Recognition Software

There are many application softwares available for offline character recognition.

Following are a few to mention in this regard:

• Tesseract OCR, a free software for character recognition, supported by

Google since 2006 [13]. Initially developed for English language text but

now it can recognize text in more than 100 languages [14] in printed font. In

terms of character recognition accuracy, Tesseract OCR has been considered

one of the most accurate OCR engine [15], [16], [17]. The output format is

text, hOCR, pdf and others with different APIs.

12

Introduction

• Google’s OCR is provided with Google Drive and can recognize 100+ lan-

guages with over 90% accuracy. It can take images (jpg, png) as well as

multipage pdf documents as input for recognition. For Urdu handwritten

text, the OCR has not been found very accurate.

• IRIS-Readiris for MAC and Windows operating systems (OS) implement

optical recognition technology and convert images and pdf files into editable

files. The converted file format may be of Word, Excel, PDF, HTML etc.

and can be chosen by the user. The software keeps the original layout intact.

IRISDocument Server is a server-based OCR solution which automatically

converts unlimited volumes of images into fully editable but structured for-

mats. It also offers hyper-compression of the converted documents for long

or short term archiving. It can deal with more than 100 languages for text

recognition [18].

• ABBYY FineReader OCR software converts the digital photographs and

scanned documents either in image form or pdf format into emendable for-

mats. The output document format may be any of the RTF, TXT, DOC,

DOCX, PDF, XLS, XLSX, HTML, PPTX, CSV, EPUB, DjVu, ODT, or

FB2 format as per user choice. The OCR can recognize 192 languages [19].

• CuneiForm, developed by Cognitive Technologies (a Russian software com-

pany), converts electronic copies of images and documents into editable for-

mats. The editable conversion of electronic copies is accomplished without

changing the fonts and structure of the document/image. It can recognize 28

languages in any printable font (including Russian-English bilingual, French,

German, and Turkish) saving the output in hOCR, HTML, TeX, RTF, or

TXT formats.

• OmniPage is another OCR software. With auto language detection feature,

it can recognize 120 different languages converting the scanned documents

into searchable and editable electronic versions. The output file matches

exactly to original input document in color, font, and layout. It is vended

by Nuance Communications [20].

13

Introduction

Above are a few OCR application softwares to mention. In fact, there is a long list

for offline character recognition softwares including OCR Using Microsoft OneNote

2007, Office Lens (an OCR application by Microsoft for mobile phones), OCR Us-

ing Microsoft Office Document Imaging, PDF Scanner (a document scanner soft-

ware with OCR technology, also available for Android users), ONLINE OCR [21]

(for personal computers an online facility for OCR/Free, Arabic/Persian/Urdu

languages are not supported), SimpleOCR (freeware) and SimpleOCR SDK for de-

velopers (royalty free). The list is to show that there is a lot of work done on offline

version of character recognition. However, for online character recognition there

is a lack of available softwares either commercial or non-commercial. MyScript-

Nebo is an application software for online handwriting recognition provided by

Vision Objects. It turns the natural handwriting recorded through a stylus, a dig-

ital pen or a finger into computer readable information. The software is available

for Linux, Microsoft Windows, Apple MAC OS, iOS, and Android as well. There

are many hand-held and smart devices that are supported by MyScript-Nebo such

as Samsung Galaxy Tab S3 with S-Pen, Samsung Galaxy Note 10.1′′, 2014 edi-

tion with S-Pen, Samsung Galaxy Note Pro 12.2′′ with S-Pen, iPad Pro and iPad

2018 with Apple Pencil, Microsoft Surface Pro 3 (Intel Core i3, i5, i7), Microsoft

Surface Pro 4 (Intel Core m3, i5, i7), Sony Vaio 13 (Core i5), Huawei Media Pad

2 10.1′′ with active pen and many more [22]. Recognizing 59 languages [23], the

software can convert handwritten notes, mathematical equations, and geometrical

shapes into editable and searchable digital text/ink. However, the software does

not support the most widespread right-to-left languages namely Hebrew, Arabic,

Persian, and Urdu [24].

1.7 Problem Statement: Online Handwritten Urdu Char-

acter Recognition

Character recognition has enjoyed a lot of research in the recent past. Good recog-

nition systems are available commercially for alphabetical languages based on Ro-

man characters and for symbolic languages like Chinese. But languages based on

Arabic alphabets like Urdu, Pashto, Sindhi etc. do not have such recognition sys-

14

Introduction

tems. The recognition systems generally have a scanner or a camera as the input

device for off-line recognition, or a stylus/tablet as input device for online recog-

nition. These systems are used in conjunction with the input peripheral devices

like keyboards and mice. With the recent developments in electronic tablets, pen

movements and pressure content can be captured more accurately. However, in

spite of these technological developments, we see there is no such application soft-

ware which could recognize Urdu characters written by hand using a pen-tablet or

on a smartphone with a stylus. Urdu language is based on Arabic alphabets with

a larger character-set as compared to Arabic (38 characters). Urdu, due to its

large character-set and limited number of strokes, is difficult to recognize. In this

work, we have focused on recognition of Urdu characters in half-forms. Half-form

characters appear in the start, mid, or end of a word while writing cursively. The

emphasis of this thesis is to propose a technique to recognize Urdu handwritten

characters using a pen-tablet.

1.8 Motivation

Absence of handwritten character recognition application for Urdu language is

the motivation behind this work. Such an application, in this digital age, is of

national interest. It can be used in mobile phones with styluses, in personal digital

assistants, and in any portable device with pen input. In Pakistan, there are

about 200 Million inhabitants and Urdu is a primary language of communication.

According to Pakistan Telecommunication Authority, there are about 130 Million

mobile phone users [25]. According to market estimates, based on current trends

in the e-commerce sector, there could be 40 million smartphones in Pakistan in

the coming year [26]. In that scenario, there is a need to carry out research

in the field of design and development of online Urdu handwriting recognition

systems for computing devices (like smartphones) to provide benefit to the large

Urdu speaking population of the world. It will also be helpful in Urdu data

entry for people not experienced with Urdu keyboard. Moreover, it can serve

the purpose of Urdu handwriting tutor-software for children and new learners.

The application can further be extended to touch systems as well. Online Urdu

handwriting recognition system can also extend its benefits to the users of other

15

Introduction

Arabic script based languages like Persian, Uyghur, Sindhi, Punjabi and Pushto

with minor modifications.

1.9 Literature Review

Urdu script comprises of a large character-set with cursively written and contextu-

ally dependent alphabets. Being context dependent, Urdu alphabets adjust their

shapes according to the preceding and succeeding characters. In this way, for

an Urdu alphabet there are one full- and at least three different half-forms with

few exceptions. Moreover, complexities for Urdu handwriting recognition arise

not only due to cursiveness and context dependency but also because of the very

nature of an alphabet-structure, word-formation in a particular font-style, and di-

acritics involved in alphabets. Overlapping ligatures, delicate joints of characters

in a word, atilt traces, neither fixed baseline nor standard slope (in Nastalique

font style), associated dots and other diacritic symbols which may be above, be-

low or within the character, displacement of dots with base stroke’s slope and

context [27–29] are a few to shed light on the complexities of Urdu script.

On the basis of recognition target-set, Urdu handwriting recognition (both

off-line and online) can be placed into three categories: isolated- or full-form char-

acter recognition [30–33], selecting ligatures for recognition or holistic approach