Measuring vocabulary size in English as an additional language

Lynne Cameron, University of Leeds

The paper reports findings from a study in two stages to trial tests of vocabulary size in English as an additional language (EAL). The first, pilot, stage trialled the Levels test (Nation, 1990) and the Yes/No test (Meara, 1992) with secondary students aged 15 years, with an average of 11 years of education in the target language. The Levels test was found more useful, mainly because the inclusion of non-words in the Yes/No test produced unreliable results.

In the second stage, the Levels test was used with students aged 13 and 14 years: 63 students for whom English was an additional language, and 84 monolingual English speakers. The test results show a profile of scores for EAL students that differs from those typical of EFL contexts. EAL students, who had had on average 10.5 years in English-medium education, show gaps in their knowledge of the most frequent words and more serious problems with less frequent words, with important implications for educational achievement. Comparison of the mean scores of EAL students and their native speaker peers using t-tests reveals significant differences at the 3K and 5K levels.

The study shows that the Levels test offers a useful research and pedagogic tool in additional language learning contexts, yielding an overall picture of receptive vocabulary learning across groups. The test also produces information about individual language development that may help teaching. Implications include the need for further research into the effects of learning environments on language development, and the need for skilled intervention in additional language development to continue throughout secondary schooling.

© Arnold 2002 10.1191/1362168802lr103oa

Address for correspondence: School of Education, University of Leeds, Leeds LS2 9JT, UK; e-mail: [email protected]

Language Teaching Research 6,2 (2002); pp. 145–173

Downloaded from ltr.sagepub.com at PENNSYLVANIA STATE UNIV on May 12, 2016

I Introduction and background

The study investigates vocabulary size, as one aspect of lexical development, of students in a UK secondary school who use English as an Additional Language (EAL).1 Such students, from ethnic minority and refugee groups, comprise about 10 per cent of the school population, or half a million children, in England and Wales (DfEE, 1997). All curriculum subjects are taught through English (except in Wales, where parents may choose for their children to be taught through Welsh), and the National Curriculum places a dual responsibility on mainstream teachers: to ensure access to the curriculum content and to develop English language skills. Specialist language support is provided via 'language support' teachers, who work alongside mainstream subject teachers. Policy and levels of funding lead to a situation where EAL beginners will probably receive language support in the classroom for some parts of the school day, but it is impossible to provide specialist support for most EAL students once they pass beginner level. The secondary school in which the first stage of the study was carried out is not atypical in having had, at the time of the study, one full-time and two part-time EAL support teachers for around 400 EAL students at various stages of language development. In this school, as in many others, the responsibility and day-to-day provision for English language development rest with mainstream teachers of curriculum subject areas.

Most mainstream teachers have not been trained for this responsibility in their initial teacher training, and it is unusual to find subject teachers who have focused on language development in their continuous professional development. Some mainstream teachers received training in national training programmes held in 1996 and 1997, but many did not. Teachers, particularly at secondary level, are often forced to operate with limited understanding of additional language development and of the teaching practices that might best support it (Franson, 1999). Furthermore, mainstream teachers are unlikely to speak the home languages of their students, which deprives students of opportunities to use the home language to develop English through interpretation, translation or explicit contrast. Where bilingual adults are available to students, they are more likely to be bilingual assistants, with a concomitant lack of status and of training in children's learning (Martin-Jones and Saxena, 1995).

One of the central assumptions underlying the mainstreaming policy for EAL students has been that the language learning environment provided by school classrooms will result in efficient and continuing language development. However, very little detailed empirical research into the patterns and outcomes of additional language development in this learning context has been carried out. Where language use has been a focus of research attention, it has often been addressed as a constituent of socio-cultural identity (Rampton, 1995) or as a minor aspect of good practice in schools (e.g., Blair and Bourne, 1998). One reason for the lack of research into EAL achievement has been the political unacceptability, over the last decade, of public discussion about levels of language proficiency, because of possible racist or discriminatory overtones. This limiting of debate has not always served bilingual students well, in that a lack of focus on language skills possibly contributes to subsequent underachievement in public examinations. One of the aims of the study reported in this paper was to collect data that might inform debate and argue for appropriate specialized support for the development of 'advanced language skills', if such support might increase the opportunities of students in their lives after compulsory schooling.

Research from other countries indicates that additional language development through mainstream education is not unproblematic, and that policy decisions may lead to unexpected outcomes. In Holland, a context with strong demographic and educational parallels to the UK, Verhallen and Schoonen (1993, 1998) have carried out studies into the lexical knowledge of pupils from Turkish communities who learn Dutch as an additional language through school experience. In their first study they found that the Turkish students, aged 9 and 11 years, produced fewer and less varied meaning aspects for Dutch words than did their Dutch native speaker peers. The second study compared lexical knowledge in Turkish children's first language (L1) and in their second language (L2), Dutch. Children's knowledge about words in their L1 was found to be less deep and varied than their knowledge about words in their L2. The combined results of the two studies imply that first language lexical knowledge is not necessarily available to compensate for gaps in second language lexical knowledge. Whereas the vocabulary of monolingual children can be built on and deepened through education, education via an L2 slices through this continuity in lexical development. Schooling produces development in L2 deep lexical knowledge that takes it beyond the depth of L1 lexical knowledge, but this L2 development lags behind monolingual lexical development; Verhallen and Schoonen suggest that this represents a 'serious break' in lexical development (1998: 466).

Studies in North America and Australia have produced fairly consistent results about the time it takes for EAL students to 'catch up' with their native speaker peers in terms of proficiency in the additional language. Collier (1987) showed that middle-class immigrant students in the USA took 5–10 years; Cummins' re-analysis of Canadian data showed that students who started learning English at age 6 or later took between 5 and 7 years to approach grade norms in vocabulary knowledge, although those who arrived in Canada as infants were at or near grade norms (Cummins, 1984). A key distinction in additional language skills is made between academic language, which uses disembedded and decontextualized language for conceptual purposes, and the more conversational language used in everyday social interaction (Cummins, 1984). Early studies with immigrant students in Sweden (Skutnabb-Kangas and Toukomaa, 1976) showed that these two aspects of proficiency, commonly labelled BICS (Basic Interpersonal Communication Skills) and CALP (Cognitive Academic Language Proficiency) (Cummins, 1984), develop differently, and these results have since been replicated in other contexts. Case-study data from Australia (McKay, 1997) showed that additional language students who had received all their education in Australian schools were having problems with academic English at age 12.

The demographics and socio-economics of the ethnic minority communities served by the study schools, as in other inner-city contexts, lead to a situation where many uses of language in the local community do not require English. It was also interesting, and somewhat surprising, to find out from interviews with students in the first study school that several did not watch, or have, television at home. English language development, in both BICS and CALP, is thus heavily dependent on what happens within school.

Alongside the need for empirical data to understand the processes and outcomes of additional language development in different contexts in the UK, there is a need to build up a clearer theoretical model of additional language development, informed by such empirical research, that can guide the development of teachers' practice and educational policy. As one step towards addressing these needs, this paper reports findings from an investigation into students' vocabulary size. The first stage of the study was designed as a pilot to trial two possible tests of vocabulary size to ascertain their usability with school-age EAL students. A second stage then used one of the tests with a larger group. The results of the vocabulary size tests show surprising gaps in vocabulary knowledge after 10 or 11 years of education through English, and contribute to our understanding of how an additional language develops through participation in mainstream classes.

II Vocabulary measures and language development

1 Why focus on vocabulary?

An earlier in-service project in the pilot study school had brought university staff and mainstream subject teachers together to explore the nature and possible causes of language-related underachievement through a combination of seminars and classroom-based action (reported in Cameron, 1997; Cameron et al., 1996). During that project, teachers frequently mentioned students' lack of vocabulary in English as a problem. However, the lack of student output in observed classes meant that little useful data could be collected on this. This study starts from that point, working with vocabulary size as a parameter that may be useful both for assessment and, because teachers already use the concept, for classroom intervention and teacher development.

2 Vocabulary size and language development

The vocabulary tests which were trialled in this study aim to measure receptive vocabulary size through word recognition; if a student recognized a word, he or she was said to 'know' it. Clearly, there is much more to knowing a word than just recognizing it. The studies mentioned earlier with Turkish–Dutch bilingual children emphasized 'deep lexical knowledge' rather than size, operationalized as the production of associations and different sorts of meanings of a word (Verhallen and Schoonen, 1993, 1998). Deep word knowledge includes spelling, word associations, grammatical information and meaning (Richards, 1976; Schmitt, 1998; also Schmitt and Meara, 1997). A further aspect of vocabulary development that needs to form part of any theoretical model is how vocabulary knowledge is used productively in communication.

Acknowledging that word recognition measures can tap into only a small part of the complexity of vocabulary development does not imply that such measures are unimportant. Vocabulary size estimated through word recognition counts gives a guide to the outer limits of vocabulary knowledge, since words that are understood or used with any depth of meaning should be recognized. Given the lack of empirical data on EAL development, and as a first step in assessing the vocabulary development of EAL students, vocabulary size is a useful place to start.

3 Learning and testing vocabulary in context

A further complaint sometimes made against the type of vocabulary measure used in this study is that words are presented to students in isolation, without supporting linguistic or schematic context. This is a reasonable point, since current views on language testing emphasize the need for testing situations to replicate situations of language use or learning (Bachman and Palmer, 1997). The argument can fall down, however, by being insufficiently precise as to how 'context' (linguistic or situational/social) works to support understanding, and by failing to distinguish between learning, which probably happens best with contextual support, and the desired outcomes of learning, which include the capacity not only to make use of, but also to generate, contexts around given words.

In the process of acquiring a vocabulary item, meeting a new word in context and making sense of it is likely to be the first step in a longer process; initial encounters with a word do not necessarily lead to that word being recognized on further occasions. Further meaningful encounters will be needed to establish the full range of a word's meaning possibilities, and to engrave the word in memory. Eventually, after sufficient contextualized encounters, a word will be recognized when it is met in a new context or in isolation. If we then think about the process of completing a word recognition test, we can surmise that decontextualized presentation of a word in a test does not imply that a testee makes sense of the test word in a 'decontextualized' mental void. Rather, the recognition process may activate recall of previous encounters and their contexts. Since we would expect and want secondary-level students to be able to operate this way with large sections of their vocabulary, it does not seem unreasonable to test how much vocabulary can be recognized without extended linguistic or textual contexts.

Furthermore, vocabulary test results have long been found to correlate with reading comprehension test results (Beck et al., 1987; Read, 1997). Stanovich (1980) concludes, from a survey of studies into word recognition, use of context and reading skills, that skilled readers in fact use word recognition skills to understand text, turning to contextual information only when word recognition fails. The relationship between reading comprehension and the recognition of written words also works in the other direction, in that higher levels of reading skill lead to encounters with more words and thus increase receptive vocabulary. Recognizing words out of context is therefore an important type of knowledge, intimately linked with reading.

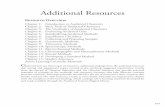

III The design of the studies

If vocabulary levels do reflect language development more generally, and if existing tests can be shown to be valid and reliable, then vocabulary testing might offer a relatively quick and easy way for researchers and schools to monitor progress in language development. The study reported here had two stages. The first stage was designed as a pilot to investigate the usability of established English as a foreign language (EFL) vocabulary tests in measuring the vocabulary levels of EAL students. Two vocabulary size tests, the 'Levels test' (Nation, 1990) and the 'Yes/No test' (Meara, 1992), were administered to the same class in the same school week. National test data and school data on the students were mapped against the vocabulary size measures. At the end of the first stage, the Levels test emerged as more usable. In the second stage, the Levels test was used with a larger group of students to investigate patterns of vocabulary size as measured by the test.

IV Aims and research questions

Overall, the study aimed to

• explore the potential of vocabulary size as a useful parameter in describing outcomes and patterns of mainstream EAL development

• begin the selection and development of a test that is reliable and valid

• compare vocabulary sizes of first language (E1L) and EAL students.2

These aims were turned into more precise research questions:

1 Stage 1

1. Is the Levels test, the Yes/No test, or both, usable with secondary EAL students?

2 Stage 2

2. What vocabulary size results does the Levels test give for EAL students and for their monolingual peers?

V Participants

1 Stage 1

The participants in the first stage were one class from Year 10 of School A, which is in the north of England. Twenty-three students participated in one or both parts of the tests; eight were female and 15 male, reflecting the gender balance in the school as a whole. Four students, all male, used English as a first language; the rest used English as an additional language.

The first page of the test included a self-report form on students' background and languages. According to this, 21 students were born in the UK, all locally; one was born in India and one in Pakistan. They were aged between 14 years 10 months and 15 years 9 months at the time of the test. Nineteen students had attended nursery school,3 all in the local area, and all students had attended primary school locally. The length of time the EAL students had been in UK mainstream education was therefore between 10 and 13 years.

The other languages spoken by the 19 EAL students were Gujerati (10), Panjabi (10), Urdu (10) and Arabic (1); some students reported more than one. Six students reported that they used only English for speaking, reading and writing, although two of these were classed as EAL users by the school Language Support staff. Ten reported using only English for reading and writing, four of whom spoke a language other than English. Ten reported speaking three or more languages; 13 reported reading in two or more languages; 11 reported writing in two or more languages. Urdu was the most commonly reported other language in which students were literate (12).

2 Stage 2

The Levels test was taken by the entire Year 9 of School B, comprising 92 monolingual speakers of English and 52 EAL speakers. The school is located in the Midlands, and the EAL students come mainly from established Bangladeshi and Gujerati heritage communities, alongside small numbers of recently arrived students from various countries.

Of the 52 EAL students, 44 were born in the UK and eight outside the UK. The female : male ratio was 24 : 28. The students were aged between 13 years 10 months and 14 years 9 months at the time the test was taken. Of those born outside the UK, three had been in the UK for less than 2 years and the rest for between 3 and 5 years. Twenty-eight students had attended nursery school in the UK.

The other languages spoken by the EAL students were Bengali (24), Gujerati (20), Hindi (5), Arabic (3), Farsi (2), Malay (1), Setswana/Afrikaans/Haero (1), French (1), Italian (1) and Albanian (1). The most commonly reported other languages read were Arabic (14), Bengali (10) and Gujerati (6).

After removing discrepant scores (see Section IX below) and combining the participants of School A and School B, Levels test scores were analysed for 63 EAL students and 84 E1L students. Key characteristics of these groups are set out in Table 1.

Table 1 Stage 2 participants in the Levels test

| | EAL students (n = 63) | E1L students (n = 84) |
|---|---|---|
| Gender (female : male) | 27 : 36 (1 : 1.3) | 42 : 42 (1 : 1) |
| Average age | 14 years 6 months | 14 years 6 months |
| Average length of time in UK education (incl. nursery) | 10.0 years | 10.6 years |
| Major home languages other than English (some students reported more than one) | Gujerati 30; Bengali 22; Panjabi 7; Hindi 5; Arabic 4 | – |

VI Instruments

1 The Levels test

The vocabulary test devised by Nation (1990) is a multiple-choice test of word recognition. A sample question is shown below:

1. business
2. clock      ___ part of a house
3. horse
4. pencil     ___ animal with four legs
5. shoe
6. wall       _4_ something used for writing

Each test section consists of six words and three definitions or meanings, expressed as a synonym or phrase; the vocabulary used for the meanings is at an easier level than that of the words. The testee has to match words to meanings and write the number of the word next to its meaning, as in the last line above. The original test, which was used here, has five levels, each with six sections, and thus directly tests the meanings of 18 words per level. A more recent version, with 30 words at each level, has been studied for validity with a range of ESL/EFL students (Schmitt et al., 2001). The levels of the test represent different word frequencies, based on several lists of words4 compiled for foreign language teaching. The first level (labelled here 'levels 2K') draws its items from the 2000 high-frequency words of the General Service List (West, 1953). The words were selected as the most frequently occurring words in texts totalling 5 million words, a respectable corpus although somewhat small in comparison with more recent computerized corpora. Although now quite old, the list is likely still to be representative of the most frequent words in English, and around 82 per cent of the words in a typical text will be drawn from this 2000-word set (Nation and Waring, 1997).
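The scoring just described can be sketched in a few lines. This is a minimal illustration, not Nation's published marking procedure: the names `SAMPLE_KEY`, `score_section` and `score_level` are hypothetical, and the key simply records the answers to the sample question (wall, horse and pencil). A level score is the sum of six section scores, so it runs from 0 to 18.

```python
# Hypothetical answer key for the sample section: definition -> number of the correct word.
SAMPLE_KEY = {
    "part of a house": 6,             # wall
    "animal with four legs": 3,       # horse
    "something used for writing": 4,  # pencil
}

SECTIONS_PER_LEVEL = 6


def score_section(key, responses):
    """Count definitions matched to the correct word; blanks (missing keys) score zero."""
    return sum(1 for definition, answer in key.items() if responses.get(definition) == answer)


def score_level(keys, responses_per_section):
    """Sum the six section scores to give a level score out of 18."""
    if len(keys) != SECTIONS_PER_LEVEL or len(responses_per_section) != SECTIONS_PER_LEVEL:
        raise ValueError("a level has exactly six sections")
    return sum(score_section(k, r) for k, r in zip(keys, responses_per_section))


# The testee in the sample filled in only the last definition, correctly.
print(score_section(SAMPLE_KEY, {"something used for writing": 4}))  # 1
```

Keeping one score per level, rather than summing across levels, preserves the profile form used throughout the study.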

Further levels (levels 3K, 5K and 10K) test words from the 3000, 5000 and 10 000 frequency levels of a list compiled by Thorndike and Lorge in 1944, which measures frequency of occurrence in 18 million words of written text. A final level (levels Ac.) tests words from the Academic Word List (listed in Nation, 1990), which contains 836 words that 'are frequent and of wide range in academic texts' at upper secondary and university level (Nation and Waring, 1997: 16). Words in the Academic Word List come from various frequency levels beyond the 2K level, and are largely Graeco-Latin in origin.5

Many EFL courses use frequency lists, including those mentioned above, to select words for language teaching, and, possibly as a result of this, test scores generally show words at one level of frequency being mastered before words at the next level (e.g., Laufer, 1998). Learning EAL is a more random experience lexically, in that words are not deliberately selected for learning but are encountered through everyday classroom materials and discourse. These mainstream experiences may be expected to provide a rich lexical environment (Meara et al., 1997), but the nature of this learning environment has not been described in sufficient detail for students to be tested against words that they have been exposed to. It will be of interest to see whether the vocabulary of EAL students, learning through mainstream subject study, shows a similar gradient in scores across frequency levels or whether their different type of exposure produces a different pattern of vocabulary development.
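Whether an individual profile shows the EFL-style gradient can be checked mechanically. The sketch below is an illustration only: it assumes a profile stored as a dictionary keyed by level label (a hypothetical layout), considers only the four frequency-based levels, and leaves out the Academic level, whose words come from several frequency bands.

```python
# Frequency-based levels, from most to least frequent; the Academic level is excluded
# because its words are drawn from several frequency bands.
FREQUENCY_LEVELS = ("2K", "3K", "5K", "10K")


def gradient_reversals(profile):
    """Return adjacent level pairs where the less frequent level scored higher."""
    return [
        (more_frequent, less_frequent)
        for more_frequent, less_frequent in zip(FREQUENCY_LEVELS, FREQUENCY_LEVELS[1:])
        if profile[less_frequent] > profile[more_frequent]
    ]


def follows_frequency_gradient(profile):
    """True if scores never rise as word frequency falls."""
    return not gradient_reversals(profile)


# Hypothetical profiles (scores out of 18), not data from this study.
steady = {"2K": 17, "3K": 15, "5K": 12, "10K": 6, "Ac": 9}
uneven = {"2K": 14, "3K": 16, "5K": 10, "10K": 3, "Ac": 7}
print(follows_frequency_gradient(steady))  # True
print(gradient_reversals(uneven))          # [('2K', '3K')]
```

Equal scores at adjacent levels are treated as consistent with the gradient; a stricter rule would be a separate design choice.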

An overall figure for vocabulary size is sometimes calculated by researchers using the Levels test. However, there is a lack of comparability in the way size is calculated, and Nation himself (pers. com.) recommends that an overall size should not be worked out. An overall figure is understandably attractive, and in the context of this study it would be useful to have one that could be compared with figures given for native speaker word knowledge. Laufer (1992) places each learner as 'belonging to one level' (1992: 98), presumably the level at which they scored the most words correct. Schmitt and Meara (1997) assume that the score out of 18 at each level reflects the proportion of words known, and total the scores to give 'an estimate of overall vocabulary size' (1997: 22). Laufer (1998) uses a more complicated calculation to reach an overall size. Because of this apparent confusion over calculations, the temptation to produce an overall figure for vocabulary size was resisted, and scores at each level were used to produce a 'profile of vocabulary size' for each student.
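The difficulty with a single figure can be illustrated by computing two different aggregates from the same profile. Both functions below are hypothetical sketches and are not claimed to reproduce the published calculations: `best_level` follows the author's reading of Laufer (1992), the level with the most words correct, and `extrapolated_size` treats each score out of 18 as the proportion of a frequency band known, using band widths that are assumptions made for this example.

```python
LEVELS = ("2K", "3K", "5K", "10K", "Ac")
MAX_PER_LEVEL = 18

# Assumed band widths in words for each frequency level; Ac is left out because
# Academic Word List items span several frequency bands.
BAND_WIDTHS = {"2K": 2000, "3K": 1000, "5K": 2000, "10K": 5000}


def best_level(profile):
    """Label a learner by the level with the highest score (ties go to the more frequent level)."""
    return max(LEVELS, key=lambda level: profile[level])


def extrapolated_size(profile):
    """Treat score/18 as the proportion of each band known and sum over the bands."""
    return sum(profile[level] / MAX_PER_LEVEL * width for level, width in BAND_WIDTHS.items())


# Hypothetical profile, not data from this study.
profile = {"2K": 17, "3K": 14, "5K": 9, "10K": 3, "Ac": 8}
print(best_level(profile))                # 2K
print(round(extrapolated_size(profile)))  # 4500
```

The same five scores yield a level label in one case and a word count in the other, which is one concrete sense in which overall figures are not comparable across studies.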

2 The Yes/No test

The Yes/No test was devised by Meara (1992). It employs a simpler format than the Levels test, while maintaining the same idea of levels of word frequency. At each level, the testee is presented with a list of words and asked to indicate whether or not he or she 'knows' each word. The test, as used in this study, had 60 words at each of the 1K, 2K, 3K, 4K, 5K and Academic levels. The 2K and Academic lists used by Meara are the same as those used by Nation, but the other levels are based on different lists and so do not correspond exactly with those of the Levels test.

To counterbalance any tendency for testees to overestimate their word knowledge by checking words they do not actually know, nonsense words are included. The scoring takes account of any non-words that the testee checks as known. The first six items from the 1000-word level test are shown below, with a nonsense word at item 3:

1 [ ] obey      2 [ ] thirsty    3 [ ] nonagrate
4 [ ] expect    5 [ ] large      6 [ ] accident

The test is easier to do than the Levels test, and has been shown to have validity against other tests of language proficiency (Meara and Buxton, 1987). However, problems have been noted with reactions to non-words, particularly with low-level learners (Read, 1997) and with speakers of some languages, including Arabic (Meara, pers. com.).

Scores are calculated at each level through a formula that penalizes the ticking of non-words. Again, the results of the test are in the form of a profile of vocabulary size that contains scores at each level.
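The formula itself is not given here, so the following is only a sketch of one widely used correction for guessing, not necessarily the formula applied in this study. It assumes that the hit rate h is the proportion of real words checked and the false-alarm rate f the proportion of non-words checked, and computes (h - f) / (1 - f). The split of each 60-item level into 40 real words and 20 non-words is also an assumption. The two usage lines show how the same hit rate yields a lower score as more non-words are checked, the effect discussed for EAL students in Section IX.

```python
def corrected_score(real_checked, real_total, nonwords_checked, nonwords_total):
    """Correction-for-guessing score (h - f) / (1 - f) for one Yes/No level.

    h is the hit rate (real words checked as known) and f the false-alarm
    rate (non-words checked as known). One common formula, assumed here
    for illustration; values below zero mean more false alarms than hits.
    """
    h = real_checked / real_total
    f = nonwords_checked / nonwords_total
    if f >= 1.0:
        # Every non-word was checked, so the responses cannot separate
        # knowledge from guessing; report zero by convention.
        return 0.0
    return (h - f) / (1.0 - f)


# Assumed level layout: 40 real words and 20 non-words.
print(round(corrected_score(36, 40, 4, 20), 3))   # 0.875
print(round(corrected_score(36, 40, 10, 20), 3))  # 0.8
```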

VII Vocabulary size of first language users

Schmitt et al. (2001) report that native speaker subjects who took the Levels test obtained maximum or near-maximum scores. Figures of vocabulary size are always subject to methodological questions, but a university graduate could be expected to know around 15–20K word families. A five-year-old starting school has been estimated as knowing around 4–5K word families and as adding around 1000 new words per year (Nation and Waring, 1997). By the age of 15 years, we might expect a native speaker to know around 10–15K word families.
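Taking the growth figures just cited at face value, and assuming for illustration only that growth is roughly linear from age 5 to age 15, the arithmetic runs as follows, where $V_a$ (a symbol introduced here) is the estimated vocabulary at age $a$:

$$V_{15} \approx V_{5} + (15 - 5) \times 1000, \qquad V_{5} \approx 4000 \text{ to } 5000 \;\Rightarrow\; V_{15} \approx 14\,000 \text{ to } 15\,000$$

This lies at the upper end of the 10–15K range given above.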

VIII Procedures

1 Stage 1: the usability of vocabulary size tests

The two tests were taken by the participants from School A under examination conditions (single desks in the school hall). One student was absent for the Yes/No test; three were absent for the Levels test. The tests were marked and scored, and a sample was cross-checked for reliability of marking. In each test, two sets of scores were so low as to be unusable and were omitted from the final dataset. This left 14 sets of test results from EAL pupils and four from E1L pupils. Vocabulary size profiles for each student were obtained for the two tests, and means and standard deviations across the group were calculated.

The rankings of the EAL students at each level on each test were cross-correlated using Spearman correlations. The rankings were also mapped against two other measures, again using Spearman correlations via SPSS:

a School assessment of language level: Students using English as an Additional Language had been assigned a 'stage' on the English Language Development Assessment (ELDA) scale drawn up by the local authority Education Advisory Service. Within each stage, EAL specialists had assessed students' listening, speaking, reading and writing by matching their performance to descriptors.

The ELDA scale has four stages, with stages 1, 2 and 3 each subdivided into three (1a, 1b, 1c, etc.). Stage 4 is the point at which students are held to reach levels of language use equivalent to those of their native speaker peers. The 10 stages and sub-stages on the ELDA scale were relabelled 1–10, and each student was assigned the numerical point corresponding to the specialist EAL teachers' assessment of his or her language level.

b Published tests: Results were available for all but one student from the NFER-Nelson Cognitive Abilities Test, which has three sub-sections: Verbal, Quantitative and Non-verbal (Thorndike and Hagen, 1986). Correlations were measured between EAL test scores at each level and each of the three NFER scores.
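The ELDA relabelling and the Spearman rank correlations described above can be sketched as follows. This is an illustration only: the study used SPSS, the function names are hypothetical, and the data in the usage lines are invented. Tied values receive the average of the ranks they span, the usual convention for Spearman's rho, which is then computed as a Pearson correlation on the ranks.

```python
import math


def elda_to_point(label):
    """Map an ELDA label ('1a' to '3c', or '4') onto the 1-10 scale."""
    if label == "4":
        return 10
    stage, sub_stage = int(label[0]), label[1]
    return (stage - 1) * 3 + "abc".index(sub_stage) + 1


def average_ranks(values):
    """Rank values from 1 upwards, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the two rank lists."""
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Invented data for five students: ELDA stage and Levels test 2K score.
elda = [elda_to_point(s) for s in ["2b", "3a", "1c", "3c", "2a"]]
scores_2k = [12, 15, 9, 17, 11]
print(elda)                           # [5, 7, 3, 9, 4]
print(spearman_rho(elda, scores_2k))  # 1.0
```

The coefficient is undefined (here, a division by zero) if every student has the same value on one of the measures.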

2 Stage 2: use of the Levels test

In School B, the Levels test was administered to the Year 9 group. Very low or erratic scores were removed (discussed further below) and the Levels test scores from School A and School B were combined for analysis. Means and standard deviations were calculated for each level for both monolingual English speakers (n = 84) and EAL speakers (n = 63). The means of the two groups were compared at each level, using two-tailed t-tests to test for significant differences.
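The form of t-test is not specified, so the sketch below assumes Welch's unequal-variance version, a common choice when group sizes differ (here 84 and 63). It computes only the t statistic and the Welch–Satterthwaite degrees of freedom; a two-tailed p-value can then be read from a t distribution, for example with SciPy's `scipy.stats.t.sf(abs(t), df) * 2`, or the whole test run with `scipy.stats.ttest_ind(a, b, equal_var=False)`. The function name and the toy scores are hypothetical.

```python
import math
import statistics


def welch_t(group_a, group_b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard errors of each group mean (sample variance / n).
    se2_a = statistics.variance(group_a) / n_a
    se2_b = statistics.variance(group_b) / n_b
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Toy scores out of 18 at one level; not data from the study.
e1l_scores = [3, 4, 5, 6, 7]
eal_scores = [1, 2, 3, 4, 5]
t, df = welch_t(e1l_scores, eal_scores)
print(t, df)  # 2.0 8.0
```

With equal group sizes and variances, as in the toy data, Welch's and Student's pooled versions give the same t; they diverge when the groups differ in size and spread.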

IX Findings and discussion: Stage 1 – usability of the tests

1 Practicality of the two tests

With students of this age, the time and effort required to take a test is an important consideration; fatigue or boredom is likely to reduce effort and lead to scores that may under-represent proficiency. Most of the School A students completed all levels of each test in 40 minutes or less, which makes both tests feasible for school use.

The Yes/No test was completed slightly faster, but only one test at each level was given. Using three tests at each level, as recommended, would produce a test too long for students to sustain effort. A similar problem may arise with more recent versions of the Levels test, which have 30, rather than 18, items at each level for increased reliability (Schmitt et al., 2001).

2 Unusable scores

The non-words in the Yes/No test created problems for all students, but particularly for the EAL students, many of whom had their scores heavily reduced by the number of non-words they checked as 'known'. This was particularly evident at the 1K and 2K levels. The phenomenon of 'recognizing' non-words may be linked to the students' experience of learning EAL in the mainstream. This type of unplanned language learning through content study may result in students frequently meeting new words that are not explicitly explained or focused on. From first encounters, words may be only partially understood, but students have to work with this partial knowledge until further encounters provide additional information. The non-words in the test were constructed to resemble English words, and EAL students, who work daily with partially known words, may find it more difficult to distinguish between non-words that look like English words and 'real' words.

Because of the effect of the non-words on scores, the Yes/No test was found to be less useful than the Levels test for the EAL context. However, the Levels test was not without problems. The test papers of the fourth E1L student, whose scores were quite discrepant both across levels and in comparison with other students, showed that he sometimes chose definitions that were not the meaning of the test word but instead had some semantic link to it. For example, for the word 'blame', the definition 'have a bad effect on something' was chosen. This happened more with high-frequency words. The writers of the more recent versions of the Levels test mentioned above have tried to avoid such possibilities, but the case does underline the importance of making sure that test takers understand exactly what they have to do.

Twelve E1L profiles (13 per cent) and five EAL profiles (6 per cent) were omitted from calculations of group means at stage 2. These were either very low scores or discrepant profiles. The EAL scores that were omitted were all very low, defined here as less than 8 out of 18 at Level 2K. Most of these were from recent arrivals in the country, although the converse was not true: some recent arrivals scored quite high. The vocabulary of recently arrived EAL students with little English can be usefully measured with the Levels test, but their scores were not included in overall means because of their skewing effect.

A couple of the omitted E1L profiles had very low scores overall, but most of the discrepant profiles combined quite high scores at one or more levels with zero at others, e.g., [0, 16, 8, 5, 0] or [17, 13, 0, 0, 0]. Sudden drops in scores after the 3K or 5K levels occurred in five cases and suggested that the students had ‘given up’ on the test.
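The exclusion decisions described in this section can be expressed as a simple screening rule. In the sketch below only the ‘very low’ cut-off (fewer than 8 of 18 at 2K) comes from the text; the threshold of 12 used to decide that a level counts as ‘quite high’, the function name and the example profiles are hypothetical, and in practice the author reports that such decisions still called for judgement.

```python
from typing import Mapping

ITEMS_PER_LEVEL = 18   # items per level in the version of the Levels test used here
LOW_2K_CUTOFF = 8      # from the text: fewer than 8/18 at 2K counts as very low
HIGH_LEVEL_SCORE = 12  # hypothetical: a level score this high counts as 'quite high'


def screen_profile(scores: Mapping[str, int]) -> str:
    """Return 'discrepant', 'very low' or 'keep' for one student's Levels test profile.

    'Discrepant' is operationalised (as an assumption) as a zero at some level
    alongside a score of at least HIGH_LEVEL_SCORE at another level.
    """
    values = list(scores.values())
    if any(not 0 <= v <= ITEMS_PER_LEVEL for v in values):
        raise ValueError("each level score must lie between 0 and 18")
    if 0 in values and max(values) >= HIGH_LEVEL_SCORE:
        return "discrepant"
    if scores["2K"] < LOW_2K_CUTOFF:
        return "very low"
    return "keep"


# Invented profiles, keyed by level.
profiles = {
    "gave_up": {"2K": 17, "3K": 13, "5K": 0, "10K": 0, "Ac.": 0},
    "very_low": {"2K": 6, "3K": 4, "5K": 2, "10K": 1, "Ac.": 1},
    "typical": {"2K": 17, "3K": 16, "5K": 13, "10K": 8, "Ac.": 9},
}
for name, profile in profiles.items():
    print(name, screen_profile(profile))
```

Checking for discrepancy first means that an erratic profile with a zero at one level is reported as discrepant rather than as very low.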

Most of the omitted monolingual students had reading difficulties or other special educational needs. This suggests that literacy skills are a factor in completing the test. Both tests are, of course, written tests, requiring the testees to read the test items. This has not been much discussed in work with the Levels test to date; Schmitt et al., for example, state that the Levels test requires ‘little grammatical knowledge or reading ability’ (2001: 69). Using the test with school-age students highlights the readability issue. More research is needed to see how literacy skills interact with measures of vocabulary size. Meanwhile, we should perhaps think of the Levels test as a measure of ‘written English vocabulary size’, rather than of ‘vocabulary size’ per se.

3 Comparisons of scores from the two tests

When the rankings from the two tests carried out in School A were correlated at each frequency level (Table (i) in the Appendix), only two significant correlations were found: between Yes/No Academic and Levels 5K, and between Yes/No 3K and Levels 2K. This level of correspondence is quite weak: with 30 correlations computed (five Levels test scores against six Yes/No scores), one or two would be expected to reach p < 0.05 purely by chance. Furthermore, if the tests had been measuring the same construct, we would have expected more instances of significant correlation, particularly at the 2K and Academic levels, where the tests are based on the same word lists. So although, in informal feedback, many of the students preferred the Yes/No test as easier to complete, it is not, as it stands, interchangeable with the somewhat more demanding Levels test.
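The arithmetic behind ‘expected by chance’ can be made explicit. Table (i) contains 5 × 6 = 30 correlations, so at α = 0.05 about 30 × 0.05 = 1.5 would be expected to reach significance with no real relationship at all. The sketch below also gives the binomial probability of two or more such results. It assumes the 30 tests are independent, which they are not (they share the same 14 students), so the figure is only a rough guide; the helper name is hypothetical.

```python
from math import comb


def prob_at_least(k: int, n: int, alpha: float) -> float:
    """Probability that at least k of n independent tests are significant by chance."""
    return sum(comb(n, i) * alpha**i * (1 - alpha) ** (n - i) for i in range(k, n + 1))


n_correlations = 5 * 6   # Levels test levels x Yes/No levels in Table (i)
alpha = 0.05
expected_by_chance = n_correlations * alpha
p_two_or_more = prob_at_least(2, n_correlations, alpha)
print(f"expected: {expected_by_chance:.1f}, P(at least 2): {p_two_or_more:.2f}")
# expected: 1.5, P(at least 2): 0.45
```

Under these simplifying assumptions, two significant results out of 30 would turn up by chance almost half the time.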


4 The scores of vocabulary size

The mean scores for the EAL students and the E1L students of School A on the two tests are presented in Figures 1 and 2, and summarized in Table 2. Scores on both tests showed great variation across individual EAL students, reflected in standard deviations of between 1.8 and 3.8 items (10 and 21 per cent of the 18 items at each level) in the Levels test, and between 11 and 21 per cent in the Yes/No test. Nevertheless, on the Levels test the mean scores, and most individual scores, are highest for the most frequent words and decrease progressively as word frequency falls. This gradient is similar to that found for EFL learners, but is less steep than for FL students of a similar age and educational level (Laufer, 1998), with many more words of lower frequency being recognized. This is to be expected, since EAL students are likely to encounter a wide range of vocabulary through their various subject classes.

Figure 1 School A: Mean scores on the Levels test

Figure 2 School A: Mean scores on the Yes/No test

Table 2 School A: The results of the two tests

The Levels test (scores out of 18)

         EAL students                      E1L students
Level    mean   %    SD    %     n         mean   %    SD    %     n
2K       16.8   93   1.8   7.8   14        16.8   93   2.2   12.2  4
3K       15.8   88   2.1   11.7  14        16.5   88   2.6   14.4  4
5K       12.9   72   3.8   21.1  14        17.3   96   0.8   4.4   4
10K      8.4    47   2.7   15.0  14        9.3    52   5.4   30.0  4
Ac.      8.7    48   3.6   20.0  14        8      44   4.1   22.8  4

The Yes/No test

         EAL students          E1L students
Level    mean   SD     n       mean   SD     n
1K       82     11.1   15      80     19.2   4
2K       74     16.1   15      94     1.4    3
3K       81     20.9   14      92     7.6    3
4K       73     16.2   15      68     33.3   4
5K       74     19.7   15      79     24.0   4
Ac.      49     18.3   14      64     24.9   4

Note: Variation in the number of scores in the Y/N test is due to ‘false alarms’ rendering profiles unreliable.

Another way of presenting the Levels test scores is to take a score of 16 out of 18 (89 per cent) as representing ‘mastery’ of the level (Read, 1988). On this criterion, five of the 14 EAL students (36 per cent) did not reach mastery at the 3K level, and nine (64 per cent) did not reach it at the 5K level, whereas all the monolingual students did. Only one EAL student and one monolingual student could be said to have mastery of the 10K most frequent words. Given the expectation that native-speaker 15-year-olds would know 10–15K word families, these results seem on the low side. Examples of words at each level that were not recognized are set out in Table (ii) in the Appendix.
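The percentages in this section follow directly from the 18 items at each level. The sketch below converts raw scores to percentages of a level, checks the 16/18 mastery cut-off (about 89 per cent) and counts students below it. The list of 3K scores is invented so that five of 14 students fall below the cut-off, matching the count reported above; it is not the study's data, and the function names are hypothetical.

```python
from typing import Sequence, Tuple

ITEMS_PER_LEVEL = 18
MASTERY_CUTOFF = 16  # 16 of 18 items, following Read (1988) as cited above


def as_percent(score: float, items: int = ITEMS_PER_LEVEL) -> float:
    """Express a raw score (or a standard deviation) as a percentage of one level."""
    return 100 * score / items


def below_mastery(scores: Sequence[int], cutoff: int = MASTERY_CUTOFF) -> Tuple[int, int]:
    """Return how many scores fall below the cut-off, and that count as a rounded percentage."""
    n_below = sum(1 for s in scores if s < cutoff)
    return n_below, round(100 * n_below / len(scores))


print(round(as_percent(MASTERY_CUTOFF)))               # 89
print(round(as_percent(1.8)), round(as_percent(3.8)))  # SD range quoted earlier: 10 21

hypothetical_3k = [18, 17, 17, 16, 16, 16, 18, 17, 16, 15, 14, 15, 12, 13]
print(below_mastery(hypothetical_3k))                  # (5, 36)
```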

I want to highlight the potential importance for EAL learners of the gaps found at the 5K level. In everyday classroom encounters with English, students will meet the most frequent words often and in multiple contexts. There will be far fewer opportunities to learn less frequent words, such as those at the 5K and 10K levels, which are also less likely to be encountered outside school. And yet mastery of the 5K level is necessary for comprehension of anything beyond the most basic texts. This issue will be discussed further as we proceed.

As expected, the Academic level scores are very low. If such a level is to be useful for secondary EAL, then a word list appropriate to secondary level needs to be constructed to replace the university-oriented list currently used.

5 Correlations between test scores and school assessment of language

Table (iii) in the Appendix shows correlations of the ranking of scores at each level on the two tests against the school assessment of language for EAL students. The Yes/No test scores correlated significantly at the 1K and 3K levels, and the Levels test scores correlated significantly at 2K and at the Academic level (p < 0.05).

There may be some link between this finding and an observation I have made elsewhere about the assessment of additional language development in UK schools (Cameron and Bygate, 1997).


In the widely used four-stage assessment framework, assessment at the beginning and second stages of language learning is quite finely tuned. The third stage is very wide, and the fourth stage is described as native speaker level. EAL students who are born in the UK will therefore spend most of their late primary and secondary years in the third stage. Given this, the third stage appears to be grossly under-detailed. Specialist EAL support teachers who use a four-stage scale as a professional tool for assessment are likely to be more expert at classifying stages 1 and 2, where the significant correlations occur. The 5K level, which is emerging as important in the results, would fall firmly into the third stage of a four-stage framework.
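The correlations reported in Tables (i), (iii) and (iv) are Spearman rank correlations (rs). A four-stage school assessment produces many tied ranks, so the sketch below gives tied values their average rank and then takes the Pearson correlation of the ranks, which is the standard way of computing rs when ties are present. The paper does not say which software was used; the function names and the example data are hypothetical.

```python
from math import sqrt
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n (smallest first); tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when all values in one sequence are tied")
    return cov / (sx * sy)


# Invented example: 2K scores and four-stage school assessments for six students.
levels_2k = [12, 15, 16, 17, 17, 18]
school_stage = [2, 2, 3, 3, 3, 3]
print(round(spearman_rho(levels_2k, school_stage), 2))
```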

6 Correlations between test scores and NFER scores

Table (iv) in the Appendix shows correlations between rankings of test scores and NFER scores for EAL students in School A. The Levels test scores correlated significantly with the NFER Verbal test at 2K, 5K and Academic, with the 2K level scores showing a highly significant correlation (p < 0.001). The Yes/No test scores correlated significantly only at the 1K level (p < 0.05).

The significant correlation between Levels 2K scores and the Non-verbal NFER scores could be expected because, even though this test works with non-verbal concepts, the most frequent words will be used in the instructions for the test items.

X Findings and discussion: Stage 2 – use of the Levels test to measure vocabulary size

The combined results for students from Schools A and B are presented in Table 3 and Figure 3. These are scores at each level averaged across students, omitting discrepant scores as described in Section IX, 2, above.

1 The profile of vocabulary size in the EAL context

Looking at Figure 3, we see that the overall shape of the average profile replicates that in Figure 1, from the smaller group. Once again, the gradient is initially less steep than a typical FL profile but gets steeper from 3K to 5K, and again from 5K to 10K. What we seem to be seeing here is a profile of vocabulary size that reflects learning language through participation in English-medium instruction, and which contrasts with the typical profile of learners taught English as a foreign language. This hypothesis can be further tested by using the test in other content-based learning contexts.
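The change in gradient can be read off Table 3 by taking the difference between mean scores at successive frequency levels. The sketch below does this for both groups; the Academic level is left out because it is not a step on the frequency scale, and the helper name is hypothetical. The tested levels are not evenly spaced (1K, then 2K, then 5K apart), so these are drops between adjacent tested levels rather than drops per thousand words.

```python
from typing import Dict

# Combined mean Levels test scores (out of 18), from Table 3.
eal_means = {"2K": 16.8, "3K": 15.9, "5K": 13.6, "10K": 8.9}
e1l_means = {"2K": 17.0, "3K": 16.6, "5K": 15.2, "10K": 10.4}


def drops_between_levels(means: Dict[str, float]) -> Dict[str, float]:
    """Fall in mean score from each tested level to the next (dict order = level order)."""
    levels = list(means)
    return {f"{a}-{b}": round(means[a] - means[b], 1) for a, b in zip(levels, levels[1:])}


print(drops_between_levels(eal_means))  # {'2K-3K': 0.9, '3K-5K': 2.3, '5K-10K': 4.7}
print(drops_between_levels(e1l_means))  # {'2K-3K': 0.4, '3K-5K': 1.4, '5K-10K': 4.8}
```

For the EAL group each successive drop is larger than the one before, which is the steepening described above.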

2 Comparing EAL and E1L profiles

The mean scores of the EAL students and their monolingual peers were compared using two-tailed t-tests, and the results are shown in Table (v) in the Appendix. The differences are significant at the 3K level (p = .031) and highly significant at the 5K level (p = .004). The perceived ‘lack of vocabulary’ mentioned by teachers in School A is thus supported by empirical evidence. The vocabulary of the EAL students appears to lag behind that of their peers at a point well past the 5–7 years established in North American research referred to in the first section.
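Table (v) gives 145 degrees of freedom (63 + 84 - 2) at three levels but fractional values at 5K and 10K. That pattern is consistent with a pooled-variance t-test at most levels and a Welch (unequal-variance) test where the group variances differed, although the paper does not state this, so treat it as an assumption. The sketch below recomputes both kinds of test from the rounded means and SDs in Table 3; because those inputs are rounded, the results approximate, rather than reproduce, the values in Table (v). The function names are hypothetical.

```python
from math import sqrt
from typing import Tuple


def pooled_t(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> Tuple[float, float]:
    """Student's two-sample t with pooled variance; returns (t, degrees of freedom)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    return (m1 - m2) / sqrt(pooled_var * (1 / n1 + 1 / n2)), df


def welch_t(m1: float, s1: float, n1: int,
            m2: float, s2: float, n2: int) -> Tuple[float, float]:
    """Welch's unequal-variance t with Welch-Satterthwaite degrees of freedom."""
    v1, v2 = s1**2 / n1, s2**2 / n2
    t = (m1 - m2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Summary statistics from Table 3: (mean, SD, n) for EAL, then for E1L.
t_3k, df_3k = pooled_t(15.9, 2.0, 63, 16.6, 1.8, 84)
t_5k, df_5k = welch_t(13.6, 3.5, 63, 15.2, 3.0, 84)
print(f"3K: t = {t_3k:.2f}, df = {df_3k}")      # published in Table (v): -2.176, 145
print(f"5K: t = {t_5k:.2f}, df = {df_5k:.1f}")  # published in Table (v): -2.898, 119.594
```

From the rounded inputs this gives roughly t = -2.22 at 3K, and t = -2.91 with about 121.6 degrees of freedom at 5K, close to the published values.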

The results indicate that EAL vocabulary development is not reaching the levels that might be expected, or that students need for examinations and full social participation. The students in the sample had received 10 years of education in the UK through the medium of English, and yet the results show gaps even in the most frequently occurring words, and some serious problems at the 5K and 10K levels. Words at levels 3 to 5K are considered necessary for basic comprehension in English as a second language (Nation and Waring, 1997), yet these students are one or two years from public examinations that require them not just to understand basic texts, but to understand and produce precise and accurate meanings in school and examination texts.

Table 3 Combined mean scores of vocabulary size from the Levels test

           EAL students          E1L students
Level      mean   SD    n        mean   SD    n
2K         16.8   1.7   63       17     1.3   84
3K         15.9   2.0   63       16.6   1.8   84
5K         13.6   3.5   63       15.2   3.0   84
10K        8.9    3.9   63       10.4   5.0   84
Academic   9.4    3.7   63       10.1   4.3   84

Figure 3 Combined mean scores of vocabulary size from the Levels test

3 Individual vocabulary size profiles

The Levels test scores give a measure of vocabulary recognition knowledge across the population, but they also produce individual profiles, which are more important for diagnostic purposes. Figure 4 shows the profiles of two EAL students from School A (identified as O and P), both male, born in the same town, and educated at the same nursery and primary schools. While Student P’s scores are consistently high across the levels, those of Student O begin to fall away beyond 5K.

In everyday classroom action, which uses more frequent words, the two might perform in apparently similar ways; indeed, they were assessed by school staff as being at exactly the same stage in language development and in their on-target scores. However, the two students bring different vocabulary resources to their classroom learning.
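For diagnostic use, one simple summary of an individual profile is the most frequent level at which the student first falls below the 16/18 mastery cut-off used earlier. The sketch below computes this; the function name and both profiles are invented for illustration and are not the scores of Students O and P.

```python
from typing import Mapping, Optional

MASTERY_CUTOFF = 16                           # 16 of 18 items, as used earlier in the paper
FREQUENCY_LEVELS = ("2K", "3K", "5K", "10K")  # Academic level excluded: not a frequency band


def first_level_below_mastery(profile: Mapping[str, int]) -> Optional[str]:
    """Return the first frequency level (most frequent first) scored below the cut-off."""
    for level in FREQUENCY_LEVELS:
        if profile[level] < MASTERY_CUTOFF:
            return level
    return None  # mastery at every frequency level


# Invented profiles with similar scores on the most frequent words.
steady = {"2K": 18, "3K": 17, "5K": 17, "10K": 16, "Ac.": 14}
falls_away = {"2K": 18, "3K": 17, "5K": 16, "10K": 9, "Ac.": 8}
print(first_level_below_mastery(steady), first_level_below_mastery(falls_away))  # None 10K
```

Two students who look alike on the most frequent levels can thus be separated by where their profiles drop, which is the kind of difference described for Students O and P.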


Figure 4 Levels test results for two EAL students from School A


XI Conclusions

The 2K–10K Levels test has emerged from the study as suitable for use with EAL students in Years 9 and 10, apart from those with special educational needs, and may be more widely applicable to school-age subjects at younger ages, or for showing development over time. A vocabulary size test such as the Levels test might provide detailed information about individual language development, even for students recently arrived in the country with very low levels of English, and this information can help specialist and mainstream teachers decide on appropriate intervention strategies. A more appropriate Academic Word List is needed for lower secondary school students.

Deciding which discrepant scores to leave out of overall means was difficult. It is clearly reasonable to exclude from year or class means very low scores that would lower those means in an unrepresentative way. It also seems wise to exclude erratic profiles that suggest the five levels of the test have not all been undertaken carefully, and whose inclusion might skew means. Implications for administering the test include using examples to make sure that students know how to choose answers, and encouraging students to work through to the end of the test without losing motivation.

The interaction of reading skills and vocabulary size becomes an issue with younger subjects, as the ability to understand the written definitions may be affected by literacy skills. Adopting the test with younger students will require further qualitative investigation of this issue.

The most important outcome of this study is the finding that the receptive vocabulary of EAL students who have been educated through English for 10 years has gaps in the most frequent words and serious problems at the 5K level. Explanations for these gaps may lie in the nature of the learning environment for EAL and the possible lack of focused support it provides for vocabulary development. In the EAL situation, vocabulary coverage is not planned but arises from teaching in curriculum areas. Furthermore, intervention by mainstream subject teachers in vocabulary development may often be limited to simplifying unfamiliar words, rather than attending to the need to increase vocabulary size or to develop deep word knowledge.

The results of the monolingual students suggest that similar concerns may apply to first language vocabulary development, and that both groups of students need support to develop knowledge of less frequent words. However, it is important to note that, although both groups show large gaps at the 10K level (Figure 3), the difference between the groups is largest at the 5K level. This may mean that, in situations where low-frequency vocabulary is needed, both groups will display difficulties; but because the groups differ in vocabulary resources, it should not be assumed that the same pedagogic interventions will be appropriate for both. Both groups need attention to academic and low-frequency vocabulary, but they bring to this ‘advanced language development’ different profiles of everyday vocabulary knowledge, and thus require different teaching strategies.

The study has wide implications for the education of secondary EAL students and the training of their teachers. In highlighting a particular aspect of language development, it reveals how much we still need to understand about additional language development, and about the processes of development through participation in curriculum classes. Such understanding is vital for teachers, whose daily classroom decisions create students’ learning opportunities. The study suggests that additional language development needs to be a continuing process all the way through compulsory schooling, and that skilled intervention may well be useful beyond the initial stages.

Notes

1 I should like to thank the schools and the language support teachers for allowing access and generously providing the data, and the students who participated. The first stage of the work was funded by a research grant from the School of Education, University of Leeds. Jane Wagstaff and Juup Stelma worked as Research Assistants on the project.

Thanks are also due to Norbert Schmitt and Paul Meara for comments on earlier drafts of the paper.

2 The usefulness of such comparisons is open to debate. However, the school assessment of language proficiency, and national measures of language development, assume that the target for EAL students is native speaker competence. A more useful comparison may be with the lexical demands of texts that EAL students are required to deal with.

3 No exact figures were available for time spent in nursery. It was decided to take 2 years as a reasonable norm, and this figure was allocated to each student who had attended nursery.

4 More precisely, the lists and tests deal in ‘word families’ rather than ‘words’, so that various forms of a lexeme (e.g., pass, passing, passed) count as one ‘word’.

5 The more recent version of the test uses a newer Academic Word List (Coxhead, 2000). Since both lists were devised for stages of education higher than that of the participants in the studies reported here, the results at this level are only of marginal interest.
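The point made in note 4, that the tests count word families rather than word forms, can be illustrated with a small mapping from forms to headwords. The mapping below is a hypothetical fragment built only from the note's example (pass, passing, passed), and the function name is invented; the family lists behind the Levels test are far larger.

```python
from collections import Counter
from typing import Iterable, Mapping


def count_by_family(tokens: Iterable[str], family_of: Mapping[str, str]) -> Counter:
    """Count tokens under their family headword; unmapped forms count as their own family."""
    return Counter(family_of.get(t.lower(), t.lower()) for t in tokens)


# Hypothetical fragment of a family list, using the forms from note 4.
family_of = {"pass": "pass", "passing": "pass", "passed": "pass"}
counts = count_by_family(["passed", "Passing", "pass", "climate"], family_of)
print(len(counts), counts["pass"])  # 2 3
```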

XIII References

Bachman, L. and Palmer, A. 1997: Language testing in practice. Oxford: Oxford University Press.

Beck, I., McKeown, M. and Omanson, R. 1987: The effects and use of diverse vocabulary instruction techniques. In McKeown, M. and Curtis, M., editors, The nature of vocabulary acquisition. Hillsdale, NJ: Lawrence Erlbaum, 147–63.

Blair, M. and Bourne, J. 1998: Making the difference: teaching and learning strategies in successful multi-ethnic classrooms. London: DfEE.

Cameron, L. 1997: A critical examination of classroom practices to foster teacher growth and increase student learning. TESOL Journal 7: 25–30.

Cameron, L. and Bygate, M. 1997: Key issues in assessing progression in English as an additional language. In Leung, C. and Cable, C., editors, Mainstream schooling and the needs of bilingual pupils. NATE for NALDIC (National Association for Language Development in the Curriculum), 40–52.

Cameron, L., Moon, J. and Bygate, M. 1996: Language development in the mainstream: how do teachers and pupils use language? Language and Education 10: 221–36.

Collier, V. 1987: Age and rate of acquisition of second language for academic purposes. TESOL Quarterly 21: 617–41.

Coxhead, A. 2000: A new academic word list. TESOL Quarterly 34(2): 213–38.

Cummins, J. 1984: Implications of bilingual proficiency for the education of minority language students. In Allen, P. and Swain, M., editors, Language issues and education policies. London: Pergamon/British Council, 21–34.

Department for Education and Employment. 1997: Excellence in Cities. London: HMSO.

Franson, C. 1999: Teachers’ perceptions of ESL learners’ English language proficiency. Paper presented at AAAL Annual Conference, Stamford, CT, 6–9 March 1999.


Laufer, B. 1992: Reading in a foreign language: how does L2 lexical knowledge interact with the reader’s general academic ability? Journal of Research in Reading 15: 95–103.

–––– 1998: The development of passive and active vocabulary in a second language: same or different? Applied Linguistics 19: 255–71.

Martin-Jones, M. and Saxena, M. 1995: Supporting or containing bilingualism? Policies, power asymmetries and pedagogic practices in mainstream primary classrooms. In Tollefson, J. W., editor, Power and inequality in language education. Cambridge: Cambridge University Press, 73–90.

McKay, P. 1997: Executive overview. The bilingual interface project report. Department of Education, Employment and Training. Canberra, Australia.

Meara, P. 1992: EFL Vocabulary Tests. Centre for Applied Language Studies, University College, Swansea.

Meara, P. and Buxton, B. 1987: An alternative to multiple choice vocabulary tests. Language Testing 4(2): 142–54.

Meara, P., Lightbown, P. and Halter, R. 1997: Classrooms as lexical environments. Language Teaching Research 1: 28–47.

Nation, P. 1990: Teaching and learning vocabulary. Boston, MA: Heinle and Heinle.

Nation, P. and Waring, R. 1997: Vocabulary size, text coverage and word lists. In Schmitt, N. and McCarthy, M., editors, Vocabulary: description, acquisition and pedagogy. Cambridge: Cambridge University Press, 6–19.

Rampton, B. 1995: Crossing. Harlow: Longman.

Read, J. 1988: Measuring the vocabulary knowledge of second language learners. RELC Journal 19: 12–25.

–––– 1997: Vocabulary and testing. In Schmitt, N. and McCarthy, M., editors, Vocabulary: description, acquisition and pedagogy. Cambridge: Cambridge University Press, 303–20.

Richards, J.C. 1976: The role of vocabulary teaching. TESOL Quarterly 10(1): 77–89.

Schmitt, N. 1998: Tracking the incremental acquisition of second language vocabulary: a longitudinal study. Language Learning 48: 281–317.

Schmitt, N. and Meara, P. 1997: Researching vocabulary through a word knowledge framework: word associations and verbal suffixes. Studies in Second Language Acquisition 19: 17–36.

Schmitt, N., Schmitt, D. and Clapham, C. 2001: Developing and exploring the behaviour of two new versions of the Vocabulary Levels Test. Language Testing 18: 55–89.

Skutnabb-Kangas, T. and Toukomaa, P. 1976: Teaching migrant children’s mother tongue and learning the language of the host country in the context of the socio-cultural situation of the migrant family. Helsinki: The Finnish National Commission for UNESCO.

Stanovich, K. 1980: Towards an interactive-compensatory model of individual differences in the development of reading. Reading Research Quarterly 16: 32–71.

Thorndike, E.L. and Lorge, I. 1944: The teacher’s word book of 30,000 words. Teachers College, Columbia University.

Thorndike, R. and Hagen, E. 1986: Cognitive abilities test: levels A to F, second edition. Windsor: NFER-Nelson.

Verhallen, M. and Schoonen, R. 1993: Word definitions of monolingual and bilingual children. Applied Linguistics 14: 344–65.

–––– 1998: Lexical knowledge in L1 and L2 of third and fifth graders. Applied Linguistics 19: 452–70.

West, M. 1953: A general service list of English words. London: Longman.

Appendix

Table (i) Spearman correlations of rankings of EAL pupils from School A in the Levels and Yes/No tests (N = 14)

             Yes/No 1K  Yes/No 2K  Yes/No 3K  Yes/No 4K  Yes/No 5K  Yes/No Ac.
Levels 2K    .31        .21        .60*       .40        .38        .36
Levels 3K    .30        .07        .15        .43        .14        .30
Levels 5K    .37        –.15       .40        .41        .45        .58*
Levels 10K   .39        .00        .50        .12        .47        .50
Levels Ac.   .48        .13        .47        .30        .24        .28

Note: *Significant for p < 0.05.

Table (ii) Examples of words not recognized in the Levels test

Level   Words not recognized
2K      sorry, apply, debt, roar, melt, hide
3K      peer, oblige, climate
5K      eagerness, pail, novelty, paste
10K     antics, dogma, botany, swagger, blurt
Ac.     instance, concentrate, consult, revise, valid


Table (iii) Correlation of test scores of EAL pupils from School A and school assessment of language level (N = 14)

              rs      n
Levels 2K     0.58*   16
Levels 3K     0.39    16
Levels 5K     0.42    16
Levels 10K    0.37    16
Levels Ac.    0.52*   16

Yes/No 1K     0.50*   16
Yes/No 2K     0.47    15
Yes/No 3K     0.53*   16
Yes/No 4K     0.40    16
Yes/No 5K     0.38    16
Yes/No Ac.    0.23    14

Note: *Significant for p < 0.05.

Table (iv) Correlations between test scores and NFER scores of EAL students in School A

              Verbal          Non-verbal      Quantitative
              rs        n     rs       n      rs       n
Levels 2K     0.73***   16    0.63*    16     0.25     16
Levels 3K     0.37      16    0.03     16     0.21     16
Levels 5K     0.51*     16    0.05     16     0.16     16
Levels 10K    0.46      16    0.26     16     0.10     16
Levels Ac.    0.53*     16    0.38     16     0.13     16

Yes/No 1K     0.52*     16    0.31     16     0.31     16
Yes/No 2K     0.46      15    0.26     15     0.14     15
Yes/No 3K     0.45      16    0.43     16     0.08     16
Yes/No 4K     0.24      16    0.07     16     0.03     16
Yes/No 5K     0.37      16    0.10     16     –0.05    16
Yes/No Ac.    0.42      14    –0.12    14     –0.11    14

Note: *p < 0.05, **p < 0.01, ***p < 0.001.


Table (v) Comparison of scores of EAL students and E1L students using two-tailed t-tests

              t         Degrees of freedom    Significance (2-tailed)
2K level      –1.366    145                   .174
3K level      –2.176    145                   .031*
5K level      –2.898    119.594               .004**
10K level     –1.571    144.570               .118
Ac. level     –1.522    145                   .130

Note: *Difference is significant at 0.05 level. **Difference is significant at 0.01 level.
