GEMS: User Control for Cooperative Scientific Repositories


Justin M. Wozniak∗, Paul Brenner†, Santanu Chatterjee†, Douglas Thain†, Aaron Striegel†, and Jesus Izaguirre†

∗ Argonne National Laboratory, Argonne, IL, USA; Email: [email protected]

† University of Notre Dame, Notre Dame, IN, USA; Email: {pbrenne1,schatter,dthain,striegel,jizaguirr}@nd.edu

Opportunistic techniques have been widely used to create computational infrastructures and have demonstrated an ability to deliver computing resources to large applications. However, the management of disk space and usage in such systems is often neglected. While overarching filesystems have been applied, these limit the ability of subgroups within an organization to customize and optimize system behavior. Explicit data placement techniques offer more utility but may burden users with the complexity of system specifics and scripts. New solutions to storage problems will require new approaches to data abstraction, archive survivability, security models, and data delivery to consumers. In this chapter, we describe design concepts used in the GEMS storage system, within which users may specify abstract resource structures and policies for their data.

I. INTRODUCTION

Data repositories are an integral part of modern scientific computing systems. While a variety of grid-enabled storage systems have been developed to improve scalability, administrative control, and interoperability, users have several outstanding needs: to seamlessly and efficiently work with replicated data sets, to customize system behavior within a grid, and to quickly tie together remotely administered grids or independently operated resources. This is particularly evident in the small virtual organization, in which a subset of possible users seek to coordinate subcomponents of existing grids into a workable collaborative system. Our approach to this problem starts with the storage system and seeks to enable this functionality by creating ad hoc storage grids.

Modern commodity hardware used at research labs and university networks ships with an abundance of storage space that is often underutilized, and even consumer gadgets provide extensive storage resources that will not immediately be filled. The installation of simple software enables these systems to be pooled and cataloged into a spacious, parallel ad hoc storage network. While traditional storage networks or tertiary storage systems are isolated behind file servers and firewalls, constricting data movement, we layer the storage service network atop the client consumer network, improving the available network parallelism and boosting I/O performance for data-intensive scientific tasks.

Unfortunately, extending scientific repositories out to volunteer data sources and across administrative boundaries using centralized file services, static replica management systems, or peer-to-peer systems is not viable because of the nature of the target systems. Heavy scientific workloads, research-oriented usage patterns, and variably collaborative resource networks require new storage architectures.

In our focus application area, computational chemistry, simulations often produce large output data sets, transcripts, and other files compared to the amount of metadata used to specify the data set, such as the simulation configuration, random seed, file names, and access control policy. Metadata may be used to refer to existing data sets but is typically much smaller than the files themselves. Programmatic access to metadata catalogs creates a scientific filesystem that greatly eases access to complex repositories. Our architecture is inspired by typical scientific usage: optimizing system performance for the demands of typical software, and interfaces for the mental framework of typical simulation researchers.

The dynamic nature of ad hoc storage networks allows scientific users to easily provide storage resources to the system, insert data sets, and manage access control to resources and data. Hence, researchers, not just computer administrators, can patch together their own communities of software, data, resources, and users while integrating with a larger repository system and benefiting from semi-autonomic storage management.

Current systems limit both user customizability, which enables locally optimal performance, and ease of use, which enables maintainable software. Our solution is to develop flexible systems that provide a variety of access methods and administrative controls. The policies carried out by flexible ad hoc systems will be reconfigurable at the user level, allowing grid resources to be integrated into larger systems and smaller grids to be derived from them; likewise, enhanced or limited privileges will be administered by users on their own data environments. Applications developed on such a system can use this hybridization, enabling system-aware workflow structures.

Our solution to this problem is represented by the GEMS (Grid-Enabled Molecular Simulation) system. Programmatically, GEMS presents a reliable database abstraction atop an uncontrolled network of independent Chirp [1] file servers. GEMS was originally designed for molecular dynamics but has been fully generalized to support any application. In this chapter, we discuss the advanced capabilities of this system for scientific computation and collaboration.

II. GEMS ARCHITECTURE

The GEMS architecture, shown in Figure 1, is intended to provide a quickly deployable organizational framework for a network of independently owned and operated storage services. In an institutional setting, these machines may already be combined into an opportunistic computing system such as Condor, but the available disk space may go unused. The storage services are available on these machines but are not usable because of their individual instability. By structuring and controlling these servers, GEMS gains the utility of the extra disk space as well as the performance offered by a data delivery system that is potentially as scalable as the computing infrastructure.

[Figure: client tools with APIs (insertion, put, get, run, visualization, workflows), organized in Levels 1 to 3; central services (metadatabase, location database, storage controller); and volunteer storage providers. Components are connected by queries, notification, synchronization, and data movement.]
Fig. 1. Overview of the GEMS architecture.

GEMS delivers the utility of these services as a unified resource and relies on four major concepts: automated replication, awareness of administrative concerns, distributed access control, and centralized browsability. Replication increases the reliability and parallelism of data sources; in GEMS, a semi-autonomic controller [2] detects and responds to subsystem failure in a priority-driven manner. Additionally, administrative matters are managed within the same control system: disk allocation is balanced with respect to the available space volunteered to the system, and data is removed from disks as their owners fill them. GEMS also manages access control lists in the volunteered space and provides an authentication mechanism [3] compatible with existing techniques. Observed deviations from system policy are prioritized and repaired [4] without user intervention by locating, allocating, and using additional available disk space in accordance with user policy.
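The priority-driven repair described above can be illustrated with a small planning routine. This is a minimal sketch under stated assumptions, not the controller of [2]: the per-file `target` replica count and `priority` fields, and the rule of placing each new replica on the server with the most free volunteered space, are hypothetical choices made for the example.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class FileState:
    name: str
    size: int                  # bytes
    target: int                # replica count requested by user policy (assumed)
    priority: int              # larger means more important (assumed)
    replicas: set = field(default_factory=set)   # servers holding a copy


def plan_repairs(files, free_space):
    """Plan new replica placements for files below their target count.

    Shortages are handled in priority order, then by size of the deficit;
    each new replica goes to the server with the most free volunteered
    space that does not already hold the file. Planned placements are
    recorded in each FileState's `replicas` set.
    """
    free = dict(free_space)                 # server -> free bytes (copied)
    by_name = {f.name: f for f in files}
    heap = []
    for f in files:
        deficit = f.target - len(f.replicas)
        if deficit > 0:
            # heapq is a min-heap, so negate to pop the most urgent file first
            heapq.heappush(heap, (-f.priority, -deficit, f.name))

    placements = []
    while heap:
        neg_priority, neg_deficit, name = heapq.heappop(heap)
        f = by_name[name]
        candidates = [s for s, space in free.items()
                      if s not in f.replicas and space >= f.size]
        if not candidates:
            continue                        # no room anywhere: leave for a later pass
        server = max(candidates, key=lambda s: (free[s], s))
        free[server] -= f.size
        f.replicas.add(server)
        placements.append((name, server))
        if neg_deficit + 1 < 0:             # still short: requeue with smaller deficit
            heapq.heappush(heap, (neg_priority, neg_deficit + 1, name))
    return placements
```

Requeuing a file after each placement, rather than placing all of its missing replicas at once, lets a more important file interleave with less important ones. A real controller would also have to handle failed transfers and servers that vanish mid-repair; the sketch only plans placements.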

The browsable repository created by GEMS essentially implements a parameter sweep database. Its metadata may be used to derive a novel parallel programming model, called a data sweep, that enables synchronized tuple space communication and parallel data movement. This framework may be used to build up parameter sweeps, workflows, interactive parameter space exploration, and related hybrid structures.

A. Runtime Operation

GEMS maintains a replica list for each data file inserted into the system; the list grows asynchronously as the file is replicated in accordance with user policy. Management tasks include monitoring the underlying storage for server failure, file existence, and file corruption, the last detected by an MD5 checksum comparison. A diagram of example operation is shown in Figure 2.

[Figure: a job submission site (pippin.cse.nd.edu) and GEMS (gems.cse.nd.edu) interact with local resources at Notre Dame (hosts in cse.nd.edu, cselab.nd.edu, and helios.nd.edu, plus ccl00.cse.nd.edu) and remote resources at Purdue (rcac.purdue.edu, including chirp.rcac.purdue.edu). Labeled activities: client operations, job submission, resource access, resource monitoring, resource allocation, server catalog, local user services, cluster failure, and fault-triggered replication.]
Fig. 2. Example operation of replica maintenance system. Replica shortages are repaired while data services to running jobs continue.
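The MD5 comparison mentioned above amounts to recomputing each replica's checksum and comparing it with the digest recorded when the file was inserted. The sketch assumes, for simplicity, that each replica is readable through a local path (for instance through a virtual filesystem adapter); the function names and the `ok`/`missing`/`corrupt` labels are illustrative, not part of GEMS.

```python
import hashlib


def md5_of(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def audit_replicas(expected_md5, replica_paths):
    """Classify each replica as 'ok', 'missing', or 'corrupt'.

    expected_md5: digest recorded at insertion time
    replica_paths: server name -> path through which that replica is readable
    """
    report = {}
    for server, path in replica_paths.items():
        try:
            actual = md5_of(path)
        except FileNotFoundError:
            report[server] = "missing"      # file existence check failed
            continue
        report[server] = "ok" if actual == expected_md5 else "corrupt"
    return report
```

Replicas reported as missing or corrupt would then count against the file's replica target, feeding the repair process described earlier.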

Running the control software at user level limits its ability to abuse resource providers and enables the opportunistic use of available storage. Whereas a top-down stovepipe solution might intimidate storage providers, resulting in low volunteer participation, the controller relies on an existing intermediary resource discovery service and interacts with registered services as an ordinary user. This approach promotes healthy, ordinary policy negotiation between volunteer resource providers and the consumers of the ad hoc grid [5].

B. Client Toolkit

While existing tools may be used to access files stored on the underlying system, GEMS enables the orchestration of higher-level operations by providing additional tiered client operations, as diagrammed in Figure 1. First-level utilities enable resource reservation and insertion. These allow a client to reserve a given amount of space on a resource, selected by the user or by GEMS, for later use. Data movement utilities may be used to transport the data to that location, as may existing clients compatible with the storage services. Upon transfer completion, the state of the files is synchronized with the centralized service, which is then entrusted to manage them. Other clients waiting for notification about this data set may then be notified, or the data set may be located later by executing metadata-driven queries.

Second-level utilities provide simplified access to the system, including FTP-like get and put operations as well as a computation utility to bind existing programs to data sources in the repository without code modification. Third-level utilities enable higher-level user interaction with the system. Graphical tools include a general-purpose data browser capable of downloading or uploading repository data. Additionally, workflow structures may be built and executed by using complex query and notification combinations, as discussed below.
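The first-level flow (reserve space, transfer the data, synchronize with the central service, notify waiting clients) can be sketched as an in-memory catalog. Everything here is hypothetical: the `Catalog` class, its method names, and the choice of the server with the most free space when the user does not name one are assumptions, and the data transfer itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Catalog:
    free: dict                                          # server -> free bytes
    reservations: dict = field(default_factory=dict)    # id -> (server, size)
    records: dict = field(default_factory=dict)         # label -> {"tags", "files"}
    waiters: list = field(default_factory=list)         # (wanted tags, callback)
    _next_id: int = 0

    def reserve(self, size, server=None):
        """Reserve space on a user-chosen server, or on the roomiest one."""
        if server is None:
            server = max(self.free, key=self.free.get)
        if self.free[server] < size:
            raise RuntimeError(f"insufficient space on {server}")
        self.free[server] -= size
        self._next_id += 1
        self.reservations[self._next_id] = (server, size)
        return self._next_id, server

    def subscribe(self, tags, callback):
        """Ask to be notified when a file with matching tags is committed."""
        self.waiters.append((tags, callback))

    def commit(self, rid, label, filename, tags):
        """Call after the transfer finishes: record the replica, then notify."""
        server, _ = self.reservations.pop(rid)
        rec = self.records.setdefault(label, {"tags": dict(tags), "files": {}})
        rec["files"].setdefault(filename, set()).add(server)
        for wanted, callback in self.waiters:
            if all(rec["tags"].get(k) == v for k, v in wanted.items()):
                callback(label, filename, server)
```

Separating `reserve` from `commit` mirrors the split described in the text: the client may move the data with any compatible tool in between, and the central service only takes responsibility once the transfer is reported complete.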

III. REPLICA ACCESS

Data replication has multiple benefits. While enhancing data survivability and enabling wide-area storage systems as discussed above, it may be combined with computation systems to provide performance benefits. Large-scale computing infrastructures built on load-sharing architectures or Internet peer groups require scalable access to the scientific repositories that enable the application. High-throughput computing requires the elimination of bottlenecks, such as the overuse of a particular file server. A balance in disk usage may intuitively be obtained by balancing data placement, a user-driven process discussed above.

Replica-aware computation has several important aspects. Record naming and identification are essential user tasks that may be greatly eased by appropriate software tools. Data access may be enabled by explicit data transfer or virtual filesystem technologies. Ultimately, efficiency and performance must be delivered by proper reference to the replica system.

A. Data Identification and Access

The underlying complexity of a replica system creates the need for abstract data catalogs that enable scientific data tagging and location. In the case of a simulation repository, for example, each record in the replica system contains the set of data files involved; each record is uniquely identified by a config number. The record may also be tagged by a unique set of key-value pairs, which are typically based on the parameters used to generate the data, such as software settings, scientific coefficients, and random number seeds. The scientific namespace is the set of possible tag combinations that may be mapped to a record.
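As an illustration of the scientific namespace, the sketch below enumerates every tag combination over given parameter domains and reports which combinations are not yet mapped to a record, which is the information a parameter sweep needs. The parameter names, domains, and config numbers are made up for the example.

```python
from itertools import product


def namespace(domains):
    """Yield every tag combination as a tuple of (tag, value) pairs.

    Tags are sorted so each combination has one canonical form.
    """
    keys = sorted(domains)
    for values in product(*(domains[k] for k in keys)):
        yield tuple(zip(keys, values))


def index_records(records):
    """Map each record's canonical tag tuple to its config number."""
    return {tuple(sorted(tags.items())): config for config, tags in records.items()}


# Hypothetical repository contents: two of four sweep points are filled.
domains = {"temperature": [300, 310], "seed": [1, 2]}
records = {101: {"temperature": 300, "seed": 1},
           102: {"temperature": 310, "seed": 2}}
index = index_records(records)
missing = [dict(p) for p in namespace(domains) if p not in index]
```

Here `missing` holds the two unfilled points, `{"seed": 1, "temperature": 310}` and `{"seed": 2, "temperature": 300}`, which are exactly the simulations a sweep would still need to launch.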

While abstract naming systems are required in the presence of dynamic data placement, the use of a virtual filesystem creates an additional translation layer that must be overcome. The combination of a config number and a filename represents an abstract name for a file that is independent of the data source, that is, of the replica location. The combination of a file server name and a filename represents a virtual name for a file. The resolution of abstract name to virtual name is the essential task of a replica location system, but the existence of both names is useful in replica-aware computation.

The GEMS-based encapsulation of a set of scientific data files inside a logically unified, tagged record provides both scientific and technical benefits. Simulation result files are typically used in concert when performing analysis, and the abstract tagging and naming system simplifies the implementation of postprocessing routines. The naming and access scheme additionally helps mask the complex internals of a distributed database, providing conceptual understanding that may be lacking in a plain filesystem. Common tasks such as retrieving yesterday’s results, searching for a critical remote file, or deleting a whole record may be performed with confidence.

Technically, the data structures created by the record system provide guidance when performing maintenance activities. For example, resource consumption may be allocated with respect to discernible dataset characteristics or prioritized with respect to user-defined importance.

B. Computation in a Replica Management System

Utilization of network topology information during replica access and data insertion is an important technique. When reading from extended data repositories, simple strategies that build on the user-defined storage strategy may be used to obtain access to nearby replicas. In a scientific setting, client software is designed to enable researchers to benefit from fast access to large data sets using a variety of computation tools.

Data access methods may be classed as explicit or implicit techniques: explicit techniques specify that data is to be moved; implicit techniques reduce the method to one similar to a local data access method and leave the data movement to an underlying system. Explicit methods are exemplified by FTP and SCP, while implicit methods are exemplified by remote filesystems such as NFS or AFS. Additionally, virtual remote filesystems may be exposed to user software using adapters such as Parrot or UFO, allowing implicit access to otherwise explicit services.

Both access methods are expected in extended repositories and may lead to performance improvements because of the available locality and parallelism of data sources. Added complexity and flexibility result when combining traditional local workstation computation with distributed cluster or grid compute engines. Consider the differences in complexity between two potential access patterns: a file retrieval and graphical display on a researcher’s local workstation, or a batch of hundreds of parallel computation jobs that analyze hundreds of existing simulations to parameterize and instantiate additional simulations. In both cases, the system must be able to provide access to a nearby copy of a data file for read access.

However, in the distributed case, additional optimizations are possible if the data tools are integrated with the computation tools. First, when submitting the job, the abstract name may be used to direct the job submission system to prefer certain computation sites that are more likely to obtain good data access performance. This may be done by inspecting the replica locations that correspond to the abstract record definition upon translation to virtual data names. Next, upon job arrival and start, abstract names are resolved a second time, and data access is optimized with respect to the actual job location.

The implementation of multiple-name resolution provides benefits in performance and reliability. Performance is improved by a two-stage process that executes jobs close to their data sources and binds file access to nearby services. Reliability is improved by allowing running jobs to determine which replicas exist and to rebind file access with respect to replica availability.

C. Formulating Job Submission for Replica Access

An adapter called Parrot has been previously developed [1] to present a view of multiple remote filesystems as a single, local filesystem. By trapping file operations from a running process, the adapter may allow the process to access files from remote servers in a transparent way. This adapter enables the user to choose the compute site, the input source site, and the output destination site independently. The replica management system is combined with these tools to locate data; however, doing so increases the complexity faced by the programmer. A client job description framework is needed to locate input sites, find sites to safely store outputs, and obtain a compute site that is not already occupied. To obtain good performance, the three sites should be collocated.

Automated resolution allows clients of the replica management system to map file locations in the searchable, database-like namespace to the physical namespace of the virtual filesystem. In this work, we call the entries in the replica namespace the abstract filenames, as opposed to the virtual filenames compatible with the adapter. Name resolution maps abstract names in a /label/path/file format to virtual filenames in a /protocol/host/path/file format. The replica management system provides the additional ability to obtain data set labels by searching over the metadata, enabling one to perform computation in a completely application-oriented way, as shown in Figure 3.

    > KEY=$( GEMSmatch reagents=acidbase )
    > GEMSrun --input HCl /$KEY/hcl \
              --input NaOH /$KEY/naoh \
              --output NaCl salt \
              --output HOH water \
              reagents=saltwater \
              --exec transmute HCl NaOH to NaCl HOH

Fig. 3. Example simulation script using abstract data locations

The first command of the script uses the client toolkit to locate the data set label required to obtain the necessary input files for the transmute task. The second command invokes the toolkit to resolve the abstract file names to virtual file names compatible with the adapter and then to submit the user task, transmute, to an available compute system, using the adapter to perform the file operations. The result of this script is a new entry in the replica system containing two files, salt and water, which may be located by using the tag-value pair reagents=saltwater.
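Name resolution in the script above can be sketched as a rewrite of each argument of the form /label/path/file into /protocol/host/path/file. The sketch assumes a replica table keyed by (label, relative path) that gives the physical path on each host; the `chirp` default follows the way Parrot names Chirp servers (/chirp/host/path), but the table layout and the function names are hypothetical.

```python
def resolve(abstract, replicas, local_host=None, protocol="chirp"):
    """Translate an abstract name /label/path/file into a virtual name.

    replicas: (label, relative path) -> {host: physical path on that host}
    A replica on `local_host` is preferred; otherwise the first host in
    sorted order is used (an arbitrary but deterministic choice).
    """
    label, sep, relpath = abstract.strip("/").partition("/")
    if not sep or not relpath:
        raise ValueError(f"not an abstract name: {abstract!r}")
    hosts = replicas.get((label, relpath))
    if not hosts:
        raise LookupError(f"no replica for {abstract!r}")
    host = local_host if local_host in hosts else sorted(hosts)[0]
    return f"/{protocol}/{host}/{hosts[host].lstrip('/')}"


def resolve_args(argv, replicas, local_host=None):
    """Resolve every argument whose first path component is a known label."""
    labels = {label for label, _ in replicas}
    out = []
    for arg in argv:
        head = arg.strip("/").split("/", 1)[0]
        if arg.startswith("/") and head in labels:
            out.append(resolve(arg, replicas, local_host))
        else:
            out.append(arg)                 # ordinary argument: leave untouched
    return out
```

For example, with `/$KEY/hcl` expanded to a hypothetical label `cfg7`, `resolve_args(["transmute", "/cfg7/hcl"], replicas, "c2")` rewrites only the second argument, preferring a copy on `c2` when one exists.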

More complex tasks would clearly involve lengthy command lines. Since existing job schedulers already require users to explicitly define input and output files, job scheduler scripts written with simple extensions to the existing syntax may be preprocessed by the GEMSrun client, giving job submitters a more familiar syntax.

D. Job Submission Methods for Replica Access

In a replica location system, a service maintains a database of replica locations. This service may be queried in three ways: to map metadata tags to data set labels, to map a data set label to a set of file names, or to map a data set label and file name to a storage site. Once the site has been obtained, the data source may be accessed as described above.
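The three query types can be expressed as three lookups over one catalog. This is a minimal in-memory sketch; the class and method names are hypothetical and stand in for the centralized replica location service.

```python
class ReplicaLocationService:
    """Toy replica location service answering the three query types."""

    def __init__(self):
        self.tags = {}    # data set label -> {tag: value}
        self.files = {}   # data set label -> {file name: set of storage sites}

    def add(self, label, tags, files):
        self.tags[label] = dict(tags)
        self.files[label] = {name: set(sites) for name, sites in files.items()}

    def labels_for(self, **wanted):
        """Query 1: metadata tags -> data set labels."""
        return sorted(label for label, tags in self.tags.items()
                      if all(tags.get(k) == v for k, v in wanted.items()))

    def filenames(self, label):
        """Query 2: data set label -> file names."""
        return sorted(self.files[label])

    def sites(self, label, filename):
        """Query 3: (data set label, file name) -> storage sites."""
        return sorted(self.files[label][filename])
```

Chaining the three calls reproduces the path from a metadata search to a concrete storage site that the computation modes below rely on.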

While a replica management system does not spawn computation itself, we demonstrate a tool that interfaces with existing computation systems to access, analyze, and create data in a compatible way. In this section, we outline four computation modes that perform computation utilizing replica access:

• Local Computation on Remote Data, which allows the local workstation to access remote data sources and create new data in the replica system over a virtual filesystem.

• Scheduled Computation on Remote Data, which interfaces with an existing scheduler to create jobs that access data over a virtual filesystem.

• Remote Computation on Remote Data, which utilizes the ability to directly send jobs to remote systems for processing.

• Multiple Name Resolution, a more complex scheduled method that guides the matchmaking process with respect to data locations.

1) Local Computation on Remote Data (LCRD): In many common cases, the user simply desires to run a single job on the local workstation. The LCRD model enables users to start new jobs that require data access to the replica management system. This mode is based on a typical command consisting of an operation on abstract dataset identities, comprising the input and output locations. These abstract arguments, which do not specify the actual data location, are translated by the replica system into the physical file locations required by the virtual filesystem adapter, in a manner analogous to shell parameter parsing and expansion. Thus a task that requires access to remote, abstracted data sets may be translated into a local task operating on data that is virtually local. An example execution of this method is shown in Figure 4 in the LCRD frame.

Optimizing this operation is simple. First, the output data location is determined. The preferred output location is a server on the local host; if this is not available or not allowed by the relevant access control policy, a server that is not currently busy will be selected. In the worst case, a remote, busy machine will be selected. Next, if any required input files from the system have replicas on a local server, these hosts are selected as data sources. Otherwise, a remote, idle server will be selected to provide the file; if this is not possible, the worst-case choice of a busy remote server is made.
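The LCRD preference order just described can be written as one selection function that serves both for choosing the output server and for choosing each input replica. The `busy` set and the `allowed` access-control predicate are hypothetical inputs; how GEMS actually measures busyness is not specified here.

```python
def pick_server(candidates, local_host, busy, allowed=lambda host: True):
    """Choose a server following the LCRD preference order.

    candidates: hosts holding the input replica (or able to accept output)
    local_host: the workstation running the job
    busy:       hosts currently considered busy
    allowed:    access-control predicate (hypothetical hook)
    Order: the local host, then an idle remote host, then a busy remote host.
    """
    usable = [h for h in sorted(candidates) if allowed(h)]
    if local_host in usable:
        return local_host
    idle = [h for h in usable if h not in busy]
    if idle:
        return idle[0]
    return usable[0] if usable else None    # worst case: busy remote, or nothing
```

Sorting the candidates only makes the choice deterministic among equally ranked hosts; a real client might break ties by measured load or network distance instead.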

2) Scheduled Computation on Remote Data (SCRD): We augment the specification for scheduled remote jobs by allowing users to specify input and output locations that reside in the replica management system, and we use these locations in the commands and arguments. The GEMSrun client translates this augmented submission script into a standard submission script by making the necessary substitutions: resolving the abstract data locations into virtual filesystem locations and ensuring that the resulting job does in fact run atop the adapter. The data sets required by the resulting job are then location-independent because of the adapter (i.e., no data staging is necessary).
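The SCRD translation can be illustrated by emitting an HTCondor-style submit description. This is a sketch under assumptions: the text does not specify GEMSrun's output format, `parrot_run` is assumed to be the adapter command, and the suggested host is expressed as a `rank` preference rather than a hard `requirements` constraint.

```python
def scrd_submit(executable, argv, resolve, host, adapter="parrot_run"):
    """Rewrite an augmented job into an HTCondor-style submit description.

    resolve: maps one argument to its virtual name, or returns it unchanged
    host:    compute site suggested by the replica locations ("@" markup)
    The user program becomes an argument of the adapter, so every file
    access in the job goes through the virtual filesystem.
    """
    args = " ".join([executable] + [resolve(a) for a in argv])
    return "\n".join([
        f"executable = {adapter}",
        f'arguments = "{args}"',
        f'rank = (Machine == "{host}")',    # prefer, but do not require, this host
        "queue",
    ])
```

Using `rank` lets the scheduler fall back to another site when the preferred one is occupied; pinning the job with `requirements` instead corresponds to the stricter variation mentioned in footnote 2.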

As shown in the SCRD frame of Figure 4, jobs are sent to the scheduler with requested destination hosts, illustrated by the “@” markup. The scheduler honors the request and submits the job to the appropriate compute site.

3) Remote Computation on Remote Data (RCRD): The file server used in this work allows the user to execute jobs inside a server-side execution environment (a Chirp feature). The GEMSrun client makes use of this technique by sending jobs directly to a file server for computation. The method is similar to the scheduled SCRD method but lacks two important concepts: external centralized matchmaking and scheduling.

An example of replica access is shown in Figure 4 in the RCRD frame. Two jobs are submitted to the system by the user. Each specifies a target file, f1 or f2. The requests are translated into replica location operations. The request for f1 is received first, and the response indicates that the file can be accessed on host c3. This host is then marked “busy,” and the client submits the job to host c3. The second request arrives at the server, which locates f2 on c3 and c4. Since c3 is busy, the job is sent to c4.
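The RCRD example above reduces to a greedy loop: resolve each job's file, skip replica hosts already marked busy, and mark the chosen host busy. In this minimal sketch the replica lists are assumed to be ordered by the location service, the function name is hypothetical, and busy marks are never cleared.

```python
def dispatch(requests, replicas):
    """Assign each (job, file) request to a replica host, avoiding busy hosts.

    requests: list of (job name, file name), in arrival order
    replicas: file name -> list of hosts holding a copy
    Falls back to a busy host only when every replica host is busy.
    """
    busy = set()
    placement = {}
    for job, filename in requests:
        hosts = replicas.get(filename, [])
        idle = [h for h in hosts if h not in busy]
        target = idle[0] if idle else (hosts[0] if hosts else None)
        if target is not None:
            busy.add(target)                # later jobs will avoid this host
        placement[job] = target
    return placement
```

With `f1` on `c3` and `f2` on `c3` and `c4`, as in the example, `dispatch([("job1", "f1"), ("job2", "f2")], ...)` sends the first job to `c3` and the second to `c4`.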

4) Multiple Name Resolution (MNR): As seen above, the local computation method selects replicas relative to the given computation site, which is immutable. The scheduled and remote computation methods select replicas relative to a variety of potential compute sites, presenting challenges of interest to designers of grid computing systems.

• In an environment in which replica locations are free to change or fail, replica locations may not be available at job execution time.

• If the job is not deployed to the specific host that optimizes the file transfer, it may be beneficial to re-select replica locations to minimize transfer relative to the actual computation site.

Clearly, the first property is a harder constraint than the second property, but both represent essential design issues.

A potential solution involves resolving the replica locations twice. The first resolution is performed by the job submission routine, which now selects only the computation site. The computation site is chosen so that the resulting file transfers will be minimized. Then, after deployment, the compute job again resolves replica locations, essentially performing the LCRD operation described above. At this point, all replica choices may change as a result of storage server failure or unexpected compute site assignments, but the selection of compute site is fixed, greatly simplifying the choice. This may produce very good throughput at the small but significant cost of a second query to the centralized replica location service.¹

In summary, we have a scheduled method similar to SCRD but more robust and efficient because of its more careful handling of replica locations. The algorithm for the Multiple Name Resolution method is outlined below:

¹ A second query is not strictly necessary: results from the first query could be packaged, deployed, and reused.

[Figure: four panels, Local Computation on Remote Data (LCRD), Scheduled Computation on Remote Data (SCRD), Remote Computation on Remote Data (RCRD), and Multiple Name Resolution (MNR). In each panel, the client calls GEMSrun(f1) and/or GEMSrun(f2); the metadatabase answers Match and locate(f1), locate(f2) queries; storage servers c1 to c4 hold replicas of f1 and f2; and jobs job(f1), job(f2) perform virtual filesystem operations through the adapter. In the SCRD and MNR panels, jobs pass through a scheduler with host requests job(f1)@c3 and job(f2)@c4.]
Fig. 4. Computation in a replica management system

Job Submission. The following algorithm is performed by GEMSrun.

1) For each potential compute host $c_i$, compute the network transfer $n_i$ required to perform the computation on that host.

2) Compute appropriate ranks and submit the Late Resolution algorithm as a job to the scheduler.

Late Resolution. The following algorithm is performed by the job upon arrival on a compute host.

1) Determine the host this task is occupying.

2) Locate all required files, and prefer locations that are on this host.

3) Resolve abstract file locations to virtual file locations in the user job argument string.

4) Execute the user job atop the adapter.

This algorithm is illustrated in Figure 4 in the MNR frame. In a manner similar to the SCRD illustration, the user submits jobs, the replica management system suggests appropriate hosts, and the jobs are sent to the external scheduler. However, the external scheduler places job(f2) on c3 first and then places job(f1) on c4. Simply applying the SCRD method here would cause two network file accesses: each job would begin accessing files found on a different host. Using MNR, job(f2) utilizes the Late Resolution method and obtains access to the local copy of f2. Then, job(f1) utilizes the Late Resolution method and cannot locate a local copy of f1 or the originally preferred copy on c3, but is able to locate the copy on c2. The net result is that one job obtains access to a local file, and one job must access a file over the network.
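The two stages of MNR can be sketched as a pair of functions: one run by the submitter to rank hosts by the transfer cost $n_i$, and one run by the job on arrival to rebind each input to a local or otherwise available replica. File sizes, the `available` set (servers that are up and not busy), the lexicographic tie-breaking, and the function names are assumptions of the sketch.

```python
def transfer_cost(host, inputs, replicas, sizes):
    """n_i: bytes that must cross the network if the job runs on `host`."""
    return sum(sizes[f] for f in inputs if host not in replicas.get(f, ()))


def rank_hosts(hosts, inputs, replicas, sizes):
    """Job Submission stage: order candidate hosts by ascending n_i."""
    return sorted(hosts, key=lambda h: (transfer_cost(h, inputs, replicas, sizes), h))


def late_resolve(host, inputs, replicas, available):
    """Late Resolution stage: run on arrival, once the compute host is fixed.

    Prefers a replica on this host; otherwise any replica on an available
    server. Raises LookupError if an input has no reachable replica.
    """
    chosen = {}
    for f in inputs:
        live = [h for h in replicas.get(f, ()) if h in available]
        if not live:
            raise LookupError(f"no available replica of {f}")
        chosen[f] = host if host in live else sorted(live)[0]
    return chosen
```

Replaying the example above: if the scheduler places job(f2) on c3, `late_resolve` binds f2 to the local copy; for job(f1) on c4, with c3 unavailable, it falls back to the copy on c2.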

E. Summary

LCRD jobs can be executed in any environment in which a replica location service is available, creating a useful and practical prototyping tool for running simulations in the presence of any replica location system. They can even be submitted to job schedulers, implicitly creating an “unguided” MNR method.

SCRD jobs provide useful and often requested additional functionality to existing job schedulers: they allow matchmaking based on replica location. Once the job arrives, it functions as an LCRD job; that is, if a different compute site is allocated by the scheduler, all file access must occur over the network, because name resolution has permanently occurred.²

RCRD jobs require the user to have compute access to the remote machine over a system such as SSH or Chirp. A special case that could benefit from such a system is Internet computing applications, as discussed below, because such applications often require an application-specific job scheduling policy. The RCRD method would allow such applications to use the data locations as an additional guide in the process.

MNR is a robust but complex method for job scheduling. By both guiding the job to an appropriate compute site and making corrections upon arrival, it gains the benefits of the replicated data sources and the global view of the scheduler. Practically, it relies upon the ability to package additional code as a wrapper around the user job, which may be a constraint in some environments.

² A variation would be to instruct the scheduler that a given job is eligible to run only on the specified host and must otherwise wait; this would provide good collocation but would fill job queues in many applications.

IV. THE REPOSITORY MODEL

Scientific repositories create a browsable front end for user-labeled data sets stored in a scalable back end. With the primary intent of creating a searchable repository, GEMS presents a database-like abstraction over the file sets stored among the storage providers. User applications could access these services directly, but since they are independently managed, their presence in the system is unreliable. Technically, the GEMS system creates a centralized parameter sweep database of application-specific metadata backed by a churn-aware file replica management backend. By combining the metadatabase with the management system, a quickly deployable tuple space and a survivable and parallelizable data system are therefore implemented. The resulting system is thus a merger of repository tags and parameter sweep entries, which programmatically represents a shared tuple space in which running jobs may communicate, with linkage to a replica location service.

The browsable repository created by GEMS allows users to structure workflows around data sets stored in GEMS. The metadata may be used to derive a novel concurrent programming model, called a data sweep, that enables synchronized tuple space communication and parallel data movement. This framework may be used to build up parameter sweeps, workflows, and complex interactive parameter space explorations.

Each entry $e$ in the GEMS metadatabase contains a tuple of parameter tags and values, formatted as $m$ equations: $e.\mathrm{tuple} = \{\, p_i = v_i \mid i = 1, \dots, m \,\}$ (see Figure 3). A query set may be formed by creating a similar tuple $\{\, q_i \mathrel{R_i} w_i \mid i = 1, \dots, n \,\}$, where $R_i$ is some relation. The metadatabase responds to a query set by returning the set $\{\, e : \forall q_i \; \exists p_j : p_j = q_i \wedge v_j \mathrel{R_i} w_i \,\}$. Thus, range queries over the metadatabase may be simply constructed; for example, a user may request “all entries of type simulation with temperature above 300.” The entry $e$ also contains a file management data structure, $e.\mathrm{files}$, which may be used to obtain file information and replica locations.
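The query semantics above translate directly into a filter over entries. The sketch represents each tuple as a dictionary (so each tag appears at most once, an assumption) and supports a fixed, illustrative set of relations $R_i$; the `RELATIONS` table and `match` function are hypothetical names.

```python
import operator

# Illustrative relations R_i; a real system might support more.
RELATIONS = {"=": operator.eq, "!=": operator.ne, "<": operator.lt,
             "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def match(entries, query):
    """Return entries e such that, for every (q_i, R_i, w_i) in the query,
    e's tuple has a tag p_j == q_i whose value v_j satisfies v_j R_i w_i."""
    return [e for e in entries
            if all(q in e["tuple"] and RELATIONS[r](e["tuple"][q], w)
                   for q, r, w in query)]


entries = [
    {"tuple": {"type": "simulation", "temperature": 310}, "files": {}},
    {"tuple": {"type": "simulation", "temperature": 290}, "files": {}},
    {"tuple": {"type": "analysis", "temperature": 350}, "files": {}},
]
# "All entries of type simulation with temperature above 300."
hits = match(entries, [("type", "=", "simulation"), ("temperature", ">", 300)])
```

Only the first entry satisfies both conditions; the `files` field stands in for the $e.\mathrm{files}$ structure and is untouched by the query.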

A. Workflows in a Tuple Space

Experimental scientific workloads are more often driven by adaptive parameter investigations than by well-known static sequences. Popular grid programming models include the parameter sweep and the workflow. In a parameter sweep, the same computation element is performed independently, using each eligible point of some parameter domain as input. In a workflow, a partially ordered set of data movement and computation elements is run to completion. An example of a hybrid model is parameter optimization, in which the output of the computation is optimized within a given parameter domain.

While these models are extremely powerful, they fail to capture other important computations such as postprocessing analysis, query-based computing, and interactive computing. Given a computation $y \leftarrow P(x)$, examples for these cases include the following:

• For each completed simulation in category $C$, compute the average of state variable $y_i$ and store it.

• For each completed simulation $P_C(x)$, if the output matches $Q$, rerun the simulation with parameter modification $\partial x$.

• Plot the current state of each simulation. Restart a user-selected set of simulations from their last checkpoint after altering the state variable $x_i$.
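The first case above can be phrased as a data sweep: select records by a predicate and apply an action whose result is stored as a new record. The sketch below is hypothetical and not the GEMS client API; it assumes records are dicts and that the field `y` stands in for state-variable values that would really be read from the record's files.

```python
from statistics import mean

def data_sweep(metadatabase, predicate, action):
    """Apply `action` to every entry selected by `predicate`.

    Each non-None result is treated as a new entry and appended to the
    metadatabase after the sweep, so results are not swept again in this pass.
    """
    results = [action(e) for e in list(metadatabase) if predicate(e)]
    new_entries = [r for r in results if r is not None]
    metadatabase.extend(new_entries)
    return new_entries

# Hypothetical records: category, completion status, and stand-in y values.
db = [
    {"id": 1, "category": "C", "status": "complete", "y": [1.0, 2.0, 3.0]},
    {"id": 2, "category": "C", "status": "running", "y": [5.0]},
    {"id": 3, "category": "D", "status": "complete", "y": [4.0, 6.0]},
]
# For each completed simulation in category C, average y and store the result.
averages = data_sweep(
    db,
    lambda e: e.get("category") == "C" and e.get("status") == "complete",
    lambda e: {"id": 100 + e["id"], "type": "average",
               "source": e["id"], "mean_y": mean(e["y"])},
)
```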

Workflows built within the GEMS framework are supported by the ability to parameterize workflows, thus seeding the resulting execution. While existing programming tools such as shells support all of the operations performed by workflow tools, workflows are useful for three software engineering reasons:

• Encapsulation: Operations performed within a workflow task form a logical group.

• Clarity: The task dependency structure may be described by a simple graph, and the state of a running instance may be similarly diagrammed.

• Restartability: Partially completed workflows may be restarted based on previously completed, safely stored work. The ability to quickly determine the minimum amount of new work needed to renew an attempt at a target, or to explore a new target, limits the damage done by faults and enables exploratory interaction.

Any new workflow system functionality should not impede these abilities; without them, the user might as well use a general-purpose programming language. Thus, the GEMS method alters this model in only a few well-defined ways. First, workflow targets are parameter tuples, not files or operations. The existence of these tuples may be easily queried, as shown above. Second, workflow targets may be parameterized, resulting in function-call-like tasks. This introduces several problems that the new framework must solve. Task parameters must be arithmetically manipulated and propagated into the actual task execution. Additionally, operations within workflow targets must be properly parameterized. Input and output file locations are also parameterized values that must be linked into the user computation.

Consider a batch of scientific simulations $S$, over which several random seeds may be used, and in which the simulation time is broken into manageable, checkpointable segments. Each evaluation $S(u, r, t)$ of the simulation is defined by a user name $u$, a random seed $r$, and a time segment $t$, for $m$ random seeds and $n$ segments. Each evaluation depends on the previous evaluation with respect to time, but within the same random seed group.

B. Dynamic Creation of Workflow Targets

In a static workflow system, the user would have to generate a Makefile-like script containing $m \times n$ workflow tasks with hand-parameterized filenames and other objects. In a parameterized workflow system, this may be framed by simply stating that $S(u, r, t) : S(u, r, t-1)$; that is, each segment of execution time depends on the previous one. A base case $S(u, r, t = 0)$ is inserted into the system as the initial simulation state. A target $S(\text{sorin}, 312, 100)$ may then be specified. The system would generate the 100 resulting tasks and execute them. Parameters are passed into user code by filtering a user-specified configuration file with sed-like operations. Then the user task is executed as specified by the workflow node. Thus each workflow task must contain a header, a dependency list, a mapping from header parameters to metadata values, a mapping from configuration file tokens to header parameters, and an execution string. The resulting example workload fills a rectangular parameter space but includes dependency information, as shown in Figure 5a.
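The task structure just listed can be illustrated with a small, hypothetical template. This is a sketch under stated assumptions, not the GEMS task format: header parameter names double as metadata tags (an identity mapping), the dependency rule is a Python function, configuration tokens such as `@SEED@` and the `simulate` command are invented for illustration, and `expand` walks the rule backward from the target until it reaches a record that is already stored, which also gives the minimum-new-work behavior needed for restarts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

@dataclass
class TaskTemplate:
    """Hypothetical parameterized workflow task (not the GEMS file format)."""
    header: Tuple[str, ...]                      # parameter names, also used as metadata tags
    depends: Callable[[dict], Optional[dict]]    # dependency rule: params -> prerequisite params
    config_tokens: Dict[str, str]                # configuration-file token -> header parameter
    command: str                                 # execution string with {param} fields

    def key(self, params):
        """Identify a record by its header parameter values."""
        return tuple(params[name] for name in self.header)

    def expand(self, target, stored):
        """Tasks needed to reach `target`, prerequisites first, stopping at
        parameter sets already stored (this is what makes restarts cheap)."""
        plan, current = [], dict(target)
        while current is not None and self.key(current) not in stored:
            plan.append(current)
            current = self.depends(current)
        return plan[::-1]

    def filter_config(self, text, params):
        """sed-like substitution of configuration tokens by parameter values."""
        for token, name in self.config_tokens.items():
            text = text.replace(token, str(params[name]))
        return text

# S(u, r, t) : S(u, r, t - 1), with the base case t = 0 already stored.
sim = TaskTemplate(
    header=("u", "r", "t"),
    depends=lambda p: {**p, "t": p["t"] - 1} if p["t"] > 0 else None,
    config_tokens={"@SEED@": "r", "@SEGMENT@": "t"},
    command="simulate --config segment-{t}.conf",  # hypothetical user executable
)
stored = {sim.key({"u": "sorin", "r": 312, "t": 0})}
tasks = sim.expand({"u": "sorin", "r": 312, "t": 100}, stored)
first_conf = sim.filter_config("seed = @SEED@\nsegment = @SEGMENT@\n", tasks[0])
first_cmd = sim.command.format(**tasks[0])
```

Here `tasks` holds the 100 segments $t = 1..100$; if segments up to $t = 40$ were already stored, the same call would return only the remaining 60.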

Fig. 5. (a) In a parameter sweep [6], [7], user jobs typically fill a square parameter space. (b) To allow for interactive parameter execution, the system has to allow the dynamic user creation of execution branches. (Both panels show simulation segments for user u, indexed by r and t.)

More complex, interactive workflow-like structures may also be simply instantiated within this model. Consider the same molecular simulation example with the addition of user steering: the user may modify the force fields applied within the experiment, thus forking a new trajectory through the parameter space, as shown in Figure 5b. In this case, user requests simply create new targets, and tasks are launched with respect to the dependency state of the whole workflow. The tree-like search structure is a simple consequence of a minor change to the parameterized dependency rule, such as

$$S(u, r, t, \mathit{branch} = p) : S(u, r, t-1, \mathit{branch} = p) \;\text{ or }\; S(\mathit{identity} = p, \mathit{time} = t-1). \tag{1}$$

Thus, a branch parameter is added, and the segment identity code is referenced. Segments depend on either the previous segment in time or the branch point, when appropriate.
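Equation (1) can be read as a two-step lookup. The sketch below assumes segment records are dicts carrying hypothetical `identity`, `branch`, and `t` fields, and that a branch is labeled with the identity of the segment it forks from; it is an illustration of the rule, not GEMS code.

```python
def prerequisite(segments, seg):
    """Equation (1): a segment in branch p at time t depends on the segment at
    t - 1 in the same branch if one exists; otherwise on the branch point, the
    segment whose identity is p, at time t - 1."""
    for rec in segments:                                     # S(u, r, t-1, branch=p)
        if (rec["u"], rec["r"], rec["branch"], rec["t"]) == (
                seg["u"], seg["r"], seg["branch"], seg["t"] - 1):
            return rec
    for rec in segments:                                     # S(identity=p, time=t-1)
        if rec["identity"] == seg["branch"] and rec["t"] == seg["t"] - 1:
            return rec
    return None

# Hypothetical trunk (branch 0): segments with identities 1..4 at times 1..4.
segments = [{"identity": i, "u": "sorin", "r": 312, "t": i, "branch": 0}
            for i in range(1, 5)]
# The user forks from segment 3; the new branch is labeled with that identity.
fork = {"identity": 5, "u": "sorin", "r": 312, "t": 4, "branch": 3}
segments.append(fork)
follow_up = {"identity": 6, "u": "sorin", "r": 312, "t": 5, "branch": 3}
```

The first segment of the fork resolves to its branch point (segment 3), while later segments in the same branch resolve to their own predecessor, which yields the tree of Figure 5b.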

V. COMPUTING IN A REPOSITORY OF REPLICAS

The data-driven grid models a compute grid as a set of data sources and sinks of interest, laid out as a potential workspace. Mobile jobs, submitted by data-aware schedulers, interact with the data landscape, consuming existing data objects and storing output data records through unitary operations called transforms [8]. Users of an opportunistic grid of arbitrary size may have temporary access to a large variety of existing storage resources, accessible over standard APIs [9], [10]. However, individual users would typically have difficulty organizing these sites into usable categories, ensuring data survivability in the presence of churn, and efficiently using the resources as data sources and sinks.

A. Data Placement

While approximately optimal solutions have been attempted for the job/data co-scheduling problem, we focus on the simple case of users attempting to enable grid computing in small collaborative projects. Our model relies on an external, flat list of available, independently administered storage sites. Users are then capable of organizing the sites into logical clusters, making up a storage map. This reusable per-record process is technically less difficult than typical job placement matchmaking. The maps result in a mentally tangible data environment in which the storage, access control, and data movement policies may be carried out.

Policy negotiation lends itself to existing work on resource matchmaking [11]; however, we extend this model in the storage case by enabling users to specify cluster topology along with the eligibility criterion, together called a storage map. This additional information deepens the semantics offered by the given policy information, by labeling the eligible sites as well as defining how to use them. Given a list of available storage sites, the user can select eligible locations and group them into clusters. In typical use cases, whole swaths of available machines at collaborating universities can be pooled together with simple categorization.

For example, using traditional matchmaking, a controller could be instructed to store two replicas on a given list of eligible sites; with the storage map, one replica would be stored at each of two clusters, and data access operations from a client at either cluster would be directed to the nearest replica. Thus this simple augmentation can be used to reduce the occurrence of wide-area data access performance penalties and increase the availability of replicas in the event of cluster outages. Other consequences of the storage map concept are discussed below.
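The placement difference described above can be sketched as follows. The storage map is assumed to be a dict from a user-chosen cluster name to a list of site names, and `place_replicas` and the host names are hypothetical; GEMS may choose among sites using other criteria (free space, availability) that are omitted here.

```python
import random

def place_replicas(storage_map, n_replicas, rng=random):
    """Place replicas so that no two share a cluster of the storage map."""
    clusters = sorted(storage_map)
    if n_replicas > len(clusters):
        raise ValueError("storage map has fewer clusters than requested replicas")
    return {c: rng.choice(storage_map[c]) for c in rng.sample(clusters, n_replicas)}

# Hypothetical storage map: clusters of storage sites grouped by the user.
storage_map = {
    "nd":  ["disk01.nd.example", "disk02.nd.example"],
    "anl": ["store1.anl.example", "store2.anl.example"],
}
placement = place_replicas(storage_map, 2, random.Random(0))
```

Plain matchmaking over the flattened site list could put both replicas in one cluster; sampling clusters first rules that out.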

B. Data Access

To address these problems, we offer the data sweep as a programming model. This model allows users to operate within a data landscape by performing database-like operations. Application checkpoints, job dependencies, user or automatic branches, and ordinary stored data records may be encapsulated and used by the researcher. While the high-level approach works with abstract data records stored within the storage map, the underlying system still operates on the distributed file servers, allowing the use of existing application codes.

Each data record in the system is associated with a storage map indicating the cluster topology used by the record. Combining the data sweep computation model with this information allows running jobs to access the replicas that are nearest to the computation site with respect to the map. Thus, while not globally optimal, the flexibility of user-level replica placement allows users to create locally optimal systems for their work.
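Reading the nearest replica with respect to the map can be sketched with a cluster lookup. The helper names and host names below are hypothetical; the sketch assumes jobs run on hosts that appear in the record's storage map (compute and storage sites overlap in this setting) and falls back to any replica otherwise.

```python
def cluster_of(storage_map, host):
    """Return the cluster containing `host`, or None if it is unmapped."""
    for cluster, sites in storage_map.items():
        if host in sites:
            return cluster
    return None

def nearest_replica(storage_map, replicas, client_host):
    """Prefer a replica in the client's own cluster; otherwise any replica."""
    if not replicas:
        return None
    home = cluster_of(storage_map, client_host)
    local = [s for s in replicas
             if home is not None and cluster_of(storage_map, s) == home]
    return (local or replicas)[0]

# Hypothetical record storage map and replica locations.
storage_map = {
    "nd":  ["disk01.nd.example", "disk02.nd.example"],
    "anl": ["store1.anl.example", "store2.anl.example"],
}
replicas = ["store1.anl.example", "disk01.nd.example"]
```

A job on `disk02.nd.example` would read from `disk01.nd.example`, while a client outside the map simply gets the first listed replica; this is locally, not globally, optimal, as noted above.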

Compiled user codes cannot accommodate the variety of new and experimental APIs. Thus, new systems must provide tools to connect them to new services. Our approach to this problem builds on previous work that creates abstractions within UNIX-like structures. Since GEMS is primarily a grid controller, it does not deal with these structures directly but provides tools to orchestrate the connections. Additionally, our solutions reinforce the generally held view that coarse-grained, suboptimal performance is achievable by easily portable software. In these examples, we consider data access methods for scientific users launching large batches of jobs that run on a compute network in which an opportunistic storage network is embedded. Experiments were performed on a simple archive creation workload and on an actual molecular hyperdynamics application.

C. Archive Creation

The first experiment measured the time taken to create a simple tar file from a local data set used by a real-world scientific application, the hyperdynamics experiments. The resulting archive is 3.7 GB and contains 13,934 files. The data storage sites ran Linux 2.6.9 on dual 2.4 GHz 64-bit AMD machines, all on the same institutional network. The GEMS server was located on a dual 2.8 GHz AMD system running Linux 2.4.27. The three methods described below were run and profiled; results are shown in Figure 6.

• G/P: GEMS provides a data repository for running scripts through traditional get and put operations. Interleaving these within script operations results in a data staging model similar to that used by Condor and other systems. However, since data records and replicas are stored among the compute sites, there is great potential for data parallelism and locality. This solution is highly portable, as it relies only on the GEMS client toolkit, a Java implementation, but it also requires significant local disk usage and data copying.

• PIPE: GEMS creates data sources and sinks that may be targeted by input and output streams. For example, users may reserve more space in the GEMS system than is available on a local client machine, and then use a reference to the reserved location as a streamed output target, enabling the creation of large archives without large local space or data copying. This method offers relatively high performance and an intermediate level of portability and complexity. Again, it uses only the GEMS client tools, but it requires interprocess communication connected by the user through UNIX pipes or a similar technique.

• VFS: GEMS offers client tools to manage file-naming conventions used by the Parrot virtual filesystem. This user-space adapter provides system call translation to reformulate ordinary data access methods into distributed filesystem RPCs. GEMS clients simplify user access to this tool when operating within the replica system. While this solution offers the most functionality, it is available only on Linux.

Fig. 6. Archive creation times via various methods. In the G/P case, the turnaround time consists of two components, local archive creation (black) and archive transfer to the repository (white). The other methods perform both operations concurrently. (Bar chart comparing the G/P, PIPE, and VFS methods.)

The G/P method created the archive and stored it with the GEMS put repository insertion tool in separate steps. The PIPE method consisted of three steps: creating a repository reservation with GEMS reserve; piping the output of tar into a Chirp I/O forwarding tool; and, on completion, committing the repository record with GEMS commit. Since each of these GEMS operations took less than 2 seconds, their time is not visible in Figure 6. The VFS method created the record by using GEMS run to drive tar execution inside a Parrot environment, and it managed the record construction internally.
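The reserve, stream, and commit pattern of the PIPE method can be mimicked in-process. In this sketch an in-memory `Repository` with `reserve` and `commit` methods stands in for the GEMS reservation and the Chirp forwarding tool; these names are hypothetical, not the GEMS client API. Python's `tarfile` stream mode `"w|"` writes the archive sequentially into the sink, so no local archive file is created.

```python
import io
import tarfile

class Repository:
    """In-memory stand-in for a repository with reserve/commit (hypothetical API)."""

    def __init__(self):
        self.reserved = {}     # name -> writable sink for an open reservation
        self.committed = {}    # name -> (tags, bytes) for committed records

    def reserve(self, name):
        self.reserved[name] = io.BytesIO()
        return self.reserved[name]

    def commit(self, name, **tags):
        self.committed[name] = (tags, self.reserved.pop(name).getvalue())

def archive_to_repository(repo, name, files, **tags):
    """Stream a tar archive straight into a reservation; no local archive file."""
    sink = repo.reserve(name)                            # 1. reserve
    with tarfile.open(fileobj=sink, mode="w|") as tar:   # 2. stream ("w|" = non-seekable)
        for arcname, payload in files.items():
            info = tarfile.TarInfo(arcname)
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
    repo.commit(name, **tags)                            # 3. commit the record

repo = Repository()
archive_to_repository(repo, "run-312.tar",
                      {"a.txt": b"alpha", "b.txt": b"beta"},
                      experiment="hyperdynamics")
```

Because `tarfile` does not close a caller-supplied file object, the sink remains readable when the record is committed.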

Results show that the streaming output methods are slightly faster than the two-step method and that moving data through the pipe is slightly faster than moving data through the virtual filesystem.

D. Hyperdynamics

The next experiment measured the overhead of the GEMS model on a complex computation, molecular dynamics (MD). MD simulation [12] is a powerful and widely used tool to study molecular motion. Insight into the chemical properties of a molecule may be obtained by observing rare conformational transitions from one metastable region of the simulated potential energy surface into another. Typical systems stay in one metastable state for a long time before making a transition into another metastable state. Thus, considerable computational resources must be allocated to achieve the simulated timescale necessary to reach the transitions. Recently, many approximate methods have been developed to extend the timescale of molecular simulations. These methods include transition path sampling, the kinetic Monte Carlo method, the finite-temperature string method, and the hyperdynamics method [13].

TABLE I
MD ENERGY SURFACE EXPLORATION APPROACHES

| Name | Approach | Challenge |
|---|---|---|
| HYD-DEPTH | Long run | Numerical performance of scalar system |
| HYD-BREADTH | Branch search | Scalability of distributed system |
| HYD-EXPLORE | Interactive | Human interaction with distributed system |

In this section, we apply GEMS to the hyperdynamics method. In hyperdynamics simulation, enhanced storage organization and rapid data access for large parameter sweeps are not by themselves sufficient for the effective investigation of system behavior. This steered method allows the user to bias the simulation into areas of conformational space yet to be explored.

The hyperdynamics method treated here essentially consists of a search through a space of simulation parameters, including the level of hyperdynamics bias force applied. The method attempts to observe a rare, long-timescale event quickly by applying additional bias forces to the system. The application produces timescale and entropy histograms that indicate whether the simulation has progressed to the point at which applying another bias potential level would be beneficial and free from serious error. Since there is no analytical method to make this determination, tools to enable ad hoc exploration of the parameter space were required.

Our target application involved the generation of simulated molecular trajectories over a range of input parameters, including temperature ($T$) and density ($\rho$). These trajectories are independent and thus are an ideal application for parameter sweep toolkits. Figure 5a diagrams this method by indicating simulation segments (restartable chunks of simulation progress) as functions of the $(T, \rho)$ input and time $t$. Each trajectory, shown in the figure as a row, is a sequence of segments over time, each containing the simulated molecular state over a given segment length and encapsulated in the storage system. Applications described above, such as transition path sampling (TPS), have already been implemented in this way [14].

The application described in this paper differs in that only a subset of the whole parameter space is explored: the space is too large to explore fully, and only part of it is of interest. However, the area of interest is not known in advance and must be determined by statistical analysis of previously computed segments. The interesting areas of the parameter space are entered by branching from the existing segments, creating a new trajectory that differs from the unmodified sequence in that a new bias potential, described above, is applied. Thus, additional metadata must be stamped on each segment to record the location of the segment in the search tree, as diagrammed in Figure 5b.

Fig. 7. Graphical user interface representation of hyperdynamics segments. The tool automates simulation branches and restarts by setting up a hyperdynamics bias. Thumbnails show that the entropy distributions in segments 3 and 4 at level 0 are similar. Therefore, one can branch from segment 3 to level 1. At level 1, the bias will push the system away from the conformational space already traversed in level 0.

Table I provides an overview of potential approaches to this method. The first, HYD-DEPTH, represents the control case of using only traditional molecular dynamics. The second, HYD-BREADTH, automatically explores all possible applications of the method. While this approach may employ massive parallelism to obtain a given result quickly, we demonstrate here that it wastes a great deal of resources. A solution is offered in the HYD-EXPLORE method, which allows for the controlled use of parallelism to explore promising paths as well as to selectively prune useless searches. This method relies on the technical solution of distributed computing problems such as data management and organization, user feedback and programmability, and job control. Our results demonstrate that user-steered hyperdynamics (HYD-EXPLORE) can improve on traditional dynamics (HYD-DEPTH) as long as the resource consumption of brute-force techniques (HYD-BREADTH) is restrained.

As an example, Figure 7 shows a combination of parameter tags from the central database as well as a view of plotted data files. These distributed hyperdynamics executions were followed remotely by Matlab postprocessing, which generated and stored simulation output and plots on the remote cooperative system. Parameters include the execution host and the output timescale, a hyperdynamics-specific indicator of the work performed and of the benefit of the new algorithm. This client also uses parameter tags to arrange the tiles in the frame, and it pulls image files from the storage network to provide a high-level view of workflow progress.

In our present application, viewing output on the fly is not enough. The timescale and entropy histograms indicate whether the simulation has progressed to the point at which applying another bias potential level would be beneficial and free from serious error. Because the algorithm under study is new and there is no analytical method to make this determination, tools to enable ad hoc exploration of the parameter space are required.

As a demonstration of the nontriviality of this process, Figure 8 diagrams the timescale performance as a function of branch location on the time axis. Thus, the researcher controlling the simulation must monitor the output histograms for error and smoothness while selecting branch points that maximize simulation efficiency in terms of timescale. Critically, applying additional bias levels poses hazards. If the bias is too high in a metastable region, the system will not reach a local equilibrium in the biased trajectory, and the timescale of the trajectory will be incorrect. On the other hand, if we apply fewer bias levels, a longer simulation will be required for the system to move from one metastable region to another. It is not known a priori how long one will need to simulate in order to achieve a correct distribution of $S$ for the next bias level. Typically, one would like to run a set of independent hyperdynamics simulations, each with a different set of parameters, such as temperature, density, and bias settings.

Fig. 8. Performance ratio $R$ for various branch points. The ratio is plotted above a diagram indicating two illustrated example branches. $R$ is plotted as a function of branch time. A higher ratio indicates more efficient exploration of the simulated conformation space. The performance ratio is obtainable only as the result of a simulation segment, necessitating interactive workflow control.

Since the application of additional bias levels increases computation time per segment, we use a performance ratio ($R$) that indicates efficiency: work done, measured by timescale, per unit of CPU time, or

$$R = \frac{\text{timescale (simulated femtoseconds)}}{\text{CPU time (real seconds)}}. \tag{2}$$

From this perspective, a set of normal single-processor hyperdynamics simulations is individually run at a baseline performance level. GEMS offers the ability to easily exploit the available parallelism in the method on an opportunistic computing/storage system. Any method to parallelize the set of sequential runs will accumulate some lost communication time; in this section we consider the communication time consumed as a whole, that is, the time that would not be consumed if running all tasks on a sequential system.

Fig. 9. Notification/submission loop for parameterized workflow instances in the GEMS framework. (Diagram: a workflow instance of potential tasks (parameter sets) linked to GEMS and a notification service through submission, insertion, and notice steps.)
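Equation (2) is straightforward to evaluate once a segment has finished, which is why branch decisions must wait for segment results. The sketch below computes $R$ and picks the better of two candidate branch points; the candidate names and numbers are toy values chosen for illustration, not measurements from this experiment.

```python
def performance_ratio(timescale_fs, cpu_seconds):
    """Equation (2): simulated femtoseconds of timescale per real CPU second."""
    if cpu_seconds <= 0:
        raise ValueError("CPU time must be positive")
    return timescale_fs / cpu_seconds

# Toy per-segment results for two candidate branch points (illustrative numbers only).
candidates = {
    "branch at segment 2": (4.0e5, 200.0),
    "branch at segment 3": (9.0e5, 300.0),
}
ratios = {name: performance_ratio(*vals) for name, vals in candidates.items()}
best = max(ratios, key=ratios.get)
```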

The GEMS framework was used to create a notification-based dependency loop, enabling ordinary workflow programming on parameterized GEMS records, implemented as a data sweep over the required search targets, as diagrammed in Figure 9. Since additional search jobs are based on statistics obtained in previous jobs, a complex dependency structure is automatically built from the user dependency rule outlined in Equation (1).
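The loop in Figure 9 can be simulated sequentially. In this hypothetical sketch, every insertion produces a notice; each notice triggers a scan of the pending potential tasks, and a task whose prerequisite record is stored is submitted, its output inserted, and a new notice queued. The function names and the identity `execute` are assumptions; real submissions would run concurrently on the computing fabric.

```python
from collections import deque

def notification_loop(potential, prerequisite, initial_records, execute):
    """Sequential simulation of a notification/submission loop.

    Each insertion notice triggers a scan of the still-pending potential
    tasks; a task whose prerequisite record is stored is submitted, and its
    output record is inserted, which produces a further notice.
    """
    stored = set(initial_records)
    notices = deque(initial_records)
    pending = set(potential) - stored
    submitted = []
    while notices:
        notices.popleft()                                   # consume one notice
        ready = sorted(t for t in pending
                       if prerequisite(t) is None or prerequisite(t) in stored)
        for task in ready:
            pending.discard(task)
            record = execute(task)                          # "submission"
            stored.add(record)                              # "insertion"
            notices.append(record)                          # "notice"
            submitted.append(task)
    return submitted

def previous_segment(task):
    """S(u, r, t) : S(u, r, t - 1)."""
    u, r, t = task
    return (u, r, t - 1) if t > 0 else None

potential = [("sorin", r, t) for r in (7, 312) for t in range(1, 4)]
order = notification_loop(potential, previous_segment,
                          [("sorin", 7, 0), ("sorin", 312, 0)],
                          execute=lambda task: task)
```

Both seed groups advance together, one segment per round, because each new record only unlocks its own successor.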

Fig. 10. Job parallelism over time in the hyperdynamics method. (Plot: number of concurrent jobs versus time in minutes.)

Fig. 11. I/O ratio $I$ (Equation 3) per segment. Averages are reported with 95% confidence intervals where appropriate. (Plot: I/O ratio versus segment number.)

E. Interpretation of Hyperdynamics Results

Running tasks alternate between I/O operations and computation. Here, I/O operations consist of centralized metadata operations as well as data movement operations. A checkpoint, for example, consists of a metadata insertion, a reservation, parallel data movement, and a record commit. Computation is fully independent per node. An important measure of system congestion is thus the I/O ratio ($I$), computed as follows:

$$I = \frac{\text{Time spent in GEMS clients}}{\text{Time spent in computation}}, \tag{3}$$

where computation includes PROTOMOL and Matlab operations. This ratio is plotted per segment in Figure 11. Scalability is thus considered in terms of the number of leaves on the search tree: plotting the ratio in the segment domain indicates the scalability of the search algorithm.
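A per-segment summary of Equation (3), in the style of Figure 11, can be computed from timing pairs. This sketch assumes each job reports (client seconds, computation seconds) per segment; the timings are toy values, and the interval uses a normal approximation ($z = 1.96$), which is only a rough stand-in for a Student $t$ interval when samples are small.

```python
from math import sqrt
from statistics import mean, stdev

def io_ratio(client_seconds, compute_seconds):
    """Equation (3): time spent in GEMS clients over time spent in computation."""
    return client_seconds / compute_seconds

def per_segment_io(samples, z=1.96):
    """Map {segment: [(client_s, compute_s), ...]} to {segment: (mean I, half-width)}.

    The half-width uses a normal approximation (z = 1.96 for about 95%); a
    Student t quantile would be more appropriate for very small samples.
    """
    summary = {}
    for segment in sorted(samples):
        ratios = [io_ratio(c, p) for c, p in samples[segment]]
        half = z * stdev(ratios) / sqrt(len(ratios)) if len(ratios) > 1 else 0.0
        summary[segment] = (mean(ratios), half)
    return summary

# Toy timings (seconds) for jobs finishing three segments; not measured data.
summary = per_segment_io({
    0: [(6.0, 120.0), (6.0, 120.0)],
    1: [(5.0, 100.0), (7.0, 100.0)],
    2: [(9.0, 90.0)],
})
```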

Additionally, individual operations were profiled for a similar, shorter run up to segment 4. The relative server response time, as measured by the server for each operation, is shown in Figure 12. Metadata operations were the most common operation during the run and were also the most expensive.

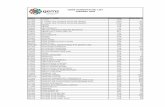

By extrapolating from the results achieved in this experiment, we can estimate the cost of performing the experiment with the other methods. Results are tabulated in Table II.

• Employing HYD-DEPTH would have required running a single job with traditional dynamics to achieve the target timescale at which the droplet was observed. This would result in 1.8 hours of single-processor computation.

• Employing HYD-BREADTH, a full search of all pathways of length 17 could have been performed, one of which would have resulted in the observed droplet. This would rely on perfect parallelism on $2^{16}$ processors for the final leaves and ignores the scalability issues mentioned in the previous sections. This idealized computation would result in a total resource consumption of 5800 CPU-hours. Since the path length is still 17, however, the turnaround time is again only 1.4 hours.

Fig. 12. Centralized time consumed on the replica management server per operation. (Operations shown: insertion, notification, metadata, retrieval.)

This experiment demonstrates that while a centralized metadata system may be used to efficiently manage workflows that interleave metadata operations with computation, performance is greatly affected when all tasks request intense synchronization and metadata information simultaneously. Thus, while a fully automated search of the parameter space is potentially possible under nominal conditions, selective pruning and interaction with user-identifiable parameter regions should be attempted to gain better resource utility on practical systems.

TABLE II
EXPERIMENTAL RESULTS

| Name | Total (CPU-hours) | Turnaround (hours) |
|---|---|---|
| HYD-DEPTH | 1.8* | 1.8* |
| HYD-BREADTH | 5800* | 1.4* |
| HYD-EXPLORE | 6.2 | 1.4 |

*Result extrapolated from experiment.

VI. COLLABORATION AND SECURITY

Extensible repositories combine user-defined naming schemes, storage strategies, and externally owned and operated storage services into a viable scientific resource. Each operation in the system combines metadata operations on the centralized system with data operations on distributed volunteer systems, and funnels a wide potential usage space through enabling client functionality and tools.

Simple data-tagging frameworks are doomed to eventually become obsolete. Different communities of researchers will emphasize different tags because of the varying importance of simulation parameters, local conventions in usage patterns, or obvious application-related factors. An extensible repository will provide the minimum data-tagging tools to make collaboration possible, allowing new user groups to import existing data sets and naming schemes into the greater system.

The ability to add external storage services to the system, and to remove them, during normal operation is a fundamental feature. Users must be able to determine which storage sites are eligible to store their data. Consequently, extensible repositories cannot impose data placement strategies on users.

A. Scientific Collaboration

Extensible repositories are motivated by the concept of collaborating scientists creating a common catalog and management system while maintaining local resource autonomy. Thus, a common ground is instantiated at the intersection of client requests and authorized system services. User and site management is a monumental problem in a large-scale system and must be localized by data set owners. The global set of users must be able to operate without global data definitions, user lists, host lists, and so forth, as these are likely to change rapidly. Administrative data structures are thus created on a per-record basis and represent a usage and management policy followed by the greater system but enforced by the underlying systems trusted by the users. A repository extended over existing resources such as volunteered desktops must be able to respect the ownership of the resource as well as the security of the data objects.

The system allows for the interaction of users in various roles, including data consumer, data provider, storage provider, and replica manager.

Note that a person may take on multiple roles. Each user has limited knowledge of other users and limited ability to affect the whole system.

Individual users may find it complex to handle dynamic replica locations, the monitoring of user-defined network topology maps, authentication schemes, and large-scale user groups. Thus, simplified concepts are applied, and a generalized authentication scheme is used to enable scientific data sharing when desirable and tight access restriction otherwise.

B. User Management and Access Control

Scientific system operators who wish to share data sets and storage resources with remote users will find that allowing authorized access and prohibiting unauthorized access are of primary importance. These problems are commonly treated as security issues. All users who attempt to access system components must be authenticated. In practice, superficial tests are performed to determine whether the user can satisfy a given test based on the subject name claimed by the connecting party. Upon satisfaction, the system determines whether the subject is eligible to perform the requisite operation by consulting an access control list (ACL).

Wide-area extended repositories are designed to support a large number of volatile user groups, data records, and storage devices. The construction and maintenance of a global static list of users and their authentication abilities are not practical. Thus the authentication process is pushed away from the centralized system and onto the intermediate storage systems with which the users interact. An authentication test may be performed on a storage site that is relevant and trustworthy with respect to the user and record at hand. The successful completion of the test indicates that the operation is acceptable, and the resulting changes are propagated up to the centralized replica management system.
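The indirect authentication flow can be sketched with three small classes. Everything here is hypothetical: a `Record` carries its own ACL and the set of storage sites trusted to authenticate for it, each `StorageSite` authenticates credentials with a local method (a token table stands in for UNIX, hostname, or other schemes), and the central `ReplicaManager` keeps no user list and accepts a change only when a trusted site has authenticated the caller and the record ACL grants write access.

```python
from dataclasses import dataclass, field

@dataclass
class Record:
    name: str
    acl: dict                 # subject -> set of rights, e.g. {"unix:alice": {"r", "w"}}
    trusted_sites: set        # storage sites trusted to authenticate for this record
    tags: dict = field(default_factory=dict)

class StorageSite:
    """A storage site that authenticates callers with its own local method."""

    def __init__(self, name, authenticate):
        self.name = name
        self.authenticate = authenticate   # credential -> subject name, or None

    def authorize(self, record, credential, right):
        subject = self.authenticate(credential)
        return subject is not None and right in record.acl.get(subject, set())

class ReplicaManager:
    """Central catalog with no user list: it accepts a change only when a site
    trusted for that record has authenticated and authorized the caller."""

    def __init__(self):
        self.log = []

    def propagate(self, site, record, credential, tags):
        if site.name not in record.trusted_sites:
            return False
        if not site.authorize(record, credential, "w"):
            return False
        record.tags.update(tags)
        self.log.append((site.name, record.name))
        return True

rec = Record("sim-312", acl={"unix:alice": {"r", "w"}, "unix:bob": {"r"}},
             trusted_sites={"disk01.nd.example"})
local_users = {"token-alice": "unix:alice", "token-bob": "unix:bob"}
site = StorageSite("disk01.nd.example", local_users.get)
untrusted = StorageSite("other.example", local_users.get)
mgr = ReplicaManager()
```

The same `authorize` check also covers pairwise read access at a storage site, without consulting the replica manager.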

C. Semi-autonomous Regions

This functionality may be enabled by a careful consideration of all system stakeholders and through the use of appropriate procedures and data structures. Data providers may specify access control rules using a commonly used tool, the ACL, without referring to a global user list or authentication protocol. Additionally, they may specify which storage providers are eligible to host their data sets. Since data placement is dynamically controlled by the replica manager, pattern matching-based specification structures are used to include or exclude servers and clusters for individual data records, creating a manageable per-record storage network topology.

Fig. 13. User-specified cluster topology information creates semi-autonomous regions on a per-record basis. Users and storage providers interact over authenticated connections. Although users have locally defined identities, changes are propagated up to the metadata level as authorized by the record owner. (Diagram elements: metadata server, locally defined users and groups, locally defined usage policy, WAN disk management, indirect authentication, interregional access.)
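Pattern matching-based inclusion and exclusion of storage sites, as described above, can be sketched with shell-style patterns. The use of Python's `fnmatchcase` (case-sensitive glob matching), the helper name `eligible_sites`, and all host names are assumptions for illustration; the actual GEMS specification syntax may differ. The second step groups the eligible sites into a per-record storage map.

```python
from fnmatch import fnmatchcase

def eligible_sites(sites, include, exclude=()):
    """Sites matching at least one include pattern and no exclude pattern."""
    return [s for s in sites
            if any(fnmatchcase(s, p) for p in include)
            and not any(fnmatchcase(s, p) for p in exclude)]

# Hypothetical per-record policy over a flat list of available sites.
available = ["disk01.cse.nd.example", "disk02.cse.nd.example",
             "ws17.chem.nd.example", "node3.anl.example", "box.other.example"]
eligible = eligible_sites(available,
                          include=["*.nd.example", "*.anl.example"],
                          exclude=["ws*"])

# Group the eligible sites into clusters, yielding a per-record storage map.
cluster_patterns = {"nd": ["*.nd.example"], "anl": ["*.anl.example"]}
storage_map = {name: eligible_sites(eligible, pats)
               for name, pats in cluster_patterns.items()}
```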

The intersection of data and storage providers creates a conceptual semi-autonomous region in which data lives. These constructs are autonomous in that the allowable authentication techniques and user lists may be maintained without reference to a global center such as the replica manager or another region.

Data consumers must be able to navigate the distributed data structure created by the data and storage providers. Access to a given record requires satisfaction of the record ACL with respect to a given storage site. Thus read access is enabled in a pairwise manner, without requiring regulation by the replica manager. Users from multiple regions may interact via these direct connections by regulating access control and consulting the centralized metadata catalog, creating the possibility of efficient, direct data connections and blackboard-style data publication. This creates the potential for simplified collaboration.

D. Repository Access on the Grid

A founding design feature of grid computing is the ability to grant users access to resources across administrative domains. An administrative domain may be commonly conceived as a local UNIX installation managed by a system administrator. Grid construction allows users outside the local system to run jobs or allocate storage on the resource. A system administrator may enable these capabilities by first creating a local user that has access to these services, then installing a grid software system that runs as this user. The new system maintains an independent authentication and authorization scheme intended to scale to many users. For example, the Globus system includes a public key authentication system combined with a virtual organization model to authorize grid operations [15].

This solves the basic grid security problem but has certain limitations. It adds a new abstraction layer, the grid security system, above the operating system layer in a scalable way. However, it shoehorns users into a single authentication scheme orchestrated by administrators, not users. This adds to administrative workloads while restricting the ability of users to share their access to resources with others. Typical systems do not allow users to grant privileges to another user without compromising their own account by revealing a password or private key. Access control lists allow the addition of user names and permissions, but they are an authorization scheme, not an authentication scheme. GEMS provides a method to turn arbitrary access control lists into a globally visible authentication scheme as well.

E. Use Cases

Consider a case in which research group leaders from distant universities wish to construct a relatively secure cooperative database, building on existing (grid-enabled) resources. This problem concerns constructing new grids from existing grids or their fragments, ultimately creating a grid-of-grids. In the pre-grid era, they would have had to agree on a global user list and propagate it to every site. Using grid tools, they could establish a global security authority and ensure that all components agree to use it. Both solutions are problematic: global user lists are extremely difficult to manage, and a globalized security system may be overwhelming, limiting the ability of users to create subgroups or employ previous methods (such as UNIX or DNS authentication).

In the distant university case, the construction of the cooperative distributed database should not be taken to mean that within the database all records are equal from a security perspective. In fact, many cases could arise in which users could agree to share resources, say, in a pairwise way within the greater structure. This would involve selecting certain resources for use in the derived system and ensuring that the security system works for the users and systems involved: a local procedure that should have no global side effects. This process again creates a data landscape in which a subgroup of users has access to a subset of possible resources and data records. This use case creates a record-specific grid-within-grid abstraction.

F. Implementation

The creation of a semi-autonomous region starts with the well-known process of matchmaking, a process analogous to selecting rows from a database table. The process is augmented because it results in a new environment, one in which other users can participate. Our simplified implementation begins with a small-scale user process: the selection of the requested resources. Resources may be organized into clusters reflecting network topology or geographical distance to enable certain functionality described below. This information is organized into a storage map and may be stored for later use.
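The row-selection analogy can be made concrete with a small sketch. Resources are treated as rows, a requirement predicate plays the role of a `WHERE` clause, and the matches are grouped by cluster into a storage map that is serialized for later reuse. The resource attributes (`host`, `cluster`, `free_gb`), the function name `make_storage_map`, and the JSON persistence are all assumptions for illustration. They are not the GEMS data model.

```python
import json
from collections import defaultdict

# Hypothetical resource table: one row per storage server.
resources = [
    {"host": "ccl01.nd.edu", "cluster": "nd-ccl", "free_gb": 120},
    {"host": "ccl02.nd.edu", "cluster": "nd-ccl", "free_gb": 15},
    {"host": "bio03.nd.edu", "cluster": "nd-bio", "free_gb": 300},
    {"host": "node1.anl.gov", "cluster": "anl", "free_gb": 80},
]


def make_storage_map(resources, requirement):
    """Select rows satisfying `requirement` and group the hosts by cluster."""
    storage_map = defaultdict(list)
    for r in resources:
        if requirement(r):
            storage_map[r["cluster"]].append(r["host"])
    return dict(storage_map)


smap = make_storage_map(resources, lambda r: r["free_gb"] >= 50)
saved = json.dumps(smap, sort_keys=True)  # persisted so the map can be reused later
print(smap)
```

Grouping by cluster keeps the topology information that later placement decisions may need, such as spreading replicas across clusters.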

The creation of a data landscape begins when a user combines a data record containing data files and metadata tags with a storage map and ACL, resulting in a config. A typical config combines the input and output files of a simulator program with metadata such as the options passed to the program. This config is instantiated, registered with the greater system, and entrusted to its control.
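The composition of a config can be sketched with Python dataclasses. A data record (files plus metadata tags) is bundled with a storage map and an ACL, then handed to a registry. `DataRecord`, `Config`, `Controller.register`, and the sample file names are hypothetical names chosen for illustration. They are not the GEMS API.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class DataRecord:
    files: list      # data files, e.g. simulator inputs and outputs
    metadata: dict   # metadata tags, e.g. the options passed to the program


@dataclass
class Config:
    record: DataRecord
    storage_map: dict  # cluster name -> list of hosts
    acl: dict          # ACL entry -> rights string


class Controller:
    """Hypothetical stand-in for the greater system that takes control of configs."""

    def __init__(self):
        self._ids = count(1)
        self.configs = {}

    def register(self, config):
        """Register an instantiated config and return its new identifier."""
        config_id = next(self._ids)
        self.configs[config_id] = config
        return config_id


record = DataRecord(
    files=["run7/input.conf", "run7/trajectory.out"],
    metadata={"program": "simulator", "timestep_fs": "1.0"},
)
cfg = Config(record, {"nd-ccl": ["ccl01.nd.edu"]}, {"unix:alice": "rwl"})
controller = Controller()
config_id = controller.register(cfg)
```

Keeping the storage map and ACL inside the config means that anything holding the config also knows where the data should live and who may touch it.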

The storage map defines the derived grid resources upon which the data files will reside, defining the data landscape in terms of system-level security and data movement performance. The ACL is propagated to these storage sites and applied to the appropriate files. While this approach may be performed with existing tools, an important challenge remains: how does information from the config propagate up to the greater system? Users must be able to administer their data at the global level. For example, they must be able to delete a config and its underlying widely replicated files. To do this they must authenticate to the greater system. The ability to provide meta-grid control of diverse resources constitutes our solution to the grid integration problem.
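The downward half of this flow, installing the config's ACL at every site in its storage map, can be sketched as a simple fan-out. Here `site_acls` is a hypothetical in-memory model of each site's local ACL table (host to directory to ACL). The function name `propagate_acl` and the record directory path are likewise assumptions.

```python
def propagate_acl(storage_map, acl, site_acls, record_dir):
    """Install a copy of the config's ACL on record_dir at every host in the storage map.

    site_acls models each site's local ACL table: host -> {directory: acl}.
    """
    for hosts in storage_map.values():
        for host in hosts:
            site_acls.setdefault(host, {})[record_dir] = dict(acl)
    return site_acls


storage_map = {"nd-ccl": ["ccl01.nd.edu", "ccl02.nd.edu"], "anl": ["node1.anl.gov"]}
site_acls = propagate_acl(storage_map, {"unix:alice": "rwl"}, {}, "/gems/config42")
```

Propagation is the easy half. The sketch does not address how a user later proves authority over the config at the global level, which is the open challenge noted above.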

G. Rendition: An Enabling Technology

The process by which a user performs authenticated operations within the config data landscape is called the rendition protocol [3]. This protocol applies in systems that implement the grid controller model, in which a controller manages the global policy but is agnostic with respect to system specifics such as a password list. In this case, the system may interact in an authenticated manner only with underlying physical storage sites that implement direct authentication protocols. Upon this fabric we intend to build an indirect protocol that enables the controller to authenticate a channel for a certain user with respect to a config.

Thus, we reiterate our data-driven focus: operations in the system change the data landscape. Critical operations at the controller level must be authenticated through a storage site for a config, because only there is the ACL enforceable and the data stored and protected. The controller model explicitly allows users to use old protocols to interact with the underlying system, relying on direct authentication methods.

The rendition protocol allows users to obtain an initially anonymous channel to the controller. The user may then request access to modify a config, at which point the controller creates a challenge that must be satisfied before the operation is executed. This challenge typically takes the form of a file operation with respect to the storage site and ACL in question, such as the creation of a numbered marker file in a directory from which all users are restricted except those the ACL authorizes for the operation. Once the file has been created, the controller is notified, inspects whether the challenge is satisfied, carries out the operation, and returns the appropriate notice.
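The challenge flow can be simulated end to end in a toy model. The controller holds no credentials and only checks whether a marker file appeared in an ACL-restricted directory. The storage site enforces that directory's ACL using its own local identities. The class and method names, the directory path, and the use of a random hex token in place of a sequential number (to make challenges hard to guess) are assumptions for illustration and do not reproduce the protocol as specified in [3].

```python
import secrets


class StorageSite:
    """Toy storage site that enforces per-directory write ACLs with its own local identities."""

    def __init__(self):
        self.acls = {}     # directory -> set of local identities allowed to create files
        self.files = set()

    def create(self, identity, path):
        directory = path.rsplit("/", 1)[0]
        if identity not in self.acls.get(directory, set()):
            raise PermissionError(f"{identity} may not write in {directory}")
        self.files.add(path)

    def exists(self, path):
        return path in self.files


class Controller:
    """Toy controller: knows no passwords, only which site and directory to check."""

    def __init__(self, site, challenge_dir):
        self.site = site
        self.challenge_dir = challenge_dir
        self.pending = {}  # challenge path -> requested operation

    def request(self, operation):
        """Handle an anonymous request by issuing a marker-file challenge."""
        path = f"{self.challenge_dir}/challenge.{secrets.token_hex(8)}"
        self.pending[path] = operation
        return path

    def complete(self, path):
        """Called when the user reports the challenge done; verify it at the site."""
        operation = self.pending.pop(path, None)  # each challenge can be used once
        if operation is None or not self.site.exists(path):
            return "denied"
        return f"performed {operation}"


site = StorageSite()
site.acls["/gems/config42/auth"] = {"alice"}  # derived from the config's ACL
controller = Controller(site, "/gems/config42/auth")

path = controller.request("delete config42")
site.create("alice", path)          # alice authenticates directly to the site
result = controller.complete(path)  # "performed delete config42"
```

A real deployment would also need to bind the challenge to the requesting channel, remove the marker afterward, and guard against races. This sketch omits those details.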

This protocol may be thought of as similar to other indirect protocols that delegate authentication to an external authority, but rendition offers a key advantage: the simplicity of the scheme allows ordinary users to delegate authentication to the whole storage fabric, a diverse array of heterogeneous sites, each of which may implement a subset of the available physical authentication protocols. This delegation of security responsibility enables users to make use of local system knowledge to create ad hoc collaborative systems without global consequences.
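One consequence of delegating to a heterogeneous fabric is that the controller must choose a site at which the user can actually meet a challenge. A minimal sketch follows. It assumes the controller knows which authentication methods each site in the config's storage map implements. `pick_challenge_site` and the method labels are hypothetical.

```python
def pick_challenge_site(site_methods, user_methods):
    """Return (site, shared_methods) for the first site in the config's storage map
    that can verify at least one of the user's authentication methods, else None."""
    for site, methods in site_methods.items():
        shared = methods & user_methods
        if shared:
            return site, sorted(shared)
    return None


# Hypothetical storage map annotated with each site's local authentication methods.
site_methods = {
    "ccl01.nd.edu": {"unix", "kerberos"},
    "node1.anl.gov": {"globus", "hostname"},
}
print(pick_challenge_site(site_methods, {"hostname"}))  # ('node1.anl.gov', ['hostname'])
print(pick_challenge_site(site_methods, {"password"}))  # None
```

No site needs to support every method. A user only needs one method in common with one site that stores the config.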


[Figure: User B and User C, a controller, and storage sites spread across Domains A, B, and C, with arrows labeled Insertion, Asynchronous replication, Job submission (JOB B), and Data Access.]

Fig. 14. Simple three-domain collaboration.

As an example, consider the simple collaboration shown in Figure 14. In this case, user B inserts a data record with a config policy that allows storage and access at domain C. Collaboration and shared data administration are possible even though each user is unable to authenticate at a remote site. Moreover, a job submitted by user B running in domain C may access the data, perhaps using simple DNS-based authentication. This case reinforces the notion that data replication may be viewed as an asynchronous job pre-staging process.
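The pre-staging view can be illustrated with a toy `asyncio` sketch. Replication of user B's record to domain C starts as soon as the record is inserted, and the job in domain C waits until a local replica exists. The domain labels follow Figure 14. The function names and the single `asyncio.Event` signaling model are assumptions for illustration, not a description of how GEMS schedules transfers.

```python
import asyncio


async def replicate(ready, replicas, domain):
    """Copy the record to `domain`; the sleep stands in for a wide-area transfer."""
    await asyncio.sleep(0.01)
    replicas.add(domain)
    ready.set()


async def job(ready, replicas, domain):
    """A job in `domain` waits until a local replica exists, then reads it."""
    await ready.wait()
    if domain not in replicas:
        raise RuntimeError("replica missing")
    return f"job in {domain} reads local replica"


async def main():
    replicas = {"B"}  # user B inserted the record in domain B
    ready = asyncio.Event()
    transfer = asyncio.create_task(replicate(ready, replicas, "C"))
    result = await job(ready, replicas, "C")
    await transfer
    return result, replicas


if __name__ == "__main__":
    print(asyncio.run(main()))
```

If replication finishes before the job starts, the wait returns immediately. This is the sense in which replication acts as asynchronous pre-staging.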

The rendition-based trust chain infrastructure solves a common problem in trust delegation: trust delegates must not receive too much information from the delegator. Passwords and other credentials simply cannot be passed to a third party. Likewise, the authentication system cannot be globally affected by ordinary users. Yet users are able to delegate access to records via an ACL, and the config data structure provides a physical authentication test.

VII. CONCLUSION

Observations and design features in this paper are based on experiences with the GEMS system, a replica management system originally designed as a cooperative chemistry simulation repository. The GEMS model provides new practical solutions for active research problems such as autonomous grid control of disparate components, a flexible security model driven by user needs, and services for data access from a variety of possible clients.

GEMS enables the rapid construction of ad hoc storage grids that augment the usability of opportunistic computing systems. The workflow techniques presented here, integrated in the GEMS toolkit, improve the ability to browse intermediate simulation results and guide workflow progress.

The new contribution here is the application of GEMS concepts to general problems in distributed scientific computing, framed as grid integration and derivation problems. While a variety of tools exist to approach these problems, the ad hoc storage grid as presented here proposes a comprehensive solution packaged in a tangible, dynamic data landscape. Additionally, the collaboration concepts provide a unified methodology for previously fragmented archetypes of matchmaking and access control, providing a platform upon which solutions may be built.

ACKNOWLEDGMENTS

This research is supported by the Office of Advanced Scientific Computing Research, Office of Science, U.S. Dept. of Energy under Contract DE-AC02-06CH11357. Work is also supported by DOE with agreement number DE-FC02-06ER25777.

REFERENCES

[1] Douglas Thain, Sander Klous, Justin Wozniak, Paul Brenner, Aaron Striegel, and Jesus Izaguirre, “Separating abstractions from resources in a tactical storage system,” in Proc. Supercomputing, 2005.

[2] Justin M. Wozniak, Paul Brenner, Douglas Thain, Aaron Striegel, and Jesus A. Izaguirre, “Applying feedback control to a replica management system,” in Proc. Southeastern Symposium on System Theory, 2006.

[3] Justin M. Wozniak, Paul Brenner, Douglas Thain, Aaron Striegel, and Jesus A. Izaguirre, “Access control for a replica management database,” in Proc. Workshop on Storage Security and Survivability, 2006.

[4] Justin M. Wozniak, Paul Brenner, Douglas Thain, Aaron Striegel, and Jesus A. Izaguirre, “Making the best of a bad situation: Prioritized storage management in GEMS,” Future Generation Computer Systems, vol. 24, no. 1, 2008.

[5] Justin M. Wozniak, Paul Brenner, Douglas Thain, Aaron Striegel, and Jesus A. Izaguirre, “Generosity and gluttony in GEMS: Grid-Enabled Molecular Simulation,” in Proc. High Performance Distributed Computing, 2005.

[6] David Abramson, Jon Giddy, and Lew Kotler, “High performance parametric modeling with Nimrod/G: Killer application for the global grid,” in Proc. International Parallel and Distributed Processing Symposium, 2000.

[7] Paul Brenner, Justin M. Wozniak, Douglas Thain, Aaron Striegel, Jeff W. Peng, and Jesus A. Izaguirre, “Biomolecular path sampling enabled by processing in network storage,” in Proc. Workshop on High Performance Computational Biology, 2007.

[8] Ian Foster, Jens Voeckler, Michael Wilde, and Yong Zhao, “Chimera: A virtual data system for representing, querying, and automating data derivation,” in Proc. Scientific and Statistical Database Management, 2002.


[9] Arcot Rajasekar, Michael Wan, Reagan Moore, George Kremenek, and Tom Guptill, “Data grids, collections and grid bricks,” in Proc. Mass Storage Systems and Technologies, 2003.

[10] William Allcock, John Bresnahan, Rajkumar Kettimuthu, Michael Link, Catalin Dumitrescu, Ioan Raicu, and Ian Foster, “The Globus striped GridFTP framework and server,” in Proc. Supercomputing, 2005.

[11] Rajesh Raman, Miron Livny, and Marvin H. Solomon, “Matchmaking: Distributed resource management for high throughput computing,” in Proc. High Performance Distributed Computing, 1998.

[12] Tamar Schlick, Molecular Modeling and Simulation: An Interdisciplinary Guide, Springer-Verlag, New York, NY, 2002.

[13] X. Zhou, Y. Jiang, K. Kramer, H. Ziock, and S. Rasenmassen, “Hyperdynamics methods for entropic systems: Time-space compression and pair correlation function approximation,” Physical Review E, vol. 74, 2006.

[14] Paul Brenner, Justin M. Wozniak, Douglas Thain, Aaron Striegel, Jeff W. Peng, and Jesus A. Izaguirre, “Biomolecular committor probability calculation enabled by processing in network storage,” Parallel Computing, vol. 34, no. 11, 2008.

[15] Von Welch, Ian Foster, Carl Kesselman, Olle Mulmo, Laura Pearlman, Steven Tuecke, Jarek Gawor, Sam Meder, and Frank Siebenlist, “X.509 proxy certificates for dynamic delegation,” in Proc. PKI R&D Workshop, 2004.

NOTICE

The submitted manuscript has been created by UChicago Argonne, LLC, Operator of Argonne National Laboratory ("Argonne"). Argonne, a U.S. Department of Energy Office of Science laboratory, is operated under Contract No. DE-AC02-06CH11357. The U.S. Government retains for itself, and others acting on its behalf, a paid-up nonexclusive, irrevocable worldwide license in said article to reproduce, prepare derivative works, distribute copies to the public, and perform publicly and display publicly, by or on behalf of the Government.