U-Compare: A modular NLP workflow construction and evaluation system

Y. Kano, M. Miwa, K. B. Cohen, L. E. Hunter, S. Ananiadou, J. Tsujii

During the development of natural language processing (NLP) applications, developers are often required to repeatedly perform certain tasks. Among these tasks, workflow comparison and evaluation are two of the most crucial because they help to discover the nature of NLP problems, which is important from both scientific and engineering perspectives. Although these tasks can potentially be automated, developers tend to perform them manually, repeatedly writing similar pieces of code. We developed tools to largely automate these subtasks. Promoting component reuse is another way to further increase NLP development efficiency. Building on the interoperability-enhancing Unstructured Information Management Architecture (UIMA) framework, we have collected a large library of interoperable resources, developed several workflow creation utilities, added a customizable comparison and evaluation system, and built visualization utilities. These tools are modularly designed to accommodate various use cases and potential reuse scenarios. By integrating all these features into our U-Compare system, we hope to increase NLP developer efficiency. Simple to use and directly runnable from a web browser, U-Compare has already found uses in a range of applications.

©Copyright 2011 by International Business Machines Corporation. Copying in printed form for private use is permitted without payment of royalty provided that (1) each reproduction is done without alteration and (2) the Journal reference and IBM copyright notice are included on the first page. The title and abstract, but no other portions, of this paper may be copied by any means or distributed royalty free without further permission by computer-based and other information-service systems. Permission to republish any other portion of this paper must be obtained from the Editor.

Y. KANO ET AL., IBM J. RES. & DEV. VOL. 55 NO. 3 PAPER 11 MAY/JUNE 2011. 0018-8646/11/$5.00 © 2011 IBM. Digital Object Identifier: 10.1147/JRD.2011.2105691

Introduction

Natural language processing (NLP) tasks are generally composite in nature, and therefore, developers often need to combine multiple preexisting components to build a complete NLP system. Components solve particular NLP subtasks, such as sentence splitting or part-of-speech (POS) tagging. Individually, these components are of relatively little use, and it is only through their combination into workflows that they achieve any measure of utility.

There are many systems that support the construction of such workflows, for example, Taverna [1], Galaxy [2], Kepler [3], General Architecture for Text Engineering (GATE) [4], and Unstructured Information Management Architecture (UIMA) [5]. However, compliance with these frameworks does not necessarily guarantee interoperability of components. Additionally, they generally require knowledge of programming and a significant investment of time on the part of users to construct workflows.

Of these systems, GATE and UIMA have been specifically designed for NLP and thus will be of most interest in this paper.

GATE provides many features to developers but is basically intended as an integrated development environment for programmers (i.e., Eclipse for NLP), whereas UIMA is intended to be more of a framework. A key difference between the two is that UIMA is component and workflow oriented, whereas GATE aims at directly assisting programming tasks. Another significant difference between the two is the data-type hierarchy present in UIMA but absent in GATE. Because of these factors, we selected UIMA as the basis for the system presented in this paper.

UIMA is a generic framework widely used in the NLP community to improve the interoperability of components. It defines, among other things, a standard workflow metadata format. UIMA was originally developed by IBM [5] and is currently an open source project at the Apache Software Foundation [6], whose specification is defined as an open international standard by the Organization for the Advancement of Structured Information Standards (OASIS) [7]. Many developers provide UIMA resources, some of which include the BioNLP UIMA Component Repository [8], the JULIE lab UIMA component repository (JCoRe) by the Jena University Language and Information Engineering Laboratory (JULIELab) [9], Open Health NLP [10] (Mayo Clinic and IBM), and the UIMA-fr consortium [11] (University of Nantes). UIMA is also used commercially by, for example, TEMIS and Thomson Reuters, in addition to its original developer, IBM.

Despite all it offers, the UIMA framework is only intended to provide an abstract-level formal framework for interoperability. It leaves the implementation of its concrete structure to third-party developers, with differences in concrete implementations potentially leading to incompatibilities between tools. Our belief is that the abstract level of interoperability offered by UIMA is not sufficient, and where possible, users also need to be provided with more concrete interoperability standards. More concrete standards would usually place a compliance burden on users, but we believe this burden can be largely relieved through the use of automated NLP tools.

Our automation system, U-Compare, has been designed in a modular way compliant with the UIMA framework and allows users to reuse or customize any of its specific modules independently. The U-Compare system offers far more than just an automated workflow construction tool. An analysis of workflow results, particularly comparison and evaluation, is crucial to improve NLP systems by discovering what is wrong with particular workflows. In this paper, we describe a virtual workflow architecture that calculates possible combinations of components and runs the combinations as virtual workflows within a single UIMA workflow. This architecture allows users to compare existing UIMA components without modification. In addition to the comparison statistics, we provide visualizations of comparison results for instance-level analysis.

U-Compare is an international joint initiative by the University of Tokyo, the National Centre for Text Mining, and the University of Colorado. The whole system is available from the U-Compare website [12] under an open source Lesser General Public License.

In this paper, we begin by briefly explaining the UIMA framework. We then describe the virtual workflow architecture for comparison and evaluation. Then, we describe the U-Compare platform, which is built on interoperability and includes an easy workflow creation graphical user interface (GUI) that allows users to create a workflow in a drag-and-drop manner. We conclude that U-Compare offers a range of benefits to NLP application and component developers.

Unstructured Information Management Architecture

UIMA is an open framework specified as an international standard by OASIS. Apache UIMA provides a reference implementation as an open source project, with a pure Java application programming interface (API) and a C++ development kit. UIMA itself is intended to be purely an interoperability framework for all types of unstructured content such as text, audio, and video. Therefore, it is not intended to provide specific tools or type system definitions to aid specific tasks. In this section, we briefly introduce UIMA, focusing on its applications to NLP.

Data structure and data type definitions

The UIMA framework uses the "standoff annotation" style. In this style, the underlying raw text of a document is generally kept unchanged during analysis, and the results of processing the text are added as annotations with references to their positions in the raw text. This contrasts with the in-line annotation style [e.g., Extensible Markup Language (XML), Hypertext Markup Language (HTML), and Standard Generalized Markup Language (SGML)], which embeds additional information (tags) within the raw text. The basic data structure in UIMA is the Common Analysis Structure (CAS), which holds both the raw text and a set of such standoff annotations. A CAS can be saved to a file or transmitted in the XML metadata interchange (XMI) format.

Every annotation should have an explicitly defined data type. Data types should be defined in a type system as part of a hierarchy of other data types. Apache UIMA provides definitions for a range of built-in primitive data types, but developers usually need to define more complete type systems based on their individual needs. In the built-in Apache UIMA type system, the top-level type is referred to as TOP. Below this, in the type hierarchy, we find the primitive types such as int, float, String, Annotation, and FSArray (an array of any annotations). (Note that in this paper, we represent type names and feature names in italics.) Types can have any number of "features," each of which holds a primitive value or a reference to another annotation instance. For example, the basic Annotation type has "begin" and "end" integer-valued features that associate instances with positions in the raw text.
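The standoff style described above can be illustrated with a small sketch. This is not the Apache UIMA API; `SimpleCas`, `Annotation`, and the type names used here are hypothetical stand-ins, chosen only to show how annotations reference positions in an unchanged raw text through begin and end offsets.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Annotation:
    """A standoff annotation: a typed span that points into the raw text."""
    type_name: str  # illustrative type name such as "Sentence" or "Token"
    begin: int      # offset of the first character covered
    end: int        # offset one past the last character covered


@dataclass
class SimpleCas:
    """Toy stand-in for a CAS: raw text plus a list of standoff annotations."""
    text: str
    annotations: list = field(default_factory=list)

    def add(self, type_name, begin, end):
        ann = Annotation(type_name, begin, end)
        self.annotations.append(ann)
        return ann

    def covered_text(self, ann):
        # The raw text is never modified; annotations only reference it.
        return self.text[ann.begin:ann.end]

    def select(self, type_name):
        return [a for a in self.annotations if a.type_name == type_name]


cas = SimpleCas("UIMA uses standoff annotation.")
cas.add("Sentence", 0, len(cas.text))
cas.add("Token", 0, 4)
cas.add("Token", 5, 9)
tokens = [cas.covered_text(a) for a in cas.select("Token")]
```

Because every annotation is stored beside the text rather than inside it, several components can add overlapping spans without interfering with one another.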

Components and workflows

A UIMA component is the basic processing unit in UIMA. Each UIMA component receives and updates the CAS. Component metadata descriptions should specify their input and output capabilities, listing the types of annotations they take as input and the types of annotations that they may produce as output. CASs are generated by a special type of UIMA component known as a "collection reader" and are then processed by other components known as "analysis engines" (AEs). The UIMA framework provides the ability for components to be deployed either locally or remotely as simple object access protocol (SOAP) and Vinci web services. Both locally and remotely deployed components can be freely combined in UIMA workflows.

AEs may be either primitive or aggregate. Aggregate AEs contain a list of references to "child" AEs. In the aggregate AEs, flow controllers determine how to apply these child AEs to incoming CASs. Flow controllers can be implemented for any processing order, for example, skipping, looping, and conditionals.

A UIMA workflow is called a collection processing engine (CPE). CPEs support error handling: they can automatically retry failed components and may terminate execution once errors exceed a specified threshold.
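The roles described in this section can be sketched in a few lines. The following is a conceptual illustration only, not UIMA code: the function names, the dictionary used as a CAS, and the `max_retries` and `max_errors` parameters are all assumptions made for the example, and the aggregate uses the simplest possible flow (a fixed order).

```python
def collection_reader(documents):
    """Yield one CAS-like dict per input document."""
    for text in documents:
        yield {"text": text, "annotations": []}


def whitespace_tokenizer(cas):
    """Primitive analysis engine: adds Token spans to the CAS."""
    pos = 0
    for word in cas["text"].split():
        begin = cas["text"].index(word, pos)
        end = begin + len(word)
        cas["annotations"].append(("Token", begin, end))
        pos = end


def make_aggregate(children):
    """Aggregate engine with a trivial flow: run the children in a fixed order."""
    def run(cas):
        for child in children:
            child(cas)
    return run


def run_workflow(documents, engine, max_retries=1, max_errors=2):
    """CPE-like driver: retry a failed CAS, stop once errors exceed max_errors."""
    processed, errors = [], 0
    for cas in collection_reader(documents):
        for _attempt in range(max_retries + 1):
            try:
                engine(cas)
                processed.append(cas)
                break
            except Exception:
                errors += 1
                if errors > max_errors:
                    raise RuntimeError("error threshold exceeded")
                cas["annotations"].clear()  # discard partial output before retrying
    return processed, errors


processed, errors = run_workflow(["a bc", "def"], make_aggregate([whitespace_tokenizer]))
```

A real flow controller could replace the fixed loop in `make_aggregate` with skipping, looping, or conditional logic.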

Virtual workflow and comparison generator

It is desirable that a UIMA comparison architecture be able to accept any valid UIMA component without the need for modification. It should perform comparisons within the UIMA framework, and it should be as simple to use as possible; ideally, everything should be automatic, except specifying which components and which outputs to compare.

Assume that we want to compare UIMA sentence detectors A and B, which are type system compatible (details of which are described later). The most obvious strategy to compare them would be to create a serial workflow that runs A, then B, and then an additional component to compare their outputs. However, this strategy has a major problem: There is no way to distinguish which Sentence annotations were created by which sentence detector. This could be fixed by adding a new componentID feature to the Sentence-type definition in order to identify which component created each annotation. However, this would require that a common type system be used and would likely require modification to the code of the sentence detector components.

Additionally, if we had a tokenizer component C that depended on the results of sentence detection, even the addition of a componentID feature would not be sufficient. The workflow is now {A, B} → C, where {} means a comparison flow and the component C could use the output of either A or B. A significant problem is encountered if we want to compare the performance of A or B with regard to how well C is able to perform based on their output. Not only is this a problem for comparison, but it also affects normal serial workflows. Some components create multiple types of annotations (e.g., Sentence and Token). If only the Sentence type was needed and a specific tokenizer component was added, we would end up with two sets of Token annotations. This may cause problems for components later in the workflow, as they will receive duplicate input annotations. A common cause of this problem is that many input corpora may have certain types already annotated. For example, if an input corpus already has Sentence annotations, but a user wants to test a sentence detector–tokenizer pair, then the tokenizer will be presented with not only Sentence annotations from the sentence detector but also those already present in the input corpus.

An alternative to this first naive solution for comparison would be to expand the original workflow into separate workflows for each possible combination of components. This would avoid the problem of subsequent components in a workflow being presented with duplicate sets of annotations and would require no modification to the components. Unfortunately, this solution is neither efficient nor appropriate for the following reasons. First, although each of the components processes the same input text, dividing a workflow into individual workflows would require the creation of separate UIMA CASs for each workflow. Second, any shared initial segment of the original workflow before the comparison components would need to be run once in each created workflow, even though it would be running on the same input data every time. Finally, the comparison part somehow needs to merge the results of all the created workflows together so that it can perform comparisons. This would require operations not easily possible within the UIMA framework. The first two points make such an approach highly inefficient computationally, and the final point causes problems for compatibility with the UIMA framework. Therefore, we also reject this approach.

Another possible solution would be to use the UIMA subjects of analysis (Sofa) feature, which allows multiple sets of annotations to be held separately within a single CAS. This would allow multiple sets of annotations produced by the compared components to be held and then passed to subsequent components in the workflow as needed via a custom UIMA flow controller. Unfortunately, this solution also has several problems. First, each Sofa independently holds its document text, whereas we need to share the same document text between all components. This makes this solution slightly inefficient, particularly when dealing with large documents. Second, components would need to be modified to read and write a specific Sofa name, with each combination of components having a unique name. UIMA offers Sofa mapping, which could be used to avoid this problem, but Sofa mapping is not available on remotely deployed AEs. Finally, only some components support multiple Sofas in a single CAS, so if we were to adopt this solution, we would not be able to support all valid UIMA components. For these reasons, we also reject this solution.

The solution that we eventually adopted is based on a virtual workflow architecture that avoids all of the issues encountered by the previously suggested solutions. The virtual workflow architecture that we propose does not force components to use a specific type system or require existing components to be modified. This solution does require that the input/output (I/O) capabilities of the components be properly defined. The reasons for this are described below.

Annotation group FS

The basic mechanism of the virtual workflow architecture is that all collection reader and AE components in a workflow have one or more corresponding AnnotationGroup feature structures (FSs). Conceptually, the AnnotationGroup type is used to store the outputs of that component in order to keep them separate from the outputs of the same type generated elsewhere and to specify what inputs were used to generate them. The inputs are specified by intercomponent dependences pointing to the AnnotationGroup instances from which the inputs to that component were taken. Figure 1(a) shows an example diagram of these intercomponent dependences.

Figure 1. (a) Example of interdependent AnnotationGroup instances. (b) Conceptual diagrams of a PPI system workflow showing component dependences (from left to right: general setting, basic pattern, complex tool pattern, and branch flow pattern). (CAS: Common Analysis Structure; PPI: protein–protein interaction; POS: part of speech.)

As shown in the figure, two or more AnnotationGroup instances will be created for a single AE when there are two or more possible combinations of output capabilities that satisfy the input capabilities of that AE. There is no duplication of annotations by storing outputs in this way, as each AnnotationGroup corresponds to a unique set of prior AnnotationGroup instances.

The set of AnnotationGroup instances present in a CAS forms a directed acyclic graph of intercomponent dependences. The path of dependences from any source vertex to any terminal vertex represents a single virtual workflow. The result of a virtual workflow can be obtained by taking the union of annotations present in the AnnotationGroup instances along the corresponding path. It should be noted that we assume that once annotations are added to the CAS in a virtual workflow, they are not removed in other components that correspond to other vertices; this assumption is safe because removal of annotations is extremely uncommon in UIMA.

We provide a special aggregate component called a U-Compare parallel component. This parallel component is used to explicitly specify a set of components that do not depend on each other and allows predefined I/O capabilities of components to be ignored when required.
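The dependence graph of AnnotationGroup instances can be sketched as follows. This is a simplified model, not the U-Compare implementation: the `AnnotationGroup` class, its `label`, `annotations`, and `inputs` fields, and the helper names are hypothetical. The result of each virtual workflow is taken as the union of annotations over a terminal group and all of its ancestors, which for a simple chain is exactly the path described above.

```python
from dataclasses import dataclass, field


@dataclass
class AnnotationGroup:
    label: str                                      # which component (and inputs) produced it
    annotations: set = field(default_factory=set)   # outputs stored in this group
    inputs: list = field(default_factory=list)      # groups the outputs were derived from


def ancestors_and_self(group):
    """Collect a group and every group it depends on, directly or indirectly."""
    seen, stack, ordered = set(), [group], []
    while stack:
        current = stack.pop()
        if id(current) in seen:
            continue
        seen.add(id(current))
        ordered.append(current)
        stack.extend(current.inputs)
    return ordered


def virtual_workflow_results(groups):
    """Map each terminal group to the union of annotations along its dependences."""
    depended_on = {id(parent) for g in groups for parent in g.inputs}
    results = {}
    for g in groups:
        if id(g) not in depended_on:  # terminal vertex: nothing depends on it
            merged = set()
            for member in ancestors_and_self(g):
                merged |= member.annotations
            results[g.label] = merged
    return results


# Workflow {A, B} -> C: the tokenizer C runs once per sentence detector.
reader = AnnotationGroup("reader")
a = AnnotationGroup("A", {("Sentence", 0, 10)}, [reader])
b = AnnotationGroup("B", {("Sentence", 0, 4), ("Sentence", 5, 10)}, [reader])
c_a = AnnotationGroup("C|A", {("Token", 0, 4)}, [a])
c_b = AnnotationGroup("C|B", {("Token", 0, 4), ("Token", 5, 10)}, [b])
results = virtual_workflow_results([reader, a, b, c_a, c_b])
```

In this example the two terminal groups correspond to the two virtual workflows reader → A → C and reader → B → C, and neither result contains the other detector's Sentence annotations.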

Type system for comparisons and component dependence descriptions

The I/O capabilities of the UIMA components, which are represented as a list of types, are basically the only machine-readable information describing the behaviors of the UIMA components. Thus, the definition of a type system also influences what our system can deduce about component behavior. If I/O capabilities are properly defined, our system can determine which components perform comparable tasks. For example, two different components that both have Token-type outputs are valid targets for comparison.

Detecting components that can be compared is complex. Figure 1(b) conceptually shows a protein–protein interaction (PPI) extraction system workflow. A PPI extraction system detects expressions describing PPIs from biomedical texts. In the example, the PPI extractor requires as input the outputs of a sentence detector, a tokenizer, a POS tagger, a protein named entity recognizer (NER), and a syntactic parser. If we include two or more components for some of the required tasks in the workflow (e.g., two sentence detectors and three POS taggers), then any combination of these tools will form a virtual workflow (e.g., giving 2 × 3 = 6 virtual workflows). Matters are complicated by the fact that a single tool may perform multiple tasks in a single workflow [e.g., in Figure 1(b), the GENIA Tagger performs tokenization, POS tagging, and NER].

Type systems should be designed with these intercomponent dependencies in mind. For example, although both a tokenizer and a POS tagger output "tokens," they represent semantically different objects that may appear together in a single workflow. Accordingly, the U-Compare type system defines both Token and POSToken, which allows us to better compare the outputs of components producing these types of annotations.
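The counting argument above (two sentence detectors and three POS taggers giving six virtual workflows) and the idea of detecting comparable components from their declared output types can be sketched briefly. The component names and helper functions below are hypothetical, and the sketch deliberately ignores tools that perform several tasks at once.

```python
from itertools import product


def enumerate_virtual_workflows(candidates_by_task):
    """Return one task-to-component assignment per combination of candidates."""
    tasks = list(candidates_by_task)
    pools = [candidates_by_task[task] for task in tasks]
    return [dict(zip(tasks, combo)) for combo in product(*pools)]


def comparable_by_output(output_types_by_component):
    """Group components by declared output type; keep types with 2+ producers."""
    producers = {}
    for name, output_types in output_types_by_component.items():
        for type_name in output_types:
            producers.setdefault(type_name, []).append(name)
    return {t: names for t, names in producers.items() if len(names) > 1}


candidates = {
    "sentence_detector": ["SentenceDetectorA", "SentenceDetectorB"],
    "pos_tagger": ["TaggerX", "TaggerY", "TaggerZ"],
}
workflows = enumerate_virtual_workflows(candidates)

declared_outputs = {
    "TokenizerA": ["Token"],
    "TokenizerB": ["Token"],
    "TaggerX": ["POSToken"],
}
comparable = comparable_by_output(declared_outputs)
```

Keeping Token and POSToken as distinct types is what lets the second helper separate tokenizers from POS taggers.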

Virtual workflow processing

Using the virtual workflow architecture, a comparison can be run within a single UIMA workflow. Figure 2 is a diagram of a comparison workflow with UIMA components shown as blocks. Assuming that components have had their I/O capabilities specified, any UIMA component can be included in such a workflow, the set of virtual workflows is automatically generated, and their results are compared. In this section, we describe how virtual workflows are processed in a step-by-step manner, and in the following section, we look at how they are compared.

Figure 2. Block diagram of a comparison workflow. (AE: analysis engine.)

The first component in a comparison workflow must be a user-specified collection reader, as is the case with all UIMA CPE workflows. The collection reader runs as it normally does. The second component should be an annotation grouper, with output capabilities matching those of the collection reader. The role of the annotation grouper is to produce an AnnotationGroup instance based on the outputs of the collection reader.

Following the annotation grouper, we may have any number of parallel aggregates, each of which is an aggregate component with a special flow controller. Figure 3 shows a block diagram of the processes inside a parallel component. The parallel component detects those previously created AnnotationGroup instances that satisfy the input capabilities of each of its child components and creates a new CAS containing the required inputs for that component based on the contents of the matching AnnotationGroup. It then runs the child on this newly created CAS and copies the results of this child process back into the original CAS, grouped into a new AnnotationGroup instance that links back to the original AnnotationGroup from which the inputs were taken. This process is repeated for all possible input AnnotationGroup instances matching the child components of each of the parallel aggregates. We will step through it in detail in the remainder of this section.

Figure 3. Block diagram of parallel component processing. (CAS: Common Analysis Structure; FSs: feature structures.)

The parallel aggregate itself is built as a standard UIMA aggregate component that has a ComparableAggregateFlowController as its flow controller and a ComparableAnalysisEngineAdapter component as a child. When the parallel aggregate component receives a CAS, it selects the first of its child components and stores its I/O capabilities as FSs in the CAS. It then passes the CAS to the ComparableAnalysisEngineAdapter, which will use the I/O information to determine which AnnotationGroup instances in the CAS are possible inputs to that component. Storing information in the CAS is the only way a parent component can communicate with a child component within the UIMA framework.

The ComparableAnalysisEngineAdapter then dynamically calculates which AnnotationGroup instances in the CAS satisfy the input capabilities of the selected child component. This calculation is based on the AnnotationGroup instances produced along the virtual workflow up to that AnnotationGroup, which can be obtained by tracing its ancestors.

Here, "type A satisfies type B" means that type A is equal to, or a descendant of, type B in the type-system hierarchy. When the input capabilities of the selected component include multiple types, it is sometimes possible to satisfy these capabilities not only by the output capability of a single AnnotationGroup but also by a combination of output capability sets taken from several AnnotationGroup instances. The ComparableAnalysisEngineAdapter calculates all such possible combinations. It then creates a new CAS, and based on one calculated combination of AnnotationGroup instances, it populates the new CAS with a set of annotation instances from those AnnotationGroup instances in the original CAS. By creating a new CAS and copying only the required annotations, the amount of data stored in the CAS can be reduced, which increases performance when transmitting the CAS to remote services.

The UIMA framework then calls the newCasProduced method of the flow controller to determine where to run the newly produced CAS. Our flow controller directs it to run the new CAS on the previously selected child component whose I/O capabilities had been passed to the ComparableAnalysisEngineAdapter.

The child component runs as if it were in a simple serial pipeline, using the newly created CAS and the copied annotations as its input. After running the child component, the flow controller specifies that the ComparableAnalysisEngineAdapter should be run again. This time, the ComparableAnalysisEngineAdapter copies any outputs of the recently run child component satisfying its specified output capabilities back into the original CAS as part of a new AnnotationGroup. When copying these annotations, links to annotations that existed in the original CAS are relinked to the correct annotations in the original CAS. Once this is completed, the newly created CAS is discarded.

The ComparableAnalysisEngineAdapter then proceeds to another combination of AnnotationGroup instances satisfying the inputs of the selected child component, creates a new CAS, and repeats the aforementioned process. This is repeated with all combinations of AnnotationGroup instances calculated by the ComparableAnalysisEngineAdapter to meet the component's input capabilities. The flow controller is then notified and selects another child component's I/O capabilities to pass to the ComparableAnalysisEngineAdapter. This process also repeats until the flow controller has passed on the I/O capabilities of all of its child components.
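The "satisfies" relation and the calculation of input combinations can be sketched as below. This is a minimal illustration under stated assumptions, not the ComparableAnalysisEngineAdapter itself: the `PARENT` hierarchy (including the `NamedEntity` and `Protein` types), the group names, and both function names are hypothetical, and the sketch does not check that the chosen groups lie on a consistent chain of ancestors as the real adapter does.

```python
from itertools import product

# Hypothetical type hierarchy: child type -> parent type.
PARENT = {
    "Annotation": "TOP",
    "Sentence": "Annotation",
    "Token": "Annotation",
    "NamedEntity": "Annotation",
    "Protein": "NamedEntity",
}


def satisfies(type_a, type_b):
    """True if type_a equals type_b or is a descendant of it in the hierarchy."""
    current = type_a
    while current is not None:
        if current == type_b:
            return True
        current = PARENT.get(current)
    return False


def input_combinations(required_types, output_types_by_group):
    """List every distinct set of groups whose outputs cover all required types."""
    providers = []
    for required in required_types:
        names = [
            name
            for name, outputs in output_types_by_group.items()
            if any(satisfies(output, required) for output in outputs)
        ]
        if not names:
            return []  # some required input cannot be satisfied at all
        providers.append(names)
    distinct = {frozenset(choice) for choice in product(*providers)}
    return sorted(sorted(combo) for combo in distinct)


groups = {
    "SentA": {"Sentence"},
    "SentB": {"Sentence"},
    "NER": {"Protein"},
}
combos = input_combinations(["Sentence", "NamedEntity"], groups)
```

Each resulting combination corresponds to one temporary CAS that would be populated and handed to the child component.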

Pluggable evaluation system

In addition to the virtual workflow architecture previously described, we need a mechanism to perform comparisons between the outputs of different virtual workflows. The problem is that there is a wide variety of comparison/evaluation metrics in NLP, even for a single type of annotation. Given a pair of annotation results to be compared, the comparison process can be generalized into the following steps.

1. Find a candidate list of annotations for each virtual workflow annotation result.

2. Compare candidate annotations one by one, deciding whether a pair of annotations is matched based on a specific metric.

3. Calculate comparison statistics.

These steps are usually manually implemented for each different comparison that is required. We propose a pluggable evaluation mechanism, where users only have to provide the aforementioned steps (or choose from our templates) and can directly plug in their own evaluation metrics as UIMA components.

By running a comparison workflow, we can obtain a set of AnnotationGroup instances that include the output types that we are interested in comparing. We require an EvaluationIterator component to be added to the end of the workflow. This is an aggregate component with an associated special flow controller that holds evaluation components as its children. These evaluation components each define an evaluation metric. The EvaluationIterator calculates which metrics can be applied for each AnnotationGroup result, based on the input capabilities of its child evaluation components. Multiple evaluation metrics may be found to be applicable.

Then, the EvaluationIterator stores pairs of these AnnotationGroup instances in a ComparisonSet-type annotation as goldAnnotationGroup and testAnnotationGroup fields. An evaluation component should calculate which pairs of annotations between TestAnnotations and GoldAnnotations are "matched" based on its own evaluation metric and store such MatchedPairs in the ComparisonSet. A visualization of this evaluation data is discussed later.
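The pluggable idea can be sketched with the matching rule passed in as a function. This is an illustrative outline, not the EvaluationIterator: annotations are reduced to `(begin, end)` tuples, the two matching rules are example metrics chosen for the sketch, and the greedy one-to-one pairing is an assumption about how matched pairs might be formed.

```python
def evaluate(gold, test, is_match):
    """Pair each test annotation with the first unused matching gold annotation."""
    unused = list(gold)
    matched_pairs = []
    for t in test:
        for g in unused:
            if is_match(g, t):
                matched_pairs.append((g, t))
                unused.remove(g)  # each gold annotation is matched at most once
                break
    precision = len(matched_pairs) / len(test) if test else 0.0
    recall = len(matched_pairs) / len(gold) if gold else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return matched_pairs, precision, recall, f_score


def exact_span(g, t):
    """Metric 1: spans must have identical begin and end offsets."""
    return g == t


def overlapping_span(g, t):
    """Metric 2: spans match if they share at least one character."""
    return g[0] < t[1] and t[0] < g[1]


gold = [(0, 4), (5, 9), (10, 18)]
test = [(0, 4), (5, 8), (20, 25)]
exact_pairs, exact_p, exact_r, exact_f = evaluate(gold, test, exact_span)
loose_pairs, loose_p, loose_r, loose_f = evaluate(gold, test, overlapping_span)
```

Swapping `exact_span` for `overlapping_span` changes the statistics without touching the pairing or scoring code, which is the separation the pluggable design aims for.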

U-Compare platform

The simplest workflow creation system would only require users to specify which components to run and in which order. Our U-Compare platform aims to make this possible. We discuss features that are required to achieve this goal and then show our system, which allows users to create workflows by simply dragging and dropping components into place.

Interoperability for automation

First, in addition to the formal interoperability of UIMA, we need semantic interoperability of components, which corresponds to them having compatible type systems. If the components in a workflow are not type system compatible, they will not be able to build on each other's results.

U-Compare defines its own type system [13] that satisfies the requirement for comparisons discussed earlier and contains most types commonly used in NLP.

Resource interoperability is also important. Resources not only should be compatible from the type-system point of view but also should be sufficiently reusable. If the I/O conditions of resources are complex, the probability of being able to combine arbitrary pairs of resources is low, making their reusability low. Thus, we claim that in order to improve reusability, resources should ideally have very simple I/O conditions, that is, simply a static list of I/O data types. During development, resources should be decomposed into as many different components as possible, with their I/O capabilities clearly specified. This would make it possible to calculate intercomponent dependences and improve reusability. U-Compare provides a component repository designed in such a manner, which is currently the world's largest open collection of type-system-compatible UIMA components.

Resources are far less useful if they work only on specific platforms or in specific environments. Since both the Apache UIMA API and the U-Compare platform are implemented in pure Java, the entire platform is portable to any environment in which Java 6 is available. However, this is not sufficient for users because manual installation and configuration of resources is required, which may cause problems due to platform misconfiguration and version mismatches. U-Compare provides a loader called UCLoader, which is a very small Java program that automatically downloads, installs, and updates the U-Compare platform and its resource files. UCLoader can be launched directly from a web browser via a link on the U-Compare website and saves users the potential problems caused by manual configurations. UCLoader caches resources on the local file system so that after it has been run once, an active Internet connection is no longer needed to run U-Compare.

The U-Compare platform provides easy access to components in the U-Compare component library. Components implemented in pure Java are locally deployed, and non-Java components are remotely deployed as web services. From a user's perspective, remotely deployed components behave identically to locally deployed components. All components included with U-Compare can be used with other UIMA-based systems by adding our jar files to the classpath and making use of the UIMA descriptors provided with U-Compare.

U-Compare is fully UIMA compatible; the U-Compare type system and component repository are provided simply as a default toolkit.

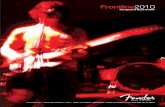

Workflow creation GUI

In the main U-Compare view, the workflow creation panel, users can easily create UIMA CPE workflows by simply dragging and dropping desired components from the component library pane into the correct location in the workflow pane (see Figure 4). This view also allows users to edit and save component attributes including children of aggregates, to run workflows, and to export shareable components and workflow packages.

Third-party UIMA components can be easily added to the U-Compare component library. After installing a third-party component to the user’s machine, the user just has to add the required resource files to the U-Compare classpath via the “add to library” menu in the U-Compare workflow GUI. U-Compare automatically searches for UIMA component descriptors based on these classpath entries and gives users the choice to add any discovered components to the library. Once added, third-party components can be freely used in combination with native U-Compare components (type-system compatibility permitting).

The U-Compare GUI also provides support for the creation of the comparison workflows, as described earlier. When a parallel component is added to a workflow, the GUI internally switches to a comparison mode, automatically inserting the required annotation grouper and evaluation iterator components, and wrapping existing components into parallel components. Users only need to specify what types of annotations and which components they want to compare.
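The descriptor search performed when third-party components are added to the library can be illustrated with a minimal sketch. It is not U-Compare's actual implementation: the function name `find_component_descriptors` is hypothetical, and the sketch only walks plain directories (real classpath entries may also be jar files, which are not handled here). It relies on the fact that UIMA component descriptors are XML files whose root element lives in the `http://uima.apache.org/resourceSpecifier` namespace.

```python
import os
import xml.etree.ElementTree as ET

# Namespace and root element names used by UIMA component descriptors.
UIMA_NS = "http://uima.apache.org/resourceSpecifier"
COMPONENT_ROOTS = {
    "analysisEngineDescription",
    "collectionReaderDescription",
    "casConsumerDescription",
}


def find_component_descriptors(classpath_dirs):
    """Return sorted (path, kind) pairs for XML files that look like UIMA descriptors.

    Hypothetical helper: it scans directories only and ignores jar files.
    """
    found = []
    for root_dir in classpath_dirs:
        for dirpath, _dirnames, filenames in os.walk(root_dir):
            for name in filenames:
                if not name.lower().endswith(".xml"):
                    continue
                path = os.path.join(dirpath, name)
                try:
                    root = ET.parse(path).getroot()
                except ET.ParseError:
                    continue  # not well-formed XML, so not a descriptor
                # ElementTree renders namespaced tags as "{namespace}localname".
                if root.tag.startswith("{" + UIMA_NS + "}"):
                    kind = root.tag.split("}", 1)[1]
                    if kind in COMPONENT_ROOTS:
                        found.append((path, kind))
    return sorted(found)
```

A caller could present the returned list to the user and register only the selected entries, mirroring the choice the GUI offers for discovered components.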

Y. KANO ET AL. 11 : 7 IBM J. RES. & DEV. VOL. 55 NO. 3 PAPER 11 MAY/JUNE 2011

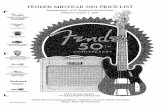

Statistics and visualizations

Since UIMA does not provide session handling features, sessions (results of workflow runs) must be handled outside of UIMA.

When a workflow is run from the workflow creation GUI, the statistics of the results of that session are automatically displayed. These statistics include runtime performance measures, individual and total annotation counts, and in the case of comparison workflows, precision, recall, and F scores. Workflows from saved sessions can also be loaded into the workflow configuration view.

In addition to numerical statistics, the U-Compare platform includes annotation visualization tools useful for NLP data (see Figure 5). These visualizations display annotations as underlines and arcs superimposed on the document text and easily highlight differences discovered during component comparison.
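As an illustration of the comparison statistics mentioned above, the following sketch computes precision, recall, and a balanced F score for one component's output against a reference annotation set. It assumes exact matching of `(begin, end, type)` triples and the F1 variant of the F score; both are assumptions made for this example, and the matching criteria actually used in a U-Compare comparison workflow may differ. The function name `evaluate` and the sample data are hypothetical.

```python
def evaluate(gold, predicted):
    """Exact-match precision, recall, and F1 over (begin, end, type) annotations."""
    gold_set, pred_set = set(gold), set(predicted)
    true_positives = len(gold_set & pred_set)
    # Guard against empty sets so the function never divides by zero.
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical data: reference annotations and the output of one NER component.
gold = [(0, 4, "Protein"), (10, 15, "Protein"), (20, 27, "Protein")]
ner_a = [(0, 4, "Protein"), (10, 15, "Protein"), (30, 33, "Protein")]

p, r, f = evaluate(gold, ner_a)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Calling `evaluate` once per compared component against the same reference set gives the kind of side-by-side table that a comparison session displays.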

Other features and applications of U-Compare

UCLoader can run a specified workflow directly from the command line; this includes workflows created via the workflow creation GUI. Additionally, U-Compare provides UIMA components for standard I/O streams that communicate in a simple standoff annotation format. This allows easy embedding of workflows into other systems regardless of programming languages, as we did for Taverna [14].
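To make the idea of a standoff format concrete, the sketch below writes and reads annotations that are kept separate from the document text and refer to it by character offsets. The exact format used by the U-Compare stream components is not specified in this section, so the tab-separated `begin`, `end`, `type` layout and the function names `write_standoff` and `read_standoff` are purely hypothetical.

```python
import io


def write_standoff(annotations, stream):
    """Write one 'begin<TAB>end<TAB>type' line per annotation (hypothetical layout)."""
    for begin, end, label in sorted(annotations):
        stream.write(f"{begin}\t{end}\t{label}\n")


def read_standoff(stream):
    """Parse lines produced by write_standoff back into (begin, end, type) tuples."""
    annotations = []
    for line in stream:
        line = line.rstrip("\n")
        if not line:
            continue  # tolerate blank lines
        begin, end, label = line.split("\t")
        annotations.append((int(begin), int(end), label))
    return annotations


# The text itself is never modified; annotations point into it by offset.
text = "p53 binds MDM2"
annotations = [(10, 14, "Protein"), (0, 3, "Protein")]

buffer = io.StringIO()
write_standoff(annotations, buffer)
buffer.seek(0)
for begin, end, label in read_standoff(buffer):
    print(label, text[begin:end])
```

Replacing `buffer` with `sys.stdout` on the producing side and `sys.stdin` on the consuming side gives the stream-based exchange described above, which is why a host system written in any language can take part as long as it can read and write lines of text.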

We have built a distributed web service system that deploys any UIMA SOAP service in parallel across a cluster of machines, which then behaves as a single web service component.

U-Compare has been used in many tasks by both expert and nonexpert users, by individuals, and as part of worldwide challenges, such as protein–protein extraction from biological literature, BioNLP’09 shared task [15], Conference on Natural Language Learning (CoNLL) 2010 shared task [16], BioCreative II.5 [17], linkage with Taverna [14], and Chemistry Using Text Annotations (CheTA) [18].

Figure 4

Screenshot of the workflow creation graphical user interface.

Conclusion and future work

U-Compare is a modular NLP workflow creation system specifically designed to speed the process of creating, testing, and deploying NLP applications. It is built on a foundation of the UIMA framework and provides many of the tools necessary for NLP application development that UIMA currently lacks due to its more general nature. Those users not requiring this full set of capabilities will benefit from U-Compare’s modular design, which allows many of its innovative features to be utilized independent of the platform as a whole. U-Compare has already been successfully used in a variety of practical applications where it has allowed users to achieve their goals with far less effort and with a reduced probability of user error.

As Apache UIMA now provides the UIMA Asynchronous Scaleout (UIMA-AS) API as its next-generation scalability layer, migration from CPE to UIMA-AS and from SOAP to ActiveMQ services would be the next important step. UIMA-AS parallelization and our virtual workflow architecture do not conflict but are complementary. By integrating these two, it is possible to decrease the response time by processing parallel components physically in parallel.

Acknowledgments

The authors would like to thank Luke McCrohon of the University of Tokyo for the contributions to the graphical user interface designs and numerous valuable suggestions regarding this manuscript; Dr. Bill Black, Dr. Xinglong Wang, and Dr. BalaKrishna Kolluru of the National Centre for Text Mining (NaCTeM) for making their tools available in U-Compare; and William Baumgartner and Chris Roeder of the University of Colorado for helping with the type-system design and for providing and maintaining their UIMA components. This work was supported in part by KAKENHI, Ministry of Education, Culture, Sports, Science and Technology (MEXT), Japan, under Grant 18002007 and Grant 21500130 and in part by Biotechnology and Biological Sciences Research Council (BBSRC) under Grant BB/F006012/1 and Grant BB/G013160/1. NaCTeM is supported by the Joint Information Systems Committee (JISC), U.K.

**Trademark, service mark, or registered trademark of Eclipse Foundation, Inc., or Sun Microsystems in the United States, other countries, or both.

Figure 5

Screenshots of (a) comparison statistics between AImed corpus and three NERs. (b) U-Compare annotation viewer showing annotation spans as underlines and relations as arcs with relation labels, where different types are shown as different colors.

References

1. D. Hull, K. Wolstencroft, R. Stevens, C. Goble, M. R. Pocock, P. Li, and T. Oinn, “Taverna: A tool for building and running workflows of services,” Nucleic Acids Res., vol. 34, no. Suppl 2, pp. W729–W732, Jul. 1, 2006.

2. D. Blankenberg, G. Von Kuster, N. Coraor, G. Ananda, R. Lazarus, M. Mangan, A. Nekrutenko, and J. Taylor, “Galaxy: A web-based genome analysis tool for experimentalists,” Curr. Protocols Mol. Biol., vol. Chapter 19, pp. 19.10-1–19.10-21, Jan. 2010.

3. I. Altintas, C. Berkley, E. Jaeger, M. Jones, B. Ludascher, and S. Mock, “Kepler: An extensible system for design and execution of scientific workflows,” in Proc. 16th Int. Conf. Sci. Stat. Database Manage., 2004, pp. 423–424.

4. H. Cunningham, D. Maynard, K. Bontcheva, and V. Tablan, “GATE: A framework and graphical development environment for robust NLP tools and applications,” in Proc. 40th Anniv. Meeting Assoc. Comput. Linguistics, 2002, pp. 168–175.

5. D. Ferrucci, A. Lally, D. Gruhl, E. Epstein, M. Schor, J. W. Murdock, A. Frenkiel, E. W. Brown, T. Hampp, Y. Doganata, C. Welty, L. Amini, G. Kofman, L. Kozakov, and Y. Mass, “Towards an interoperability standard for text and multi-modal analytics,” IBM, Yorktown Heights, NY, Res. Rep. RC24122, 2006.

6. Apache UIMA. [Online]. Available: http://uima.apache.org/

7. OASIS UIMA TC. [Online]. Available: http://www.oasis-open.org/committees/uima/

8. W. A. Baumgartner, Jr., K. B. Cohen, and L. Hunter, “An open-source framework for large-scale, flexible evaluation of biomedical text mining systems,” J. Biomed. Discov. Collab., vol. 3, no. 1, p. 1, Jan. 2008, DOI: 10.1186/1747-5333-3-1.

9. U. Hahn, E. Buyko, R. Landefeld, M. Muhlhausen, M. Poprat, K. Tomanek, and J. Wermter, “An overview of JCoRe, the JULIE Lab UIMA component repository,” in Proc. LREC Workshop, Towards Enhanc. Interoperability Large HLT Syst.: UIMA NLP, Marrakech, Morocco, 2008, pp. 1–8.

10. Open Health Natural Language Processing (OHNLP) Consortium. [Online]. Available: http://cabig-kc.nci.nih.gov/Vocab/KC/index.php/OHNLP

11. N. Hernandez, F. Poulard, M. Vernier, and J. Rocheteau, “Building a French-speaking community around UIMA, gathering research, education and industrial partners, mainly in Natural Language Processing and Speech Recognizing domains,” in Proc. LREC Workshop New Challenges NLP Frameworks, Valletta, Malta, 2010. [Online]. Available: http://hal.archives-ouvertes.fr/hal-00481459/en/

12. U-Compare: Share and Compare Tools With UIMA. [Online]. Available: http://u-compare.org/

13. Y. Kano, L. McCrohon, S. Ananiadou, and J. Tsujii, “Integrated NLP evaluation system for pluggable evaluation metrics with extensive interoperable toolkit,” in Proc. SETQA-NLP Workshop, NAACL-HLT, Boulder, CO, 2009, pp. 22–30.

14. Y. Kano, P. Dobson, M. Nakanishi, J. Tsujii, and S. Ananiadou, “Text mining meets workflow: Linking U-Compare with Taverna,” Bioinformatics, vol. 26, no. 19, pp. 2486–2487, Oct. 2010.

15. J. D. Kim, T. Ohta, S. Pyysalo, Y. Kano, and J. Tsujii, “Overview of BioNLP’09 shared task on event extraction,” in Proc. BioNLP Workshop Companion Volume Shared Task, Boulder, CO, 2009, pp. 1–9.

16. R. Farkas, V. Vincze, G. Mora, J. Csirik, and G. Szarvas, “The CoNLL 2010 shared task: Learning to detect hedges and their scope in natural language text,” in Proc. 14th Conf. CoNLL: Shared Task, Uppsala, Sweden, 2010, pp. 1–12.

17. R. Sætre, K. Yoshida, M. Miwa, T. Matsuzaki, Y. Kano, and J. Tsujii, “AkaneRE relation extraction: Protein interaction and normalization in the BioCreAtIvE II.5 challenge,” in Proc. BioCreative II.5 Workshop Special Session | Digit. Annotations, Madrid, Spain, 2009, p. 33.

18. CheTA: Chemistry Using Text Annotations. [Online]. Available: http://nactem.ac.uk/cheta/

Received July 31, 2010; accepted for publication December 15, 2010

Yoshinobu Kano Department of Computer Science, University of Tokyo, Bunkyo-ku, Tokyo 113-0033, Japan ([email protected]). Mr. Kano received a B.S. degree in physics in 2001 and an M.S. degree in information science and technology in 2003, both from the University of Tokyo. He is a Research Associate in the Research Group led by Professor J. Tsujii at the University of Tokyo. He has been leading the U-Compare initiative, designing and implementing the U-Compare system as the technical lead. He received an IBM Innovation Award in 2008.

Makoto Miwa Department of Computer Science, University of Tokyo, Bunkyo-ku, Tokyo 113-0033 Japan ([email protected]). Dr. Miwa received the M.Sc. and Ph.D. degrees in science from the University of Tokyo, Japan, in 2005 and 2008, respectively. He has since been working as a researcher at the University of Tokyo, under the supervision of Professor J. Tsujii. His research interests include machine learning in natural language processing for biomedical texts (BioNLP) and computer games.

Kevin Bretonnel Cohen Computational Bioscience Program, University of Colorado School of Medicine, Aurora, CO 80045 USA ([email protected]). Mr. Cohen is the Biomedical Text Mining Group Lead of the Center for Computational Pharmacology at the University of Colorado School of Medicine. He is also the Lead Artificial Intelligence Engineer at the MITRE Corporation, Human Language Technology Division. He is a popular speaker at biomedical text mining and computational biology events and is the chairman of the Association for Computational Linguistics’ SIGBIOMED special interest group on biomedical natural language processing.

Lawrence E. Hunter Computational Bioscience Program, University of Colorado School of Medicine, Aurora, CO 80045 USA ([email protected]). Dr. Hunter received a B.A. degree in psychology from Yale University in 1982, and M.S. and Ph.D. degrees in computer science from Yale University in 1987 and 1989, respectively. He is Professor and Director of the Computational Bioscience Program at the University of Colorado School of Medicine. He subsequently joined the scientific staff at the U.S. National Library of Medicine (National Institutes of Health [NIH]) where in 1994 he received the Regents Award for Scholarship and Technical Achievement. In 1999, he joined the National Cancer Institute (NIH) as chief of the Section on Biostatistics and Informatics and in 2000 joined the faculty of the University of Colorado School of Medicine, where he has been working on computational methods for analysis of genome-scale molecular biology data. In 2003, the American Association for Artificial Intelligence presented him with the Engelmore Prize for innovative applications of artificial intelligence. He is the author of two patents and more than 100 peer-reviewed scientific papers. He is a fellow of the American College of Medical Informatics and a fellow of the International Society for Computational Biology.

Sophia Ananiadou School of Computer Science, University of Manchester and National Centre for Text Mining, M1 7DN, UK ([email protected]). Professor Ananiadou is Director of the National Centre for Text Mining (NaCTeM), and Professor in Text Mining in the School of Computer Science, University of Manchester. Her current research includes terminology management, text mining-based visualization of biochemical networks, data integration using text mining, text mining interoperability, and building biomedical terminologies and automatic event extraction of bioprocesses. She has been awarded the 2004 Daiwa Adrian prize and the IBM UIMA innovation award (2006, 2007, and 2008) for her leading work on text mining interoperability in biomedicine. She has more than 160 publications in journals, conferences, and books. Her funding comes from EU, JISC, BBSRC, National Institutes of Health, and industry.

Jun’ichi Tsujii Department of Computer Science, University of Tokyo, Bunkyo-ku, Tokyo 113-0033 Japan ([email protected]). Dr. Tsujii received the B.Eng., M.Eng., and Ph.D. degrees in electrical engineering from Kyoto University, Japan, in 1971, 1973, and 1978, respectively. He was an assistant professor and associate professor at Kyoto University, before taking up the position of professor of computational linguistics at the University of Manchester Institute for Science and Technology (UMIST) in 1988. Since 1995, he has been a professor in the Department of Computer Science at the University of Tokyo. He is also a professor of text mining at the University of Manchester (half-time) and the research director of the U.K. National Centre for Text Mining (NaCTeM) since 2004. He was the president of the Association for Computational Linguistics (ACL) in 2006 and has been a permanent member of the International Committee on Computational Linguistics (ICCL) since 1992. He received an IBM Science Award in 1988, an IBM Faculty Award in 2005, and the Medal of Honor with Purple Ribbon by the Government of Japan in 2010.
