The Record-Breaking Terabyte Sort on a Compaq Cluster

-

Upload

independent -

Category

Documents

-

view

0 -

download

0

Transcript of The Record-Breaking Terabyte Sort on a Compaq Cluster

The following paper was originally published in the

Proceedings of the 3rd USENIX Windows NT SymposiumSeattle, Washington, USA, July 12–13, 1999

T H E R E C O R D - B R E A K I N G T E R A B Y T E S O R T O N A C O M P A Q C L U S T E R

Samuel A. Fineberg and Pankaj Mehra

THE ADVANCED COMPUTING SYSTEMS ASSOCIATION

© 1999 by The USENIX AssociationAll Rights Reserved

For more information about the USENIX Association:Phone: 1 510 528 8649 FAX: 1 510 548 5738Email: [email protected] WWW: http://www.usenix.org

Rights to individual papers remain with the author or the author's employer. Permission is granted for noncommercialreproduction of the work for educational or research purposes. This copyright notice must be included in the reproduced paper.

USENIX acknowledges all trademarks herein.

The Record-Breaking Terabyte Sort on a Compaq ClusterSamuel A. Fineberg

[email protected] Mehra

[email protected] Tandem Labs

19333 Vallco Parkway, M/S CAC01-27Cupertino, CA 95014 USA

Abstract

Sandia National Laboratories (U.S. Department ofEnergy) and Compaq Computer Corporation built a72-node Windows NT cluster, which Sandia utilizes forproduction work contracted by the U.S. government.Recently, Sandia and Compaq's Tandem Divisioncollaborated on a project to run a 1-terabytecommercial-quality scalable sort on this cluster. Theaudited result was a new world record of 46.9 minutes,three times faster than the previous record held by a32-processor shared-memory UNIX system. Theexternal sort utilizes a unique, scalable algorithm thatallows near-linear cluster scalability. The sortapplication exploits several key hardware and softwaretechnologies; these include dense-racking Pentium IIbased servers, Windows NT Workstation 4.0, theVirtual Interface Architecture, and the ServerNet ISystem Area Network (SAN). The sort code was highlyCPU-efficient and stressed asynchronous andsequential I/O and IPC performance. The I/Operformance when combined with a high-performanceSAN, yielded supercomputer-class performance.

1. IntroductionHigh-performance sorting is important in manycommercial applications that require fast search andanalysis of large amounts of information (for example,data warehouse and Web searching solutions). Wepresent the design of a cluster and a commercial-qualitysorting algorithm that together achieved record-breaking performance for externally sorting a terabyteof data. The audited terabyte-sorting time of 46.9minutes was three times faster than the previous record[NyK97], which was achieved on a 32-processor SGIOrigin 2000.

The cluster consists of 72 Compaq Proliant serversrunning Microsoft Windows NT , although only 68 ofthe servers were utilized for the sort. It was built incollaboration with US Department of EnergyÕs SandiaNational Laboratories in New Mexico, where it iscurrently deployed for production work contracted by

the U.S. government. The cluster nodes communicateusing CompaqÕs ServerNet-I SAN (System AreaNetwork). The SAN topology uses unique dual-asymmetric network fabric technology for performanceand reliability [MeH99].

The external three-pass parallel sorting algorithm isalso expected to scale well on larger clusters. In fact,the algorithm was originally developed for CompaqÕsNonStopTM Kernel (NSK) based servers (and will beproductized on both NSK and Windows NT clusters).The program uses an application-specific portabilitylibrary, libPsrt, and a message-passing library, libVI.Section 4 describes the application softwarearchitecture in detail. LibVI builds upon the VIPrimitives Library specified by the Virtual InterfaceArchitecture (VIA) Specification [Com97]; it providesa robust, high-throughput, multi-threaded and thread-safe messaging layer with efficient waiting primitivesbased on NTÕs IO Completion Ports. LibPsrt buildsupon libVI and supports more application-specificoperations, such as remote I/O and memorymanagement.

2. Cluster Hardware ConfigurationThe cluster hardware consisted of rack mountedProliant servers, a ServerNet I SAN, and over 500disks. The disks were either internal to the nodes orplugged in to Compaq hot-pluggable drive enclosures.The total purchase price for a similarly configured 68-node cluster would be $1.28M.

This system consists of the following hardware:

Item QuantityServers 68 systems, 136 CPUs,

34GB RAMDisks 269 Wide Ultra SCSI-3

busses, 537 disks, 62external disk enclosures,4.5TB storage

ServerNet 68 dual-port ServerNet INICs, dual-fabric topologyutilizing 48 6-portServerNet I switches

Racking 22 racks, 12 KVM switches,2 rack mounted flat-panelmonitors

Operating System 67 Windows NTWorkstation, one 70-licenseWindows NT Server

Ethernet Network 68 Embedded 100BaseTNICs (included in theservers) attached to a singleCisco Catalyst 5500 switch

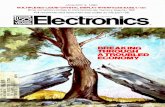

The costs break down as shown in Figure 1. As shownin the graph, the largest portion of our system's cost wasin disks and external disk enclosures. The secondlargest portion was the servers (including additionalCPUs and memory added onto the base serverconfiguration). The ServerNet network was only 16%of the system cost, including all of the cables, switches,and NICs. The racking gear, KVM (keyboard, video,mouse) switches, and the monitors used as systemconsoles contributed only 5% to the cost. The 72-portCisco Catalyst 5500 Ethernet switch made up 3% of thesystem cost, and finally, the Windows NT operatingsystem made up 2% of the system cost.

2.1 Compaq Proliant 1850R ServersThe full Sandia cluster contained 72 rack-mountedCompaq Proliant 1850R servers, each with:• Two 400MHz Intel Pentium II Processors• 512MB, 100Mhz SDRAM• One integrated and one PCI-card based dual-Ultra-

Wide SCSI-3 controller (for a total of 4 SCSIbusses)

• One dual-ported ServerNet I NIC

Of these nodes, 68 were actually used for the sort: 67 assort nodes and one as the sort manager. In addition, atotal of over 500 SCSI disks (in varying configurations)were distributed among the 67 sort nodes (nominally, 8disks per sort node — 7 for sorting and one for the OS).The disks were all striped using Windows NT’s ftdiskutility. Each node ran Windows NT Workstation 4.0Service Pack 3 (except the sort manager node which ran

NT Server). All nodes were running a ServerNet-specific software stack comprising Compaq ServerNet IVI (SnVie) version 1.1.10, ServerNet PCI AdapterDriver (SPAD) version 1.4.3, and ServerNet SANManager (SANMAN) version 1.1.

The Proliant 1850R nodes were ideal for this clusterbecause of several key features: high memorybandwidth, small rack footprint, and integrated remoteconsole for system management. Memory bandwidth of450MB/sec was measured with the STREAMSbenchmark on these nodes (using both CPUs). This isvital to achieving good sorting performance becausemuch of the time spent sorting is spent in merges,which tend to stress processor-memory bandwidth in amanner similar to the long-vector accesses modeled bythe STREAMS benchmark. The small footprint wasimportant in order to make such a large cluster feasible.Proliant 1850Rs take only 3U (about 5.25 in/13.3 cm)of rack space, and include 3 1-inch hot-pluggable drivebays, room for 2 internal drives, and 4 PCI slots.Finally, the integrated manageability features allowedus to monitor and reboot nodes from a single systemconsole using Compaq Insight Manager software.

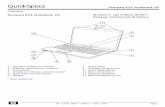

2.2 ServerNet I SANEach ServerNet I PCI NIC provides two ServerNetports: an “X” port and a “Y” port. Each port contains atransmitter and a receiver, both of which can operateconcurrently, driving a bi-directional parallel LVDScable in both directions simultaneously. The NICs inour system are interconnected by two fabrics of 6-portServerNet-I crossbar routers (see Figure 2). An “Xfabric” connects all the X ports, and a “Y fabric”connects all the Y ports. The two router fabrics arecomplete but asymmetric: each node interfaces withboth fabrics and each fabric interfaces with every node,but the topologies of the two fabrics are different.Therefore, each fabric provides a path between everysource-destination pair, but the path lengths (measured

Figure 1: Cluster cost breakdown

Racking5%

Disks41%

Servers33%

Operating System

2%

Ethernet3%

ServerNet16%

in router hops) of most paths differ from one fabric tothe other.

Traditionally, ServerNet topologies used two identicalfabrics for reliability (but not for performance) byswitching to a path in the Y fabric when the X fabricpath to a destination was down, and vice versa. Here,we utilize the two fabrics for reliability a n dperformance by configuring SnVie to ÒpreferÓ theshorter path when establishing a connection betweentwo processes on different end nodes. This reducesnetwork-routing latency (300 ns pipelined latency perpacket per ServerNet I router) by creating and usingpaths with fewer hops; we also lower the probability ofoutput-port contention (because each fabric handlesonly half of the total message traffic). Fault tolerance ismaintained by this approach because there are at leasttwo possible paths between each node pair, and trafficcan always be routed via the alternate path if thepreferred path fails.

3. The Sort AlgorithmDavid Cossock of Tandem Labs developed sort, merge,and parallel selection algorithms [Cos98] that wereused. The file to be sorted is distributed across the localfilesystems of the sort nodes, and sort processes areassigned to nodes. While the algorithm applies equallywell to variable-length records, the results reported hereare with files containing fixed-width records (80 byteslong) each containing an 8-byte (uniformly distributed)random integer key. The sort algorithm leaves thesorted data distributed among nodes such that the node-order concatenation of all the files consists of recordssorted by the integer key in either ascending ordescending order.

The three sort phases include the following:• Phase 1 (local-sort): each node sorts memory-

resident runs of the local partition.• Phase 2 (local-merge): the local runs are merged so

that each node has a completely sorted work file.

Figure 2 : Sandia network topology

To Yfabric

ToX fabric 512 MB

SDRAM

2 400MHzCPUs

ServerNet IDual-portedPCI NIC

6-port ServerNetCrossbar switch

Compaq ProliantServer

4 SCSIbusses,each with 2disks

PCIBus

Bisection Line (each switchon this line adds 3 links tothe bisection width)

6-port ServerNet Icrossbar switch

Y-Fabric(14bisectionlinks)

X-Fabric(10bisectionlinks)

• Phase 3a (partition): The sort processes execute aparallel selection protocol over ServerNet,determining (1) the upper and lower bounds oftheir ultimate sorted output partition, and (2) therelative byte addresses, within each node's workfile, of the beginning and end of records containingkeys between these bounds. This is the only part ofthe sort that utilizes the sort monitor process.

• Phase 3b (parallel-merge): Using remote I/O acrossthe ServerNet SAN, nodes merge pieces of each ofthe other nodesÕ work files to end up with theirportion of the sorted data.

While Phases 1 and 2 stress the system balance withineach node, stressing its CPU-memory and disk I/Osubsystems, Phase 3b stresses each nodeÕs I/O bus andthe cluster interconnect.

4. Communication Software ArchitectureThe architecture of the sort application is shown inFigure 3. Each sort node runs a Òsort processÓ, and thesort manager node runs the Òsort monitorÓ process.Each sort process contains two threads. A ÒsortingthreadÓ implements the sorting algorithm minus remoteI/O and interprocess communication (IPC), and aÒshadow threadÓ performs, in the background, IPC andremote I/O (on behalf of other sort processes). Each ofthese threads uses a library called libPsrt, whichprovides mechanisms for both IPC and remote I/O.Local I/O is handled directly by the sort thread itselfusing NTÕs asynchronous ReadFile and WriteFilesystem calls and event-based completion. All remoteI/O and IPC is also asynchronous and uses WindowsNT I/O Completion Ports (IOCPs) to determine

operation completion. This means that each sortingthread can issue commands, which can be IPC, remoteI/O, or inter-thread (within a process) communication,and then it can wait on its own I/O completion port forthe next completed operation or incoming request. Theshadow thread is essentially a dispatch loop thatreceives requests and completion notifications on oneIOCP and posts completion of local requests on anotherIOCP to be read by the local sorting thread. It also postscompletions of remotely requested operations on VIconnections (as ÒreplyÓ messages).

4.1 LibPsrtLibPsrt provides an RPC-like model, based on the I/Oarchitecture of CompaqÕs NSK operating system (note:we could not utilize Windows NT RPC because it did

not support the ServerNet SAN). In this model, sortand shadow threads issue ÒrequestsÓ to other threadsthat can be the same node or on any other node in thecluster. Each of the requests contains a command and adata portion. When the request is fulfilled, the threadsends a ÒreplyÓ, which also includes a data portion. Anexample of this is when the sort manager wants toobtain the ÒkeyÓ ranges in order to do partitioning inPhase 3a of sorting. It sends a message to each of thenodes with a Òkey requestÓ command and a blank datasegment. Each node replies to key request with its keysincluded in the replyÕs data segment. Because the sortprocess is not always ready to process requests, all IPCrequests are first handled by the shadow thread, whichforwards them to the sorting thread (i.e., it posts themto the sort threadÕs IOCP). Then, when it is ready, thesort thread reads these requests from its IOCP, and

ServerNet I NIC (rev. 1.5E)

Spad 1.4.3 SanMan 1.1

SnVie 1.0.10

libVI

libPsrt

User Libraries

Kernel

Sort Monitor

Application

Hardware

Sort Process

Figure 3 : Sort Communication Architecture

replies directly with the requested data. For remote I/Orequests, the shadow thread reads the local sort file andresponds directly to the requesting remote threadwithout involving the sort thread.

4.2 LibVI LibPsrt is built on top of libVI, a message passinglibrary built on the Virtual Interface Architecture(VIA). LibVI provides a traditional send/receive stylemessaging API. It is thread safe, and has support forasynchronous send/receive operations (eachimplemented as a synchronous call in its own thread).One key feature of libVI is its ability to post messagecompletions through Windows NT I/O completionports. For a more detailed description of libVI, see[Fin99].

4.3 ServerNet DriversLibVI uses SnVie, a kernel-level implementation of theVirtual Interface Architecture for ServerNet I hardware[Hor95]. It is intended as a porting vehicle formigration to ServerNet II [HeG98]1. While SnVieprovides all of the features of ServerNet II, it isimplemented in software. Therefore, it has lowerperformance than ServerNet II VI, which will beimplemented in hardware. In ServerNet II, VI datatransfers will occur without any kernel transitions.LibVI will run without modification on ServerNet IIwhen it becomes available. SnVie uses ServerNet I'sTNet Services API, which is supported by theServerNet PCI Adapter driver (SPAD). Name servicesupport for low-level ServerNet Node IDs is providedby the SAN Manager driver (SANMAN).

5 . Performance Studies with the SandiaCluster

5 . 1 Sequential I/O Performance withStriped Disk Partitions

Windows NT offers several mechanisms for issuing andcompleting I/O requests. In this section we presentsome performance measurements that were madeduring sort application development. These resultsrepresent filesystem and disk performance underWindows NT, but not the performance of the sortapplication code. However, we utilized thesemeasurements to make better choices whenimplementing the sort.

1for more information on ServerNet II seehttp://www.servernet.com

Riedel, et al [RiV98] report that striping large accessesacross multiple disks, using unbuffered I/O, and havingmany outstanding requests are the ways to optimizesequential disk performance. Benchmark code for theirwork is also available from Microsoft Research(http://research.microsoft.com/barc/Sequential_IO/).We augmented their benchmark code with support forIOCPs (I/O Completion Ports), an efficient mechanismfor notification of outstanding I/O requests. Riedel, et alhad found write performance saturating below readperformance.

Using this benchmark, we found that:

• The best performance was given by asynchronousunbuffered I/O using either event-based or IOCPsignaling;

• At 512KB I/O request size, peak read performanceand very nearly the peak write performance couldbe achieved with just 2 I/O requests outstanding atany given time; otherwise, 8 outstanding I/Orequests were needed for full throughput at 128KBI/O size; and

• We needed to stripe I/Os across either 2 10K-rpmor 3 7200-rpm disk drives in order to fully exploit a40 MB/s Ultra-Wide SCSI bus.

In fact, a single Seagate ST39102LW (9 GB, 10K-rpm)drive was measured at 18.43 MB/s at 128KB I/O sizewith 8 outstanding requests under an Adaptec SCSIcontroller.

The Compaq Proliant 1850R nodes have an integratedSymbios 53c875 dual-channel SCSI controller. With 310K-rpm drives striped across two SCSI strings, at512KB I/O size, and 20-deep asynchronous unbufferedI/O issue, we were able to reach a total bandwidth Ñreading or writing Ñ of 53 MB/s. The deployed systemhas a second dual-channel SCSI controller in the formof Symbios 53c876 PCI card. At about 110MB/s the32-bit 33-MHz PCI bus peaks; the four SCSI stringswith 3 disks each are therefore quite enough to exploitall available I/O bus bandwidth in the server.

5 . 2 Communication Performance withLibVI over ServerNet I VI

5.2.1 ServerNet Hardware Performanceand Scalability

Each ServerNet I link is rated at 50 MB/s in eachdirection. ServerNet I hardware-level packets containup to 64 bytes of payload and 16 bytes of header andCRC. Each packet specifies either a read/write request

or a read/write response. Only read responses and writerequests carry payload. Requests and responses occur inone-to-one correspondence; i.e., every request must beacknowledged by a response. It is easy to see that peakuni-directional data bandwidth on a ServerNet I link isat most 40 MB/s when we consider packetization andacknowledgement overheads; likewise, peak bi-directional data bandwidth on a ServerNet I link is 33.3MB/s.

Each node is allowed to have up to 8 request packetsoutstanding. The SPAD driver configures this limit to 4.The ServerNet I NIC responds to requests receivedfrom the network by performing PCI read or writeoperations. It is important to note that, irrespective ofthe request type, every request-response sequenceinvolves exactly one PCI (memory space) readtransaction and one write transaction. While fewer than4 requests are outstanding, an end node can continue togenerate requests. Once the limit is reached, an endnode can only issue responses; the next request mustwait for a response.

Data transfer occurs when an initiating node uses itsBlock Transfer Engine (BTE) to initiate a request; atthe target node, the Access Validation and Translation(AVT) logic carries out the specified operation andgenerates a response. ServerNet routers performwormhole routing, allowing packet transfer to occur ina pipelined fashion: Each packet travels through thenetwork as a train of symbols; the routing logic ispipelined so that within 300 nanoseconds of arriving atan input FIFO on the switch, the head of the trainemerges at an output port with the remaining symbolsfollowing one per 50 MHz clock. So long as four ormore requests can be completed per round-trip time, thepipeline will continue to run efficiently.

There are three principal sources of Òpipeline bubblesÓin ServerNet I: (1) PCI bus first-byte read latency, (2)single-threadedness of the BTE engine, and (3) output-port contention in routers.

The first of these reduces the average PCI efficiency ofServerNet I NICs, pushing the 32-bit 33-MHz PCI businto saturation as soon as a node hits 33 MB/s uni-directional outbound traffic or 19 MB/s bi-directionaltraffic. PCI saturation can delay generation of responsepackets, causing packet round-trip times to shoot up.

The second causes the BTE to idle when all 4 requestsare outstanding, pushing the network interface into anoutstanding request (OR) limited mode, where the peakbandwidth of a connection drops to 4*64/RTT MB/swhere RTT is the hardware round-trip time for arequest-response pair in microseconds. Another

property of a single-threaded BTE is its inability to useboth fabrics simultaneously; at best, it can support staticload balancing of connections between fabrics.

Output-port contention occurs in ServerNet routerswhen two packets try to go out of the same router port;one of them is blocked, causing other traffic behind it toback up. Thus, output-port contention causescongestion in the network, lowering the networkÕs linkutilization and throughput and increasing the RTT ofpackets. We hasten to note that ServerNet II has muchbetter performance characteristics on all three counts.

5.2.2 LibVI Performance and ScalabilityIn this section we present raw performancemeasurements of the libVI communication libraryrunning over ServerNet I VI. We measured both point-to-point and all-to-all performance, though the sortprimarily stresses the all-to-all bandwidth or thenetwork.

5.2.2.1 Point-to-point latency andbandwidth

To measure point-to-point performance a simple ping-pong test was utilized. This test consisted of oneprocess sending a message of a given size to anotherprocess, then that process sending the message back.The time for this operation was halved to get the one-way send time. For this test, all data were sentsynchronously using blocking send and receivefunctions. In addition, all graphs in this paper use theVIA send/receive-based long message protocol (ratherthan the VIA remote-DMA based protocol) asdescribed in [Fin99] because it performed better for sortphase 3b.

LibVIÕs basic message latency was about 138microseconds. Note that ServerNet I VI is a softwareimplementation, and this latency is system dependent.

0

5

10

15

20

25

30

0 200000 400000 600000 800000 1000000

Message Size (bytes)

Ban

dw

idth

(M

Byte

s/s

ec)

Figure 4 : Point-to-point libVI bandwidth

Latencies between 80 and 200 microseconds have beenobserved with libVI on different systems. While thisvariation should decrease with ServerNet IIÕs hardwareVIA implementation, PCI and memory latency as wellas CPU speed will always cause this to vary.

One of the primary advantages of VIA based networksis their ability to transfer long messages using DMA,freeing the processor to perform computation or toservice short messages. LibVI was designed tominimize CPU utilization, and maximize the use ofDMA. This was done primarily by using ÒwaitÓcommands to check for message completion, ratherthan polling for completion. This implementationresults in extremely low CPU utilization. For longmessages, libVIÕs CPU utilization was less than 5%running at full bandwidth. Even for short messages,CPU utilization was quite low. However, this was doneat the cost of message latency. It is likely that lowerlatency could be achieved with a polling-based short-message protocol, or a protocol that does not have anadditional thread involved in message receipt.However, our protocol seeks to maximize concurrencyand message throughput, a feature well suited to data-intensive applications. In fact, this sort was quiteinsensitive to latency. Sort performance was almostentirely a function of SAN and disk bandwidth. Withsuch a low CPU utilization, it is possible to effectivelyoverlap operations in the CPU with communication toan extent not possible with most message-passinglibraries and networks.

Message bandwidth is shown in Figure 4. The point-to-point bandwidth achieved was 30MB/s. This differencebetween this and the Òachievable peakÓ of 33.3MB/secwas due to the Òlong-messageÓ protocol used for thismeasurement.

LibVI internally can utilize either VIAÕs send/receivemechanism or its remote DMA mechanism fortransferring messages larger than 56 bytes (smallmessages are always transferred with send/receive). Inour experiments, VIA send/receive delivered betteraggregate bandwidth when many nodes werecommunicating with many nodes simultaneously.However, point-to-point bandwidth of the send/receivebased protocol was about 10% worse than the remoteDMA based protocol (with only one pair of nodescommunicating across the entire cluster). Because thesort performance was more dependant on aggregatebandwidth, we chose to use libVIÕs send/receive basedlong-message protocol for the sort. This protocol is acompile-time internal option for libVI, and does notchange libVIÕs semantics or its API.

5.2.2.2 Aggregate bandwidthOne of the key metrics against which libVI wasbenchmarked is aggregate bandwidth. This wasespecially important for the Terabyte Sort code, whichincluded a parallel-merge phase limited by thenetworkÕs aggregate bandwidth. While these resultswere limited by the networking hardware utilized in theSandia cluster, we also found some non-obviousprotocol effects.

The aggregate bandwidth test utilizes the IOCPsend/receive routines. The test initially issues one512K byte send to every other process and one 512Kbyte receive from every process (the 512KB messagesize was chosen because it was the block size used inthe parallel-merge phase of the sort). Then, it sits in aloop waiting on the IOCP for one of the operations tocomplete. As operations complete, the program re-issues the same operation (either or an asynchronoussend or receive) to or from the same process. Itcontinues to re-issue the operations until each specificsend and receive has been issued 50 times. This resultsin the transmission of 25MB*<# of procs> of data bothin and out of every process in the cluster. Becausemessages are issued in completion order, thecommunication pattern is random and there is noattempt made to alleviate hot-spots. This unstructuredcommunication is very similar to the sortÕs parallelmerge, and was a good predictor of sortingperformance.

We ran this test with one process per node across theset of nodes from 0 to N-1 (where N is the total numberof processes on which we ran the test). This resulted inthe performance shown by the squares in Figure 5 (i.e.,the ÒunrestrictedÓ line). As can be seen from the graph,scaling is relatively linear, but per-node bandwidth isbelow the peak ServerNet bandwidth of 33MB/sec perdirection.

Figure 5 : Long message aggregatebandwidth protocol comparison

0

200

400

600

0 20 40 60

Processes

Ag

gre

gat

e B

and

wid

th

(MB

ytes

/sec

)

restrictedunrestricted

In fact, we were achieving only about 8 MB/sec/node(bi-directional) vs. the ÒachievableÓ maximum, 19MB/sec (bi-directional). The primary cause of this wasthat the network was not a fully connected crossbar.The topology we utilized was only capable of about _of the peak bandwidth, so we should only be able toattain about 9.5MB/sec/node.

However, 8MB/sec/node was still slower than what weexpected. This turned out to be from node contention.Essentially, if two or more nodes are sending orreceiving data from a node in the system, then they willreceive it slower than if they had sequentialized theiraccess to that node. This is particularly true for thesort, where every node is likely to have multiplesend/receive operations that are ready to begin at anygiven time. While it is combinatorially unlikely that 3or more nodes will be reading from or writing to anynodeÕs ServerNet NIC, it is quite likely that there willalways be some NIC that has at least two nodescontending for it. Unfortunately, it was impossible toorchestrate the communication in the sort code to fullyalleviate this problem, but it was possible to imposerestrictions on the libVI long-message protocol.

The changes to the libVI communication protocolintroduced ÒrestrictionsÓ in different portions of theprotocol. First, consider the send/receive protocol. Inthe standard ÒunrestrictedÓ protocol, it is possible to besimultaneously receiving a message from and sending amessage to every other node in the cluster. However,we can impose a lock that restricts this to a singleÒlong-messageÓ send and a single Òlong-messageÓreceive active at any given time. The results of thesechanges are shown in the diamonds in Figure 5. As canbe seen, for small numbers of nodes, the unrestrictedprotocols performed slightly better, but for largernumber of nodes, the restricted send/receive performedsignificantly better. This difference was an increase inthe bi-directional bandwidth of about 1MB/sec/node forthe full machine, raising performance to about9MB/sec/node. Therefore, the restricted send/receiveprotocol was used for the Terabyte Sort. However, fortypical applications that do not perform the sameamount of unstructured all-to-all communication, theunrestricted protocol is recommended since it performsbetter in the more common case.

5.3 Sort Application PerformanceThe following sorting performance was obtained on 68nodes of our 72-node cluster. One node was dedicatedto a sort monitoring process. Each of the remaining 67nodes owned a partition consisting of 205,132,767 80-byte records (with 8-byte integer keys) for a total of16,410,621,360 bytes per node. The benchmark sorts atotal of 13,743,895,389 records in 46.9 minutes. The

breakdown of this sort time is shown in Figure 6 andfurther described in the following sections.

5.3.1 Process Launch and ConnectionEstablishment

The initial 25 seconds of the sort were lost in processlaunching and communication initialization betweensort processes. While this time may seem excessive, itwas in fact the result of a significant amount of tuning.When the job starts the following must occur:• the sort monitor must start the sort processes on

each of the 67 sort nodes;• the sort processes must establish VI connections

with each of the other sort processes and the sortmonitor; and finally,

• the sort monitor must send initial sort parameters toeach of the sort processes.

The remote process launch was achieved with a customRPC server on each of the sort nodes. This RPC serverimplemented a remote Òcreate processÓ API, similar tothe standard Win32 CreateProcess system call. Then,the nodes had to establish VI connections. BecauselibVI emulates a connectionless model, all VIconnections must be established in advance, before thesort algorithm can start. Unfortunately, the connectionmechanism in ServerNet I VI is inefficient, and highlytiming dependent. While it is expected that theconnection mechanism for ServerNet II will be better,connection establishment overhead remains one of theweaknesses of the VI Architecture standard, and it isunlikely it will ever be fast. If this were a significantperformance problem for the sort, we could haveoverlapped part of the connection establishment processwith Phase I of sorting. However, that would haverequired significant modification to libVI, which wasundesirable in order to keep the code maintainable.

5.3.2 Local In-Core Sorting and MergingEach node sorted approximately 16 GB of data in 13:05to 13:45 minutes. As data were read and written onceduring sort and once during merge, 64 GB of net diskaccess was accomplished at a net I/O rate of 5.3 GB/s

Process Launch0.9%

Parallel Selection

0.2%ParallelMerge69.6%

Local In-Core

Sort/Merge29.3%

Figure 6 : Breakdown of terabyte sort time

on 67 nodes, or 78.3 MB/s per node on 7 disks spreadacross 4 SCSI strings on a single PCI bus. One of thestripe sets consisted of 4 7200-rpm drives on 2 SCSIstrings in an external disk enclosure. The other stripeset consisted of two internal and one hot-pluggable10K-rpm drives installed in the server. These were alsospread across two SCSI strings (the drive containing thesystemÕs OS shared the SCSI string with the single hot-pluggable 10K disk). In addition to this, a few of thestripe sets used additional disks to make up forunderperforming drives. As noted above, this drivecount is below what is needed to saturate the SCSIbuses. Peak I/O rates observed while sorting weretherefore disk-count limited. Performance on this phaseduring stress periods was also PCI limited; with dual-PCI systems the cluster would have hit an average I/Orate of 7.5 GB/s.

The application uses multi-threading and asynchronousfile operations to provide input/output concurrencyduring the local sort and merge phases. The 16 GB dataare treated as 116 runs of about 138 MB each. Each sortprocess, as discussed above, maintains 30 MB ofoutstanding requests with 2 MB buffers. Multipleasynchronous bulk reads are issued during the sortphase, while the double-buffered merge input consistsof 116 concurrent 1.25 MB requests. The merge phaseis seek-count limited (which could be optimized furtheron large-memory systems by utilizing larger buffersand doing fewer seeks). In spite of multi-threading, thesort phase incurs occasional CPU delays.

CPU UtilizationSort Phase (out of 2.0)

Local Sort 1.3Local Merge 0.65Parallel Merge 0.18-0.22

Table 1 : Sort CPU Utilization

CPU utilization for the entire sort was low, and it islikely that we could have achieved similar results withuniprocessor nodes. The results are summarized inTable 1. The Òlocal sortÓ was the only phase of the sortthat had a CPU utilization of more than one, and theÒlocal mergeÓ used only _ as much CPU as the localsort.

With the advent of Ultra2 Wide (i.e., 80 MB/sec orfaster) SCSI and Fiber Channel SCSI systems, and dual,wider, and/or faster PCI busses available in manyservers, we expect the time for these phases to dropbelow five minutes on newer systems.

5.3.3 Parallel Selection and PartitioningUsing a highly efficient parallel selection procedure, thesort took only 4-6 seconds to conduct a three roundprotocol of bounding, k-th record sampling, andmerging, to determine what output partition neededwhich piece of each nodeÕs sorted data.

5.3.4 Parallel MergeEssentially, this phase involves a global data movementin which almost every byte of data moves across thenetwork in a random all-to-all communication pattern.This is the phase where the ServerNet I SAN and eachnodeÕs PCI bus were stressed. Approximately a terabyteof data moved across the cluster in 32.6 minutes. Thismeans that each node merged at about 8MB/sec for atotal rate of 535MB/sec. To achieve this, each node hadto simultaneously read its disk at 8MB/sec, write itsdisk at 8MB/sec, and sustain 8MB/sec of bi-directionalbandwidth through its ServerNet NIC. The totalbandwidth through the network was 535MB/sec. Thiswas about 88% of the aggregate ServerNet bandwidthmeasured in Section 5.2, despite the increased load onthe PCI bus due to SCSI disk transfers.

Because most of the real work in this phase was I/O orIPC, and both SCSI and ServerNet have very low CPUutilization, the total CPU for this phase was 0.18-0.22across both CPUs (as shown in Table 1). While this isfar less than the 2.0 CPUs we had available, we haveobserved that libVIÕs performance does dropsubstantially in a uniprocessor environment. This is dueto the overhead associated with handling I/O and IPCinterrupts and executing application code on the sameprocessor.

The performance of this phase was PCI limited; highfirst-byte latencies on PCI bus drive small-transferefficiency in to the 25% range ServerNet IÕs smallpacket size caused the inevitable PCI reads Ñ 1 per 64-byte packet Ñ to operate at this low efficiency.

The limiting effects of the PCI bus were furthermagnified by the single-threadedness of BTE. Thenetwork operated in outstanding request exhaustedmode: requests could not be issued waiting forresponses to earlier requests. Simulation of ourtopology under the Phase 3b workload [ShA99]showed that at 4 KB transfer size, a 50/50 mix of readand write requests, and a request injection rate of 47004KB-requests per second, the min, max and meanvalues of packet RTT were, respectively, 3.34, 682 and25.8 microseconds. Therefore, whenever ServerNet INIC went into outstanding request (OR) exhaustion(due to all 4 unacknowledged packets outstanding),performance dropped below 10 MB/sec bi-directional.

Simulations also showed that the network dynamicsconsisted of periodic phase transitions into and out ofthis OR-exhausted mode.

In addition, the 4-in 2-out topology at the periphery ofthe network was necessary to keep the router countslow. Output-port contention caused by trafficconcentration further limited the utilization of thebisection links.

Even so, a sustained bisection bandwidth of 535MBpswas achieved by the topology primarily because theasymmetric-fabric architecture kept the hop-counts low,thereby reducing the round-trip times. Many of thelessons learned about ServerNet IÕs large-scaleperformance influenced the design of ServerNet II.

With a dual-PCI server, and two ServerNet I NICs pernode, the time for this phase would have been cut inhalf, falling to 15 minutes, without requiring anychange in network topology. Coupled with 4-way 10Krpm disk striping per partition, estimated total sort timewould be below 25 minutes.

With ServerNet II NICs (multi-threaded engine,efficient PCI operation due to 512-byte packet size,faster 125 MB/s links), 64-bit PCI busses, andServerNet IIÕs 12-port routers (which will drop inter-node distance to 2 hops), we expect Phase 3 time todrop below 10 minutes, to a point where even remoteI/O will be limited by disk speeds.

To summarize, while the current result stands at 46.9minutes to sort a terabyte of data, we envisage a time ofabout 15 minutes Ñ a three-fold improvement Ñ usingcommodity hardware available within the next fewmonths. It is important to note that our sort algorithm,libPsrt and libVI are architected for scalability andefficiency; that is why, improvements in price andperformance of sorting are expected to closely trackforthcoming improvements in cost and performance ofhardware.

6. SummaryThis paper described the hardware and software usedfor Compaq's Sandia Terabyte Sort benchmark. TheSort exploited several key technologies includingdense-racking Intel Pentium II based Compaq servers,Ultra-Wide SCSI disks and dual-channel controllers,Microsoft Windows NT 4.0, Compaq ServerNet I SAN,and the Virtual Interface Architecture. Thesetechnologies combined with a unique scalable sortingalgorithm and highly efficient communication software,deliver high performance at a fraction of the cost ofother solutions.

7. AcknowledgmentsThe authors of this paper would like to thank DavidCossock, John Peck, and Don Wilson of CompaqTandem Labs for their contributions to the sortapplication. Thanks to Jim Hamrick and Jim Lavalleefor help with configuring and debugging ServerNet Iand SnVie. In addition, we would like to thank MiltClauser, Carl Diegert, and Kevin Kelsey of SandiaNational Laboratories for purchasing the cluster,helping us put it together, and allowing us to use it forthis work.

8. References[Com97] Compaq Computer Corporation, Intel, and

Microsoft, Virtual Interface ArchitectureSpecification, Version 1.0, December 1997,http://www.viarch.org.

[Cos98] Cossock, D., " Method and Apparatus forParal lel Sort ing using Paral lelSelection/Partitioning," Compaq ComputerCorporation, patent application filedNovember 1998.

[Fin99] Fineberg, S., Implementing a VIA-basedMessage Passing Library on Windows NT,Revision 1.0, Compaq Tandem Labs,January 1999.

[RiV98] Riedel, E., van Ingen, C., Gray, J.,ÒSequential I/O on Windows NT 4.0 ÑAchieving Top Performance,Ó 2nd USENIXWindows NT Symposium Proceedings, pp.1-10, August 1998.

[HeG98] Heirich, A., Garcia, D., Knowles, M., andHorst, W., ÒServerNet-II: a ReliableInterconnect for Scalable HighPerformance Cluster Computing,Ó ParallelComputing, submitted for publication.

[Hor95] Horst, R. ÒTNet: a Reliable System AreaNetwork,Ó IEEE Micro, Volume 15,Number 1, pp. 37-45, February 1995.

[MeH99] Mehra, P. and Horst, R.W., "SwitchNetwork using Asymmetric Multi-Switch/Multi-Switch-Group Interconnect,"Compaq Computer Corporation, patentapplication filed March 1999.

[NyK97] Nyberg, C, Koester, C., Gray, J., Nsort: aParallel Sorting program for NUMA andSMP Machines, Ordinal Technologies, Inc.whi te paper , November 1997,http://www.ordinal.com.

[ShA99] Shurbanov, V., Avresky, D.R., Mehra, P.,Horst, R., ÒComparative Evaluation andAnalysis of System-Area NetworkTopologies,Ó 1999 International ParallelProcessing Symposium, submitted forreview.