Procedural Generation of 3D Models Based on 2D Model Sheets


Robin Arys

Student number: 01000509

Supervisor: Prof. dr. Peter Lambert

Counsellor: Ignace Saenen

Master's dissertation submitted in order to obtain the academic degree of Master of Science in Computer Science Engineering

Academic year 2019-2020

Admission to loan

The author gives permission to make this master dissertation available for consultation and to copy parts of this master dissertation for personal use. In all cases of other use, the copyright terms have to be respected, in particular with regard to the obligation to state explicitly the source when quoting results from this master dissertation.

Robin Arys, June 2020

Preface

I would like to express my sincere gratitude to my counsellor, Ignace Saenen, for the useful feedback and suggestions, and for putting up with my many questions. Next to my counsellor, I would also like to thank my supervisor, Prof. dr. Peter Lambert, for allowing me to pursue the topic I proposed and for being understanding when it took longer than I initially envisioned.

I would like to thank both of my parents. Even though they’re no longer here physically, I know they would be proud right now. Besides my parents, I would also like to thank the rest of my family and all of my friends for the support they have provided me during some very difficult times. And in particular, I would like to thank my girlfriend, Jelena, for putting up with me for over a decade now.

Robin Arys, June 2020

Procedural Generation of 3D Models Based on 2D Model Sheets

Robin Arys

Supervisor: Prof. dr. Peter Lambert

Counsellor: Ignace Saenen

Abstract

This thesis outlines how existing computer vision methods, multi-view stereo reconstruction methods to be more precise, can be used to procedurally generate 3D models from 2D model sheets. It outlines the challenges that arise when using computer vision methods on model sheets instead of photographs, as well as how these challenges can be mitigated. For each step of the proposed method, alternative methods are described and their performance on model sheets is compared. The proposed method is evaluated on computer-generated model sheets and some pointers are given towards possible future research. The method used to generate model sheet-like renders from a 3D model is also described.

Keywords

Model Sheets, Sketch-Based Modelling, Computer Vision, Multi-View Stereo Reconstruction, 3D Model Generation

Procedural Generation of 3D Models Based on 2D Model Sheets

Robin Arys

Supervisor(s): Prof. dr. Peter Lambert, Ignace Saenen

Abstract—This article tries to outline how existing multi-view stereo reconstruction methods can be used to procedurally generate 3D models from 2D model sheets. This method has been tested on different computer-generated model sheets.

Keywords— Model Sheets, Computer Vision, Multi-View Stereo Reconstruction, 3D Model Generation, Sketch-Based Modelling

I. INTRODUCTION

A typical procedure for the geometric modelling of three-dimensional models is to start with a simple primitive such as a cube or sphere, and to gradually construct a more complex model through successive transformations or a combination of multiple primitives. To aid 3D modellers in this and to keep designs consistent across a production, character and prop designers create drawings of the model that has to be designed from different angles and in different poses, the so-called model sheets.

The problem with this method is that it takes a lot of work to get from a simple cube to a high-polycount character model. In this abstract, a method is proposed that will procedurally generate a rough draft for the final model that can serve as a better starting point for 3D modellers by leveraging the model sheets that are already provided by the designers.

The proposed method makes use of existing multi-view stereo reconstruction methods that have been fine-tuned to perform better on model sheets. This method has been evaluated on different computer-generated model sheets.

II. GENERATING 3D MODELS FROM 2D MODEL SHEETS

The proposed method requires at least three different model sheet views of the same model, all at different angles, but these views do not necessarily need to be equiangular. Pairs are created from these model sheet views, each pair containing two adjacent angles. For each of those image-pairs, the underlying epipolar geometry is recovered through the fundamental matrix and used to reconstruct a disparity map for each view in the pair. After this, all disparity maps for the same view that were generated in different image-pairs are combined to get a better disparity map for that view.
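The pairing step can be illustrated with a short sketch. The function name and the `(angle, name)` tuple layout are assumptions made purely for illustration; they are not taken from the implementation used in this work.

```python
def adjacent_view_pairs(views):
    """Pair each view with its angular neighbour.

    `views` is a list of (angle_in_degrees, name) tuples. Both the tuple
    layout and the function name are illustrative assumptions.
    """
    if len(views) < 3:
        raise ValueError("at least three model sheet views are required")
    # Sort by angle so that neighbouring list entries are adjacent views.
    ordered = sorted(views, key=lambda view: view[0])
    return list(zip(ordered, ordered[1:]))


views = [(45.0, "front_right"), (40.0, "front"), (50.0, "right")]
pairs = adjacent_view_pairs(views)
for left, right in pairs:
    print(left[1], "<->", right[1])
```

Note that every view except the two outermost ones ends up in two pairs, which is why two disparity maps can later be combined for such a view.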

A. Feature Detection and Matching

The first step in multi-view stereo reconstruction methods is always to find as many corresponding corners between the different views as possible. These correspondences will then be used to recover the fundamental matrix describing the epipolar geometry underlying these views. Usually in multi-view computer vision problems, there is an abundance of features to detect and match. Since model sheets contain no texture information that can be used by the feature detector, but rather contain only edges and large, blank spaces, detecting good features on a model sheet is a lot harder.

In the following step, the proposed method will use an eight-point algorithm to recover the fundamental matrix. This means that at least 8 correspondences are needed between both images in each image-pair. Eight corresponding points is the bare minimum necessary to make the algorithm work, but adding more correspondences is desirable, since that will increase the quality of the fundamental matrix estimate calculated in the next step.

The combination of a Shi-Tomasi feature detector [1] with an ORB feature descriptor (Oriented FAST, Rotated BRIEF) [2], matched with a simple brute-force algorithm using a cross-checking validity test, provides the best results when matching features between model sheets. The Shi-Tomasi detector does not use a lot of texture information when detecting corners and thus produces more stable results on textureless model sheets than other popular feature detectors that do rely on texture information. The ORB feature descriptor works by computing a binary string for each feature by comparing the intensities of pairs of points around that feature. This method is not only very fast but also performs well on model sheets. Since the number of features that can be reliably found in most model sheets is relatively low (on the order of tens to a couple of hundred), a basic brute-force algorithm can be used to match features between both images in each image-pair. Using a cross-checking validity test in the brute-force matching algorithm increases the quality of the fundamental matrix estimate.
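To make the cross-checking test concrete, the following minimal sketch matches binary descriptors by Hamming distance and keeps a match only when both features are each other's nearest neighbour. Descriptors are represented as plain Python integers and all names are hypothetical; this is a simplified stand-in, not the matcher used in this work.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def cross_check_match(desc_a, desc_b):
    """Brute-force matching that keeps only mutual nearest neighbours.

    Returns a list of (index_in_a, index_in_b, distance) tuples.
    """
    if not desc_a or not desc_b:
        return []
    # Nearest neighbour in B for every descriptor in A, and vice versa.
    best_ab = [min(range(len(desc_b)), key=lambda j: hamming(d, desc_b[j]))
               for d in desc_a]
    best_ba = [min(range(len(desc_a)), key=lambda i: hamming(desc_a[i], d))
               for d in desc_b]
    # Cross-check: i -> j is kept only if j -> i as well.
    return [(i, j, hamming(desc_a[i], desc_b[j]))
            for i, j in enumerate(best_ab) if best_ba[j] == i]


desc_a = [0b11110000, 0b00001111, 0b10101010]
desc_b = [0b00001110, 0b11110001, 0b11111111]
matches = cross_check_match(desc_a, desc_b)
print(matches)  # [(0, 1, 1), (1, 0, 1)]
```

The third descriptor in `desc_a` has a nearest neighbour in `desc_b`, but that neighbour prefers a different partner, so the one-sided match is discarded.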

B. Recovering The Fundamental Matrix

To recover the best possible estimate of the underlying fundamental matrix, RANSAC resampling is used in conjunction with a 7-point algorithm. RANSAC (Random Sample Consensus) is a resampling technique that generates candidate solutions by using subsets of the minimum number of data points required to estimate the underlying model parameters [3].
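The RANSAC loop itself is independent of the model being fitted. Because estimating a fundamental matrix requires a linear algebra solver, the sketch below deliberately swaps in a much simpler model, a 2D line, whose minimal sample is two points. The threshold, iteration count, and all names are illustrative assumptions.

```python
import math
import random


def line_through(p, q):
    """Return (a, b, c) with a*x + b*y + c = 0 and a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = math.hypot(a, b)
    if norm == 0.0:
        return None  # degenerate sample: both points coincide
    a, b = a / norm, b / norm
    return a, b, -(a * x1 + b * y1)


def ransac_line(points, threshold=0.5, iterations=200, seed=0):
    """Fit a line with RANSAC; returns (model, inliers)."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        # 1. Draw a minimal sample (two points define a line).
        model = line_through(*rng.sample(points, 2))
        if model is None:
            continue
        a, b, c = model
        # 2. Collect the points that agree with this candidate.
        inliers = [p for p in points
                   if abs(a * p[0] + b * p[1] + c) <= threshold]
        # 3. Keep the candidate with the largest consensus set.
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# 20 points on y = 2x + 1 plus 5 gross outliers.
points = [(float(x), 2.0 * x + 1.0) for x in range(20)]
points += [(3.0, 40.0), (7.0, -12.0), (11.0, 60.0), (15.0, -30.0), (18.0, 5.0)]
model, inliers = ransac_line(points)
a, b, c = model
print(len(inliers), -a / b, -c / b)
```

For the fundamental matrix, the same loop would draw seven correspondences per iteration and count the correspondences whose epipolar error stays below the threshold.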

Once the epipolar geometry has been recovered for each image-pair through a fundamental matrix estimation, this information can be used to determine projective transformations $H_1, \ldots, H_n$ and $H'_1, \ldots, H'_n$, with $n$ the number of image-pairs, that will rectify the model sheets. In each pair of rectified images, all corresponding epipolar lines become completely horizontal and lie at the same height. This transformation makes it a lot easier to calculate the disparity map in the next step.
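The effect of rectification can be checked numerically. For a rectified pair, the fundamental matrix takes (up to scale) the canonical form used below, and the epipolar constraint reduces to the difference in row coordinates between the two points. The function name is a hypothetical choice for this sketch.

```python
def epipolar_residual(F, x_left, x_right):
    """Evaluate x_right^T * F * x_left for pixel coordinates (x, y)."""
    xl = (x_left[0], x_left[1], 1.0)    # homogeneous coordinates
    xr = (x_right[0], x_right[1], 1.0)
    Fx = [sum(F[r][c] * xl[c] for c in range(3)) for r in range(3)]
    return sum(xr[r] * Fx[r] for r in range(3))


# Canonical fundamental matrix of a rectified image-pair (up to scale).
F_RECTIFIED = [[0, 0, 0],
               [0, 0, -1],
               [0, 1, 0]]

same_row = epipolar_residual(F_RECTIFIED, (10, 25), (4, 25))
other_row = epipolar_residual(F_RECTIFIED, (10, 25), (4, 27))
print(same_row, other_row)  # 0.0 -2.0
```

A residual of zero means the two points lie on corresponding epipolar lines, which after rectification is equivalent to lying on the same image row. The search for correspondences therefore becomes a one-dimensional search along a row.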

C. Generating the 3D model

A disparity map shows the distance between pixels in both images of a pair that represent the same feature in the pictured 3D scene.
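As a toy illustration of this definition, the sketch below estimates the disparity along a single rectified image row with a one-dimensional block matcher based on the sum of absolute differences. This is a deliberately simplified local matcher, not the Semiglobal Matching algorithm used by the proposed method, and all names and values are made up for the example.

```python
def scanline_disparity(left, right, max_disp, half_block=1):
    """Per-pixel disparity of one rectified row (None where undefined)."""
    width = len(left)
    disparities = []
    for x in range(width):
        best_d, best_cost = None, None
        for d in range(max_disp + 1):
            cost, valid = 0, True
            for k in range(-half_block, half_block + 1):
                xl, xr = x + k, x + k - d
                if not (0 <= xl < width and 0 <= xr < width):
                    valid = False  # block falls outside the image
                    break
                cost += abs(left[xl] - right[xr])
            if valid and (best_cost is None or cost < best_cost):
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities


# The pattern 9, 5, 7 sits two pixels further left in the right view.
left = [0, 0, 0, 0, 9, 5, 7, 0, 0, 0]
right = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0]
disparities = scanline_disparity(left, right, max_disp=3)
print(disparities[4:7])  # [2, 2, 2]
```

Only the pixels around the distinctive pattern receive a meaningful value in this example; in the uniform regions many candidate disparities produce similar costs.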

Once each image-pair has been rectified, the rectified model sheets can be used to create two disparity maps for each image-pair. One disparity map can be calculated for the disparity from the left to the right view and one can be calculated for the disparity from the right to the left view. Since each model sheet is part of two different image-pairs (except for the left-most and right-most views), this means that for each model sheet two different disparity maps can be calculated.

Before calculating the disparity map itself, a Sobel filter is applied to the rectified images. After disparity values have been found, different post-filtering methods are applied to them in a refining pass. These include a uniqueness check, which defines a margin by which the minimum cost function value should "win" over the second-best value, quadratic interpolation to fill in some missing areas, and speckle filtering to make the disparity map smoother and filter out inconsistent pixels.
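The uniqueness check can be sketched as follows. The exact form of the comparison and the choice to ignore the direct neighbours of the best disparity are assumptions made for this illustration; they should not be read as a description of the implementation used in this work.

```python
def passes_uniqueness(costs, ratio_percent):
    """Accept the best disparity only if it wins by a clear margin.

    `costs[d]` is the matching cost of disparity d for one pixel. The best
    cost must be at least `ratio_percent` percent lower than the best cost
    among disparities that are not direct neighbours of the winner.
    """
    best_d = min(range(len(costs)), key=costs.__getitem__)
    rivals = [c for d, c in enumerate(costs) if abs(d - best_d) > 1]
    if not rivals:
        return True  # nothing to compare against
    return costs[best_d] * 100 <= min(rivals) * (100 - ratio_percent)


clear_winner = passes_uniqueness([50, 12, 48, 60, 55], ratio_percent=10)
ambiguous = passes_uniqueness([50, 30, 48, 31, 55], ratio_percent=10)
print(clear_winner, ambiguous)  # True False
```

Pixels that fail the check would be marked as invalid, leaving gaps for the later interpolation and filtering steps to handle.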

For calculating the disparity values, Hirschmuller’s Semiglobal Matching algorithm is used with some minor computational optimisations. These optimisations consist of making the algorithm single-pass and thus only considering five directions instead of eight, matching blocks instead of individual pixels, and using a simpler cost metric than the one described by Hirschmuller [4].

Since the resulting disparity maps are calculated from the rectified model sheets, they need to be derectified to get the disparity maps for the original model sheets. This can be done easily by applying the inverse of the transformations $H_1, \ldots, H_n$ and $H'_1, \ldots, H'_n$ that were used in the rectification step to each respective disparity map.

The disparity value for each pixel can be associated with a depth value through three-dimensional projection. This allows us to directly translate each disparity map into a point cloud. A convex hull algorithm (Quick Hull) can then be run on the point cloud to generate a full 3D model of the scene. The reconstructed scene, however, is always determined only up to a similarity transformation (rotation, translation, and scaling). This is because it has been reconstructed from images of the scene, and images do not contain (enough) information about how the pictured scene relates to the rest of the world.
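A minimal sketch of the disparity-to-point-cloud step is given below. It assumes an orthographic projection in which the depth is taken as the multiplicative inverse of the disparity and the pixel coordinates are used directly as x and y, so the result is only defined up to scale. The function name and the convention that non-positive values mark missing disparities are assumptions.

```python
def disparity_to_points(disparity):
    """Convert a disparity map (list of rows) into a list of 3D points.

    Assumes orthographic views: x and y are the pixel coordinates and the
    depth z is 1 / disparity. Pixels without a valid (positive) disparity
    are skipped.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d is None or d <= 0:
                continue  # no reliable disparity for this pixel
            points.append((float(u), float(v), 1.0 / d))
    return points


disparity = [[0.0, 0.5],
             [0.25, 0.0]]
cloud = disparity_to_points(disparity)
print(cloud)  # [(1.0, 0.0, 2.0), (0.0, 1.0, 4.0)]
```

The resulting list of points is the kind of input a convex hull algorithm such as Quick Hull would then turn into a closed mesh.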

III. EVALUATION USING COMPUTER-GENERATED MODEL SHEETS

In order to be able to evaluate the proposed method, a ground truth disparity map is needed. The ground truth disparity maps in this thesis were generated using a depth shader in Blender 2.79. Since the model sheets use an orthographic projection, the disparity values of the model sheet are the multiplicative inverse of their depth values and can thus be calculated in a shader program. The model sheets themselves were generated using the Freestyle function in Blender 2.79’s Cycles renderer. A black Freestyle line with an absolute thickness of 1.5 pixels and a crease angle left at the default 134.43° was combined with a completely white object material with its emission strength set to 1 so the 3D model’s faces would be uniformly white, mimicking the typical black-and-white line-art style of real model sheet drawings.

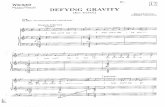

Fig. 1. Model sheet generated using the Freestyle function of the Cycles renderer in Blender 2.79.

A model sheet generated with this method for the Utah Teapot model can be seen in figure 1, while the ground truth disparity map for this model can be found in figure 2. Figure 3 contains the disparity map generated with the proposed method for this model sheet.

Fig. 2. Ground truth disparity map generated for the model sheet shown in figure 1.

Fig. 3. Disparity map generated with the proposed method for the model sheet shown in figure 1.
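One common way to quantify how far a reconstructed disparity map is from its ground truth is the Middlebury "bad 2.0" metric: the fraction of pixels whose disparity error exceeds 2.0. The sketch below is a plain-Python illustration under assumed conventions (`None` marks a missing value, and a missing estimate counts as a bad pixel); it is not the listing from the thesis itself.

```python
def bad_pixel_rate(estimate, truth, threshold=2.0):
    """Fraction of ground-truth pixels with a disparity error > threshold."""
    total = bad = 0
    for est_row, gt_row in zip(estimate, truth):
        for est, gt in zip(est_row, gt_row):
            if gt is None:
                continue  # no ground truth available for this pixel
            total += 1
            if est is None or abs(est - gt) > threshold:
                bad += 1
    return bad / total if total else 0.0


truth = [[10.0, 10.0, None],
         [20.0, 20.0, 20.0]]
estimate = [[10.0, 13.0, 5.0],
            [None, 21.5, 17.0]]
rate = bad_pixel_rate(estimate, truth)
print(rate)  # 0.6
```

Lower values are better; a value of 0.6 means that 60% of the pixels with known ground truth are off by more than two disparity levels.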

IV. CONCLUSION

The proposed method shows some promising results when used on model sheets, but the final models are not yet usable in production environments. With a lot of manual tweaking, it is possible to get some good approximations though, as was shown for the Utah Teapot dataset.

The method used to automatically generate model sheets provides some nice-looking results. This method could even be used as a stylistic choice in a 3D production such as a video game or animated movie, or in further research regarding this topic.

REFERENCES

[1] Shi, Jianbo and Tomasi, Carlo, Good features to track, IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 593-600, 1994.

[2] Rublee, Ethan and Rabaud, Vincent and Konolige, Kurt and Bradski, Gary, ORB: An efficient alternative to SIFT or SURF, 2011 International Conference on Computer Vision, pp. 2564-2571, 2011.

[3] Derpanis, Konstantinos G., Overview of the RANSAC Algorithm, Image Rochester NY, vol. 4-1, pp. 2-3, 2010.

[4] Hirschmuller, Heiko, Stereo Processing by Semiglobal Matching and Mutual Information, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30-2, pp. 328-341, 2007.

Contents

Admission to loan
Preface
Abstract
Extended abstract
Contents

1 Introduction

2 Related Work
  2.1 Sketch-Based Modelling
    2.1.1 Evocative Modelling
    2.1.2 Constructive Modelling
  2.2 Computer Vision
    2.2.1 Epipolar Geometry
    2.2.2 Corner Detection
    2.2.3 Stereo Matching
    2.2.4 The Fundamental Matrix
    2.2.5 Projective Rectification
    2.2.6 Disparity Map

3 Methodology
  3.1 Overview
  3.2 Model Sheets
  3.3 Corner Detection and Matching
  3.4 Epipolar Geometry
  3.5 Image Rectification
  3.6 Disparity Map
  3.7 Point Cloud and Convex Hull
  3.8 Frameworks

4 Method Evaluation
  4.1 Corner Detection and Matching
    4.1.1 Quality metrics
    4.1.2 Template based detectors
    4.1.3 Gradient based detectors
    4.1.4 SIFT and SURF detectors
    4.1.5 Feature descriptors
    4.1.6 Corner matching
  4.2 Epipolar Geometry
  4.3 Rectification
  4.4 Disparity Map
    4.4.1 Evaluating the Global Method

5 Conclusion

6 Future Work
  6.1 Possible Alternatives
  6.2 Possible Extensions and Adaptations

List of Figures

1.1 An example of a model sheet used for 2D animation in Walt Disney’s animated short Winnie the Pooh and the Honey Tree. © 1966 The Walt Disney Company.

1.2 An example of a model sheet used for 3D modelling in the animated movie Superman/Batman: Apocalypse and a corresponding 3D model, reproduced from [3]. Model sheet: © 2010 Warner Bros. Animation ‖ 3D model: © 2017 David Dias

2.1 If the user inputs the strokes on the left side, the SKETCH system will produce the primitives on the right (reproduced from [14]).

2.2 A template retrieval system matching sketches to predefined 3D models (reproduced from [15]).

2.3 An example of a mechanical object, constructed in SolidWorks (reproduced from [18]).

2.4 A skeleton and its corresponding polygonised implicit surface in BlobMaker, a free-form modelling system (reproduced from [20]).

2.5 Iterative sketch-based modelling. Surface details and additive augmentations are added to an existing model (reproduced from [12]).

2.6 The epipolar geometry between two views of the same scene. The point x is projected at xL and xR in the two views (reproduced from [22]).

2.7 One of the disparity maps from the Tsukuba dataset [68].

3.1 A comparison between more classical computer vision datasets and model sheets

3.2 The different components of the proposed method as well as the input and output at each step, and the feedback loops that can be used to optimise an implementation

3.3 Blender model sheet-like renders for a very basic 3D character.

3.4 A recreation of the model sheets from figure 1.2, albeit using a slightly different mesh.

3.5 One of the ground-truth depth maps rendered with Blender 2.79.

3.6 Blender’s node-based compositing tool as it was set up to generate the ground-truth depth maps.

3.7 Performance of different feature detectors for different viewpoint changes. Graph reproduced from [41].

3.8 A rectified image-pair with its rectified matches.

3.9 A disparity map retrieved with the proposed method.

3.10 A disparity map generated from the model sheets shown in figure 1.2.

4.1 Rectified matches for one of the generated model sheets for different feature detectors and the corresponding rectification quality metrics. The rotation angle between both model sheet views is 5°.

4.2 The model sheet views used in this test. From left to right, the camera rotation is 40°, 45°, and 50°.

4.3 Different quality metrics in function of the parameters of the 7-point RANSAC algorithm.

4.4 A typical disparity map found with local-approach block matchers on model sheets. The spaces between lines are empty.

4.5 Some of the methods that were tried unsuccessfully to improve the quality of local-approach matchers on model sheets.

4.6 The disparity quality metrics in function of the algorithm’s block size.

4.7 Disparity maps generated with large and less-large block sizes, as well as their ground truth disparity map.

4.8 The left-view and right-view disparity maps, and their combination.

List of Tables

4.1 A comparison of the performance of different feature detectors on model sheets using the ORB descriptor and a brute-force matching algorithm.

4.2 A comparison of the performance of different corner detectors on model sheets using different descriptors. MSD = Mean Sampson distance, SEV = Smallest eigenvalue.

4.3 The different feature detectors and descriptors that were evaluated, the name of their OpenCV implementations, and the parameters that were used during the evaluations.

4.4 A comparison of the difference in quality metrics when enabling or disabling cross-checking in the brute-force matcher for the Shi-Tomasi/ORB combination.

4.5 A comparison of OpenCV’s default Semiglobal Matching parameters and the parameters proposed for use on model sheets.

4.6 The datasets used to evaluate the global method.

4.7 The reconstructed disparity maps for all evaluated datasets.

4.8 The parameters used to generate the results in table 4.7.

Listings

4.1 Calculating the correlation between disparity maps

4.2 Implementation of the Middlebury bad 2.0 metric

Chapter 1

Introduction

Model sheets are precisely drawn groups of pictures that outline the size and construction of a prop or character’s design from a number of viewing perspectives. They are created in order to both accurately maintain detail and to keep designs uniform across a production whilst different people are working on them [1]. Model sheets are used both in 2D animation and in 3D modelling.

In 2D animation, model sheets enable a number of animators working across a production to achieve consistency in representation across multiple shots. Model sheets used for 2D animation often contain different facial expressions, different poses, and annotations to a production’s animators about how to develop and use particular features of a character, as can be seen in figure 1.1 [2].

During the production of a 3D video game or animated movie, model sheets are sent to the modelling department, who use them to create the final character models [1]. Figure 1.2 shows an example of a model sheet used during the production of a 3D animated movie and a professional reproduction of that character. Model sheets can contain full colour and shading but usually just consist of line drawings. The rest of this thesis will mainly focus on model sheets used during 3D modelling, only showing a character’s rotation without different poses or facial expressions.

A typical procedure for the geometric modelling of three-dimensional models is to start with a simple primitive such as a cube or sphere, and to gradually construct a more complex model through successive transformations or a combination of multiple primitives [4].

(a) The model sheet showing poses and facial expressions.

(b) A still showing one of the finalised animations described on the model sheet.

Figure 1.1: An example of a model sheet used for 2D animation in Walt Disney’s animated short Winnie the Pooh and the Honey Tree. © 1966 The Walt Disney Company.

Figure 1.2: An example of a model sheet used for 3D modelling in the animated movie Superman/Batman: Apocalypse and a corresponding 3D model, reproduced from [3]. Model sheet: © 2010 Warner Bros. Animation ‖ 3D model: © 2017 David Dias

This method of 3D modelling is called primitive-based modelling and is a type of polygonal modelling. There exist other methods for creating (non-polygonal) 3D models such as NURBS (non-uniform rational B-splines), subdivision surfaces, constructive solid geometry, and implicit surfaces, but objects using these representations often need to be converted into polygonal forms for visualisation and computation anyway since today’s graphics hardware is highly specialised in displaying polygons at interactive rates [5]. For the same reason, methods like NURBS are rarely used for video games and other interactive media [6].

The drawback to primitive-based modelling, however, is that models built in a primitive-based way tend to contain more faces than necessary to define their structure because there can be no overlap in a pure primitive-modelled object. Instead, each part of an object is incised into its immediate neighbours. Another drawback is that for shapes other than the primitives themselves it can be difficult to position each point exactly correctly [7]. Also, a lot of work has to be done to get from a simple primitive to a high-polygon-count full 3D model.

This thesis posits that the process of primitive-based geometric modelling of three-dimensional models that is used today could be improved by providing a better starting point for 3D modellers than a simple primitive, by leveraging the model sheets they already receive from the animators and designers. This thesis proposes a new method, using multiple existing computer vision methods, that can use existing model sheets to generate a rough draft for a three-dimensional model that can then be further refined by 3D artists. In order to accomplish this, the existing related work will first be examined in chapter 2. Afterwards, the methodology used to accomplish this goal will be described in chapter 3. In chapter 4, the proposed method will be evaluated on different model sheets before coming to a conclusion in chapter 5 and providing some pointers for possible future research in chapter 6.

Chapter 2

Related Work

Biederman [8] researched the psychological theory of human image understanding. He concluded that all components that are postulated to be the critical units for recognition are edge-based and can thus be depicted by a line drawing. Colour, brightness, and texture are secondary routes for recognition. Biederman’s findings are important for animators and other visual artists, but this more psychological side is not the focus of this work. In this work, we will instead focus more on the research done by Barrow and Tenenbaum in [9] and [10]. In these publications, Barrow and Tenenbaum researched the development of a computer model for interpreting two-dimensional line drawings as three-dimensional surfaces and surface boundaries. Their model interprets each line separately as a three-dimensional space curve that then gets assigned a local surface normal [10].

Another, maybe more surprising but also highly relevant research domain is that of architecture. Using 3D building models is extremely helpful throughout the architecture, engineering, and construction life cycle. Such models let designers and architects virtually walk through a project to get a more intuitive perspective on their work. Digital models of buildings can check a design’s validity by running computer simulations of energy, lighting, fire, and other characteristics. These models are also used in real estate, virtual city tours, and video gaming. Because of their wide applicability, researchers and CAD developers have been trying to automate and accelerate conversion of 2D architectural drawings into 3D models. Since paper floor plans still dominate the architectural workflow, these automatic plan conversion systems must also be able to process raster images. In order to do this, they use a combination of image-processing and pattern-recognition techniques. These systems lack generality though. They are typically constrained to a small set of predefined symbols [11].

The works of Barrow and Tenenbaum, and the work done in the architectural field, are part of two larger research fields. These fields are both also closely related to the problem of reconstructing 3D models from model sheets.

The first of these fields explores sketch-based modelling, a technique that automates or assists the process of translating a 2D sketch into a 3D model [12].

The second field is that of computer vision, which explores techniques to construct explicit, meaningful descriptions of physical objects from images by using intrinsic information that may reliably be extracted from the input, similar to how the human visual system works [13].

This thesis positions itself at the intersection of both research topics. The proposed method will draw inspiration from existing computer vision techniques to reconstruct 3D models by extracting information from the 2D sketches of those models. The next sections in this chapter will further explore work that was previously done in these two research fields and how that research could apply to the topic of this thesis.

2.1 Sketch-Based Modelling

Sketch-based modelling techniques enable people to create complex 3D models in a way that is as intuitive as possible for humans [12]. As such, most techniques try to make users input as little information as possible, usually in the form of simple, 2D strokes. These strokes can then be converted to 3D models in one of two ways: either through evocative modelling, where multiple, prebuilt 3D objects are combined in a 3D scene to approximate the desired 3D model, or through constructive modelling, which uses a rule-based approach to build the entire 3D model as one whole object [12]. Both have their own advantages and disadvantages.

2.1.1 Evocative Modelling

Evocative modelling systems are systems that contain a collection of pre-existing 3D shapes and that approximate a complex model by placing these shapes according to the user’s directives. Within evocative modelling systems there’s again a distinction between two subsets:

• Iconic systems map a set of 3D primitives to simple strokes. In the SKETCH application [14], for example, users can create and place 3D models in a 3D scene by forming sequences of five different types of strokes that are then interpreted and replaced by 13 different primitives. A subset of these strokes and their resulting primitives can be seen in figure 2.1. This small number of primitive objects often requires the user to combine multiple primitives to create the object they want. Only using primitives even precludes some complex objects (freeform surfaces, for example) from being made at all [14]. Additionally, when combining different primitives to form a more complex model, the end result will contain vertices that lie on the "inside" of the object, and thus aren’t visible. However, these invisible vertices are still stored and rendered by the system since the modelling system only knows how to work with the set of pre-existing primitives. This results in a worse mesh topology and worse performance.

Figure 2.1: If the user inputs the strokes on the left side, the SKETCH system will produce the primitives on the right (reproduced from [14]).

• The other main subset of evocative modelling systems are template systems. Template systems contain a set of, possibly very detailed, 3D template objects that can be placed in the 3D scene in any way the user wants, for example by dragging and dropping the objects around to the desired rotation, orientation, and scale. An example of the "Magic Canvas" template system can be seen in figure 2.2. This system tries to automatically match multiple models from its database to 2D sketches and lets the user pick one of these models to place in the scene [15].

Figure 2.2: A template retrieval system matching sketches to predefined 3D models (reproduced from [15]).

While both of these evocative modelling systems are good at allowing the user to create a 3D model without requiring any modelling knowledge, all evocative systems share at least one big issue: they’re based on prior knowledge in the form of a database of prebuilt 3D shapes. Users of evocative systems are limited to the prebuilt 3D shapes included with the system. Using a pre-existing database takes up a lot of memory, and searching it for the wanted shapes takes time during usage. Both of these problems become worse as the size of the included database increases. In some evocative modelling systems, such as Kenney’s Asset Forge [16], users are allowed to add their own shapes. This, however, requires them to have modelling knowledge, thus defeating the purpose of allowing users to create a 3D model without any modelling knowledge.

Template systems can, for example, contain a database of domain-specific models, thus allowing the user to create very detailed models or 3D scenes within a certain domain but not in other domains that might require a database of different models. For example, in architecture there are a number of existing systems that contain a database of different types of walls, doors, windows, and furniture. Since architects already have a set way of representing each of these in their 2D drawings, template-based modelling systems can translate those icons to one of their pre-built templates to create a 3D model from an architectural drawing [11]. This same template system, however, won’t be very useful when trying to design a 3D model for an industrial machine. Systems to model industrial machines don’t need doors and windows; they need gears, screws, and other mechanical components.

Iconic systems, on the other hand, do allow the user to create models from different domains with the same library, but the user generally won’t be able to add the level of detail they want to since they’re limited to simple primitives. Using iconic systems, users only have access to primitives like cubes, spheres, and cylinders, instead of more complicated models like windows and furniture, or gears and screws.

The easiest way to improve evocative modelling systems is by including a bigger object library, but this in turn also leads to larger performance and resource requirements to process, match, and store these objects. The requirements of a bigger evocative modelling system become larger regardless of whether or not the user actually needs those extra objects for the 3D model or scene they’re trying to create [12].

2.1.2 Constructive Modelling

Whereas evocative modelling systems need to have prior knowledge of some 3D shapes to build a new 3D model, constructive systems use a set of rules to procedurally generate a completely new 3D shape based on some 2D drawing [17]. Constructive modelling systems are thus not restricted by a set database of pre-existing objects, like evocative modelling systems are, but rather by the robustness of the ruleset that is used, and by the ability of the system’s interface to expose its full potential [12]. As a result, they generally allow for more applications in different fields but are also much harder to use correctly. In order to use a constructive modelling system to its full potential, the user might want to edit, delete, or add rules in the existing ruleset. Editing the ruleset of a constructive modelling system requires the user to have pre-existing knowledge about how these systems work, and possibly requires them to understand the language that was used to design the original ruleset. Such a strong requirement is not feasible for everyone working in a complex domain context without first receiving proper training and insight into the system itself [12].

In order to reduce the number of possible interpretations of a given 2D drawing, constructive modelling systems sometimes constrain the complexity of their ruleset. By doing so they are again exploiting prior knowledge about the domain in which these systems will be used, similar to template modelling systems [12]. We can define three main groups of possible drawing interpretations within constructive modelling systems:

• Mechanical objects consist mainly of hard-edged, planar objects. These are the kinds of modelling systems that are often used in engineering fields, where the surfaces of objects are usually flat, and corners and edges are well-defined. These systems have limited support for curved strokes, since their reconstruction is primarily based on a straight-line representation [12]. The design and specification of engineered objects is an important application of computer modelling. As such, it was one of the first research fields within the field of sketch-based modelling [12], [19]. Nowadays, however, the usage of solid modelling CAD (Computer Aided Design) systems has become more prevalent, although some designers still prefer to use constructive modelling techniques since they can use their intuition more effectively when sketching than they can when using a solid modeller [19].

Figure 2.3: An example of a mechanical object, constructed in SolidWorks (reproduced from [18]).

• Free-form objects are almost the exact opposite of mechanical objects in that they consist of smooth, more natural looking objects. There are different ways to create free-form design systems, but one of the more prevalent methods is to use a skeleton-based approach that tries to reconstruct a model’s skeleton based on contour sketches. Popular systems that use this skeleton-based technique, like BlobMaker [20], use variational implicit surfaces. Because of their variational implicit surfaces, free-form objects created with skeleton-based free-form design systems are limited to closed surfaces and completely smooth shapes [20]. An example of the skeleton-based free-form modelling technique in BlobMaker can be found in figure 2.4. Other free-form modelling systems, such as Teddy [4], use a triangulated mesh that can be easily modified but are limited to constructing a single object and don’t support hierarchy within that object [4], [20].

Figure 2.4: A skeleton and its corresponding polygonised implicit surface in BlobMaker, a free-form modelling system (reproduced from [20]).

• Multi-view systems typically interpret the strokes in a 2D drawing as object boundaries. Different drawings can then be interpreted as different views of the same objects, or a single image can be combined with a set epipolar geometry to allow the user to "sketch in 3D" by drawing in a different view than the original sketch’s view. However, sketching in 3D without interactive feedback is difficult for most humans, since our visual system is built around 2D stimuli. Thus, most systems only implement simple reconstruction within an iterative modelling paradigm. That is, rather than the user creating actual 3D sketches or multiple sketches of the same object, they can reconstruct a single sketch, rotate the model, sketch a new part or a deformation, ad infinitum until the desired result is achieved [12]. An example of what one iteration can look like in an iterative sketch-based modelling technique is shown in figure 2.5.

Figure 2.5: Iterative sketch-based modelling. Surface details and additive augmentations are added to an existing model (reproduced from [12]).

Since model sheets already contain drawings of different views of the same three-dimensional object, the remainder of this chapter will focus on this last set of constructive modelling systems: the multi-view systems.

2.2 Computer Vision

The field of computer vision focuses on ways to automatically extract information from images [13]. One of the applications of computer vision, and the one most relevant to this dissertation, is the field of multi-view stereo reconstruction, where algorithms are used to try to reconstruct a complete 3D object model from a collection of images taken from known camera points [21].

2.2.1 Epipolar Geometry

Figure 2.6: The epipolar geometry between two views of the same scene. The point x is projected at xL and xR in the two views (reproduced from [22]).

Consider the situation shown in figure 2.6 of a common scene viewed by two pinhole cameras. The locus of all points that map to the point xL in the left image consists of a straight line through the centre of the camera used in the left view. As seen from the second camera, this straight line of points that map to xL maps to a straight line in the right image known as an epipolar line. Any point in the right view matching the left view’s point xL must lie on this epipolar line. All epipolar lines in the right view corresponding to points in the left view meet in one point eR, called the epipole. The epipole is the point where the centre of projection of the left camera would be visible in the right image. Similarly, there is an epipole eL in the left view defined by reversing the roles of the two views in the above discussion [23]. An epipole doesn’t necessarily lie within the other view: it is possible that one camera is not able to see the centre of projection of every other camera.

There exists a projective mapping from points in the left view to epipolar lines in the right view and vice versa. Furthermore, there exists a 3x3 matrix F called the fundamental matrix which maps points in one view to the corresponding epipolar line in the other view according to the mapping x ↦ Fx, and thus defines the epipolar geometry between both images [23]. It can be proven that the fundamental matrix between two images can be computed from correspondences of those images’ scene points alone, without requiring knowledge of the cameras’ internal parameters or relative pose [24]. However, exact point correspondences often cannot be retrieved from an image, since a three-dimensional scene point can be projected onto multiple pixels or fall in between pixels in one of the views. Because the exact correspondences cannot always be retrieved, the exact fundamental matrix cannot always be recovered and an estimation must be used.
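
To make the mapping x ↦ Fx concrete, the following minimal Python sketch computes the epipolar line for a point and measures how far a candidate match lies from it. The matrix used here is an assumption chosen purely for illustration: it is the fundamental matrix of an idealised, already rectified image pair, in which every epipolar line is horizontal. The function names are hypothetical.

```python
def epipolar_line(F, x):
    """Return the line l' = F x as coefficients (a, b, c) of a*u + b*v + c = 0."""
    return tuple(sum(F[i][j] * x[j] for j in range(3)) for i in range(3))


def point_line_distance(line, p):
    """Perpendicular distance from the homogeneous point p = (u, v, 1) to the line."""
    a, b, c = line
    return abs(a * p[0] + b * p[1] + c) / (a * a + b * b) ** 0.5


# Assumed example: fundamental matrix of an ideal rectified pair (pure horizontal shift).
F_RECTIFIED = [[0, 0, 0],
               [0, 0, -1],
               [0, 1, 0]]

x_left = (120.0, 45.0, 1.0)                  # homogeneous pixel coordinates in the left view
line = epipolar_line(F_RECTIFIED, x_left)    # the horizontal line v = 45 in the right view
on_line = point_line_distance(line, (97.0, 45.0, 1.0))   # candidate on the same row
off_line = point_line_distance(line, (97.0, 48.0, 1.0))  # candidate three rows lower
```

A candidate match in the right view is only geometrically plausible when its distance to this line is small, which is the constraint exploited in the remainder of this section.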

The accuracy of an estimation for the fundamental matrix (and subsequently the epipolar geometry) is crucial for applications such as 3D reconstruction. The fundamental matrix estimation accuracy is a function of the number of correct point correspondences attained between the images that are being evaluated [25]. Therefore, 3D reconstruction systems must strive to establish as many correct correspondences between images as possible. To this end, a corner detector is first applied to each image to extract high curvature points. A classical correlation technique is then used to establish matching candidates between the two images [26]. There exist multiple techniques to detect corners in images; we will now briefly explore the ones most relevant to multi-view reconstruction.

2.2.2 Corner Detection

Corner detection is a low-level processing step that is an essential part of many computer vision applications [27]. When recovering the epipolar geometry between two views of the same scene, a corner detector must first be applied to each view separately in order to be able to establish correspondences between images [26]. Thus, using a good corner detector is pivotal to recovering a good estimation for the fundamental matrix and the epipolar geometry it describes.

There exist three different classes of corner detectors: gradient based, template based, and contour based methods [27]. Each of these classes will be briefly described next.

Gradient Based Corner Detection

Gradient based corner detection is based on gradient calculations. Most of the earlier corner detectors are gradient based detectors. Gradient based corner detectors suffer from noise-sensitivity and a high computational complexity [27]. One of the most-used gradient based corner detectors is the Harris detector [28]. Other gradient based corner detectors proposed in early papers are the LTK (Lucas-Tomasi-Kanade) [29] and Shi-Tomasi [30] corner detectors. The main difference between these detectors lies in the implementation of their cornerness measurement function, the function which calculates how likely it is that a given pixel is part of a corner [27].
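
As an illustration of how two such cornerness measurement functions can differ, the sketch below evaluates the Harris and Shi-Tomasi measures in the form in which they are commonly stated. Both take the entries of the local structure tensor, i.e. the sums of the gradient products Ix·Ix, Ix·Iy and Iy·Iy over a window around the pixel. The parameter names and the value k = 0.04 are assumptions; they are a conventional choice and not taken from this dissertation.

```python
import math


def harris_response(sxx, sxy, syy, k=0.04):
    """Harris measure: det(M) - k * trace(M)^2 for M = [[sxx, sxy], [sxy, syy]]."""
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


def shi_tomasi_response(sxx, sxy, syy):
    """Shi-Tomasi measure: the smallest eigenvalue of the same 2x2 matrix M."""
    mean = (sxx + syy) / 2.0
    spread = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy * sxy)
    return mean - spread


# Strong gradients in both directions (corner-like) versus one direction only (edge-like).
corner_scores = (harris_response(2.0, 0.0, 2.0), shi_tomasi_response(2.0, 0.0, 2.0))
edge_scores = (harris_response(2.0, 0.0, 0.0), shi_tomasi_response(2.0, 0.0, 0.0))
```

Both measures are large only when the gradient is strong in two independent directions; along an edge the Harris measure becomes negative and the Shi-Tomasi measure drops to zero.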

Template Based Corner Detection

Template based corner detectors find corners by comparing the intensity of surrounding pixels with that of the centre pixel that is being evaluated. Templates are first defined and placed around the centre pixels. The cornerness measurement function is derived from the relations between the surrounding and centre pixel intensities. The computational cost of template based corner detection is lower than that of gradient based methods, which makes these methods faster. In recent years, templates have been combined with machine learning techniques (decision trees in particular) for fast corner detection. Examples of template based corner detectors include SUSAN (Smallest Univalue Segment Assimilating Nucleus), which is a more traditional template based corner detector [31], and FAST (Features from Accelerated Segment Test), which uses machine learning in the form of a decision tree [32], and its derivatives FAST-ER [33] and AGAST (Adaptive and Generic Accelerated Segment Test) [34]. Although the application of machine learning helps reduce the computational cost of corner detection, it can also cause database-dependent problems when the training data does not cover all possible corners. Template based detectors can detect a larger number of corners than gradient based methods. Since more points lead to more reliable matching, the larger number of detected corners is preferred in wide baseline matching [27]. However, the number of detected corners is not stable under different imaging conditions for template based corner detectors [35]. Another disadvantage of template based methods is the lack of effective and precise cornerness measurements [27].
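
The template idea can be sketched with the segment test that underlies FAST, as it is commonly described: a pixel is accepted as a corner when a sufficiently long contiguous arc of the 16 pixels on a circle around it is either clearly brighter or clearly darker than the centre. The sketch below is an assumption-laden simplification: the threshold of 20 and the arc length of 12 are illustrative defaults, the ring intensities are passed in directly rather than sampled from an image, and the decision-tree acceleration mentioned above is left out.

```python
def has_circular_run(flags, n):
    """True if `flags` contains at least n consecutive True values, allowing wrap-around."""
    run = 0
    for flag in flags + flags:      # doubling the list handles runs that wrap around
        run = run + 1 if flag else 0
        if run >= n:
            return True
    return False


def fast_segment_test(centre, ring, threshold=20, n=12):
    """Segment test on the 16 intensities sampled on a circle around the centre pixel."""
    brighter = [value > centre + threshold for value in ring]
    darker = [value < centre - threshold for value in ring]
    return has_circular_run(brighter, n) or has_circular_run(darker, n)
```

For example, `fast_segment_test(100, [150] * 12 + [100] * 4)` accepts the pixel, while shortening the bright arc to eleven pixels rejects it.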

Contour Based Corner Detection

Contour based corner detection is based on the result of contour and boundary detection. These methods aim to find the points with maximum curvature in the planar curves that make up the edges. Traditional contour based corners are specified in binary edge maps. Curve smoothing and curvature estimation are two essential steps for these detectors, with Gaussian filters being the most widely used smoothing function. Examples of contour based corner detectors include DoG-curve (Difference of Gaussians curve) [36], DoH (Determinant of the Hessian) [37], ANDD (Anisotropic Directional Derivative) [38], Hyperbola fitting [39], and ACJ (A Contrario Junction) detection [40]. Contour based corner detection differs considerably from gradient and template based methods in both detection framework and application area. Its detected corners are mostly applied in shape analysis and shape based image compression, rather than wide baseline matching [27].

Feature Descriptors

After corners have been detected, a feature descriptor is used. A descriptor is a vector that characterises the local image appearance around the location identified by a corner detector. After a descriptor is calculated, that corner can then be matched with one or more corners in other images [41]. The most widely used descriptor, SIFT (Scale-Invariant Feature Transform), is computed from gradient information [42]. Local appearance is described by histograms of gradients, which provides a degree of robustness to translation errors [41], [43]. The SIFT algorithm also defines a corner detector, but the SIFT detector uses a Difference of Gaussians method, which is a contour based corner detection method and thus less suitable for wide baseline matching [27], [42]. SURF (Speeded Up Robust Features) is another popular algorithm containing both a feature detector and a feature descriptor. The SURF algorithm was inspired by SIFT but is faster while still providing a similar performance [43]. The SURF detector, also called the Fast-Hessian detector, is however a region detector rather than a corner detector [43], and is thus not suitable for retrieving the epipolar geometry between images [26]. Both the SIFT and SURF algorithms are patented in the US, although the SIFT patent expired on April 18th, 2020 [44], [45].

More recently, there has been an increasing amount of research into binary descriptors such as BRIEF (Binary Robust Independent Elementary Features) [46]. Binary descriptors run in a fraction of the time required by SURF and similar algorithms, while still having a similar or better performance and a reduced memory footprint [27], [46]. Binary descriptors work by computing a binary string for every detected feature by comparing the intensities of pairs of points around that feature. Furthermore, the similarity of BRIEF descriptors can be evaluated using the Hamming distance instead of the L2 norm, which is used in SIFT and SURF. Calculating a Hamming distance is very efficient [46]. ORB (Oriented FAST and Rotated BRIEF) [47] and BRISK (Binary Robust Invariant Scalable Keypoints) [48] take the process of feature description one step further by integrating corner detection based on binary decision trees with binary feature descriptors. The attached binary description reduces both the storage burden and the time cost of matching [27]. ORB, for example, is two orders of magnitude faster than SIFT while performing just as well in most situations [47].
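
The principle behind binary descriptors can be sketched in a few lines. The example below builds a BRIEF-like bit string from pairwise intensity comparisons and compares two descriptors with the Hamming distance. It is a toy illustration under stated assumptions: the 3x3 patches, the four hand-picked point pairs and the function names are hypothetical, whereas real descriptors smooth the patch first and use a few hundred sampled pairs.

```python
def binary_descriptor(patch, pairs):
    """Build a bit string (stored as an int): one bit per intensity comparison."""
    bits = 0
    for (r1, c1), (r2, c2) in pairs:
        bits = (bits << 1) | (1 if patch[r1][c1] < patch[r2][c2] else 0)
    return bits


def hamming_distance(d1, d2):
    """Number of differing bits: an XOR followed by a population count."""
    return bin(d1 ^ d2).count("1")


# Hypothetical sampling pattern and patches, chosen only to keep the example small.
PAIRS = [((0, 0), (2, 2)), ((0, 2), (2, 0)), ((1, 0), (1, 2)), ((0, 1), (2, 1))]
patch_a = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
patch_b = [[90, 20, 30], [40, 50, 60], [70, 80, 10]]   # two corner pixels swapped

desc_a = binary_descriptor(patch_a, PAIRS)
desc_b = binary_descriptor(patch_b, PAIRS)
distance = hamming_distance(desc_a, desc_b)
```

Because the comparison reduces to an XOR and a bit count, it avoids the floating-point arithmetic needed for the L2 norm.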

2.2.3 Stereo Matching

Once a set of corners, and their matching descriptors, have been found for each image, they need to be matched with the corners in other images. These matches can then later be used to calculate an estimation of the underlying fundamental matrix for that image-pair. Searching for nearest neighbour matches is the most computationally expensive part of many computer vision algorithms [49].

The solution to a matching problem can be found by using a brute-force matching algorithm. A brute-force matching algorithm works by comparing every feature from image I1 to every feature from image I2. Feature F1 in I1 is said to match feature F2 in I2 if the distance between their descriptors is minimal, i.e. if the descriptor for F2 is the closest to the descriptor for F1 according to some distance metric.

The distance metric used in matching algorithms is dependent on the feature descriptors that were used. The two main metrics used for the distance between features are the Hamming distance and the L2 distance. The Hamming distance uses only bit manipulations and is thus very fast but only usable with binary descriptors [50]. The distance between vector-based descriptors, like SIFT and SURF, can be calculated using the L2 distance, which is slower and doesn’t work for binary descriptors.

In order to increase the quality of matches, each match may need to pass some test before it can be validated. An often-used, strict match validity test is called cross-checking. When using cross-checking, it is not sufficient if the descriptor for F2 is the closest to the descriptor for F1; the reverse must also be true before a match can be validated. This validity test greatly reduces the probability of error [26].
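
Brute-force matching with optional cross-checking can be sketched as follows. This is a minimal illustration rather than an optimised implementation: descriptors are assumed to be plain tuples of numbers compared with the L2 distance, and all function names are hypothetical.

```python
import math


def l2(a, b):
    """Euclidean (L2) distance between two vector descriptors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def nearest(query, candidates, dist):
    """Index of the candidate descriptor closest to the query descriptor."""
    return min(range(len(candidates)), key=lambda j: dist(query, candidates[j]))


def brute_force_match(desc1, desc2, dist=l2, cross_check=True):
    """Match every descriptor in desc1 to its nearest neighbour in desc2."""
    matches = []
    for i, descriptor in enumerate(desc1):
        j = nearest(descriptor, desc2, dist)
        # Cross-check: the match must also be the best one in the reverse direction.
        if cross_check and nearest(desc2[j], desc1, dist) != i:
            continue
        matches.append((i, j))
    return matches


desc_left = [(0.0, 0.0), (10.0, 10.0), (1.0, 1.0)]
desc_right = [(0.2, 0.1), (9.0, 9.0)]
unchecked = brute_force_match(desc_left, desc_right, cross_check=False)
checked = brute_force_match(desc_left, desc_right, cross_check=True)
```

In this example the third left descriptor is closest to the first right descriptor, but that right descriptor prefers the first left descriptor, so the cross-check discards the ambiguous match.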

Another often-used match validity test is Lowe’s ratio test. Lowe’s ratio test compares the ratio of the distance between F1 and F2 to the distance between F1 and F′2, where F2 is the best match for F1 while F′2 is the second best match for F1. A match is only validated when this ratio falls below a pre-defined threshold, i.e. the best match is significantly closer than the second-best match. The reasoning behind Lowe’s ratio test is that for false matches, there will likely be a number of other false matches within similar distances given a high dimensional feature space [42].
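
A minimal sketch of the ratio test is given below. The threshold of 0.75 is an assumed, illustrative value rather than one prescribed by this dissertation, and the descriptors are again assumed to be plain tuples compared with the L2 distance.

```python
import math


def l2(a, b):
    """Euclidean (L2) distance between two vector descriptors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def ratio_test_match(desc1, desc2, dist=l2, ratio=0.75):
    """Keep a match only if the best distance is clearly below the second best."""
    matches = []
    for i, descriptor in enumerate(desc1):
        ranked = sorted((dist(descriptor, c), j) for j, c in enumerate(desc2))
        if len(ranked) < 2:
            continue                      # the test needs a second-best candidate
        (best, j), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches


desc_left = [(0.0, 0.0), (5.0, 5.0)]
desc_right = [(0.1, 0.0), (10.0, 10.0), (4.0, 5.0), (6.0, 5.0)]
accepted = ratio_test_match(desc_left, desc_right)
```

The first left descriptor has one clearly closest candidate and is kept. The second has two equally close candidates, so its ratio is 1 and the match is rejected as ambiguous.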

Since some features may have no matches, e.g. because they are occluded in one of the images, a threshold can be used for the "furthest distance" that is still considered a match to further reduce the number of false positives [51].

As brute-force algorithms need to compare every feature from image I1 to every feature from image I2, they are quadratic in the number of extracted features, which makes them impractical for most applications [51]. This high cost has generated an interest in heuristic algorithms that perform approximate nearest neighbour search. Heuristic algorithms sometimes return non-optimal neighbours but can be orders of magnitude faster than exact searches, while still providing near-optimal accuracy [49]. There exist hundreds of different approximate nearest neighbour search algorithms [51]. Initially, the most widely used algorithm for nearest-neighbour search was the kd-tree [52], which works well for exact nearest neighbour search in low-dimensional data, but quickly loses its effectiveness as dimensionality increases [49]. kd-trees work by dividing the multi-dimensional feature space along alternating axis-aligned hyperplanes, choosing the threshold along each axis so as to maximise some criterion [53]. Later, this algorithm was adapted by [54] to use multiple randomised kd-trees as a means to speed up approximate nearest-neighbour search, performing well over a wide range of problems [49]. The algorithm proposed by [54] using randomised kd-trees was further adapted by [49] into the FLANN matcher (Fast Library for Approximate Nearest Neighbours). The FLANN matching algorithm works by maintaining a single priority queue across all randomised kd-trees so that the search can be ordered by increasing distance to each bin boundary. The degree of approximation is determined by examining a fixed number of leaf nodes, at which point the search is terminated and the best candidates are returned [49].

2.2.4 The Fundamental Matrix

The fundamental matrix F is a homogeneous 3x3 matrix which defines the epipolar geometry between two different views of the same three-dimensional scene. The fundamental matrix was first described in [55] as a generalisation of the essential matrix first described a decade earlier in [56]. The essential matrix of an image-pair is dependent on the calibration of the cameras used to capture both images, while the fundamental matrix is completely independent of the cameras or scene structure [24]. The fundamental matrix is the only geometric information available from two uncalibrated images [26]; it can be computed from correspondences of image scene points alone, without requiring knowledge of the cameras’ internal parameters or relative pose [24]. Recovering the fundamental matrix is one of the most crucial steps of many computer vision algorithms [26].

It can be proven that the fundamental matrix F is only defined up to a scale factor [26]. Because scaling is not significant, the 3x3 matrix F has eight remaining independent ratios. However, it can also be proven that F satisfies the constraint

$$\det F = 0, \tag{2.1}$$

which means that the fundamental matrix F only has seven degrees of freedom [24]. That equation (2.1) holds true can also be seen intuitively in that F can map multiple points to the same epipolar line, which means there can exist no inverse mapping and thus F must not be invertible, which means that its determinant must be zero [24].

Given a pair of matching points x and x′, the fact that x′ lies on the epipolar line Fx means that

$$(x')^{T} F x = 0. \tag{2.2}$$

Since the matrix F only has seven degrees of freedom, it is possible to determine F by solving a set of linear equations of the form (2.2), combined with the constraint (2.1), given at least seven point matches [23].

As discussed earlier in section 2.2.1, the accuracy of the fundamental matrix estimate is a function of the number of correct correspondences attained between images [25]. Most algorithms rely on a minimum of eight such correspondences, such as Hartley’s original algorithm [55], [57]. There exist techniques that can estimate the epipolar geometry with as few as five point correspondences by exploiting additional knowledge about the camera locations and the projected scene [24], [58].
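
The linear estimation from eight or more correspondences can be sketched with NumPy as follows. Each correspondence contributes one row of the form (2.2), the stacked system is solved with a singular value decomposition, and the constraint (2.1) is enforced afterwards by zeroing the smallest singular value. This is a simplified sketch under stated assumptions: the coordinate normalisation step that is normally recommended for numerical stability is omitted for brevity, and the function name `eight_point` is hypothetical.

```python
import numpy as np


def eight_point(x_left, x_right):
    """Estimate F from N >= 8 matches given as (N, 2) arrays of image coordinates."""
    x_left = np.asarray(x_left, dtype=float)
    x_right = np.asarray(x_right, dtype=float)
    u, v = x_left[:, 0], x_left[:, 1]
    up, vp = x_right[:, 0], x_right[:, 1]

    # One row per match: the coefficients of the nine entries of F in (x')^T F x = 0.
    A = np.column_stack([up * u, up * v, up,
                         vp * u, vp * v, vp,
                         u, v, np.ones(len(u))])

    # The least-squares solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    F = vt[-1].reshape(3, 3)

    # Enforce det F = 0 by projecting F onto the nearest rank-2 matrix.
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    F = U @ np.diag(S) @ Vt
    return F / np.linalg.norm(F)      # F is only defined up to scale
```

With noise-free matches the recovered matrix satisfies (2.2) for every pair up to rounding error. With real, noisy matches it should be combined with one of the robust estimation methods described later in this section.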

Algorithms for estimating the fundamental matrix between two images have proven to be very sensitive to noise [26]. Noise is introduced by outliers in the initial correspondences. Outliers can be caused by two things:

• Bad locations. In the estimation of the fundamental matrix, the location error of a point of interest is assumed to exhibit Gaussian behaviour. This assumption is reasonable since the error in localisation for most points of interest is small (within one or two pixels), but a few points are possibly incorrectly localised (more than three pixels). These points will severely degrade the accuracy of the fundamental matrix estimation [26].

• False matches. In the establishment of correspondences, only heuristics have been used. Because the only geometric constraint, i.e. the epipolar constraint in terms of the fundamental matrix, is not yet available, many matches are possibly false. These will completely spoil the estimation process, and the final estimate of the fundamental matrix will be useless [26].

These outliers will severely affect the precision of the fundamental matrix estimation if we use them directly to calculate the estimation [26]. Additionally, if more correspondences are found than needed for a given algorithm, then they may not all be fully compatible with one projective transformation, and the best fitting transformation will have to be selected given the data [24]. Both detecting outliers and selecting the best fitting fundamental matrix estimation for all inliers can be done by finding the transformation that minimises some cost function. Two popular robust estimation methods that accomplish these goals are the Random Sample Consensus (RANSAC) method and the Least Median of Squares (LMedS) method.

Robust estimation methods

RANSAC (Random Sample Consensus) is a resampling technique that generates candidate solutions for a problem by using subsets of the minimum number of data points required to estimate the underlying model parameters [59]. This minimum number of correspondences depends on the calculation method used, so when using a 7-point fundamental matrix calculation algorithm, for example, subsets of seven correspondences are used. The RANSAC technique randomly selects a number of these minimal subsets of point correspondences, determines the fundamental matrix for each subset, and then selects the fundamental matrix that is most consistent with the entire set of point correspondences [60]. Unlike conventional sampling techniques that use as much of the data as possible to obtain an initial solution and then proceed to prune outliers, RANSAC uses the smallest set possible and proceeds to enlarge this set with consistent data points [59], [61]. Using this resampling technique, a RANSAC algorithm can both find a solution that agrees with as many data points as possible and remove all outliers at the same time.

LMedS (Least Median of Squares) is a resampling technique similar to RANSAC except for the way in which it determines the best solution. LMedS evaluates the solution of each subset in terms of the median of the squared errors of the entire data set. Errors are quantified by the distance of a point to its corresponding epipolar line [26]. An LMedS algorithm chooses the solution which minimises this median error of the symmetric epipolar distances of the data set [62].

Both RANSAC and LMedS perform badly when the proportion of outliers exceeds 50%, so finding matches of a good quality is still important when using a resampling technique [63].
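
The difference between the two scoring rules can be sketched on a deliberately simple stand-in problem. The example below fits a 2D line instead of a fundamental matrix, so the minimal subset is two points rather than seven or eight correspondences; this substitution, the function names, the iteration count and the inlier threshold are all assumptions made to keep the sketch short. The sampling loop itself is the same in both methods.

```python
import math
import random
import statistics


def line_through(p, q):
    """Normalised line (a, b, c) with a*x + b*y + c = 0, or None if p and q coincide."""
    a, b = p[1] - q[1], q[0] - p[0]
    norm = math.hypot(a, b)
    if norm == 0:
        return None
    c = p[0] * q[1] - q[0] * p[1]
    return a / norm, b / norm, c / norm


def residual(line, p):
    """Perpendicular distance from point p to the normalised line."""
    a, b, c = line
    return abs(a * p[0] + b * p[1] + c)


def robust_fit(points, method="ransac", iterations=200, threshold=0.5, seed=0):
    """Fit a line to points containing outliers using RANSAC or LMedS scoring."""
    rng = random.Random(seed)
    best_line, best_score = None, None
    for _ in range(iterations):
        line = line_through(*rng.sample(points, 2))    # minimal subset for a line
        if line is None:
            continue
        errors = [residual(line, p) for p in points]
        if method == "ransac":
            score = -sum(e < threshold for e in errors)          # more inliers is better
        else:
            score = statistics.median([e * e for e in errors])   # smaller median is better
        if best_score is None or score < best_score:
            best_line, best_score = line, score
    return best_line


# Ten points on y = 2x + 1 plus four gross outliers.
points = [(i, 2 * i + 1) for i in range(10)] + [(1, 10), (3, -5), (6, 30), (8, 0)]
ransac_line = robust_fit(points, method="ransac")
lmeds_line = robust_fit(points, method="lmeds")
```

Note that RANSAC needs an explicit inlier threshold, whereas LMedS does not; in exchange, the median is only meaningful while fewer than half of the points are outliers.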

Quality metrics

There exist multiple error criteria that can be used as quality metrics for the estimation of a fundamental matrix. The most widely used error criteria are the reprojection error, the symmetric epipolar distance, and the Sampson distance [64].

• The reprojection error is regarded as the gold standard criterion. The reprojection error for corresponding points x and x′ is defined as the minimum distance needed to bring the 4-dimensional vector (x, x′) into perfect correspondence with the hyper-surface implicitly defined by equation (2.2) [24], [64]. The reprojection error can be calculated using Hartley-Sturm triangulation, which involves finding the roots of a polynomial of degree 6, making the reprojection error expensive to compute [64], [65].

• The symmetric epipolar distance is an error criterion that measures the geometric distance of each point to the epipolar line of its corresponding point. This is the most widely used error criterion because it is intuitive and easy to compute [64]. However, it was proven in [64] that the symmetric epipolar distance error provides a biased estimate of the gold standard criterion, the reprojection error.

• The Sampson distance provides a first-order approximation of the reprojection error. The Sampson error for corresponding points x and x′ is defined as the distance between the 4-dimensional vector (x, x′) and its Sampson correction. The Sampson error provides a good estimation for the reprojection error for relatively small errors, i.e. reprojection errors smaller than 100 pixels [64].
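
The two cheaper criteria can be sketched as follows, using the closed forms in which they are commonly written. Both functions return squared quantities, and both are built from the algebraic residual (x′)ᵀFx of equation (2.2). The function names are hypothetical, and the fundamental matrix in the example is the assumed matrix of an ideal rectified pair, chosen because the results are easy to verify by hand.

```python
def mat_vec(M, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(M):
    """Transpose of a 3x3 matrix."""
    return [[M[j][i] for j in range(3)] for i in range(3)]


def squared_symmetric_epipolar_distance(F, x, xp):
    """Sum of the squared distances of x' to the line F x and of x to the line F^T x'."""
    Fx, Ftxp = mat_vec(F, x), mat_vec(transpose(F), xp)
    r2 = sum(xp[i] * Fx[i] for i in range(3)) ** 2
    return r2 * (1.0 / (Fx[0] ** 2 + Fx[1] ** 2) + 1.0 / (Ftxp[0] ** 2 + Ftxp[1] ** 2))


def squared_sampson_distance(F, x, xp):
    """First-order approximation of the squared reprojection error."""
    Fx, Ftxp = mat_vec(F, x), mat_vec(transpose(F), xp)
    r2 = sum(xp[i] * Fx[i] for i in range(3)) ** 2
    return r2 / (Fx[0] ** 2 + Fx[1] ** 2 + Ftxp[0] ** 2 + Ftxp[1] ** 2)


# Assumed example: ideal rectified pair, match displaced by two rows.
F_RECTIFIED = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
x, xp = (30.0, 10.0, 1.0), (25.0, 12.0, 1.0)
symmetric = squared_symmetric_epipolar_distance(F_RECTIFIED, x, xp)
sampson = squared_sampson_distance(F_RECTIFIED, x, xp)
```

In this example each point lies two pixels from the epipolar line of its partner, so the symmetric measure is 2² + 2² = 8, while the Sampson measure is 2, which corresponds to moving each of the two points one pixel towards the other's row.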

2.2.5 Projective Rectification

Once the epipolar geometry has been recovered through the fundamental matrix, it can be used to calculate a projective transformation H that maps the epipole of an image to a point at infinity [24]. The transformation matrix for this rectification transformation is different for both views of an image-pair. Generally, H is used as the name of the rectification matrix for the left view, while H′ is used as the name of the rectification matrix for the right view. By mapping the epipoles to a point at infinity, the transformations H and H′ will transform a pair of images so that all corresponding epipolar lines become horizontal and at the same height [23], [24].

The projective transformations introduce distortions in the rectified images, more specifically skewness and aspect/scale distortions. The rectification transformations must be chosen uniquely to minimise distortions and to maintain as accurately as possible the structure of the original images. Having as few distortions in the rectified images as possible helps during subsequent stages, such as the matching needed to calculate the disparity map, by ensuring local areas are not unnecessarily warped. Since the rectification transformation is completely defined by the fundamental matrix, the rectification quality is directly related to the quality of the fundamental matrix [66].

The distortions introduced in the rectified images can be quantified and used as metrics for the quality of the rectification transformation, and, since the rectification transformation is completely defined by the fundamental matrix, also as quality metrics for the fundamental matrix estimation [66]:

• The orthogonality of the rectification transformation is a measure for the skewness distortion introduced by the rectification. The orthogonality should ideally be 90°, and can be calculated as follows [66]:

1. Specify four points $a = (w/2, 0, 1)^T$, $b = (w, h/2, 1)^T$, $c = (w/2, h, 1)^T$, and $d = (0, h/2, 1)^T$, with $w$ and $h$ the width and height of the original image.

2. Transform the points $a$, $b$, $c$, and $d$ according to the rectification transformation $H$: $\hat{a} = Ha$, $\hat{b} = Hb$, $\hat{c} = Hc$, and $\hat{d} = Hd$.

3. Define $x = \hat{b} - \hat{d}$ and $y = \hat{c} - \hat{a}$.

4. The orthogonality of the rectification is given by the angle between $x$ and $y$: $E_o = \cos^{-1}\left(\frac{x \cdot y}{|x||y|}\right)$.

• The aspect ratio of the rectification transformation is a measure for the aspect/scale distortion introduced by the rectification. The aspect ratio should ideally be 1, and can be calculated as follows [66]:

1. Specify the four corner points $a = (0, 0, 1)^T$, $b = (w, 0, 1)^T$, $c = (w, h, 1)^T$, and $d = (0, h, 1)^T$ with w and h the width and height of the original image.

2. Transform the points a, b, c, and d according to the rectification transformation H: $\hat{a} = Ha$, $\hat{b} = Hb$, $\hat{c} = Hc$, and $\hat{d} = Hd$.

3. Define $x = \hat{b} - \hat{d}$ and $y = \hat{c} - \hat{a}$.

4. The aspect ratio of the rectification is given by: $E_a = \sqrt{\frac{x^T x}{y^T y}}$.
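The two metrics above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the prototype: the function names are hypothetical, H is assumed to be a 3×3 matrix given as nested lists, and the transformed points are assumed to be divided by their homogeneous coordinate before the differences are taken.

```python
import math

def apply_homography(H, point):
    """Apply a 3x3 homography to a 2D point and dehomogenise the result (assumption)."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    s = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / s, v / s)

def _diagonals(H, points):
    """Transform a, b, c, d and return x = b - d and y = c - a."""
    a, b, c, d = (apply_homography(H, p) for p in points)
    return (b[0] - d[0], b[1] - d[1]), (c[0] - a[0], c[1] - a[1])

def orthogonality(H, w, h):
    """Angle in degrees between the transformed edge-midpoint axes (ideally 90)."""
    x, y = _diagonals(H, [(w / 2, 0), (w, h / 2), (w / 2, h), (0, h / 2)])
    cos_angle = (x[0] * y[0] + x[1] * y[1]) / (math.hypot(*x) * math.hypot(*y))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

def aspect_ratio(H, w, h):
    """Ratio of the transformed corner diagonals (ideally 1)."""
    x, y = _diagonals(H, [(0, 0), (w, 0), (w, h), (0, h)])
    return math.sqrt((x[0] ** 2 + x[1] ** 2) / (y[0] ** 2 + y[1] ** 2))

IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
SHEAR = [[1, 0.5, 0], [0, 1, 0], [0, 0, 1]]  # hypothetical skewing transformation
```

For an undistorting transformation such as `IDENTITY` both metrics take their ideal values, while `SHEAR` moves the orthogonality away from 90°.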

Since all points map to a horizontal epipolar line at the same height in the other image, corresponding scene points in both images will be located close to each other after rectification. Any remaining disparity between matching points will be along the horizontal epipolar line, which greatly simplifies the calculations needed to retrieve the disparity map between both images [23], [24].

2.2.6 Disparity Map

After transforming a pair of images by the appropriate projective rectification transformation, the original situation is reduced to the epipolar geometry produced by a pair of identical cameras placed side by side with their principal axes parallel. Because of this rectification transformation, the search for matching points is vastly simplified by the simple epipolar structure and the near-correspondence of the two images [24]. This allows a disparity map to be calculated between both images. A disparity map represents the distance between corresponding pixels that are horizontally shifted between the left image and right image [67]. Figure 2.7 shows one of the ground truth disparity maps that is part of the Tsukuba dataset.


Figure 2.7: One of the disparity maps from the Tsukuba dataset [68].

In general, stereo vision disparity map algorithms can be classified into two categories based on their approach to computing their disparity values:

• Algorithms using a local approach, also known as area-based or window-based approaches. In these algorithms, the disparity computation at a given point depends only on the intensity values within a predefined support window. Thus, these methods consider only local information and as such have a lower computational complexity and a shorter run time. For each pixel in the image, the corresponding disparity value with the minimum cost is assigned. The matching cost is aggregated via a sum or an average over the support window [67].

• Algorithms using a global approach treat disparity calculations as a problem of minimising a global energy function for all disparity values at once. These global energy functions usually consist of two terms: a data term, which penalises solutions that are inconsistent with the target data, and a smoothness term, which enforces the piecewise smoothness assumption with neighbouring pixels. This smoothness term is designed to retain smoothness in disparity among pixels in the same region. Global methods produce good results but are computationally expensive and thus have a longer run time [67].
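The local approach can be illustrated with a small window-based matcher. The sketch below is only an assumption-laden example: it uses a sum of absolute differences as matching cost, assumes a rectified pair in which a pixel at column x in the left image appears at column x − d in the right image, and clamps the support window at the image borders. The function name and parameters are hypothetical.

```python
def block_match_row(left, right, row, half_window, max_disparity):
    """Winner-takes-all disparities for one image row using a SAD support window.

    `left` and `right` are rectified greyscale images stored as lists of rows.
    """
    height, width = len(left), len(left[0])
    disparities = [0] * width
    for x in range(width):
        best_cost, best_d = None, 0
        for d in range(max_disparity + 1):
            if x - d < 0:
                break  # the candidate match would fall outside the right image
            cost = 0
            # Aggregate the matching cost as a sum over the support window.
            for dy in range(-half_window, half_window + 1):
                yy = min(max(row + dy, 0), height - 1)
                for dx in range(-half_window, half_window + 1):
                    xl = min(max(x + dx, 0), width - 1)
                    xr = min(max(x - d + dx, 0), width - 1)
                    cost += abs(left[yy][xl] - right[yy][xr])
            if best_cost is None or cost < best_cost:
                best_cost, best_d = cost, d
        disparities[x] = best_d
    return disparities

# Synthetic example: the left image is the right image shifted by two pixels.
right_row = [(i * 37) % 101 for i in range(20)]
left_row = [right_row[x - 2] if x >= 2 else 0 for x in range(20)]
left_image = [left_row] * 3
right_image = [right_row] * 3
row_disparities = block_match_row(left_image, right_image, 1, 1, 4)
```

Away from the image borders, the matcher recovers the two-pixel shift of the synthetic pair. On sparse line drawings such as model sheets, large empty regions would yield many equal-cost candidates, which is one reason a purely local matcher can struggle there.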

In [69], Hirschmüller describes a method he calls Semiglobal Matching, which uses a mix between a local and a global approach. Hirschmüller's Semiglobal Matching algorithm uses a pixelwise, mutual information-based matching cost for compensating differences of input images. Instead of minimising a true global cost function, as a global approach would do, Semiglobal Matching performs a fast approximation of a global cost function by using pathwise optimisations in all directions starting with the pixel being evaluated. Hirschmüller states that the number of paths used to approximate the global cost function should at least be eight, but suggests using 16 paths for providing good coverage of a 2D image [69].
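The pathwise optimisation can be illustrated with the commonly cited path cost recurrence for Semiglobal Matching, in which a small penalty P1 is added for disparity changes of one level and a larger penalty P2 for bigger jumps. The sketch below is a simplification under stated assumptions: it aggregates along only two paths on a single scanline instead of 8 or 16 paths across the image, it takes precomputed matching costs as input instead of a mutual information-based cost, and the penalty values and toy costs are purely illustrative.

```python
def aggregate_path(costs, p1, p2):
    """Aggregate costs[x][d] along one path, from the first pixel to the last."""
    aggregated = [list(costs[0])]
    for x in range(1, len(costs)):
        prev = aggregated[-1]
        prev_min = min(prev)
        row = []
        for d, cost in enumerate(costs[x]):
            candidates = [prev[d], prev_min + p2]  # same disparity, or a large jump
            if d > 0:
                candidates.append(prev[d - 1] + p1)  # change of one level
            if d + 1 < len(prev):
                candidates.append(prev[d + 1] + p1)
            # Subtracting prev_min keeps the aggregated values bounded.
            row.append(cost + min(candidates) - prev_min)
        aggregated.append(row)
    return aggregated

def semiglobal_scanline(costs, p1, p2):
    """Sum a forward and a backward path and pick the cheapest disparity per pixel."""
    forward = aggregate_path(costs, p1, p2)
    backward = aggregate_path(costs[::-1], p1, p2)[::-1]
    disparities = []
    for f_row, b_row in zip(forward, backward):
        total = [f + b for f, b in zip(f_row, b_row)]
        disparities.append(total.index(min(total)))
    return disparities

# Toy costs for five pixels and three disparity levels; pixel 2 is ambiguous.
toy_costs = [[5, 0, 5], [5, 0, 5], [2, 3, 5], [5, 0, 5], [5, 0, 5]]
raw_choice = [row.index(min(row)) for row in toy_costs]
smoothed = semiglobal_scanline(toy_costs, p1=2, p2=6)
```

In this toy example the raw per-pixel minimum picks a different disparity at the ambiguous pixel, whereas the aggregated costs keep it consistent with its neighbours.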

Once a disparity value is calculated for every pixel in an image, a refinement pass can be done over the entire disparity map. The purpose of the refinement pass is to reduce noise and improve the quality of the disparity map. Typically, the refinement step consists of regularisation and occlusion filling or interpolation. The overall noise is reduced through filtering out inconsistent pixels and small variations among pixels in the disparity map. In areas in which the disparity is unclear, occlusion filling or interpolation is used. Occluded regions are typically filled in with disparities similar to those of the background or textureless areas. Occluded regions are detected by using left-right consistency checks. If the matching algorithm rejects disparities with low confidence, then an interpolation algorithm can estimate approximations to the correct disparities based on their local neighbourhoods [67].
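A left-right consistency check followed by a simple occlusion fill could look as follows. This is a sketch for a single scanline under assumptions that are not taken from [67]: a left pixel at column x is assumed to correspond to column x − d in the right image, the tolerance of one disparity level is arbitrary, and invalid pixels are filled with the smaller of the nearest valid disparities to mimic filling with background values.

```python
def left_right_check(disp_left, disp_right, tolerance=1):
    """Mark left disparities as None when the right disparity map disagrees."""
    checked = []
    for x, d in enumerate(disp_left):
        xr = x - d  # column of the matching pixel in the right image
        if 0 <= xr < len(disp_right) and abs(d - disp_right[xr]) <= tolerance:
            checked.append(d)
        else:
            checked.append(None)
    return checked

def fill_occlusions(row):
    """Fill None entries with the smaller nearest valid disparity (background)."""
    filled = list(row)
    for x, d in enumerate(row):
        if d is None:
            left = next((row[i] for i in range(x - 1, -1, -1) if row[i] is not None), None)
            right = next((row[i] for i in range(x + 1, len(row)) if row[i] is not None), None)
            neighbours = [v for v in (left, right) if v is not None]
            filled[x] = min(neighbours) if neighbours else 0
    return filled

example_left = [1, 1, 1, 1, 3, 1, 1, 1]   # one outlier at column 4
example_right = [1] * 8
checked_row = left_right_check(example_left, example_right)
filled_row = fill_occlusions(checked_row)
```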

The disparity value for a pixel is inversely proportional to its depth value [70]. Disparity values can thus be associated with depth values through three-dimensional projection [67]. A disparity map can therefore be transformed into a depth map of the original scene, which can be translated directly into a point cloud. The reconstructed scene, however, is determined by its images only up to a similarity transformation. The rotation, translation, and scaling of the scene as a whole relative to the world cannot be determined by its images only [24].
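For a rectified pair of perspective cameras, the inverse relation is commonly written as Z = f·B/d, with f the focal length in pixels, B the baseline between the cameras and d the disparity. The sketch below uses this standard relation together with a pinhole back-projection to turn one row of disparities into 3D points. The function names, the principal point (cx, cy) and all numeric values are assumptions for illustration. When f and B are unknown, any positive values give a reconstruction that is correct only up to scale, in line with the similarity ambiguity mentioned above.

```python
def disparity_to_depth(disparity, focal_length_px, baseline):
    """Depth from disparity for a rectified perspective pair; None if undefined."""
    if disparity <= 0:
        return None  # zero disparity corresponds to a point at infinity
    return focal_length_px * baseline / disparity

def disparity_row_to_points(disparities, v, focal_length_px, baseline, cx, cy):
    """Back-project one image row (at height v) of disparities to 3D points."""
    points = []
    for u, d in enumerate(disparities):
        z = disparity_to_depth(d, focal_length_px, baseline)
        if z is None:
            continue
        x = (u - cx) * z / focal_length_px
        y = (v - cy) * z / focal_length_px
        points.append((x, y, z))
    return points

# Hypothetical values: f = 500 px, B = 0.5 units, principal point at (1, 0).
row_points = disparity_row_to_points([0, 10], 0, 500, 0.5, 1, 0)
```

Doubling the disparity halves the computed depth, which is the inverse proportionality stated in the text.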


Chapter 3

Methodology

The idea behind the proposed method is to draw as much as possible upon existing multi-view computer vision methods. However, there are three main differences between the proposed method and more classical multi-view computer vision methods.

Firstly, the different model sheet views are formed by rotating the subject around its centre, while most classical computer vision problems form their different views by moving the camera in front of the subject. The different model sheet views could also be interpreted as a camera rotation around the subject at a set distance, while the more common, classical problem could be interpreted as a camera translation at a set distance, although this translation is sometimes combined with a slight rotation.

Secondly, existing computer vision methods use a lot of texture information. Model sheets are usually black-and-white line drawings, so they don't contain texture information. Not only do they lack texture information, model sheets are also very sparse when compared to other types of images. Model sheets consist of a lot of large, empty regions, which makes the feature detection step a lot more difficult as there are fewer features to detect. This can be clearly seen in figure 3.1, which contains both images from a famous computer vision dataset as well as a typical model sheet.

Lastly, model sheets are drawn using an orthographic projection, while computer vision methods often use perspective projections, as that is how both the human visual system and real cameras work. This will make some parts of the proposed method a bit easier, as there is no distortion in the model sheets caused by a perspective projection, but it will also make other parts trickier, as a lot of existing computer vision algorithms work with perspective projections.


(a) A more classical computer vision dataset (the Tsukuba University Dataset [68]).

(b) Model sheets used in the production of the movie Atlantis: The Lost Empire. © 2001 The Walt Disney Company.

Figure 3.1: A comparison between more classical computer vision datasets and model sheets


3.1 Overview

Figure 3.2 shows an overview of the method proposed in this dissertation. The proposed method requires at least three different model sheet views of the same model, all at different angles, but these views do not necessarily need to be equiangular. Pairs are created from these model sheet views, each pair containing two adjacent angles. For each of those image-pairs, the underlying epipolar geometry is recovered through the fundamental matrix and used to reconstruct a disparity map for each view in that pair. After this, all disparity maps for the same view that were generated in different image-pairs are combined to get a better disparity map for that view. This disparity map is then converted to a 3D point cloud. If desired, the final point clouds could then be merged together and a convex hull could be generated for the combined point cloud to get a full 3D object.
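The pairing step can be sketched as follows. This is a hypothetical helper, not code from the prototype: views are identified by their angle in degrees, sorted, and each view is paired with its neighbour, so that every view except the first and last takes part in two image-pairs.

```python
def adjacent_pairs(view_angles):
    """Pair each model sheet view with the next one when sorted by angle."""
    if len(view_angles) < 3:
        raise ValueError("the method requires at least three model sheet views")
    ordered = sorted(view_angles)
    return list(zip(ordered, ordered[1:]))

def pairs_per_view(pairs):
    """Map every view to the image-pairs it takes part in."""
    membership = {}
    for pair in pairs:
        for view in pair:
            membership.setdefault(view, []).append(pair)
    return membership

pairs = adjacent_pairs([0, 45, 20])  # views need not be equiangular
membership = pairs_per_view(pairs)
```

A view that appears in two pairs obtains two disparity maps, which are the ones that get combined in the later step.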

This chapter will go through each of these steps, starting with the model sheet requirements, and explain how each step works and what data is passed between the different steps. Finally, an overview will be given of the different frameworks and software that were used while building a prototype implementing the proposed method.

3.2 Model Sheets

In order to be able to evaluate the proposed method, it would be useful to generate custom model sheets. When using generated model sheets, the reconstructed 3D model can be compared to the original 3D model used to generate the model sheets in order to evaluate the proposed method.

[Figure 3.2 diagram. Components: Model Sheets, Corner Detection and Matching, Epipolar Geometry, Image Rectification, Disparity Map, Point Cloud, Convex Hull, and two Quality metrics blocks. Data passed between components: model sheets, corner matches per image-pair, fundamental matrices per image-pair, rectified model sheets, disparity maps per model sheet, point cloud.]

Figure 3.2: The different components of the proposed method as well as the input and output at each step, and the feedback loops that can be used to optimise an implementation

The model sheets used in this thesis were generated by rendering 3D objects with the "Freestyle" function in Blender 2.79's Cycles renderer. A black Freestyle line with an absolute thickness of 1.5 pixels and a crease angle left at the default 134.43° was combined with a completely white object material with its emission strength set to 1, so the 3D model's faces would be uniformly white, mimicking the typical black-and-white line-art style of real model sheet drawings. Since model sheets are drawn without perspective [71], an orthographic camera was used with scale 7.3, placed at a horizontal (XY) distance of 8 units from the centre of the model. The camera is always rotated to look straight at the centre of the model. The camera's near and far clipping planes were left at 0.1 and 100.0, the default Blender values. The renderer and camera settings were chosen by visually comparing the renders to actual model sheets, and by trying to make them as visually similar as possible. The model sheet views are rendered with a colour depth of 8 bits, no compression, and at a resolution of 1920 by 1080 pixels, but are scaled down by 50% after rendering, so the final images have a resolution of 960 by 540 pixels. The images are scaled down after rendering to reduce memory requirements during execution of the method prototype and to reduce the effect of aliasing in the final images. Model sheet renders were generated for a set of simple 3D objects with the camera rotating around the models in angles from 0 to 90 degrees with increments of 5 degrees. As illustrated in figure 3.3, the model sheets generated in this manner visually resemble actual model sheets.
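The camera placement described above can be sketched without any Blender-specific code. The helper below only computes the horizontal camera positions on a circle of radius 8 around the model centre for angles from 0 to 90 degrees in steps of 5 degrees. The function name is hypothetical, and the choice of placing the 0 degree view on the positive X axis is an assumption.

```python
import math

def camera_positions(radius=8.0, start_deg=0, stop_deg=90, step_deg=5):
    """Return (angle, x, y) tuples for a camera circling the model centre."""
    positions = []
    for angle in range(start_deg, stop_deg + 1, step_deg):
        theta = math.radians(angle)
        # Assumption: angle 0 lies on the +X axis, rotation is counter-clockwise.
        positions.append((angle, radius * math.cos(theta), radius * math.sin(theta)))
    return positions

views = camera_positions()

# Rendering at 1920 by 1080 and scaling down by 50% gives the final resolution.
final_resolution = (1920 // 2, 1080 // 2)
```

With these settings the sweep yields 19 views, each at a horizontal distance of 8 units from the centre.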

Figure 3.3: Blender model sheet-like renders for a very basic 3D character.

In order to be able to compare generated model sheets to real model sheets, a model sheet resembling the one in figure 1.2 was recreated using this method. The generated model sheet can be seen in figure 3.4 and uses a slightly different, but similar, model to the model sheets in figure 1.2. In order to make the comparison a bit easier, the generated model sheet uses two different colours, similar to those in figure 1.2, instead of the pure black-and-white model sheets that will be used in the remainder of this thesis. By comparing figures 1.2 and 3.4, it is clear that the hand-drawn model sheets contain more details and, in this case, also some slight shading, which is missing from the generated model sheets. The shading and colouring in figure 1.2 are not present in most model sheets, though, as can be seen in figures 1.1a and 3.1b.

Figure 3.4: A recreation of the model sheets from figure 1.2, albeit using a slightly different mesh.


For each model sheet view, the corresponding ground-truth disparity map was rendered with the same camera settings, the same camera position, and at the same resolution. Since the generated model views use orthographic projections, and since the projected disparity is inversely proportional to the depth of the 3D model (see section 2.2.6), disparity maps can be generated by inverting depth maps of the models. The disparity map was generated by using Blender's compositing tools to map the normalised depth of the model to a linear white-to-black gradient, as can be seen in figure 3.6. To reduce visual artefacts in the depth map caused by limitations of Blender's compositing tools, an alpha scissor was used to remove all pixels with an alpha value below 0.9. The colour profile for the depth map renders was set to "None" instead of Blender's default sRGB profile, to make the depth map image linear in its grayscale value instead of logarithmic. One of the resulting ground-truth depth maps can be found in figure 3.5.
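The mapping from depth to a white-to-black gradient, combined with the alpha cut-off, can be mimicked per pixel as shown below. This is a sketch of the idea only and does not use Blender's compositor: the function name is hypothetical, the depth range used for normalisation (`near`, `far`) is an assumed input, and an 8-bit grey value is assumed for the output.

```python
def depth_to_disparity_grey(depth, near, far, alpha=1.0, alpha_threshold=0.9):
    """Map a depth value to an 8-bit grey level: near is white, far is black.

    Returns None for pixels removed by the alpha cut-off.
    """
    if alpha < alpha_threshold:
        return None  # pixel discarded, as with the alpha scissor
    normalised = (depth - near) / (far - near)
    normalised = max(0.0, min(1.0, normalised))  # clamp to the valid range
    # Inverting the normalised depth gives a linear white-to-black gradient.
    return round(255 * (1.0 - normalised))

# Hypothetical depth range of 6 to 10 units from the camera.
nearest = depth_to_disparity_grey(6.0, 6.0, 10.0)
farthest = depth_to_disparity_grey(10.0, 6.0, 10.0)
discarded = depth_to_disparity_grey(8.0, 6.0, 10.0, alpha=0.5)
```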

Figure 3.5: One of the ground-truth depth maps rendered with Blender 2.79.

Hand-drawn model sheets will need to be cleaned up before they can be used in the proposed method. They should no longer contain pencil markings and measurements such as those in figure 3.1b. The subject should be in the exact same pose and should have the exact same expression in each view, which isn't always the case in model sheets used in stylistically animated movies. Inconsistent regions in one of the model sheet views can throw off the epipolar geometry and the disparity map stages of the proposed method.