File clustering using naming conventions for legacy systems

Transcript of File clustering using naming conventions for legacy systems

File Clustering Using Naming Conventions for Legacy Systems�

Nicolas Anquetil and Timothy Lethbridge

School of Information Technology and Engineering (SITE)150 Louis Pasteur, University of Ottawa

Canadafanquetil,[email protected]

Abstract

Decomposing complex software

systems into conceptually indepen-

dent subsystems represents a signi�-

cant software engineering activity that

receives considerable research atten-

tion. Most of the research in this do-

main deals with the source code; try-

ing to cluster together �les which are

conceptually related. In this paper we

propose using a more informal source

of information: �le names.

We present an experiment which

shows that �le naming convention is

the best �le clustering criteria for the

software system we are studying.

Based on the experiment results,

we also sketch a method to build a con-

ceptual browser on a software system.

Introduction

Maintaining legacy software systems is a prob-lem which many companies face. To help soft-ware engineers in this task, researchers are try-ing to provide tools to help extract the designstructure of the software system using whateversource of information is available. Clustering�les into subsystems is considered an impor-tant part of this activity. It allows the softwareengineers to concentrate on the part of the sys-tem they are interested in, it provides a highlevel view of the system, it allows to relate thecode to application domain concepts.

�

This work is supported by NSERC and Mitel Corporatineering Research (CSER). The IBM contact for CSER is Pa

1

Our research is part of a project that aimsto develop tools to help software engineers moree�ectively maintain software. An important re-quirement of this project is that we try to workin close relationship with the people actuallymaintaining software in order to uncover ideasthat will be readily applicable in the industry.

Much of the research in the �le clusteringdomain deals with the source code; trying toextract design concepts from it. However, westudied a legacy software system, and noticedthat software engineers were organizing �les ac-cording to some �le naming convention. In thispaper we propose to use this more informalsource of information to extract subsystems.

To set the context of this research, we will�rst give an overview of the project we are en-gaged in.

In a second stage, we will review some clus-tering criterion we have identi�ed in the liter-ature. We will compare them to the one wechose: �le naming convention.

Part of the comparison is based on experi-ments we conducted with our particular legacysystem. We will present the experiments anddiscuss their results.

Finally we will sketch a method to build aconceptual browser that uses �le naming con-vention as a �le clustering criterion.

on and sponsored by the Consortium for Software Engi-

trick Finnigan.

1 The project

\Does the method scale up?" is a recurringquestion about software reverse-engineering re-search. This is also true in the �le clusteringdomain. A key aspect of our project is to dealwith an actual software system in a real worldcompany, to ensure that scaling up is not aproblem.

Our goal is to help software engineers tomaintain a legacy telecommunication system.As is the rule in this �eld, we face many di�-culties:

� It is a very large system (' 3500 �les, 1.5million lines of code).

� It is an old system (over 15 years old).

� The code has undergone many modi�ca-tions and still evolves.

� The software is a main revenue source forthe company and therefore is of the ut-most importance.

� Nobody has a global understanding of thesystem (mainly because of its size).

� The software engineers are very busy andcannot be distracted from their mainte-nance task for extended periods of time.

A project goal is to design an environmentthat will help software engineers to browse thecode, �nd closely related �les or understandspeci�c parts of the system.

The experiment reported in this paper ismainly concerned with �le clustering, an activ-ity which consists of breaking a large set of �les(the entire software system) down into coher-ent subsets. These subsets are called subsys-tems. Identifying subsystems is an importantpart of the software design process. In reverse-engineering, being able to identify subsystemsis a necessary step towards design recovery.

File clustering is a popular research domain;however the conditions associated with thisproject create a set of particular constraints:

� Because the system is large, we cannotengage in lengthy analyses of source code.

� Because the software engineers are busypeople, we cannot a�ord to disturb themtoo often.

2

� Because we are dealing with a real worldsystem, we must cope with the usual di�-culties associated to real world problems(e.g. size, noise in the data).

On the other hand, because we aim at build-ing a browser, the precision of the generatedsubsystems is not as critical as for other do-mains like re-engineering where a key require-ment is precise preservation of semantics.

2 Criteria for �le clustering

When dealing with legacy system, one usuallymakes strong assumptions about the existingconstraints:

� external documentation is nonexistent,

� comments are obsolete and misguiding,

� software design information is not avail-able.

Given this, there are two possible ap-proaches to subsystem discovery (see for exam-ple [13]):

The top-down approach consists of analyz-ing the domain to discover its conceptsand then trying to match part of the codewith these concepts.

The bottom-up approach consists of ana-lyzing the code and trying to cluster theparts that pertain to the same concepts.

It is generally admitted that a successful so-lution should use both approaches. Howeverthe top-down one is very di�cult. It is costlybecause it implies \extracting" domain knowl-edge from experts during long interviews. It isalso domain speci�c which limits the potentialfor reuse.

We propose a bottom-up approach thatcould help in building a model of the appli-cation domain (usual outcome of the top-downapproach).

Considering the constraints listed above,the natural way to perform the bottom-up ap-proach is to look at the very material softwareengineers deal with every day: the source code.

However, we propose to use another sourceof information: �le names. We shall explainwhy we made this choice and discuss its poten-tial advantages and drawbacks.

We will also compare it to other �le cluster-ing criteria, including source code.

2.1 Relevance of the �le name cri-

terion

One of the primary goals of our project is tobridge the gap between academic research andindustrial needs. As such, before trying to ex-tract subsystems, we wanted to get a betteridea what the software engineers considered asubsystem. We asked them to give us examplesof subsystems they were familiar with. Foursoftware engineers provided us with 10 subsys-tems covering 140 �les. The experience of thesoftware engineers with the system ranges froma few months to several years.

Studying each subsystem, it was obviousthat their members displayed a strong simi-larity among their names. For each subsys-tem, concept names or concept abbreviationslike \q2000" (the name of a particular subsys-tem), \list" (in a list manager subsystem) or\cp" (for \call processing") could be found inall the �le names.

We were led to conclude that the companyis using some kind of �le naming convention tomark the subsystems.

This conclusion goes against the commonassumption that source code is the only rel-evant source of information. Because severalsoftware engineers contributed to this smallsampling, it seems very likely that this prop-erty applies to the whole system.

We thus decided to experiment with �lenames as our �le clustering criterion.

One can make several objections to thischoice:

1. If �le names are actually marking subsys-tems in the software we are studying, isthere any chance to generalize the resultsto other systems?

2. If the subsystems are marked using �lenames, they must be well known. There-fore, why should we need a tool to extractthem?

3

3. If a �le name is representative of its orig-inal subsystem (when it was created),does this necessarily mean its contentsstill logically belongs in that subsystemafter extensive maintenance has been ap-plied?

First, we do not think that the system weare working on is such an exception. In a recentpaper, Tzerpos [14] also noted that \it is verycommon for large software products to followsuch a naming convention (e.g. the �rst twoletters of a �le name might identify the subsys-tem to which this �le should belong)".

Merlo et al. [9] also noticed that routinenames could be related to application domainconcepts. This work will be mentioned in thenext section.

It seems unlikely that companies can suc-cessfully maintain huge software systems foryears with constantly renewed maintenanceteams without relying on some kind of struc-turing technique. We do not pretend �le nam-ing convention is the sole possibility, but it isone of them. Hierarchical directories is anothercommonly used one (e.g. in the Linux project[7]).

To the second objection, we may answerthat one of the reasons the company is sponsor-ing our research is that nobody in the companyhas a complete understanding of the system.However, not being aware of all the existingsubsystems, software engineers are still able togenerate �le names that respect some informalnaming convention. For this, they only needlocal understanding of the system structure. A�le name is derived from already existing �lesthat are close to the new one. For example,when a �le \activmon" was split in two, thesecond part (new �le) was named \activmonr".Other similar examples may be found.

Finally maintenance can cause a �le to driftaway from its original purpose. But this canonly be a very slow process and it will mainlybe perceptible with regard to �ne details. Thehigh level abstract view of the �le should re-main relevant.

Because �le names are short (� 9 charac-ters in our case), they may express very littleinformation. If this information describes thepurpose of the �le, it may only do it at a very

high level of abstraction. Therefore �le namesmay express only very generic concepts, whichbarely change over the time.

Using �le names as �le clustering criterionalso brings an advantage that source code doesnot have: The clusters they allow one to createare readily understandable by the software en-gineers, one can name clusters after those sub-strings of �le names that cluster members havein common: \q2000", \2ks" or \mnms" referto application domain concepts that they canunderstand.

2.2 Other �le clustering criteria

In last International Workshop on ProgramComprehension [5] only one paper on programcomprehension (out of nine papers) was basedon other source than code analysis: it usedthe comments. The paper was concerned withbuilding an application domain knowledge base[12].

The reason for this predominance of sourcecode over other sources of information is thatone usually assumes that it is the only reli-able one. Documentation, either internal (com-ments) or external is usually considered out-ofdate or lacking.

But source code provides information of lowabstraction level. This makes it mandatory toinvolve a human expert in the process; for ex-ample to �lter the candidate clusters or to as-sociate these clusters with domain concepts.

Very few researchers have considered or pro-posed using other sources of information:

� Merlo [9] states that \Many sources of in-formation may need to be used duringthe [design] recovery process". He usedcomments and the mnemonics of identi-�ers names to extract application domainconcepts.

� Tzerpos [14] identi�es �le naming conven-tion as a possible criterion for �le clus-tering. However he does not propose anysolution to do so.

� Sayyad-Shirabad [12] uses comments toextract application domain concepts tobuild a knowledge base.

4

To our knowledge, documentation (otherthan comments) has never been used, but itcould be considered as well.

The advantages of these informal sources ofinformation over source code would be:

� They refer to application domain con-cepts in a more direct way because theycontain words or abbreviations that arereadily understandable by domain ex-perts.

� They are of a higher abstraction level.

Before experimenting with the above men-tioned criteria, we will brie y discuss their rel-ative strengths and weaknesses as comparedwith �le names:

Identi�er names (used by Merlo [9]) are use-ful to extract application domain con-cepts, but our experiments show poor re-sults for �le clustering.

It must be noted that identi�er names aremore easy to deal with than �le names be-cause they may be longer and also allowthe use of \words markers" (\ " or cap-ital letters). We shall come back to thisproblem in section x4.1.

Hierarchical directories are also easier todeal with because concepts correspondto individual levels in the hierarchy. Alimitation is that directories form a tree,whereas �le names may refer to severalindependent concepts, possibly forming ageneral graph of concepts.

Comments (used by Merlo [9] and Sayyad-Shirabad [12]) may pertain to di�erentparts of the code. A �rst di�culty con-sists in identifying comments pertainingto a whole �le, or to a particular rou-tine, type de�nition, etc. Commentsalso have the same problem as any freestyle (in natural language) documents:nouns have synonyms, verbs are conju-gated, etc.

3 Experiment with �le clus-

tering criteria

To provide a more objective comparison of the�le clustering criteria, we measured their ef-�ciency in retrieving the example subsystemsalready mentioned.

Before proposing an experiment design tocompare the criteria, we will explain how wehave been using the �le name criterion.

Finally we will present and discuss the ex-periments' results.

3.1 Using the �le name criterion

Tzerpos [14] suggested using �le names as aclustering criterion, however he did not imple-ment the idea. Merlo [9] did experiment withidenti�ers names, but we already explainedthat they are much easier to deal with becausethey contain \word markers". These markersare rarer in �le names: in our system, only 19�les out of 3500 contained the \ " characterand none contain upper case letters. Becausenothing indicates that \listaud" should be de-composed in \list" and \aud" (for audit)|, weneed a way to guess it or to work around theproblem.

We call the part of a �le name that refers toa concept (\list" or \aud") abbreviations, eventhough some of them are full words.

Tzerpos proposes to use a simple patternmatcher, but this implies we know what abbre-viations to look for. This is not our case. In-stead, we propose to work around the problemby relying on statistical techniques.

Our method consists in decomposing the �lenames into all the substrings of a given lengththey contain. These strings are called n-grams[6]; for a length of 3, one speaks of 3-grams. Forexample the �le name \qlistmgr" contains thefollowing 3-grams: \qli", \lis", \ist", \stm",\tmg" and \mgr".

N-grams are all the substrings of a givenlength that can be extracted from a name. Ab-breviations are part of the names that relateto a speci�c concept. The length of abbrevia-tions is not �xed, although with our method,we cannot extract abbreviations shorter thanthe n-grams we are working with (see below).

5

Two �le names sharing some abbreviations(e.g. \qlistmgr" and \listaud") will have oneor more n-grams in common (here \lis" and\ist"). The more n-grams two �le names havein common, the closer they are.

The length of the n-grams must be chosecarefully. Excessive length will forbid shortabbreviations from coming out and excessivebrevity will mean the n-grams will not be sig-ni�cant enough.

For example for a length of 5 characters(too long), abbreviations up to 4 characterswill produce no results: \qlistmgr" has the 5-grams: \qlist", \listm", \istmg", \stmgr" and\listaud" has: \lista", \istau", \staud". Thetwo �les no longer have any n-gram in com-mon.

With 2-grams (too short) \tm" will be com-mon to the names \listmgr" and \initmem"which is only fortuitous.

The right length would be the same as thatof the most common abbreviations. In our case,abbreviations are mainly of 2, 3 and 4 charac-ters. There appears to be a signi�cant num-ber of 2-characters abbreviations, although wehave no precise statistics on this. Nevertheless,decomposing the �le names into 3-grams gavegood results (see section x3.3).

From discussions with the software engi-neers it also appeared that the position of anabbreviation in the �le name have some signi�-cance. To try to cope with this issue, we addedtwo virtual letters, at the beginning (\̂ ") andthe end (\$") of the names. This proved usefuland improved the results. It also has the inter-esting outcome that some 2-characters abbre-viations are taken into account if they appearat the beginning or the end of �le names. Forexample, the abbreviation \cp" often appearsat the beginning of �le names, therefore, the3-gram \̂ cp" is shared by all these names.

3.2 Experiments design

We will now describe the experiment we de-signed to compare all the �le clustering crite-ria.

The �rst step was to try to de�ne whatthe software engineers considered a subsystem.Four software engineers proposed 10 subsys-tems containing 140 �les. The subsystems are

small, their size varied from 5 �les to 40 �les.The experiment consists of trying to gener-

ate some subsystems and compare them withthe examples we were provided with. We willprefer the criterion that allows us to extractsubsystem closest to the examples.

For each of the 140 �les:

1. Use the selected �le as the \seed".

2. Find all other �les close enough to theseed (according to the tested criterion) tobelong to the same subsystem.

3. Compare this set of �les with the actualsubsystem.

This algorithm is similar to what is done inInformation Retrieval [11, 15]: given a base of\documents" (the system's �les), �nd all thedocuments (a subset of the �les) relevant to aquery (the seed �le).

Although it was for a di�erent purpose, weshould note that Information Retrieval tech-niques have already been used in software en-gineering by Patel [10]. His purpose was not tocompare criteria but to de�ne a module cohe-sion measure.

One measures the e�ciency of InformationRetrieval techniques by comparing the resultsthey give to known queries with known results.The e�ciency is measured using two metrics:precision and recall.

Recall is the percentage of relevant �les(subsystem's actual �les) that the system didextract.

recall =retrieved members

actual members� 100

Precision is the percentage of relevant �lesamong the ones retrieved by the system.

precision =retrieved members

total retrieved� 100

A recall of 100% is easily achieved by re-trieving all �les (thus all the subsystem's actual�les will be extracted), but the precision will bevery poor. Conversely, by extracting only the\seed", the precision rate will be 100% (the �lebelongs to its own subsystem), but the recallwill be correspondingly low. One must look forthe right balance between high precision and

6

high recall. Presumably, we should favor re-call because it is more important to present thesoftware engineers all the potentially useful �les(even if there is some noise) rather than pre-senting only those we are sure are useful �lesbut missing some of them.

We used Smart [8], an Information Re-trieval tool designed for such experiments. Thetool ranks �les according to their similarity tothe seed. Doing so, it does not actually de�nesubsystems, for one seed it would potentiallyextract all the �les, only a lot of them wouldhave a null similarity. One way to actually de-�ne a subsystem, is to decide on a threshold.Only �les ranked higher than the threshold willbe in the subsystem.

However, we did not want to use an arbi-trary algorithm to set the boundaries of eachsubsystem because it could introduce noise intothe measures.

We grouped all �les with the same namebut di�erent extensions (e.g. \.pas", \.if" inour case, \.c" \.h" for C �les) into one virtual�le. For some criteria, this was mandatory, forexample for the \included �les" criterion (seebelow), only header �les (\.h" in C) containthe information. This also has the advantageof reducing the number of �les (from 3500 to1800).

The criteria we tested are the following:

File names: Each �le name is indexed withthe n-gram it contains as explained in sec-tion x3.1. We experimented with n-gramsof length 2, 3, 4 and 5.

Routine names: As a mean of comparisonwith Merlo's work [9], we decomposed thenames of routines declared in each �le ac-cording to the word markers they contain(\ " and capital letters). We clusteredtogether the �les containing the same ab-breviations thus obtained.

Included �les: This is our \source code" cri-terion. In [10], Patel proposes to clustertogether routines that refer to the sametypes. We tried to do the same thing onthe �le level.

All comments: Files are compared accordingto their comments. The smart system of-fers mechanisms to deal with free style

text. It may discard the common words(adverbs, articles), and try to put otherwords in a canonical form (for exampleby suppressing \s" and \ing" at the endof words).

\Summary" comment: Many �les (' 70%of all �les and ' 84% of the known sub-systems' �les) have a summary commentat the beginning of the �le that describesthe main purpose of the �le as opposedto that of routines.

References in documentation: We ex-tracted all the �le names referred to inthe documentation. Each �le is indexedwith all the documents that refer to it.

3.3 Results

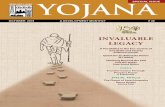

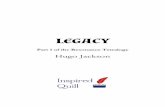

Figure 1 presents the results for all criteria ex-perimented with. For each criterion, the curvegives di�erent recall rates and the correspond-ing precision rates.

As we want to promote recall over precision(see x3.2), a good indicator of a criterion's ef-�ciency would be the precision rate for 100%recall (right hand side of the graph).

The �le name criterion with 3-grams endsup with a precision of 73.1%. It means thatgiven a subsystem of 10 �les, on average, these10 �les are ranked among the 14 most similarto any of them. We consider this result satis-factory enough.

The \Included �les" criterion ends up with15.4% precision. This means that on average,the 10 �les would be ranked among the 65 mostsimilar to any of them. This is not acceptable,although one could consider that looking at 65�les out of 3500 is not so bad a result.

It is no surprise that the �le name crite-rion is the best one. We already noticed thatthe subsystems showed some similarity betweentheir �le names.

Among the di�erent n-gram for the �lename criterion, length 3 is the best one as al-ready mentioned (�nal precision = 73.1%).

Length 4 also gives good results (�nal pre-cision = 69.3%) although we know there aremany 3 and 2-characters abbreviations in the�le names. This may be a particularity of our

7

sample which contains only two small subsys-tems with 2-characters abbreviations, and onesmall subsystem with 3-characters abbrevia-tions. Thus more than 78% of the �les exper-imented with have abbreviations of 4 or morecharacters.

As expected, 5-grams give very poor results(�nal precision = 3%).

Results for 2-grams came as a surprise (�-nal precision = 64.5%) Although our subsys-tem sampling tends to favor long abbreviations,the results are quite good (on average, 10 �leswould be ranked in the 16 most similar to anyof them). This would suggest that noise intro-duced by the inevitable fortuitous similaritieswhen using so short n-grams is not that impor-tant.

The other criteria are by far worst than �lename. One should take some precautions in in-terpreting these results, there may be severalvalid structures for the system. Our sample fa-vors the �le name criterion (because softwareengineers do so), this does not mean it is theonly valid one.

The source code criterion (indexing on in-cluded �les) is the least bad of the alternatives(�nal precision = 15.4%).

Documentation is the next one (�nal pre-cision = 6.6%). This could be blamed on thegeneral nature of the documentation used. An-other experiment should be made with onlythose documents concerned with the designstructure.

Abbreviations found in routine names havea �nal precision of 5.5%. Maybe this is to blameon the di�erence of abstraction level betweenthe �les we clustered and the routines these ab-breviations describe.

Finally, results for the comments are sim-ilar, whether we considered them all or onlythe �le \summary comment" (respective �nalprecisions 3% and 2.6%).

4 A \conceptual" browser

The method we used to compare �le cluster-ing criteria did not actually extract subsys-tems. Rather for each seed �le it was given,it proposed a (potentially very long) list of �lesranked by similarity with the seed.

But our goal was to built a browser basedon the subsystems extracted. This sectionpresents an early work in this direction. Thiswork is by no means completed, and some ques-tions remain open. However, the early resultsare encouraging.

A straightforward solution would have beento cluster together all �les sharing some com-mon n-gram(s). Each cluster would have de�nea subsystem named with the abbreviation com-mon to all of its member �les. The subsystemand the name can be considered to form a con-cept.

However experience shows this methodgives many meaningless \concepts" becausethere are many fortuitous n-grams appearing indi�erent �le names. For example all the namescontaining \diag" (diagnosis) and \dial" sharethe 3-gram \dia" which means nothing for thesoftware engineers.

Other candidate abbreviations can be mis-leading, for example, \clock" and \block" aretwo abbreviations found in �le names, but theirintersection, \lock" is not a concept referredto. This example is more dangerous than the

8

�rst one because the candidate abbreviation isa word and therefore seems to be valid.

The �rst step in building the conceptualbrowser will be to �lter the substrings.

The second step is to assign to each �le allthe concepts its name contains. This may be adi�cult task; for example assuming \lock" wasa valid concept, we would not want to assign itto a �le containing the \clock" concept.

4.1 Abbreviation �ltering

The �rst step to get a list of candidate abbre-viations is to extract all the n-grams commonto several �le names. We will then try to �lterout those candidate abbreviations which do notcorrespond to concepts.

There are several algorithms that couldcluster the �les names and exhibit the candi-date abbreviations they have in common. Wechose an algorithm from Godin (see for example[3, 4]) to built a Galois lattice. This algorithmclusters together all the �le names which sharesome n-grams. This structure has the propertythat all possible clusters are extracted. This

0

10

20

30

40

50

60

70

80

90

100

0 10 20 30 40 50 60 70 80 90

prec

isio

n (%

)

recall (%)100

File name-3 File name-4

File name-2

Included files

Refs. in docum Routines’ name File name-5 Comments Summary

Figure 1: Recall and precision rates for various �le clustering criteria

would not be the case for most of the cluster-ing algorithms. Because they build trees, theyhave to select only part of the possible clustersand discard the others ([1, 2]).

Using the Galois lattice, we are able to ex-tract all groups of names possessing some sim-ilar n-gram. A �rst �ltering process discardthose clusters that do not represent a single ab-breviation:

� All the n-grams may not be connected,for example: \memmonstr" and \mon-pastra" form a cluster with the 3-grams:\mon" and \str" but do not share a singleabbreviation.

� All the n-grams may be connected dif-ferently in the �le names, for example\gwaititem" and \partition" form a clus-ter with the 3-grams: \iti" and \tit" butit is \itit" in one �le and \titi" in theother.

A second �ltering process will try to discardthose candidate abbreviations which are not ac-tual concepts. Our solution is to look for eachcandidate abbreviation in a \dictionnary", theproblem being to �nd a dictionary containingthe application domain concepts and the par-ticular mnemonics used in the company.

For this, we propose to use the comments.For each cluster, we look for the associated can-didate abbreviation in the comments. We onlykeep those abbreviations that we found in thecomments. To avoid going through megabytesof comments for each candidate abbreviation,we only search the comments in the membersof the given cluster.

This allows us to solve such problems as thetwo presented earlier. No �le containing the\dia" substring in its name had it in its com-ments, whereas \dial" and \diag" where found,same thing for \lock" and \block" or \clock".

Numerous other abbreviations speci�c tothe company are found in the comments suchas \q2000", \cp", etc. Unfortunately, others,like \svr" for \server", \mgr" for \manager"etc. do not appear in the comments, instead,the full word is used. We tried to look for themamong the abbreviations used in identi�ers, butthe results are not satisfactory.

From 1808 �le names, the Galois lattice ex-tracted 3410 clusters. Among them, 667 were

9

discarded during the �rst �ltering process (nosingle abbreviations in a cluster), leaving 2743candidate abbreviations.

The second �ltering process (searching forthe candidate abbreviations in a \dictionnary")found 501 in the comments and 327 in the iden-ti�er names, 279 being found in both. Whichleaves us with 549 recognized abbreviations.

4.2 File name decomposition

The reason for extracting abbreviations was tounderstand the �le names: \actmnutsg" means\act" (for activity), \mn" (for monitor), \ut"(for utilities) and \sg" (a product name).

We call the splitting of a �le name in all theabbreviations it contains a split.

File names rarely contain word markerstherefore, we have to guess how a name like\actmnutsg" must be split. The task is ren-dered more di�cult by the following facts:

� one concept may be abbreviated in di�er-ent ways: \activity", \activ" and \act" or\mon" and \mn" for monitor.

� a short abbreviation (e.g. \lock") mayappear inside another longer one (e.g.\clock") or between two abbreviations.

The ideal solution would be to have an ex-tensive dictionary of all abbreviations. Giventhis, we believe it would be possible to �nd aunique correct decomposition for the great ma-jority of �le names.

However, building such a dictionary wouldbe a di�cult task, comparable to building anapplication domain knowledge base. Maintain-ing this dictionary would be a hard task as well.We chose a more lightweight solution where thework is done automatically, and we accept thepossibility of errors. These errors or of twokinds:

� we split the �le name using a wrong ab-breviation, for example in \actmnutsg",we could detect \mnu", abbreviationwhich means \menu" whereas it's in fact\mn" and \ut"

� we miss an abbreviation, like \mn" in theabove example.

The �rst problem does not seem critical be-cause while browsing the system the softwareengineers will be able to correct these errorseasily. If the system present them with 10 �les(out of 1800), it's an easy task for them to de-tect the few wrong ones.

The second problem seems more serious be-cause it could cause the software engineers tomiss a �le (possibly precisely the one with a bugin it). To solve this we decided to propose morethan one possible split of a �le name, thereforeincreasing a bit the �rst problem, but we sawit is not critical.

Our algorithm for splitting the �le namesconsists of generating all possible splits withthe abbreviations. All remaining characters(not belonging to any abbreviation) are leftalone (free characters). For example, assum-ing we recognize \act", \mnu" and/or \ut", thepossible splits for \actmnutsg" will be:

� \act", \mnu", \t", \s" and \g"

� \act", \m", \n", \ut", \s" and \g"

We then rate the splits according to somefunction. Hopefully, the best rating corre-sponds to the correct split.

We �rst tried to generate splits only consid-ering the recognized abbreviations (both fromthe comments and the identi�ers names). Buttoo few recognized abbreviations are availableand the results did not satis�ed us.

We opted for another solution which con-sists of generating the splits with all the candi-date abbreviations (recognized or not), awarethat a lot of them are erroneous. The splitswith more recognized abbreviations (from com-ments or routine names) get a higher rating.

The rating function includes several crite-ria:

� Number of abbreviation in the split, theless, the better. The rationale behind thisis to discard those splits with a lot of freecharacters.

� Proportion of recognized abbreviations ina split, the higher, the better.

� Source for recognized abbreviation. Thisis intended as an improvement upon thelast criterion. One can put di�erent

10

weights on comments and routine names.In our case, we were also recognizingabbreviations which were �le names bythemselves. But we gave a smaller weightto this source because we did not want toaccept splits consisting of only 1 abbrevi-ation (the �le name itself). A tendencythat is already enforced by the �rst crite-rion.

To try to give the reader an objective ideaof the results, we present, in table 1, splits forthe �rst 10 �les in alphabetical order . The ta-ble gives the two best rated splits for each �lealong with the correct one.

Usually when there is a recognized abbrevi-ation, it appears in one of the two best ratedsplit (generally in the �rst one). Errors arisebecause we do not have enough recognized ab-breviations, which is due to the following prob-lems:

� Because we used 3-grams, all 2 characterslong abbreviations are not extracted andtherefore cannot be subsequently recog-nized.

� A lot of correct abbreviations are still notrecognized.

The solution to the �rst problem is to use2-grams instead of 3-grams. This should workas we saw in section x3.3 that 2-grams give rel-atively good results.

A solution to the second problem could beto add a new �ltering of the candidate abbrevi-ations after the splitting. In table 1, we see that\activmon" is splitted correctly even though\activ" is not a recognized abbreviations. Af-ter the split, we could add \activ" as a newrecognized abbreviation, and do the split again.Thus, by iterating over splitting and �ltering,we may be able to improve our results.

Conclusion and future work

Discovering subsystems in a legacy softwaresystem is an important research issue. Mostof the research tries to do so by analysing thesource code because it is considered the onlyrelevant source of information on the actualstate of the software.

bre.

One of the requirements of our project is totry to work in close relationship with the peo-ple that are actually maintaining legacy soft-ware. By studying them, we hope to discovernew ideas that will be readily applicable in theindustry.

Thus, while studying a telecommunicationlegacy software, and the software engineerswho maintain it, we discovered their de�nitionof subsystems was mainly based on the �les'names. This goes against the commonly ac-cepted idea that source code is the sole reliablesource of information when doing �le cluster-ing.

We experimented some �le clustering crite-ria and proved that the �le name criterion wasthe most likely to rediscover what the softwareengineers called a subsystem.

Although this result could be a particular-ity of the software we are studying, we gavesome reasons to believe that it could be morewidely applicable.

Based on these results, we proposed amethod to build a conceptual browser on thesoftware system. The browser is based on split-ting �le names retrieved as members of subsys-tems into their logical components. This partof the research is still in an early stage and weproposed several possible improvements.

Thanks

The authors would like to thank the softwareengineers at Mitel who participated in this

11

research by providing us with data to studyand by discussing ideas with us. We are alsoindebted to Janice Singer an Jelber Sayyad-Shirabad for fruitful discussions we had withthem.

About the authors

Nicolas Anquetil recently completed is Ph.D. atthe Universit�e de Montr�eal and is now workingas a research associate and part time professorat the university of Ottawa.

Timothy C. Lethbridge is an Assistant Pro-fessor in the newly-formed School of Informa-tion Technology and Engineering (SITE) at theUniversity of Ottawa. He leads the Knowledge-Based Reverse Engineering group, which is oneof the projects sponsored by the Consortiumfor Software Engineering Research.

The authors can me reached byemail at [email protected] [email protected]. The URL for theproject is http://www.site.uottawa.ca/ tcl/k

References

[1] N. Anquetil and J. Vaucher. Acquisi-tion et classi�cation de concepts pour lar�eutilisation. In 4�eme Colloque Interna-

tional en informatique cognitive des organ-

isations / 4th International Conference on

Cognitive and Computer Sciences for Or-

ganizations, pages 463{472, 1255, Carr�e

�le name best split 2nd best split correct splitabrvdial ab rv dial ab rv di al abrv dial (abbreviated-dialing)accessdta access dta acc es sd ta access dta (accesses-resource-data)accessfwd access fwd acc ess fwd access fwd (access-call-forwarding-data)accntcode acc nt code ac cnt code accnt code (account-code)activity activ ity activ it y activity (activity-control)activmon activ mon activ m on activ mon (activity-monitor)activmonr act i vmo nr activ mon r activ mon r (activity-monitor-redundant-system)actmnuts act mnu ts ac t mnu ts act mn ut s (activity-monitor-utilities-s)actmnutsg actm nut sg act mnu tsg act mn ut sg (activity-monitor-utilities-sg)actmonsrv act mon srv act monsrv act mon srv (activity{monitor-server)

Table 1: Splits for the 10 �rst �le names. Upper case abbreviations are the recognized ones. Note:\sg" and \s" are two product names.

Phillips Bureau 602, Montr�eal (Qu�ebec)Canada H3B 3G1, 1993. ICO, GIRICO.

[2] N. Anquetil and J. Vaucher. Extract-ing Hierarchical graphs of concepts froman object set : Comparison of two meth-ods. In Knowledge Acquisition Workshop,

ICCS'94, 1994.

[3] R. Godin, R. Missaoui, and H. Alaoui.Incremental Algorithms for Updating theGalois Lattice of a Binary Relation. Tech-nical Report 155, Universit�e du Qu�ebec �aMontr�eal, septembre 1991.

[4] Robert Godin and Hafedh Mili. Build-ing and Maintaining Analysis-Level ClassHierarchies Using Galois Lattices. ACM

SIGplan Notices, 28(10):394{410, 1993.OOPSLA'93 Proceedings.

[5] IEEE. 5th International Workshop on

Program Comprehension. IEEE comp. soc.press, may 1997.

[6] Roy E. Kimbrell. Searching for text? sendan n-gram! Byte, 13(5):197, may 1988.

[7] DEBIAN Gnu/Linux web page.http://www.debian.org.

[8] Chris Buckley (maintainor). Smartv11.0. Available via anonymousftp from ftp.cs.cornell.edu, inpub/smart/smart.11.0.tar.Z.

[9] Etore Merlo, Ian McAdam, and Renato DeMori. Source code informal information

12

analysis using connectionnist models. InRuzena Bajcsy, editor, IJCAI'93, Interna-tional Joint Conference on Arti�cial Intel-

ligence, volume 2, pages 1339{44. Los Al-tos, Calif., 1993.

[10] Sukesh Patel, William Chu, and Rich Bax-ter. A Measure for Composite ModuleCohesion. In 14th International Confer-

ence on Software Engineering. ACM SIG-Soft/IEEE Comp. Soc. Press, 1992.

[11] G. Salton andM.J. McGill. Introduction to

Modern Information Retrieval. McGraw-Hill Book Company, 1983.

[12] Jelber Sayyad-Shirabad, Timothy C.Lethbridge, and Steve Lyon. A LittleKnowledge Can Go a Long Way TowardsProgram Understanding. In 5th Interna-

tional Workshop on Program Comprehen-

sion, pages 111{117. IEEE Comp. Soc.Press, mai 1997.

[13] Scott R. Tilley, Santanu Paul, and Den-nis B. Smith. Towards a Framework forProgram Understanding. In Fourth Work-

shp on Program Comprehension, pages 19{28. IEEE Comp. Soc. Press, mar 1996.

[14] Vassilios Tzerpos and Ric C. Holt. TheOrphan Adoption Problem in ArchitectureMaintenance. Submited to Working Con-ference on Reverse Engineering, oct 1997.

[15] C.J. van Rijsbergen. Information Re-

trieval. Butterworths, London, 1979.