Florida State University Libraries

Electronic Theses, Treatises and Dissertations The Graduate School

2012

Examining the Use of First Principles of Instruction by Instructional Designers in a Short-Term, High Volume, Rapid Production of Online K-12 Teacher Professional Development Modules

Anne M. Mendenhall

Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected]

THE FLORIDA STATE UNIVERSITY

COLLEGE OF EDUCATION

EXAMINING THE USE OF FIRST PRINCIPLES OF INSTRUCTION BY

INSTRUCTIONAL DESIGNERS IN A SHORT-TERM, HIGH VOLUME, RAPID

PRODUCTION OF ONLINE K-12 TEACHER PROFESSIONAL DEVELOPMENT

MODULES

By

ANNE M. MENDENHALL

A Dissertation submitted to the Department of Educational Psychology and Learning Systems

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy

Degree Awarded: Fall Semester, 2012


Anne Mendenhall defended this dissertation on August 1, 2012.

The members of the supervisory committee were:

Tristan E. Johnson

Professor Co-Directing Dissertation

James D. Klein

Professor Co-Directing Dissertation

Jonathan Adams

University Representative

Vanessa P. Dennen

Committee Member

The Graduate School has verified and approved the above-named committee members,

and certifies that the dissertation has been approved in accordance with university

requirements.


For my Mom and Dad for their never-ending support and unconditional love.

For Brayden, Lynzy, Makayla, Kylee, and Sidney. Here’s hoping you find the same joy

and satisfaction I did while pursuing your own dreams.


ACKNOWLEDGEMENTS

Reflecting on my many experiences through the PhD process, I’d have to say that

working with Dr. Tristan Johnson and Dr. Jim Klein has been the most remarkable.

Both Tristan and Jim have gone above and beyond to see me through the dissertation

process. It is with my deepest gratitude that I thank them both for their commitment,

sacrifice, expertise, counsel and advice, support, and, not least, their sense of humor.

I am so grateful for the phone call I received many years ago from Tristan

encouraging me to apply to the Instructional Systems program at Florida State

University. The experiences gained through attending FSU and working with Tristan for

nearly 5 years have been incredible and a treasured blessing. His consistent support,

encouragement, and positive attitude have carried me through. I can honestly say that the

road to PhD-hood has been a remarkable journey and I have truly “enjoy[ed] the journey”

(Oaks & Oaks, 2009, p. 31), because of Tristan.

I am so thankful for Dr. Jim Klein and his willingness to step in and provide an

incredible amount of support and expertise. His invaluable feedback, kind demeanor,

encouragement, and advice became my lifeline as I wrapped up my journey at FSU. I’ve

really enjoyed our conversations and meetings. I wish I had more time to learn from him.

I am so grateful for Jim introducing me to my new love – Design and Development

Research. I remember telling my coworkers and friends, after the first meeting I had with

Jim, about how he changed my life by introducing me to this type of research. I’d like to

thank my committee members Dr. Vanessa Dennen and Dr. Jonathan Adams for their

expertise and counsel. Their insight and perspectives were very valuable and contributed

greatly to my success in the PhD program. Their expertise on qualitative research has

helped me see things from different angles.

My parents James (Jim) and Evelyn (Evie) Mendenhall have been by far my

biggest supporters and sources of unconditional love (along with Hermione the dog).

They have instilled in me the ability to work hard and to recognize the value of work.

They also taught me to recognize my divine worth and to know God and to seek His


counsel. Words cannot express my love and gratitude for the two best parents a girl could

have. Thank you for sharing this journey with me.

A special Mahalo Nui Loa goes out to Dave and Kate Merrill and Bob Hayden.

All three played an instrumental part in this journey. They, too, provided lots of counsel,

advice, and support. I’m so grateful to have them as part of my ohana. Another special

thank you goes out to the many friends who have provided encouragement and support, to

name a few (I wish I could mention them all by name): ChanMin Kim, Gordon and

Jennifer Mills & Family, Kylia and Brian Barabash, the FSU PhD ABD group, my

brothers Rob and Scott and their families, and to the cohort of PhD and masters students

who have made this journey fun and meaningful. A special thanks goes to Alison Moore,

Kayla Wenting Jiang, Faiza Al-Jabry, and my Habitat Tracker coworkers for their

assistance and feedback. Last but not least, thank you to those who worked countless

hours above and beyond the call of duty designing and developing the professional

development modules used for this study.


TABLE OF CONTENTS

List of Tables

List of Figures

Abstract

1. CHAPTER ONE INTRODUCTION

Purpose of Study

Research Questions

Significance of the Study

2. CHAPTER TWO LITERATURE REVIEW

Differentiating Theories, Models, and Principles

Instructional Systems Design Models

Benefits of ISD Models

Challenges and Criticisms of ISD Models

Theoretical Foundations of ISD Models

First Principles of Instruction

Activation

Demonstration

Application

Integration

Problem or Task-Centered

Use of First Principles of Instruction

Research on First Principles of Instruction

Instructional Designer Decision-Making

Design and Development Research

3. CHAPTER THREE METHODOLOGY

Research Design

Participants

Setting and Materials

Data Sources

Instrumentation

Procedures

Data Analysis

Trustworthiness

4. CHAPTER FOUR RESULTS

Conditions Under Which First Principles Were Used

Instructional Design Setting

Decisions Regarding First Principles

Decision-Making Power

Types of Design Decisions

Instructional Design Decisions

Factors Affecting Decisions

Level of Understanding First Principles

Frequency of First Principles Incorporated in Modules

Summary

5. CHAPTER FIVE DISCUSSION

General Research Question

Supporting Research Question 1

Supporting Research Question 2

Supporting Research Question 3

Supporting Research Question 4

Limitations

Future Research

Conclusion

APPENDIX A SCIENCE AND MATH STANDARDS INSTRUCTIONAL MODULES

APPENDIX B DEMOGRAPHICS AND DESIGN KNOWLEDGE SURVEY

APPENDIX C INTERVIEW PROTOCOL AND QUESTIONS

APPENDIX D MODULES RANDOMLY SELECTED FOR EVALUATION

APPENDIX E FIRST PRINCIPLES OF INSTRUCTION KNOWLEDGE SURVEY

APPENDIX F MODULE EVALUATION SHEET

APPENDIX G RECRUITMENT E-MAIL

APPENDIX H CONSENT FORM

APPENDIX I SCORING PROTOCOL AND RUBRIC FOR FPI SURVEY

APPENDIX J SAMPLE PROGRAM LOGIC AND STORYBOARD TEMPLATES

APPENDIX K HUMAN SUBJECTS APPROVAL MEMORANDUM

APPENDIX L PRINCIPLE INVESTIGATOR APPROVAL MEMORANDUM

APPENDIX M PERMISSION TO USE FIGURES

REFERENCES

BIOGRAPHICAL SKETCH


LIST OF TABLES

Table 2.1 Gagné’s Nine Events of Instruction

Table 3.1: Topics and Sub-topics

Table 3.2: Data Collection and Analysis

Table 4.1: Instructional Designers Working Hours

Table 4.2 Instructional Designers Demographics

Table 4.3 Means and Standard Deviations of Years of Experience

Table 4.4 Training Materials Use and Level of Understanding Results

Table 4.5 First Principles of Instruction Knowledge Survey Scores

Table 4.6 First Principles of Instruction Knowledge Survey Scores by Roles

Table 4.7 Module Evaluation Frequency Counts

Table 4.8 Percentage and Instances Ranges of the Use of First Principles

Table 5.1 Possible Strategy Sequence for Teaching Components

Table 5.2 Means and Standard Deviations of Years of Experience

Table 5.3 Percentage and Instances Ranges of the use of First Principles

Table 5.4 Comparisons of Gardner’s (2011) Module with 6-8 Grade Science and H.S. Earth and Space Science Modules

x

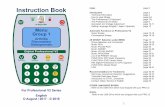

LIST OF FIGURES

Figure 2.1 ADDIE model is a systematic approach to instruction

Figure 2.2 Dick and Carey Systems Approach Model

Figure 2.3 Pebble-in-the-Pond Model

Figure 2.4 First Principles of Instruction

Figure 2.5 Framework of Merrill’s (2002a, 2008) First Principles of Instruction

Figure 2.6 General information is located directly next to the demonstration/specific portrayal, which guides the learner from the concept being taught to the demonstration of that concept (Mendenhall et al., 2006b)

Figure 3.1 The text is represented as bullet-points and is located on the left, while the specific instance is shown on the right (Johnson, Mendenhall, et al., 2011)

Figure 3.2 In this example, which illustrates unpacking a benchmark, the steps and specific portrayal is on the right (Johnson, Mendenhall, et al., 2011)

Figure 3.3 Videos are sometimes used for practice activities

Figure 3.4 Organizational Hierarchy of Participants


ABSTRACT

Merrill (2002a) created a set of fundamental principles of instruction that can lead

to effective, efficient, and engaging (e3) instruction. The First Principles of Instruction

(Merrill, 2002a) are a prescriptive set of interrelated instructional design practices that

consist of activating prior knowledge, using specific portrayals to demonstrate

component skills, application of newly acquired knowledge and skills, and integrating the

new knowledge and skills into the learner’s world. The central underlying principle is

contextualizing instruction based on real-world tasks. Merrill (in press) hypothesizes that

if one or more of the First Principles are not implemented, then a diminution of learning

and performance will occur. There are only a few studies that indicate the efficaciousness

of the First Principles of Instruction. However, most claims of efficacy in the application

and usage of the principles are anecdotal and empirically unsubstantiated. This

phenomenon is not isolated to the First Principles of Instruction.

Claims of effectiveness made by ISD model users have taken precedence over

empirically validating ISD models. This phenomenon can be attributed to a lack of

comprehensive model validation procedures as well as time constraints and other limited

resources (Richey, 2005). Richey (2005) posits that theorists and model developers tend

to postulate the validity of a model due to its logicality and being supported by literature,

as is the case with the First Principles of Instruction. Likewise, designers tend to equate

the validity of a model with an appropriate fit within their environment; that is, if using

the model is easy, addresses client needs, supports workplace constraints, and the resulting

product satisfies the client, then the model is viewed as being valid (Gustafson & Branch,

2002; Richey, 2005).

Richey and Klein (2007) emphasize the importance of conducting design and

development research in order to validate the use of instructional design models, which

includes the fundamental principles (e.g., First Principles of Instruction) that underlie

instructional design models. These principles and models require research that is rigorous

and assesses the model’s applicability instead of relying on unsubstantiated testimonials


of usefulness and effectiveness (Gustafson & Branch, 2002). In order to validate the use

of principles and models, researchers need to explore and describe the usage of the

principles and models to determine the degree of implementation in different settings

(Richey & Klein, 2007).

The purpose of this study was to examine the use of the First Principles of

Instruction (Merrill, 2002a) and the decisions made by instructional designers —

including project leads, team leads, and designers-by-assignment. The investigation of

the use of the First Principles was part of an effort to determine if these principles were

conducive to being implemented during a fast-paced project that required the design and

development of a large number of online modules. The predominant research question for

this study was: How were the First Principles of Instruction used by instructional

designers, in a short-term, high volume, rapid production of online K-12 teacher

professional development modules? Four supporting questions were also addressed: 1)

What were the conditions under which the First Principles of Instruction were used? 2)

What design decisions were made during the project? 3) What is the level of

understanding of the First Principles by instructional designers? 4) How frequently do the

modules incorporate the First Principles of Instruction?

This case study involved 15 participants who were all instructional designers and

designers-by-assignment that worked on 49 science and math professional development

modules for K-12 teachers within a short 11-week time period. Participant interviews,

extant data —project management documents, e-mail communications, personal

observations, recordings of meetings, participant surveys, and the evaluation of nine

online modules constituted the data collected in this design and development research

study. The results indicated the First Principles of Instruction were not used at the level

expected by the lead designer and may not be conducive to being applied as described by

Merrill (2002a, 2007a, 2009a, 2009b) in this case. The frequency of use of the First

Principles in the modules showed an overuse of the Activation/Tell principle in

relation to the number of Demonstrations/Show and Application/Ask applications.

Results also indicated that the project requirements, personnel, designer experience, the

physical setting, and training and meetings contributed to decision-making and ultimately

to the use and misuse of the First Principles of Instruction.


CHAPTER ONE

INTRODUCTION

One of the key tenets of Instructional Systems Design (ISD) is to create

instruction that is efficient, effective, and engaging (e3) in order to promote learning,

improve performance, and motivate learners (Merrill, in press). ISD consists of

systematic processes with interrelated components that move toward a common goal. The

components include learners, instructional materials, learning environments, instructors

and facilitators (Dick, Carey, & Carey, 2005). In addition, instructional designers and

developers are integral components within the system. Instructional designers and

developers have a common goal of producing e3 instruction. However, even with the

intent of producing e3 instruction, there are many cases where the instruction did not meet

the criteria to be efficient, effective, and/or engaging (Merrill, 2009b). Merrill (in press)

asserts that one of the greatest hindrances to e3 instruction is that too often the only

requirement for instructional designers is content knowledge and not an understanding of

the principles of ISD. Likewise, the lack of e3 instruction is also blamed on instructional

designers’ decisions (Rowland, 1993), which can lead to uncontrolled or non-systematic

approaches to designing instruction (Visscher-Voerman, 1999). According to van den

Akker, Boersma, and Nies (1990; as cited in Visscher-Voerman, 1999) there is evidence

that the design processes could be improved when instruction does not fully meet e3

standards.

One design practice that can improve the impact of instruction is the appropriate

use of ISD models. ISD models can provide structure and order and are used to create a

good standard in designing instruction (Richey, 2005). ISD models can provide

immediate value (Dick, Carey, & Carey, 2005) by regulating the instructional design

process and guiding the instructional designer into creating e3 instruction (Gustafson &

Branch, 2002). A myriad of ISD models have been created since the 1970s

(Gustafson & Branch, 2002) and most of these models encompass a fundamental set of


principles including principles of analysis, design, development, implementation, and

evaluation (Gustafson & Branch, 2002; Branch & Merrill, 2012). This basic set of

principles can be situated in multiple ways within an ISD model and the degree and

method of embodiment within a model can determine how effective, efficient, and

engaging the instructional intervention will be (Merrill, in press). In addition to

implementation of ISD principles, a model needs to be grounded in theory. Gustafson and

Branch (2002) claim that the “greater the compatibility between an [ISD] model and its

contextual, theoretical, philosophical, and phenomenological origins, the greater the

potential for success in constructing effective learning environments” (p. 16). Even

though many ISD models are representative of effectual theories of learning, instruction,

and design, there are challenges associated with ISD models and their use.

The challenges begin with model selection. Due to the diversity of instructional

design projects, performance problems, and learning environments, it can be difficult to

choose an appropriate model to solve all of the design problems in a project (Visscher-

Voerman, 1999). Some ISD models have been characterized as restrictive, stifling,

passive, inflexible, lacking adaptability, or too simple (Branch, 1997; Wedman &

Tessmer, 1993). Other professionals complain that some ISD models are “clumsy” and

they take too long to implement in a “speed-maddened” world of ISD (Gordon & Zemke,

2000). Implementing a model during a fast-paced design project can be

especially challenging with a team of novice designers (Richey, 1995). Some training

professionals assert that rigidly following ISD models hinders instructional designers’

creativity and that the models do not address attitudinal or motivational elements (Gordon &

Zemke, 2000), which results in ineffective, inefficient, and disengaging instruction. Other

ISD professionals concede some criticisms; however, they assert that most criticisms

are based upon a few poor examples of inappropriate model choice and application. In

particular, the focus during the application of the models was activity-driven instead of

outcome or goal-driven (Zemke & Rossett, 2002). Furthermore, Merrill, Barclay, and van

Schaak (2008) posit that it is the failure to implement fundamental underlying principles

of instruction, within a model, that is the cause of ineffective, inefficient, and disengaging

instruction.


Merrill (2002a) created a set of fundamental principles of instruction that are

believed to create e3 instruction. The First Principles of Instruction (see Merrill, 2002a,

2007a, 2007b, 2009a, 2009b) are a prescriptive set of interrelated instructional design

principles that consist of activating prior knowledge, using specific portrayals to

demonstrate component skills, application of newly acquired knowledge and skills, and

integrating the new knowledge and skills into the learner’s world. The central underlying

principle is contextualizing the instruction based on real-world tasks. Merrill (in press)

hypothesizes that if one or more of the First Principles are not implemented, then a

diminution of learning and performance will occur. However, there are only a few studies

that indicate the efficaciousness of the First Principles of Instruction (see Frick, Chadha,

Watson, Wang, & Green, 2009; Gardner, 2011; Rosenberg-Kima, 2012; Thomson,

2002). Most claims of efficacy of ISD models as well as the First Principles of Instruction

are anecdotal and empirically unsubstantiated.

Claims of effectiveness made by ISD model users have taken precedence over

empirically validating ISD models. This phenomenon can be attributed to a lack of

comprehensive model validation procedures as well as time constraints and other limited

resources (Richey, 2005). Richey (2005) posits that theorists and model developers tend

to postulate the validity of a model due to its logicality and being supported by literature,

as is the case with the First Principles of Instruction. Likewise, designers tend to equate

the validity of a model with an appropriate fit within their environment; that is, if using

the model is easy, addresses client needs, supports workplace constraints, and the resulting

product satisfies the client, then the model is viewed as being valid (Gustafson & Branch,

2002; Richey, 2005). Richey and Klein (2007) suggest that design and development

research, specifically model research, could validate the effectiveness of instructional

design principles, models, and processes.

Purpose of Study

The purpose of this study was to investigate the use of First Principles of

Instruction and the design and development decisions made by instructional designers to

determine if these principles are conducive to being implemented in a short-term, high

volume, rapid production of teacher professional development modules.


The short-term, high volume nature of the project refers to the project’s short 11-

week timeline and the creation of 49 online modules within that timeframe. The rapid

production of the modules refers to the processes taken to complete the modules in a

systematic way in order to meet the deadline. The instructional design project, used as the

context for this research study, employed nearly 30 instructional designers and designers-

by-assignment (i.e., designers who have not had formal training or education in

instructional design) to create a set of online professional development modules for K-12

teachers. The modules instructed teachers on the newly adopted and revised state science

and math standards and benchmarks. In addition, the modules contain instructional

strategies teachers can use to fulfill the math and science standards and benchmarks in

their classrooms. The First Principles of Instruction were used as a framework to create

these modules because these principles were centered on real-world tasks that seemed to

be applicable to the content and context of this instructional design project.

Research Questions

The primary research question addressed in this study was: How were the

First Principles of Instruction used by instructional designers, in a short-term, high

volume, rapid production of online K-12 teacher professional development modules?

Supporting Research Question 1: What are the conditions (e.g., client

restrictions, resource limitations, instructional design setting) under which the First

Principles of Instruction were used?

Supporting Research Question 2: What design decisions regarding the First

Principles of Instruction were made during the project?

Supporting Research Question 3: What is the level of understanding of the First

Principles of Instruction by instructional designers?

Supporting Research Question 4: How frequently do the modules incorporate

the First Principles of Instruction?

Significance of the Study

Most previous studies that are associated with the First Principles of Instruction

were experimental or quasi-experimental designs where the First Principles of Instruction

were used as the treatment condition and compared with a topic-centered or control


condition (Francom, 2011; Rosenberg-Kima, 2012). Other studies explored the use of

First Principles of Instruction as a framework for active learning (Gardner, 2011b) or

examined the relationship between novice and expert instructional designers and their use

of the First Principles of Instruction (Rauchfuss, 2010). While these studies contribute to

the instructional systems design field and to the understanding of the First Principles of

Instruction, more research should be conducted in order to validate the use of the First

Principles of Instruction within different situations. In order to support or refute the claim

that the principles can be implemented “in any delivery system or using any instructional

architecture” (Clark, 2003 as cited in Merrill, Barclay, & van Schaak, 2008) instructional

design and development research should be conducted.

Van den Akker and Kuiper (2008) posit the need to conduct more of this type of

research in order to encompass the expanding view of instructional design, which

includes educational design. Educational design can incorporate additional teaching and

learning components, such as the role of the teacher. They also claim the need for more

“interactive and developmental” approaches that support the development and

refinement of instructional design models (see pp. 745-746). Richey and Klein (2007)

concur with the importance of conducting design and development research in order to

validate the use of instructional design models, which includes the fundamental principles

(i.e. First Principles of Instruction) that underlie such models.

These principles and models require research that is rigorous and assesses their

applicability instead of relying on unsubstantiated testimonials of usefulness and

effectiveness (Gustafson & Branch, 2002). In order to validate the use of principles and

models, researchers should explore and describe the use of the principles and models to

determine the degree of implementation in different settings (Richey & Klein, 2007).

As stated previously, this study aims to explore the application of the First

Principles of Instruction and design decisions made by instructional designers, of varying

skill and experience levels, in the production of online teacher professional development

modules. The rapid pace, the high volume of modules, and the very short timeline are

characteristics of the instructional design setting. This study is significant because of the

need to substantiate claims of efficacy made by model developers and model users

(Richey, 2005). The answers to the research questions may provide some insight to ISD

practitioners and researchers about how novice and expert instructional designers apply

the First Principles of Instruction in a fast-paced environment.

CHAPTER TWO

LITERATURE REVIEW

This chapter provides an overview of Instructional Systems Design (ISD) models

and their theoretical foundations. In addition, the benefits and challenges of ISD models will be

discussed as well as the need to conduct research on the development and use of models

so as to provide substantiation and validation. Also, the First Principles of Instruction will

be discussed in detail. While the categorization of the First Principles of Instruction is

questionable (i.e. theory? model? or simply principles?) the assertion is made that these

prescriptive principles can also fall under the category of a model or even possibly a

theory. Next, there is a review of literature regarding expert and novice decision-making

skills and choices. Finally, this chapter concludes with a description of design and

development research and the need to conduct model use and validation research.

Differentiating Theories, Models, and Principles

It should be noted that, throughout the literature, people use the terms theory, model,

and principle interchangeably. There is a fine line between each of these terms that

can, understandably, cause confusion. Following these paragraphs that define a theory,

model, and principle there will be a more lengthy definition of models provided along

with a few significant theories that have influenced the development of ISD models. In

the following paragraphs, theories, models, and principles will be briefly defined in an

effort to support a claim that the First Principles of Instruction can be viewed as an ISD

conceptual framework that includes both model and principle characteristics.

Theory. Reigeluth (1983) defines a theory as a “set of principles that are

systematically integrated and are a means to explain and predict instructional

phenomena” (p. 21). Andrews and Goodson (1980) explain that a model can incorporate

multiple theories and theories help us to more fully understand the learning environment.

Hersey, Blanchard, and Dewey (2001) state that a theory “attempts to explain why things

happen as they do…and is not designed to recreate events” (p. 172). In the ISD field,

theories have been developed to explain how learning occurs. These theories have been

developed as a result of observing behavioral changes and the processes and triggers that

brought about that change (Driscoll, 2005). Theories are used to predict the outcome of a

series of events (Richey, Klein, & Tracey, 2011).

Models. Hersey, Blanchard, and Dewey (2001) affirm that a model “is a pattern

of already existing events that can be learned and therefore repeated” (p. 172). A model is

used to describe the application of a theory and, as stated previously, a model can

encompass many theories. Models are used and adapted by practitioners (Reigeluth,

1983) whereas scholars, generally, conduct theory development.

Principles. Principles are described as being a relationship that is “always true

under appropriate conditions regardless of program or practice” (Merrill, Barclay, & Van

Schaack, 2008, p. 175). Reigeluth (1983) defines principles as “a relationship between

two actions or changes” (p. 14). He categorizes the relationships as correlational, causal,

deterministic, or probabilistic. A relationship may be correlational when there is no

indication of which action is affected by another action and causal when there is an

indication of which action is influenced by another action (Reigeluth, 1983).

A relationship is deterministic when the cause “always has the stated effect” and

probabilistic when the cause often or sometimes “has the stated effect” (p. 14).

Instructional Systems Design Models

Models are used in most disciplines as communication tools that represent ideas,

patterns, processes, and cycles. Models may help to visualize things that are difficult to

see, reveal gaps in our knowledge, and can help make predictions (Ryder, n.d.; Severin &

Tankard, 2001). Models are often exclusive to particular situations (Gustafson & Branch,

2002; Rothwell & Kazanas, 2008) and not generalizable across domains or environments.

Richey, Klein, and Tracey (2011) define models as “representations of reality

presented with a degree of structure and order, and… are typically idealized” (p. 8).

Deutsch (1952) characterizes models as being “structured symbols of operating rules

which is supposed to match a set of relevant points in an existing structure or process” (p.

357). He adds that models are necessary for understanding complex systems and

processes (Deutsch, 1952). Others define models as graphical representations (Andrews

& Goodson, 1980) of phenomena (physical phenomena, complex forms, systematic

functions & processes) that occur in the real world (Gustafson & Branch, 2002; Severin

& Tankard, 2001).

Most ISD models encompass a related set of tasks that involve some type of

analysis, selection of pedagogical strategies, learning activities and assessments,

developing teaching and learning materials, execution of the instruction, and evaluating

for instructional effectiveness and learning (Gustafson & Branch, 2002; Branch &

Merrill, 2012). Andrews and Goodson (1980) state that ISD models contain descriptive,

prescriptive, predictive, and explanatory components. Some ISD models use verbal

descriptions of pedagogical criteria and selection processes; other models use graphical

analogies to show a set of prescribed steps and verbal descriptions of procedures.

Descriptive models illustrate a specific learning environment and how its related

components will be affected (Edmonds, Branch, & Mukherjee, 1994). Prescriptive

models, on the other hand, provide a framework for how the learning environment can be

created or adapted to ensure the outcomes are brought forth (Edmonds, Branch, &

Mukherjee, 1994; Reigeluth, 1983).

Reigeluth (1983) defines one type of ISD model, the instructional model, as “an

integrated set of strategy components” (p. 21) like sequencing of content, use of

examples, practice, and motivation elements, which differ from instructional

development or process models like ADDIE. Further, he states that instructional models

may be fixed (descriptive) or adaptive (prescriptive). When an instructional model is

fixed the description stays the same despite the learner’s role. Whereas, an adaptive

model prescribes variations taking into account the learner’s role and responses during

instruction (Reigeluth, 1983).

Some scholars believe that, embedded within ISD models, there is a predictive

power: when the model is applied appropriately, it can predict that the instruction will

be effective (Andrews & Goodson, 1980; Gagné, Wager, Golas, & Keller, 2005). On the

contrary, Edmonds, Branch, and Mukherjee (1994) claim that one of the main criticisms

of ISD models is that they don’t have predictive power and lack methods that predict

success in specific situations (see p. 55). Gustafson and Branch (2002) addressed several

assumptions about ISD models. Among those assumptions they assert that there is not a

single ISD model that is perfectly suited to fit the majority of design and development

environments (Gustafson & Branch, 2002; Zemke & Rossett, 2002). Consequently,

instructional designers should be knowledgeable and skilled enough to apply and adapt

the models to fit specific project requirements and environments.

Benefits of ISD Models

The ultimate goal of instruction is to improve performance. Benefits of using ISD

models include “facilitat[ing] intentional learning” (Gagné et al., 2005, p. 1) and

providing standardization that supports good instructional design practices (Richey,

2005). ISD models are used to assist instructional designers in planning, designing,

developing, and implementing instruction. As stated previously, ISD models

can be beneficial in communicating complex ideas and processes (Richey, 2005; Ryder,

n.d.). Being able to communicate with stakeholders while developing instruction may

prevent unnecessary challenges in the future. Using ISD models can provide “immediate

value” (Gagné et al., 2005, p. 2) by providing assistance to instructional design

practitioners by offering necessary guidance through detailed prescriptive steps and

descriptions (Reigeluth & Carr-Chellman, 2009; Richey, Klein, & Tracey, 2011) and can

“inspire” instructional designers as they solve the complex problems of ISD (Kirschner,

Carr, van Merriënboer, & Sloep, 2002). The use of ISD models can contribute to the

refinement of the model and improvement of the theory it was based upon (Andrews &

Goodson, 1980) thus contributing to the improvement of teaching and learning and the

advancement of the instructional design knowledge base.

Challenges and Criticisms of ISD Models

Some ISD model authors and theorists claim their models are universal and can

be applied in many types of environments and under various conditions. However, in

reality, most models are situation specific (Gustafson & Branch, 2002; Visscher-

Voerman & Gustafson, 2004). Visscher-Voerman (1999) reported on several studies

about the instructional design activities designers participated in and her findings

indicated that instructional designers did not follow all of the steps as prescribed in ISD

models. Not following all of the prescribed steps can be a detriment to the quality of

instruction since it has been stated that an ISD model can “predict” that the instruction will be

effective (Andrews & Goodson, 1980); however, that holds true only when the model is

applied appropriately (Merrill, in press). The application of a model during a fast-paced

instructional design project can be particularly taxing on novice instructional designers

(Richey, 2005), thus affecting the quality of the instruction.

Since the 1970s there has been a proliferation of ISD models causing some

difficulty in the selection of a model that can help solve the instructional design problem

appositely (Edmonds, Branch, & Mukherjee, 1994; Gustafson & Branch, 2002; Visscher-

Voerman, 1999). Furthermore, most ISD models have never been validated for efficacy

and usefulness (Andrews & Goodson, 1980; Gustafson & Branch, 2002; Richey, 2005)

causing designers to be reluctant to adopt and adapt the model for fear of risking the

success of a project (Andrews & Goodson, 1980). In addition, some designers may have

a strong preference for one learning theory or ISD model and will try to use and adapt that

model in most design projects they are involved with (Andrews & Goodson, 1980)

without taking into consideration the specificity of the design project. Other critics find

that the use of ISD models can thwart instructional designers’ creativity. Andrews and

Goodson (1980) assert that instructional designers should understand how and why the

model was developed in order to determine the model’s appropriateness for the situation.

One study that supports these criticisms was conducted by Branch (1997)

examining the graphic elements of instructional design models. Participants for this study

included 31 graduate students, half of whom were majoring in Instructional Technology;

nearly all of the participants were unfamiliar with many of the details relating to ISD.

Branch’s participants were randomly assigned to one of three groups. Each group

reviewed the same diagrams but each in a different order. The diagrams were boxes [Dick

and Carey Model (1996)], ovals [Edmonds, Branch, & Mukherjee (1994)], or a mix of

both boxes and ovals [adapted from Edmonds, Branch, & Mukherjee (1994)]. The

participants were asked to provide descriptive words for each of the diagrams. The most

common descriptive words were confusing, flowing, and linear. Branch (1997) came to

the conclusion that many of the ISD model diagrams were “interpreted as stifling,

passive, lock-step and simple” (p. 429).

As illustrated previously, there are many challenges and criticisms of ISD models.

However, the benefits of providing guidance to instructional designers, especially novices

or designers-by-assignment, may outweigh the challenges of using ISD models.

Theoretical Foundations of ISD Models

Most ISD models are grounded in theory including behavioral learning theory,

cognitive learning theory, general systems theory, and instructional theory. ISD models

are often influenced by multiple theories. For example, a particular ISD model may have

steps that include all of the following: (1) the teacher’s role in the classroom, specifically

management and disciplining students (behaviorism), (2) the student’s role in

understanding their own knowledge levels and deficiencies (cognitive learning theory),

(3) the school’s role in the cycle of evaluation (general systems theory), and (4) the peers’

role in facilitating learning by providing feedback to their classmates (instructional

theory).

Behavioral Learning Theory. Seel and Dijkstra (1997) state that ISD models are

generally based on planning and evaluation that is characterized in the stimulus-response

theory and stimulus control, which is reminiscent of behaviorism. The central theme

behind behavioral learning theory, simply put, is B. F. Skinner’s belief that learning can

be understood through observing cues of a learner within his or her environment

(Driscoll, 2005, 2012). Characteristics of a model based upon behavioral learning theory

can include conducting a skill analysis and determining the component skills necessary to

change a behavior and improve performance (Gropper, 1983). An element of the 4C/ID

model (van Merriënboer, Clark, & de Croock, 2002) that was influenced by

behaviorism is the emphasis on the “integration and coordinated performance of task-

specific constituent skills” (p. 39).

Cognitive Learning Theory. Cognitive approaches to teaching and learning

foster the acquisition of knowledge and attainment of higher-order thinking skills

(Tennyson & Rasch, 1988). Cognitive psychologists and theorists assert that mental

processes are what explain how learning occurs (Richey, Klein, & Tracey, 2011). Jerome

Bruner, a cognitive psychologist, suggested that one factor for human development (i.e.

knowing when a child has developed; the endpoint) is thinking and a well-developed and

intelligent mind that can think at higher levels and make predictions (Driscoll, 2005).

Sink (2008) states that cognitive learning theory provides instructional designers with the

“conditions that make it more likely learners will acquire the thinking strategies” (p. 205)

necessary to achieve in the workplace and in other learning environments.

Robert Gagné created a taxonomy of learning outcomes and learning

conditions in addition to the Nine Events of Instruction (Driscoll, 2005) all of which

have a foundation in Cognitive Learning Theory. The learning outcomes consist of

(1) Verbal information

(2) Intellectual skills

(3) Psychomotor skills

(4) Attitudes, and

(5) Cognitive strategies (Reiser, 2007).

In particular, verbal information, intellectual skills, and cognitive strategies

emphasize cognitive development. Verbal information strategies include

memorization and recall (Driscoll, 2005; Gagné et al., 2005), mnemonics, and

rehearsals (Richey, Klein, & Tracey, 2011). Intellectual skills, as described by Gagné

et al. (2005), are the basis for formal education, and the skills to develop can range

from skills appropriate for early childhood (e.g. vocabulary development) to higher

education (e.g. advanced mathematical calculations for engineers, educational

research techniques). Cognitive strategies are the “capabilities that govern the

individual’s own learning, remembering, and thinking behavior” (p. 50). Cognitive

strategies are usually domain specific and are developed through experience. One

strategy to promote the cognitive strategies outcome is to use real‐world cases that

foster critical thinking and strengthen problem‐solving skills (Gagné et al., 2005;

Tennyson & Rasch, 1988).

General Systems Theory. Most ISD models describe systematic processes for

designing instruction and are based upon general systems theory (Edmonds, Branch, &

Mukherjee, 1994). A system consists of interdependent groups of things that interact

regularly and perform functions consistently toward a common goal. In a system each

component is critical to the successful functioning of the system (Dick, Carey, & Carey,

2005; Edmonds, Branch, & Mukherjee, 1994; Richey, Klein, & Tracey, 2011).

General systems theory is also known as a systems approach (Richey, Klein, &

Tracey, 2011). A systems approach to instructional design generally consists of various

analyses, defining learning and performance objectives, designing and developing

interventions, implementation of the intervention, and formative and summative

evaluations (Dick, Carey, & Carey, 2005; Richey, Klein, & Tracey, 2011). The

best-known models based on general systems theory are the ADDIE model (Figure 2.1) and

the Dick and Carey model (Figure 2.2). A lesser-known instructional design model is

Merrill’s (2002b) Pebble-in-the-Pond model (Figure 2.3), which describes a systematic

approach to applying the First Principles of Instruction.

Figure 2.1. The ADDIE model is a systematic approach to instruction. Diagram from (Gustafson & Branch, 2002, p. 3).

Figure 2.2. Dick and Carey Systems Approach Model. Diagram from (Dick, Carey, & Carey, 2005).

Figure 2.3. Merrill’s (2002b) Pebble-in-the-Pond Model.

Instructional Theory. Instructional theory was the predecessor of instructional

systems design theories and models (Richey, Klein, & Tracey, 2011). Instructional theory

explains the principles of curriculum design and student learning including the

identification and alignment of learning objectives with instructional strategies, content

selection, sequencing of content, assessments, and feedback (Richey, Klein, & Tracey,

2011). Instructional theory “offers explicit guidance on how to better help people learn

and develop… kinds of learning and development may include cognitive, emotional,

social, physical, and spiritual” (Reigeluth, 1983, p. 5). Gagné’s Nine Events of

Instruction (see Table 2.1) is one example of an instructional model that has a foundation

in instructional theory because of its emphasis on student learning and the alignment of

instructional strategies with learning outcomes and the conditions of learning. Some

specific elements in the Nine Events that directly correlate with instructional theory

include determining and informing learners of the learning objectives, determining

appropriate instructional sequencing of the content and presenting the content, eliciting

performance, and providing feedback to the learner.

Table 2.1

Gagné’s Nine Events of Instruction

1 Gaining Attention

2 Informing Learners of the Objective

3 Stimulating Prior Recall

4 Presenting the Content

5 Providing Learning Guidance

6 Eliciting Performance

7 Providing Feedback

8 Assessing Performance

9 Enhancing Retention and Transfer

(Driscoll, 2005, p. 373)

First Principles of Instruction

Below a detailed description of the First Principles of Instruction, developed by

Merrill (2002a), is presented. Merrill asserts that this set of prescriptive principles is just

that, principles and not a model. The literature review challenges that assertion and for

the purposes of this research the First Principles of Instruction will be viewed as a model.

The literature written by Merrill about the First Principles of Instruction has evolved to

include more descriptions, prescribed sequencing, and graphical analogies; all of which

are characteristics of models. In a later publication Merrill (2009d) expanded his original

graphical representation of the First Principles of Instruction to include arrows that

illustrate (see Figure 2.4) a “four-phase cycle of instruction” (p. 52) providing further

evidence that this set of prescriptive principles can also be viewed as a model.

Figure 2.4. The First Principles of Instruction, an illustration of the four-phase cycle. Diagram from (Merrill, 2009d).

In an open dialog with Dr. M. David Merrill, a leader in the Instructional Systems

Design field and author of the First Principles of Instruction, at the 2003 Association for

Educational Communications and Technology (AECT) International Convention an

audience member asked Dr. Merrill about his concerns for the ISD field and the practice

of instructional design and development (Spector, Ohrazda, Van Schaack, & Wiley,

2005). Merrill’s response was two-fold. First, he expressed a concern that most

instruction was being designed and developed by “designers-by-assignment.” Designers-

by-assignment are individuals who are creating instruction and doing instructional design

tasks without being formally trained in instructional design (Merrill, 2007a). Further,

Merrill asserts that graduates of instructional design programs were not actually

designing instruction and developing instructional design expertise but working as project

managers and supervisors of “designers-by-assignment” (Merrill & Wilson, 2007;

Spector, et al., 2005). Merrill (2007a; in Spector, et al., 2005) claims that 95% of all

instructional design work is created by designers-by-assignment which may be the cause

of so much instruction being ineffective, inefficient, and disengaging. Second, Merrill

states that as a field, ISD’s “real value proposition is not training developers; it’s studying

the process of instruction… [The] value is making instruction more effective and more

efficient no matter how we deliver it or what instructional architecture we use. We ought

to be studying the underlying process of instruction” (Spector et al., 2005, p. 309).

Recognizing the need to determine what the underlying processes and

fundamental truths were in ISD, Merrill sought to systematically review the abundance of

ISD theories and models, research on learning and instruction, and common instructional

design practices (Merrill, 2002a, 2009a, 2009b) with the intent to discover the basic

truths of instruction and learning. Merrill assimilated the literature and identified a set of

basic principles that theorists, model authors, ISD leaders, as well as researchers and

practitioners could agree upon (Merrill, 2009c). A principle is a proposition or

relationship that is true under “appropriate conditions regardless of the methods or

models which implement” the principles (Merrill, 2009d, p. 43). The main criterion for

the inclusion of a principle was that it had to support e3 learning—effectiveness and

efficiency in learning as well as promote learner engagement (Merrill, 2009d).

Subsequent criteria included the general applicability of the principle in common

instructional design methods, programs and environments (Merrill, 2002a).

As a result of this lengthy review, five fundamental principles of teaching and

learning were identified and compiled to create the First Principles of Instruction (see

Figure 2.5). The five principles encompassed in the First Principles of Instruction

include: (1) problem or task-centered, (2) activation, (3) demonstration, (4) application,

and (5) integration. These principles are defined as (Merrill, 2002a, pp. 45-50):

(1) Problem or task-centered– Learning is promoted when learners are

engaged in solving real-world problems

(2) Activation Phase– Learning is promoted when relevant previous

experience is activated

(3) Demonstration Phase– Learning is promoted when instruction

demonstrates what is to be learned rather than merely telling

information about what is to be learned

(4) Application Phase– Learning is promoted when learners are

required to use their new knowledge or skill to solve problems

(5) Integration Phase– Learning is promoted when learners are

encouraged to integrate (transfer) the new knowledge or skill into

their everyday life

Figure 2.5. Framework of Merrill’s (2002a, 2008) First Principles of Instruction. *Merrill initially used the term problem-centered and later added the term task-centered.

Activation

A learner’s prior knowledge is said to be one of the most robust factors that

contribute to the acquisition of new knowledge and skill development, consequently

leading to higher levels of achievement (Lazarowitz & Lieb, 2005; Todorova & Mills,

2011). Merely having a learner recall information and previous experiences is not

sufficient to stimulate a pertinent mental model that is necessary to construct new

knowledge (Merrill, in press). Using inappropriate strategies to activate prior knowledge

can have an adverse effect on a learner’s ability to achieve by allowing the learner to

recall a mental model that is not relevant (Merrill, in press; Todorova & Mills, 2011).

Todorova and Mills (2011) posit that effective instructional strategies for activating

prior knowledge should “build positive and consistent knowledge” and lessen or

eliminate the damaging influence of misconceptions (p. 23). Lazarowitz and Lieb (2005)

suggest using a formative assessment to determine precisely what learners’ prior

knowledge is and then develop strategies to build upon the varying levels of learners’

prior knowledge. Merrill (2009d) asserts that learners sharing prior experiences with their

peers enhances activation of prior knowledge. In addition, a key strategy is to provide

some type of facilitation to ensure that appropriate mental models are being activated

(Merrill, 2009d).

Demonstration

Merrill selected the demonstration or “show me” principle in order to emphasize

the great importance of showing learners how to apply the component skills instead of

just telling the learners what to do (Merrill, in press). Demonstrations can provide a

meaningful context to general information, help learners develop causal explanations

(Straits & Wilke, 2006), and augment a learner’s imagination (Driscoll, 2005).

Demonstrations can be used to attract the learner’s attention by arousing perceptual

curiosity (Keller & Deimann, 2012) and to sustain curiosity by coupling demonstrations

with problem-solving activities (Driscoll, 2005).

Examples and non-examples can be used to demonstrate concepts; step-by-step

processes should be shown to demonstrate procedures; modeling is a technique used to

demonstrate behaviors; and graphic organizers, charts, and models can be used to portray

processes (Merrill, 2002a). The proximity of the information and the demonstration,

whether it is proximity of time or location, is as important as the demonstration

itself. Mendenhall, Buhanan, Suhaka, Mills, Gibson, and Merrill (2006a, 2006b) (see

Figure 2.6) designed the interface of an online entrepreneurship course to guide learners

in “processing the [general] information and for attending to the critical aspects of the

demonstration in a specific [portrayal]” (Merrill, in press, p. 11). The presentation of

general information is located on the left and the demonstration/portrayal is on the right

side allowing the learners to see the direct relationship between the concepts and the

demonstration of the concepts.

Application

Merrill (2002a, 2007b) uses the term application to denote instructional

interactions or practice of knowledge and skills that are being taught during instruction.

After a component skill is taught and demonstrated the learner should be provided with

multiple opportunities to apply their new knowledge. During the application phase

learners should be given guidance (Merrill, 2002a). Guidance should be diminished as

learners become more proficient during practice and guidance should be withdrawn after

the learner demonstrates their ability to complete the tasks on their own (Driscoll, 2005).

Part of guiding the learner is to provide valuable feedback along the way. Feedback

should be corrective, specific, and result in improved performance (Merrill, 2007a).

Figure 2.6. General information is located directly next to the demonstration/specific portrayal, which guides the learner from the concept being taught to the demonstration of that concept (Mendenhall et al., 2006b).

Integration

In order for the transfer of knowledge and skills to occur, a learner must be

provided with an opportunity to apply the newly acquired knowledge and skills in a novel

situation. “Learning from integration is enhanced when learners create, invent, or explore

personal ways to use their new knowledge or skill” (Merrill, 2009d, p. 53). In addition,

learning from integration is promoted when learners are given opportunities to go public

with their new knowledge and skills by demonstrating their new skills, pondering and

reflecting on experiences, discussing the things they learned, and defending their

knowledge and skills (Merrill, 2009d; in press).

Problem or Task-Centered

A problem-centered or task-centered approach engages the learner in solving

authentic real-world problems or completing real-world tasks. Merrill (2002a, 2007a,

2007b, 2009, in press) states that knowledge acquisition and skill development occur

when the learner is actively engaged in solving real-world problems or tasks. When

learners are solving real-world problems they are more motivated to learn because

learners find relevance within the authentic environment (Mendenhall et al., 2006a;

Merrill, 2009b; Keller, 2010). An authentic real-world problem is one that can be ill-

structured (Jonassen, 1997), doesn’t usually have a specific outcome or single solution

(Merrill, 2007b), requires the same cognitive demands as if the learner were in the “real

world” (Savery & Duffy, 1995), and is something the learner can expect to confront

later (Merrill, 2007b). Ideally, instruction should contain a progression of problems from

simple to complex with guidance occurring significantly more at the beginning of the

instruction and gradually diminishing to where the learner completes a problem on their

own (Mendenhall, et al., 2006a; Merrill, 2009b).

Merrill prescribes several steps to assist instructional designers in the appropriate

selection of real-world problems and tasks as well as the component skills necessary to

complete the real-world problems and tasks (see Merrill, 2007b; Merrill, in press).

Reigeluth and Carr-Chellman (2009) assert that instructional designers, and especially

designers-by-assignment, require guidance when trying to apply these principles in

various situations in order to obtain e3 learning. Collins and Margaryan (2005) state that

while the First Principles of Instruction are beneficial criteria when designing instruction,

they may not be as universal as Merrill (2002a) claims and may

need to be adapted to fit specific needs in various situations. In the following section, the

use of the First Principles of Instruction by researchers and practitioners will be

discussed.

Use of First Principles of Instruction

Gardner (2009, 2010, 2011a, 2011b) has conducted considerable research and

development using the First Principles of Instruction. Gardner (2011a) recognized the

difficulty of applying these principles in real instructional design settings; thus, he created

a worksheet to assist instructors, who are often untrained in instructional design (i.e.

designers-by-assignment). The worksheet consists of a series of questions and

subsequent strategies on how to apply the principles. Gardner (2010) takes the

instructor/designer-by-assignment through each of the principles asking various

questions. The worksheet contains questions like, “What real-world, relevant problem or

task will the learners be able to perform when they finish this lesson or unit?” “How will

your students preview what they learn?” “How will you show the learners how to

perform real-world problems or tasks?” (p. 22).

Gardner and Jeon (2009) discuss the design and development decisions they made

while creating online training on using a suite of administrative tools (e.g. financial aid,

registration, etc.) for a large university. They describe the conditions (i.e. environment,

client requirements, obstacles) under which they were to apply the First Principles of

Instruction and the decisions they made in order to work around those conditions.

Gardner (2011a) conducted a study on how award-winning professors apply the

First Principles of Instruction in face-to-face courses. The participants of this study

included one professor from each of the following departments: (a) Family, Consumer,

and Human Development, (b) Marketing, Nutrition and Food Science, and (c)

Economics. For the activation phase, the professors applied the following strategies to

activate prior knowledge: (1) identifying outcomes from prerequisite courses and using them

as the foundation on which to build new knowledge; (2) reviewing in class the course content

presented in prior class sessions; and (3) beginning each class by asking students questions

about concepts taught previously and then proceeding to ask more abstract and complex

questions. For the demonstration phase, some professors used worked examples to show

how to perform complex calculations, while another professor, from the Family,

Consumer, and Human Development department, had her students demonstrate the

lesson plans they developed by teaching a class at a local pre-school. Each student had an

opportunity to teach and then to observe and evaluate the others. During the application phase,

these same students were given real-world case studies and discussed the implications of

the cases. The professor used reflection for the integration phase, having students openly

reflect on their experiences and share those experiences with their peers.

Mendenhall et al. (2006a, 2006b) developed an online entrepreneurship course

using the First Principles of Instruction. They describe their use of First Principles of

Instruction, emphasizing the progression of problems used in the instruction. Working

closely with their subject matter experts (SMEs), the instructional designers

decided to use real-world cases to help learners create business plans and eventually

start their own businesses. The progression of whole tasks moves from a simple

business and business plan (i.e. pig farm), to slightly more complex business plans (i.e.

service business; retail business), all the way to a very complex business plan (i.e.

restaurant business). Mendenhall et al. (2006) also emphasize how the demonstrations

are used and the practice (i.e. application phase) the learners will engage in during the

instruction.

A pilot study of the entrepreneurship course was conducted among

undergraduate students who were enrolled in a core of business classes that taught the

same concepts (i.e. finances, marketing, business plan writing) as the online

entrepreneurship course. Some participants (module group) were asked to go through the

modules and take a post-test while others (control group) were just given the post-test

without going through the online modules. Seven out of 12 participants in the module

group received a score of 80% or above on the post-test, with six of the module group

participants having received a 90% or above. All eight of the control group participants

received 80% or above with only three having received a 90% or more. The results

indicate that the module using the First Principles of Instruction may be just as effective

as the business core classes.

Kim, Mendenhall, and Johnson (2010) described a conceptual framework of how

to apply the First Principles of Instruction in an online English writing course. They

identified a series of problems/whole-tasks that are scaffolded from simple writing tasks

to complex writing tasks. Using a “content-first” approach, the learners would see a

completed example of the whole-task before beginning the modules. They applied the

activation principle by choosing a problem for learners to solve from something they use

every day: e-mail. The learners activated their prior knowledge by writing a procedural

essay on how to open an e-mail. The knowledge and skills gained from the first whole

task are taken into account and used as the foundation for the second whole task, thus

building upon prior knowledge each time a learner begins a new whole task. This team of

instructional designers chose to use examples and non-examples as the demonstration

technique. For the application phase the instructional designers chose to have the learners

evaluate their peers’ writing assignments and provide feedback as well as complete a

writing assignment of their own. Finally, for the integration phase students are to

complete a new writing task using their newly acquired skills.

One common theme among most of the above descriptions was working closely

with SMEs to determine an appropriate set of whole tasks and the component skills

associated with the whole tasks. Also, it is important to note that the SMEs were not

working as the instructional designers; rather, their role was to provide content to the

instructional designers so the designers could make pedagogical decisions and apply the

First Principles of Instruction. Another noteworthy observation is that not all of the

previously mentioned literature on the use of the First Principles of Instruction describes

each phase of the First Principles of Instruction or the instructional designers’ decisions in

full detail.

Research on First Principles of Instruction

Rauchfuss (2010) conducted an exploratory study that examined the correlation

between years of formal instructional design training, experience, and the use of the First

Principles of Instruction. The sample for this study included instructional designers who

had designed and/or developed a course within one year before the study. The designers

for this study represented the military, corporate, and higher education sectors. Rauchfuss

evaluated the courses, submitted by instructional designers, using Merrill’s (2009b) e3

evaluation rubric. Participants were given a questionnaire about their years of experience

and formal instructional design training. The scores from the course evaluations and

questionnaire were correlated. The results indicated that no significant correlation was

found between years of experience and years of formal training. However, there was a

significant correlation between years of experience and the use of First Principles of

Instruction (i.e. course evaluation scores). Upon further examination, Rauchfuss (2010)

discovered that novice and expert instructional designers applied the demonstration

principle equally but expert instructional designers were more likely to use the other

principles (i.e. activation, application, integration, problem-centered).

Collins and Margaryan (2005) used the First Principles of Instruction as the basis

for creating a model for designing and evaluating courses developed and used in their

organization. They expanded the First Principles of Instruction to include workplace-

specific elements (e.g. collaboration, supervisory and stakeholder involvement,

technology, accommodation of individual learner needs). Sixty-eight workplace-

related courses were evaluated using what Collins and Margaryan (2005) called the Merrill+

evaluation criteria. Results indicated that, on average, the courses scored acceptable or

higher (on a scale of 1 to 5, acceptable is 3 and above and a score of 4 and above

indicates an advanced level of application of the principle). Specifically, the

problem-centered, application, and integration phases scored the highest, while the

activation and demonstration phases scored 2.7 and 2.6, respectively.

Most of the research relating to the First Principles of Instruction is quantitative,

using experimental, quasi-experimental, or exploratory methods that look at various

learning outcomes such as self-direction, motivation levels, and improved performance (see

Gardner, 2011b; Francom, 2011; Rosenberg-Kima, 2012; Thomson, 2002). Very little

research has been conducted on how instructional designers use the First Principles of

Instruction, their design decisions, or the ecological validity of the application of these

principles. While the research mentioned previously is important and necessary in the

validation of the First Principles of Instruction, significantly more research needs to be

conducted. In order to validate the universality and feasibility of applying the principles,

research needs to be conducted not under controlled, experimental conditions

but under conditions that are natural and dynamic.

Instructional Designer Decision-Making

Instructional Systems Design (ISD) is a complex, ill-structured, problem-solving

activity (Jonassen, 1997) that involves decision-making procedures (Winn, 1990). The

instructional design process is dependent upon the decisions that instructional designers

make (Rowland, 1993). Decisions made by instructional designers vary significantly

between novice and expert instructional designers; furthermore, variations among expert

designers are also apparent (Rowland, 1993). Research has indicated that expertise in

other domains (and presumably in ISD) does not equate to good decision-making 100% of

the time and that experts sometimes make inadequate decisions that are

“inaccurate and unreliable” (Shanteau, 1992, p. 11). Shanteau (1992) posits that previous

research indicating that most experts consistently make poor decisions is deficient. He

states that decision-making is situation specific and dependent on the skills and abilities