[] IEEE 1120-2004 - IEEE Guide for the Planning, D(Book Fi org)

2006 IEEE Symposium on Computational Intelligence and ...

-

Upload

khangminh22 -

Category

Documents

-

view

0 -

download

0

Transcript of 2006 IEEE Symposium on Computational Intelligence and ...

2006 IEEE Symposium on Computational Intelligence and

Games

CIG’06

May 22-24 2006

Reno/Lake Tahoe, USA

Sushil Louis and Graham Kendall (editors)

IEEE Catalog Number: 06EX1415 ISBN: 1-4244-0464-9 Library of Congress: 2006926422 Copyright and Reprint Permission: Abstracting is permitted with credit to the source. Libraries are permitted to photocopy beyond the limit of U.S. copyright law for private use of patrons those articles in this volume that carry a code at the bottom of the first page, provided the per-copy fee indicated in the code is paid through Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923. For other copying, reprint or republication permission, write to IEEE Copyrights Manager, IEEE Operations Center, 445 Hoes Lane, P.O. Box 1331, Piscataway, NJ 08855-1331. All rights reserved. Copyright ©2006 by the Institute of Electrical and Electronics Engineers

1-4244-0464-9/06/$20.00 2006 IEEE. 2 CIG'06 (May 22-24 2006)

Contents Preface … … … … … … … … … … … … … … … … … … … … … … … … … … … 5

Acknowledgements … … … … … … … … … … … … … … … … … … … … … … 6

Program Committee … … … … … … … … … … … … … … … … … … … … …. 7

Plenary Presentations Looking Back at Deep Blue Murray Campbell, Member of the Deep Blue Team, IBM TJ Watson Research Center. … …… 9 Challenges for Game AI Ian Lane Davis, CEO of MAD DOC software ………….…………………………………………… 9 Beyond Entertainment: AI Challenges for Serious Games Michael Van Lent, Institute for Creative Technologies ……………………….…………………. 9

Oral Presentations ChessBrain II – A Hierarchical Infrastructure for Distributed Inhomogeneous Speed-Critical Computation Colin M. Frayn, Carlos Justiniano and Kevin Lew ………………………………………………..13 Grid-Robot Drivers: an Evolutionary Multi-agent Virtual Robotics Task Daniel Ashlock …………………………………………………………………………………………… 19 Optimizations of data structures, heuristics and algorithms for path-finding on maps Tristan Cazenave …………………………………………………………………………………………. 27 Decentralized Decision Making in the Game of Tic-tac-toe Edwin Soedarmadji ………………………………………………………………………………………. 34 Integration and Evaluation of Exploration-Based Learning in Games Igor V. Karpov, Thomas D’Silva, Craig Varrichio, Kenneth O. Stanley, Risto Miikkulainen ….. 39 A Coevolutionary Model for The Virus Game P.I.Cowling, M.H.Naveed and M.A. Hossain ………………………………………………………… 45 Temporal Difference Learning Versus Co-Evolution for Acquiring Othello Position Evaluation Simon M. Lucas and Thomas P. Runarsson …………………………………………………………… 52 The Effect of Using Match History on the Evolution of RoboCup Soccer Team Strategies Tomoharu Nakashima, Masahiro Takatani, Hisao Ishibuchi and Manabu Nii ………………….. 60 Training Bao Game-Playing Agents using Coevolutionary Particle Johan Conradie and Andries P. Engelbrecht …………………………………………………………. 67 Towards the Co-Evolution of Influence Map Tree Based Strategy Game Players Chris Miles and Sushil J. Louis ………………………………………………………………………… 75 A Player for Tactical Air Strike Games Using Evolutionary Computation Aaron J. Rice, John R. McDonnell, Andy Spydell and Stewart Stremler …………………………. 83 Exploiting Sensor Symmetries in Example-based Training for Intelligent Agents Bobby D. Bryant and Risto Miikkulainen ……………………………………………………………… 90 Using Wearable Sensors for Real-Time Recognition Tasks in Games of Martial Arts – An Initial Experiment Ernst A. Heinz, Kai S. Kunze, Matthias Gruber, David Bannach and Paul Lukowicz …………… 98 Self-Adapting Payoff Matrices in Repeated Interactions Siang Y. Chong and Xin Yao …………………………………………………………………………….. 103 Training Function Stacks to play the Iterated Prisoner’s Dilemma Daniel Ashlock …………………………………………………………………………………………….. 111 Optimization Problem Solving using Predator/Prey Games and Cultural Algorithms Robert G. Reynolds, Mostafa Ali and Raja’ S. Alomari ……………………………………………… 119

1-4244-0464-9/06/$20.00 2006 IEEE. 3 CIG'06 (May 22-24 2006)

Capturing The Information Conveyed By Opponents’ Betting Behavior in Poker Eric Saund …………………………………………………………………………………………………. 126 Modeling Children’s Entertainment in the Playware Playground Georgios N. Yannakakis, Henrik Hautop Lund and John Hallam ………………………………….. 134 NPCs and Chatterbots with Personality and Emotional Response Dana Vrajitoru …………………………………………………………………………………………….. 142 A Behavior-Based Architecture for Realistic Autonomous Ship Control Adam Olenderski, Monica Nicolescu and Sushil J. Louis …………………………………………….148 Modelling and Simulation of Combat ID - the INCIDER Model Vincent A., Dean D., Hynd K., Mistry B. and Syms P. ……………………………………………….. 156 Evolving Adaptive Play for the Game of Spoof Using Genetic Programming Mark Wittkamp and Luigi Barone ………………………………………………………………………. 164 A Comparison of Different Adaptive Learning Techniques for Opponent Modelling in the Game of Guess It Anthony Di Pietro, Luigi Barone, and Lyndon While ……………………………………………….. 173 Improving Artificial Intelligence In a Motocross Game Benoit Chaper and Colin Fyfe …………………………………………………………………………… 181 Monte-Carlo Go Reinforcement Learning Experiments Bruno Bouzy and Guillaume Chaslot …………………………………………………………………… 187

Poster Presentations Optimal Strategies of the Iterated Prisoner’s Dilemma Problem for Multiple Conflicting Objectives Shashi Mittal and Kalyanmoy Deb ……………………………………………………………………… 197 Trappy Minimax - using Iterative Deepening to Identify and Set Traps in Two-Player Games V. Scott Gordon and Ahmed Reda ………………………………………………………………………. 205 Evaluating Individual Player Strategies in a Collaborative Incomplete-Information Agent-Based Game Playing Environment Andrés Gómez de Silva Garza …………………………………………………………………………… 211 Highly Volatile Game Tree Search in Chain Reaction Dafyd Jenkins and Colin Frayn …………………………………………………………………………. 217 Towards Generation of Complex Game Worlds Telmo L. T. Menezes, Tiago R. Baptista, and Ernesto J. F. Costa ………………………………….. 224 The Blondie25 Chess Program Competes Against Fritz 8.0 and a Human Chess Master David B. Fogel, Timothy J. Hays, Sarah L. Hahn and James Quon ……………………………….. 230 Anomaly Detection in Magnetic Motion Capture using a 2-Layer SOM network Iain Miller, Stephen McGlinchey and Benoit Chaperot ……………………………………………… 236 Intelligent Battle Gaming Pragmatics with Belief Network Trees Carl G. Looney …………………………………………………………………………………………….. 243 Fun in Slots Kevin Burns ………………………………………………………………………………………………… 249 Style in Poker Kevin Burns ………………………………………………………………………………………………… 257 Voronoi game on graphs and its complexity Sachio Teramoto, Erik D. Demaine and Ryuhei Uehara …………………………………………….. 265 Evolving Warriors for the Nano Core Ernesto Sanchez, Massimiliano Schillaci and Giovanni Squillero …………………………………. 272

Author Index … … … … … … … … … … … … … … … … … … … … … … … … 280

1-4244-0464-9/06/$20.00 2006 IEEE. 4 CIG'06 (May 22-24 2006)

Preface The 2006 IEEE Symposium on Computational Intelligence and Games is the second in a series of annual meetings. This volume contains 34 papers scheduled to be presented at the conference as well as 12 posters. There are several papers focusing on computational intelligence in board games. In addition, there seems to be increased interest signified by a growing number of papers relating to evolutionary computing applications in 3D computer games. Papers are organized approximately in the order in which they will be presented. This year, we have contributions from Africa, Asia, Europe, and the Americas. We would like to welcome you all to Reno/Lake Tahoe and we hope you have a productive and enjoyable symposium. Next year’s symposium is in Hawaii – see you there in April 2007. Sushil Louis and Graham Kendall The University of Nevada, Reno, USA and The University of Nottingham, UK

1-4244-0464-9/06/$20.00 2006 IEEE. 5 CIG'06 (May 22-24 2006)

Acknowledgements This symposium could not have taken place without the help of a great many people and organisations. We would like to thank the IEEE Computational Intelligence Society for supporting this second symposium. Their knowledge and experience was tremendous in helping us manage the symposium. We again used the on-line review system developed by Tomasz Cholewo. The system worked perfectly and Tom was always on hand to answer any queries we had. We would like to thank our plenary speakers (Murray Campbell, Ian Lane Davis and Michael Van Lent). Their participation at this event was an important element of the symposium and we are grateful for their time and expertise. The program committee (see next page) reviewed all the papers in a timely and professional manner. We realise that we have been fortunate to have internationally recognised figures in computational intelligence and games represented at this symposium. Sushil Louis and Graham Kendall The University of Nevada, Reno, USA and The University of Nottingham, UK

1-4244-0464-9/06/$20.00 2006 IEEE. 6 CIG'06 (May 22-24 2006)

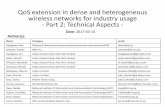

Program Committee

• David Aha, Naval Research Laboratory, USA • Peter Angeline, Quantam Leap Innovations, Inc, USA • Daniel Ashlock, University of Guelph, Canada • Ian Badcoe, NavisWorks Ltd, UK • Luigi Barone, The University of Western Australia, Australia • Bir Bhanu, University of California at Riverside, USA • Darse Billings, University of Alberta, Canada • Alan Blair, University of New South Wales, Australia • Misty Blowers, Air Force Research Laboratory, USA • Bruno Bouzy, Universite Paris 5, France • Kevin Burns, MITRE, USA • Murray Campbell, IBM T.J. Watson Research Center, USA • Darryl Charles, University of Ulster, UK • Ke Chen, The University of Manchester, UK • Sung-Bae Cho, Yonsei University, Korea • Abdenour Elrhalibi, Liverpool John Moores University, UK • Andries Engelbrecht, University of Pretoria, South Africa • Thomas English, The Tom English Project, USA • Maria Fasli, University of Essex, UK • David Fogel, Natural Selection, Inc., USA • Colin Frayn, CERCIA, University of Birmingham, UK • Colin Fyfe, University of Paisley, UK • Andres Gomez de Silva Garza, Instituto Tecnologico Autonomo de Mexico

(ITAM), Mexico • Scott Gordon, California State University Sacramento, USA • Tim Hays, Natural Selection, Inc., USA • Ernst A. Heinz, UMIT, Austria • Phil Hingston, Edith Cowan University, Australia • Evan Hughes, Cranfield University, UK • Hisao Ishibuchi, Osaka Prefecture University, Japan • Graham Kendall, The University of Nottingham, UK • Andruid Kerne, Texas A and M Interface Ecology Lab, USA • Howard Landman, Ageia Technologies, USA • Sushil Louis, University of Nevada, Reno, USA • Simon Lucas, University of Essex, UK • Stephen McGlinchey, University of Paisley, UK • Risto Miikkulainen, The University of Texas at Austin, USA • Chris Miles, University of Nevada, Reno, USA • Martin Müller, University of Alberta, Canada • Monica Nicolescu, University of Nevada, Reno, USA • Jeffrey Ridder, Innovating Systems, Inc., USA • Thomas Runarsson, University of Iceland, Iceland • Kristian Spoerer, The University of Nottingham, UK • Giovanni Squillero, Politecnico di Torino, Italy • Du Zhang, California State University, USA

1-4244-0464-9/06/$20.00 2006 IEEE. 7 CIG'06 (May 22-24 2006)

Plenary Speakers Murray Campbell Member of the Deep Blue Team, IBM TJ Watson Research Center Looking Back at Deep Blue It has been nine years since IBM Research's Deep Blue defeated Garry Kasparov, the then-reigning world chess champion, in an epic six-game match that was closely watched by millions. In this talk I will present the background that led up to the decisive match, review the match itself, and discuss some of the broader implications of Deep Blue's victory. Issues I will cover include Deep Blue's connections to high-performance computing, what "intelligence" really means, and the roles that games play in the fields of artificial intelligence, education, and entertainment. Ian Lane Davis CEO of MAD DOC software Challenges for Game AI. The Video Game industry has grown rapidly in the last few years, and the demand for more compelling and convincing characters, opponents, and comrades in games has made AI one of the hottest areas for research in games. Additionally, the AI and simulation techniques found in games have broad application in "serious" simulations of all sorts, and game developers find a lot of common areas of interest with academic and industry researchers. In this talk, I will give an overview of the AI problems found in both First Person and Strategy games, and tie this into areas of AI outside of the video game industry. Video Games turn out to be the ultimate laboratory for developing the most advanced and successful AI techniques, and we'll look at the current state of the art as well as the open problems now and in the near future Michael Van Lent Institute for Creative Technologies Beyond Entertainment: AI Challenges for Serious Games In the commercial video game industry artificial intelligence (AI) is starting to rival graphics as the key technology component that sells games. Most game reviews comment on the quality of the title's artificial intelligence for better or worse. Games with innovative AI, such as The Sims and F.E.A.R., are often top sellers. As a result game studios are actively exploring new AI techniques that fit within the many constraints of the commercial development process. Serious games, which focus on non-entertainment goals such as education, training, and communication, pose different AI challenges and have different constraints. The University of Southern California's Institute for Creative Technologies has developed ten different serious games, largely focused on military training, and has a number of research efforts focused on artificial intelligence for serious games. While these research efforts focus on the AI requirements of serious games, they often suggest innovations that have potential applications in the entertainment game industry as well.

1-4244-0464-9/06/$20.00 2006 IEEE. 9 CIG'06 (May 22-24 2006)

ChessBrain II – A Hierarchical Infrastructure for Distributed Inhomogeneous Speed-Critical Computation

Colin M. Frayn, Carlos Justiniano, Kevin Lew

Abstract—The ChessBrain project currently holds an official Guinness World Record for the largest number of computers used to play one single game of chess. In this paper, we cover the latest developments in the ChessBrain project, which now includes the use of a highly scalable, hierarchically distributed communications model.

I. INTRODUCTION & BACKGROUND HE ChessBrain project was initially created to investigate the feasibility of massively distributed,

inhomogeneous, speed-critical computation via the Internet. The game of chess lends itself extremely well to such an experiment by virtue of the innately parallel nature of game tree analysis, allowing many autonomous contributors to concurrently and independently evaluate segments of the game tree. With diminishing returns coming from increased search speed, we believe that distributed computation is a valuable avenue to pursue for all manner of substantial tree-search problems. ChessBrain is among the class of applications which leverage volunteered distributed computing resources to address the need for considerable computing power. Earlier projects include the distributed.net (Prime number search) and the SETI@home project which is focused on the distributed analysis of radio signals.

Unlike similar projects which are content to receive processed results within days and weeks, ChessBrain requires feedback in real-time due to the presence of an actual time bound game. We believe that ChessBrain is the first project of its kind to address many of the challenges posed by stringent time limits in distributed calculations – a nearly ubiquitous feature of game-playing situations.

In the two years since ChessBrain played its first match,

we have been working on a second generation framework into which we can host the same chess-playing AI structure, but which will enable us to make far better use of that same

AI and will permit efficient access to a far wider range of contributors, including locally networked machines and dedicated compute clusters.

Manuscript received December 17, 2005. C. M. Frayn is with the Centre of Excellence for Computational

Intelligence and Applications (CERCIA), School of Computer Science, University of Birmingham, UK. (e-mail: [email protected].)

C. Justiniano is a senior member of the Artificial Intelligence group at Countrywide Financial Corporation (CFC) by day and an independent researcher and open source contributor by night (e-mail: [email protected])

Kevin Lew is a software architect at CCH. In his spare time he builds Beowulf Clusters. (e-mail: [email protected])

During the first demonstration match, the ChessBrain

central server received work units from 2,070 machines in 56 different countries. Far more machines attempted to connect, but were unable to do so due to our reliance on a single central server. Our primary goal for ChessBrain II was to address this critical issue in a way that allowed for far greater scalability and removed much of the communication related processing overhead that was present in earlier versions.

As a result, we chose a hierarchical model, which we

explain in detail in the following section. This model recursively distributes the workload thus freeing the central server from much of its prior time-consuming maintenance and communications management tasks.

II. PARALLEL GAME TREE SEARCH

We included the basic algorithms for parallel game tree search in our earlier papers[1,2,3], and they have been covered in detail in the literature. The ChessBrain project’s core distributed search uses the APHID algorithm[4]. It implements an incremental, iterative deepening search, firstly locally on the server and then, after a certain fixed time, within the distribution loop. During this latter phase, the top few ply of the search tree are analysed repeatedly with new leaf nodes being distributed for analysis as soon as they arise. Information received from the distributed network is then incorporated into the search tree, with branches immediately being extended or pruned as necessary.

Leaf nodes are distributed to PeerNodes as work units. These encode the current position to be analysed and the depth to which it should be searched. Work units are distributed to the connected PeerNodes on a request basis, though they are also ranked in order of estimated complexity using intelligent extrapolation from their recorded complexity at previous, shallower depths. In this way, the most complex work units can be distributed to the most powerful PeerNodes. Work units that are estimated to be far too complex to be searched within a reasonable time are further subdivided by one ply, and the resulting, shallower child nodes are distributed instead. This is illustrated in figure 1.

T

1-4244-0464-9/06/$20.00 2006 IEEE. 13 CIG'06 (May 22-24 2006)

Fig. 1: Distributed chess tree search

If a node in the parent tree returns a fail-high (beta-cut) value from a PeerNode search, we then prune the remainder of the work units from that branch. This indicates that the position searched by the PeerNode proved very strong for the opponent, and therefore that the parent position should never have been allowed to arise. In this situation, we can cease analysis of the parent position and return an approximate upper limit for the score. PeerNodes working on these work units receive an abort signal, and they return immediately to retrieve a new, useful work unit.

III. CHESSBRAIN II

A. Motivation The motivation behind ChessBrain II is to enable far greater scalability, whilst also improving the overall efficiency compared with the earlier version. Whilst ChessBrain I was able to support well over 2,000 remote machines, the lessons learned from the original design have enabled us to develop an improved infrastructure, which is suitable for a diverse range of applications.

B. Technical Configuration ChessBrain II utilizes a custom server application, called msgCourier, which enables the construction of a hierarchical network topology that is designed to reduce network latency through the use of clustering as outlined in figure 2. The resulting topology introduces network hubs, the importance of which to graph theory has also been well covered in research superseding the random graph research of Erdos and Renyi and in the social network research of Milgram. In brief, well placed communications hubs help create small

world effects which radically improve the effectiveness of networked communication. [5, 6]. The ChessBrain II system consists of three server applications, a SuperNode, ClusterNode and PeerNode.

Component Purpose SuperNode

Central server. Interfaces with the actual game being played. Manages work unit partitioning and distribution.

ClusterNode

Manages communities of local and distributed PeerNode servers.

PeerNode

Compute node servers. Performs work unit processing.

Table 1. Server Types The central server no longer distributes work units

directly to the PeerNodes, as was the case with ChessBrain I, instead work units are sent to an array of first-level ClusterNodes, operated by trusted community members. These ClusterNodes contain no chess-playing code and behave as network hubs (relay points) through which the complete set of work units can be passed.

Fig. 2: ChessBrain II configuration

Each ClusterNode contains a complete listing of all

PeerNodes connected to it, together with a profiling score to determine the approximate CPU speed of the PeerNode, exactly as in ChessBrain I. Each PeerNode connects to one and only one ClusterNode

The ClusterNodes, having been allocated a selection of

individual work units by the SuperNode, then divide up these work units as they see fit based on the profiling data

1-4244-0464-9/06/$20.00 2006 IEEE. 14 CIG'06 (May 22-24 2006)

that they obtain from their own network of PeerNodes. The primary considerations are that the work units are distributed to sufficient machines to ensure a reliable reply within the time required, plus to ensure that the work units perceived to require a greater computation effort are allocated to those PeerNodes deemed most fit to analyse them.

In subsequent versions, we intend to move some of the

chess logic from the SuperNode onto the ClusterNodes, further reducing the communications overhead. Our anticipation is that the SuperNode will divide up the initial position into large tree chunks, and then distribute just these positions to the ClusterNodes. The ClusterNodes will then further subdivide the given positions, allocating the leaf nodes to the attached PeerNodes as it sees fit, and accumulating the returned results as and when they arrive. The ClusterNodes will then return a single result to the central SuperNode, instead of many.

C. ChessBrain II Communication Protocols Early versions of ChessBrain relied on industry standard

XML data encoding first using XMLRPC, and later using SOAP. The decision to use SOAP was driven by a desire for interoperability with emerging web services. However, the need to streamline communication has steered us toward minimizing our use of XML in favour of economical string based S-Expressions[7].

To further streamline communication we've implemented

a compact communication protocol similar to the Session Initiation Protocol (SIP)[8] for use in LAN and cluster environments where we favour the use of connectionless UDP rather than stream-based TCP communication.

The ChessBrain I communication protocol consisted of

XML content which was first compressed using ZLib compression and then encrypted using the AES Rijndael cipher. Although each PeerNode was quickly able to decrypt and decompress the payload content, the burden was clearly on the SuperNode server where each message to and from a PeerNode required encryption and compression operations. The situation was compounded by the fact that each PeerNode communication occurred directly with a single central SuperNode server.

With ChessBrain II we’ve eliminated direct PeerNode

communication with the central SuperNode and introduced the concept of batch jobs, which combine multiple jobs into a single communication package. The reduction in messaging reduces the impact to the TCP stack while the grouping of jobs greatly improves the compression ratio.

D. Architecture Advantages The most significant architectural change to ChessBrain involves the introduction of network hubs called ClusterNodes, as outlined in section IIIB.

ChessBrain I used a single SuperNode server to handle

the remote coordination of hundreds of machines. Each dispatched job required a direct session involving the exchange of multiple messages between the SuperNode and its PeerNode clients. With ChessBrain II, jobs are distributed from a central server at distributedchess.net to remote ClusterNodes, which in turn manage local communities of PeerNodes. Each ClusterNode receives a batch of jobs, which it can directly dispatch to local PeerNodes thereby eliminating the need for individual PeerNode to communicate directly with the central server. This is necessary to harness a compute cluster effectively. Each ClusterNode collects completed jobs and batches them for return shipment to the central SuperNode server. The efficient use of ClusterNode hubs and job batching results in a reduced load on the central server, efficient use of clusters, reduced network lag, and improved fault tolerance.

We envision that ClusterNodes will largely be used by individuals desiring to cluster local machines. Indeed during the use of ChessBrain I we detected locally networked machines containing five to eighty machines. Most local networks in existence today support connection speeds between 10 to 1000 MBit per second, with the lower end of the spectrum devoted to wireless networks, and the higher end devoted to corporate networks, research networks and compute clusters. ChessBrain II is designed to utilise cluster machines by taking full advantage of local intranet network speeds and only using slower Internet connections to communicate with the SuperNode when necessary.

If we assume that there are roughly as many PeerNodes connected to each ClusterNode as there are ClusterNodes, then effectively the communications costs for each Cluster node, and indeed the SuperNode itself, is reduced to its square root. So, with total node count N, instead of one single bottleneck of size N, we now have approximately (sqrt(N)+1) bottlenecks, each of size sqrt(N). When addressing scalability issues, this is a definite advantage, allowing us to move from an effective node limit of approximately 2,000 to around one million machines.

E. Architecture Drawbacks

It is only fair to consider the drawbacks of the above architecture and to explain why it may not be suitable for every gaming application.

Firstly, as with any distributed computation environment,

there is a substantial overhead introduced by remote communication. Indeed, communication costs increase as the number of available remote machines increases. ChessBrain I involved a single server solution that was overburdened as an unexpectedly large number of remote machines became available. Communication overhead on

1-4244-0464-9/06/$20.00 2006 IEEE. 15 CIG'06 (May 22-24 2006)

ChessBrain I reached approximately one minute per move under peak conditions. However, with the experience gained since that first exhibition match, and with the subsequent redesign of ChessBrain I, we have reduced the overhead to less than ten seconds per move.

The presence of communication overhead means that

shorter time scale games are not currently suitable for distributed computation. However, games that favour a higher quality of play over speed of play are likely to make good use of distributed computation.

Anyone who has ever attempted to write a fully-functioning alpha-beta pruning chess search algorithm featuring a multitude of unsafe pruning algorithms such as null-move, will immediately appreciate the complexity of debugging a search anomaly produced from a network of several thousand computers, each of which is running a number of local tree searches and returning their results asynchronously. Some of the complexities of such an approach are covered in [9].

Adding hierarchical distribution increases complexity,

and highlights the importance of considering how a distributed application will be tested early in the design phase. With ChessBrain II we’ve had to build specialized testing applications in order to identify and correct serious flaws which might have otherwise proceeded undetected. Such a suite of testing tools is invaluable for a distributed application of this size.

F. Comparison with alternative parallel implementations

Other approaches towards parallelising search problems focus primarily on tightly-coupled compute clusters with shared memory. The aim of this paper is not to offer a thorough analysis of the advantages and drawbacks of remotely distributed search versus supercomputer or cluster-based search. The main advantages of this method over that used by, for example, the Deep Blue project [10] and the more recent Hydra project are as follows:

• Processing power – With many entirely separable applications, parallelising the search is a simple way to get extra processing power for very little extra overhead. For chess, the parallelisation procedure is highly inefficient when compared to serial search, but we chose this application because of its inherent difficulty, our own interest and its public image.

• Distributed memory – With many machines contributing to the search, the total memory of the system is increased massively. Though there is much repetition and redundancy, this still partly overcomes the extra practical barrier imposed by the finite size of a transposition table in conventional search.

• Availability – the framework described in this paper is applicable to a wide range of projects requiring

substantial computing power. Not everyone has access to a supercomputer or a substantial Beowulf cluster.

• Costs – It’s easier to appeal to 10,000 people to freely contribute resources than it is to convince one person to fund a 10,000 node cluster.

Drawbacks include:

• Communication overheads – time is lost in sending/receiving the results from PeerNodes.

• Loss of shared memory – In games such as chess, the use of shared memory for a transposition table is highly beneficial. Losing this (amongst other cases) introduces many overheads into the search time [11]

• Lack of control – the project manager has only a very limited control over whether or not the contributors choose to participate on any one occasion.

• Debugging – This becomes horrendously complicated, as explained above.

• Software support – The project managers must offer support on installing and configuring the software on remote machines.

• Vulnerability – The distributed network is vulnerable to attacks from hackers, and must also ensure that malicious PeerNode operators are unable to sabotage the search results.

At the present time, we are not aware of any other effort to evaluate game trees in a distributed style over the internet.

G. Comparison with other Chess projects

We are often asked to compare ChessBrain with more famous Chess machines such as Deep Blue and the more recent Hydra project. A direct comparison is particularly difficult as ChessBrain relies on considerably slower communication and commodity hardware. In contrast, both Deep Blue and Hydra are based on a hardware-assisted brute force approach. A more reasonable comparison would be between distributed chess applications running on GRIDs and distributed clusters.

H. The need for MsgCourier

While considering architectural requirements for ChessBrain II, we investigated a number of potential frameworks including the Berkeley Open Infrastructure for Network Computing (BOINC) project. BOINC is a software application platform designed to simplify the construction of public computing projects and is presently in use by the SETI@home project, CERN’s Large Hadron Collider project and other high-profile distributed computing projects[12].

After extensive consideration we concluded that

ChessBrain's unique requirements necessitated the

1-4244-0464-9/06/$20.00 2006 IEEE. 16 CIG'06 (May 22-24 2006)

construction of a new underlying server application technology[13]. One of our requirements for ChessBrain II's software is that it must be a completely self-contained application that is free of external application dependencies. In addition, our solution must be available for use on both Microsoft Windows and Linux based servers, while requiring near zero configuration. The rationale behind these requirements is that ChessBrain II allows some of our contributors to host ClusterNode servers. It is critically important that our contributors feel comfortable with installing and operating the project software. We found that BOINC requires a greater level of system knowledge than we're realistically able to impose on our contributors. Lastly, BOINC was designed with a client and server methodology in mind, while our emerging requirements for ChessBrain II include Peer-to-Peer functionality.

Well over a year ago we began work on the Message Courier (msgCourier) application server in support of ChessBrain II. MsgCourier is designed to support speed critical computation using efficient network communication and enables clustering, which significantly improves overall efficiency. Unlike other technologies, msgCourier is designed to enable ad-hoc machine clusters and to leverage existing Beowulf clusters.

MsgCourier is a hybrid server application that combines

message queuing, HTTP server and P2P features. When we embarked on this approach there were few such commercial server applications. Today, Microsoft has release SQL Server 2005 which combines a SQL Engine, HTTP server and messaging server features. The industry demands for performance necessitates the consideration of hybrid servers.

We chose to build msgCourier independently of

ChessBrain (and free of chess related functionality) in the hopes that it would prove useful to other researchers.

The following were a few of our primary design considerations:

• A hybrid application server, combining message queuing and dispatching with support for store and forward functionality.

• Multithreaded concurrent connection server design able to support thousands of concurrent connections.

• High-speed message based communication using TCP and UDP transport.

• Built-in P2P functionality for self-organization and clustering, service adverting and subscribing.

• Ease of deployment with minimal configuration requirements.

• Built-in security features which are comparable to the use of SSL and or SSH.

The msgCourier project is under continued development.

We are keen to emphasize here that the relevance of the

ChessBrain project is not just to the specific field of computer chess, but to any distributed computation project. Hence, we believe that the msgCourier software is a valuable contribution to all areas of computationally intensive research. The direct application here demonstrates that the framework is also flexible enough to operate within gaming scenarios, where results are required on demand at high speed and with high fidelity, often in highly unpredictable search situations.

More information on the msgCourier project is available

at http://www.msgcourier.com

CONCLUSIONS : THE FUTURE OF DISTRIBUTED GAMING

Distributed computation offers the potential for deeper game tree analysis for a variety of potential gaming applications. In particular, it overcomes the restrictions imposed by Moore’s law, producing substantial gains for any game-playing code that is primarily computationally limited. For games such as Go, the effective contribution is reduced as the branching factor is so high that such games are algorithmically limited rather than computationally limited in most cases.

Speed-critical distributed computation also has many clear

applications within the financial sector where rapid decisions must be made, often based on approximate or inadequate data.

During the past decade we’ve seen high profile Man vs.

Machine exhibitions. We feel that the general public will eventually lose interest in exhibitions where a single human player competes against a machine which is virtually indistinguishable from the common personal desktop computer. Not since Deep Blue has any Man vs. Machine event really captured the public’s imagination.

We feel strongly that the future of Man vs. Machine

competitions will migrate toward a format where a human team competes against a distributed network. Such events will take place over the Internet with distributed human members collaborating remotely from their native countries. This exhibition format will likely capture the public’s imagination as it more closely resembles themes played out in popular science fiction.

On the ChessBrain project we’ve learned the importance

of capturing the public’s imagination for without their support massively distributed computation would not be economically feasible[14]. Generally, a project is only as good as the contributors that it is able to attract. This entire field of research – that of attracting distributed computation teams to a project – seems remarkably underdeveloped in the literature, despite the fact that it has an arguably greater effect on the success of any distributed project than any

1-4244-0464-9/06/$20.00 2006 IEEE. 17 CIG'06 (May 22-24 2006)

degree of algorithmic sophistication. More work in this area seems extremely important, though it lies firmly within the realms of psychology and sociology rather than pure computer science.

We’ve completed preliminary testing on small clusters with the support of ChessBrain community members [15]. During the first quarter of 2006 we intend to release a major update of our project software when we will begin large-scale public testing of ChessBrain II. We expect ChessBrain II to be fully operational by the second quarter of 2006.

We are actively preparing for a second demonstration match between ChessBrain II and a leading international chess grandmaster within the next 12 months. Anyone wishing to contribute to this event is welcome to contact the authors at the addresses supplied.

ACKNOWLEDGMENT We would like to acknowledge the hundreds of

international contributors who have supported the ChessBrain project over the past four years. In addition, and by name, we would like to thank Cedric Griss and the Distributed Computing Foundation; Kenneth Geisshirt and the Danish Unix users Group; Peter Wilson; Gavin M. Roy with EHPG Networks; and Y3K Secure Enterprise Software Inc., whose outstanding support enabled us to establish a world record and to further contribute to this emerging field. Colin Frayn is currently supported by a grant from Advantage West Midlands (UK).

REFERENCES [1] Frayn, C.M. & Justiniano, C., “The ChessBrain Project – Massively

Distributed Inhomogeneous Speed-Critical Computation”, Proceedings IC-SEC, Singapore, 2004

[2] Justiniano, C. & Frayn, C.M. “The ChessBrain Project: A Global Effort To Build The World's Largest Chess SuperComputer”, 2003 ICGA Journal, Vol. 26, No. 2, 132-138

[3] Justiniano, C. “ChessBrain: A Linux-Based Distributed Computing Experiment”, 2003 Linux Journal, September 2003

[4] Brockington, M. “Asynchronous Parallel Game-Tree Search”, 1997 PhD Thesis, University of Alberta, Dept. of Computer Science

[5] Barabasi, A-L. “Linked: The new Science of Networks”, 2002. Cambridge, MA: Perseus

[6] Gladwell, M. “The Tipping Point”, 2000. Boston: Little and Company [7] Rivest, R. L., “S-Expressions”., MIT Theory group,

http://theory.lcs.mit.edu/~rivest/sexp.txt [8] Session Initiation Protocol (SIP) http://www.cs.columbia.edu/sip/ [9] Feldmann, R., Mysliwietz, P., Monien, B., “A Fully Distributed Chess

Program”, Advances in Computer Chess 6, 1991 [10] Campbell, M., Joseph Hoane Jr., A., Hsu, F., “Deep Blue”, Artificial

Intelligence 134 (1-2), 2002. [11] Feldmann, R., Mysliwietz, P., Monien, B., “Studying overheads in

massively parallel MIN/MAX-tree evaluation”, Proc. 6th annual ACM symposium on Parallel algorithms and architectures , 1994

[12] Berkeley Open Infrastructure for Network Computing (BOINC) project: http://boinc.berkeley.edu/

[13] Justiniano, C., “Tapping the Matrix: Revisited”, BoF LinuxForum, Copenhagen 2005

[14] Justiniano, C., “Tapping the Matrix”, 2004, O’Reilly Network Technical Articles, 16th, 23rd April 2004. http://www.oreillynet.com/pub/au/1812

[15] Lew, K., Justiniano, C., Frayn, C.M., “Early experiences with clusters and compute farms in ChessBrain II”. BoF LinuxForum, Copenhagen 2005

1-4244-0464-9/06/$20.00 2006 IEEE. 18 CIG'06 (May 22-24 2006)

Grid-Robot Drivers:an Evolutionary Multi-agent Virtual Robotics Task

Daniel AshlockDepartment of Mathematics and Statistics

University of GuelphGuelph, Ontario, Canada, N1G 2W1

ABSTRACT

Beginning with artificial ants and including such tasks asTartarus, software agents that are situated on a grid havebeen a staple of evolutionary computation. This manuscriptintroduces a grid-robot problem in which the agents simulatesingle or multiple drivers on a two-lane interstate freeway thatmay have obstructions. The drivers are represented as If-Skip-Action lists, a linear genetic programming structure. With onedriver present, the problem is similar to an artificial ant task,requiring only that the grid robot learn where fixed obstaclesare placed. When multiple drivers are present, the process ofdriving can be cast as a game similar to the prisoner’s dilemma.The relative advantage to be gained from inducing anothervehicle to crash is analogous to defection in the prisoner’sdilemma. The game differs from prisoner’s dilemma in thatdefecting is a complex learned behavior, not simply a movethe grid robot may choose. A skilled opponent may doge anattempt at “defection”. Six sets of experiments with up to fivedrivers and two fixed obstacles are performed in this study.In multi-driver simulations evolution locates a diversity ofbehaviors within the context of the driver task.

I. INTRODUCTION

The problem of evolving a controller for a model race caris one that has been examined before in the evolutionary com-putation literature [10]. Evolved controllers have competed atthe Congress on Evolutionary Computation in a competitionorganized by Simon Lucas for the last several years. This paperintroduces a grid-robot version of the car racing problem, adiscrete version of the problem. A grid robot is a virtual robotthat lives on a grid made of squares. The robot has a positionand heading on the the grid and shares it with other roboticagents and both fixed and movable obstacles. Examples ofother grid-robot problems are the Tartarus task [11], [1], [3],[6], [2] in which a robot living on a 6 × 6 grid is awardedfitness for pushing boxes against the wall, the Herbivore [2]task in which a grid-robot forages for food, and the North Wallbuilder task [4], [2] in which a grid robot tries to retrieve boxesfrom a delivery site and use them to build a wall. Grid robotshave also been used in studying the theoretical biology of apredator-prey system [5].

The motivation for this research is the creation of a simpleframework in which evolutionary algorithms can be used toinvestigate the game theory of driving. In this case “simple”means a simulation with low computational cost in whicha large number of evolutionary runs can be performed in ashort time to survey the strategy space and obtain statisticalperspective. The idea occurred to the author during drivesback and forth between Southern Ontario and Iowa. In theregions of Michigan, Indiana, and Illinois at the southerntip of Lake Michigan the interstate system is in a state ofalmost continuous repair. This situation often leads to twostreams of traffic becoming one. This, in turn, creates anarena in which informal behavioral experiments take placein an often infuriating fashion. The goal of this research isa modeling environment in which this situation can be studiedwith evolved software agents. This study presents the basicframework and examines the impact of varying the numberof obstacles and drivers on the road. The remainder of themanuscript is organized as follows. Section II specifies a two-lane grid-robot driver problem with variable numbers of carsand obstructions. Section III gives the representation usedto encode the evolvable grid-robot drivers. Section IV givesthe design of the experiments performed. Section V givesthe results of the experiments and performs some analysis.Section VI draws conclusions and outlines the next steps inthis research.

II. THE GRID-ROBOT DRIVER PROBLEM

A grid robot is a virtual robot embedded in a grid environ-ment. The grid for the robotic drivers is a 2×N grid with thelong dimension wrapping to form an arbitrarily long periodicroad. The road contains other grid-robot drivers and obstacleswith a fixed position. Examples of driving grids are shown inFigure 1.

Henceforth grid-robot drivers will be called drivers. A driveron the grid has a position along the long dimension of the grid,a lane, and a speed. The driver is given as inputs the distanceto the next other driver and the next barrier both in his ownand in the other lane. In addition the driver is given his ownspeed. Distances to the next obstacle and driver are limited toa sight distance Ds. If no driver or obstacle is visible withinsight distance for a given lane then the input reports a value

1-4244-0464-9/06/$20.00 2006 IEEE. 19 CIG'06 (May 22-24 2006)

0123456789012345689===================> #-------------------

===================

012345678901234567890123456789==============================> > > > > #------------------------------

#==============================

Fig. 1. Above are segments of the periodic grids for the driving agents. Thestarting position of driving agents on the grid is shown with > while barriersare denoted by a #. The environment has two lanes.

of zero. On a given time step, a driver may take one of eightactions:

1) Slow down two, to a minimum speed of zero.2) Slow down one, to a minimum speed of zero.3) Do not change speed.4) Speed up one, to a maximum speed of Smax.5) Slow down two, to a minimum speed of zero, while

changing lanes.6) Slow down one, to a minimum speed of zero, while

changing lanes.7) Do not change speed while changing lanes.8) Speed up one while changing lanes.Algorithm 1 specifies how driver speed and position are

updated. Updating happens by time-steps with each driverexecuting one action and moving a number of grids equalto its speed in each time-step. On a time step each driversaction is computed, and then the drivers move in a randomorder that is recomputed each time step. If a driver is tryingto change lanes, it does so the first time it is trying to moveforward and there is a space open beside it in the other lane.Movement is in speed order as well as random order in thefollowing sense. Counting down from the highest speed, alldrivers with a speed at least the current speed in the countdownadvance one grid. If a driver advances into a grid occupiedby another driver or an obstacle, then the advancing driver“crashes” and is removed from the simulation. This is not thetypical result of a crash, that only the nominally guilty partyshould suffer. The exploration of other alternatives such asboth drivers being removed or a probabilistic result are leftfor subsequent studies.

This updating algorithm was chosen because it is simple andapproximates continuous movement as well as possible given

the grid environment. Other than crashes, it is impossible todrive off the road because the lanes are “‘current” and “other”rather than “left” and “right.”

Algorithm 1:

Initialize driver positions as in Figure 1Initialize driver speed to zeroFor T steps

Shuffle drivers into a random orderFor all drivers in random order

Compute each driver’s actionIf action includes lane-change

Set driver lane change flagChange speed as per actionFor(s=Smax down to 1)

If(driver speed at least s)If(lane-change flag and possible)

Change lanes, reset flagEnd IfAdvance driver one squareIf grid is occupied

crash, driver is removed from gridEnd If

End IfEnd For

End forEnd For

The fitness of a driver in the current study is the distance(number of grids) it drives in a fixed number of time stepsT . Crashing thus results in a fitness penalty, because fitnessevaluation for the crashing agent ends early. When multipledrivers are present, it is possible to slow down abruptly orlane change in front of another driver. The lack of an inputreporting the distance to the nearest driver behind makes thesesorts of behaviors harder, but not impossible.

Experiments with single drivers are similar to artificial antproblems [8], [9], because the driver must simply learn a fixedset of obstacles, not a complex and changing environmentpopulated by other drivers. The driver problems are simplerthan artificial ant problems because they are essentially one-dimensional in character and have fewer obstacles than the anthas pieces of food to locate. They are more complex in that thedrivers can see more of their world than artificial ants and inthat drivers must deal with a more complex simulated physicsinvolving velocity. Nevertheless both artificial ant and singledriver problems are optimization tasks. Once learned, a setof correctly times turns or lane changes form an unchangingoptima solution to their respective problems.

As will become apparent in Section V the driver problemshave very different character when the number of obstaclesand other drivers are changed. The values for the simulationparameters Ds, Smax, and T are given in the ExperimentalDesign section.

1-4244-0464-9/06/$20.00 2006 IEEE. 20 CIG'06 (May 22-24 2006)

III. ISAC LIST DRIVERS

The evolvable structure used to control the driver is an ISAclist. The ISAc list used in this manuscript is a generalizationof the one presented in [1], [5], and [2]. An ISAc list is anarray of ISAc nodes. An ISAC node is a hextuple

(a, b, c, t, act, jmp)

where a and b are indexes into the set of inputs available toto the driver, c is a constant in the range 0 ≤ c ≤ Ds, t

is the type of the Boolean test used by the node, act is anaction that the ISAc list may take, and jmp is a specificationof which position in the list to jump to if the action happensto be a jump action. An ISAc list comes equipped with a setof Boolean tests available to each node. The tests available tothe nodes in this study have types 0-3 and are, respectively,v[a] < v[b], v[a] < c, v[a] ≤ v[b], and v[a] ≤ c. The arrayv[] is the set of inputs available to the driver. Execution in anISAc list is controlled by the instruction pointer.

The instruction pointer starts at the beginning of the ISAclist, indexing the zeroth node. Using the entries a, b, c, t, act,and jmp of that node, inputs v[a] and v[b] are retrieved forthe current driver, and the Boolean test of type t is applied tov[a] and v[b] or a and c, as appropriate. Recall that the vectorv[] holds the distance from the driver to the next driver andobstacle ahead in each lane as well as the driver’s currentspeed, a total of five inputs.. If the test is true, then theaction in the act field of the node is performed; otherwise,nothing is done. If that action is “jump,” the contents of thejmp field are loaded into the instruction pointer. Any otheraction is executed according to its own type, as describedsubsequently. After execution of any action, the instructionpointer is incremented. Pseudocode for the basic ISAc listexecution loop is given in Algorithm 2.

Algorithm 2:IP← 0 //Set Instruction Pointer to 0.Get Inputs; //Put initial values in data vector.Repeat //ISAc evaluation loop

With ISAc[IP] do //with the current ISAc node,If test(v[a],v[b],c) then //Conditionally

PerformAction(act); //perform actionUpdate Inputs; //Revise the data vectorIP ← IP + 1 //Increment instruction pointerifIP > Length IP ← 0 //Wrap at end

Until Done;

There are 3 types of actions used in the act field of anISAc node. The first is the NOP action, which does nothing.The inclusion of the NOP action is inspired by experience inmachine-language programming. An ISAc list that has beenevolving for a while will have its performance tied to thepattern of jumps it has chosen. If new instructions are inserted,then the target addresses of many of the jumps change. An“insert an instruction” mutation operator could be tied to a “re-number all the jumps” routine, but this is clumsy. In particular,it would create problems when the crossover operator wasapplied is one parent had been renumbered and the other had

not. Instead a “do nothing” instruction serves as a placeholder.Instructions can be inserted by mutating a NOP instruction,and they can be deleted by mutating the instruction to bedeleted into a NOP instruction. Both of these mutations arepossible without the annoyance of renumbering everything.The second type of action is the jmp or jump action that movesthe instruction pointer, changing the flow of control. The thirdtype of actions is an exterior action that causes an ISAc list togenerate an action relevant to the simulation. In this study thereare eight external actions corresponding to the eight possibleactions a driver can take. There are thus 10 total actions; donothing, jump, and the eight possible simulation actions.

ISAc lists are initialized at random, filling in uniformlyselected, semantically valid values to the six fields of eachnode. This means that it is not difficult to create an ISAc listthat can run indefinitely without generating an exterior action.To prevent such “infinite loop” behaviors, there is a maximumnumber of ISAc nodes an ISAc list may execute. This limit iscalled the node limit for the ISAc list. If it exceeds this limitthe list has timed out and is removed from the simulation asif the driver it was controlling had crashed.

ISAc lists are a form of genetic programming [7] that usesa fixed-size linear data structure. The data structure has beenspecified: it remains to specify the variation operators. Theevolution used in this study uses two variation operators. Thefirst is two-point crossover operating on the array of ISAcnodes with the nodes treated as atomic objects. The secondis a mutation operator that first selects a node uniformly atrandom and then modifies one of its six fields to a new, validvalue, also selected uniformly at random.

The ISAc lists presented here are a generalization of earlierdesigns in that they permit multiple Boolean tests to beavailable rather than a single test and in that they haveconstants localized to the nodes. The constants also have agreater range of values. Previously, ISAc lists used constantsas additional inputs. This means that when the number ofconstants is large there is a high probability of two constantsbeing compared. The grid-robot driving problem uses moreconstants (0-Ds = 8) than inputs (5) and so has an enhancedprobability of comparing constants in the original design forISAc lists.

IV. EXPERIMENTAL DESIGN

The evolutionary algorithm used in this study operates on apopulation of 120 ISAc lists functioning as drivers. Each ISAclist has 30 nodes. The model of evolution is single tournamentselection [2] with tournament size four. The model of evolutionis generational. The population is shuffled into groups of fourdrivers. The two best in each group of four are copied over thetwo worst. The copies undergo crossover and a single mutationeach. Evolution continues for 1000 generations in each run.

For fitness evaluation the following parameters are used.Drivers execute T = 100 time steps with a maximum speedof Smax = 6, a sight distance of Ds = 8, and a node limitof 2000 ISAc nodes. A collection of six experiments wereperformed using the two grids shown in Figure 1 as well as

1-4244-0464-9/06/$20.00 2006 IEEE. 21 CIG'06 (May 22-24 2006)

TABLE I

NUMBERS OF CARS AND OBSTACLES IN THE SIX EXPERIMENTS

PERFORMED IN THIS STUDY.

Experiment Cars Obstacles1 1 12 1 23 2 14 2 25 5 06 5 1

a grid with no obstacles. The details of these experimentsare given in Table I. One hundred evolutionary runs wereperformed in each experiment. The fitness evaluation in theseexperiments used one driver and one obstacle, one driver andtwo obstacles, two drivers and one obstacle, two drivers andtwo obstacles, five drivers and no obstacles, and five driversand one obstacle, respectively.

V. RESULTS AND ANALYSIS

Figure 2 shows the trajectory of crashes as a function ofgenerations. Figure 3 shows the trajectory of driver speed asa function of generations. Evolution functioned nominally inthe sense that the number of crashes decreased and speedincreased. The degree of these effects varied significantlybetween experiments.

The results suggest that the grid-robot driver environmentreplicates some features of actual driving.

• A driver by himself can learn to drive rapidly whilemissing isolated obstacles. The runs with one driver andone obstacle achieved the maximum possible speed for itsbest fitness and had relatively few crashes. Note that somecrashes are inevitable because half the population consistsof drivers newly produced by crossover and mutation.

• A single driver with frequent obstacles is still fairlysafe and drives quickly, but not at top speed. The runswith an obstacle in each lane and one driver achievedabout 60% of the maximum speed on average and even-tually had the average number of crashes decrease to afairly low level.

• Hell is other drivers. The very slowest populationswere those with five cars and the number of crashesstayed persistently above a level that could be explainedby new, mutant drivers. The experiment using five carswith one obstacle was significantly slower than all othersets of runs, and using five cars with no obstacles wassignificantly slower than all the remaining runs.

• Obstacles are far more dangerous if there are otherdrivers. The runs with two cars and two obstacles ex-perienced a significantly higher level of crashes than anyother type of run. The five-car one-obstacle runs did not

have an exceptional level of crashes, but this was achievedby driving at about 1

12of the maximum possible speed.

Examples of runs from each of the six experiments showingthe mean competent fitness, best fitness, and number of crashesappear in Figure 4. These runs are not entirely typical, ratherthey were chosen to demonstrate interesting dynamics. Meancompetent fitness was first used, as far as the author isaware, in [6], though the name for it is coined here. Inany evolutionary computation system with disruptive variationoperators, the fitness of newly created creatures is often quitelow. For the drivers both variation operators can, in somecircumstances, create drivers that cheerfully accelerate into thefirst obstacle, yielding a fitness less than 1% of the maximumpossible. In order to get a statistic with lower variation andmore information than mean fitness over the entire population,mean fitness is instead computed over only those creaturesthat have survived one round of selection. This removes thesubstantial majority of the completely incompetent mutants’sfitness values from the mean-fitness statistic.

The plot shown for one vehicle and one obstacle is atyp-ically bad in the sense that the mean and best fitness didnot arrive at 585 within five generations. The fitness of 585results from a driver simply stepping on the accelerator whilemanaging to avoid obstacles. In the run shown, a locallyoptimal (slower) strategy dominated for about 30 generations.A small increase in the number of crashes accompanies thetakeover by a fitness 585 strategy near generation 50. Thissuggests that the initial slowness of the run resulted from astrategy that had trouble avoiding the obstacle at top speed.The bump in the crash statistic suggests intermediate forms,perhaps created by crossover, that had higher speed and alsoa good chance of hitting the obstacle. With only one car, thefitness function is deterministic, and so a crash is geneticallypredestined.

Examining the plot for one car with two obstacles, thepresence of a bump in the crash statistic accompanying inno-vation is far more pronounced. Such a crash-bump appears ingenerations 10, 25, and 90, each accompanied by a noticeablejump in the best fitness. The model of evolution used is elitistwith the best two drivers at no risk of death. With one driverthe determinism of fitness evaluation yields a fitness ratchet.The mean competent fitness simply chases the best fitnessefficiently in both sets of runs with a single driver.

All four plots with multiple drivers exhibit maximum fitnessthat falls back. This is typical behavior for multiple driverruns. When multiple drivers are present, a relative fitnessadvantage may be obtained either by having a high absolutefitness (driving fast without crashing) or by getting the otherdrivers to crash. Cursory examination of the ISAc lists showsthat actions with lane changes are more common for thedrivers evolved in multiple-driver runs. Crashes are far morecommon in multiple-driver runs; the presence of an excess oflane changing, especially in the five-driver no-obstacle runs,strongly suggests that many of the crashes are strategic.

The random shuffling of driver action order in multiple-driver runs makes fitness evaluation stochastic: running the

1-4244-0464-9/06/$20.00 2006 IEEE. 22 CIG'06 (May 22-24 2006)

0

20

40

60

80

100

120

0 20 40 60 80 100

Cra

shes

generations

95% Confidence, number of crashes per generation

2 cars 2 obstacles

1 car 1 obstacle

5 cars 1 obstacle

Other runs

Fig. 2. This figure shows confidence intervals on the number of crashes in each generation for all six experiments for the first 100 generations. After 100generations there is no substantial change in the average number of crashes.

same set of drivers twice need not yield the same fitnessnumbers. In addition to being stochastic the multiple driverfitness evaluation is co-evolutionary: fitness of an individualdepends on the identity of the others being co-evaluated. Thismeans that the evolutionary dynamics should be substantiallymore complex in the multiple-driver runs. Examination of theplots suggest they are. The time for mean competent fitnessto catch best fitness is longer, and both the fitness and crashstatistics jump around more.

The two-car two-obstacle run shows a not uncommonbehavior from about generations 30-120 in which the numberof crashes exceeds the best fitness. This could mean thatcompetitive crash-induction exceeds speed as a source ofrelative fitness in some epochs. In spite of its initial “DeathRace 2000” character, this run eventually steadies down toachieve a fairly high fitness, well above the average for two-driver two-obstacles runs. The two five-driver plots shownare among the most fit in their respective experiments. Thebump near generation 130 in the five-driver one-obstacle runis a common feature that seems to represent a momentaryadvantage in getting others to crash that is rapidly lost.

VI. CONCLUSIONS AND NEXT STEPS

As presented, the driving task for grid robots can be run asan optimization task with a single driver or as a co-evolvinggame with multiple drivers. In many instances the systemlocates fast drivers. The multiple-driver experiments do not

achieve as high a speed on average, perhaps because crashingother drivers is a more efficient means of achieving highfitness. Even in the very simple system presented in this study,the presence of multiple drivers enables complex evolutionarydynamics.

A. Why would drivers ever cooperate?

In the prisoner’s dilemma, two agents are given a choice ofcooperating or defecting. They make their choices of whichmove to make simultaneously. The highest payoff T comesfrom successful defection into cooperation by an opponent.The other half of a defect-cooperate pair receives the lowestpossible payoff S. The other payoffs are D for mutual defectionand C for mutual cooperation. In order to be prisoner’sdilemma, the payoffs must satisfy the following inequalities:

S ≤ D ≤ C ≤ T (1)

andS + T ≤ 2C. (2)

The game that drivers play fails to be precisely prisoner’sdilemma on grounds that it is not a simultaneous game. Thedriver that manages to crash his opponent removes the oppo-nent from the game, making reciprocal defection impossible.Attempted reciprocal defection, however, is possible. A pairof drivers in close proximity on the grid could be thoughtof as playing a two-player game very similar to prisoner’s

1-4244-0464-9/06/$20.00 2006 IEEE. 23 CIG'06 (May 22-24 2006)

0

100

200

300

400

500

600

0 50 100 150 200

Fitn

ess

generations

95% Confidence, population mean distance driven

1 car 1 obstacle

1 car 2 obstacles

5 cars 1 obstacle

All other runs

0

20

40

60

80

100

120

140

160

180

200

0 50 100 150 200

Fitn

ess

generations

95% Confidence, population mean distance driven

Five cars, no obstacles

Two cars, one and two obstacles

Fig. 3. This figure shows confidence intervals on the distance driven by the drivers for all six experiments (upper plot) and the three experiments in themiddle of the distribution (lower plot) for the first 200 generations. After 200 generations there is no substantial change in the average number of crashes.

dilemma with moves attempt to crash the other player (defect)and do not attempt to crash the other player (cooperate). Ifboth players cooperate, then both remain on the track driving.While this could lead to a maximal payoff, its expectation islower because of the congestion caused by the presence of theother driver. A successful crash results in a lower payoff forthe driver that crashes, although how much lower depends onhow far into the evaluation period the driver is. A successfulcrash also clears the way for the driver that did not crash,creating a less congested roadway. If both players decide to

try to crash one another, then the expected payoff of bothplayers is changed, probably down.

The lack of mutual defection and the lack of simultaneitymake the grid-robot driver task with multiple drivers some-thing that is clearly not a strict prisoner’s dilemma. The gameof deciding whether or not to attempt to crash one’s opponenthas many of the same elements as prisoner’s dilemma, andin fact of the one-shot prisoner’s dilemma. This makes themultiple driver runs with high fitness somewhat puzzling.Evolution of the one-shot prisoner’s dilemma leads, almost

1-4244-0464-9/06/$20.00 2006 IEEE. 24 CIG'06 (May 22-24 2006)

0

100

200

300

400

500

600

0 50 100 150 200 250

Fitn

ess

or C

rash

es

Generations

Mean and best fitness and number of crashes, run0

One car, One obstacle AverageBest

Crashes

0

100

200

300

400

500

600

0 50 100 150 200 250

Fitn

ess

or C

rash

es

Generations

Mean and best fitness and number of crashes, run34

One car, two obstacles AverageBest

Crashes

0

100

200

300

400

500

600

0 50 100 150 200 250

Fitn

ess

or C

rash

es

Generations

Mean and best fitness and number of crashes, run38

Two cars, one obstacle AverageBest

Crashes

0

100

200

300

400

500

600

0 50 100 150 200 250

Fitn

ess

or C

rash

es

Generations

Mean and best fitness and number of crashes, run24

Two cars, two obstacles AverageBest

Crashes

0

100

200

300

400

500

600

0 50 100 150 200 250

Fitn

ess

or C

rash

es

Generations

Mean and best fitness and number of crashes, run29

Five cars, no obstacle AverageBest

Crashes

0

100

200

300

400

500

600

0 50 100 150 200 250

Fitn

ess

or C

rash

es

Generations

Mean and best fitness and number of crashes, run74

Five cars, one obstacle AverageBest

Crashes

Fig. 4. Examples of runs from all six experiments showing mean competent and best fitness as well as the number of crashes. Crashes and fitness are bothcounted as integers and, happily, are of similar enough scale to be displayed on the same axis.

1-4244-0464-9/06/$20.00 2006 IEEE. 25 CIG'06 (May 22-24 2006)

inexorably, to a population of mutually defecting agents. Itis conjectured that non-simultaneity is the key differencethat permits cooperation among drivers. An attempt to crashanother driver requires some preparation. With sight distancemore than maximum speed, a driver can see another driverahead of it and avoid it. If a driver just misses another drivera couple times via lane-changing, then it may deduce thatan attempted crash is in progress. This will manifest as ashort distance to the next car in its own lane several times.In this case the driver could choose to slow down, cedingsome fitness to substantially lower the probability of a crash.Behavior consisting in slowing down to avoid a wild driver isconsistent with the very low speeds observed in the runs withfive drivers.

B. A need for visualization.

The speculation in the preceding section points firmly tothe need for a visualization tool to aid in analysis. Such atool, with the ability to load evolved drivers and design boardson which to test them, would permit resolution of some ofthe questions about the behavior of the evolved drivers. Inaddition such a tool would be valuable for fish-out-of-wateranalysis of the drivers. Examining a driver evolved in a five-driver world by itself on a grid would permit a researcherto check and see if the low speed of drivers in the five-driverworld is genetically hard-coded or the result of congestion. Thevisualization tool might also be an entree into making a gamewhere evolved and designed vehicles compete against oneanother, a further application of this piece of computationalintelligence to games.

It seems likely that drivers within a population evolve aparticular “culture,” akin to the rules of the road for drivers inthe United States of Canada. This could be tested in a ham-handed way by juxtaposing drivers from distinct populationsand examining the crash and fitness statistics in the resultinggroups of drivers. An understanding or comparison of the self-organized cultures would require a good visualization tool.

C. Angel drivers: a next step.

The motivating situation for this research is observationof human drivers on sections of a two-lane interstate that isunder repair. Thus far, negative behavior has been emphasized,largely because it was what arose under the selfish imperativeof a fitness function which rewards driving farther than theother drivers on the road with you. A next step is to modelhelpful drivers. What fitness function might permit evolutionto search for those atypical drivers that help traffic movesmoothly and quickly past an obstruction?

The following is proposed. The current study yields adiverse set of 600 best-of-run drivers that exhibit various“typical driver” behaviors. Placing a substantial number ofthese driver on the grid, without permitting them to evolve,yields a training ground for angel drivers. The fitness of anangel driver is the total distance moved by all drivers in thesimulation. This rewards not only the promotion of traffic flowbut the avoidance of crashes by any driver. There is a chance,

if the total number of drivers is small, that an angel driverwould remove itself from the simulation in order to reducecongestion.

Interesting questions for an angel driver simulation include:

• What fraction of angels is required to see improvement?• What behavior would arise if no “typical” drivers were

present?• Are angels among the slowest drivers within their fitness

evaluation cohorts?• Would an angel ever identify and terminate a very trou-

blesome driver?• Are there general-purpose angel drivers or are they spe-

cific to one culture of “typical” drivers?

Many other experiments are possible within the frameworkpresented here: co-evolving difficult patterns of obstacles oroptimizing to produce psychotic drivers whose fitness is thenumber of crashes they cause. It is also possible to generalizethe problem to include more lanes, entrance and exit ramps,and other sources of automotive chaos.

VII. ACKNOWLEDGMENTS

The author would like to thank the University of GuelphDepartment of Mathematics and Statistics for its support ofthis research. The author acknowledges the contribution ofvarious rude and helpful drivers on the interstate system southof Lake Michigan who inspired this work.

REFERENCES

[1] Dan Ashlock and Mark Joenks. ISAc lists, a different representation forprogram induction. In Genetic Programming 98, proceedings of the thirdannual genetic programming conference., pages 3–10, San Francisco,1998. Morgan Kaufmann.

[2] Daniel Ashlock. Evolutionary Computation for Opimization and Mod-eling. Springer, New York, 2006.

[3] Daniel Ashlock and Jennifer Freeman. A pure finite state baselinefor tartarus. In Proceedings of the 2000 Congress on EvolutionaryComputation, pages 1223–1230. IEEE Press, 2000.

[4] Daniel Ashlock and James I. Lathrop. Program induction: Building awall. In Proceedings of the 2004 Congress on Evolutionary Computa-tion, volume 2, pages 1844–1850. IEEE Press, 2004.

[5] Daniel Ashlock and Adam Sherk. Non-local adaptation of artificialpredators and prey. In Proceedings of the 2005 Congress on EvolutionaryComputation, volume 1, pages 98–105. IEEE Press, 2005.

[6] Daniel Ashlock, Stephen Willson, and Nicole Leahy. Coevolutionand tartus. In Proceedings of the 2004 Congress on EvolutionaryComputation, volume 2, pages 1618–1624. IEEE Press, 2004.

[7] Wolfgang Banzhaf, Peter Nordin, Robert E. Keller, and Frank D.Francone. Genetic Programming : An Introduction : On the AutomaticEvolution of Computer Programs and Its Applications. Morgan Kauf-mann, San Francisco, 1998.

[8] John R. Koza. Genetic Programming. The MIT Press, Cambridge, MA,1992.

[9] William B. Langdon and Riccardo Poli. Foundations of GeneticProgramming. Springer, New York.

[10] T. P. Runarsson and S. M. Lucas. Evolving controllers for simulatedcar racing. In Proceedings of the 2005 Congress on EvolutionaryComputation, volume 1, pages 1906–1913, Piscataway NJ, 2005. IEEEPress.

[11] Astro Teller. The evolution of mental models. In Kenneth Kinnear,editor, Advances in Genetic Programming, chapter 9. The MIT Press,1994.

1-4244-0464-9/06/$20.00 2006 IEEE. 26 CIG'06 (May 22-24 2006)

Optimizations of data structures, heuristics and algorithms for

path-finding on maps

Tristan CazenaveLabo IA

Dept. InformatiqueUniversite Paris 8, Saint-Denis, France

Abstract— This paper presents some optimizations of A* andIDA* for pathfinding on maps. The best optimal pathfinder wepresent can be up to seven times faster than the commonlyused pathfinders as shown by experimental results. We alsopresent algorithms based on IDA* that can be even fasterat the cost of optimality. The optimizations concern the datastructures used for the open nodes, the admissible heuristic andthe re-expansion of points. We uncover a problem related tothe non re-expansion of dead-ends for sub-optimal IDA*, andwe provide a way to repair it.

Keywords: path-finding, game maps, mazes, A-star, IDA*

I. INTRODUCTION

Path-finding is an important part of many applications,including commercial games and robot navigation. In gamesit is important to use an optimized path-finding algorithmbecause the CPU resources are also needed by other algo-rithms, and because many games are real-time. The particularproblem addressed in this paper is grid-based path-finding.It is often used in real-time strategy games for example, inorder to find the shortest path for an agent to its ������� locationon the map.

A* [1] is the standard algorithm for finding shortest paths.The usual heuristic associated to A* is the Manhattan heuris-tic. We present data structures and heuristics that enable A*to be up to seven times faster than the usual implementation.

The contributions of this paper are :� It is better to use an array of stacks than a priority queue

for maintaining the open nodes of an A* search.� The ���� �������� heuristic is introduced, and is shown

to perform better than the Manhattan heuristic and thanthe ALT heuristic [2].

� It is useful for IDA* to maintain a two-step lazy cacheof the length of the shortest paths found so far.

� Adding a constant to the next threshold of IDA* enableslarge speed-ups at the cost of optimality. However thelengths of the paths are within 2% of the optimal. Sub-optimal IDA* can be competitive with A*.

� Recording the minimum f for each searched locationis useful to find dead-ends, but it can cut valid pathswhen used with a sufficiently large added constant to thethreshold of IDA*. Our program can detect and repairthe problem.

Section two describes related work. Section three presentsoptimizations related to the choice of the best open node.

Section four deals with the re-expansion of points. Sectionfive presents the different admissible heuristics we havetested. Section six details experimental results. Section sevenconcludes and outlines future work.

II. RELATED WORK