Strategies for Improving Neural Net Generalisation

Transcript of Strategies for Improving Neural Net Generalisation

Neural Comput & Applic (1995)3:27-37 (~) 1995 Springer-Verlag London Limited Neural

Computing & Applications

Strategies for Improving Neural Net Generalisation

D e r e k P a r t r i d g e and Nia l l Gr i f f i th

Department of Computer Science, University of Exeter, Exeter, UK

We address the problem of training multilayer perceptrons to instantiate a target function. In parti- cular, we explore the accuracy of the trained network on a test set of previously unseen patterns - the generalisation ability of the trained network. We systematically evaluate alternative strategies designed to improve the generalisation performance. The basic idea is to generate a diverse set of networks, each of which is designed to be an implementation of the target function. We then have a set of trained, alternative versions - a version set. The goal is to achieve 'useful diversity' within this set, and thus generate potential for improved generalisation per- formance of the set as a whole when compared to the performance of any individual version. We define this notion of 'useful diversity', we define a metric for it, we explore a number of ways of generating it, and we present the results of an empirical study of a number of strategies for exploiting it to achieve maximum generalisation performance. The strategies encompass statistical measures as well as a 'selector- net' approach which proves to be particularly promis- ing. The selector net is a form of 'metanet" that operates in conjunction with a version set.

Keywords: Multilayer perceptrons; Backpropag- ation; Generalisation; Generalisation diversity; Majority vote; Selector-net; Metanet; Version set

Received for publication 16 May 1994 Correspondence and offprint requests to: D. Partridge, Depart- ment of Computer Science, University of Exeter, Exeter EX4 4PT, UK. (email: [email protected])

1. Introduction

Feedforward networks, such as multilayer per- ceptrons, can be trained using the backpropagation algorithm to learn certain functions. Classically, such nets are trained to convergence (according to some criterion of acceptable error tolerance) with a set of training patterns, and then the trained net's generalisation properties are explored using a further set of patterns, the generalisation set. For all but the simplest problems, the trained nets seldom exhibit 100% generalisation, i.e. they seldom classify correctly all of the patterns in the generalisation set.

In this paper, we introduce and evaluate several strategies designed to improve the degree of general- isation obtained. The strategies are all based upon the use of a set of alternative trained nets (a version set) and techniques that exploit the degree of generalisation embodied by the population of nets as a whole. This is expected to be higher than that exhibited by any individual net in isolation, provided that:

1. The population of alternative version nets contain some 'useful diversity' (to be defined below).

2. The processing technique employed exploits the sort of diversity that the nets exhibit.

We test, compare and evaluate several statistical measures as well as a 'selector-net' strategy for optimising population performance on a generalis- ation test. From an analysis of the results obtained we generate both guidelines for the production of 'optimal' version sets, and for the exploitation of the diversity obtained in order to maximise generalisation performance.

28 D. Partridge and N. Griffith

2. Generalisation Differences in Version Sets

The initial stimulus for the idea of constructing a set of alternative versions for a given target problem is the much-repeated observation that network training is sensitive to a number of the learning parameters (e.g. specific randomisation of link weights). Thus different initial setups will lead to differently generalising nets. So by varying aspects of the training process (such as weight initialisation or architecture of net) a variety of 'different' trained nets is to be expected - a version set.

If, in such a set, none of the versions generalises correctly to 100% and they are all different, this difference must reside in the property that they each make different errors when presented with the test (or generalisation) set of patterns. This observation suggests that if we could engineer the difference between versions so that one version generalises correctly when another does not, the version set as a whole can potentially generalise better than any individual version in isolation. This observation, and the following statistical measures, are derived from Littlewood and Miller's [1] theoreti- cal work on multiversion software engineering which makes the point that 'methodological diversity' may reduce common errors and so increase overall system reliability.

If ~ the target function is the set of relations {f}, and the functions actually learned by the trained versions Va, Va, ..., Vw are the sets of relations {f~}, {fa}, ... {fN}, respectively, then this version set exhibits useful diversity if

N

= U 01 n {f,} i = 1

and ~

factual > #fmax,

where

fmax = {f} fq {fi}

when {f} 13 ~ } is the largest set resulting from intersecting {f} with each of the {f,-}s, and we aspire to obtain the situation where,

= Oq) u G}... {fu}. Unfortunately, as with so many aspects of neural computing, there is no known way to maximise useful diversity in a version set. There is, however,

1 The cardinality of set S is '~S.

a simple statistical measure of the diversity exhibited by a version set.

Non-diversity occurs when each version makes precisely the same mistakes. Notice that if two versions do make precisely the same errors when tested for generalisation, there is nothing to be gained, with respect to generalisation improvement, from consideration of the two nets together rather than either one separately.

The notion of useful diversity correlates well with the statistical notion of independence with respect to version failure. Consider two types of probability estimate that may be computed from the generalis- ation performance of a version set:

1. The probability that a randomly-selected version fails on a randomly-selected test pattern, p(1 fail).

2. The probability that two randomly-selected ver- sions both fail on a randomly-selected test pattern, p(2 both fail).

If our version set is, in fact, non-diverse (i.e. exactly the same errors are made by all N versions), then p(2 both fail) equals p(1 fail). The statistical definition of independence, however, says that two failure events are independent if, and only if

p(2 both fail) = p(1 fail) * p(1 fail).

Intuitively, we can interpret this independence condition as a lack of common failures in the version set. For it specifies that if one version fails on a test pattern any second version is no more (and no less) likely to also fail - the failure events are independent. The presence of common errors running through the version set would mean that if one version fails on a given test pattern, then the likelihood of failure of a second version on the same test pattern is increased. The presence of common errors in a version set (NB. this is the same as lack of useful diversity) will result in actual probability of 2-version failure, Pact(2 both fail), that is greater than the independence assumption would predict, p(1 fail) a, call it pina(2 both fail). And in the limiting case of a totally non-diverse set

Pact(2 bothfail) = p(1 fail).

So, by computing and comparing the three prob- ability estimates p(1 fail), pact(2 both fail) and pina(2 both fail) we obtain a measure of the useful diversity within a given version set. How do we compute these quantities?

N versions are tested on a large generalisation set of test patterns, and we record which versions fail on which test patterns. This allows us to compute the probability of exactly n versions failing on a

Strategies for Improving Neural Net Generalisation 29

randomly selected test, Pn. For example, if we find that 31 test patterns out of 1000 failed on precisely two versions (any two versions), then

31 - 0.031. P 2 - 1000

This calculation can be repeated for n = 1, 2 ... N giving the set of probabilities p , , P2, ... PN. Now

p(1 fail) N

= ~ prob (exactly n versions fail on this input n = l

and chosen version is one o f the failures) N

= E prob (chosen version failslexactly n versions n = l

fail) * prob (exactly n versions fail) N n

= E ~ * P n " n = l

The other probability estimate that needs to be computed from the data is Pact (2 both fail):

Pact(2 both fail) N

= ~'~ prob (exactly n versions fail on this input n = 2

and the two chosen ones are both failures) N

=E n ~ 2

prob (chosen versions both faillexactly n

versions fail) * prob (exactly n versions fail)

~-'N n , ( n - - 1 ) ,

= Z~ ~r~v ( N - l ) p'" n = 2

Finally, pind(2 both fail) is simply p(1 fail)L The measure of useful diversity in a version set

is then the difference between the actual 2-version failure probability and single-version failure, with pind(2 both fail) as a target to aim for well on the way to maximum diversity (when actual two-version failure is zero). A suitable metric is Generalisation Diversity (GD) [2], which is the ratio of actual diversity to maximum possible. The GD of a version set proves to be an indicator of the potential for generalisation improvement that resides in the set as a whole rather than in any individual network

GD = p ( l fail) - Pact(2 both fail) p( l fail)

This measure has a minimum value of 0 (when all versions in a set are identical with respect to a generalisation test), and a maximum value of 1 (when all versions are maximally different, i.e. every pattern failure is unique to one of the versions).

3. Useful Statistical Measures

Having obtained useful diversity within a version set, the next problem is to find an effective way of realising its potential in order to maximise the generalisation performance of the set as a whole.

As a basis against which to assess the worth of a proposed selection strategy, we use the statistic p(1 fail), which is, in effect, the average generalisation performance of a version set - actually (1 - p(1 fail)) is the average generalisation, pav(correct). This provides a baseline measure of the generalisation to be expected from a single net constructed and trained in the same way as the version set.

The necessary statistical measures are simplified if we restrict our study to boolean functions, for then the net output is either wrong (equivalent to 'fail') or right. Since there is only one wrong answer and one right answer, wrong answers must all agree, and so must all right answers.

3.1. Choose the Best One

However, once the trouble has been taken to generate and test a version set, then the obvious strategy is to select and use the one version that exhibits the best generalisation performance; call this Pmax(Correct) which is simply the best generalisation performance of an individual version within the version set, and generalisation performance is

(number o f generalisation tests correct) (total number o f tests)

It will, of course, be the case that Pmax(correct) > pay(correct), but in a version set containing useful diversity other version nets generalize correctly on occasions when pm~x(correct) does not. The main point of diverse version sets, then, is to use the whole set to exploit the fact that the set as a whole 'covers' the target function better than any single version does. The questions then are: how might this be done? And what improvements can be realised?

3.2. Use Simple Majority Vote

An obvious way to attempt to exploit diversity is to use a majority voting procedure. Let k be (N - majority number), then

prob(majority correct) = prob(at most k fail)

= prob(either exactly 0 fail o__r

exactly 1 fails o_rr.., or

30 D. Partridge and N. Griffith

exactly k fail) k

= E P i " i=0

This is not quite the statistic that we want, because when N is even we will not always obtain a majority outcome from the version set. We need to take into account the probability that a majority will be obtained. And in the current case of boolean functions there are just two components of a majority agreement: the majority are right and the majority are wrong.

We are now in a position to frame the required statistic:

prob( chosen answer is correct given a majority decision)

prob(majority correct) prob(majority outcome)

_ prob(majority correct) 1 - prob(no majority)

prob(majority correct) 1 - prob(tied decision)

prob(majority correct) 1 - prob(exactly N/2 versions fail)"

This expression simplifies in different ways depen- dent upon whether N is odd or even. When N is odd then the denominator is unity, because with only two outcomes there must always be a majority in agreement - prob(tied decision)=O. But when N is even it will be less than or equal to unity, because a majority outcome may not always occur - a tie with half right and half wrong is a possibility. This gives us two distinct cases:

prob(majority correct given a majority decision)

f prob(majority correct) ~ - - 1 - - P N / 2 - - whenNiseven, I - - ~prob(majority correct) when N is odd.

For this strategy to work well, it would need to be the case that common errors were restricted to a minority of versions. It is thus expected that a majority-vote strategy will be most productive when diversity is high but the range of individual version generalisation performance is small, i.e. the differ- ence between pr,~(correct) and pav(correct) is small.

Apart from simple majority vote, there are many other majority-vote strategies which can be summarised as a majority of any subset of versions, e.g. a majority of three versions out of 10. We

have not explored any of these varieties of majority voting strategies.

Beyond capitalising on majority-vote decisions, it may be possible to also exploit consistent and correct, but minority-vote, behaviours in a version set. One way to do this might be to train a 'selector- net ' to learn such minority-vote decisions.

4. Selector-Net Strategy

We will now describe one possible strategy, using a 'selector-net' , to utilise some of the insights described above to improve the generalisation performance exhibited by a version set. In this context, the selector-net is defined as a network which uses the outputs from a version set, concat- enated together, as its input. The input to the selector-net may - optionally - include the original input patterns to the function as well as the outputs of the version set on these same input patterns. In either case, the selector-net input is mapped to the output associated with the original input. Thus, the basic form of selector-net is conceived as a switch - or vote collection system - in which the solutions arrived at over a number of network versions are discriminated. The architecture is outlined in Fig. 1.

Fig. 1. A selector-net architecture showing outputs from original function versions plus original input patterns mapping to target.

Strategies for Improving Neural Net Generalisation 31

4.1. Selector-Net Input

The input to a selector-net can be constructed in various ways. In this study we explored the following four input forms:

1. Using Version Set Ouputs Alone la. A vector comprising the version-set outputs (NO); one vector for each input/target pair (ITP), is mapped to the target of the ITP. Each version output for an ITP is thresholded to be 0 or 1 - in this case the threshold was 0.5.

lb . A vector of version-set outputs, one vector for each ITP, is mapped to the target of the ITP. Each individual version output, used unthresholded, is a rational number n, where 0 -< n - 1.

2. Using Version Set Outputs + Original Inputs 2a. The original input vectors (OI)are concatenated with the vector of thresholded version-set outputs (NO). The OI vector contains rational numbers, 0 -< n -< 1, while the version-set output (NO) is a binary vector.

2b. OI is concatenated with the unthresholded version-set output. In this situation the input to the selector-net will be a vector in which all values will b e 0 _ n _ < l .

Other ways of constructing input for the selector- net include normalising version-set output and original input pattern before and/or after they have been concatenated. However, we have restricted our initial study to only these four 'forms'.

5. An Exploratory Study

A non-trivial, well-defined function (known as LIC 1) was chosen as the problem upon which to test our generalisation improvement strategies. The problem is to determine whether the euclidean distance between two points (each specified by x-y coordinates) is greater than some given LENGTH. There are thus five inputs to the nets, xl , YI, x2, Y2 and LENGTH, each given as a decimal number between 0.0 and 1.0 to three decimal places. The output is simply true or false. There is nothing special about this function except that it forms part of a larger software engineering problem to which we are applying neural computing techniques (see elsewhere [3] for details).

An initial investigation concentrated on training selector-nets on input patterns constructed in the ways outlined above (forms (la)-(2b)) which, of course, includes the training and testing of a number

of version sets. The six version sets used were derived from three basic version sets, called A, W and T. These version sets consisted of 10 version networks each:

The A set varied the number of output units between 1 and 10 while holding constant: the initial conditions (weight seed 9), the number of hidden units (10), and the training set (1000 patterns, randomly selected using seed 1 in the ITP generator).

The W set varied the initial conditions by varying the weight seed while holding network architecture constant (5-10-3, input-hidden-out- put, respectively) 2 and using a single training set (the same one as for set A).

Individual versions in the T set were each trained with a different 1000 patterns (using seeds 1 through 10) while holding the network architec- ture and initial conditions constant (5-10-3 and weight seed 9, respectively).

A further two sets, each containing 1000 patterns, were randomly generated (using seeds 11 and 13, respectively): the selector-net-training set, and a 'selection' set (used for further version-set selection, see below). These were presented to the nets comprising the A, W and T sets, producing two sets of results for all 30 networks.

Three further populations of 10 versions each were then extracted from the A, W and T popu- lations. These were

the R set - 10 versions, randomly selected from sets A, W and T

the PL set - the 10 versions with the lowest coincident failures extracted from the A, W and T sets when tested with the 1000-pattern selector- net-training set

the PT set - the 10 versions with the lowest coincident failures extracted from the A, W and T sets when tested with the 1000-pattern 'selection' set.

5.1. Selector-Net Training

In all there were 24 input files - six sets of versions each examined with the four input forms. Each training file was used to train a family of nine selector-nets - created across three weight seeds

2 The use of three output units in these simulations, which require only one, was part of another aspect of the studies investigating speed of learning. It does not affect the results presented.

32 D. Partridge and N. Griffith

(1 35 82) by three hidden unit counts (3 6 9). All the selector-nets were trained for 5000 cycles through the 1000-pattern selector-net-training set (5 000000 pattern presentations). In general, it was found that the majority of patterns were learned in the first 500 cycles. The averages and maximums of the percentages of patterns learned over the parametric variations for each of the component version sets and input forms is shown in Table 2 (upper half).

In general, the selector-nets are unable to learn all the patterns although they are often very close and a few do learn the training set 100%.

5.2. Version Set Generalisation

The generalisation ability of the nets (both individual version nets and selector-nets) was explored using a test set of 161 051 patterns. This set was generated to exhibit complete, uniform coverage of the prob- lem space - 11 evenly spaced points (i.e. 0 to 1 in steps of 0.1) were identified for each of the five inputs, and all combinations of these points were used to provide the test input patterns (161,051 = 115).

The generalisation diversity and performance indicators for the test of the six version sets are given in Table 1 (all tabulated generalisation figures are given as percentages). A first point to note is that there is a trend, of improvement of generalisation performance of sets as a whole when set against the performances of individual versions, that correlates with increasing GD value. In particular, it can be seen that the majority-vote statistic realises over 2% improvement in generalisation (in comparison to the worst individual net) in the highest diversity case, set T. Set against this, however, is the fact that the majority-vote statistic realises a higher generalisation performance than the best individual net in only one case, set PT. More will be said about this point in the final discussion section.

Of particular interest for direct comparison pur- poses are the three highest GD sets (set T with GD of 0.554, set PT with GD of 0.550 and set R with GD of 0.549). Notice that it is the PT set which exhibits a majority-vote result that exceeds the performance of the best individual version (and it is the only set to do this). The reason for this reversal of casual expectation (i.e. it is to be expected of the highest GD set rather than a lower one) is to be found in the variety of the individual versions that comprise each set. The versions in the T set exhibit the widest range of generalisation performance of any of our six sets - the range is from 92.41% to 94.67%, i.e. 2.26%. In the PT set this difference is only 1.58%. It is this wide range of performance that undermines the effectiveness of the majority-vote approach despite the relatively high GD value. However, the R set, which has a GD value very close to that of the PT set, has a 'max-rain' value (i.e. maximum minus minimum generalisation in Table 1) only slightly larger, and yet fails to exhibit a majority-vote performance that is higher than the best individual performance. But notice that the 'max-av' (i.e. maximum minus average generalisation) value for the R set is 50% higher than for the PT set (0.73% as against 0.49%), which also supports the earlier comment about the effectiveness of the majority-vote statistic, i.e. it will be effective when diversity is high and the range of individual nets is small. The 'max-av' column in Table 1 appears to be a useful measure of this range characteristic.

5.3. Selector-Net Generalisation

We now consider the generalisation achievements of the various selector-net forms in conjunction with each of the six version sets.

The results of this comparison are interesting, while not coinciding with intuition. The Table 2

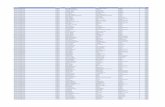

Table 1. Generalisation performance of version sets and improvements realised (test 161 051 structured vectors).

Version Sets Improvement

Average Maximum Minimum Majority GD Max-min Max-av Maj-av Maj-max Maj-min

A 93.91 94.55 93.33 94.24 0.443 1.22 0.64 0.33 -0.31 0.91 W 94.11 94.76 93.46 94.15 0.384 1.30 0.65 0.04 -0.61 0.69 T 93.77 94.67 92.41 94.54 0.554 2.26 0.90 0.77 -0.13 2.13 PL 94.21 94.76 93.18 94.52 0.500 1.58 0.55 0.31 -0.24 1.34 PT 94.27 94.76 93.18 94.90 0.550 1.58 0.49 0.63 0.14 1.72 R 93.94 94.67 93.04 94.58 0.549 1.63 0.73 0.64 - 0.09 1.54

Strategies for Improving Neural Net Generalisation 33

Table 2. Average and maximum generalisation performance for the six version sets for LIC 1, compared to average and maximum performances for the four different forms of selector-net.

Training - 1000 random vectors

Version sets la lb 2a 2b

Pop av. max. av. max. av. max. av. max. av. max.

A 94.87 95.90 97.90 98.50 99.51 99.90 99.63 99.90 99.60 99.80 W 95.28 96.20 98.91 99.40 99.47 99.90 99.67 99.80 99.80 99.90 T 94.38 95.90 99.72 99.80 99.76 99.90 99.84 100.0 99.81 100.0 PL 95.62 96.20 99.09 99.20 99.64 100.0 99.69 99.70 99.72 99.80 PT 95.11 96.20 99.38 99.50 99.81 99.90 99.86 100.00 99.99 100.0 R 94.82 95.90 99.53 99.70 99.90 100.0 99.90 100.0 98.30 99.90

Test - 161051 structured vectors

Version sets la lb 2a 2b

Pop av. max. av. max. av. max. av. max. avo max

A 93.91 94.55 94.97 95.60 95.98 96.24 95.79 96.00 96.10 96.20 W 94.11 94.76 95.98 96.45 96.08 96.57 96.54 96.73 96.69 96.81 T 93.66 94.67 97.11 97.38 97.24 97.57 96.93 97.29 96.94 97.37 PL 94.21 94.76 96.82 97.11 97.09 97.26 97.13 97.27 97.30 97.34 PT 94.27 94.76 96.64 96.78 97.45 97.57 97.09 97.18 97.20 97.24 R 93.94 94.67 96.82 96.98 97.18 97.39 96.88 97.07 97.48 97.63

shows the average and maximum generalisation performance for both the solution nets (the version sets) and the selector-nets (in each of the four input forms). In addition, this table shows these performance figures for both the selector-net training set (which is, in effect, a test set for the individual solution nets), and the selector-net test set. The test set generalises 2 % - 3 % less accurately than the training set (see lower half of Table 2). Notice also that the version sets generalise about 1% lower on the large, structured test set when compared to the 1000-pattern, random set (compare 'version set' columns for lower and upper halves of the table as the selector-net-training set is a test set for the individual versions). This indicates that the struc- tured test set is more 'demanding' than a randomly- selected pattern set, as might be expected from a set that contains all the extreme patterns (i.e. all combinations of the boundary conditions of the problem space).

However , the first question that needs answering is whether or not the selector-net solutions deliver better generalisation performance than the popu- lations of networks they are derived from.

The result of the majority-vote statistic applied

to the generalisation results (of both the version sets and the selector-nets based on them) is given in Table 3. As can be seen the selector-nets improve generalisation performance in all cases - the majority vote from a set of selector-nets is bet ter than a majority vote taken from the set of individual solution nets. A more demanding test is a compari- son of average selector-net performance against the maximum and majority-vote performances of the sets of solution nets. This data is given in Table 4. As can be seen, again in all cases the average selector-net generalisation performance is better than is obtained from their component version sets.

The question is what underlies these figures. And the main question to be answered is, perhaps, why is the selector-net strategy so effective?

There are a number of obvious difficulties that the selector-nets face:

1. Inconsistent data, both within the training set, and between training and testing sets; the nature and degree of inconsistency varies between the four input forms:

Form la strictly, no inconsistent data, but only marginal differences in unthresholded output values

34 D. Partridge and N. Griffith

Table 3. Majority vote statistics for the six version sets for LIC 1, compared to the majority vote statistic for the four different forms of selector-net (best figure for each form is underlined).

Training 1000 random vectors

Version set la lb 2a 2b

A 95.40 98.30 99.50 99.60 99.60 W 95.40 98.80 99.60 99.80 99.90 T 94.60 99.70 99.80 99.80 99.90 PL 96.50 99.20 99.60 99~70 99.70 PT 96.30 99.50 99.80 99.80 100.0 R 95.40 99.70 99.90 100.0 99.80

Test - 161051 structured vectors

Version set la lb 2a 2b

A 94.24 95.28 96.11 95.67 96.11 W 94.15 96.02 96.33 96.74 96.89 T 94.54 97.13 97.30 97.15 97.06 PL 94.52 96.96 97.24 97.24 97.32 PT 94.90 96.66 97.47 97.14 97.21 R 94.58 96.92 97.24 97.02 97.56

(but proximity of actual output value to target is not necessarily indicative of reliability of output)

Form lb totally inconsistent data; this is because any majority vote for one class can be identical to a minority vote for the other class e.g. for 10 nets, the thresholded output, and thus selector-net input, might be two patterns thus:

1 0 1 0 1 1 1 0 1 1 1 ~ 1 (Majority Vote for True)

1 0 1 0 1 1 1 0 1 1 l ~ 0 ( M i n o r i t y V o t e f o r False)

If two such pattern pairings are in the training data then the selector-net will not be able to learn this one-to-two mapping.

Forms 2a & 2b inconsistent data potentially disambi- guated by the original input; however, the idea that the original input will help appears quite tenuous - these are the very patterns that the individual version nets found difficult to learn, and thus the disambiguating characteristics may be deeply 'buried' in the raw inputs which were five real numbers. 2. Position-insensitive minority patterns, e.g. any 3 out of 10 ls map to 1. 3. Position-sensitive minority patterns, e.g. a specific pattern of 3 ls, such as 1000010001, maps to 1,

but any other 3 out of 10 ls maps to 0 (i.e. it is a majority vote for 0).

However, if some subset of the 10 version nets can reliably compute a partial subclass of 'difficult' inputs (say inputs where one coordinate point is at the origin, i.e. 0.0, 0.0, x2 Yz, LENGTH or xl, Yl, 0.0, 0.0, LENGTH), the selector-net might be expected to learn this.

6. Related Work

There are many studies that address some aspect of generalisation in neural networks, but we know of none that address it from the same viewpoint as the current paper, i.e. from the viewpoint of generalisation improvement through version-set diversity. In fact, the closest work in this regard is from conventional software engineering, where it has long been believed that more reliability might be realised in a software system composed of a number of independently developed versions. This was, in fact, a major stimulus to the current study, and has been summarised elsewhere [4]. However, the most recent statistical model to be developed as a result of several substantial empirical studies in multiversion software engineering [1] did, as already noted, provide the basis for some of our current approaches.

Within the neural network domain, Denker et al. [5] provided some quantitative measures for the potential scope for generalisation that a given network architecture exhibits. And from almost the opposite direction (to our empirically driven studies) Holden [6, 7] has made progress towards a theoreti- cal quantification of the generalisation to be expected from a consideration of the network architecture together with certain characteristics of the training data (notably size of set and distribution within the target function). It remains to be seen whether the emerging theoretical predictions can be complemented with empirical results such as ours. One problem appears to be that multilayer perceptrons are not the sort of neural nets that succumb easily to this particular theoretical treat- ment - radial basis functions are more amenable and thus preferred.

Current studies of ours [8] indicate that a couple of percent increase in the generalisation performance of individual nets (i.e. the nets of the version set) can be achieved by systematic selection of training patterns rather than a purely random training set, as used in the study reported.

Work by Sharkey and Sharkey [9] on adaptive

Strategies for Improving Neural Net Generalisation

Table 4. Comparison of average performance of selector-nets with average, maximum and majority-vote performance of the component version sets (best forms are underlined).

35

Test 161051 structured vectors

Version sets Selector-nets

Average Maximum Majority la lb 2a 2b Average Average Average Average

A 93.91 94.55 94.24 94.97 95.98 95.79 96.10 W 94.11 94.76 94.15 95.98 96.08 96.54 96.69 T 93.77 94.67 94.54 97.11 97.24 96.93 96.94 PL 94.21 94.76 94.52 96.82 97.09 97.13 97.30 PT 94.27 94.76 94.90 96.64 97.45 97.09 97.20 R 93.94 94.67 94.58 96.82 97.18 96.88 97.48

Selector-Net Improvement

Pop Statistic la lb 2a 2b

A av. max. maJ.

W av. max. maj .

T av. max. maj .

PL av. max. maj.

PT av. max. maj .

R av. max. maj .

1.06 2.07 1.88 2.19 0.42 1.43 1.24 1.55 0.73 1.74 1.55 1.86 1.87 1.97 2.43 2.58 1.22 1.32 1.78 1.93 1.83 1.93 2.39 2.54 3.34 3.47 3.16 3.17 2.44 2.57 2.26 2.27 2.57 2.70 2.39 2.54 2.61 2.88 2.92 3.09 2.06 2.33 2.37 2.54 2.30 2.57 2.61 2.78 2.37 3.18 2.82 2.93 1.88 2.69 2.33 2.44 1.74 2.55 2.19 2.30 2.88 3.24 2.94 3.54 2.15 2.51 2.21 2.81 2.24 2.60 2.30 2.90

generalisation has explored the phenomenon of transfer of generalisation performance , f rom nets trained in one domain to application of the same nets in another. They quantified the amount of ' task structure' a trained net extracted and to what degree it was transferred between tasks.

7. Discussion

As stated earlier, we were somewhat surprised at the consistency of generalisation improvement delivered by all selector-net experiments. To explore this result, we under took a detailed analysis of the training of several of the selector-nets (one based on the T version set, and one based on the PL set).

The training data for the PL-based selector-nets included just four instances (out of 1000 total) of minority-vote patterns; only one of which was involved in a contradiction with an instance of precisely the same pat tern (of version-net outputs, i.e. selector-net input) mapping to the other output. Just two of the minority-vote patterns were instances of the same position-insensitive minority vote (4 ls mapping to 1). Very little position sensitivity was found in the solution net outputs. Only 66 distinct patterns of ls and 0s were found in the 1000 selector-net training patterns - 1024 are possible. There were only 4 instances of contradictions in the complete training set, but they occurred in classes that totalled 502 instances, i.e. over 50% of the training data. The main contributor here was

36 D. Partridge and N. Griffith

that the class of 10 ls mapping to 1 (488 instances) contained a contradictory instance of 10 ls mapping to 0!

Selector-net training involving the T version set revealed 12 instances of minority-vote patterns, including nine instances in one class (4 ls mapping to 0) but only two being exact repeats, i.e. almost no position sensitivity. This population generated only one contradictory pattern - 9 ls mapping to 0 in conjunction with 34 instances of 9 ls mapping to 1. Looking at position sensitivity in this class, the contradictory pattern was a precise repeat of just five of the 34 instances. Again, in general, very little position sensitivity was found. The set contained 86 distinct patterns, two of which (the 10 ls and the 10 0s) accounted for 867 of the 1000 training patterns.

The detailed analysis of the selector-net training data confirms that there were inconsistencies within it, but very few of them. In addition, it highlights the fact that the vast bulk of training was for the large majority votes, very little of the training data was actually training minority-vote behaviour. One final point revealed by this analysis is that it provides support for the crucial assumption underlying the majority vote statistic: indifference with respect to which actual version is correct, i.e. the probability that, say, seven out of 10 are correct means any seven not a particular seven. This is the implication from our failure to find any significant amount of position-sensitive minority votes, although it must be made clear that no firm general conclusions can be based on so few instances of minority-vote situations.

There are, of course, two different types of minority-vote behaviour possible; we have called them 'position-sensitive' and 'positive-insensitive' which can be viewed as a counting task. And while, in a general sense, we expect a selector-net strategy to be able to improve on a simple majority-vote statistic by learning, in addition, the minority-vote behaviours, it is not entirely clear which (or both) of these types of minority-vote behaviour are to be expected or 'encouraged' within the version sets.

Clearly, any position-insensitive minority vote (say, 3 ls mapping to 1) is also likely to coincide with a majority vote (in this case, 7 0s mapping to 0), and hence generate inconsistencies in the selec- tor-net training data. There is much more scope for position-sensitive minority votes that are systemati- cally distinguishable from majority votes (e.g. the pattern 1110000000 might map to 1, while all other possibilities for 7 0s and 3 ls map to 0). An argument for the production of position-sensitive minority votes in a version set can be based upon

the notion that certain individual versions are trained as 'specialists' in some subset of the function, then we might expect a selector-net to learn these specialisms in its component version set. As a result the trained selector-net would be expected to give priority to certain versions when it detects particular subset characteristics in the original input (selector- net forms (2a) and (2b)).

But given the absence of position-sensitive min- ority votes within the selector-net training data, and given the empirical fact that all selector-nets generalise consistently better than a majority-vote statistic on their component version sets, we must conclude that the selector-net strategy is able to learn (and thus capitalize on) the small amount of minority-vote behaviour present in the training sets. And this largely position-insensitive data is learned in addition to the majority-vote data, despite the presence of a few inconsistencies in the training set.

We might also observe that for most of the version sets, the majority-vote reliability was slightly inferior to the performance of the best version in the set. The exception was the PT version set. But set against this seemingly clear indication that the majority-vote strategy is not worth pursuing, we might note several points:

1. The inferiority of the majority-vote strategy is small (maximum 0.61%).

2. The GD in our version sets was low (maximum of 0.554 for sets T, PT, and R), and it is only when GD exceeds 0.5 that the majority-vote statistic really begins to pay off.

3. There is a question of the 'stability' of the best individual net in a version set versus the consistency of a majority-vote statistic over the complete set. Inspection of the results from three test sets revealed inconclusive evidence about the 'stability' of the best version in a set - within the A set the same version was always the best, but otherwise the data pointed in opposite directions, especially for the T, PL, PT and R sets: between two test sets the same net was always the best for all six version sets, but between both other pairs of test sets the same net was never the best.

4. The majority-vote generalisation was always better than the average generalisation of the version sets.

5. Comparison of the results of the T and PT sets supports the supposition that, in addition to GD value, the range of the generalisation performances of the individual versions should be taken into account - a minimum range coupled with maximum

Strategies for Improving Neural Net Generalisation 37

GD characterises the most potentially exploitable situation.

In sum, if only one net is trained then some 'average' generalisation performance can be expected (although it might be a worst case). To ensure that worst cases are avoided, several versions need to be trained and compared. However, once this is done, a version set is then available, and other options for obtaining the best generalisation performance open up.

This initial study clearly favours the use of a selector-net strategy as the most reliable way to improve generalisation performance. And within the selector-net approaches it clearly favours form (2b), in which the selector-net receives maximum information: unthresholded version-set outputs con- catenated with original input patterns as a selector- net input. Adoption of a selector-net strategy clearly requires a further commitment of resources (over and above the extra commitment required to generate a version set rather than a single version), but the pay-off seems to be non-trivial - 2%-3% improvement of generalisation performance on ver- sions that already generalise at about the 95% level. There remains, however, much to be understood in the selector-net strategy, but with the emergence of further understanding we may expect even more effective exploitation of the idea.

The question of how best to construct an effective version set to use is also not simply answered, except that it should not be an A or a W set. The other four version sets are not easily separable with respect to ability to support the selector-net strategy. The R set is dearly the best in conjunction with the best selector-net form (2b). When the R set and the (2b) selector-net input form are combined we see the greatest improvements (3.54%, 2.81% and 2.90% improvements over average, maximum and majority vote, respectively), and the highest average selector-net generalisation performance (97.48%). And this is despite the R set having a

relatively low average generalisation performance (only 93.94%, three versions sets were higher than this).

Acknowledgements. Thanks to Stuart Jackson who ran the original simulations used in this study, to Bill Yates who devised and generated the 161051 test set, and to Wojtek Krzanowski for his help with the statistics. This research was funded by SERC/DTI Grant GR/H85427. Noel Sharkey first suggested that the use of some sort of metanet might be a good strategy.

References

1. Littlewood B, Miller DR. Conceptual modeling of coincident failures in multiversion software. IEEE Trans Software Eng 1989; 15(12)

2. Partridge D. Network generalization differences quant- ified. Technical Report 291, Department of Computer Science, University of Exeter, 1994

3. Partridge D, Sharkey NE. Use of neural computing in multiversion software reliability. In Redmill F, Anderson T. (eds). Technology and Assessment of Safety-Critical Systems. Springer-Verlag, London, 1994, pp 224-235

4. Partridge D, Sharkey NE. Neural computing for software reliability. Expert Systems, 1994

5. Denker J, Schwartz D, Wittner B, Solla S, Howard R, Jackel L, Hopfield J. Large automatic learning rule extraction and generalisation. Complex Systems, 1987; 1

6. Holden SB. Neural networks and the VC dimension. Technical Report CUED/F-INFENG/TR.119, Depart- ment of Engineering, University of Cambridge, 1992

7. Holden SB, Rayner PJW. Generalization and PAC learning: some new results for the class of generalized single layer networks. IEEE Trans Neural Networks 1994 (in press)

8. Partridge D, Collins T. Neural net training: random versus systematic. Technical Report 302, Department of Computer Science, University of Exeter, 1994. (To appear in: JG Taylor (ed). Adaptive Computing and Information Processing: Neural Networks)

9. Sharkey NE, Sharkey AJC. Adaptive generalisation. Technical Report 256, Department of ,Computer Sci- ence, University of Exeter, 1993